Question Answering (QA) by using Convolutional Deep Neural Networks

presented by: Saeid Balaneshinkordan

Based on:

Severyn, Aliaksei, and Alessandro Moschitti. "Learning to Rank Short Text Pairs with Convolutional Deep Neural Networks." In Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 373-382. ACM, 2015.

Question Answering

ref: https://web.stanford.edu/class/cs124/lec/qa.pdf

Question Answering (QA) - an NLP task

Question: What do worms eat?

ref: https://web.stanford.edu/class/cs124/lec/qa.pdf

Potential Answers:

1- Worms eat grass

2- Horses with worms eat grass

3- Birds eat worms

4- Grass is eaten by worms
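A minimal sketch of why this example is hard for surface matching. The `tokens` and `overlap` helpers are hypothetical, written only for illustration and not taken from the reviewed paper. They score each candidate by the number of distinct words it shares with the question.

```python
import re

def tokens(text):
    """Lowercase a sentence and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def overlap(question, answer):
    """Count the distinct words shared by the question and a candidate answer."""
    return len(set(tokens(question)) & set(tokens(answer)))

question = "What do worms eat?"
candidates = [
    "Worms eat grass",
    "Horses with worms eat grass",
    "Birds eat worms",
    "Grass is eaten by worms",
]

scores = [overlap(question, c) for c in candidates]
print(scores)  # [2, 2, 2, 1]
```

The three active-voice candidates tie, and the passive paraphrase in candidate 4 scores lowest even though it states the same fact as candidate 1. Word overlap alone cannot rank these answers.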

Question Answering (QA) - an NLP task

Feature-based models can use syntactic similarity features and external resources, but this makes them too complex.

Alternative solution: deep learning

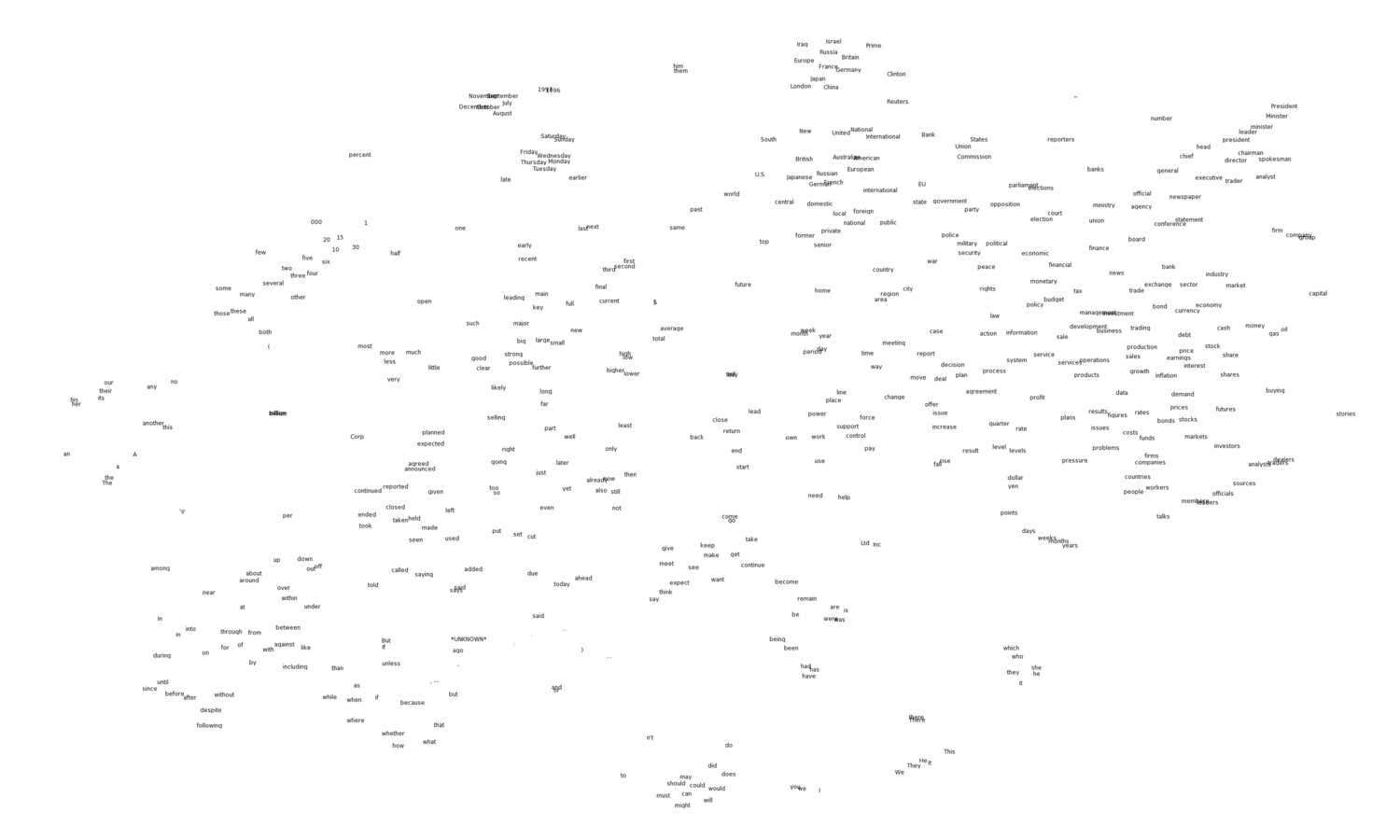

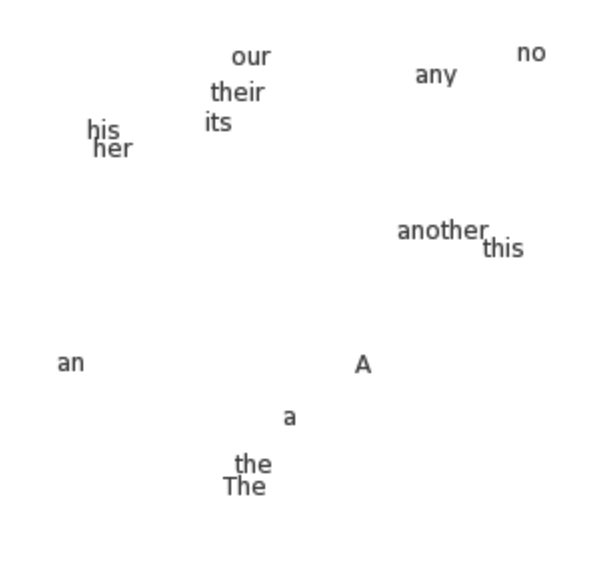

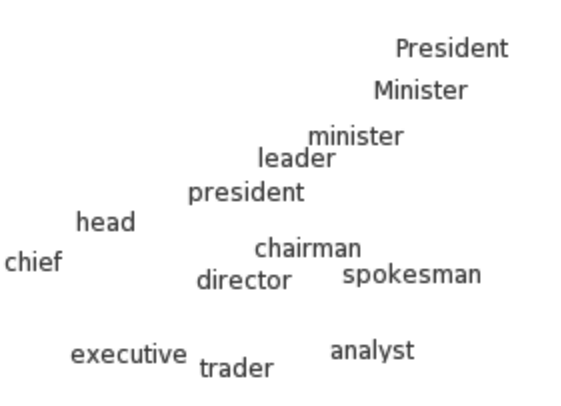

word embeddings: representation of words as dense vectors.

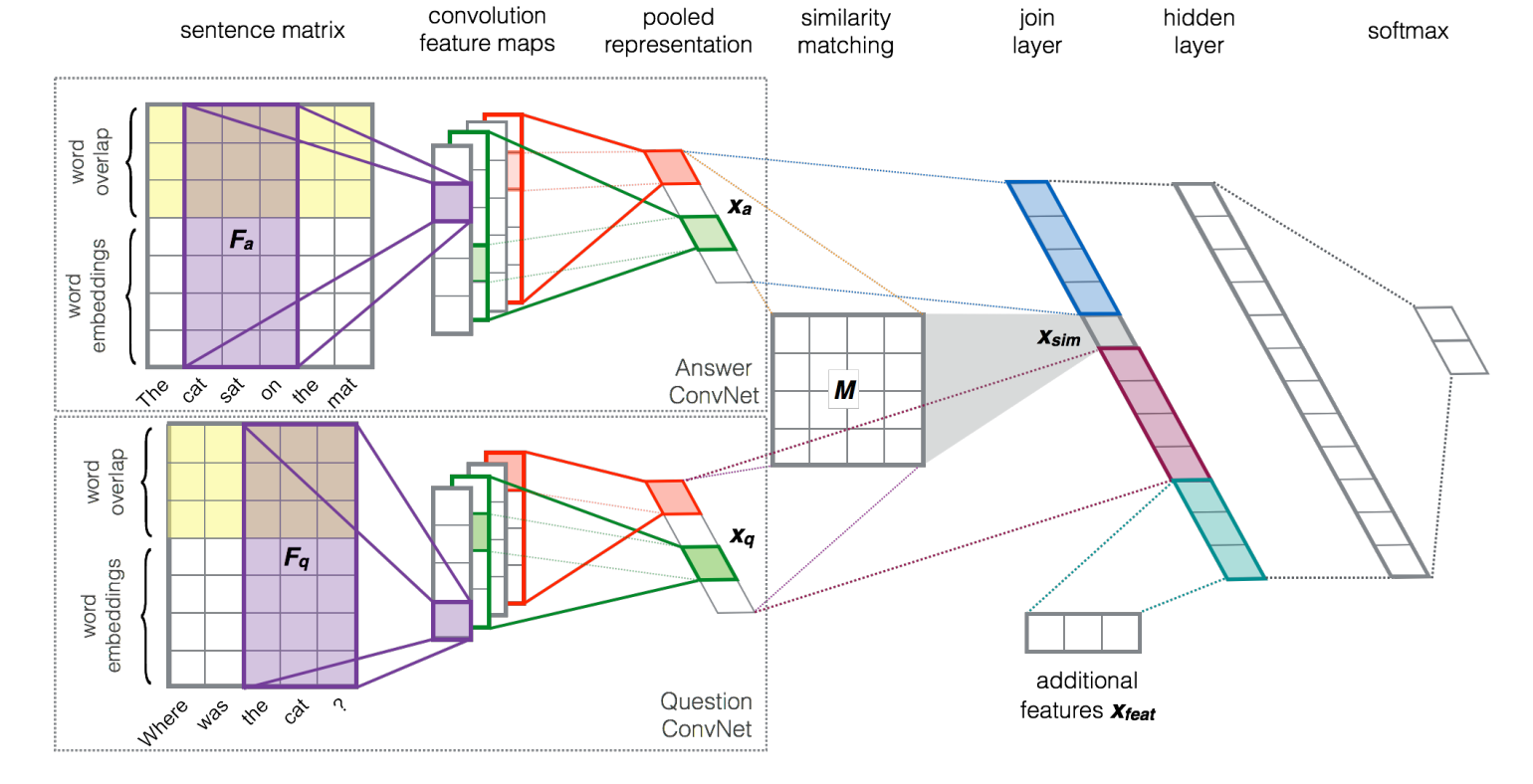

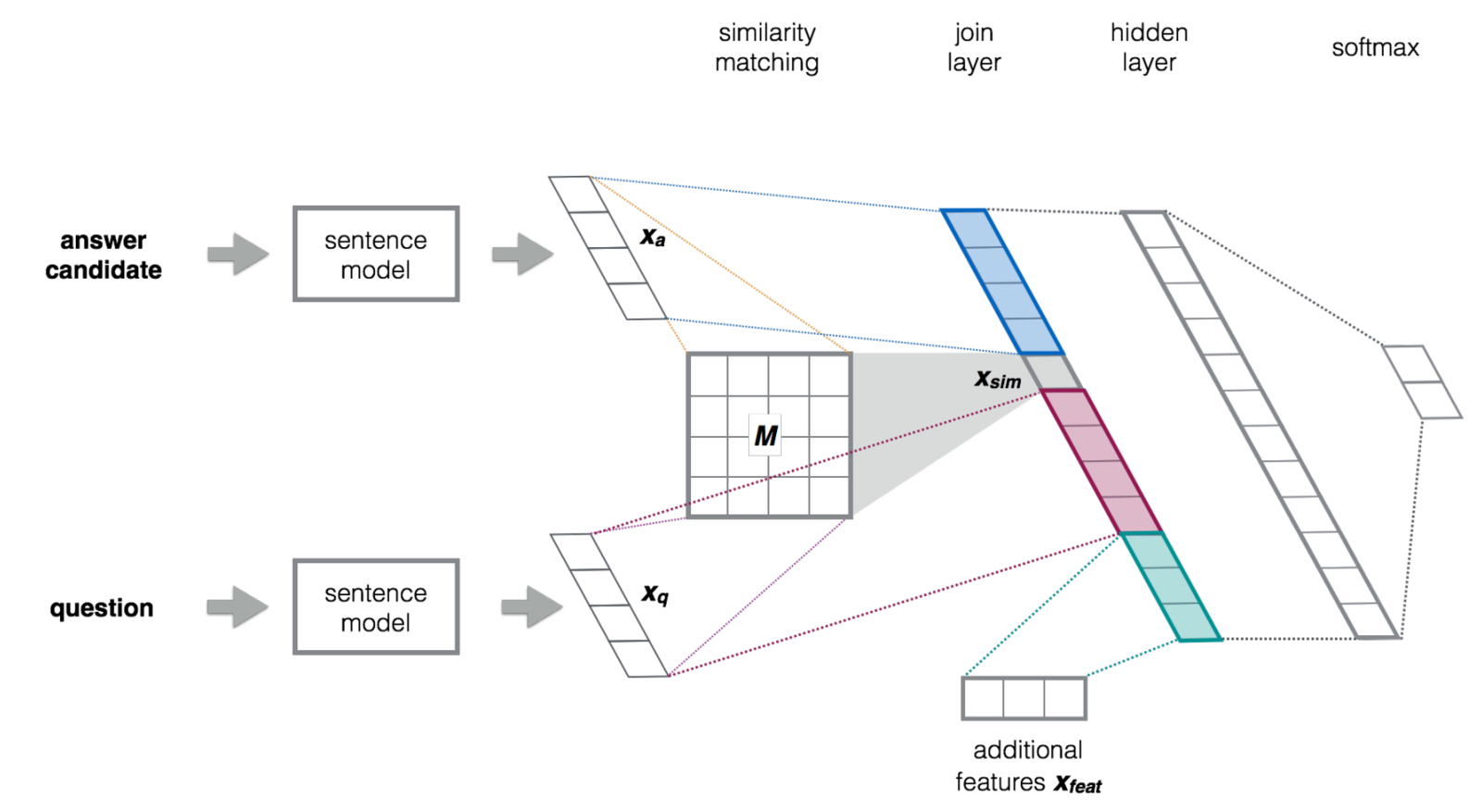

deep learning architecture for reranking short text pairs:

ref: http://colah.github.io/posts/2014-07-NLP-RNNs-Representations/

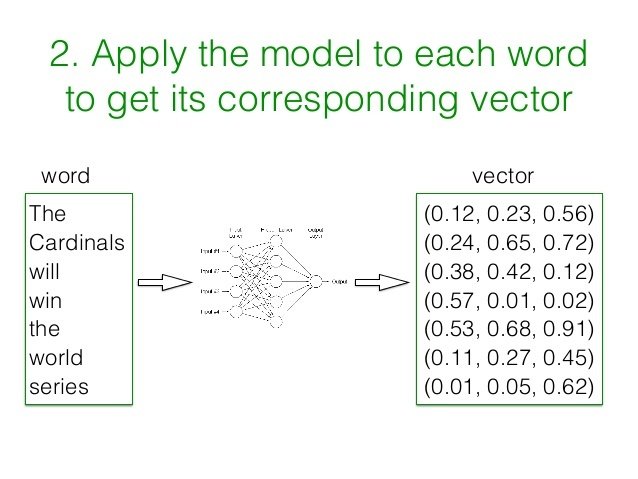

Word Embeddings

word2vec:

A group of shallow, two-layer neural network models used to produce word embeddings.

ref: http://www.slideshare.net/andrewkoo/word2vec-algorithm

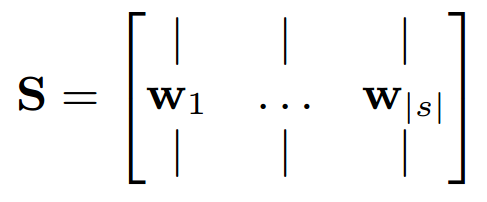

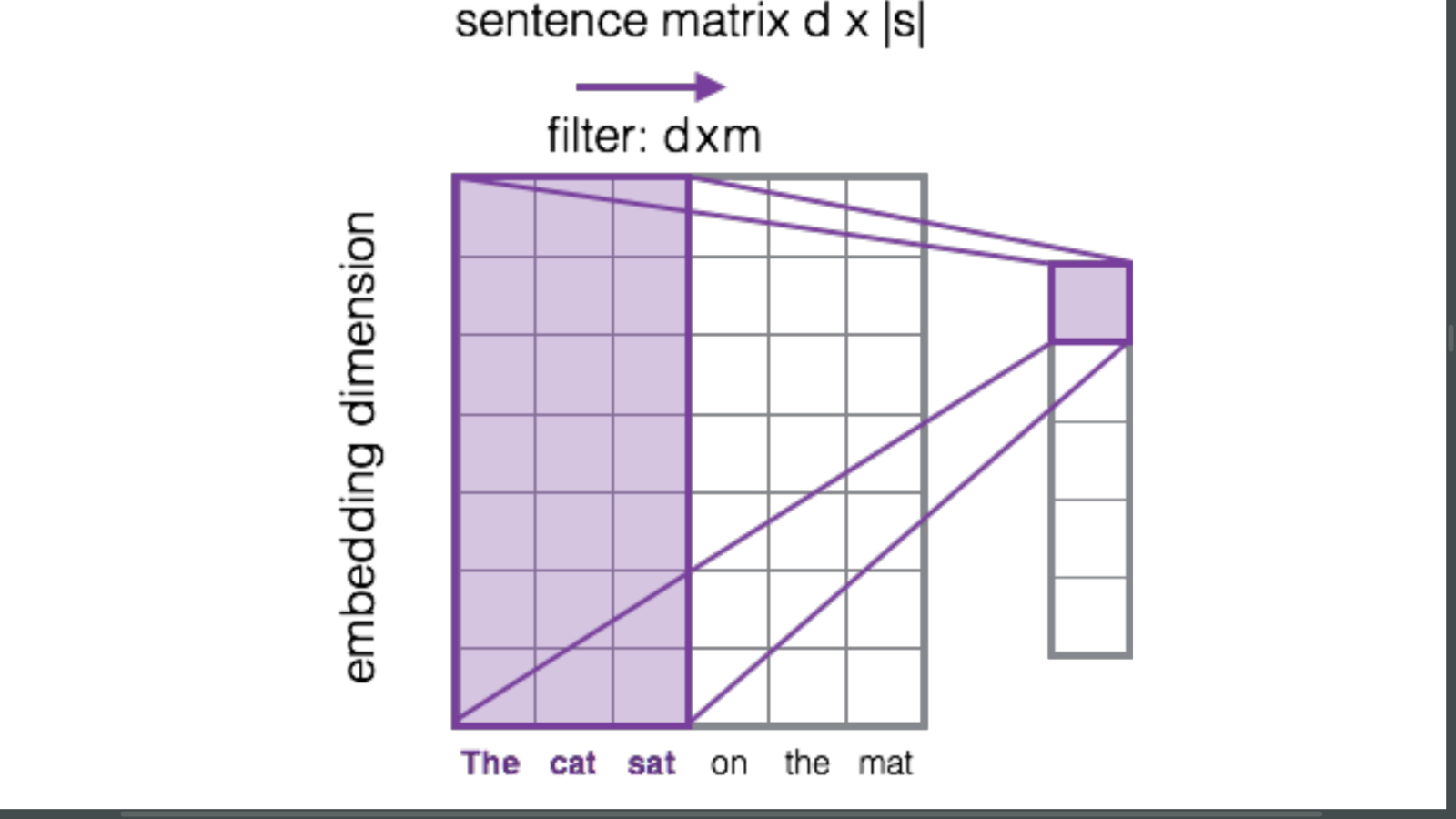

Sentence Matrix:

Embeddings Matrix:

sentence (seq. of words):

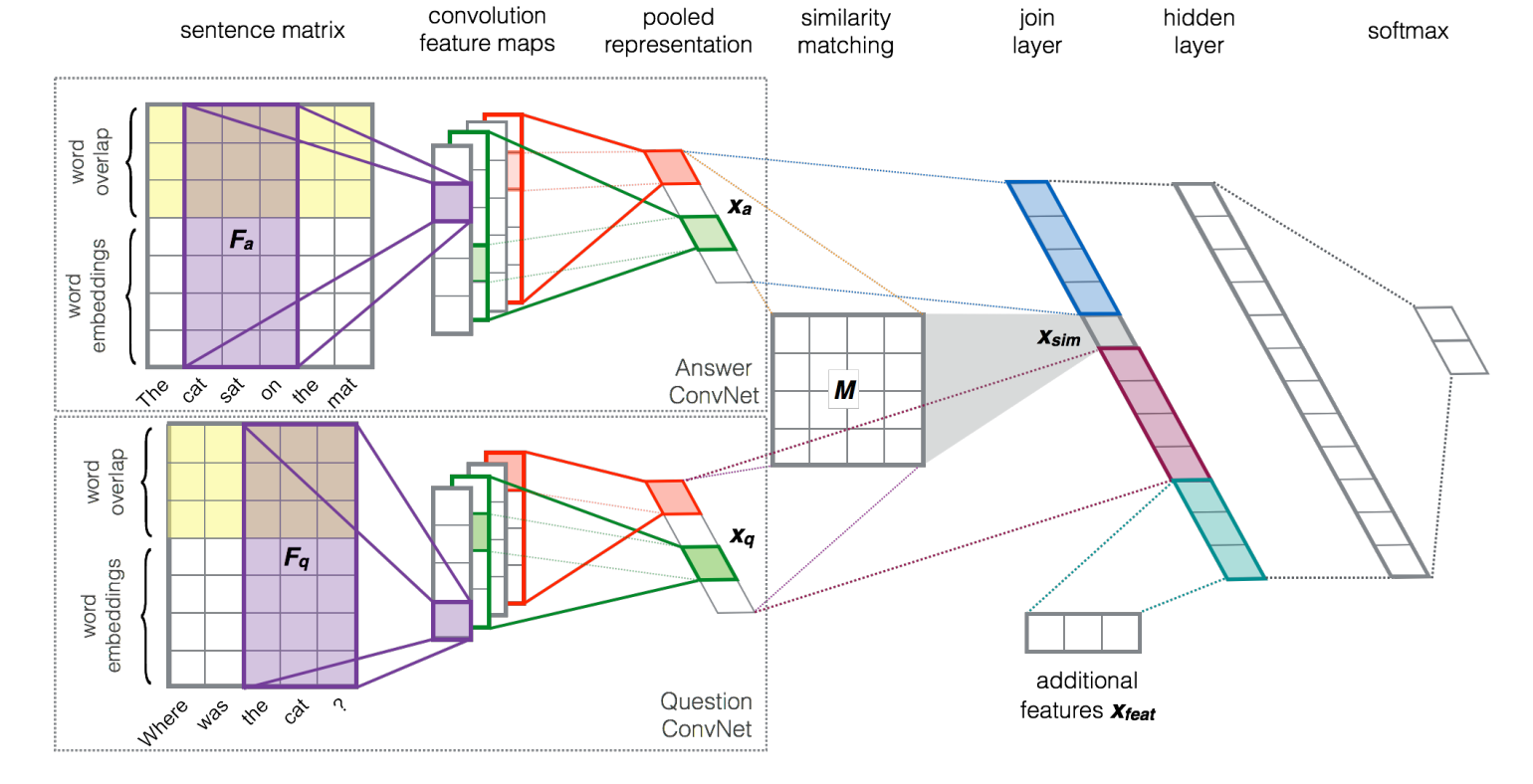

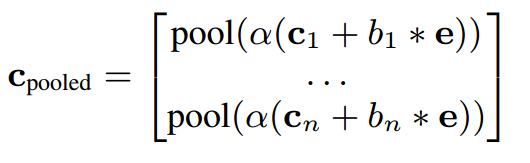
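The relation between the embeddings matrix and the sentence matrix can be sketched as a simple lookup: each word of the sentence selects its embedding, and the selected vectors become the columns of the sentence matrix. The embedding values, the dimension d = 3, and the helper name `sentence_matrix` below are assumptions made up for illustration. Real vectors would come from a trained model such as word2vec.

```python
# Toy embeddings: one d-dimensional vector per vocabulary word (d = 3).
# The numbers are invented for illustration only.
embeddings = {
    "what":  [0.1, 0.3, -0.2],
    "do":    [0.0, -0.1, 0.4],
    "worms": [0.7, 0.2, 0.5],
    "eat":   [-0.3, 0.6, 0.1],
}

def sentence_matrix(words, embeddings):
    """Return a d x |s| matrix (list of rows); column i is the embedding of word i."""
    columns = [embeddings[w] for w in words]
    # Transpose the list of columns so that rows index embedding dimensions.
    return [list(row) for row in zip(*columns)]

sentence = ["what", "do", "worms", "eat"]
S = sentence_matrix(sentence, embeddings)

print(len(S), len(S[0]))  # 3 4  (d rows, |s| columns)
```

Storing words as columns means a convolution filter can slide left to right over neighbouring words.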

Deep ConvNet - Architecture

similarity match

Mapping sentences to fixed-size vectors

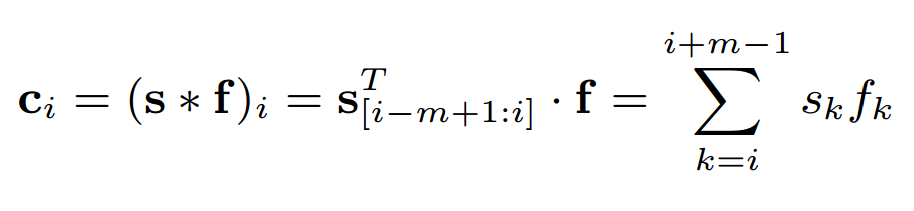

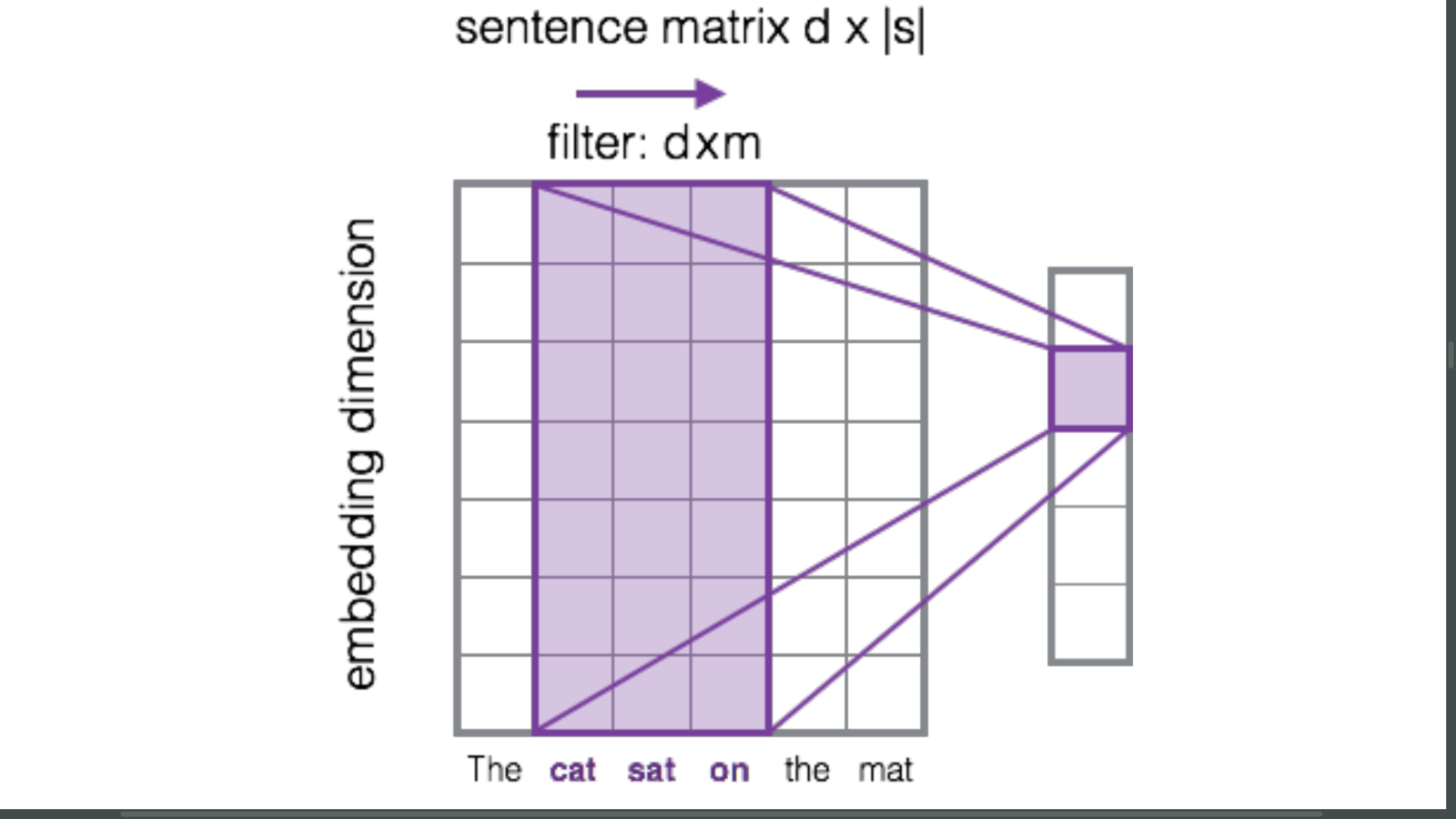

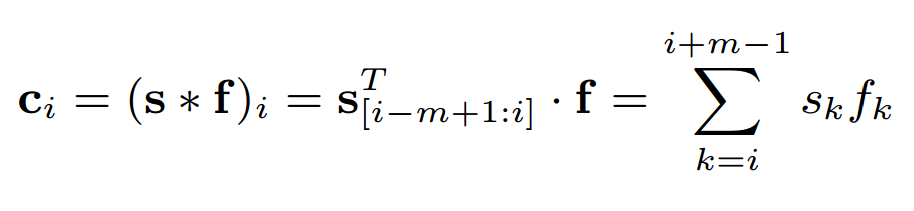

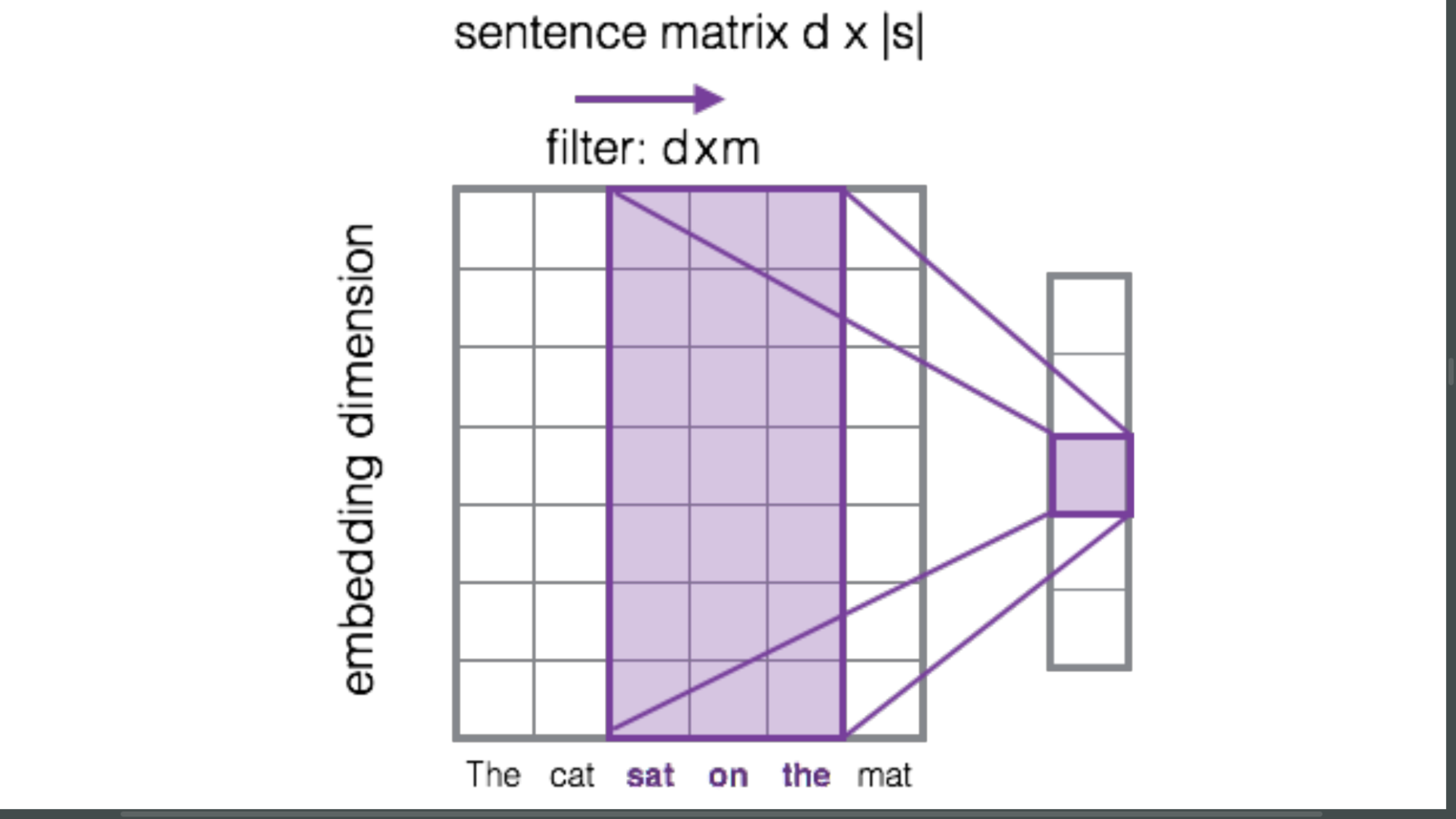

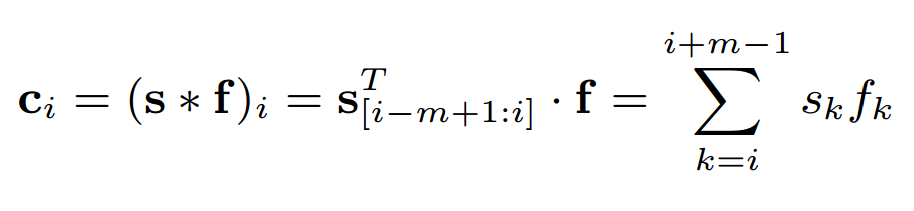

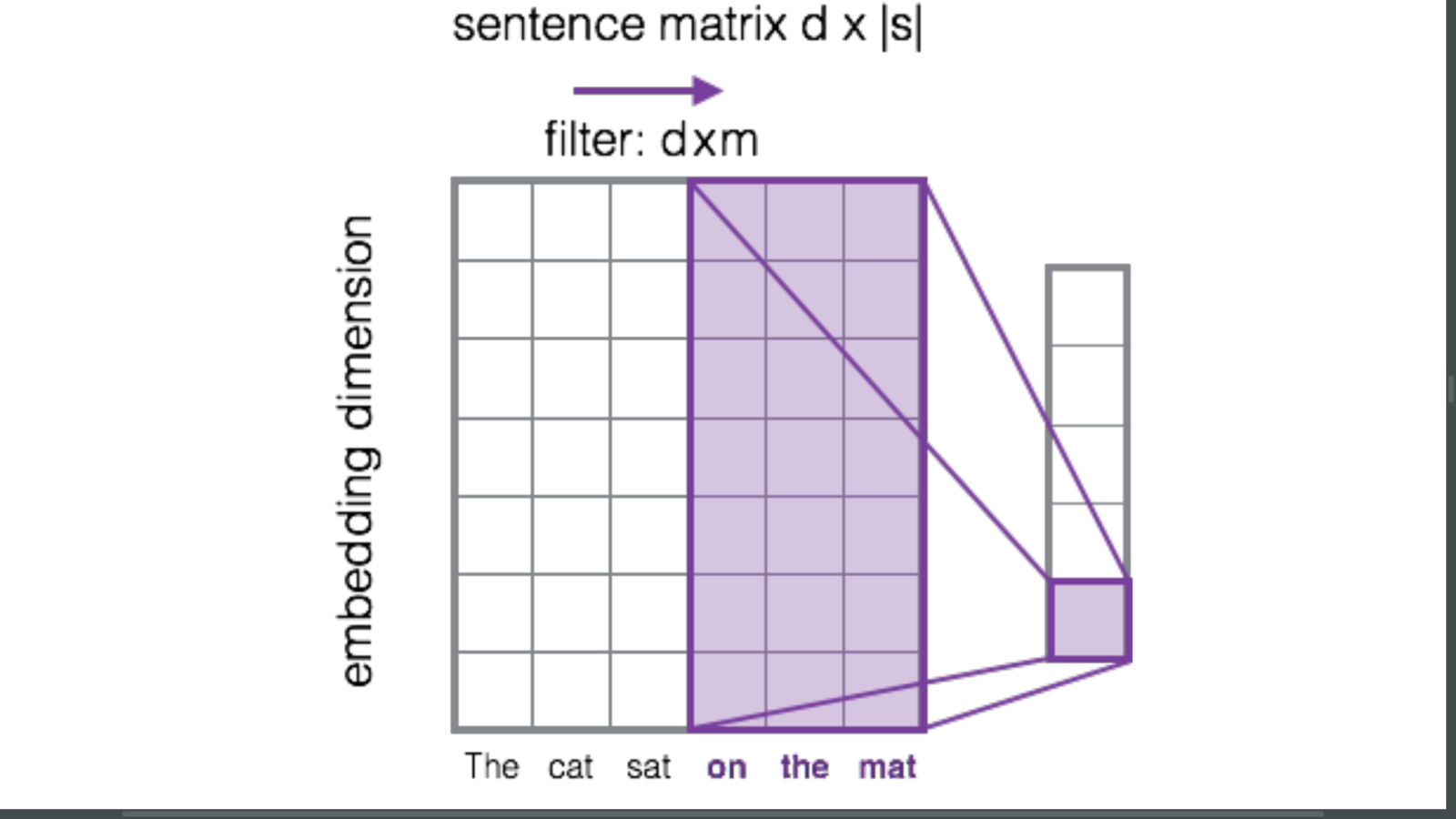

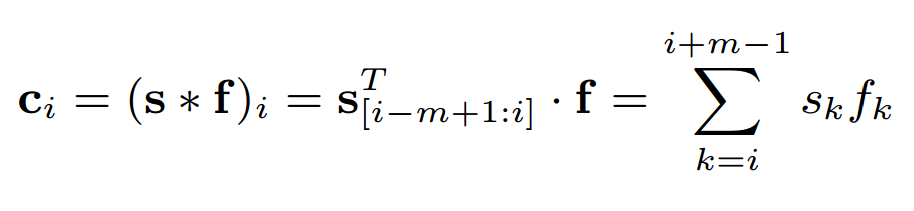

The aim of the convolutional layer is to extract patterns, i.e., discriminative word sequences found within the input sentences that are common throughout the training instances.

Convolution feature maps:

filter width m = 3, shown at window positions i = 0, 1, 2, 3

best choice of m = ?
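A hedged sketch of how one feature map is computed. A filter of width m covers m neighbouring word columns of the sentence matrix, and each window position i produces one value. This version uses a narrow convolution (no padding) for simplicity, which is an assumption and may differ from the padding used in the reviewed paper. The matrices `S` and `F` and the function name `convolve` are made up for illustration.

```python
def convolve(S, F):
    """Slide a d x m filter F over a d x |s| sentence matrix S (narrow convolution).

    Returns one feature map with |s| - m + 1 values, one per window position i.
    """
    d, n, m = len(S), len(S[0]), len(F[0])
    feature_map = []
    for i in range(n - m + 1):
        total = 0.0
        # Element-wise product of the filter with columns i .. i+m-1, then sum.
        for r in range(d):
            for k in range(m):
                total += S[r][i + k] * F[r][k]
        feature_map.append(total)
    return feature_map

# Toy sentence matrix: d = 2 dimensions, |s| = 6 words.
S = [[1, 0, 2, 1, 0, 1],
     [0, 1, 1, 0, 2, 1]]
# Toy filter: d = 2 rows, width m = 3.
F = [[1, 0, -1],
     [0, 1, 0]]

c = convolve(S, F)
print(c)  # [0.0, 0.0, 2.0, 2.0]
```

With six words and m = 3 there are four window positions, i = 0 to 3. A larger m sees longer word sequences but leaves fewer positions and adds more parameters per filter, which is the trade-off behind choosing m.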

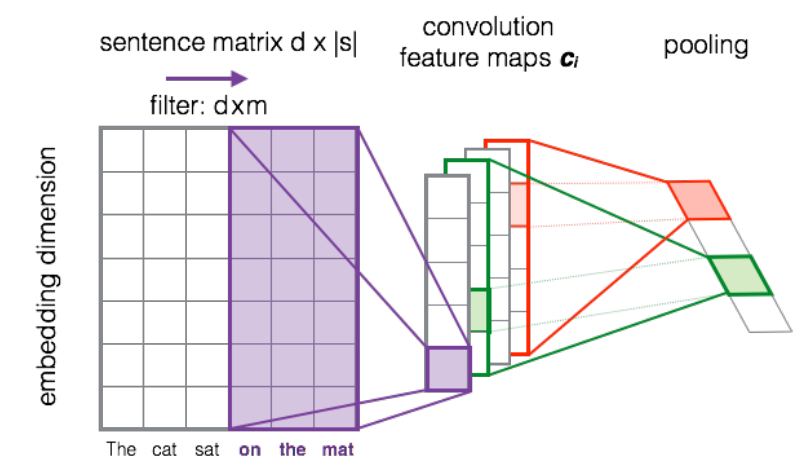

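Pooling

goal: to aggregate the information and reduce the representation

result of the pooling operation:

$$\mathbf{c}_{\text{pooled}} = \left[\operatorname{pool}(\alpha(\mathbf{c}_1 + b_1 \cdot \mathbf{e})), \ldots, \operatorname{pool}(\alpha(\mathbf{c}_n + b_n \cdot \mathbf{e}))\right]$$

$\mathbf{c}_i$: i-th convolution feature map

$b_i$: bias

$\mathbf{e}$: unit vector

$\alpha(\cdot)$: activation function (nonlinear)

choices: sigmoid (logistic), tanh(), max(0, x), ...

goal: to have non-linear decision boundaries

$\operatorname{pool}(\cdot)$: pool operation

choices: avg, max, k-max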
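The pooling step can be sketched as follows: each feature map gets its bias added to every element, passes through the activation function, and is then reduced by the pool operation. The function names (`relu`, `max_pool`, `avg_pool`, `k_max_pool`, `pooled`) and the toy values are assumptions for illustration, not code from the reviewed paper.

```python
def relu(x):
    """Activation max(0, x)."""
    return max(0.0, x)

def max_pool(values):
    """Keep only the largest activation of a feature map."""
    return max(values)

def avg_pool(values):
    """Average all activations of a feature map."""
    return sum(values) / len(values)

def k_max_pool(values, k):
    """Keep the k largest activations, preserving their original order."""
    top = sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]
    return [values[i] for i in sorted(top)]

def pooled(feature_maps, biases, activation, pool):
    """Apply pool(activation(c_i + b_i)) to every feature map c_i."""
    return [
        pool([activation(c + b) for c in fmap])
        for fmap, b in zip(feature_maps, biases)
    ]

feature_maps = [[-1.0, 2.0, 0.5, 3.0], [0.0, -2.0, 1.0, -0.5]]
biases = [0.5, 1.0]

by_max = pooled(feature_maps, biases, relu, max_pool)
by_avg = pooled(feature_maps, biases, relu, avg_pool)
print(by_max)  # [3.5, 2.0]
print(by_avg)  # [1.75, 0.875]
print(k_max_pool([0.0, 2.5, 1.0, 3.5], 2))  # [2.5, 3.5]
```

With max or avg pooling, every feature map collapses to a single number, so a sentence of any length maps to a vector whose size equals the number of filters.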

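Pair Matching

$$\operatorname{sim}(\mathbf{x}_q, \mathbf{x}_d) = \mathbf{x}_q^{T} M \mathbf{x}_d$$

$\mathbf{x}_q$: vec. rep. of query

$\mathbf{x}_d$: vec. rep. of doc.

$M$: similarity matrix (optimized during the training)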
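A minimal sketch of the matching score, assuming the bilinear form sim(x_q, x_d) = x_q^T M x_d, where M is the similarity matrix learned during training. The vectors and matrices below are toy values, and the function name `similarity` is chosen for illustration.

```python
def similarity(x_q, M, x_d):
    """Bilinear match score x_q^T M x_d between a query and a document vector."""
    # First transform the document vector: M x_d.
    Mx_d = [sum(m_ij * x_j for m_ij, x_j in zip(row, x_d)) for row in M]
    # Then take the dot product with the query vector.
    return sum(q_i * v_i for q_i, v_i in zip(x_q, Mx_d))

x_q = [1.0, 2.0]  # toy vector representation of the query
x_d = [3.0, 1.0]  # toy vector representation of the document

identity = [[1.0, 0.0], [0.0, 1.0]]
swap = [[0.0, 1.0], [1.0, 0.0]]

print(similarity(x_q, identity, x_d))  # 5.0 (plain dot product)
print(similarity(x_q, swap, x_d))      # 7.0
```

With the identity matrix the score reduces to a dot product. A trained M can instead weight and mix dimensions, so the document vector is mapped toward the query space before the comparison.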

Pair Representation

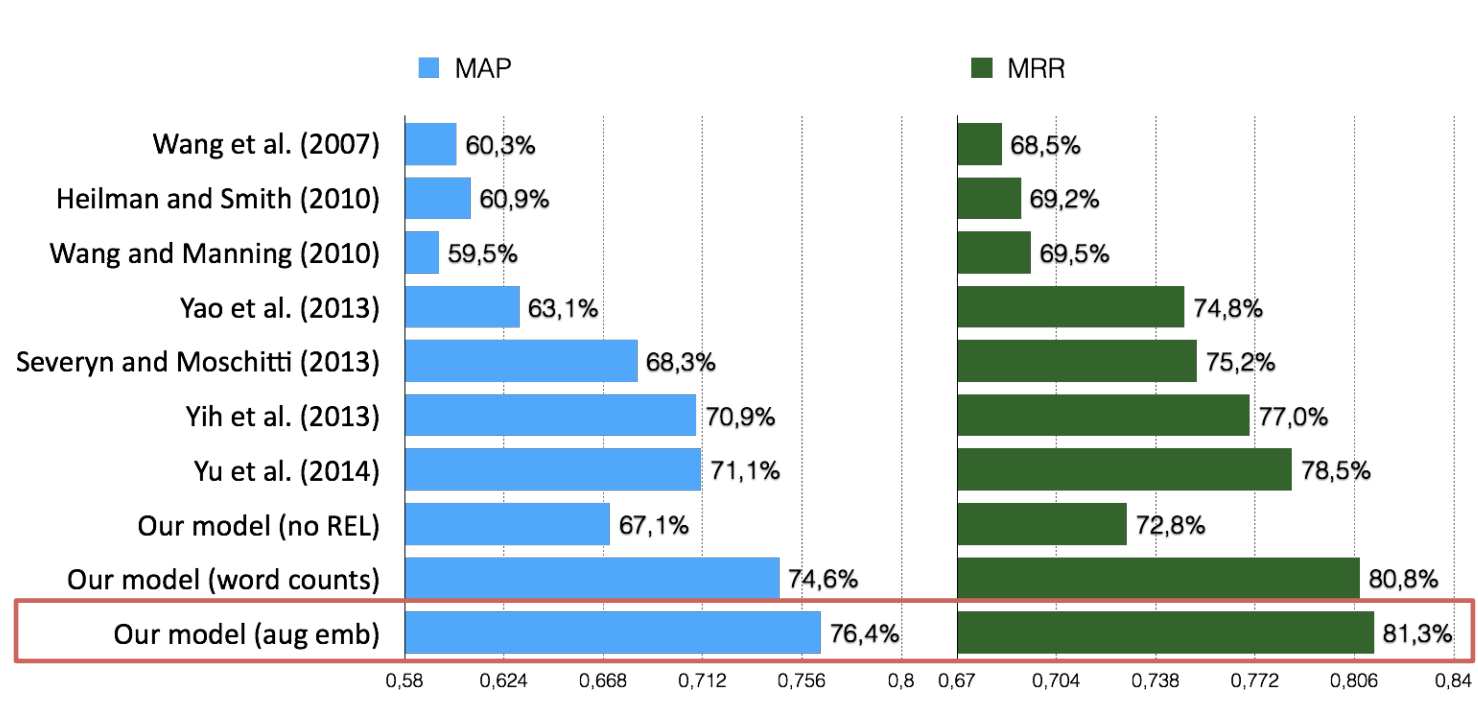

Results

Severyn et al. (2015) - no relational information

Severyn et al. (2015) - word counts features

Severyn et al. (2015) - augmented embeddings

Yu et al. (2014) - Deep learning model

Yih et al. (2013) - Distributional word vector

Severyn & Moschitti (2013) - Syntactic feature based models

Yao et al. (2013) - Syntactic feature based models

Wang & Manning (2010) - Syntactic feature based models

Heilman & Smith (2010) - Syntactic feature based models

Wang et al. (2010) - Syntactic feature based models

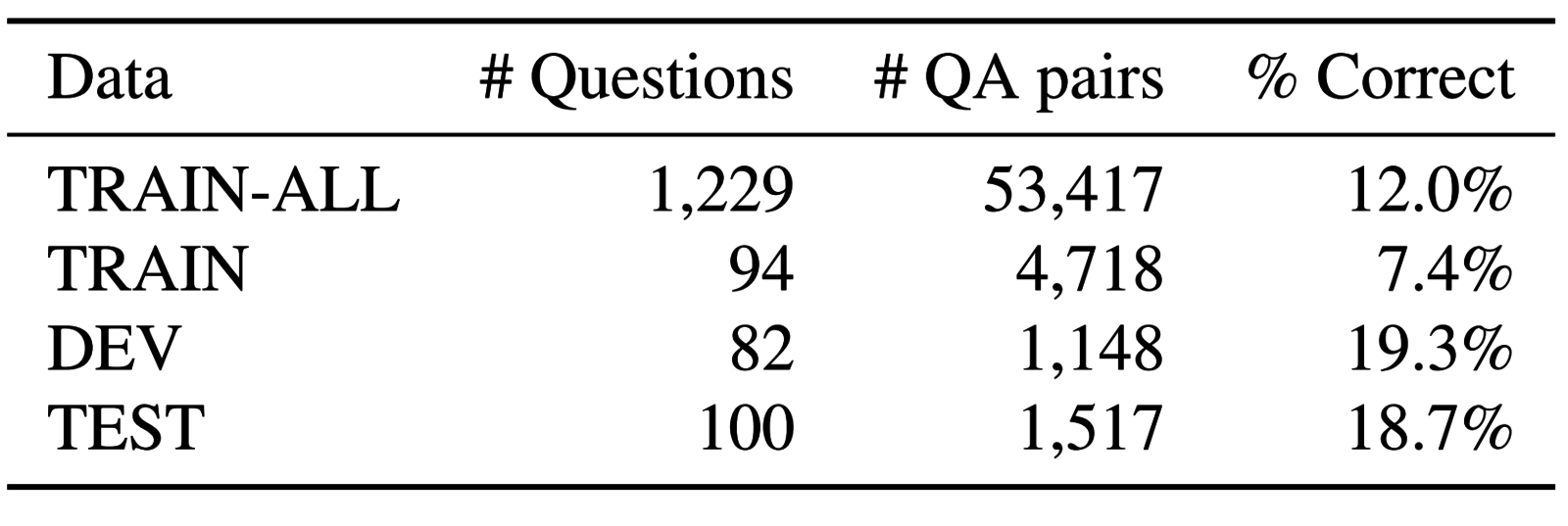

collection: TREC QA Dataset

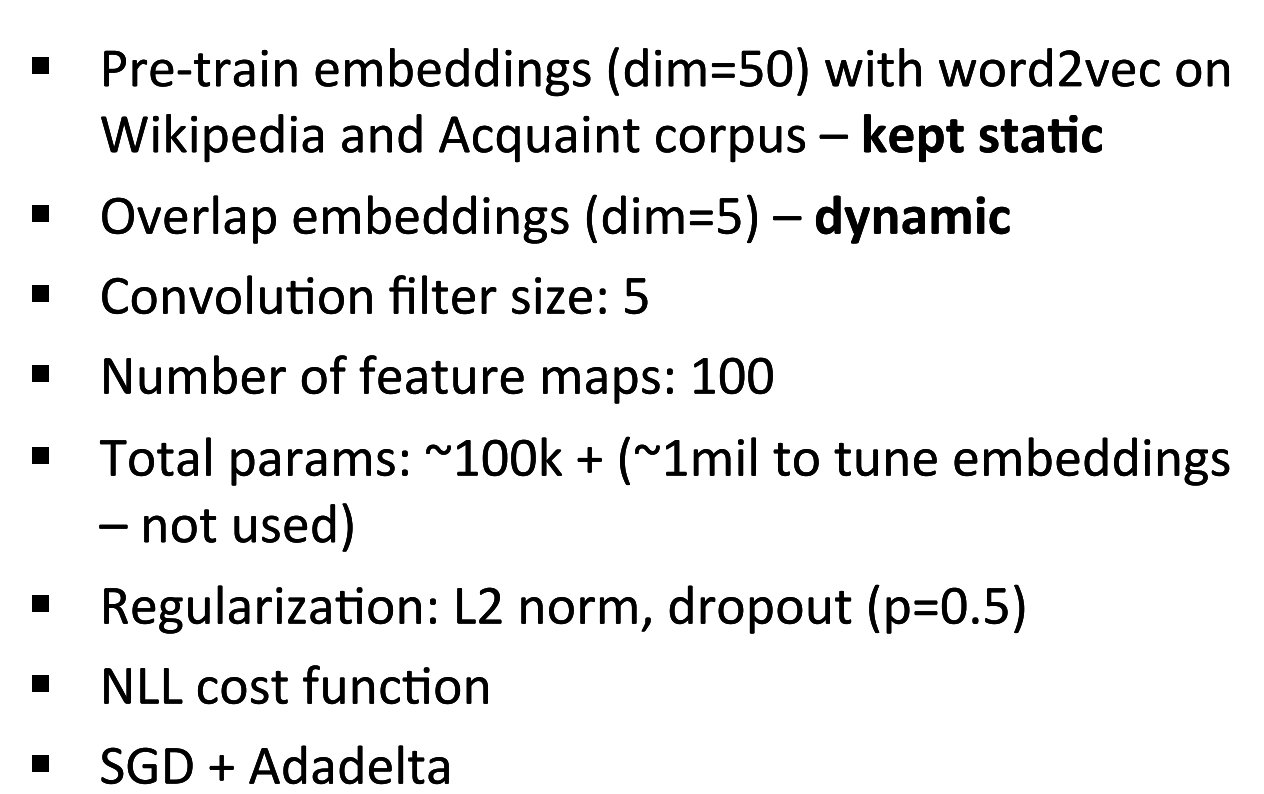

Experiments: TREC QA data

