Deep Learning HMC

Building Topological Samplers for Lattice QCD

Sam Foreman

May, 2021

MCMC in Lattice QCD

- Generating independent gauge configurations is a MAJOR bottleneck for LatticeQCD.

- As the lattice spacing, \(a \rightarrow 0\), the MCMC updates tend to get stuck in sectors of fixed gauge topology.

- This causes the number of steps needed to adequately sample different topological sectors to increase exponentially.

Critical slowing down!

Markov Chain Monte Carlo (MCMC)

- Goal: Draw independent samples from a target distribution, \(p(x)\)

- Starting from some initial state \(x_{0}\sim \mathcal{N}(0, \mathbb{1})\) , we generate proposal configurations \(x^{\prime}\)

- Use Metropolis-Hastings acceptance criteria

Issues with MCMC

- Generate proposal \(x^{\prime}\):

\(x^{\prime} = x + \delta\), where \(\delta \sim \mathcal{N}(0, \mathbb{1})\)

\(\big\{ x_{0}, x_{1}, x_{2}, \ldots, x_{m-1}, x_{m}, x_{m+1}, \ldots, x_{n-2}, x_{n-1}, x_{n} \big\}\)

1. Construct chain:

\(\big\{ x_{0}, x_{1}, x_{2}, \ldots, x_{m-1}, x_{m}, x_{m+1}, \ldots, x_{n-2}, x_{n-1}, x_{n} \big\}\)

2. Thermalize ("burn-in"):

\(\big\{ x_{0}, x_{1}, x_{2}, \ldots, x_{m-1}, x_{m}, x_{m+1}, \ldots, x_{n-2}, x_{n-1}, x_{n} \big\}\)

3. Drop correlated samples ("thinning"):

dropped configurations

Inefficient!

Hamiltonian Monte Carlo (HMC)

-

Introduce fictitious momentum:

\(v\sim\mathcal{N}(0, 1)\)

-

Target distribution:

\(p(x)\propto e^{-S(x)}\)

-

Joint target distribution:

-

The joint \((x, v)\) system obeys Hamilton's Equations:

lift to phase space

HMC: Leapfrog Integrator

(trajectory)

-

Hamilton's Eqs:

-

Hamiltonian:

-

\(N_{\mathrm{LF}}\) leapfrog steps:

Leapfrog Integrator

2. Full-step \(x\)-update:

3. Half-step \(v\)-update:

1. Half-step \(v\)-update:

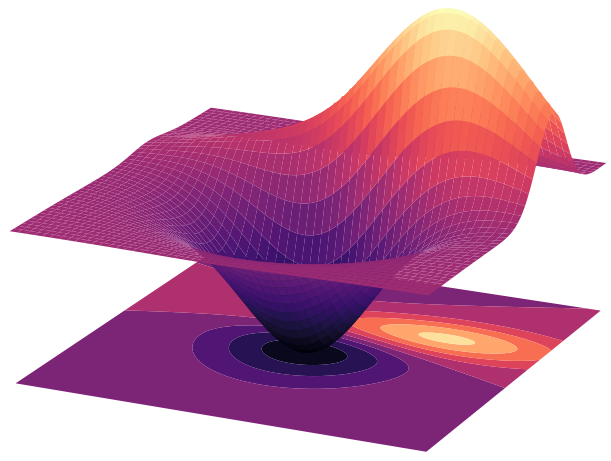

HMC: Issues

- Cannot easily traverse low-density zones.

- What do we want in a good sampler?

- Fast mixing

- Fast burn-in

- Mix across energy levels

- Mix between modes

- Energy levels selected randomly \(\longrightarrow\) slow mixing!

Stuck!

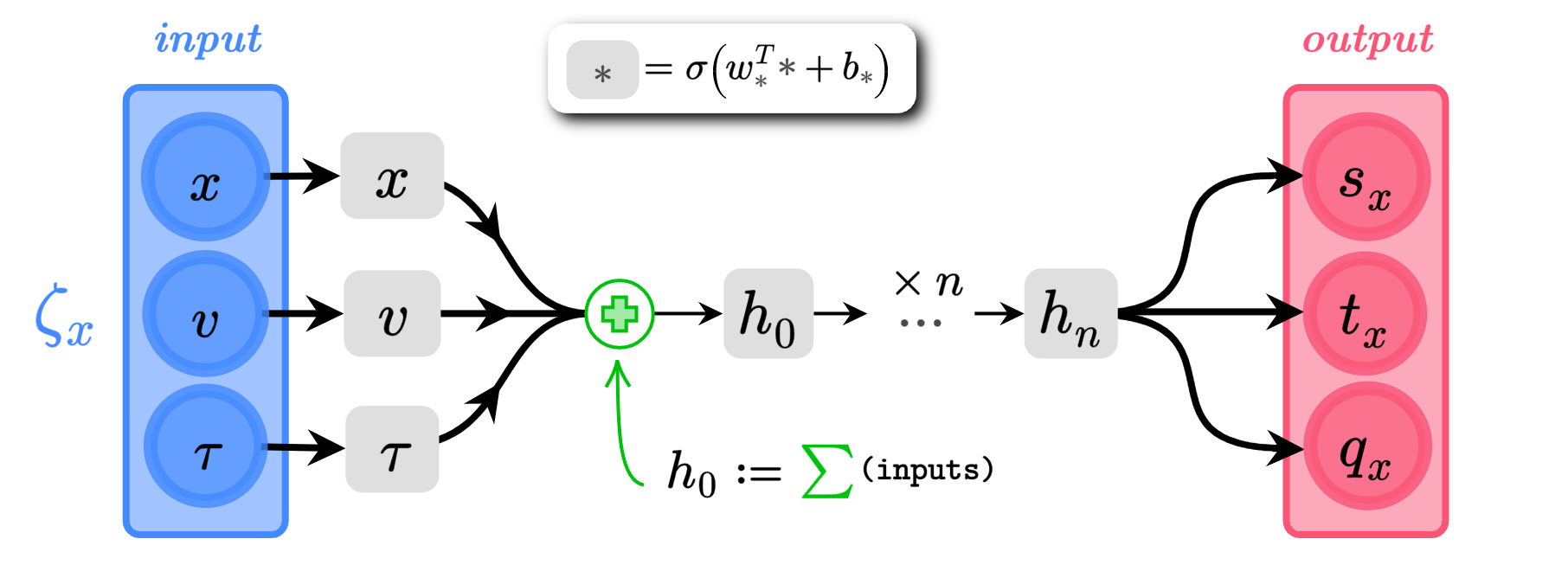

Leapfrog Layer

- Introduce a persistent direction \(d \sim \mathcal{U}(+,-)\) (forward/backward)

- Introduce a discrete index \(k \in \{1, 2, \ldots, N_{\mathrm{LF}}\}\) to denote the current leapfrog step

- Let \(\xi = (x, v, \pm)\) denote a complete state, then the target distribution is given by

- Each leapfrog step transforms \(\xi_{k} = (x_{k}, v_{k}, \pm) \rightarrow (x''_{k}, v''_{k} \pm) = \xi''_{k}\) by passing it through the \(k^{\mathrm{th}}\) leapfrog layer

Leapfrog Layer

where \((s_{v}^{k}, q^{k}_{v}, t^{k}_{v})\), and \((s_{x}^{k}, q^{k}_{x}, t^{k}_{x})\), are parameterized by neural networks

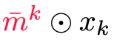

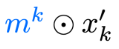

- \(x\)-update \((d = +)\):

(\(m_{t}\)\(\odot x\)) -independent

masks:

Momentum (\(v_{k}\)) scaling

Gradient \(\partial_{x}S(x_{k})\) scaling

Translation

- \(v\)-update \((d = +)\):

(\(v\)-independent)

- Each leapfrog step transforms

by passing it through the \(k^{\mathrm{th}}\) leapfrog layer.

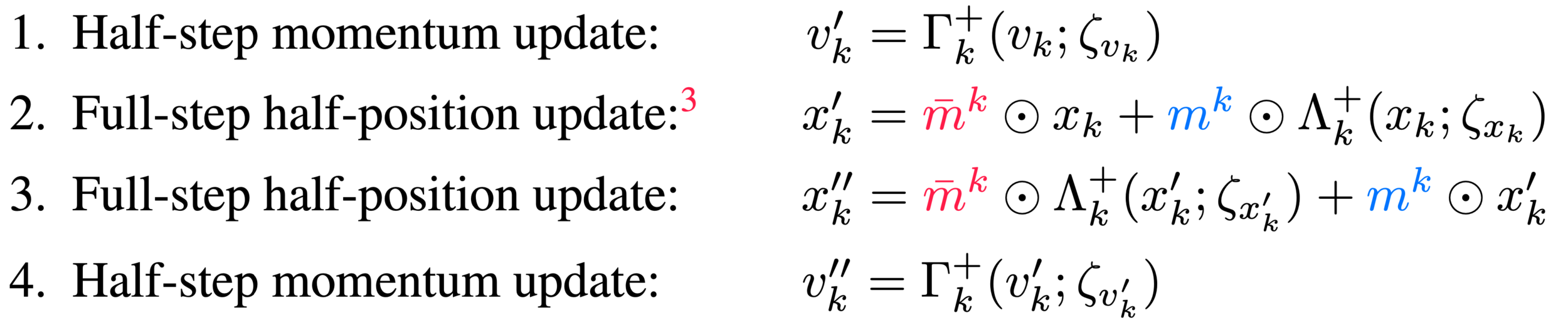

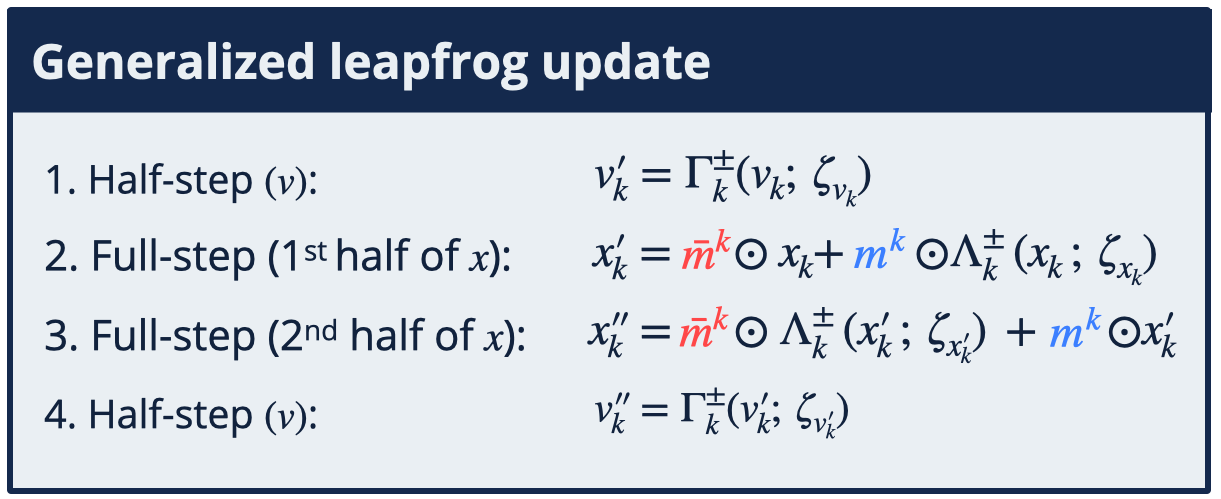

L2HMC: Generalized Leapfrog

- Complete (generalized) update:

- Half-step \(v\) update:

- Full-step \(\frac{1}{2} x\) update:

- Full-step \(\frac{1}{2} x\) update:

- Half-step \(v\) update:

Leapfrog Layer

masks:

Stack of fully-connected layers

Example: GMM \(\in\mathbb{R}^{2}\)

\(A(\xi',\xi)\) = acceptance probability

\(A(\xi'|\xi)\cdot\delta(\xi',\xi)\) = avg. distance

Note:

\(\xi'\) = proposed state

\(\xi\) = initial state

- Maximize expected squared jump distance:

- Define the squared jump distance:

HMC

L2HMC

Training Algorithm

construct trajectory

Compute loss + backprop

Metropolis-Hastings accept/reject

re-sample

momentum

+ direction

Annealing Schedule

- Introduce an annealing schedule during the training phase:

(varied slowly)

e.g. \( \{0.1, 0.2, \ldots, 0.9, 1.0\}\)

(increasing)

- Target distribution becomes:

- For \(\|\gamma_{t}\| < 1\), this helps to rescale (shrink) the energy barriers between isolated modes

- Allows our sampler to explore previously inaccessible regions of the target distribution

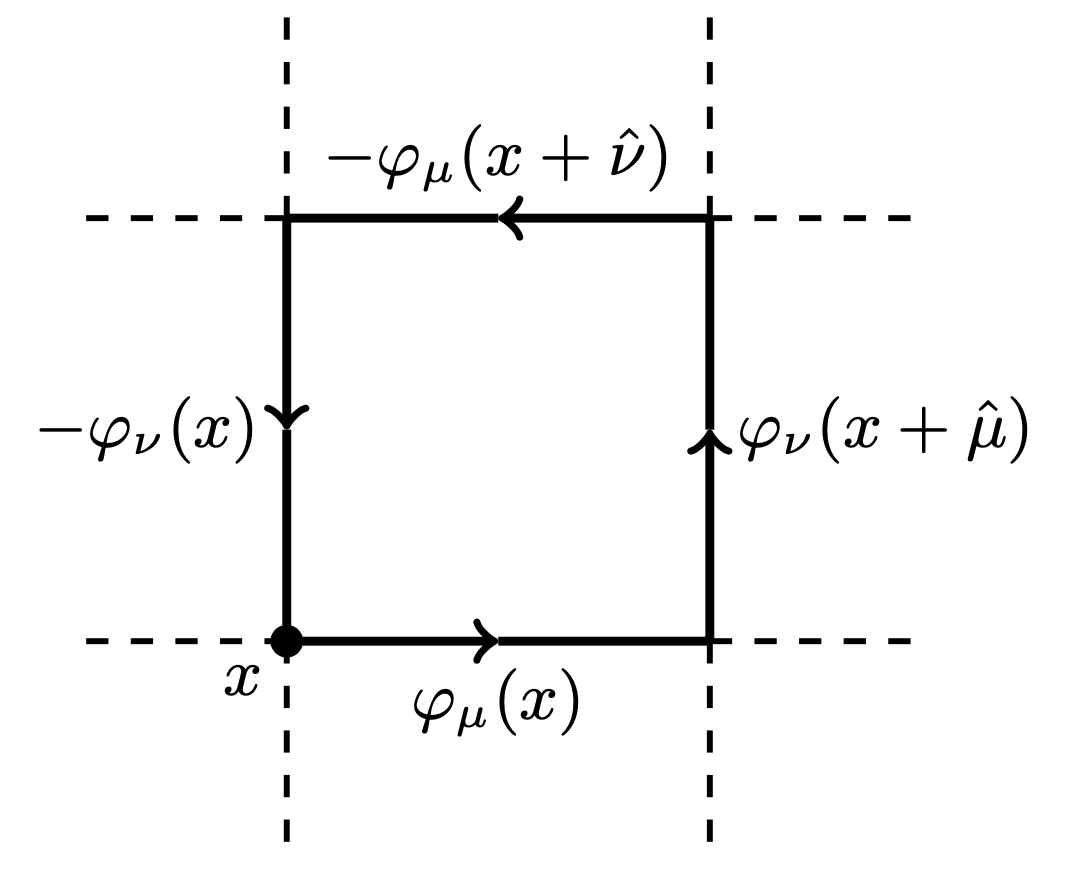

- Wilson action:

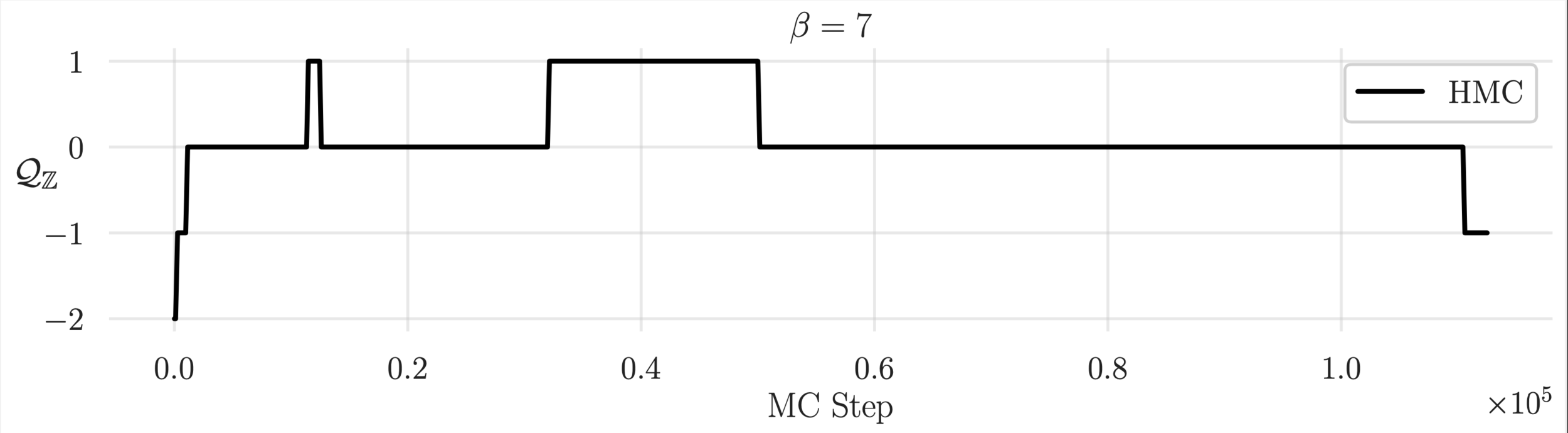

- Topological charge:

continuous, differentiable

discrete, hard to work with

- Link variables:

Lattice Gauge Theory

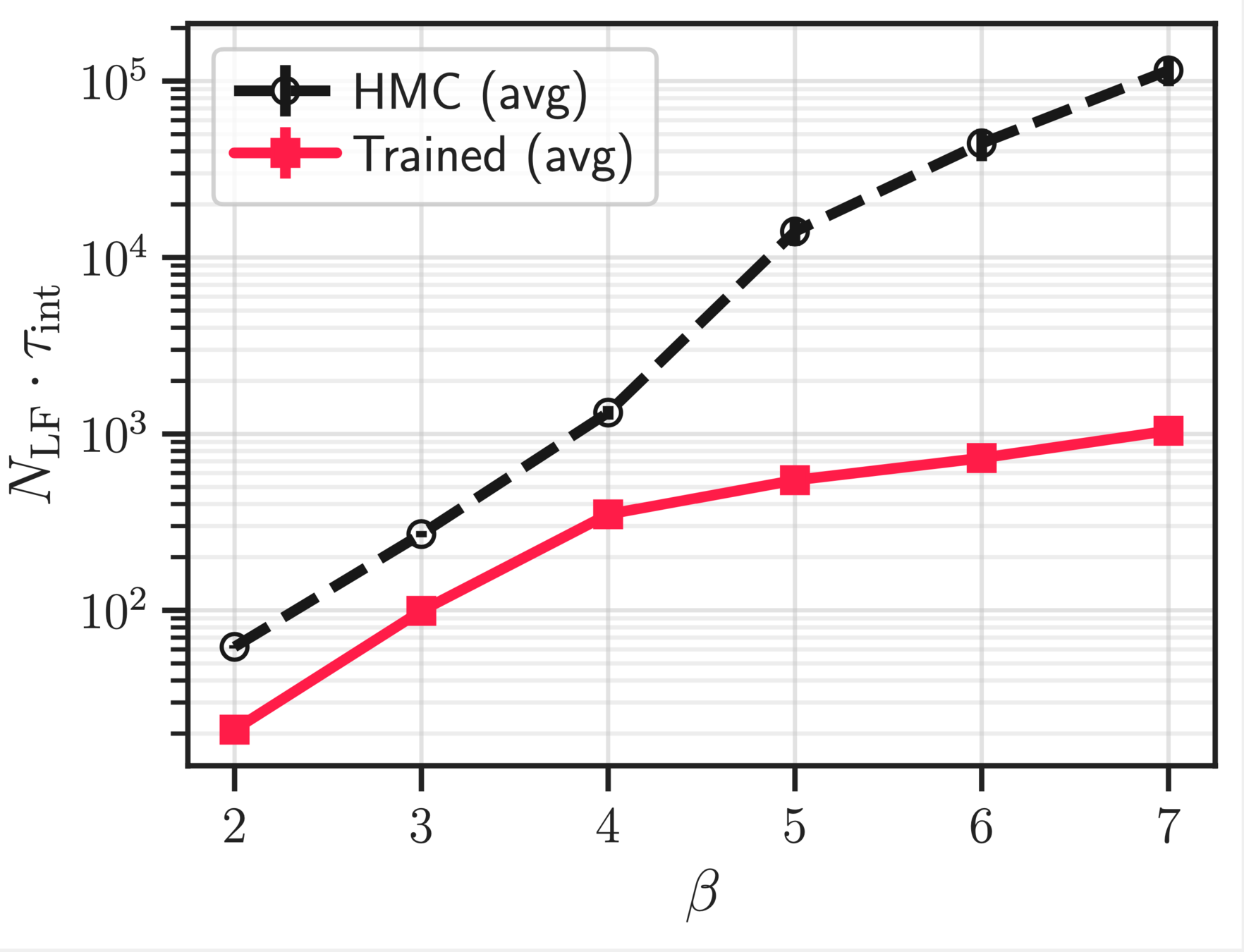

- We maximize the expected squared charge difference:

Loss function: \(\mathcal{L}(\theta)\)

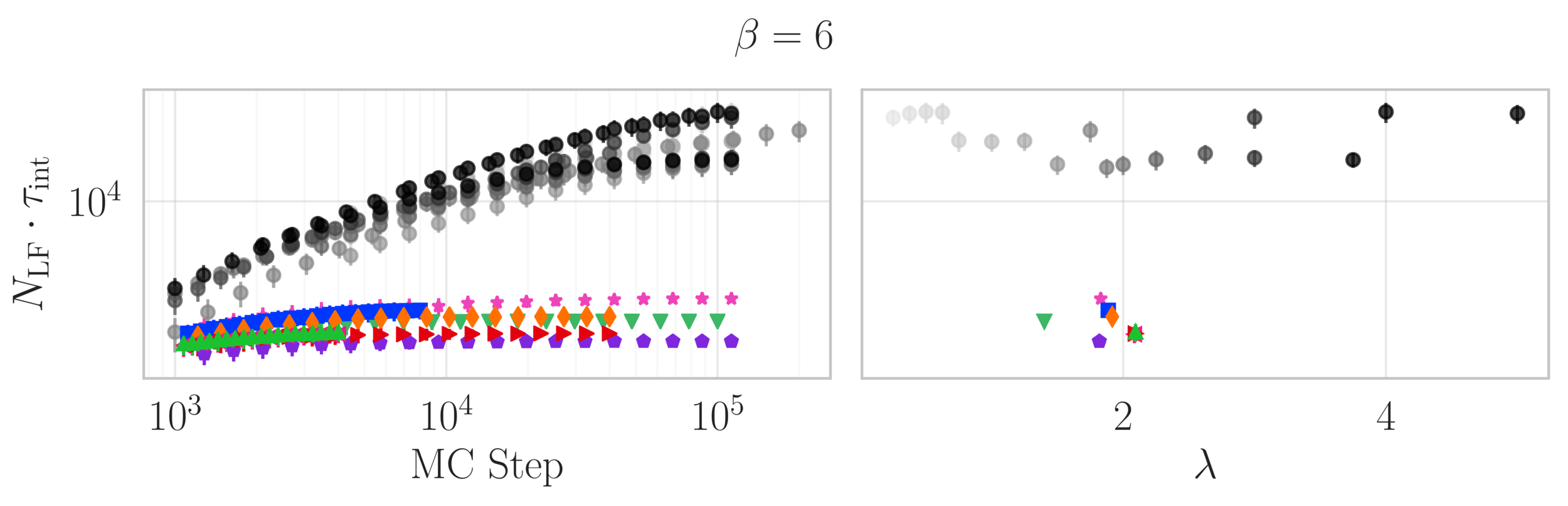

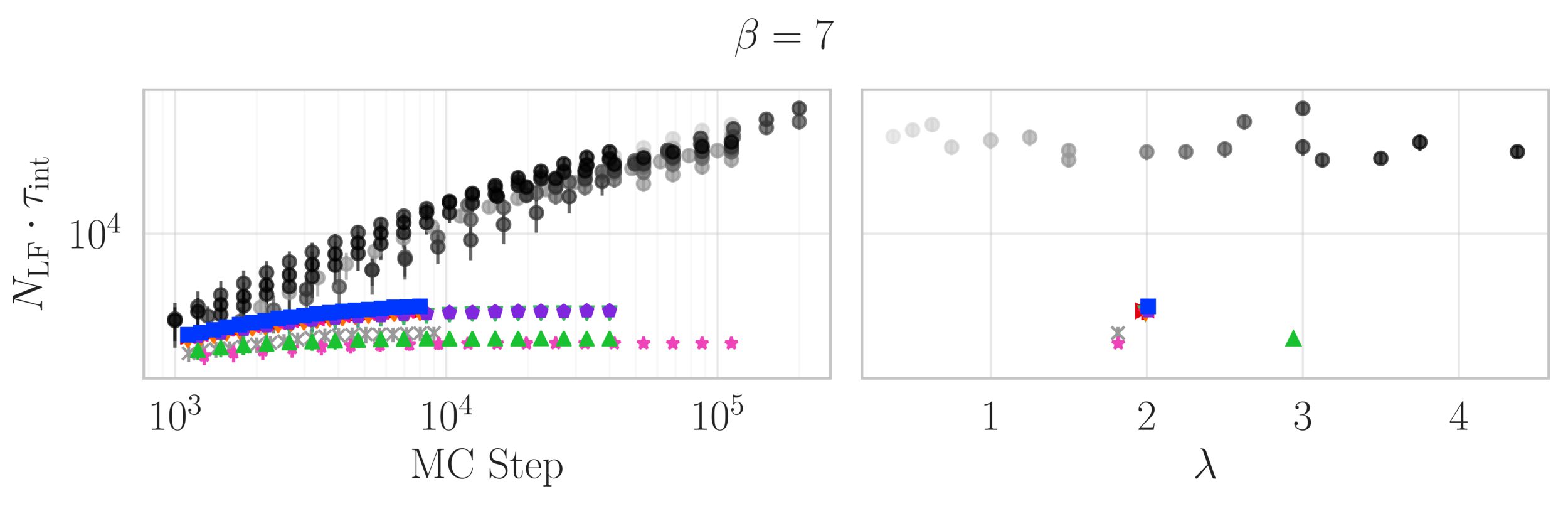

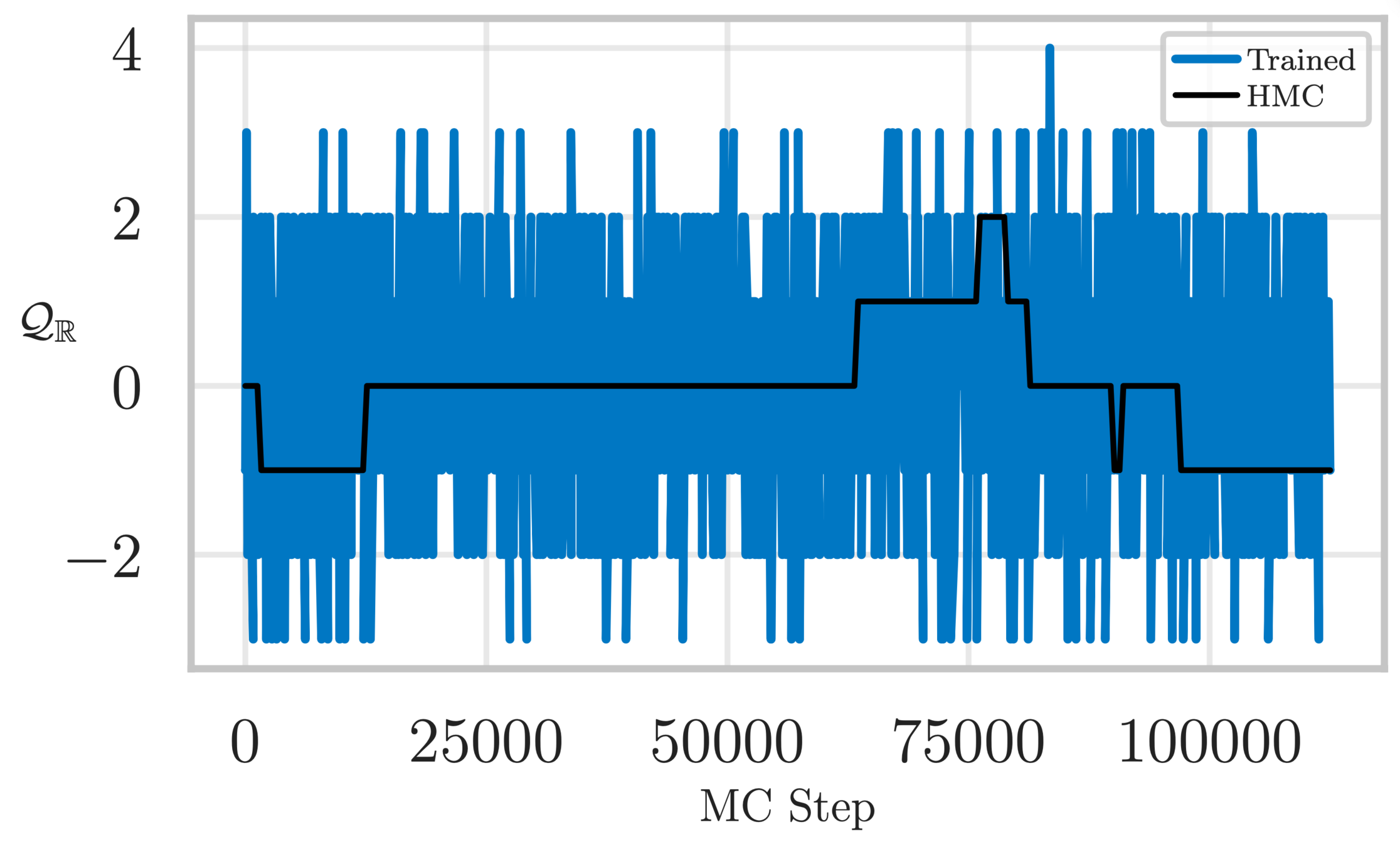

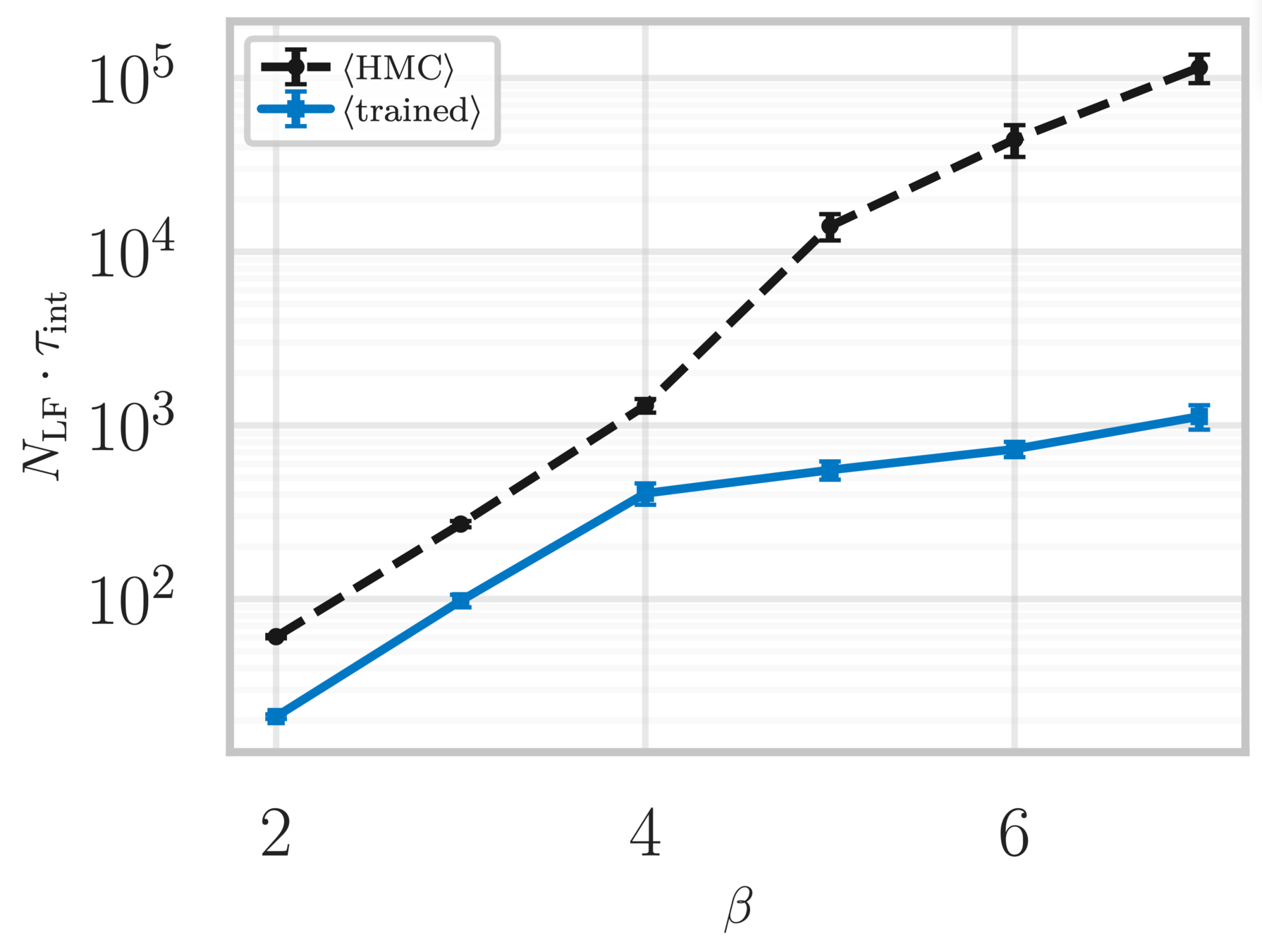

Results

- We maximize the expected squared charge difference:

Interpretation

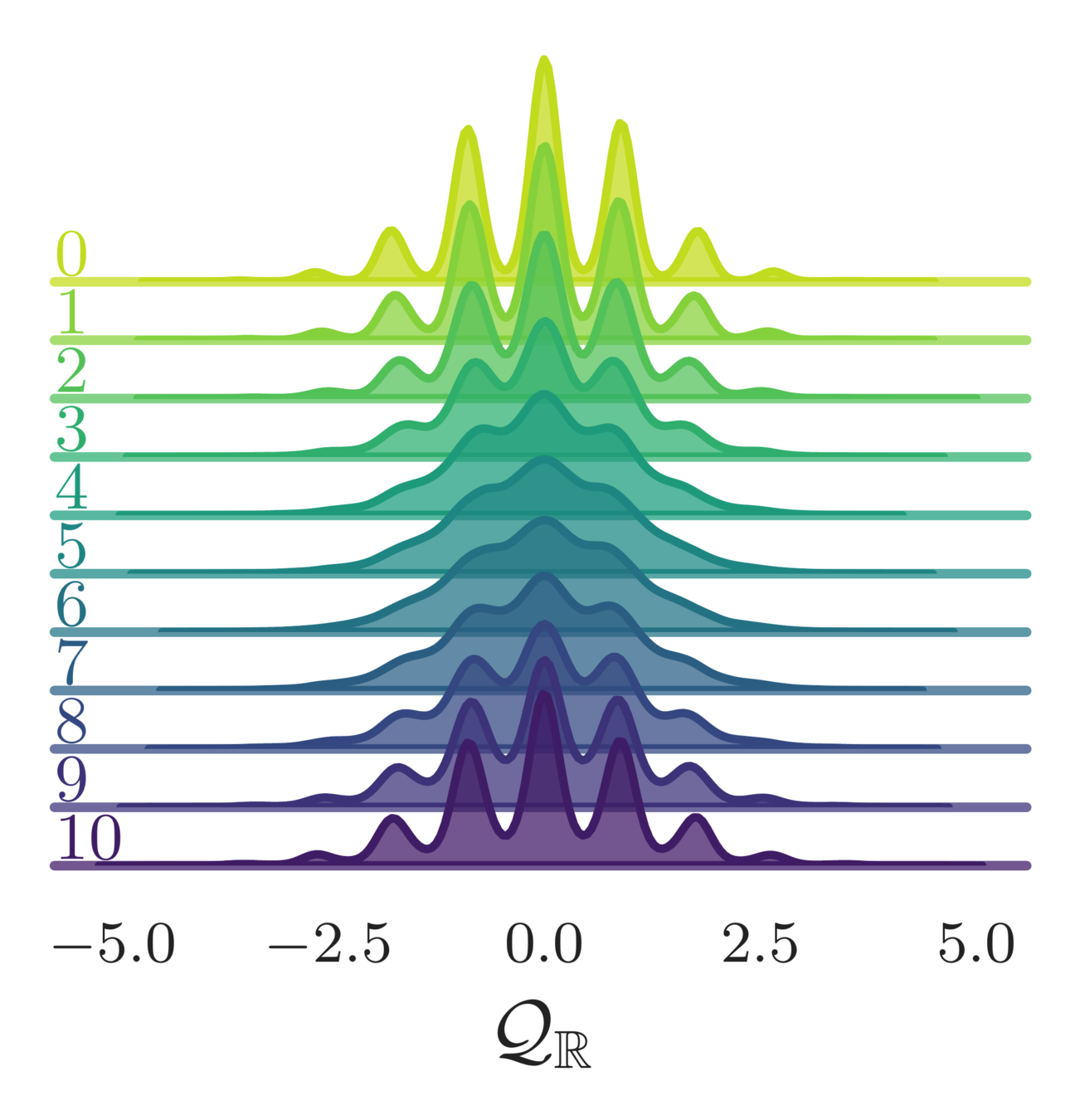

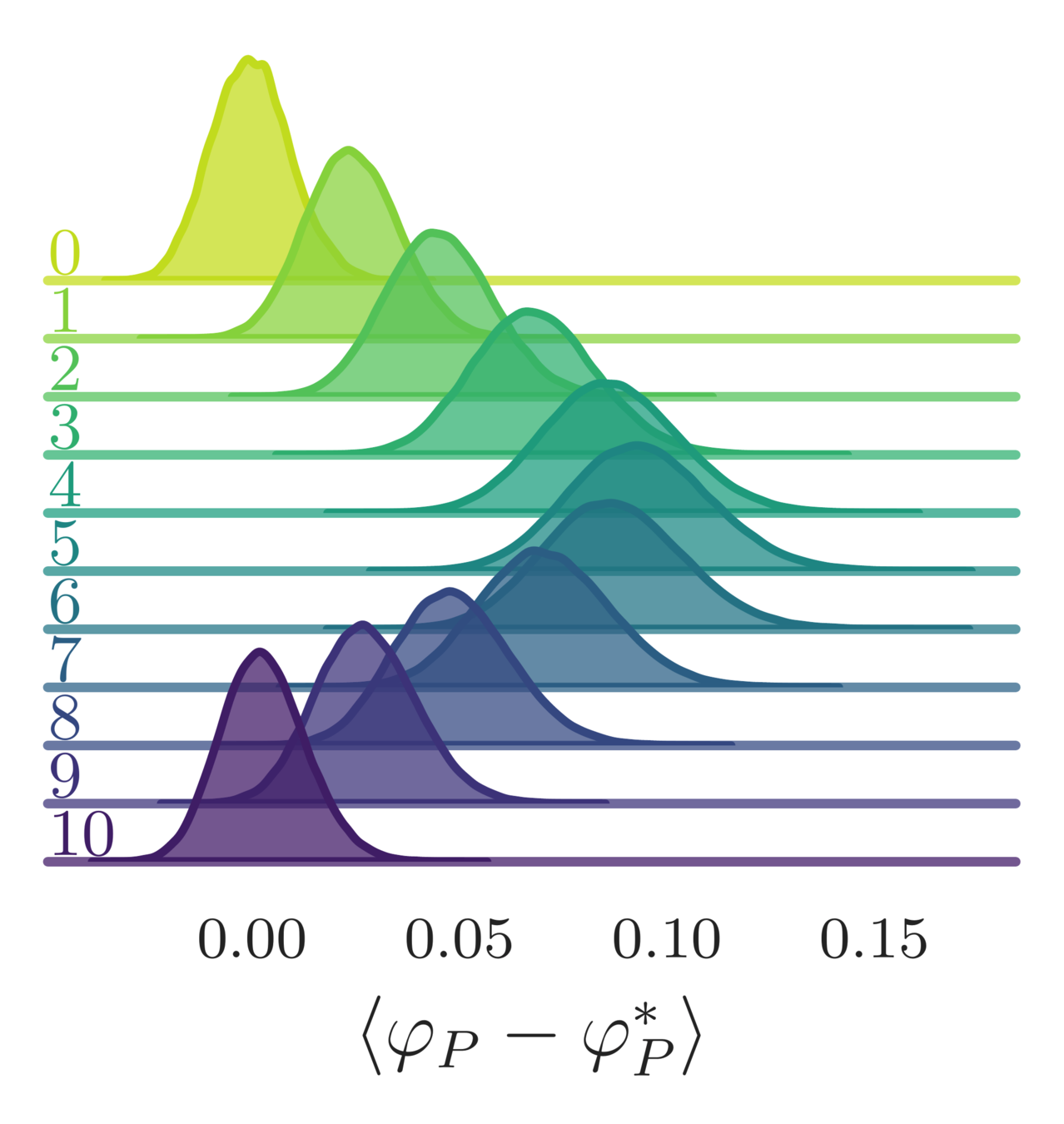

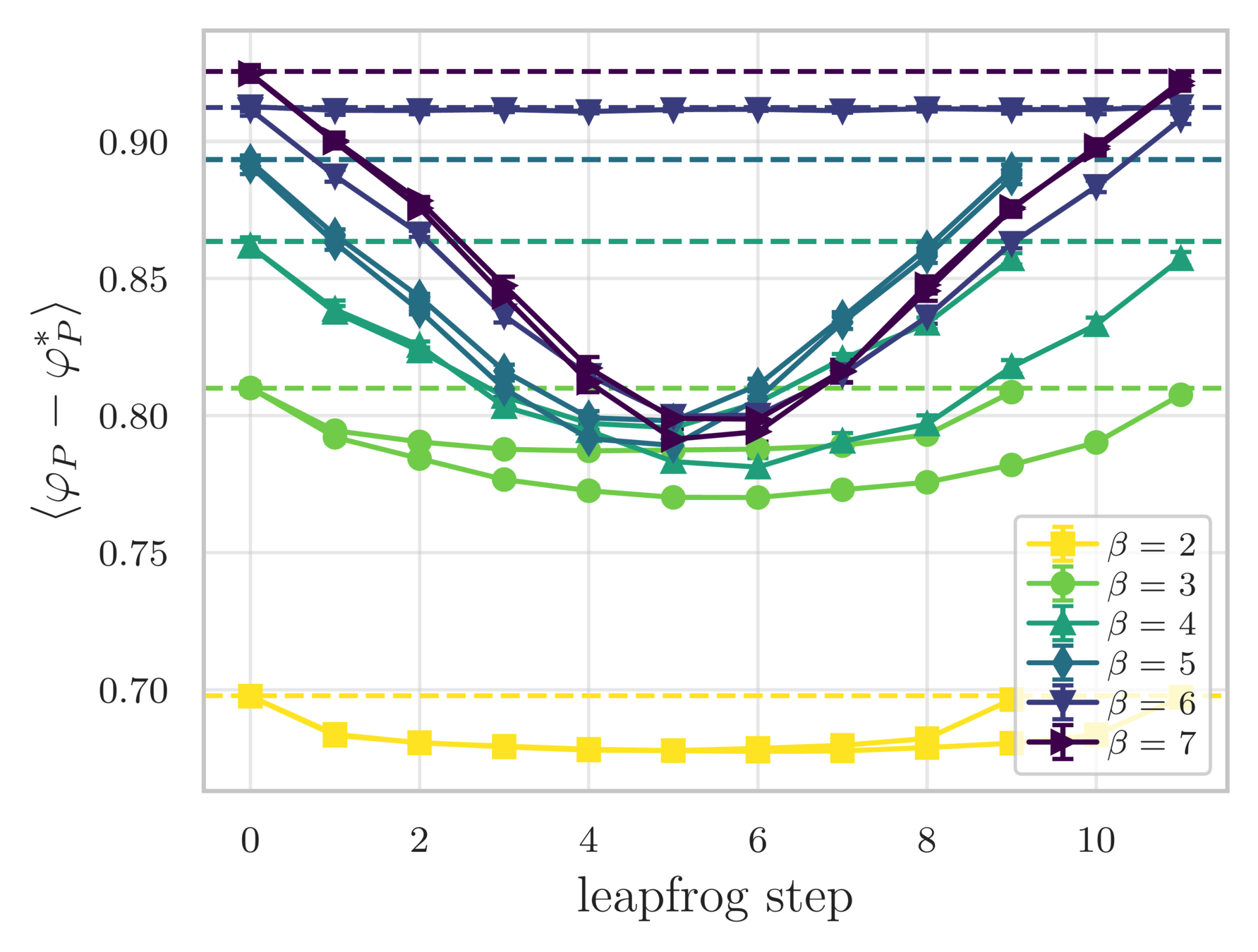

- Look at how different quantities evolve over a single trajectory

- See that the sampler artificially increases the energy during the first half of the trajectory (before returning to original value)

Leapfrog step

variation in the avg. plaquette

continuous topological charge

shifted energy

GMM: Autocorrelation

L2HMC

HMC

Lattice Gauge Theory

- Topological Loss Function:

\(A(\xi^{\prime}|\xi) = \) "acceptance probability"

where:

Lattice Gauge Theory

- Variation in the average plaquette, \(\langle\varphi_{P}-\varphi^{*}\rangle\)

- where \(\varphi^{*} = I_{1}(\beta)/I_{0}(\beta)\) is the exact (\(\infty\)-volume) result

leapfrog step

(MD trajectory)

Topological charge history

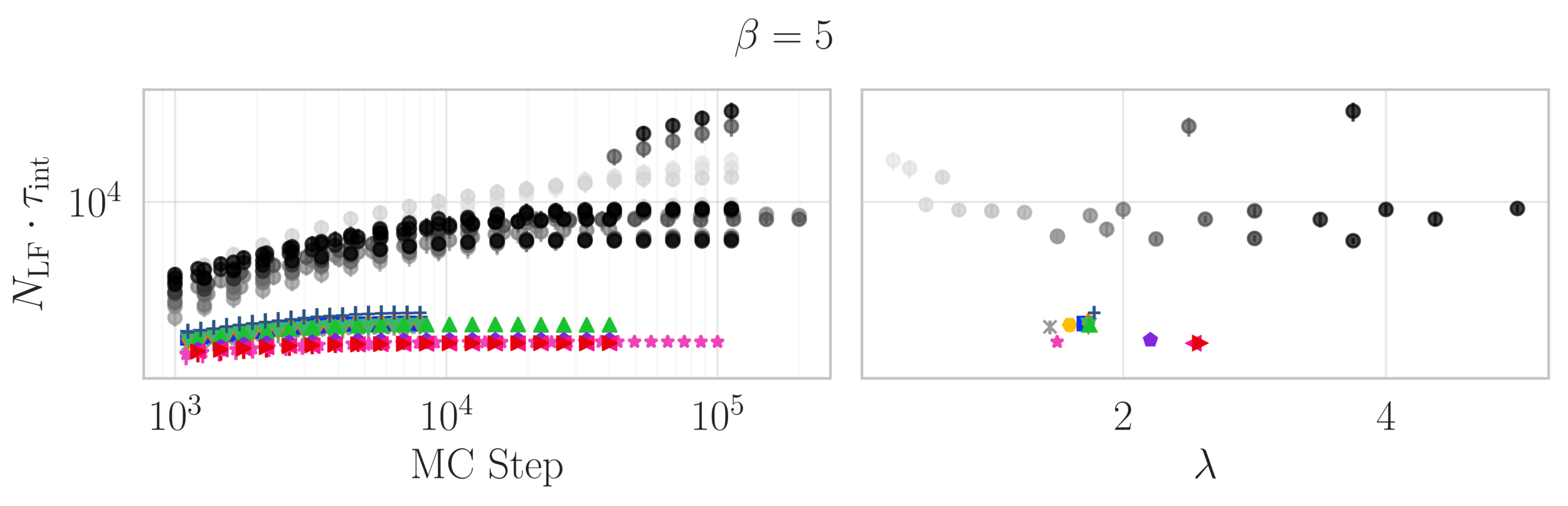

~ cost / step

continuum limit

Estimate of the Integrated autocorrelation time of \(\mathcal{Q}_{\mathbb{R}}\)

Generalized Leapfrog Update

Jacobian:

\(\left|\frac{\partial v''_{k}}{\partial v_{k}}\right|=\exp\left(\frac{1}{2}\varepsilon^{k}_{v} s_{v}^{k}(\zeta_{v_{k}})\right)\), \(\left|\frac{\partial x''_{k}}{\partial x_{k}}\right|=\exp\left(\varepsilon^{k}_{v} s_{v}^{k}(\zeta_{v_{k}})\right)\)

*

*

Update complementary indices determined by and

L2HMC: Generalized Leapfrog

Main idea

- Introduce \((s_{x}, t_{x}, q_{x})\), \((s_{v}, t_{v}, q_{v})\) into the leapfrog updates, which are parameterized by weights \(\theta\) in a neural network.

- Introduce a binary direction variable, \(d\sim\mathcal{U}(+,-)\)

- Denote a complete state by \(\xi = (x, v, d)\), with target distribution \(p(\xi)\):

Jacobian:

\(\left|\frac{\partial v''_{k}}{\partial v_{k}}\right|=\exp\left(\frac{1}{2}\varepsilon^{k}_{v} s_{v}^{k}(\zeta_{v_{k}})\right)\), \(\left|\frac{\partial x''_{k}}{\partial x_{k}}\right|=\exp\left(\varepsilon^{k}_{v} s_{v}^{k}(\zeta_{v_{k}})\right)\)

*

*

Text

Example: GMM \(\in\mathbb{R}^{2}\)

\(A(\xi',\xi)\) = acceptance probability

\(A(\xi'|\xi)\cdot\delta(\xi',\xi)\) = avg. distance

Note:

- Define the squared jump distance:

- Maximize expected squared jump distance:

Encourages large moves

Penalizes small moves

HMC

L2HMC

where:

L2HMC: Generalized Leapfrog

Generalized \(x\)-update:

Momentum (\(v_{k}\)) scaling

(\(v\)) independent

Gradient \(\partial_{x}S(x_{k})\) scaling

Translation

(\(m_{t}\)\(\odot x\)) independent

Neural Networks

Generalized \(v\)-update:

(masks)

Network Architecture

(\(\alpha_{s}, \alpha_{q}\) are trainable parameters)

\(x\), \(v\) \(\in \mathbb{R}^{n}\)

\(s_{x}\),\(q_{x}\),\(t_{x}\) \(\in \mathbb{R}^{n}\)

4096

8192

1024

2048

512

Scaling test: Training

\(4096 \sim 1.73\times\)

\(8192 \sim 2.19\times\)

\(1024 \sim 1.04\times\)

\(2048 \sim 1.29\times\)

\(512\sim 1\times\)

Scaling test: Training

Scaling test: Training

\(8192\sim \times\)

4096

1024

2048

512

Scaling test: Inference

L2HMC: Generalized Leapfrog

- Define (\(v\)-independent): \(\zeta_{v_{k}} \equiv (x_{k}, \partial_{x}S(x_{k}), \tau(k))\)

Momentum scaling

Gradient scaling

Translation

- Introduce generalized \(v\)-update, \(\Gamma^{+}_{k}(v_{k};\zeta_{v_{k}})\):

- Define (\(x\)-independent): \(\zeta_{x_{k}} = (x_{k}, v_{k}, \tau(k))\)

- Introduce generalized \(x\)-update, \(\Lambda^{+}_{k}(x_{k};\zeta_{x_{k}})\)

momentum

position

L2HMC: Modified Leapfrog

- Split the position, \(x\), update into two sub-updates:

- Introduce a binary mask \(m^{t} \in\{0, 1\}^{n}\) and its complement \(\bar{m}^{t}\).

- \(m^{t}\) is drawn uniformly from the set of binary vectors satisfying \(\sum_{i=1}^{n}m_{i}^{t} = \lfloor{\frac{n}{2}\rfloor}\) (i.e. half of the entries of \(m^{t}\) are 0 and half are 1.)

- Introduce a binary direction variable \(d \in \{-1, 1\}\), drawn from a uniform distribution.

- Denote the complete augmented state as \(\xi \equiv (x, v, d)\).

(forward direction, \(d = +1\))

Momentum scaling

Gradient scaling

Translation

inputs

L2HMC: Modified Leapfrog

- Writing the action of the new leapfrog integrator as an operator \(\mathbf{L}_{\theta}\), parameterized by \(\theta\).

- Applying this operator \(M\) times successively to \(\xi\):

- The "flip" operator \(\mathbf{F}\) reverses \(d\): \(\mathbf{F}\xi = (x, v, -d)\).

- Write the complete dynamics step as:

(trajectory length)

L2HMC: Accept/Reject

- Fortunately, the Jacobian can be computed efficiently, and only depends on \(S_{x}, S_{v}\) and all the state variables, \(\zeta_{i}\).

- This has the effect of deforming the energy landscape:

- Accept the proposed configuration, \(\xi^{\prime}\) with probability:

- \(A(\xi^{\prime}|\xi) = \min{\left(1, \frac{p(\mathbf{FL}_{\theta}\xi)}{p(\xi)}\left|\frac{\partial\left[\mathbf{FL}\xi\right]}{\partial\xi^{T}}\right|\right)}\)

\(|\mathcal{J}| \neq 1\)

Unlike HMC,

L2HMC: Loss function

Encourages typical moves to be large

Penalizes sampler if unable to move effectively

scale parameter

"distance" between \(\xi, \xi^{\prime}\): \(\delta(\xi, \xi^{\prime}) = \|x - x^{\prime}\|^{2}_{2}\)

-

Idea: MINIMIZE the autocorrelation time (time needed for samples to be independent).

- Done by MAXIMIZING the "distance" traveled by the integrator.

- Note:

\(\delta \times A = \) "expected" distance

L2HMC: \(U(1)\) Lattice Gauge Theory

Wilson action:

where:

L2HMC: \(U(1)\) Lattice Gauge Theory

Wilson action:

where:

L2HMC

HMC

L2HMC

HMC

Thanks for listening!

Interested?

github.com/saforem2/l2hmc-qcd

Machine Learning in Lattice QCD

Sam Foreman

02/10/2020

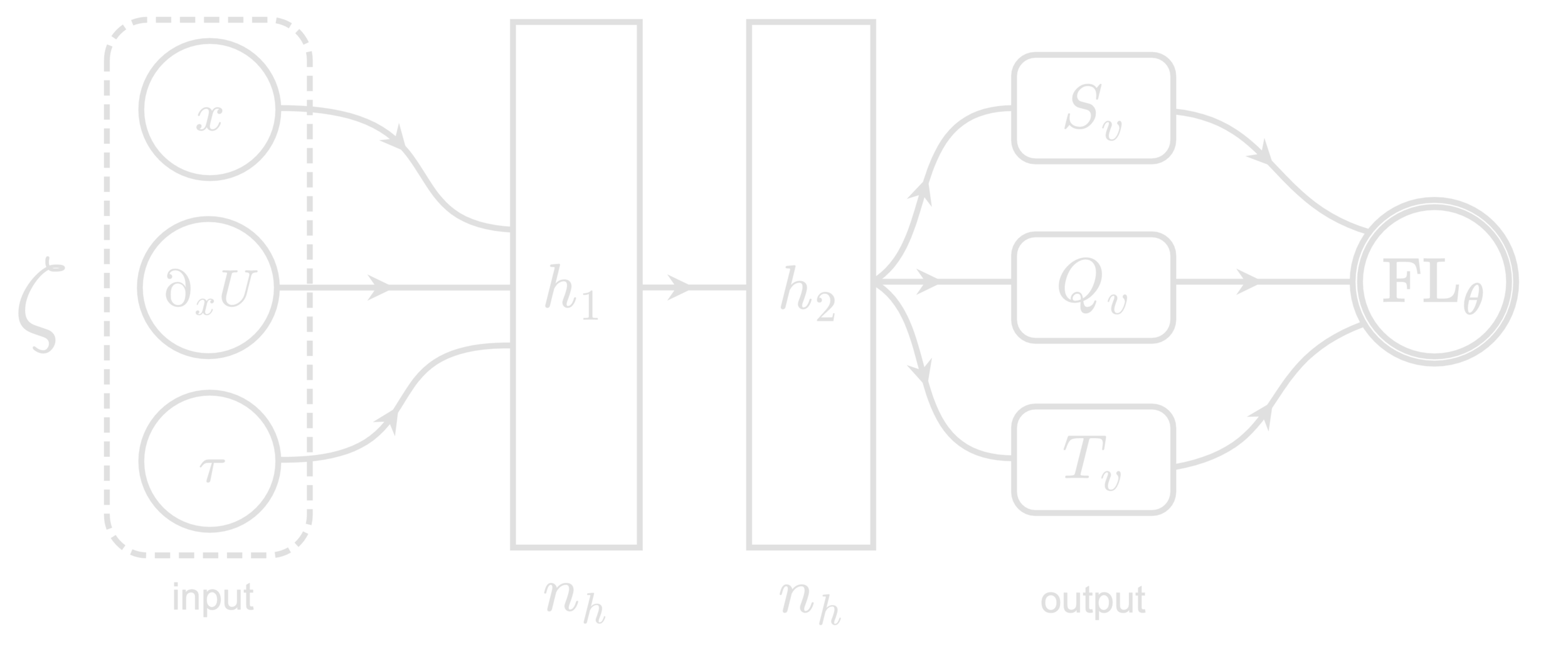

Network Architecture

Build model,

initialize network

Run dynamics, Accept/Reject

Calculate

Backpropagate

Finished

training?

Save trained

model

Run inference

on saved model

Train step

Hamiltonian Monte Carlo (HMC)

- Integrating Hamilton's equations allows us to move far in state space while staying (roughly) on iso-probability contours of \(p(x, v)\)

Integrate \(H(x, v)\):

\(t \longrightarrow t + \varepsilon\)

Project onto target parameter space \(p(x, v) \longrightarrow p(x)\)

\(v \sim p(v)\)

Markov Chain Monte Carlo (MCMC)

- Goal: Generate an ensemble of independent samples drawn from the desired target distribution \(p(x)\).

- This is done using the Metropolis-Hastings accept/reject algorithm:

-

Given:

- Initial distribution, \(\pi_{0}\)

- Proposal distribution, \(q(x^{\prime}|x)\)

-

Update:

- Sample \(x^{\prime} \sim q(\cdot | x)\)

- Accept \(x^{\prime}\) with probability \(A(x^{\prime}|x)\)

if \(q(x^{\prime}|x) = q(x|x^{\prime})\)

HMC: Leapfrog Integrator

- Integrate Hamilton's equations numerically using the leapfrog integrator.

- The leapfrog integrator proceeds in three steps:

Update momenta (half step):

Update position (full step):

Update momenta (half step):

Issues with MCMC

- Need to wait for the chain to "burn in" (become thermalized)

- Nearby configurations on the chain are correlated with each other.

- Multiple steps needed to produce independent samples ("mixing time")

- Measurable via integrated autocorrelation time, \(\tau^{\mathrm{int}}_{\mathcal{O}}\)

- Multiple steps needed to produce independent samples ("mixing time")

Smaller \(\tau^{\mathrm{int}}_{\mathcal{O}}\longrightarrow\) less computational cost!

correlated!

burn-in

L2HMC: Learning to HMC

- L2HMC generalizes HMC by introducing 6 new functions, \(S_{\ell}, T_{\ell}, Q_{\ell}\), for \(\ell = x, v\) into the leapfrog integrator.

- Given an analytically described distribution, L2HMC provides a statistically exact sampler, with highly desirable properties:

- Fast burn-in.

- Fast mixing.

Ideal for lattice QCD due to critical slowing down!

-

Idea: MINIMIZE the autocorrelation time (time needed for samples to be independent).

- Can be done by MAXIMIZING the "distance" traveled by the integrator.

HMC: Leapfrog Integrator

- Write the action of the leapfrog integrator in terms of an operator \(L\), acting on the state \(\xi \equiv (x, v)\):

- The acceptance probability is then given by:

- Introduce a "momentum-flip" operator, \(\mathbf{F}\):

Determinant of the Jacobian, \(\|\mathcal{J}\|\)

(for HMC)

L2HMC: Generalized Leapfrog

- Define (\(v\)-independent): \(\zeta_{v_{k}} \equiv (x_{k}, \partial_{x}S(x_{k}), \tau(k))\)

Momentum scaling

Gradient scaling

Translation

- Introduce generalized \(v\)-update, \(\Gamma^{+}_{k}(v_{k};\zeta_{v_{k}})\):

- For \(\zeta_{x_{k}} = (x_{k}, v_{k}, \tau(k))\)

- And the generalized \(x\)-update, \(\Lambda^{+}_{k}(x_{k};\zeta_{x_{k}})\)

momentum

position

Copy of DLHMC_MIT_lattice

By Sam Foreman

Copy of DLHMC_MIT_lattice

Machine Learning in Lattice QCD

- 376