Neural Field Transformations

(for lattice gauge theory)

Sam Foreman

2021-03-11

Introduction

- Lattice QCD:

  - Non-perturbative approach to solving QCD, the theory of the strong interaction between quarks and gluons

- Calculations in Lattice QCD proceed in 3 steps:

  - Gauge field generation: Use Markov Chain Monte Carlo (MCMC) methods for sampling independent gauge field (gluon) configurations.

  - Propagator calculations: Compute how quarks propagate in these fields ("quark propagators")

  - Contractions: Method for combining quark propagators into correlation functions and observables.

Motivation: Lattice QCD

- Generating independent gauge configurations is a major bottleneck in the HEP/Lattice QCD workflow

- As the lattice spacing \(a\rightarrow 0\) (the continuum limit), configurations get stuck

Markov Chain Monte Carlo (MCMC)

- Goal: Draw independent samples from a target distribution, \(p(x)\)

- Starting from some initial state \(x_{0}\) (randomly chosen), we generate proposal configurations \(x^{\prime}\)

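- Accept or reject each proposal with the Metropolis-Hastings acceptance criterion, \(A(x^{\prime}|x) = \min\left\{1, \frac{p(x^{\prime})}{p(x)}\right\}\) (for a symmetric proposal)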

Metropolis-Hastings: Accept/Reject

import numpy as np

def metropolis_hastings(p, steps=1000):
    x = 0.  # initialize config
    samples = np.zeros(steps)
    for i in range(steps):
        x_prime = x + np.random.randn()  # proposed config
        if np.random.rand() < p(x_prime) / p(x):  # compute A(x'|x)
            x = x_prime  # accept proposed config
        samples[i] = x  # accumulate configs
    return samples
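Since the target is later written as \(p(x)\propto e^{-S(x)}\), the same accept/reject step can be phrased in terms of the action. The sketch below is an illustration only: the name `metropolis_hastings_action`, the `step_size` and `seed` parameters, and the Gaussian test action are assumptions, not part of the original talk. Working with \(\log A = \min(0, S(x) - S(x^{\prime}))\) avoids dividing by a vanishing \(p(x)\).

```python
import numpy as np

def metropolis_hastings_action(S, steps=1000, step_size=1.0, seed=0):
    """Random-walk Metropolis sampler for p(x) proportional to exp(-S(x))."""
    rng = np.random.default_rng(seed)
    x = 0.0                                   # initial config
    samples = np.zeros(steps)
    n_accept = 0
    for i in range(steps):
        x_prime = x + step_size * rng.normal()          # symmetric proposal
        log_A = min(0.0, S(x) - S(x_prime))             # log of min(1, p(x')/p(x))
        if np.log(rng.uniform()) < log_A:
            x = x_prime                                 # accept proposed config
            n_accept += 1
        samples[i] = x                                  # a rejection repeats x
    return samples, n_accept / steps

# Illustrative target: S(x) = x^2 / 2, i.e. a unit Gaussian.
samples, acc_rate = metropolis_hastings_action(lambda x: 0.5 * x**2, steps=20000)
```

For this test action the sample mean and variance should come out close to 0 and 1.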


Issues with MCMC

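- Generate proposal configurations

- Construct chain

- Account for thermalization ("burn-in") by discarding the initial configurations

- Account for correlations between states ("thinning") by keeping only a subset of the remaining configurations

- All of these dropped configurations make this inefficient!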
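A minimal sketch of what burn-in and thinning cost, assuming the chain is stored as an array. The helper name `thin_chain` and the values `n_burn=200` and `stride=10` are illustrative assumptions, not numbers from the talk.

```python
import numpy as np

def thin_chain(chain, n_burn, stride):
    """Drop the first n_burn states ("burn-in"), then keep every stride-th state ("thinning")."""
    chain = np.asarray(chain)
    return chain[n_burn::stride]

chain = np.arange(1000)                 # stand-in for 1000 generated configurations
kept = thin_chain(chain, n_burn=200, stride=10)
fraction_kept = len(kept) / len(chain)  # fraction of the work that survives
```

With these illustrative settings only 80 of the 1000 generated configurations are kept.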

Hamiltonian Monte Carlo (HMC)

- Target distribution: \(p(x)\propto e^{-S(x)}\), where \(S(x)\) is the action (potential energy)

- Introduce fictitious momentum: \(v\sim\mathcal{N}(0, 1)\)

- Joint target distribution, \(p(x, v)\):

\(p(x, v) = p(x)\cdot p(v) \propto e^{-S(x)}\cdot e^{-\frac{1}{2}v^{T}v} = e^{-\mathcal{H}(x,v)}\), with \(\mathcal{H}(x, v) = S(x) + \frac{1}{2}v^{T}v\)

- The joint \((x, v)\) system obeys Hamilton's Equations:

\(\dot{x} = \frac{\partial\mathcal{H}}{\partial v}\)

\(\dot{v} = -\frac{\partial\mathcal{H}}{\partial x}\)

HMC: Leapfrog Integrator

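Hamilton's Equations:

\(\dot{x}=\frac{\partial\mathcal{H}}{\partial v}\)

\(\dot{v}=-\frac{\partial\mathcal{H}}{\partial x}\)

1. Half-step momentum update: \(v_{1/2} = v - \frac{\varepsilon}{2}\,\partial_{x}S(x)\)

2. Full-step position update: \(x^{\prime} = x + \varepsilon\, v_{1/2}\)

3. Half-step momentum update: \(v^{\prime} = v_{1/2} - \frac{\varepsilon}{2}\,\partial_{x}S(x^{\prime})\)

where \(\varepsilon\) is the step size.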
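The three leapfrog steps can be sketched as follows, assuming \(\mathcal{H}(x, v) = S(x) + \frac{1}{2}v^{T}v\) so that \(\partial\mathcal{H}/\partial v = v\) and \(\partial\mathcal{H}/\partial x = \partial_{x}S\). The function names, the step size `eps`, and the quadratic test action are assumptions made for illustration.

```python
import numpy as np

def leapfrog(x, v, grad_S, eps, n_steps):
    """Integrate Hamilton's equations for H = S(x) + v.v/2 with the leapfrog scheme."""
    for _ in range(n_steps):
        v = v - 0.5 * eps * grad_S(x)   # 1. half-step momentum update
        x = x + eps * v                 # 2. full-step position update
        v = v - 0.5 * eps * grad_S(x)   # 3. half-step momentum update
    return x, v

def hmc_step(x, S, grad_S, eps, n_steps, rng):
    """One HMC update: resample v, run a trajectory, accept/reject on the change in H."""
    v = rng.normal(size=np.shape(x))                 # v ~ N(0, 1)
    H_old = S(x) + 0.5 * np.sum(v**2)
    x_new, v_new = leapfrog(x, v, grad_S, eps, n_steps)
    H_new = S(x_new) + 0.5 * np.sum(v_new**2)
    if np.log(rng.uniform()) < H_old - H_new:        # A = min(1, exp(H_old - H_new))
        return x_new, True
    return x, False

# Illustrative target: S(x) = |x|^2 / 2 (independent unit Gaussians).
S = lambda x: 0.5 * np.sum(x**2)
grad_S = lambda x: x
rng = np.random.default_rng(0)
x, n_accept, chain = np.zeros(2), 0, []
for _ in range(1000):
    x, accepted = hmc_step(x, S, grad_S, eps=0.1, n_steps=10, rng=rng)
    n_accept += accepted
    chain.append(x)
acc_rate = n_accept / 1000
```

Because the leapfrog scheme is reversible and nearly conserves \(\mathcal{H}\), the acceptance rate stays high for small `eps`.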

HMC: Issues

- Cannot easily traverse low-density zones: \(A(\xi^{\prime}|\xi)\approx 0\)

- Energy levels selected randomly: \(v\sim \mathcal{N}(0, 1)\) \(\longrightarrow\) slow mixing! (especially for Lattice QCD)

What do we want in a good sampler?

- Fast mixing

- Fast burn-in

- Mix across energy levels

- Mix between modes

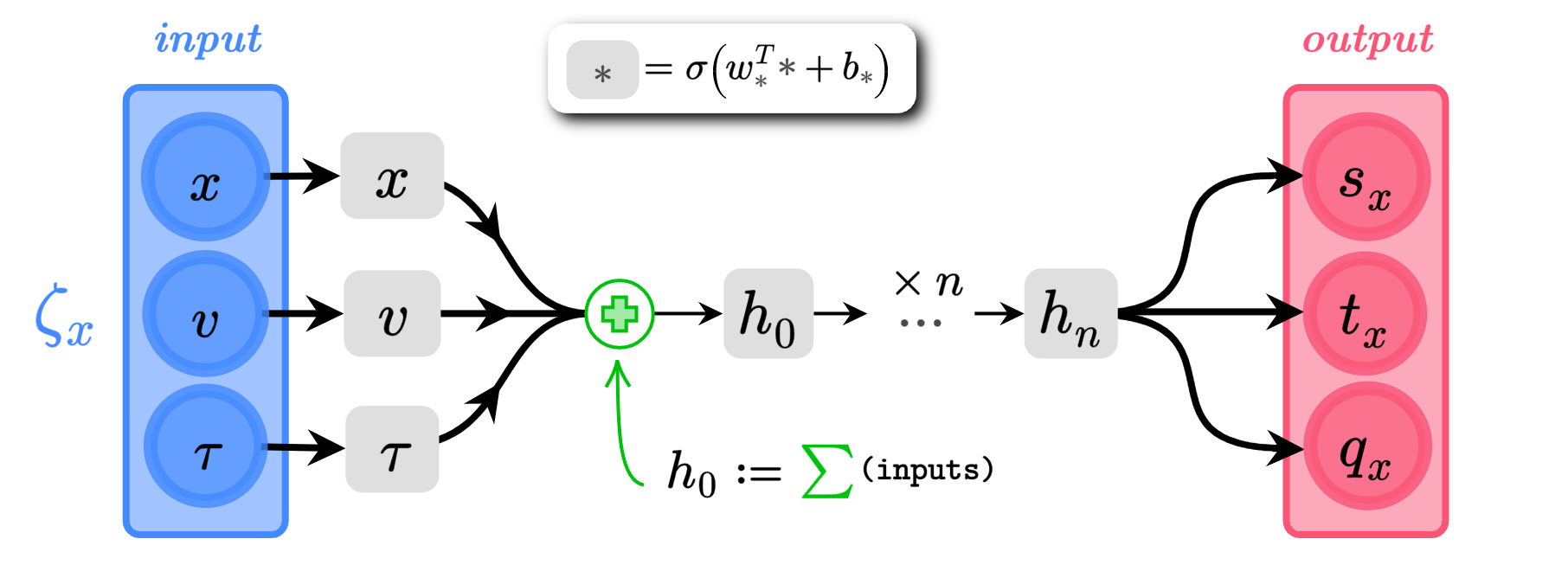

L2HMC: Generalized Leapfrog

- Main idea:

  - Introduce six auxiliary functions, \((s_{x}, t_{x}, q_{x})\), \((s_{v}, t_{v}, q_{v})\), into the leapfrog updates. These functions are parameterized by the weights \(\theta\) of a neural network.

- Notation:

  - Introduce a binary direction variable, \(d\sim\mathcal{U}(+,-)\), distributed independently of \(x\), \(v\)

  - Denote a complete state by \(\xi = (x, v, d)\), with target distribution \(p(\xi) = p(x)\cdot p(v)\cdot p(d)\)

L2HMC: Generalized Leapfrog

- Introduce generalized \(v\)-update, \(v^{\prime}_{k} = \Gamma^{+}_{k}(v_{k};\zeta_{v_{k}})\), with (\(v\)-independent) \(\zeta_{v_{k}} \equiv (x_{k}, \partial_{x}S(x_{k}), \tau(k))\)

  - Built from three learned terms: momentum scaling (\(s_{v}\)), gradient scaling (\(q_{v}\)), and translation (\(t_{v}\))

- And the generalized \(x\)-update, \(x^{\prime}_{k} = \Lambda^{+}_{k}(x_{k};\zeta_{x_{k}})\), with \(\zeta_{x_{k}} = (x_{k}, v_{k}, \tau(k))\)

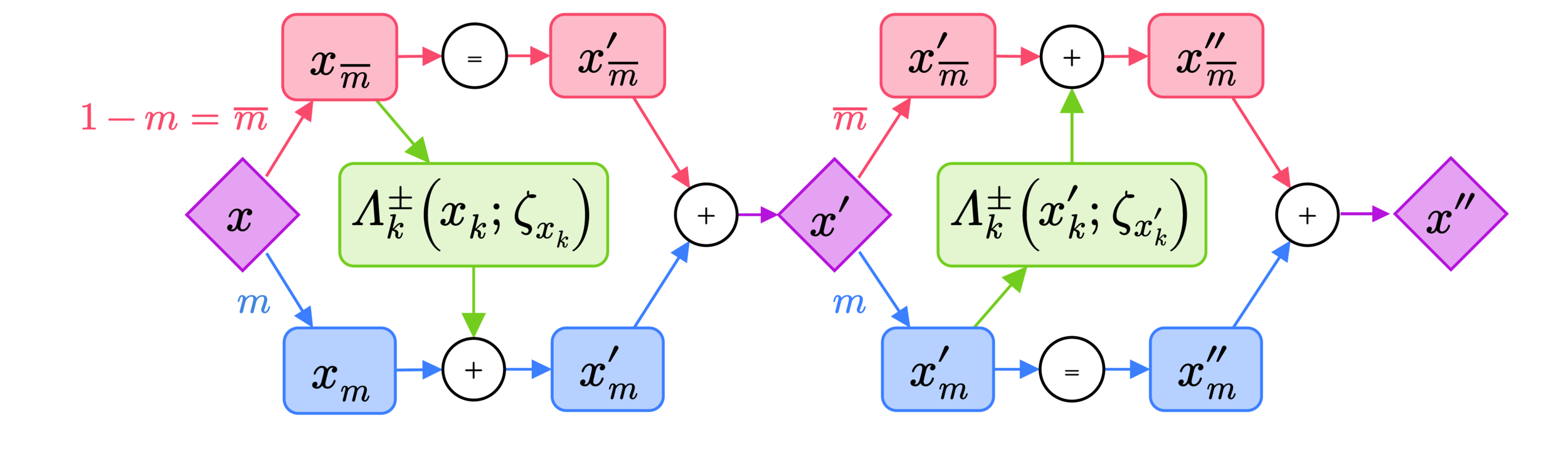

L2HMC: Generalized Leapfrog

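- Complete (generalized) update:

1. Half-step momentum update

2. Full-step update of half of the position components

3. Full-step update of the remaining half of the position components

4. Half-step momentum update

Note: the position update is split into two half-updates via a binary mask \(m^t\) (and its complement).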
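A minimal sketch of the complete generalized update in the forward direction (\(d = +\)). The explicit formulas did not survive on these slides, so the functional form below is an assumption that follows the original L2HMC formulation as I understand it: scaling enters through exponentials of \(s\) and \(q\), and \(t\) is an additive translation. All names (`zero_net`, `v_update`, `x_update`, `generalized_leapfrog_step`) are hypothetical. `zero_net` stands in for a trained network and returns \(s = q = t = 0\), in which case the update should reduce to an ordinary leapfrog step. The log-Jacobian terms needed for the acceptance probability are omitted.

```python
import numpy as np

def zero_net(*inputs):
    """Hypothetical stand-in for a trained network: returns s = q = t = 0."""
    shape = np.shape(inputs[0])
    return np.zeros(shape), np.zeros(shape), np.zeros(shape)

def v_update(x, v, grad_S, eps, t, v_net):
    """Generalized half-step momentum update (assumed form)."""
    g = grad_S(x)
    s, q, tr = v_net(x, g, t)          # zeta_v = (x, dS/dx, tau): independent of v
    # momentum scaling, gradient scaling, translation
    return v * np.exp(0.5 * eps * s) - 0.5 * eps * (g * np.exp(eps * q) + tr)

def x_update(x, v, eps, t, mask, x_net):
    """Generalized position update of the components selected by mask (assumed form)."""
    s, q, tr = x_net((1 - mask) * x, v, t)   # network sees only the components held fixed
    x_new = x * np.exp(eps * s) + eps * (v * np.exp(eps * q) + tr)
    return (1 - mask) * x + mask * x_new

def generalized_leapfrog_step(x, v, grad_S, eps, t, mask, v_net=zero_net, x_net=zero_net):
    v = v_update(x, v, grad_S, eps, t, v_net)      # half-step momentum update
    x = x_update(x, v, eps, t, mask, x_net)        # update components where mask == 1
    x = x_update(x, v, eps, t, 1 - mask, x_net)    # update the complementary components
    v = v_update(x, v, grad_S, eps, t, v_net)      # half-step momentum update
    return x, v

# Consistency check against the ordinary leapfrog step for S(x) = |x|^2 / 2.
grad_S = lambda x: x
x0 = np.array([1.0, 2.0, 3.0, 4.0])
v0 = np.array([0.1, 0.2, 0.3, 0.4])
mask = np.array([1.0, 0.0, 1.0, 0.0])
eps = 0.1
x1, v1 = generalized_leapfrog_step(x0, v0, grad_S, eps, t=0, mask=mask)

v_half = v0 - 0.5 * eps * grad_S(x0)
x_ref = x0 + eps * v_half
v_ref = v_half - 0.5 * eps * grad_S(x_ref)
```

Starting from networks that output zeros means an untrained sampler behaves like plain HMC, which is a convenient sanity check before training.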

Network Architecture

(\(\alpha_{s}, \alpha_{q}\) are trainable parameters)

\(x\), \(v\) \(\in \mathbb{R}^{d}\)

\(s_{x}\),\(q_{x}\),\(t_{x}\) \(\in \mathbb{R}^{d}\)

Loss function, \(\mathcal{L}(\theta)\)

- Goal: Maximize "expected squared jump distance" (ESJD), \(A(\xi^{\prime}|\xi)\cdot \delta(\xi^{\prime}, \xi)\):

- Define the "squared jump distance":

where:
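A minimal sketch of the ESJD objective, assuming the squared jump distance is the squared Euclidean distance between positions, \(\delta(\xi^{\prime}, \xi) = \|x^{\prime} - x\|^{2}_{2}\), as in the original L2HMC formulation. Using the negative batch mean as the loss is a simplifying assumption (the published L2HMC loss contains additional terms), and the function names are hypothetical.

```python
import numpy as np

def esjd(x, x_prime, accept_prob):
    """A(xi'|xi) * delta(xi', xi) for a batch of chains, shape (batch, dim)."""
    delta = np.sum((x_prime - x) ** 2, axis=-1)   # squared jump distance per chain
    return accept_prob * delta

def esjd_loss(x, x_prime, accept_prob):
    """Negative mean ESJD: minimizing this maximizes the expected squared jump."""
    return -np.mean(esjd(x, x_prime, accept_prob))

# Illustrative batch of two chains in three dimensions.
x = np.zeros((2, 3))
x_prime = np.ones((2, 3))
accept_prob = np.array([0.5, 1.0])
per_chain = esjd(x, x_prime, accept_prob)
loss = esjd_loss(x, x_prime, accept_prob)
```

A proposal that moves far but is always rejected contributes nothing, so the objective rewards large moves only when they are likely to be accepted.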

GMM: Autocorrelation

(Legend: L2HMC, HMC)

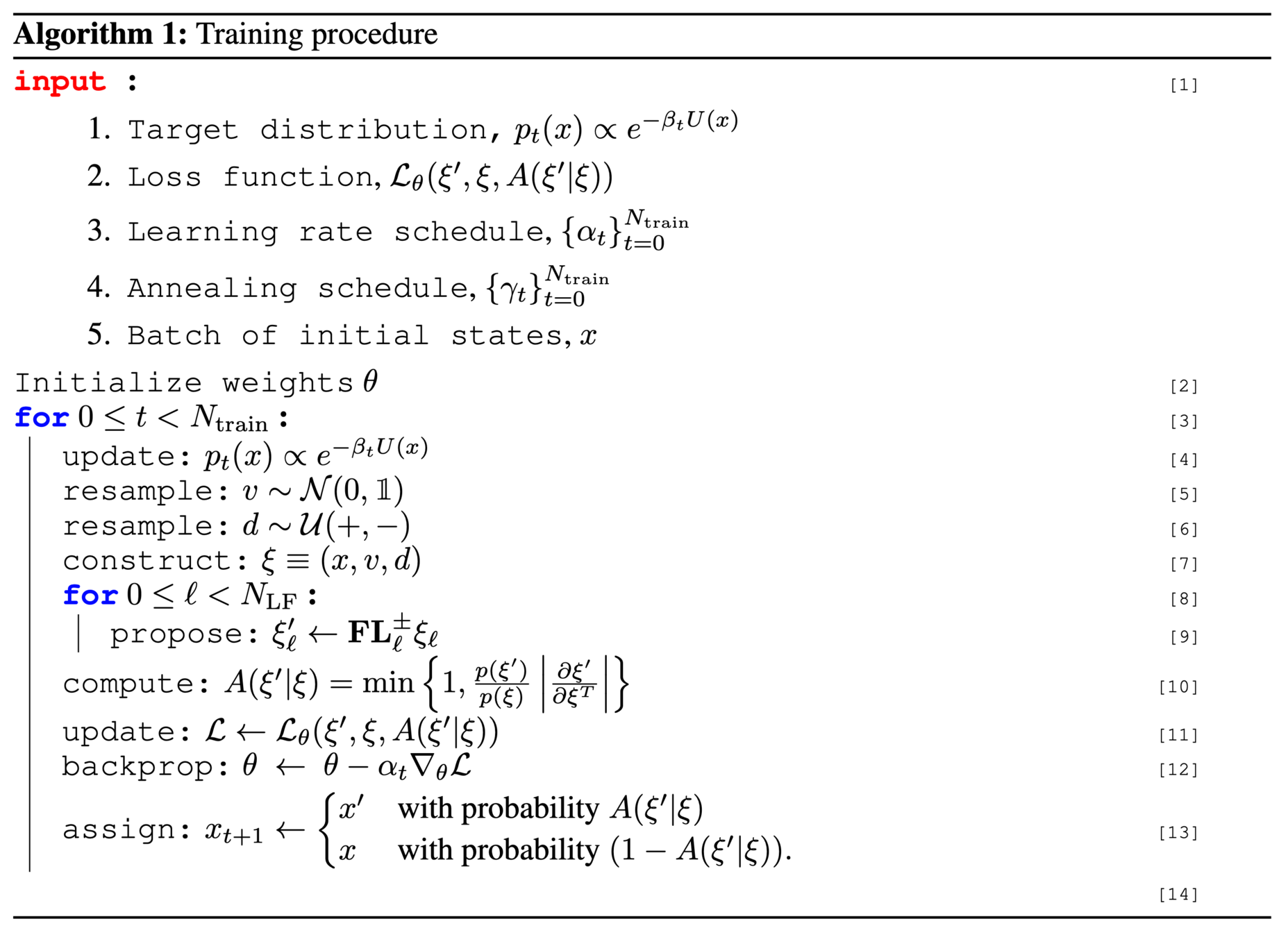

Annealing Schedule

- Introduce an annealing schedule \(\{\gamma_{t}\}\) during the training phase, e.g. \(\{\gamma_{t}\} = \{0.1, 0.2, \ldots, 0.9, 1.0\}\) (increasing, varied slowly)

- For \(|\gamma_{t}| < 1\), this helps to rescale (shrink) the energy barriers between isolated modes

- Allows our sampler to explore previously inaccessible regions of the target distribution

- Target distribution becomes: \(p_{t}(x)\propto e^{-\gamma_{t}S(x)}\)
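A small sketch of how the schedule shrinks energy barriers, assuming the annealed target takes the form \(p_{t}(x)\propto e^{-\gamma_{t}S(x)}\). The double-well action and the helper names are illustrative assumptions only.

```python
import numpy as np

gammas = np.linspace(0.1, 1.0, 10)      # {0.1, 0.2, ..., 0.9, 1.0}, increasing

def double_well(x):
    """Toy action with minima at x = -1 and x = +1 and a barrier at x = 0."""
    return 4.0 * (x**2 - 1.0) ** 2

def annealed_action(S, gamma):
    """Action of the annealed target, gamma * S(x)."""
    return lambda x: gamma * S(x)

def barrier_height(S):
    """Height of the barrier at x = 0 relative to the minimum at x = 1."""
    return S(0.0) - S(1.0)

barriers = [barrier_height(annealed_action(double_well, g)) for g in gammas]
```

The barrier between the two modes grows linearly with \(\gamma_{t}\), so early in training the sampler crosses it far more easily than it would for the true target at \(\gamma_{t} = 1\).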

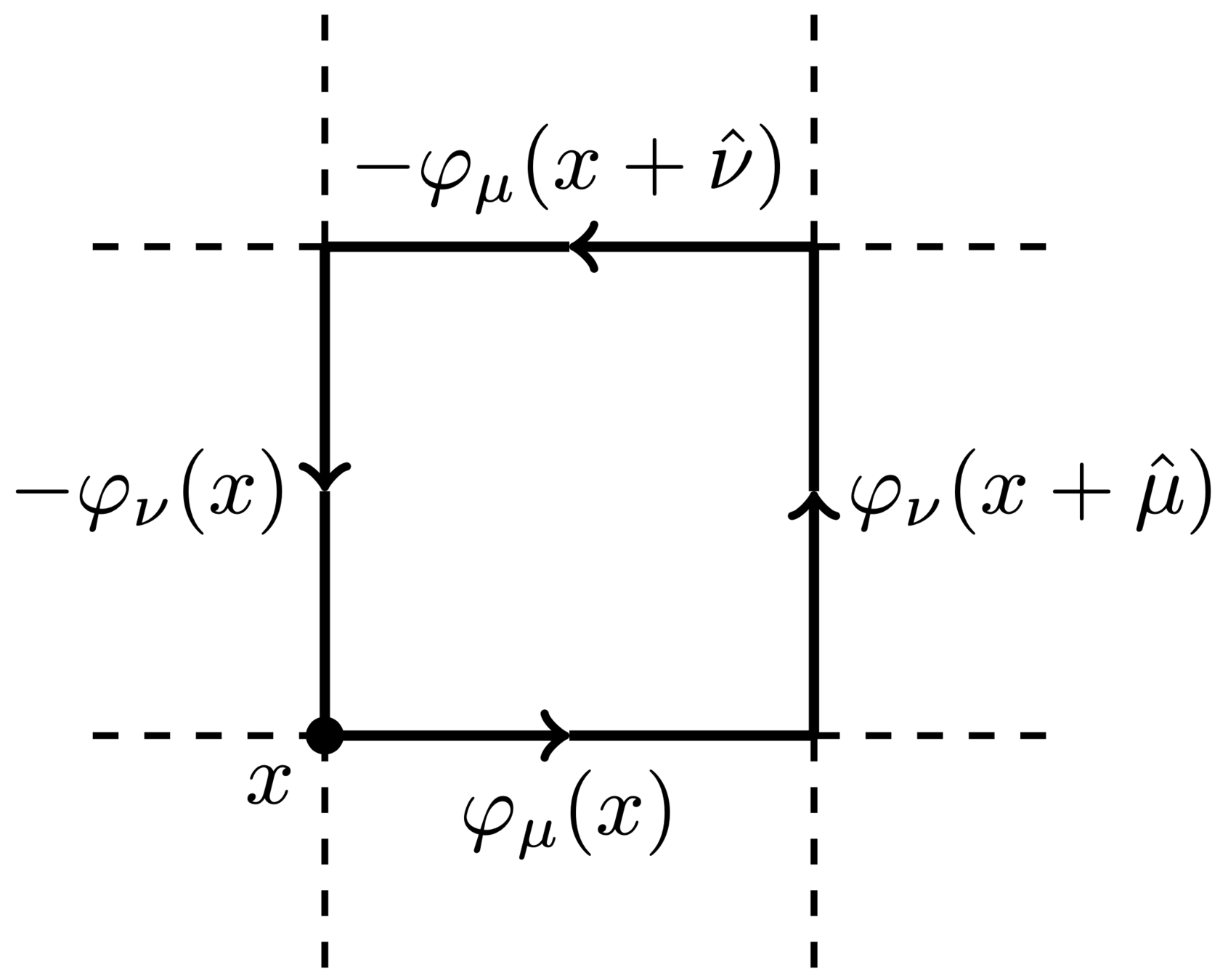

Lattice Gauge Theory

- Link variables:

- Wilson action:

- Topological charge: \(\mathcal{Q}_{\mathbb{R}}\) (real-valued) and \(\mathcal{Q}_{\mathbb{Z}}\) (integer-valued)
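The defining formulas are missing here, so the sketch below fills them in under a stated assumption: a two-dimensional U(1) gauge theory with periodic boundaries, which is consistent with the exact plaquette value \(I_{1}(\beta)/I_{0}(\beta)\) quoted later in the talk. Under that assumption the links are \(U_{\mu}(x) = e^{i\varphi_{\mu}(x)}\), the Wilson action is \(\beta\sum_{P}(1 - \cos\varphi_{P})\), and the two charges use \(\sin\varphi_{P}\) and the plaquette angle projected onto \([-\pi, \pi)\). All function names are hypothetical.

```python
import numpy as np

def plaquettes(phi):
    """Plaquette angles for link angles phi of shape (2, L, L), periodic boundaries."""
    phi0, phi1 = phi
    # phi_P(x) = phi_0(x) + phi_1(x + e0) - phi_0(x + e1) - phi_1(x)
    return phi0 + np.roll(phi1, -1, axis=0) - np.roll(phi0, -1, axis=1) - phi1

def wilson_action(phi, beta):
    return beta * np.sum(1.0 - np.cos(plaquettes(phi)))

def project_angle(theta):
    """Map angles onto the interval [-pi, pi)."""
    return (theta + np.pi) % (2.0 * np.pi) - np.pi

def topo_charge_real(phi):
    return np.sum(np.sin(plaquettes(phi))) / (2.0 * np.pi)

def topo_charge_int(phi):
    return np.sum(project_angle(plaquettes(phi))) / (2.0 * np.pi)

rng = np.random.default_rng(0)
phi_hot = rng.uniform(-np.pi, np.pi, size=(2, 8, 8))   # random ("hot") start
phi_cold = np.zeros((2, 8, 8))                         # ordered ("cold") start
q_real = topo_charge_real(phi_hot)
q_int = topo_charge_int(phi_hot)
```

On a periodic lattice the unprojected plaquette angles sum to zero, so the projected sum is a multiple of \(2\pi\) and `topo_charge_int` returns an integer up to rounding error, while `topo_charge_real` varies continuously.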

Lattice Gauge Theory

- Topological Loss Function:

where \(A(\xi^{\prime}|\xi)\) is the "acceptance probability"

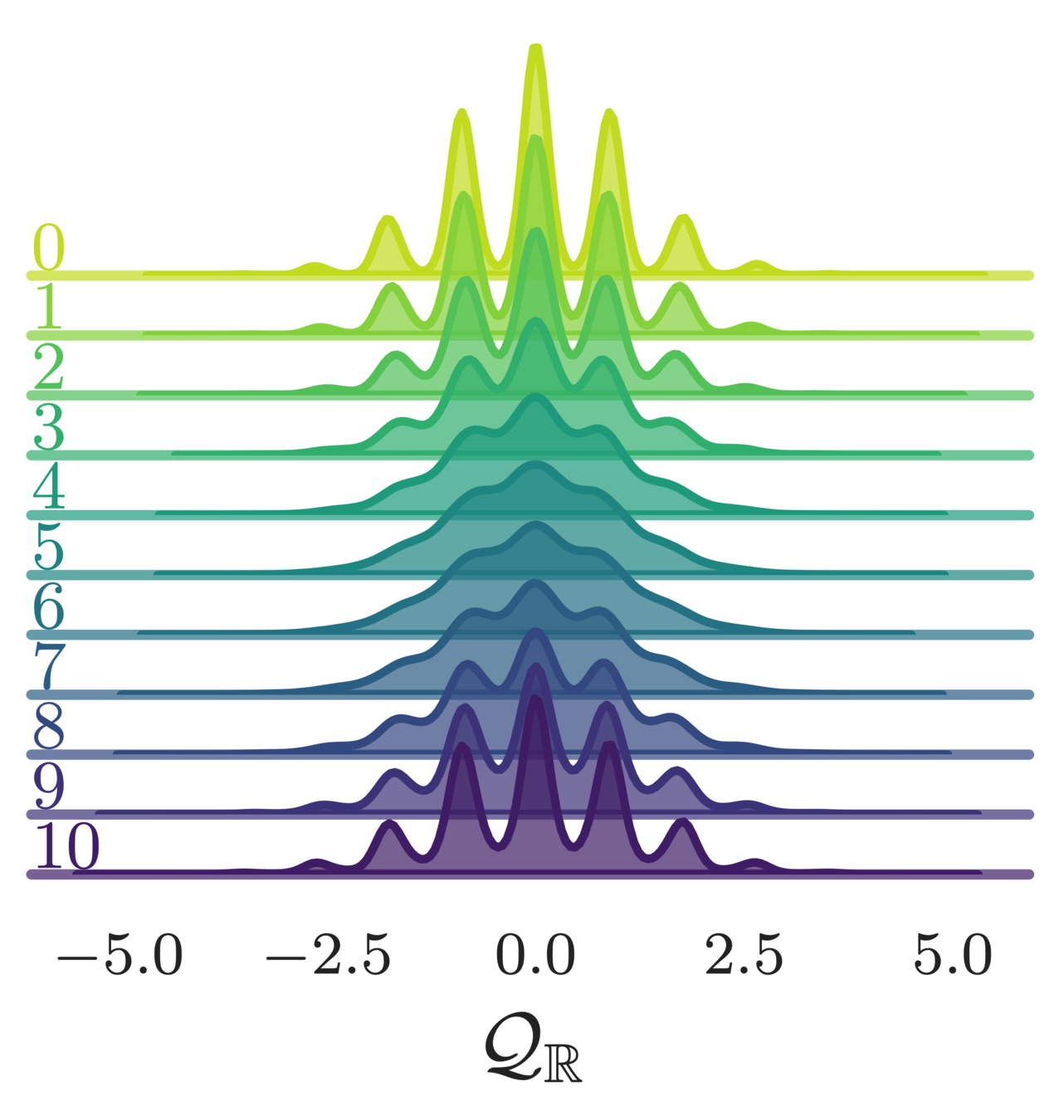

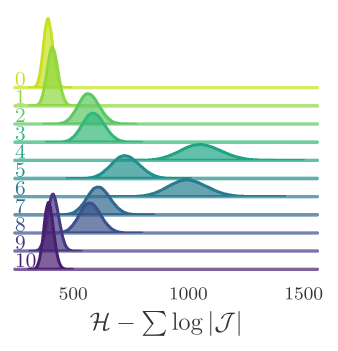

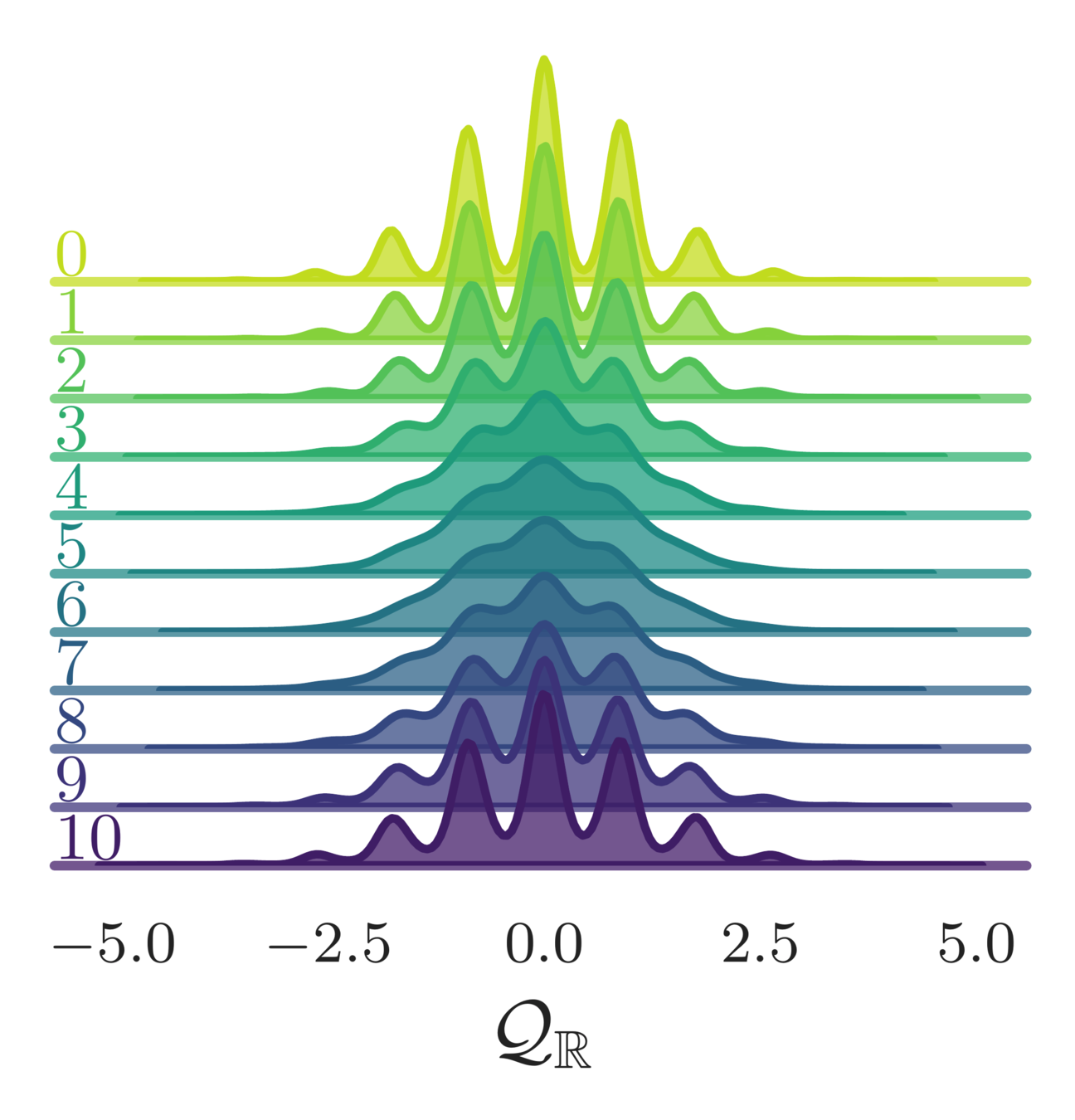

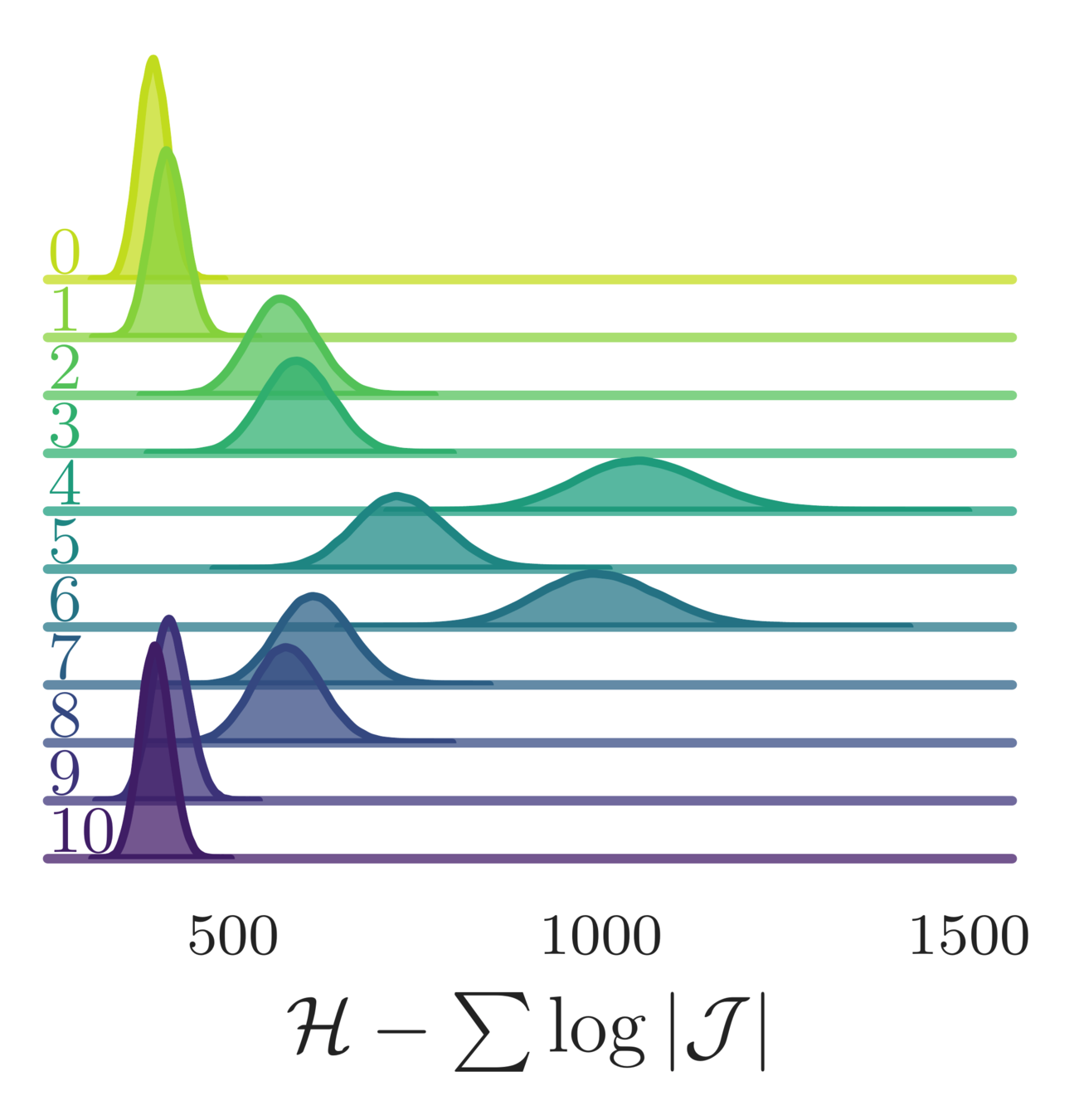

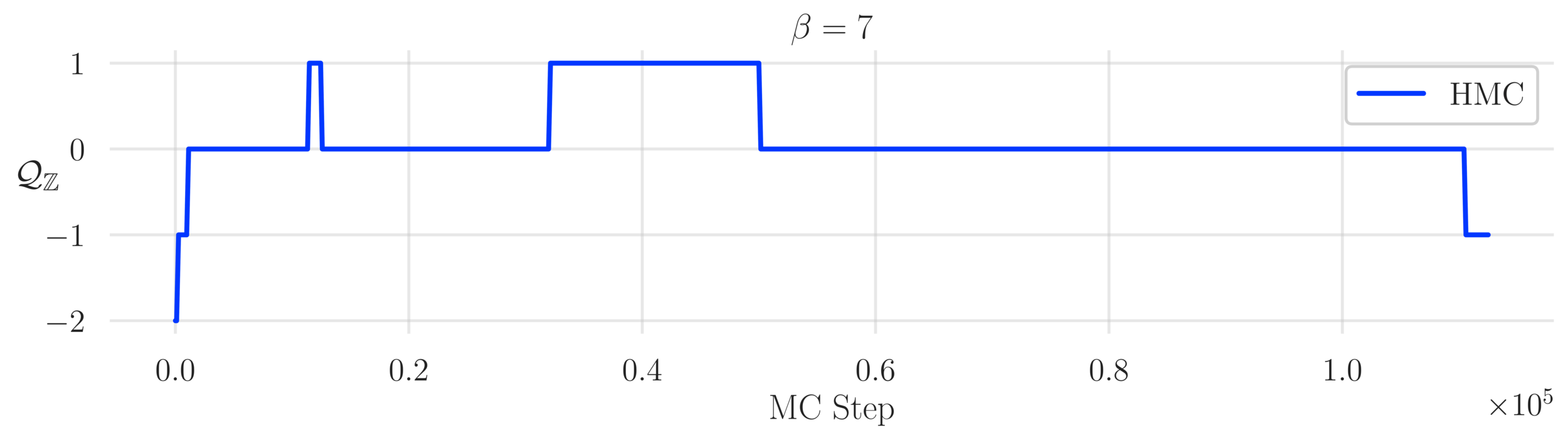

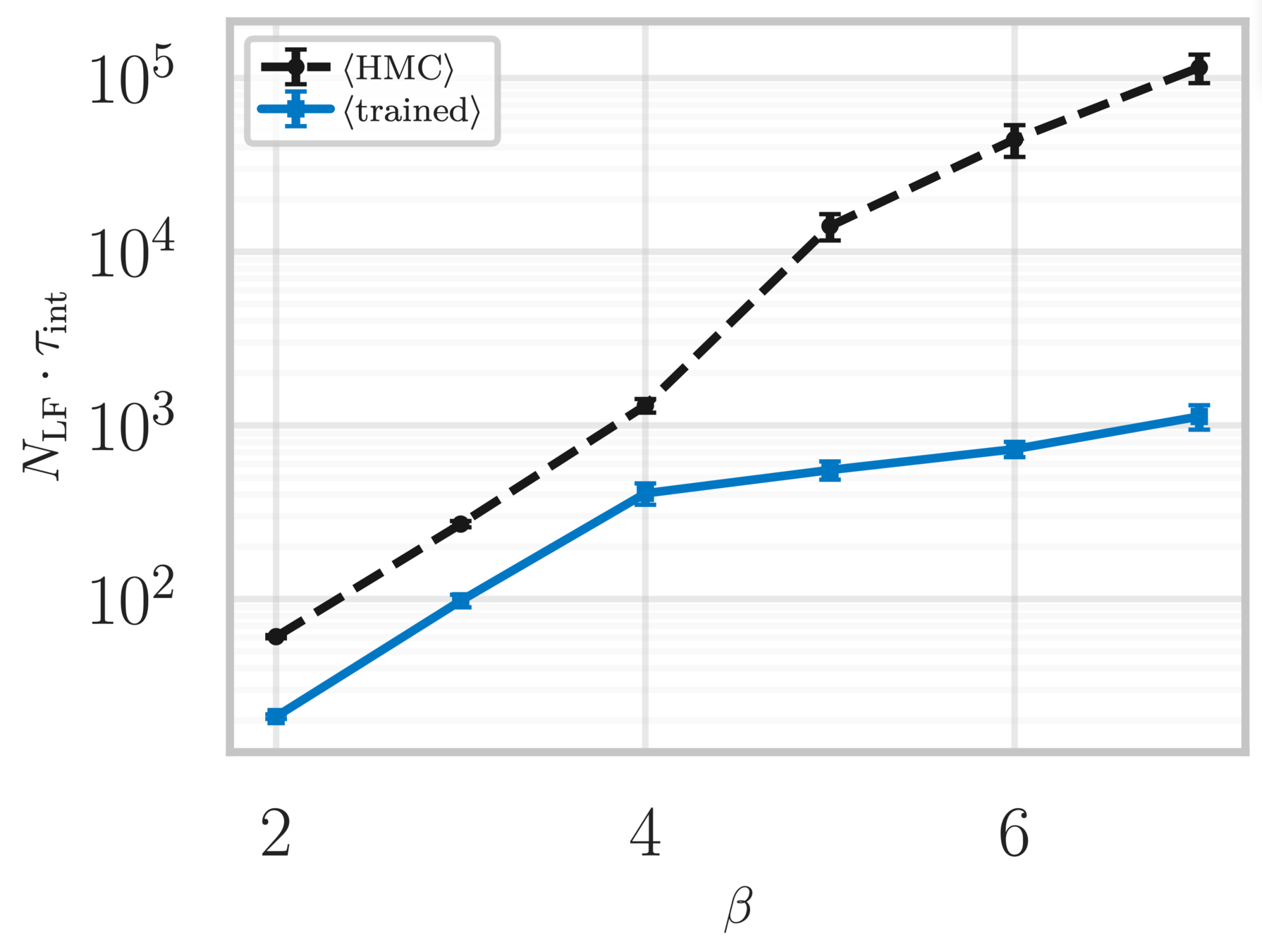

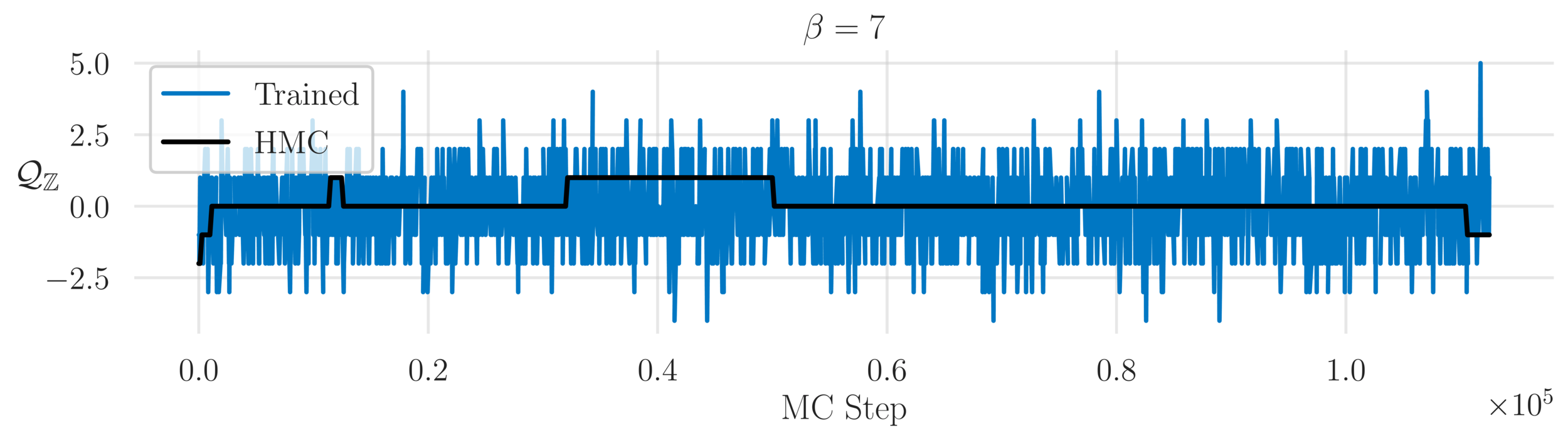

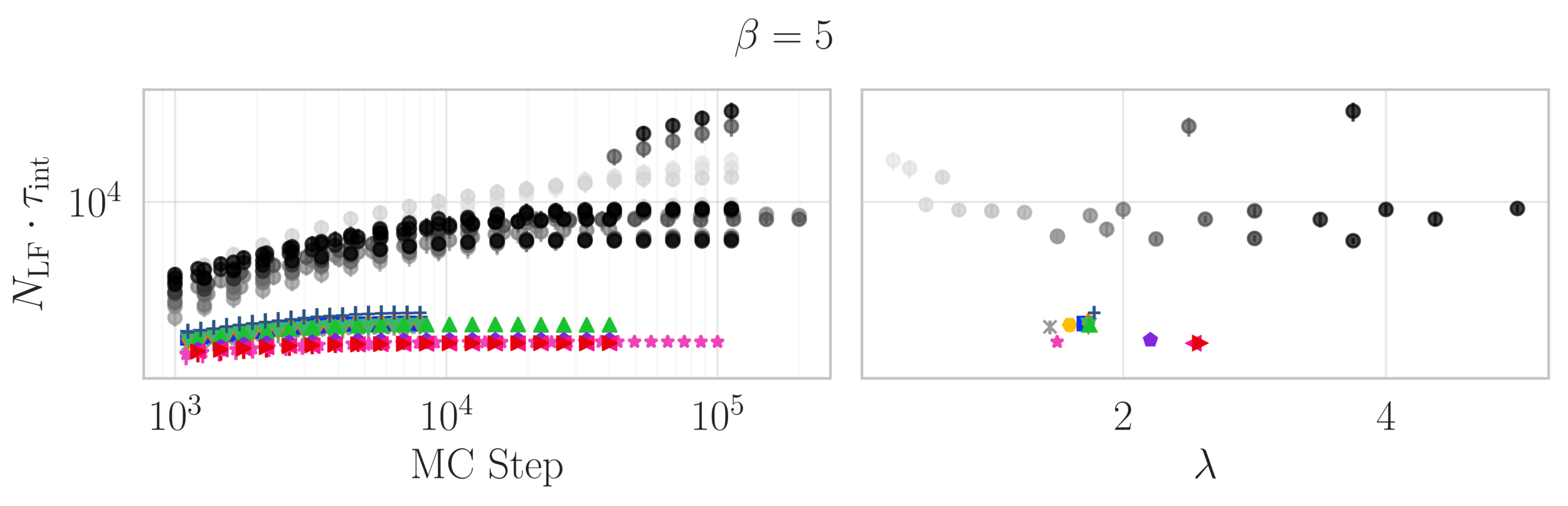

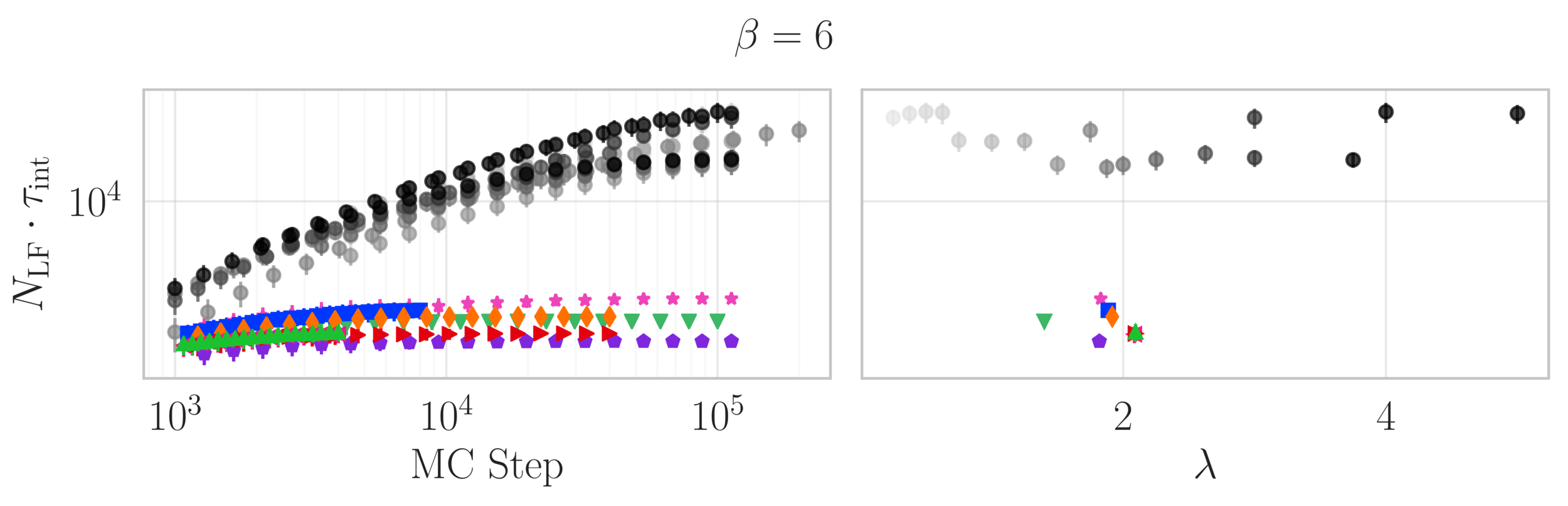

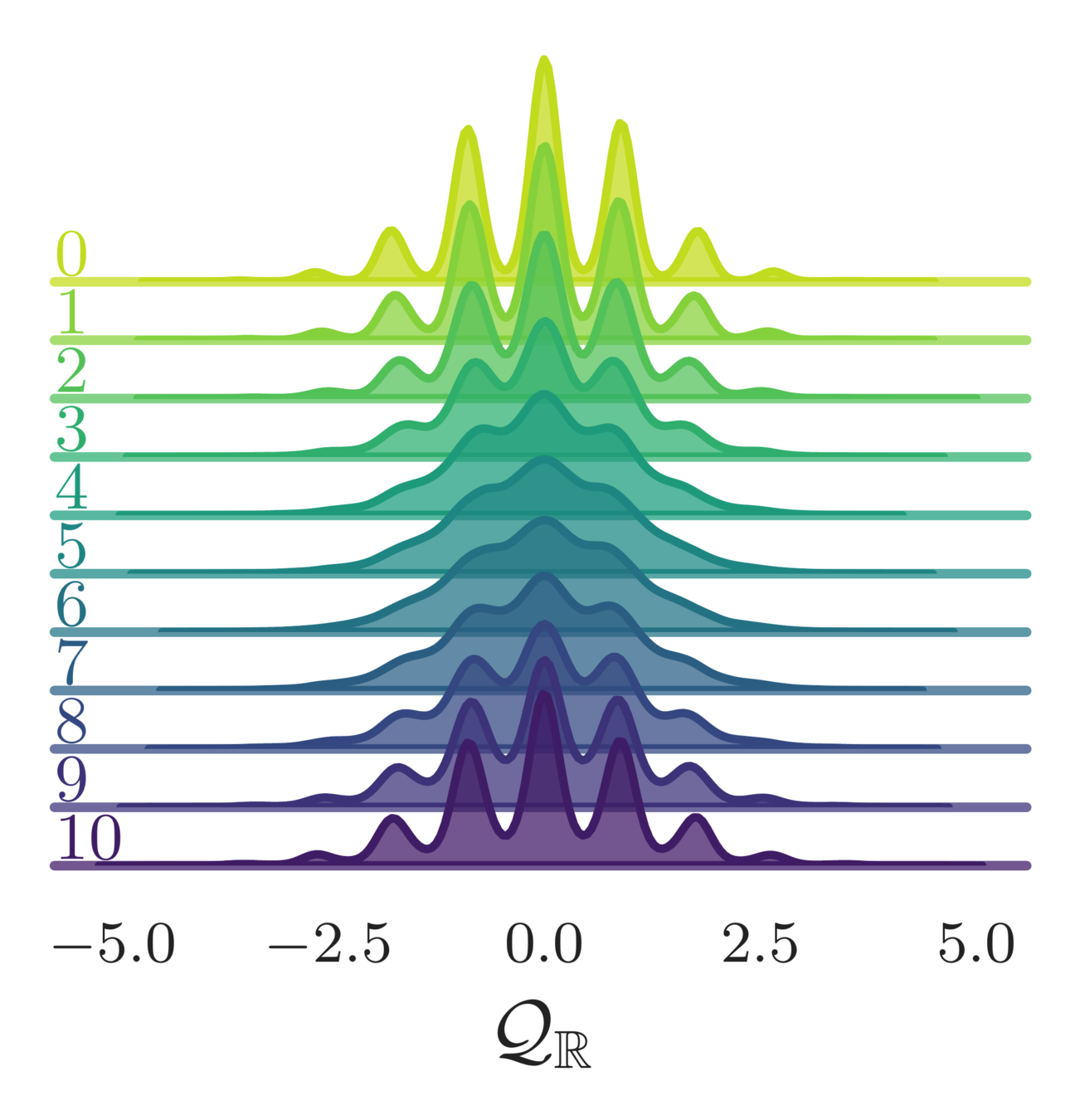

Topological charge history (axis: ~ cost / step; annotation: continuum limit)

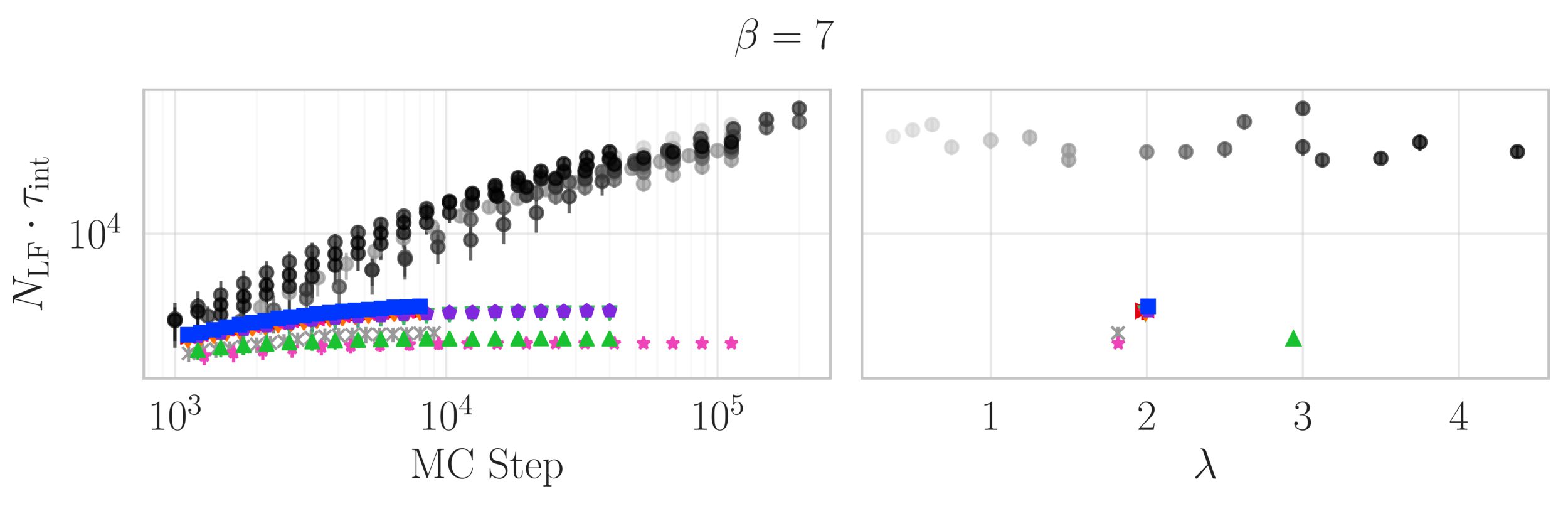

Estimate of the integrated autocorrelation time of \(\mathcal{Q}_{\mathbb{R}}\) (legend: L2HMC, HMC)

Lattice Gauge Theory

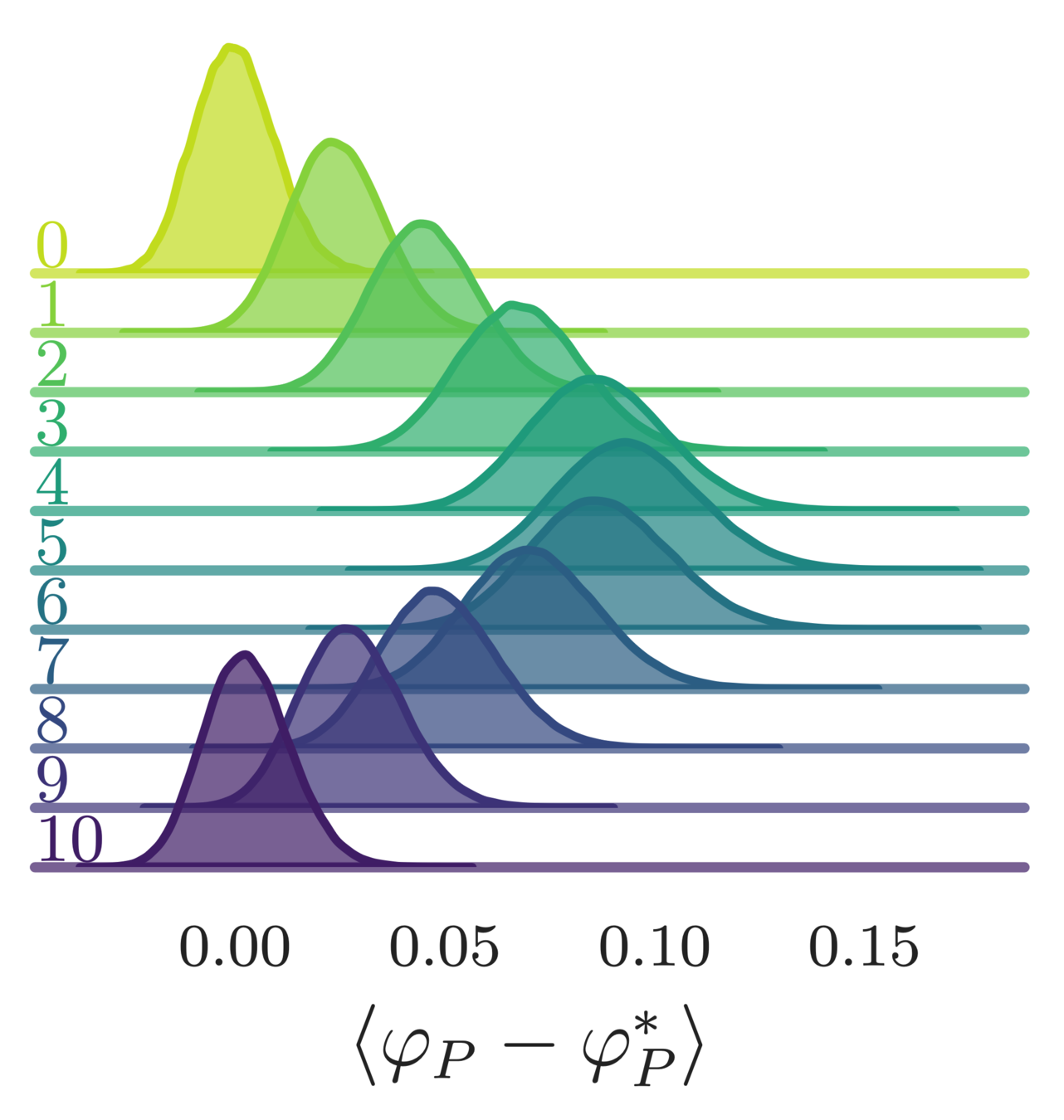

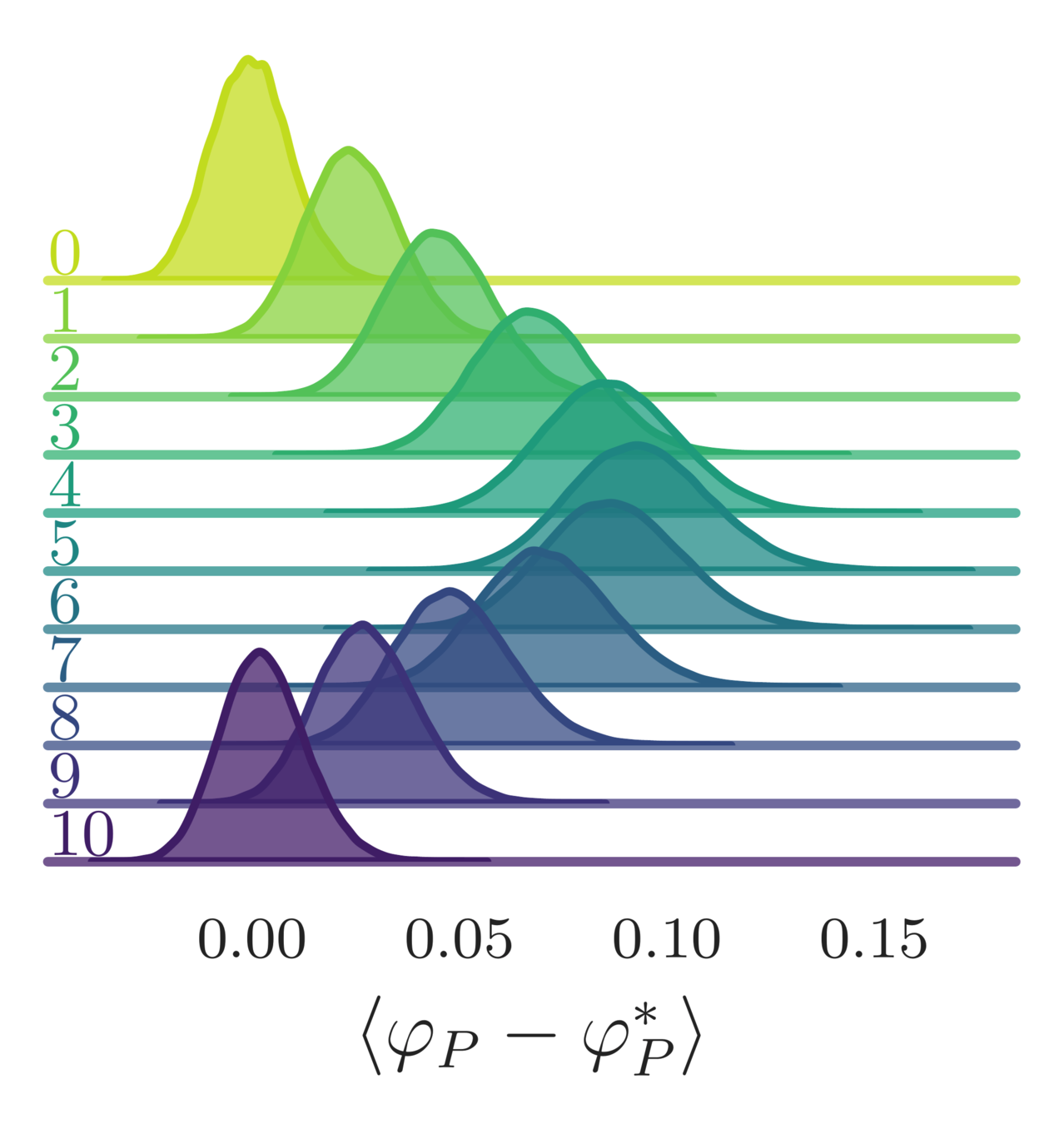

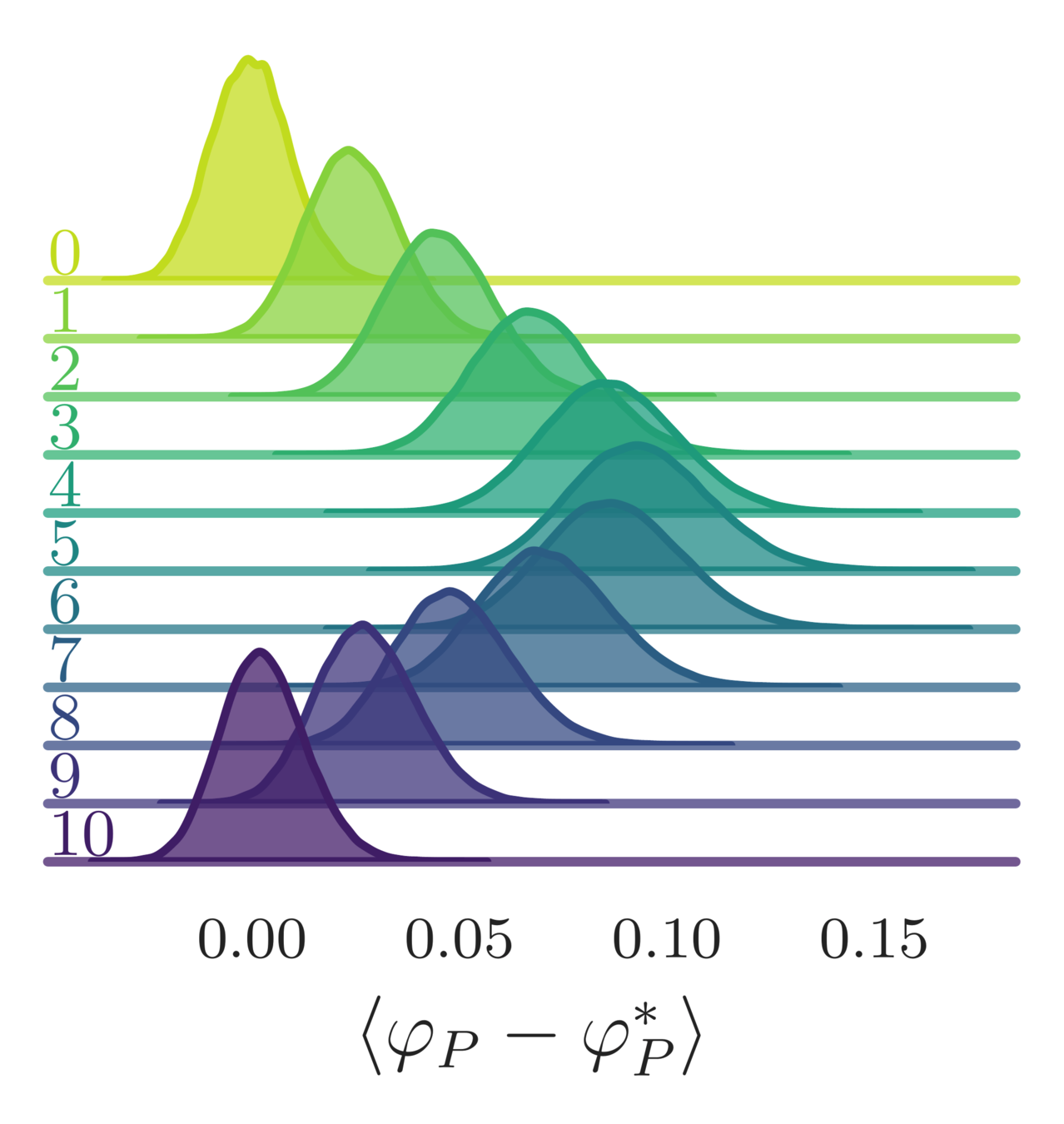

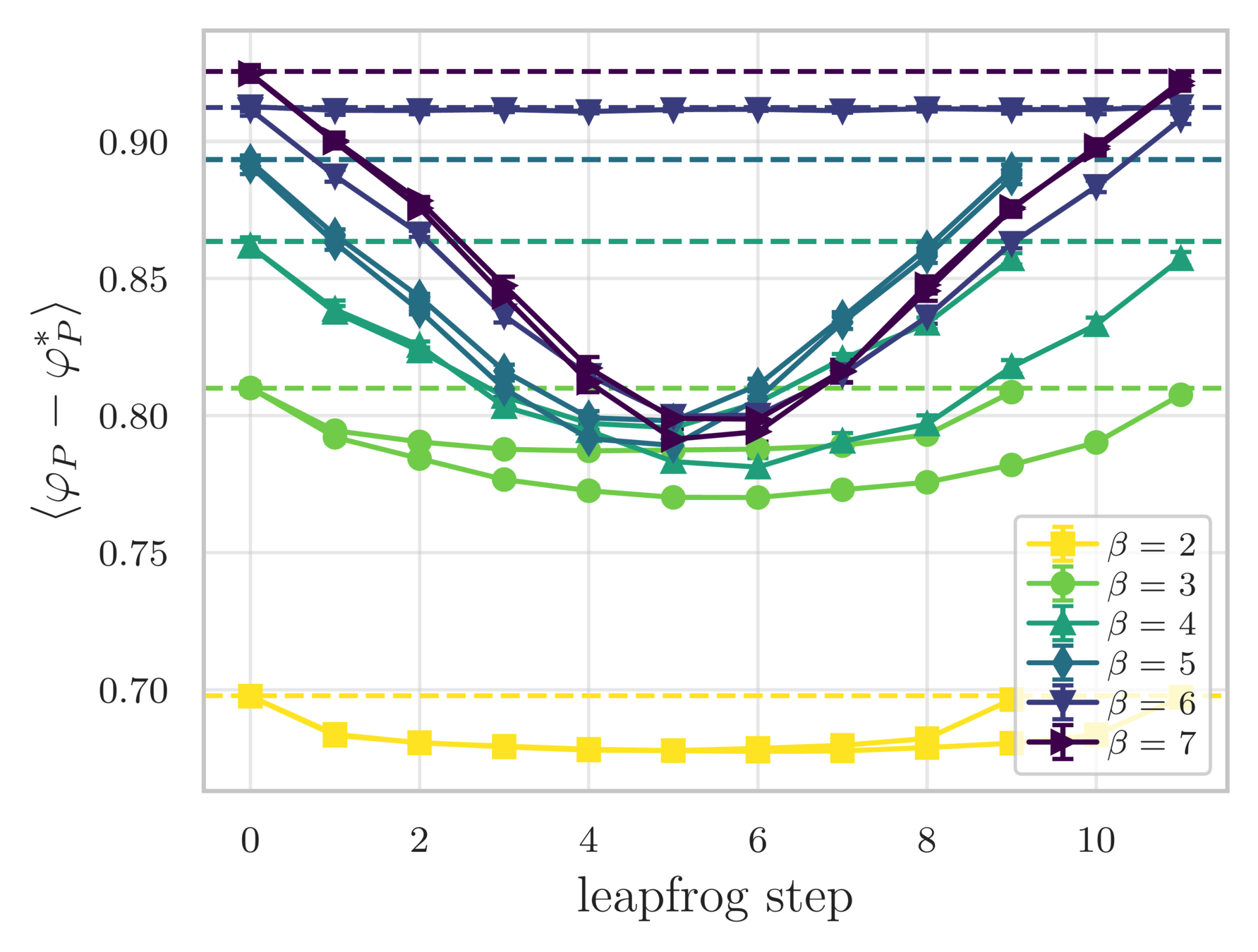

- Error in the average plaquette, \(\langle\varphi_{P}-\varphi^{*}_{P}\rangle\)

- where \(\varphi^{*}_{P} = I_{1}(\beta)/I_{0}(\beta)\) is the exact (\(\infty\)-volume) result, with \(I_{n}\) the modified Bessel functions of the first kind
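A short sketch for evaluating the exact reference value, using the integral representation \(I_{n}(\beta) = \frac{1}{\pi}\int_{0}^{\pi} e^{\beta\cos\theta}\cos(n\theta)\,d\theta\) so that only NumPy is needed. The function names and the number of quadrature points are assumptions made for illustration.

```python
import numpy as np

def bessel_i(n, beta, num=2001):
    """Modified Bessel function I_n(beta) for integer n, via the trapezoidal rule."""
    theta = np.linspace(0.0, np.pi, num)
    f = np.exp(beta * np.cos(theta)) * np.cos(n * theta)
    dtheta = theta[1] - theta[0]
    integral = (np.sum(f) - 0.5 * (f[0] + f[-1])) * dtheta
    return integral / np.pi

def plaquette_exact(beta):
    """Exact infinite-volume average plaquette, I_1(beta) / I_0(beta)."""
    return bessel_i(1, beta) / bessel_i(0, beta)

betas = np.array([1.0, 2.0, 4.0])
exact_values = np.array([plaquette_exact(b) for b in betas])
```

The reference value rises from 0 at \(\beta = 0\) toward 1 at large \(\beta\), so the plotted error should be read against a \(\beta\)-dependent baseline.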

(Plot axis: leapfrog step (MD trajectory))

Scaling test: Training

- \(512\sim 1\times\)

- \(1024 \sim 1.04\times\)

- \(2048 \sim 1.29\times\)

- \(4096 \sim 1.73\times\)

- \(8192 \sim 2.19\times\)

(Plot legend: 512, 1024, 2048, 4096, 8192)

Scaling test: Inference

Thanks!

l2hmc-qcd


L2HMC algorithm applied to simulations in Lattice QCD
