Docker, Compose, K8S and everything else

Satyajit Ghana

Deep Learning @ Inkers

root@inkers:~# whoami

C (99), C++ (98, 11, 14, 17), Python, Java, Dart, Haskell, JavaScript, TypeScript, R, LaTeX, MATLAB

- Web Developer (React, Angular, Django)

- App Developer (Flutter, Android)

- Deep Learning (Vision & NLP w/ PyTorch)

- DevOps & ProdOps (AWS, Docker, K8S)

- Ex Competitive Programmer (CodeChef 4*)

- Chaos Tester

Docker

WHAT DOCKER 🐳 IS

- Containerization Platform

- Platform for developing, shipping, and running applications

- Great for DevOps, everyone gets the same dev environment

- A way to deliver high-performing & scalable applications

- Prevents the "but it works on my machine (╯‵□′)╯︵┻━┻"

- Built entirely using Go

- Much more efficient than Hypervisors or VM Systems

WHAT DOCKER IS NOT

- Virtual Machine / Lightweight VM

- A way to secure/obscure your code

- A Sandbox machine

- A way to backup your data

- Solution to multi-OS testing (containers share the host kernel, they do not boot a separate OS)

- Performance Booster (docker uses your host kernel)

INSTALLING DOCKER

DOCKER DESKTOP

Windows

https://hub.docker.com/editions/community/docker-ce-desktop-windows

Mac

https://hub.docker.com/editions/community/docker-ce-desktop-mac

DOCKER ENGINE

Linux (Ubuntu)

PLAY WITH DOCKER

- Open https://labs.play-with-docker.com/

- Create a new Instance

$ docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

b8dfde127a29: Pull complete

Digest: sha256:0fe98d7debd9049c50b597ef1f85b7c1e8cc81f59c8d623fcb2250e8bec85b38

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

BASIC DOCKER COMMANDS

Commands:

attach Attach local standard input, output, and error streams to a running container

build Build an image from a Dockerfile

commit Create a new image from a container's changes

cp Copy files/folders between a container and the local filesystem

create Create a new container

diff Inspect changes to files or directories on a container's filesystem

events Get real time events from the server

exec Run a command in a running container

export Export a container's filesystem as a tar archive

history Show the history of an image

images List images

import Import the contents from a tarball to create a filesystem image

info Display system-wide information

inspect Return low-level information on Docker objects

kill Kill one or more running containers

load Load an image from a tar archive or STDIN

login Log in to a Docker registry

logout Log out from a Docker registry

logs Fetch the logs of a container

pause Pause all processes within one or more containers

port List port mappings or a specific mapping for the container

ps List containers

pull Pull an image or a repository from a registry

push Push an image or a repository to a registry

rename Rename a container

restart Restart one or more containers

rm Remove one or more containers

rmi Remove one or more images

run Run a command in a new container

save Save one or more images to a tar archive (streamed to STDOUT by default)

search Search the Docker Hub for images

start Start one or more stopped containers

stats Display a live stream of container(s) resource usage statistics

stop Stop one or more running containers

tag Create a tag TARGET_IMAGE that refers to SOURCE_IMAGE

top Display the running processes of a container

unpause Unpause all processes within one or more containers

update Update configuration of one or more containers

version Show the Docker version information

wait Block until one or more containers stop, then print their exit codes

Run 'docker COMMAND --help' for more information on a command.

C++ EXAMPLE

A Simple C++ Program

#include <iostream>

#include "mylib.hpp"

auto main(int argc, char **argv) -> int {
    std::cout << "Hello, world! from Docker" << std::endl;
    std::cout << std::endl;

    double a, b;

    std::cout << "Enter number A: ";
    std::cin >> a;

    std::cout << "Enter number B: ";
    std::cin >> b;

    auto sum = mylib::add_nums(a, b);
    auto prod = mylib::mul_nums(a, b);

    std::cout << "Sum: " << sum << std::endl;
    std::cout << "Product: " << prod << std::endl;

    return 0;
}

Dockerfile

# This is the BASE IMAGE of the build stage
FROM gcc:9.4.0 as builder

# APT packages needed for the build
RUN apt-get update -y && apt-get install -y --no-install-recommends \
    cmake

WORKDIR /opt

# Add the source files
ADD . .

# Build the target executable and run the tests
RUN cmake . && make && ctest --output-on-failure

# This is the runtime's BASE IMAGE
FROM alpine:3.14 as runtime

# Runtime's packages
RUN apk upgrade --no-cache && \
    apk add --no-cache libc6-compat libgcc libstdc++

WORKDIR /opt/hello-world

# Copy the executable from the builder stage to the runtime stage
COPY --from=builder /opt/helloworld ./

# This command is run when we do docker run
CMD ["./helloworld"]
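To make the two-stage structure explicit, here is a minimal Python sketch. It is not part of the talk's repository: `parse_stages` is a hypothetical helper that only understands `FROM ... as ...` and `COPY --from=...` lines, which is all this Dockerfile needs, and the embedded text is a shortened copy of the Dockerfile.

```python
import re

# Shortened copy of the Dockerfile from the slides
DOCKERFILE = """\
FROM gcc:9.4.0 as builder
RUN cmake . && make && ctest --output-on-failure
FROM alpine:3.14 as runtime
COPY --from=builder /opt/helloworld ./
CMD ["./helloworld"]
"""


def parse_stages(text):
    """Return one dict per stage: base image, stage name, and stages it copies from."""
    stages = []
    for line in text.splitlines():
        line = line.strip()
        m = re.match(r"FROM\s+(\S+)(?:\s+as\s+(\S+))?$", line, re.IGNORECASE)
        if m:
            # Every FROM starts a new stage
            stages.append({"base": m.group(1), "name": m.group(2), "copies_from": []})
            continue
        m = re.match(r"COPY\s+--from=(\S+)\s", line, re.IGNORECASE)
        if m and stages:
            # COPY --from pulls files out of an earlier stage
            stages[-1]["copies_from"].append(m.group(1))
    return stages


stages = parse_stages(DOCKERFILE)
final = stages[-1]
print(f"final image is based on {final['base']} and copies from {final['copies_from']}")
```

Only the last stage becomes the image you run, so the gcc toolchain and the sources stay behind in the builder stage and the runtime image carries just the executable and its shared libraries.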

TEST-DRIVEN-DEVELOPMENT

with GTest

#include <gtest/gtest.h>

// ideally this will be a shared library, but for now it's easier to just include the header
#include "../mylib.hpp"

TEST(AdditionTest, CanAddIntegers) {
    auto sum = mylib::add_nums(3, 4);
    EXPECT_EQ(7, sum);
}

TEST(MultiplicationTest, CanMultiplyIntegers) {
    auto product = mylib::mul_nums(3, 4);
    EXPECT_EQ(12, product);
}

TEST(ThrowsExceptionTest, CanThrowException) {
    EXPECT_THROW(mylib::throw_exception(), std::runtime_error);
}

TEST-DRIVEN-DEVELOPMENT

$ docker build -t hello-cpp .

#13 15.98 [100%] Built target gmock_main

#13 15.99 Test project /opt

#13 16.00 Start 1: AdditionTest.CanAddIntegers

#13 16.00 1/3 Test #1: AdditionTest.CanAddIntegers .............. Passed 0.00 sec

#13 16.00 Start 2: MultiplicationTest.CanMultiplyIntegers

#13 16.00 2/3 Test #2: MultiplicationTest.CanMultiplyIntegers ...***Failed 0.00 sec

#13 16.00 Running main() from /opt/_deps/googletest-src/googletest/src/gtest_main.cc

#13 16.00 Note: Google Test filter = MultiplicationTest.CanMultiplyIntegers

#13 16.00 [==========] Running 1 test from 1 test suite.

#13 16.00 [----------] Global test environment set-up.

#13 16.00 [----------] 1 test from MultiplicationTest

#13 16.00 [ RUN ] MultiplicationTest.CanMultiplyIntegers

#13 16.00 /opt/src/tests/test_basics.cc:13: Failure

#13 16.00 Expected equality of these values:

#13 16.00 12

#13 16.00 product

#13 16.00 Which is: 0

#13 16.00 [ FAILED ] MultiplicationTest.CanMultiplyIntegers (0 ms)

#13 16.00 [----------] 1 test from MultiplicationTest (0 ms total)

#13 16.00

#13 16.00 [----------] Global test environment tear-down

#13 16.00 [==========] 1 test from 1 test suite ran. (0 ms total)

#13 16.00 [ PASSED ] 0 tests.

#13 16.00 [ FAILED ] 1 test, listed below:

#13 16.00 [ FAILED ] MultiplicationTest.CanMultiplyIntegers

#13 16.00

#13 16.00 1 FAILED TEST

#13 16.00

#13 16.00 Start 3: ThrowsExceptionTest.CanThrowException

#13 16.01 3/3 Test #3: ThrowsExceptionTest.CanThrowException .... Passed 0.00 sec

#13 16.01

#13 16.01 67% tests passed, 1 tests failed out of 3

#13 16.01

#13 16.01 Total Test time (real) = 0.02 sec

#13 16.01

#13 16.01 The following tests FAILED:

#13 16.01 2 - MultiplicationTest.CanMultiplyIntegers (Failed)

#13 16.01 Errors while running CTest

------

executor failed running [/bin/sh -c cmake . && make && ctest --output-on-failure]: exit code: 8

Inkers' Fridays are Test-Driven Fridays (@_@;)
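The build above stops because the commands in the RUN line are chained with `&&`: the shell returns the exit code of the first command that fails (8 from ctest in this log), and Docker aborts the build on a non-zero exit code. A minimal Python sketch of that behaviour follows. The child processes are stand-ins, not the real tools, and the trailing "package" step is made up to show that nothing after the failure runs.

```python
import subprocess
import sys


def run_chain(commands):
    """Run commands in order like `a && b && c`; stop at the first non-zero exit code."""
    ran = []
    for name, argv in commands:
        ran.append(name)
        code = subprocess.run(argv).returncode
        if code != 0:
            return code, ran
    return 0, ran


def fake(exit_code):
    # Stand-in for a real tool: a Python child process that exits with the given code
    return [sys.executable, "-c", f"import sys; sys.exit({exit_code})"]


# "ctest" fails with 8 as in the log; "package" is a hypothetical later step
code, ran = run_chain([
    ("cmake", fake(0)),
    ("make", fake(0)),
    ("ctest", fake(8)),
    ("package", fake(0)),
])
print(code, ran)
```

Because the tests run inside the image build, a failing test means no image is produced at all.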

OUR EXAMPLE LIB

#pragma once
#include <stdexcept>
namespace mylib {
template <typename T> T add_nums(T a, T b) { return a + b; }
template <typename T> T mul_nums(T a, T b) { return a * b; }
// inline: defined in a header, so it may be included from several translation units
inline void throw_exception() { throw std::runtime_error("I will throw!"); }
} // namespace mylib


What do you think will happen if we call add_nums(2, 'A')?

OUR EXAMPLE LIB

#15 9.076 [ 33%] Built target gtest_main

#15 9.089 [ 41%] Building CXX object CMakeFiles/test_basics.dir/src/tests/test_basics.cc.o

#15 9.897 /opt/src/tests/test_basics.cc: In member function 'virtual void AdditionTest_CanAddIntegers_Test::TestBody()':

#15 9.897 /opt/src/tests/test_basics.cc:8:8: error: conflicting declaration 'auto sum'

#15 9.897 8 | auto sum = mylib::add_nums(2, 'A');

#15 9.897 | ^~~

#15 9.897 /opt/src/tests/test_basics.cc:7:8: note: previous declaration as 'int sum'

#15 9.897 7 | auto sum = mylib::add_nums(3, 4);

#15 9.897 | ^~~

#15 9.897 /opt/src/tests/test_basics.cc:8:36: error: no matching function for call to 'add_nums(int, char)'

#15 9.897 8 | auto sum = mylib::add_nums(2, 'A');

#15 9.897 | ^

#15 9.897 In file included from /opt/src/tests/test_basics.cc:4:

#15 9.897 /opt/src/tests/../mylib.hpp:4:25: note: candidate: 'template<class T> T mylib::add_nums(T, T)'

#15 9.897 4 | template <typename T> T add_nums(T a, T b) { return a + b; }

#15 9.897 | ^~~~~~~~

#15 9.897 /opt/src/tests/../mylib.hpp:4:25: note: template argument deduction/substitution failed:

#15 9.897 /opt/src/tests/test_basics.cc:8:36: note: deduced conflicting types for parameter 'T' ('int' and 'char')

#15 9.897 8 | auto sum = mylib::add_nums(2, 'A');

#15 9.897 | ^

#15 10.19 make[2]: *** [CMakeFiles/test_basics.dir/build.make:76: CMakeFiles/test_basics.dir/src/tests/test_basics.cc.o] Error 1

#15 10.19 make[1]: *** [CMakeFiles/Makefile2:139: CMakeFiles/test_basics.dir/all] Error 2

#15 10.19 make: *** [Makefile:146: all] Error 2

------

executor failed running [/bin/sh -c cmake . && make && ctest --output-on-failure]: exit code: 2

DOCKER BUILD & RUN

$ docker build -t hello-cpp . # no errors on build, we can now run !

$ docker run -it --rm hello-cpp

Hello, world! from Docker

Enter number A: 43

Enter number B: 96

Sum: 139

Product: 4128

PYTHON 🐍 EXAMPLE

BASIC FASTAPI EXAMPLE

import sys

import requests
from fastapi import FastAPI
from fastapi.responses import Response

version = f"{sys.version_info.major}.{sys.version_info.minor}"

app = FastAPI()


@app.get("/")
async def read_root():
    message = f"Hello world! From FastAPI running on Uvicorn with Gunicorn. Using Python {version}"
    return {"message": message}


@app.get("/cat")
async def read_cat():
    try:
        cat_api = "http://thecatapi.com/api/images/get?format=src"
        # a timeout keeps the request from hanging forever if the API is unreachable
        response = requests.get(cat_api, timeout=10)
        return Response(content=response.content, media_type=response.headers["content-type"])
    except requests.RequestException:
        # only network/HTTP errors are handled here, other bugs should still surface
        return {"message": "Error in fetching cat, Network request failed"}

Dockerfile

FROM tiangolo/uvicorn-gunicorn-fastapi:python3.8

ADD requirements.txt .

RUN pip install -r requirements.txt

EXPOSE 8000

COPY ./app /app

CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "8000"]

DOCKER BUILD & RUN

docker build -t hello-fastapi .

docker run -it --rm -p 8000:8000 hello-fastapi

INFO: Started server process [1]

INFO: Waiting for application startup.

INFO: Application startup complete.

INFO: Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)

http://localhost:8000/cat

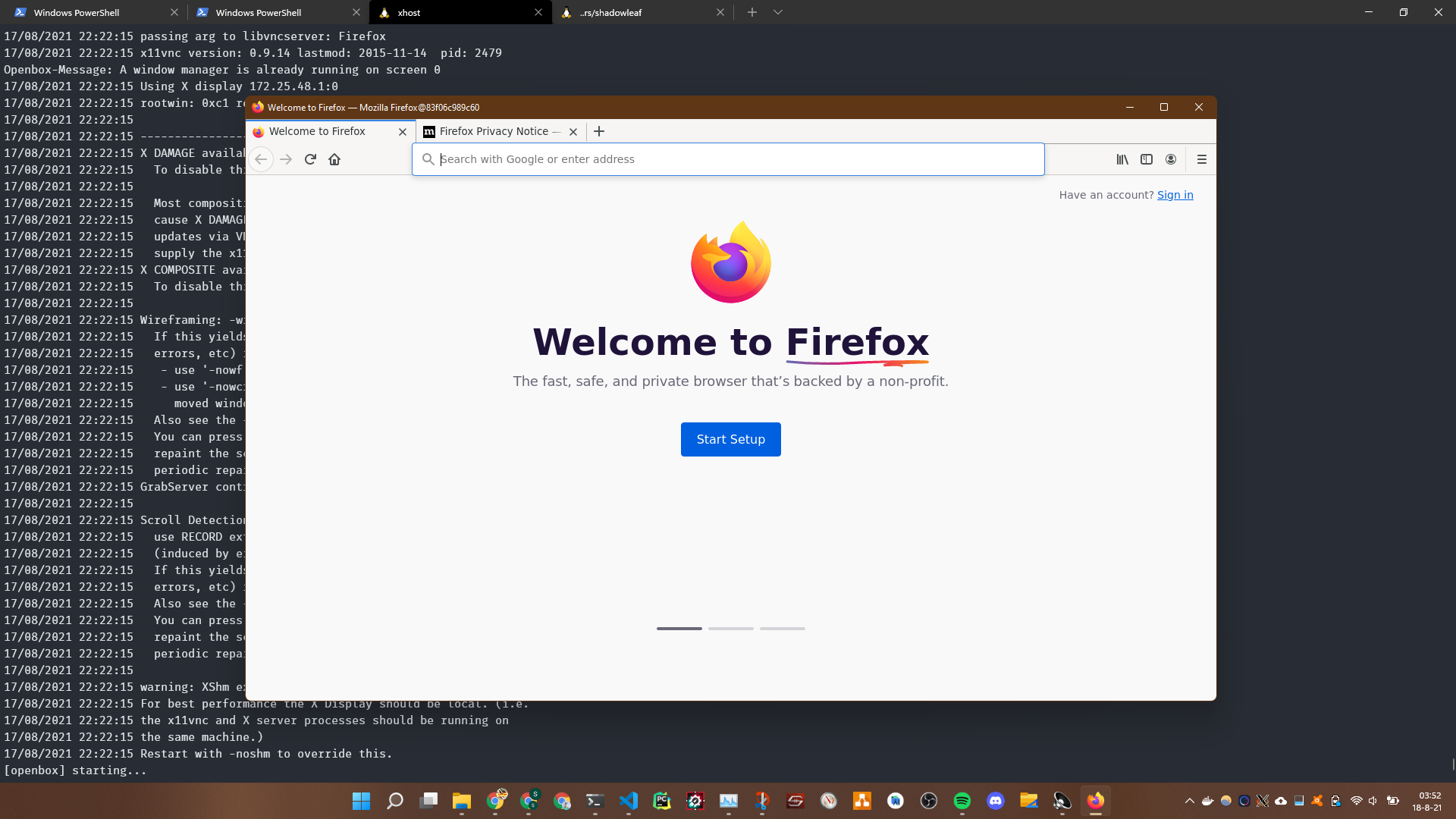

DISPLAY 🖥️

in container

Just pass the DISPLAY ENV Variable

xhost +

docker run -ti --rm \
    -e DISPLAY=$DISPLAY \
    -v /tmp/.X11-unix:/tmp/.X11-unix \
    -v /docker/appdata/firefox:/config:rw \
    --shm-size 2g \
    jlesage/firefox:latest

Common way to Debug

- Make sure xeyes works inside the running container

- Is xhost + set?

- Make sure the DISPLAY variable is set correctly

sudo apt install x11-apps

xeyes
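The checklist above can also be scripted. The sketch below is an illustration, not something from the talk: `x11_socket_path` and `check_display` are hypothetical helpers that rely on the usual convention that a local display `:N` is served through the Unix socket `/tmp/.X11-unix/XN`.

```python
import os


def x11_socket_path(display):
    """Map a local DISPLAY value such as ':0' or ':1.0' to its Unix socket path.

    Returns None when DISPLAY is empty or names a remote host, e.g. 'host:0'.
    """
    if not display or ":" not in display:
        return None
    host, _, rest = display.rpartition(":")
    if host not in ("", "unix"):
        return None
    number = rest.split(".")[0]  # drop the optional screen suffix
    if not number.isdigit():
        return None
    return f"/tmp/.X11-unix/X{number}"


def check_display(env=os.environ):
    """Return a one-line diagnosis of the X11 setup seen by this process."""
    display = env.get("DISPLAY", "")
    path = x11_socket_path(display)
    if path is None:
        return f"DISPLAY={display!r} does not point at a local X server"
    if not os.path.exists(path):
        return f"{path} is missing: was /tmp/.X11-unix mounted into the container?"
    return f"DISPLAY={display} looks usable ({path} exists)"


print(check_display())
```

Run it inside the container: a missing socket usually points at the `-v /tmp/.X11-unix:/tmp/.X11-unix` mount, an empty DISPLAY at the `-e DISPLAY=$DISPLAY` flag.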

GPU 🔥 SUPPORT

add: --gpus=all

That's It :)

docker run --rm --gpus all nvidia/cuda:11.0-base nvidia-smi

Wed Aug 18 03:58:47 2021

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 470.50 Driver Version: 471.21 CUDA Version: 11.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... Off | 00000000:01:00.0 Off | N/A |

| N/A 65C P8 N/A / N/A | 75MiB / 4096MiB | ERR! Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

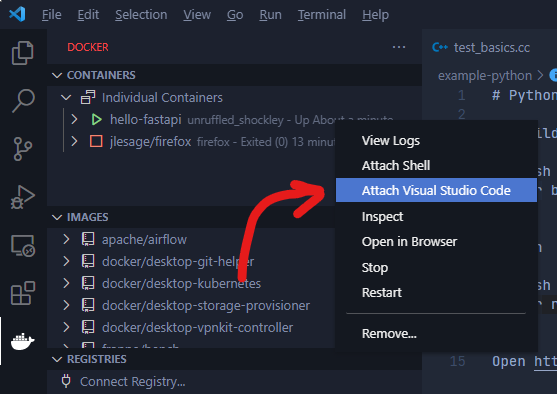

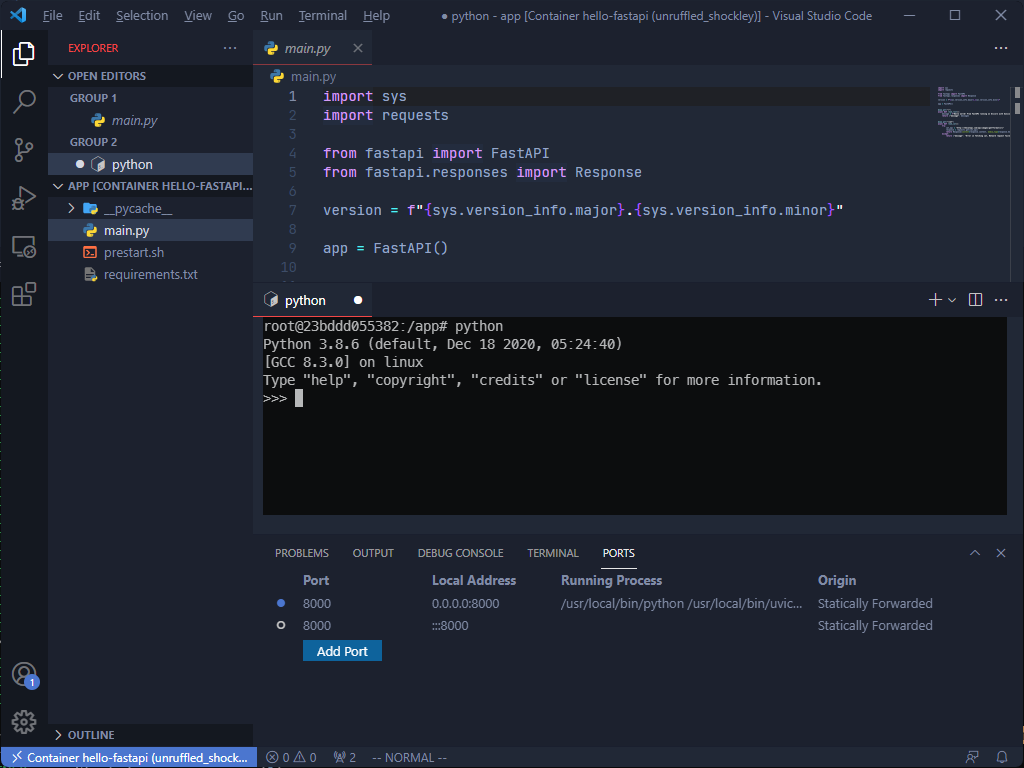

+-----------------------------------------------------------------------------+Using with VSCode 💻

BUT! Changes to the files won't be saved!

- Solved by mounting VOLUMES in docker run!

- --volume /home/ubuntu/app:/opt/src

- Now changes in /opt/src inside the container will show up in /home/ubuntu/app on the host as well!

- Ref: https://docs.docker.com/storage/volumes/
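One detail worth spelling out: with `--volume`, a source that is not an absolute path is treated as the name of a Docker-managed volume rather than a host directory. A minimal sketch of building the arguments safely is shown below. `bind_mount_args` is a hypothetical helper, and `hello-cpp` is simply the image tag used earlier in these slides.

```python
from pathlib import Path


def bind_mount_args(image, host_dir, container_dir):
    """Build a `docker run` argument list that bind-mounts host_dir at container_dir."""
    # Resolve to an absolute path so Docker does not read it as a named volume
    host_abs = Path(host_dir).expanduser().resolve()
    return [
        "docker", "run", "-it", "--rm",
        "--volume", f"{host_abs}:{container_dir}",
        image,
    ]


args = bind_mount_args("hello-cpp", "/home/ubuntu/app", "/opt/src")
print(" ".join(args))
```

The list can be passed to `subprocess.run(args)` on a machine that has Docker installed.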

PRO TIP

docker/build

#!/bin/bash
docker build -t dshnet -f docker/Dockerfile .

docker/run

#!/bin/bash
set -e

# Resolve this script's directory (following symlinks) and the repository root above it
SOURCE="${BASH_SOURCE[0]}"
while [ -h "$SOURCE" ] ; do SOURCE="$(readlink "$SOURCE")"; done
DOCKER_DIR="$( cd -P "$( dirname "$SOURCE" )" && pwd )"
REPO_ROOT="$( cd -P "$( dirname "$DOCKER_DIR" )" && pwd )"

# Docker 19.03+ supports --gpus natively; older versions need the nvidia runtime
DOCKER_VERSION=$(docker version -f "{{.Server.Version}}")
DOCKER_MAJOR=$(echo "$DOCKER_VERSION"| cut -d'.' -f 1)

if [ "${DOCKER_MAJOR}" -ge 19 ]; then
    runtime="--gpus=all"
else
    runtime="--runtime=nvidia"
fi

# no need to do `xhost +` anymore
XSOCK=/tmp/.X11-unix
XAUTH=/tmp/.docker.xauth
touch "$XAUTH"
xauth nlist "$DISPLAY" | sed -e 's/^..../ffff/' | xauth -f "$XAUTH" nmerge -

# specify the image, very important
IMAGE="raster-vision-pytorch"

RUNTIME=$runtime

# if you are running notebooks and want to sync them to your hdd, specify the FULL location here
NOTEBOOKS_DIR=/mnt/inkers1TB/INKERS/Satyajit/DSHNet/notebooks

docker run ${RUNTIME} --privileged -it --rm \
    --volume=${REPO_ROOT}/:/opt/src/ \
    --volume=${NOTEBOOKS_DIR}:/opt/notebooks/ \
    --volume=$XSOCK:$XSOCK:rw \
    --volume=$XAUTH:$XAUTH:rw \
    --volume=/dev:/dev:rw \
    --device /dev/dri \
    --net=host \
    --ipc=host \
    --shm-size=1gb \
    --env="XAUTHORITY=${XAUTH}" \
    --env="DISPLAY=${DISPLAY}" \
    ${IMAGE}

PRO TIP

- Modify the image names and directory paths in docker/run and docker/build

- Now just run docker/build to build the image and docker/run to run it

- Mounts the repository root into the container at /opt/src

- Takes care of DISPLAY, so there is no need for xhost +

- Passes physically connected devices into the container, including /dev/video* devices

- Network and IPC namespaces are shared with the host (--net=host, --ipc=host), so no issues there

- shm-size is set to 1gb
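One more thing docker/run does is pick the GPU flag from the Docker server version. The same decision is sketched in Python below, for readers who drive Docker from a script. `gpu_flag` is a hypothetical helper and the version strings are sample values, not output captured from the talk.

```python
def gpu_flag(server_version):
    """Pick the GPU flag the way docker/run does: compare the major version with 19."""
    major = int(server_version.split(".")[0])
    return "--gpus=all" if major >= 19 else "--runtime=nvidia"


# Sample version strings for illustration
for version in ("18.09.9", "19.03.12", "20.10.8"):
    print(version, "->", gpu_flag(version))
```

In the shell script the version string comes from `docker version -f "{{.Server.Version}}"`.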

Additional Reading

- A brief introduction to Docker with examples: https://docker-curriculum.com/

- Multi Arch Builds: https://blog.tensorclan.tech/multi-arch-docker-with-buildx

- Docker Best Practices (Official): https://docs.docker.com/develop/develop-images/dockerfile_best-practices/

- VSCode DevContainer (Developing inside a Container): https://code.visualstudio.com/docs/remote/containers

Docker

COMPOSE

What docker-compose 🐙 is

AND WHAT IT CAN DO

- Run multi-container applications and connect them together

- Limit Network, CPU, Memory, IPC, SHM and devices resources per container

- Run each container on a specific platform (arm64, amd64)

- Replicate and Scale Containers

- Attach Volumes and Persistent Storage

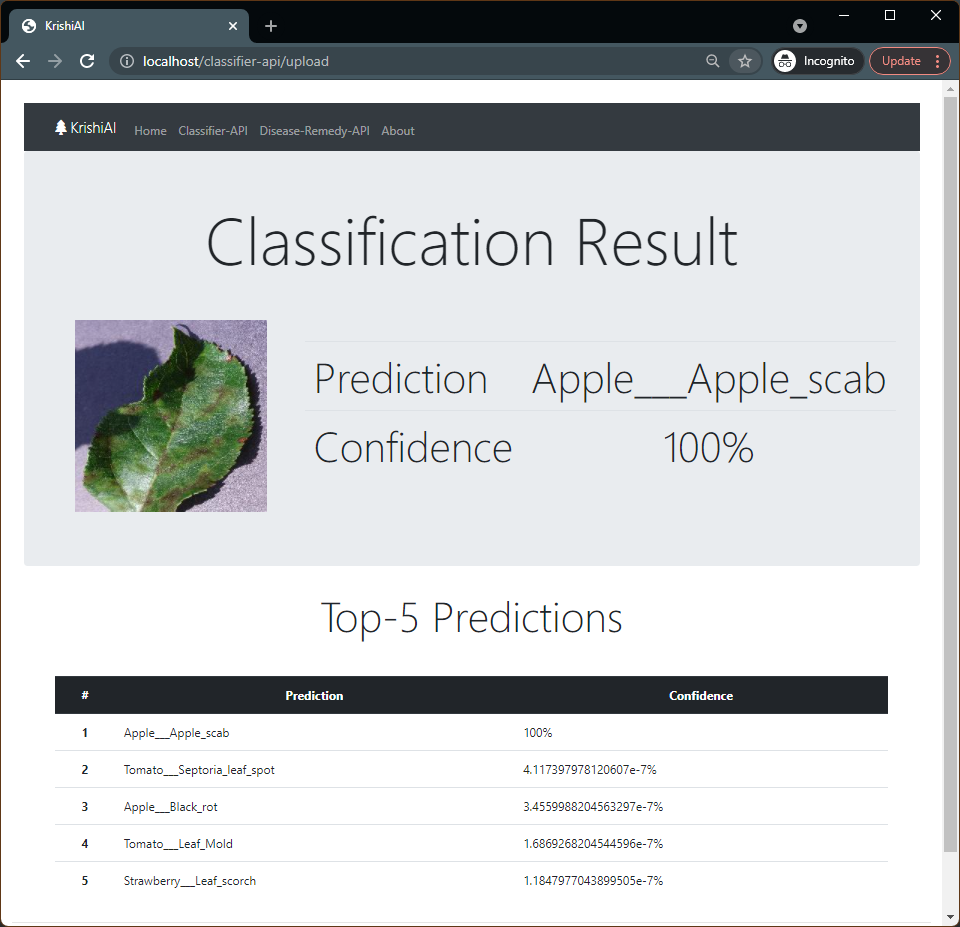

Example docker-compose.yaml

version: "3"

services:
  krishiai-web:
    image: satyajitghana/krishiai_backend:latest
    container_name: web
    environment:
      - SERVING_HOSTNAME=tfserving
    restart: always
    ports:
      - "80:3000"
    networks:
      - app-network

  krishiai-tfserving:
    image: satyajitghana/krishiai_model:latest
    container_name: tfserving
    restart: always
    environment:
      - MODEL_NAME=resnet50v2
    ports:
      - "8500:8500"
      - "8501:8501"
    networks:
      - app-network

networks:
  app-network:
    driver: bridge


version declares which Compose file format this file is written against, very important

more on it here: https://docs.docker.com/compose/compose-file/


app-network is the common bridge network shared by the containers

Instead of IP addresses we simply use the service or container name (here SERVING_HOSTNAME=tfserving)
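As an illustration of name-based addressing, here is how a client on app-network could build the model server's URL. This is a sketch, not the actual backend (which is a Node service): `predict_url` is a hypothetical helper, and it assumes port 8501 is TensorFlow Serving's REST port and that the standard `/v1/models/<name>:predict` REST path is used.

```python
import os


def predict_url(model="resnet50v2", env=os.environ):
    """Build the TensorFlow Serving REST predict URL from SERVING_HOSTNAME."""
    # The hostname is resolved by Docker's DNS on the shared network, no IP needed
    host = env.get("SERVING_HOSTNAME", "tfserving")
    port = 8501  # assumed REST port, one of the two ports published in the compose file
    return f"http://{host}:{port}/v1/models/{model}:predict"


print(predict_url(env={"SERVING_HOSTNAME": "tfserving"}))
```

If the model container is renamed, only the SERVING_HOSTNAME value in the compose file has to change.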

RUN

docker-compose up

docker-compose up -d # to run in detached mode

docker-compose down # to shut down all the compose services

TRY IT OUT YOURSELF !

git clone https://github.com/satyajitghana/docker-compose-k8s-and-everything-else

cd docker-compose-k8s-and-everything-else/krishiai_docker

docker-compose up

This spins up 2 services: a TensorFlow model server exposed over REST and gRPC, and a Node service that sends requests with images to the model for inference. The two talk over their bridged network.

DOCKER-compose specs:

KUBERNETES

(K8S)

Defining K8S

Mike Kail, CTO and cofounder at CYBRIC: “Let’s say an application environment is your old-school lunchbox. The contents of the lunchbox were all assembled well before putting them into the lunchbox [but] there was no isolation between any of those contents. The Kubernetes system provides a lunchbox that allows for just-in-time expansion of the contents (scaling) and full isolation between every unique item in the lunchbox and the ability to remove any item without affecting any of the other contents (immutability).”

Gordon Haff, technology evangelist, Red Hat: “Whether we’re talking about a single computer or a datacenter full of them, if every software component just selfishly did its own thing there would be chaos. Linux and other operating systems provide the foundation for corralling all this activity. Container orchestration builds on Linux to provide an additional level of coordination that combines individual containers into a cohesive whole.”

What K8S ☸️ is

AND WHAT IT CAN DO

- Automates deployment, scaling, and management of containerized applications

- Clusters together groups of hosts running Linux containers, and helps you manage those clusters easily and efficiently

Example Manifest File

apiVersion: v1
items:
  - apiVersion: v1
    kind: Service
    metadata:
      annotations:
        kompose.cmd: kompose convert -f docker-compose.yaml -o kubemanifests.yaml
        kompose.version: 1.19.0 (f63a961c)
      creationTimestamp: null
      labels:
        io.kompose.service: krishiai-tfserving
      name: krishiai-tfserving
    spec:
      type: LoadBalancer
      ports:
        - name: "8500"
          port: 8500
          targetPort: 8500
        - name: "8501"
          port: 8501
          targetPort: 8501
      selector:
        io.kompose.service: krishiai-tfserving
    status:
      loadBalancer: {}
  - apiVersion: v1
    kind: Service
    metadata:
      annotations:
        kompose.cmd: kompose convert -f docker-compose.yaml -o kubemanifests.yaml
        kompose.version: 1.19.0 (f63a961c)
      creationTimestamp: null
      labels:
        io.kompose.service: krishiai-web
      name: krishiai-web
    spec:
      type: LoadBalancer
      ports:
        - name: "80"
          port: 80
          targetPort: 3000
      selector:
        io.kompose.service: krishiai-web
    status:
      loadBalancer: {}
  - apiVersion: apps/v1
    kind: Deployment
    metadata:
      annotations:
        kompose.cmd: kompose convert -f docker-compose.yaml -o kubemanifests.yaml
        kompose.version: 1.19.0 (f63a961c)
      creationTimestamp: null
      labels:
        io.kompose.service: krishiai-tfserving
      name: krishiai-tfserving
    spec:
      replicas: 5
      strategy: {}
      selector:
        matchLabels:
          io.kompose.service: krishiai-tfserving
      template:
        metadata:
          annotations:
            kompose.cmd: kompose convert -f docker-compose.yaml -o kubemanifests.yaml
            kompose.version: 1.19.0 (f63a961c)
          creationTimestamp: null
          labels:
            io.kompose.service: krishiai-tfserving
        spec:
          containers:
            - env:
                - name: MODEL_NAME
                  value: resnet50v2
              image: satyajitghana/krishiai_model:latest
              # imagePullPolicy: Never
              name: tfserving
              ports:
                - containerPort: 8500
                - containerPort: 8501
              resources: {}
          restartPolicy: Always
    status: {}
  - apiVersion: apps/v1
    kind: Deployment
    metadata:
      annotations:
        kompose.cmd: kompose convert -f docker-compose.yaml -o kubemanifests.yaml
        kompose.version: 1.19.0 (f63a961c)
      creationTimestamp: null
      labels:
        io.kompose.service: krishiai-web
      name: krishiai-web
    spec:
      replicas: 5
      strategy: {}
      selector:
        matchLabels:
          io.kompose.service: krishiai-web
      template:
        metadata:
          annotations:
            kompose.cmd: kompose convert -f docker-compose.yaml -o kubemanifests.yaml
            kompose.version: 1.19.0 (f63a961c)
          creationTimestamp: null
          labels:
            io.kompose.service: krishiai-web
        spec:
          containers:
            - env:
                - name: SERVING_HOSTNAME
                  value: krishiai-tfserving
              image: satyajitghana/krishiai_backend:latest
              # imagePullPolicy: Never
              name: web
              ports:
                - containerPort: 3000
              resources: {}
          restartPolicy: Always
    status: {}
kind: List
metadata: {}
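What ties the manifest together is labels: each Service's `selector` picks the pods whose labels contain the same key/value pairs, and each Deployment stamps those labels onto the pods it creates. A minimal Python sketch of that matching rule follows. `selects` is a hypothetical helper and the pod names are made up; the label keys and values are the ones from the manifest.

```python
def selects(selector, pod_labels):
    """True when every key/value pair in the selector is present in the pod's labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


# Selector of the krishiai-web Service from the manifest
web_service_selector = {"io.kompose.service": "krishiai-web"}

# Hypothetical pods; real pod names are generated by the Deployment
pods = {
    "krishiai-web-abc": {"io.kompose.service": "krishiai-web"},
    "krishiai-web-def": {"io.kompose.service": "krishiai-web", "extra": "label"},
    "krishiai-tfserving-xyz": {"io.kompose.service": "krishiai-tfserving"},
}

targets = sorted(name for name, labels in pods.items() if selects(web_service_selector, labels))
print(targets)
```

Extra labels on a pod do not prevent a match, which is why the five replicas of each Deployment all sit behind the same Service.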

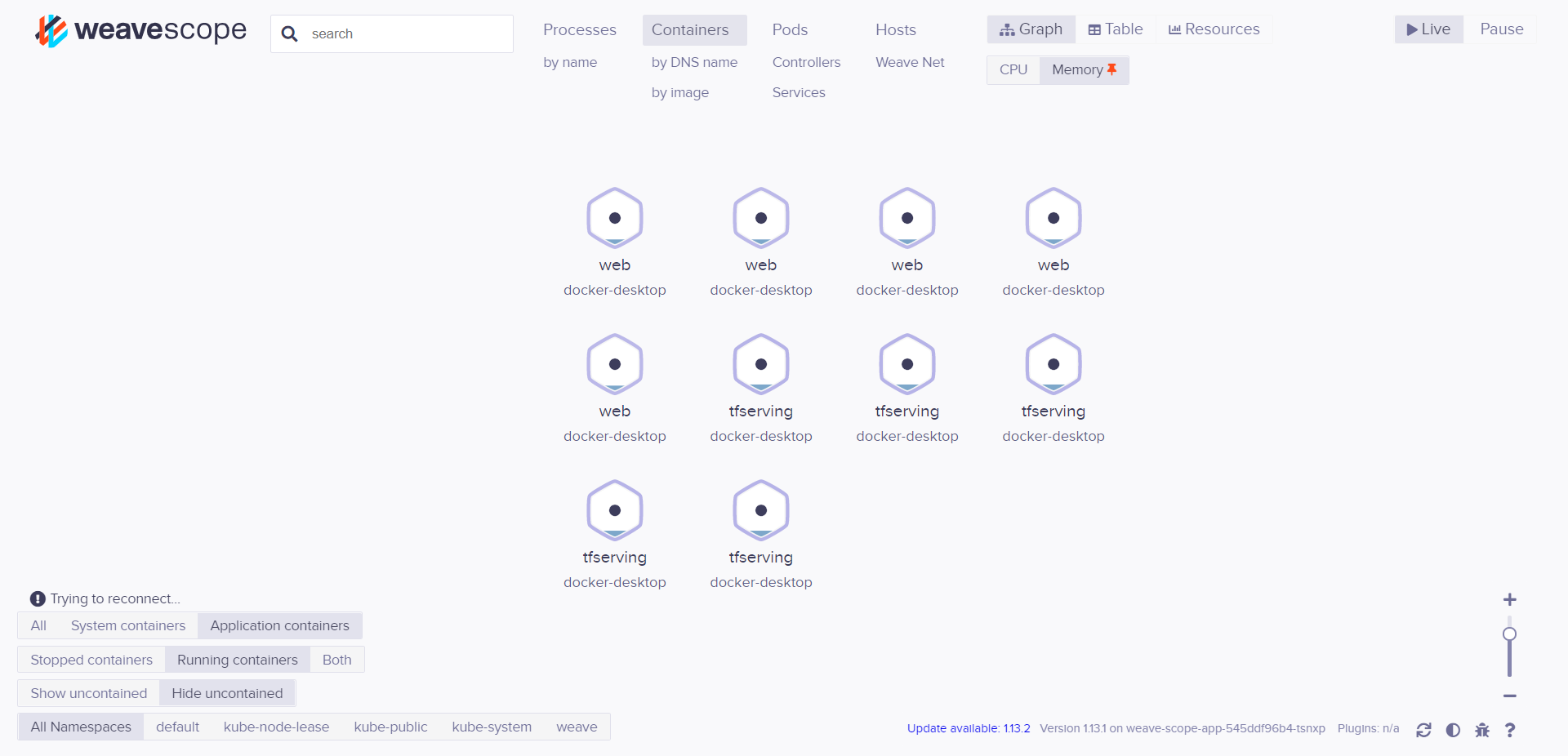

DEPLOYMENT EXAMPLE

git clone https://github.com/satyajitghana/docker-compose-k8s-and-everything-else

cd docker-compose-k8s-and-everything-else/krishiai_docker

kubectl apply -f kubemanifests.yaml # this will spin up all the pods

kubectl apply -f scope.yaml # this will bring up Weave Scope to visualize everything

kubectl port-forward -n weave "$(kubectl get -n weave pod --selector=weave-scope-component=app -o jsonpath='{.items..metadata.name}')" 4040

# to stop services

kubectl delete -f kubemanifests.yaml

kubectl delete -f scope.yaml

WEAVESCOPE

SCALING IN K8S

apiVersion: v1
items:
  - apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      creationTimestamp: ...
      name: hpa-name
      namespace: default
      resourceVersion: "664"
      selfLink: ...
      uid: ...
    spec:
      maxReplicas: 10
      minReplicas: 1
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: hpa-name
      targetCPUUtilizationPercentage: 50
    status:
      currentReplicas: 0
      desiredReplicas: 0
kind: List
metadata: {}
resourceVersion: ""
selfLink: ""

Based on specific metrics (CPU, memory, number of requests, or any custom metric), K8S will scale the service.
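The scaling decision itself is simple arithmetic. The sketch below follows the formula given in the Kubernetes documentation, desired = ceil(current * currentMetric / targetMetric), clamped to the min/max from the spec above. It is a simplification: the real controller also deals with missing metrics, pods that are not ready, and stabilization windows, and the 10% tolerance used here is the documented default rather than something set in this manifest.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent=50,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HorizontalPodAutoscaler decision for a CPU utilization target."""
    ratio = current_cpu_percent / target_cpu_percent
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(2, 90))   # 2 * 90/50 = 3.6, rounded up to 4
print(desired_replicas(4, 20))   # 4 * 20/50 = 1.6, rounded up to 2
print(desired_replicas(8, 100))  # 8 * 100/50 = 16, clamped to maxReplicas = 10
print(desired_replicas(3, 52))   # within tolerance, stays at 3
```

With targetCPUUtilizationPercentage: 50, pods averaging 90% CPU roughly double the replica count, while pods idling at 20% let it shrink.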

QUESTIONS ?

THANKS FOR COMING TO MY TED TALK ✌️
