Descriptive modelling of utterance transformations in chains: short-term linguistic evolution in a large-scale online experiment

Sébastien Lerique

IXXI, École Normale Supérieure de Lyon

HBES 2018, Amsterdam

Camille Roth

médialab, Sciences Po, Paris

Adamic et al. (2016)

Online opinion dynamics

Transmission chains for cultural evolution theories

Short-term cultural evolution

Reviews by

Mesoudi and Whiten (2008)

Whiten et al. (2016)

Leskovec et al. (2009)

Boyd & Richerson (1985, 2005)

Sperber (1996)

Hierarchicalisation

(Mesoudi and Whiten, 2004)

Social & survival information

(Stubbersfield et al., 2015)

Negativity

(Bebbington et al., 2017)

Manual

Automated (computational)

In vitro

In vivo

Moussaïd et al. (2015)

Lauf et al. (2013)

Lerique & Roth (2018)

Danescu-Niculescu-Mizil et al. (2012)

Quotes in 2011 news on Strauss-Kahn

Claidière et al. (2014)

Cornish et al. (2013)

Word list recall (Zaromb et al. 2006)

Simple sentence recall (Potter & Lombardi 1990)

Evolution of linguistic content

No ecological content

Experiment setup

Control over experimental setting

Fast iterations

Scale similar to in vivo

Browser-based

Story transformations

At Dover, the finale of the bailiffs' convention. Their duties, said a speaker, are "delicate, dangerous, and insufficiently compensated."

depth in branch

At Dover, the finale of the bailiffs convention,their duty said a speaker are delicate, dangerous and detailed

At Dover, at a Bailiffs convention. a speaker said that their duty was to patience, and determination

In Dover, at a Bailiffs convention, the speaker said that their duty was to patience.

In Dover, at a Bailiffs Convention, the speak said their duty was to patience

Modelling transformations

Needleman and Wunsch (1970)

AGAACT-

| ||

-G-AC-G

AGAACT

GACG

Finding her son, Alvin, 69, hanged, Mrs Hunt, of Brighton, was so depressed she could not cut him down

Finding her son Arthur 69 hanged Mrs Brown from Brighton was so upset she could not cut him down

Finding her son Alvin 69 hanged Mrs Hunt of - - Brighton, was so depressed she could not cut him down

Finding her son Arthur 69 hanged Mrs - - Brown from Brighton was so upset she could not cut him down
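The alignment idea above can be sketched in a few lines. This is a minimal version of Needleman and Wunsch (1970) global alignment with a single linear gap penalty; the scoring values (`match=1`, `mismatch=-1`, `gap=-1`) and the function name are assumptions chosen for illustration, not the parameters used in the talk, so the gaps it produces may differ from those drawn on the slide.

```python
def needleman_wunsch(a, b, match=1, mismatch=-1, gap=-1, gap_symbol="-"):
    """Globally align two sequences (strings or lists of words).

    Returns (score, aligned_a, aligned_b), where the aligned rows are
    lists of equal length padded with gap_symbol.
    """
    n, m = len(a), len(b)
    # score[i][j] = best score for aligning a[:i] with b[:j]
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            pair = match if a[i - 1] == b[j - 1] else mismatch
            score[i][j] = max(
                score[i - 1][j - 1] + pair,  # align a[i-1] with b[j-1]
                score[i - 1][j] + gap,       # a[i-1] faces a gap
                score[i][j - 1] + gap,       # b[j-1] faces a gap
            )
    # Trace back from the bottom-right corner to recover one optimal alignment.
    top, bottom = [], []
    i, j = n, m
    while i > 0 or j > 0:
        pair = match if i > 0 and j > 0 and a[i - 1] == b[j - 1] else mismatch
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + pair:
            top.append(a[i - 1]); bottom.append(b[j - 1])
            i -= 1; j -= 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            top.append(a[i - 1]); bottom.append(gap_symbol)
            i -= 1
        else:
            top.append(gap_symbol); bottom.append(b[j - 1])
            j -= 1
    return score[n][m], top[::-1], bottom[::-1]


if __name__ == "__main__":
    # Character-level example from the slide.
    _, top, bottom = needleman_wunsch("AGAACT", "GACG")
    print("".join(top))
    print("".join(bottom))

    # The same routine works on word lists.
    parent = ("Finding her son Alvin 69 hanged Mrs Hunt of Brighton "
              "was so depressed she could not cut him down").split()
    child = ("Finding her son Arthur 69 hanged Mrs Brown from Brighton "
             "was so upset she could not cut him down").split()
    _, top, bottom = needleman_wunsch(parent, child)
    print(" ".join(top))
    print(" ".join(bottom))
```

With these toy scores a mismatch costs the same as a single gap, so differing words tend to be paired as replacements rather than split into a deletion plus an insertion. Changing the relative costs shifts that balance.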

Apply to utterances using NLP

At Dover, the finale of the bailiffs convention, their duty said a speaker are delicate, dangerous and detailed

At Dover, at a Bailiffs convention. a speaker said that their duty was to patience, and determination

At Dover the finale of the - - bailiffs convention - - - - their duty

At Dover - - - - at a Bailiffs convention a speaker said that their duty

said a speaker are delicate dangerous - - - and detailed -

- - - - - - was to patience and - determination

At Dover the finale of the - - bailiffs convention |-Exchange-1------| their duty

At Dover - - - - at a Bailiffs convention a speaker said that their duty

said a speaker are delicate dangerous - - - and detailed -

|-Exchange-1------------------------| was to patience and - determination

said a speaker are delicate dangerous |-E2----|

|E2| a speaker - - - said that

said -

said that

\(\hookrightarrow E_1\)

\(\hookrightarrow E_2\)

Extend to build recursive deep alignments

Deep sequence alignments

Transformation diagrams

Results

[Figure. Panels: Deletion, Insertion, Replacement. Axes: Frequency, \(|chunk|\), position in \(u\), \(|u|_w\).]

Number of operations vs. utterance length

Susceptibility vs. position in utterance
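To make the operation counts concrete, here is a minimal sketch of how deletions, insertions and replacements could be read off a gapped word alignment. The function name and the column-by-column classification rule are assumptions for illustration; the talk's own definitions (for example how exchanges are handled) may differ. The rows are the aligned example from the modelling slides, with punctuation stripped.

```python
from collections import Counter


def count_operations(parent_row, child_row, gap="-"):
    """Classify each alignment column as an edit operation.

    parent_row / child_row are equal-length lists of words, padded with
    `gap` where the other row has a word without counterpart.
    """
    if len(parent_row) != len(child_row):
        raise ValueError("aligned rows must have the same length")
    counts = Counter()
    for p, c in zip(parent_row, child_row):
        if p == gap:
            counts["insertion"] += 1      # word only in the child
        elif c == gap:
            counts["deletion"] += 1       # word only in the parent
        elif p.lower() != c.lower():
            counts["replacement"] += 1    # different words face each other
        else:
            counts["stable"] += 1         # same word (ignoring case)
    return counts


parent_row = ("Finding her son Alvin 69 hanged Mrs Hunt of - - Brighton "
              "was so depressed she could not cut him down").split()
child_row = ("Finding her son Arthur 69 hanged Mrs - - Brown from Brighton "
             "was so upset she could not cut him down").split()

ops = count_operations(parent_row, child_row)
n_parent_words = sum(1 for w in parent_row if w != "-")
print(dict(ops), "for a parent of", n_parent_words, "words")
```

Counting per column keeps the position of each operation available, which is what a susceptibility-by-position analysis would need.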

Deletions tend to gate other operations

Insertions relate to preceding deletions

Stubbersfield et al. (2015)

Bebbington et al. (2017)

Links the low-level with contrasted outcomes

Conclusion

In vivo & oral applications (social networks)

Parsimonious explanations of higher level evolution

Semantic parses, long-lived chains with recurring changes

Lots to do

Quantitative analysis of changes

Inner structure of transformations

WEIRD participants

Written text

No controlled context

Caveats

Very Soon™ on arXiv.org

Challenges with meaning

Can you think of anything else, Barbara, they might have told me about that party?

I've spoken to the other children who were there that day.

S

B

Abuser

The Devil's Advocate (1997)

?

Strong pragmatics (Scott-Phillips, 2017)

Access to context

Theory of the constitution of meaning

Challenges

Seeds

| | Pilot 1 | Exp. A | Exp. A' |
|---|---|---|---|
| #participants | 53 | 49 | 2 x 70 |
| #root utterances | 54 | 50 | 25/batch |
| Tree size | 48 | 49 | 70 |
| Duration | 64 min | 43 min | 37 min/batch |
| Spam rate | 22.4% + 3.5% | 0.8% + 0.6% | 1% + 0.1% |
| Usable reformulations | 1980 | 2411 | 3506 |

Pilots

MemeTracker, WikiSource,

12 Angry Men, Tales,

News stories

Exp. A

Memorable/non-memorable quote pairs

(Danescu-Niculescu-Mizil et al., 2012)

Exp. A'

Nouvelles en trois lignes

(Fénéon, 1906)

“Hanging on to the door, a traveller a tad overweight caused his carriage to topple, in Bromley, and fractured his skull.”

“Three bears driven down from the heights of the Pyrenees by snow have been decimating the sheep of the valley.”

“A dozen hawkers who had been announcing news of a nonexistent anarchist bombing at King's Cross have been arrested.”

Live experiment

Alignment optimisation

\(\theta_{open}\)

\(\theta_{extend}\)

\(\theta_{mismatch}\)

\(\theta_{exchange}\) by hand

All transformations

Hand-coded training set size?

Train the \(\theta_*\) on hand-coded alignments

Simulate the training process: imagine we know the optimal \(\theta\)

1. Sample \(\theta^0 \in [-1, 0]^3\) to generate artificial alignments for all transformations

2. From those, sample \(n\) training alignments

3. Brute-force \(\hat{\theta}_1, \ldots, \hat{\theta}_m\) estimators of \(\theta^0\)

4. Evaluate the number of errors per transformation on the test set

Test set

10x

10x

\(\Longrightarrow\) 100-200 hand-coded alignments yield \(\leq\) 1 error/transformation

Gap open cost \(\rightarrow \theta_{open}\)

Gap extend cost \(\rightarrow \theta_{extend}\)

Item match-mismatch
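As an illustration of how the three parameters interact, the sketch below scores a given gapped alignment under an affine gap scheme. Several points are assumptions rather than the talk's definitions: a gap of length \(k\) is charged \(\theta_{open} + (k-1)\,\theta_{extend}\), a matching pair scores a fixed `match_score` of 1, a mismatching pair scores \(\theta_{mismatch}\), and words are compared case-insensitively. The numeric values are arbitrary points in \([-1, 0]\).

```python
def affine_alignment_score(top, bottom, theta_open, theta_extend,
                           theta_mismatch, match_score=1.0, gap="-"):
    """Score an existing alignment with affine gap costs (illustrative)."""
    if len(top) != len(bottom):
        raise ValueError("aligned rows must have the same length")
    total = 0.0
    previous_gap_row = None  # which row held a gap in the previous column
    for x, y in zip(top, bottom):
        if x == gap and y == gap:
            raise ValueError("a column cannot be a gap in both rows")
        if x == gap or y == gap:
            row = "top" if x == gap else "bottom"
            # Continuing a gap in the same row is an extension,
            # anything else opens a new gap.
            total += theta_extend if row == previous_gap_row else theta_open
            previous_gap_row = row
        else:
            total += match_score if x.lower() == y.lower() else theta_mismatch
            previous_gap_row = None
    return total


# First half of the Dover alignment shown in the modelling slides.
top = "At Dover the finale of the - - bailiffs convention - - - - their duty".split()
bottom = "At Dover - - - - at a Bailiffs convention a speaker said that their duty".split()

score = affine_alignment_score(top, bottom, theta_open=-0.5,
                               theta_extend=-0.25, theta_mismatch=-0.75)
print(score)
```

Here the alignment has 6 matching columns, 3 gap openings and 7 gap extensions, so a cheaper \(\theta_{extend}\) relative to \(\theta_{open}\) favours a few long gaps over many short ones.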

Example data

Immediately after I become president I will confront this economic challenge head-on by taking all necessary steps

immediately after I become a president I will confront this economic challenge

Immediately after I become president, I will tackle this economic challenge head-on by taking all the necessary steps

This crisis did not develop overnight and it will not be solved overnight

the crisis did not developed overnight, and it will be not solved overnight

original

This, crisis, did, not, develop, overnight, and, it, will, not, be, solved, overnight

this, crisis, did, not, develop, overnight, and, it, will, not, be, solved, overnight

this, crisis, did, not, develop, overnight, and, it, will, not, be, solved, overnight

crisi, develop, overnight, solv, overnight

tokenize

lowercase & length > 2

stopwords

stem
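The first three steps of this pipeline can be sketched with the standard library alone. The stopword set below is a toy list assumed for this example, not the list used in the experiment, and the final stemming step is left out because it needs an external stemmer (the stems on the slide, such as "crisi" and "solv", look like Porter-style output, but that is a guess).

```python
import re

# Toy stopword list, assumed for illustration only.
STOPWORDS = {"this", "the", "did", "not", "and", "will", "didn't", "won't"}


def content_words(utterance):
    """Tokenize, lowercase, drop short tokens, then drop stopwords."""
    tokens = re.findall(r"[A-Za-z']+", utterance)          # tokenize
    tokens = [t.lower() for t in tokens if len(t) > 2]     # lowercase & length > 2
    return [t for t in tokens if t not in STOPWORDS]       # stopwords


original = ("This crisis did not develop overnight and it will not "
            "be solved overnight")
print(content_words(original))
```

Applying a stemmer to each remaining word would then give the last row of the slide.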

The crisis didn't happen today won't be solved by midnight.

crisi, happen, today, solv, midnight

d = 0.6

Utterance-to-utterance distance
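The slide does not define \(d\). One reading that is consistent with the example is a Levenshtein (edit) distance computed over the two lists of stemmed content words and divided by the length of the longer list; the sketch below assumes exactly that, and the function names are hypothetical. The two word lists are copied from the slide.

```python
def levenshtein(a, b):
    """Edit distance between two sequences (insert, delete, substitute)."""
    previous = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        current = [i]
        for j, y in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,             # delete x
                current[j - 1] + 1,          # insert y
                previous[j - 1] + (x != y),  # substitute, free if equal
            ))
        previous = current
    return previous[-1]


def normalised_distance(a, b):
    """Edit distance divided by the longer length, so the value is in [0, 1]."""
    longest = max(len(a), len(b))
    return levenshtein(a, b) / longest if longest else 0.0


stems_original = ["crisi", "develop", "overnight", "solv", "overnight"]
stems_rewrite = ["crisi", "happen", "today", "solv", "midnight"]

d = normalised_distance(stems_original, stems_rewrite)
print(d)
```

Under this assumption three of the five stems have to be substituted, which matches the value shown on the slide.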

Aggregate trends

Size reduction

Transmissibility

Variability

Lexical evolution - POS

Step-wise

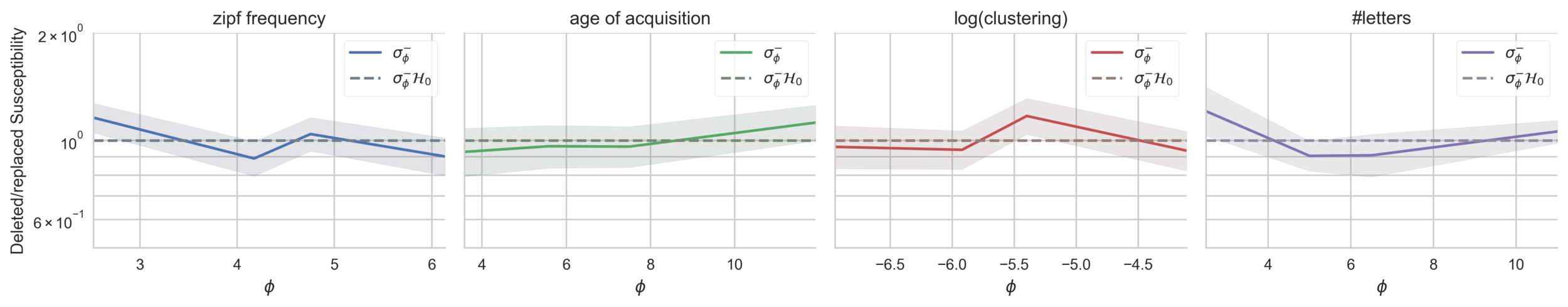

Susceptibility

Lexical evolution (1)

Step-wise

Susceptibility

Feature variation

Lexical evolution (2)

Along the branches
