Athletic Intelligence for Legged Robots

Shamel Fahmi

December, 2022

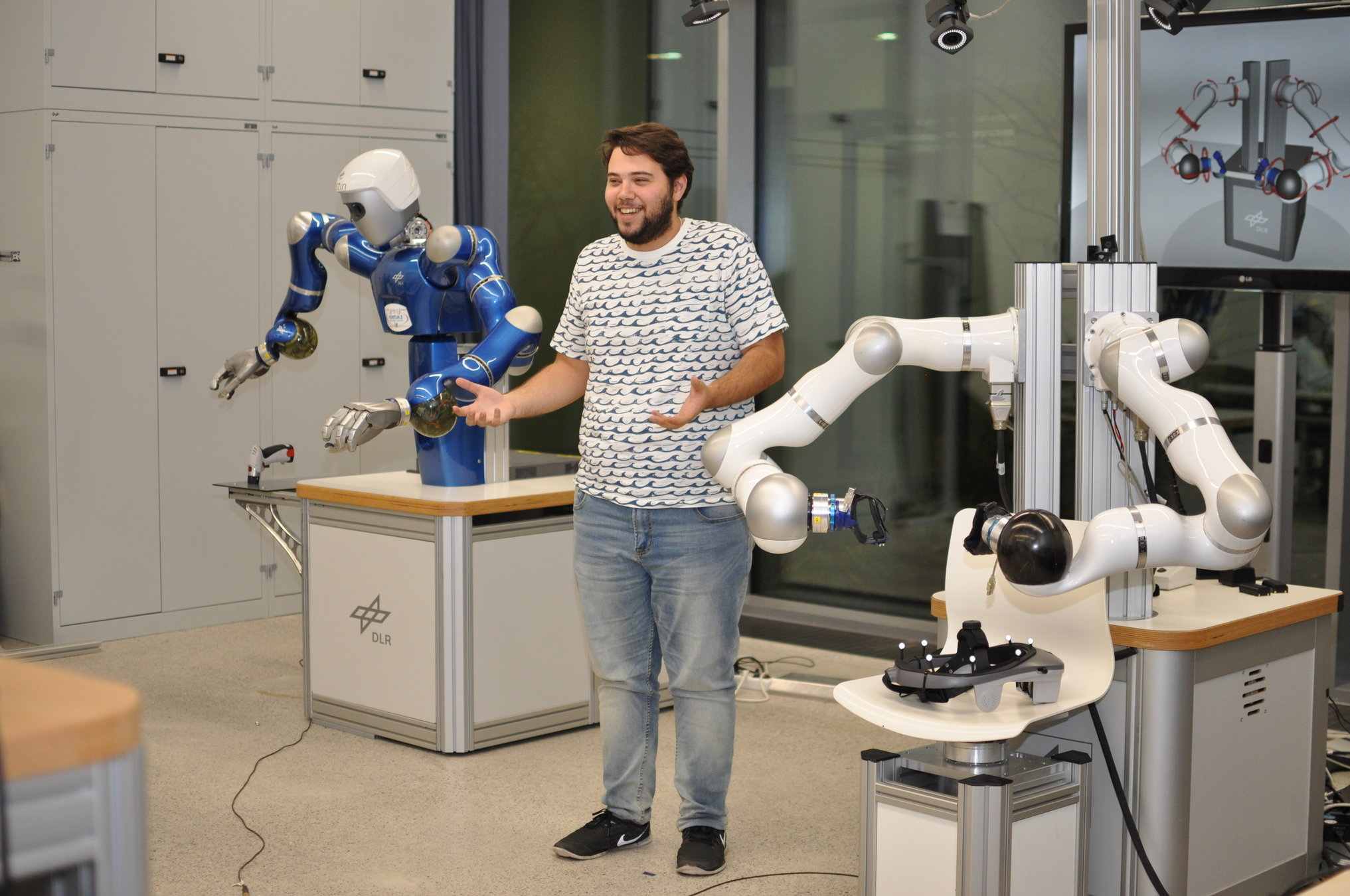

Dynamic Legged Systems Lab, IIT

- Whole-Body Control
  - Extended the WBC framework to handle the full robot dynamics and challenging terrains, and to generalize beyond rigid contacts.
- State Estimation
  - Investigated how standard state estimators behave under non-rigid contacts.
- Vision-Based Planning
  - Developed a vision-based planning approach that plans footholds and body poses based on the robot's "learned" skills.

Biomimetic Robotics Lab, MIT

- Whole-Body Control
  - Developing the WBC of the MIT Humanoid.
- Reinforcement Learning
  - Building up the RL infrastructure for Mini-Cheetah.
  - Developing RL controllers for Mini-Cheetah and the MIT Humanoid.
- State Estimation
  - Investigating data-driven approaches in state estimation.

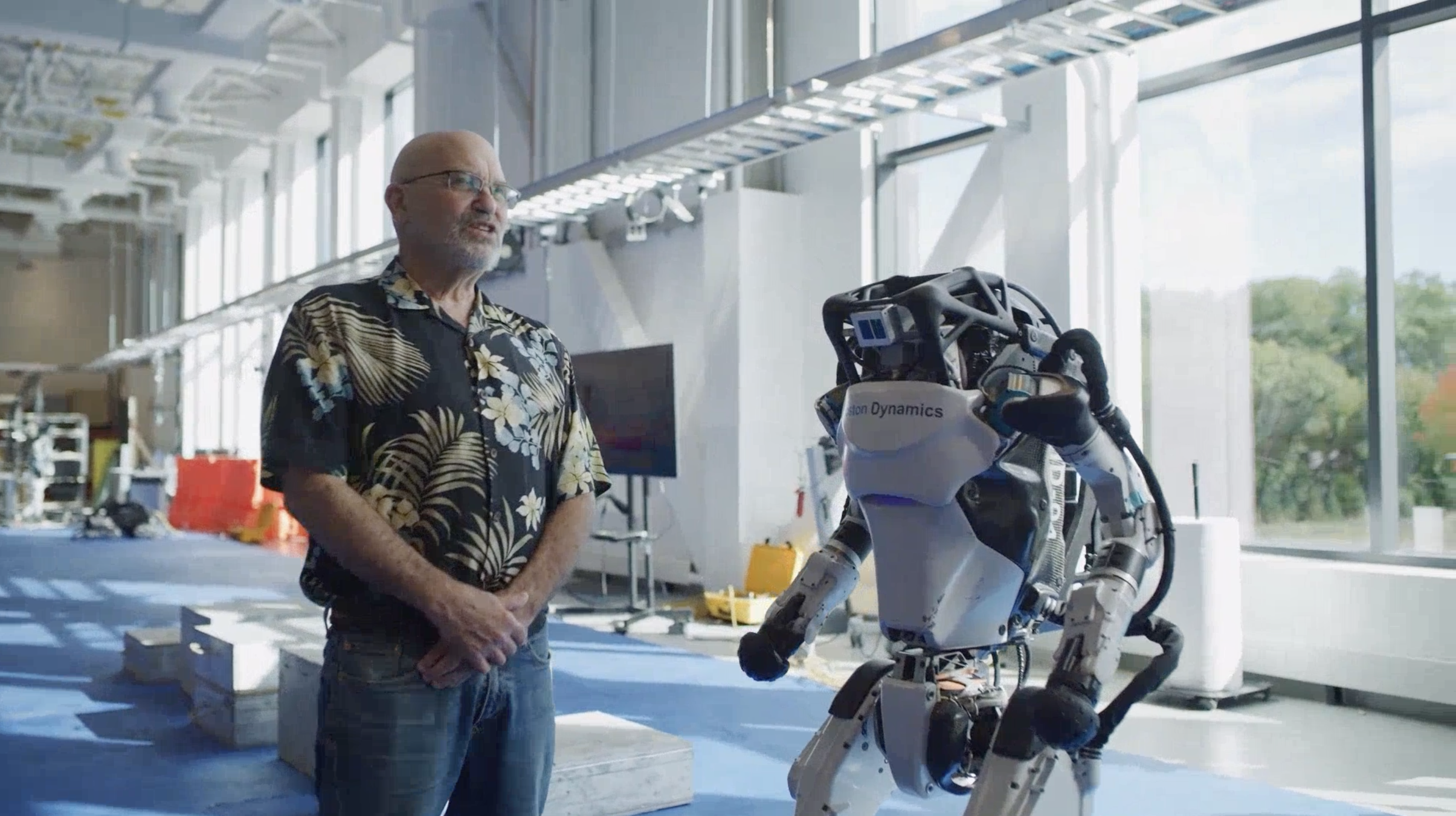

"Marc Raibert divided intelligence into Cognitive Intelligence (CI) and Athletic Intelligence (AtI). CI allows us to make abstract plans, and to understand and solve broader problems. AtI allows us to operate our bodies in such a way that we can balance, stand, walk, climb, etc. AtI also lets us do real-time perception so that we can interact with the world around us."

S. Fahmi, On Terrain-Aware Locomotion for Legged Robots, Ph.D. dissertation, Italian Institute of Technology, 2021.

M. Raibert and S. Kuindersma, Boston Dynamics, the Challenges of Real-World Reinforcement Learning Workshop in NeurIPS, 2021.

Athletic Intelligence for Legged Robots?

Athletic Intelligence allows the robot to take decisions based on the surrounding world using its perception.

decisions, world, perception

Using Optimization and Learning

- Control
- State Estimation
- Planning
- Proprioception
- Vision
- Physical Properties
- Geometry

STANCE: Whole-Body Control for Legged Robots

ViTAL: Vision-Based Terrain-Aware Locomotion Planning

MIMOC: Reinforcement Learning for Legged Robots

Outline

- About Me
- Athletic Intelligence for Legged Robots
- STANCE: Whole-Body Control for Legged Robots
- ViTAL: Vision-Based Terrain-Aware Locomotion Planning
- MIMOC: Reinforcement Learning for Legged Robots
- Final Thoughts

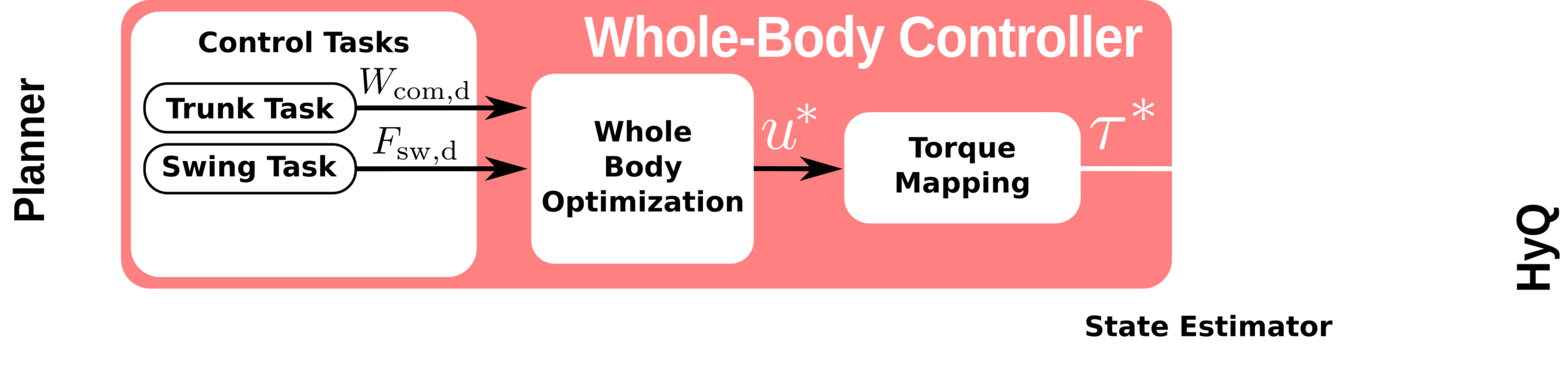

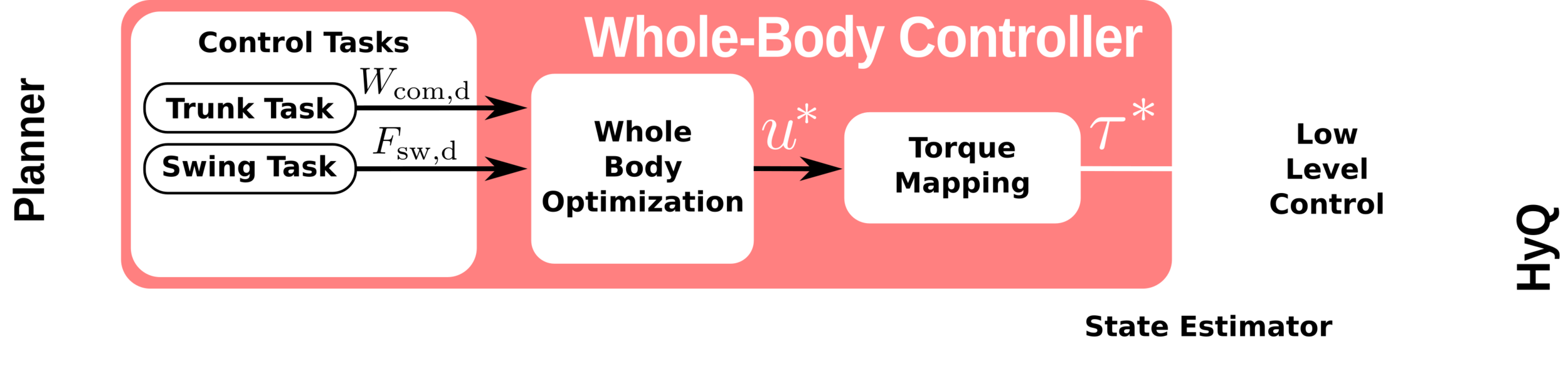

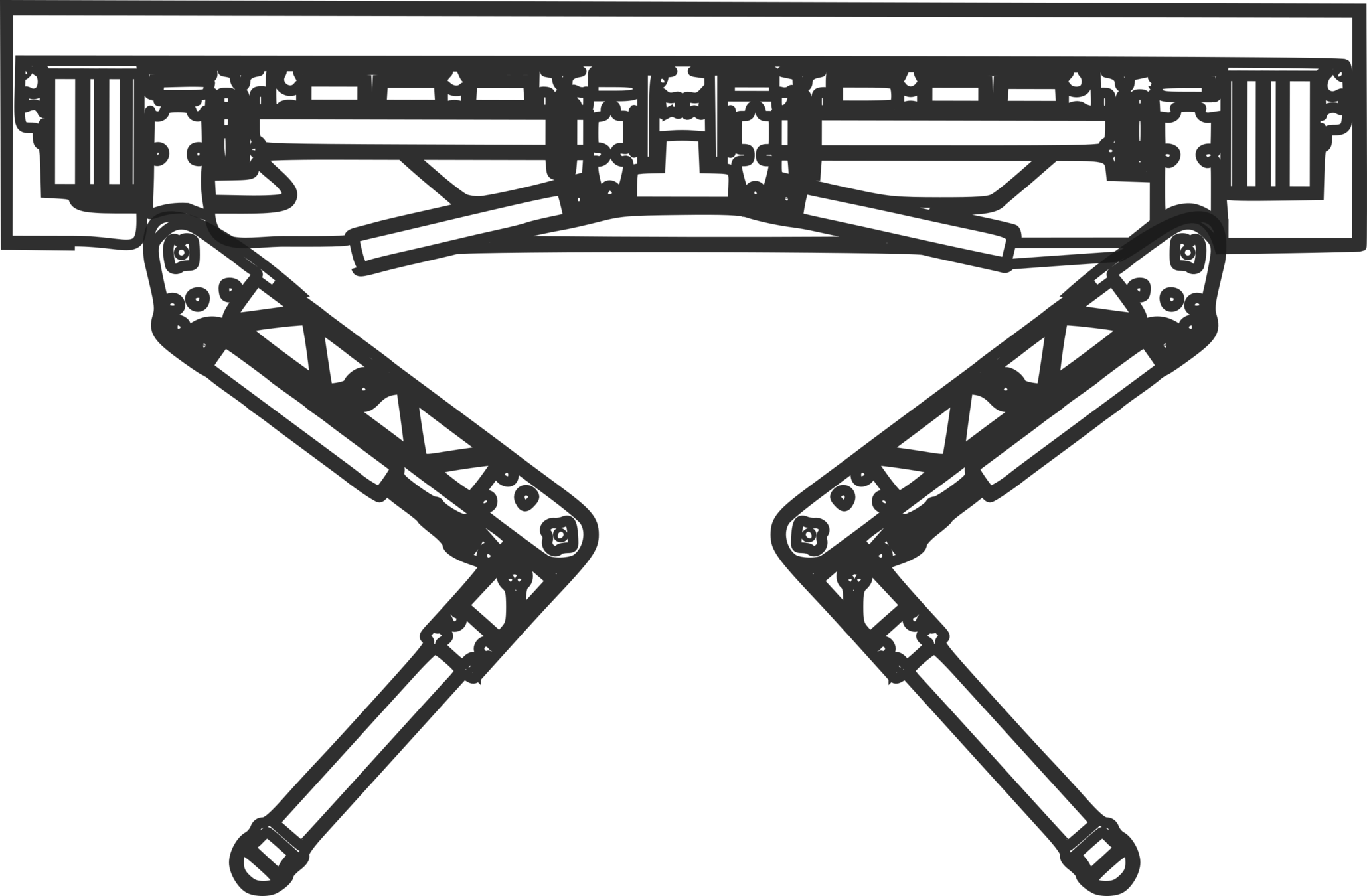

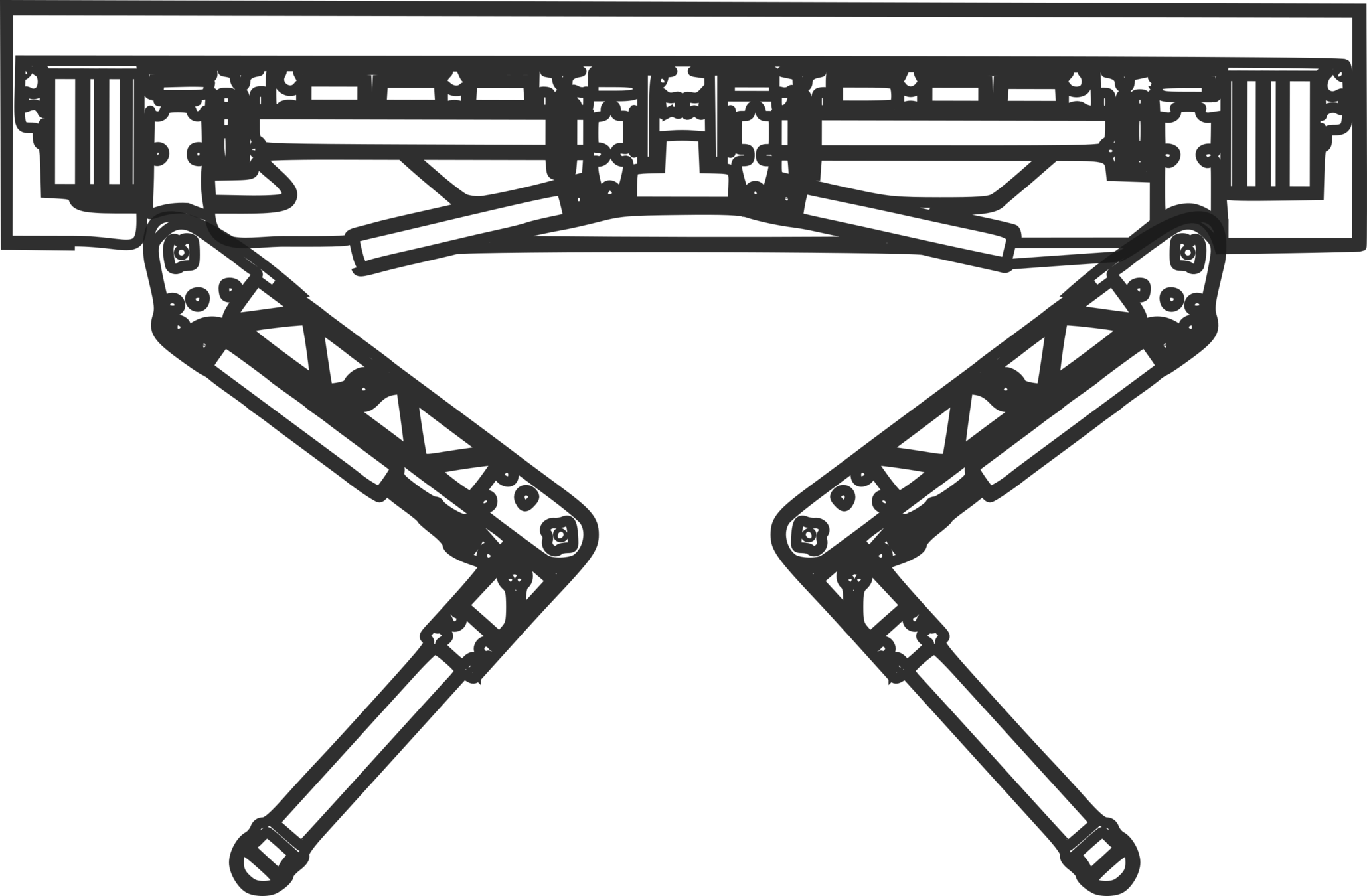

Whole-Body Control (WBC) Framework

Whole-Body Control (WBC) Objectives

- Objectives
  - Track the planned references (body and feet) and maintain balance.
  - Reason about the robot's full dynamics, and joint and torque limits.
  - Respect contact constraints (friction cones, rigid contacts, unilaterality).
- How to deal with all these objectives in an optimal way?
  - Cast these objectives as an optimization problem (QP).
  - Map the solution to joint torques using inverse dynamics.
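As a rough illustration of the two steps above, the sketch below solves a deliberately tiny, one-dimensional version of the problem: a point mass supported by one leg, with the vertical ground reaction force as the only decision variable. The function names, the regularization weight, and the massless-leg torque mapping are assumptions made for illustration. An actual WBC would use the full rigid-body dynamics, friction cones, and a numerical QP solver.

```python
GRAVITY = 9.81  # m/s^2


def solve_vertical_qp(mass, acc_des, f_max, reg=1e-3):
    """Toy 1-D QP (illustrative only).

    minimise   (mass * (acc_des + g) - f)^2 + reg * f^2
    subject to 0 <= f <= f_max   (unilateral contact and a force limit)
    """
    # Force that would realise the desired acceleration exactly (m*a = f - m*g).
    target = mass * (acc_des + GRAVITY)
    # Stationary point of the quadratic cost without constraints.
    f_free = target / (1.0 + reg)
    # For a convex 1-D QP with box constraints, clipping the free optimum is exact.
    return min(max(f_free, 0.0), f_max)


def leg_torques(jacobian_row, f_z):
    """Quasi-static mapping for a massless leg (assumed convention): tau = -J^T f."""
    return [-j * f_z for j in jacobian_row]


if __name__ == "__main__":
    f_z = solve_vertical_qp(mass=10.0, acc_des=0.0, f_max=500.0)
    print(f_z, leg_torques([0.2, 0.1], f_z))
```

The clipping step plays the role of the inequality constraints: a request to accelerate downward faster than gravity cannot be met because the ground can only push, so the force saturates at zero.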

Can the WBC handle all types of terrain?

- Most WBCs are not terrain-aware; they fail to generalize beyond rigid terrain.
- Locomotion over soft terrain is challenging in itself because of the induced contact dynamics.

What is the problem?

- There is a mismatch between what the WBC assumes and what is actually happening.
- Drop the rigid-terrain assumption. Use a more generic model that accounts for the terrain impedance.
- But that is not enough: how do we know the impedance parameters of the terrain?
- Can we estimate them?
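One simple way to picture a terrain impedance is a linear spring-damper along the contact normal. The sketch below assumes that model. The slides do not specify the model, so the function and parameter names are illustrative.

```python
def compliant_contact_force(penetration, penetration_rate, stiffness, damping):
    """Normal force of an assumed linear spring-damper terrain model.

    penetration      : how far the foot has sunk into the terrain [m], > 0 in contact
    penetration_rate : time derivative of the penetration [m/s]
    stiffness        : terrain stiffness K [N/m]
    damping          : terrain damping D [N s/m]
    """
    if penetration <= 0.0:
        return 0.0  # foot is not touching the terrain
    force = stiffness * penetration + damping * penetration_rate
    return max(force, 0.0)  # the terrain can push on the foot but never pull it
```

A rigid-terrain controller corresponds to the limit of very large stiffness, where no penetration is allowed at all. Soft terrain breaks that assumption.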

How to solve it?

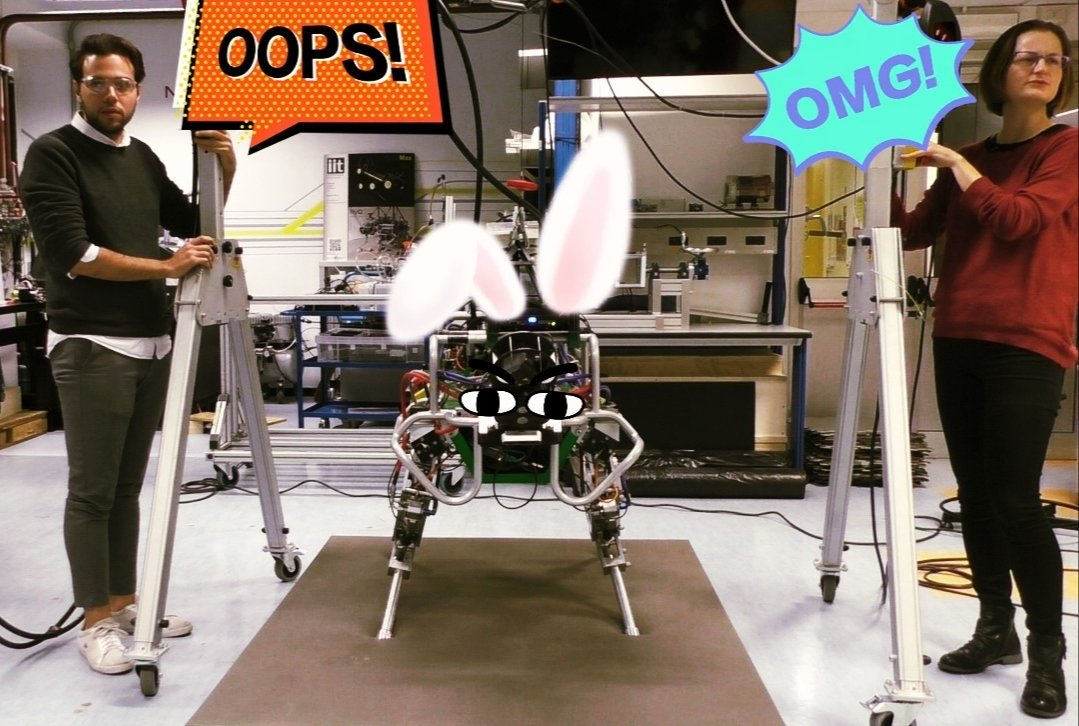

- Standard WBC (sWBC).
- C3WBC: reformulate the sWBC to account for the contact dynamics (C3 = compliant contact consistent).
- How will the new WBC know about the current terrain impedance parameters?
- TCE: feed back the terrain impedance parameters using a Terrain Compliance Estimator (TCE).
- The C3WBC and the TCE run simultaneously, at every control loop.

Soft Terrain Adaptation aNd Compliance Estimation

(STANCE)
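The slides do not describe how the estimator works internally. As a stand-in, the sketch below fits a stiffness and a damping value to logged contact samples with batch least squares, assuming the linear spring-damper model f = K p + D p_dot. The function name and the sample layout are hypothetical.

```python
def estimate_terrain_impedance(penetrations, rates, forces):
    """Least-squares fit of (stiffness, damping) for f = K * p + D * p_dot.

    Solves the 2x2 normal equations in closed form (Cramer's rule).
    """
    s_pp = sum(p * p for p in penetrations)
    s_pd = sum(p * d for p, d in zip(penetrations, rates))
    s_dd = sum(d * d for d in rates)
    s_pf = sum(p * f for p, f in zip(penetrations, forces))
    s_df = sum(d * f for d, f in zip(rates, forces))

    det = s_pp * s_dd - s_pd * s_pd
    if abs(det) < 1e-12:
        # Penetration and its rate are (nearly) collinear in the data.
        raise ValueError("samples do not excite stiffness and damping independently")

    stiffness = (s_pf * s_dd - s_df * s_pd) / det
    damping = (s_pp * s_df - s_pd * s_pf) / det
    return stiffness, damping
```

In a closed loop, an estimate like this would be refreshed from recent contact data and passed to the controller, which matches the "simultaneously, at every control loop" idea above.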

Published In:

† S. Fahmi, M. Focchi, A. Radulescu, G. Fink, V. Barasuol, and C. Semini, “STANCE: Locomotion Adaptation over Soft Terrain,” IEEE Trans. Robot. (T-RO), vol. 36, no. 2, pp. 443–457, Apr. 2020, doi: 10.1109/TRO.2019.2954670.

S. Fahmi, C. Mastalli, M. Focchi, and C. Semini, “Passive Whole-Body Control for Quadruped Robots: Experimental Validation over Challenging Terrain,” IEEE Robot. Automat. Lett. (RA-L), vol. 4, no. 3, pp. 2553–2560, Jul. 2019, doi: 10.1109/LRA.2019.2908502.

† Finalist for the IEEE RAS Italian Chapter Young Author Best Paper Award, and for the IEEE RAS Technical Committee on Model-Based Optimization for Robotics Best Paper Award.

Locomotion Planning

Two ways to deal with locomotion planning:

1. Coupled planning:
   - Plan the feet & base states simultaneously.
2. Decoupled planning:
   - Separate the plan into feet & base plans.
   - Planning the feet motion ⇒ foothold selection
   - Planning the body motion ⇒ pose adaptation

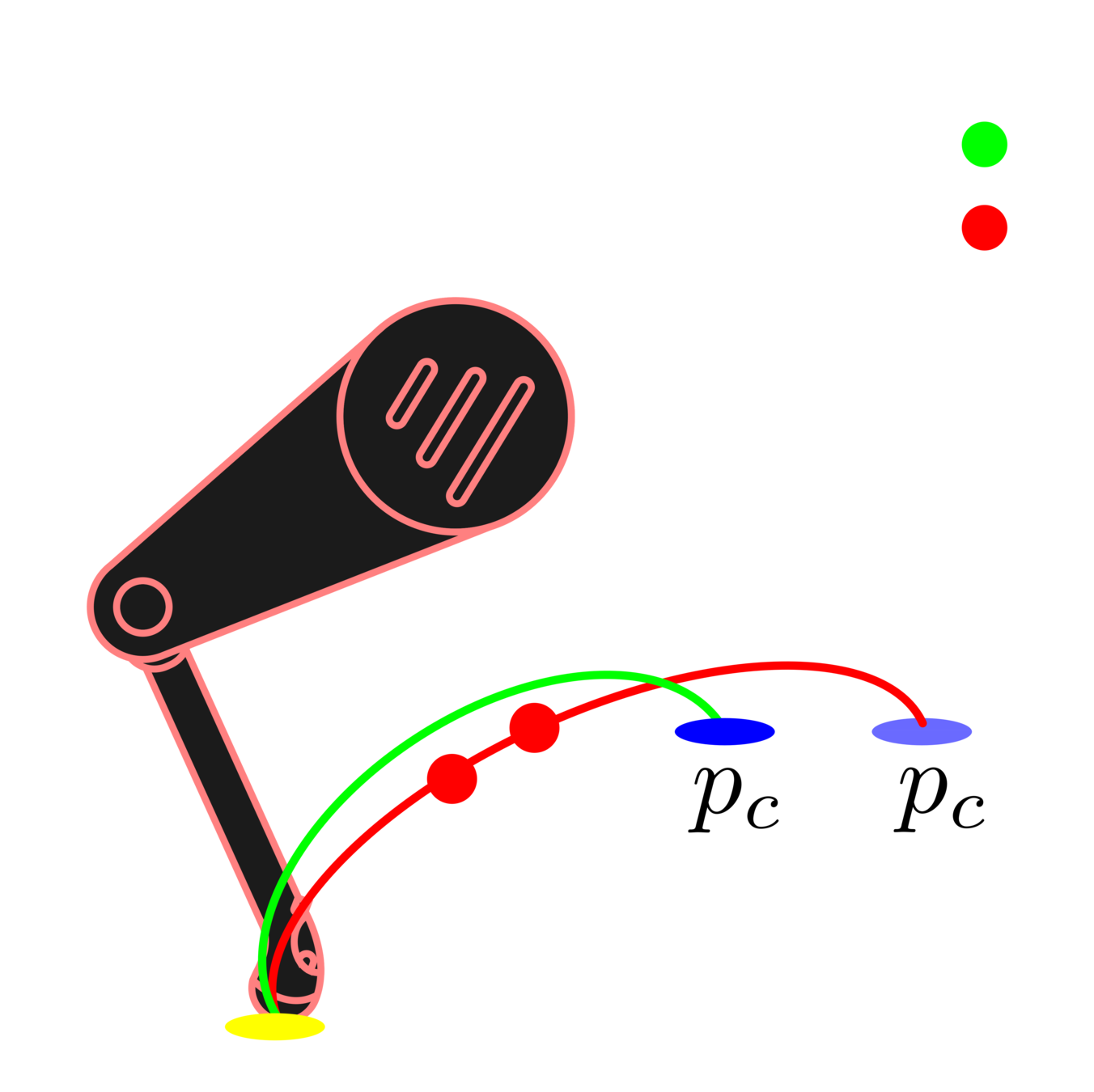

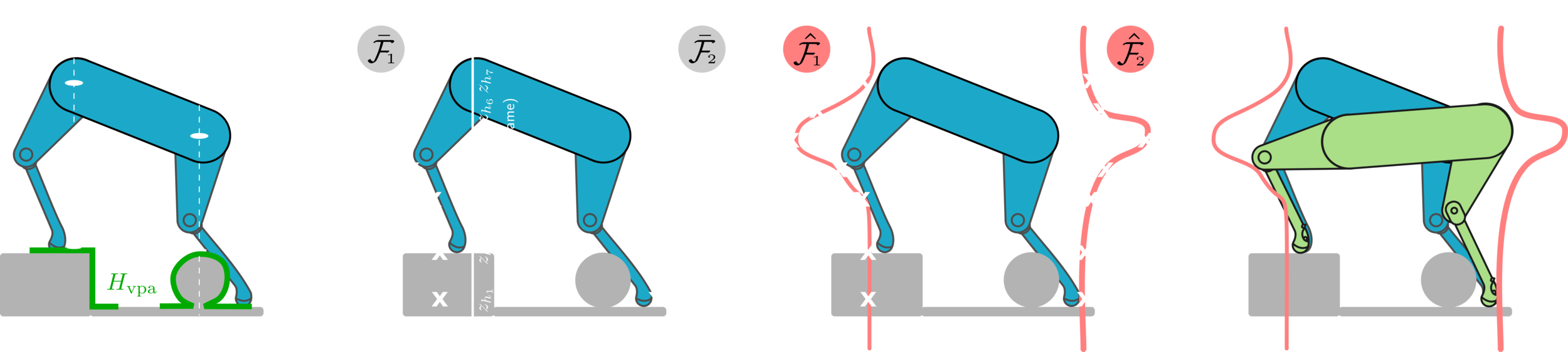

Foothold Selection

Goal: Select footholds based on the terrain information and the robot's capabilities.

Vision-Based Foothold Adaptation (VFA)

Barasuol 2015, Villarreal 2019

V. Barasuol et al., Reactive Trotting with Foot Placement Corrections through Visual Pattern Classification, IROS, 2015.

O. Villarreal et al., Fast and Continuous Foothold Adaptation for Dynamic Locomotion through CNNs, RA-L, 2019.

Pose Adaptation

- Goal: find the optimal robot pose that can:
  - Maximize the reachability of the given selected footholds.
  - Avoid terrain collision and maintain static stability.
- A pose optimizer is used to deal with these objectives.
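To make the static-stability objective concrete, the sketch below checks whether the center-of-mass projection lies inside the support polygon formed by the stance feet. It assumes a convex polygon with vertices listed counter-clockwise. The function name and the optional margin are illustrative, not taken from the cited works.

```python
def is_statically_stable(com_xy, stance_feet_xy, margin=0.0):
    """True if the CoM projection is inside the convex support polygon.

    stance_feet_xy must list distinct vertices in counter-clockwise order.
    margin is the minimum required distance from every polygon edge [m].
    """
    n = len(stance_feet_xy)
    if n < 3:
        return False  # a point or a line has no area to stand in
    for i in range(n):
        x1, y1 = stance_feet_xy[i]
        x2, y2 = stance_feet_xy[(i + 1) % n]
        edge_len = ((x2 - x1) ** 2 + (y2 - y1) ** 2) ** 0.5
        # Signed distance from the edge; positive means "left of the edge",
        # which is the inside of a counter-clockwise polygon.
        dist = ((x2 - x1) * (com_xy[1] - y1) - (y2 - y1) * (com_xy[0] - x1)) / edge_len
        if dist < margin:
            return False
    return True
```

A pose optimizer could use a check like this as a constraint, or use the smallest edge distance as a stability margin to maximize.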

M. Kalakrishnan et al., Learning, planning, and control for quadruped locomotion over challenging terrain, IJRR 2011.

P. Fankhauser et al., Robust Rough-Terrain Locomotion with a Quadrupedal Robot, IROS, 2018.

Kalakrishnan 2011

Fankhauser 2018

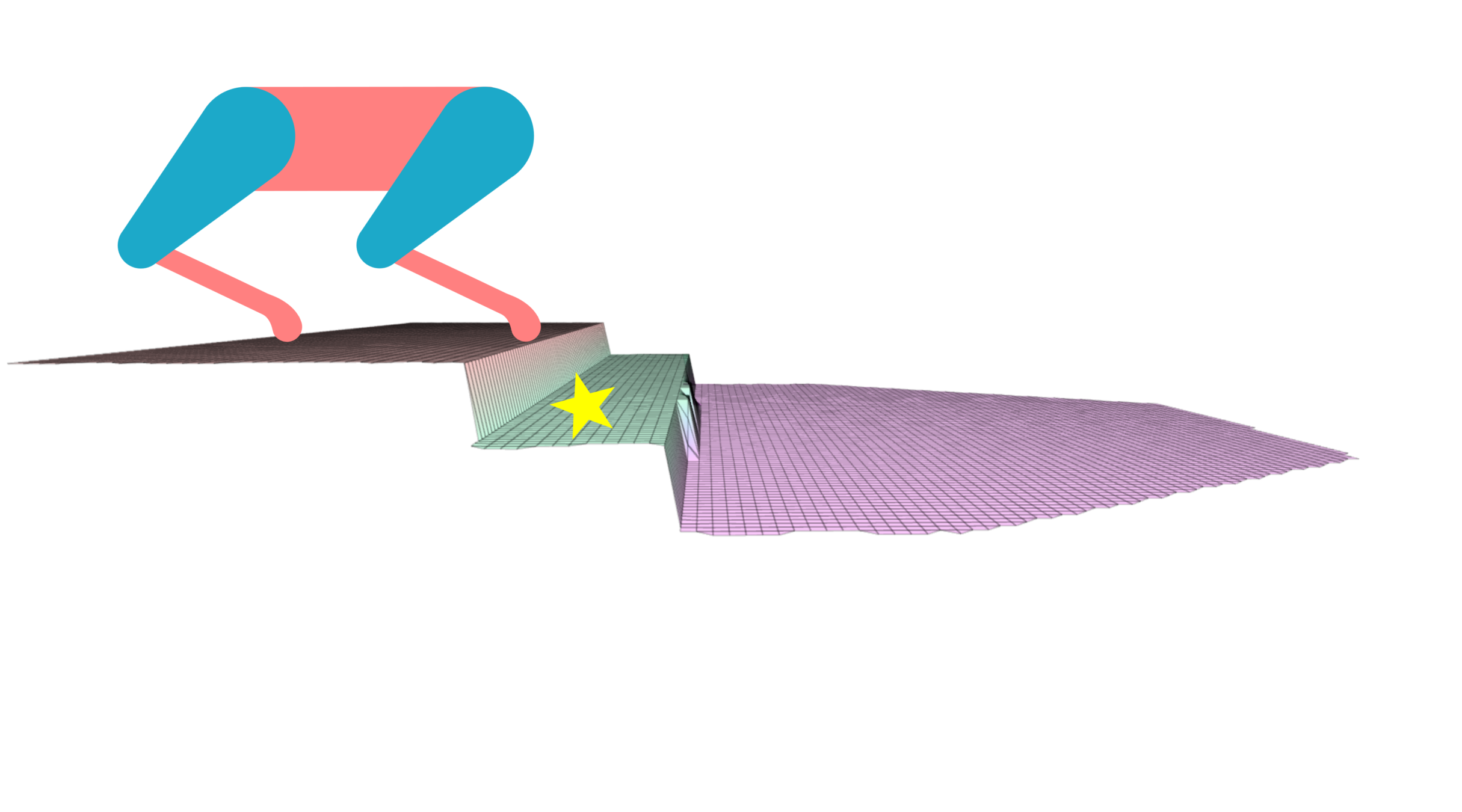

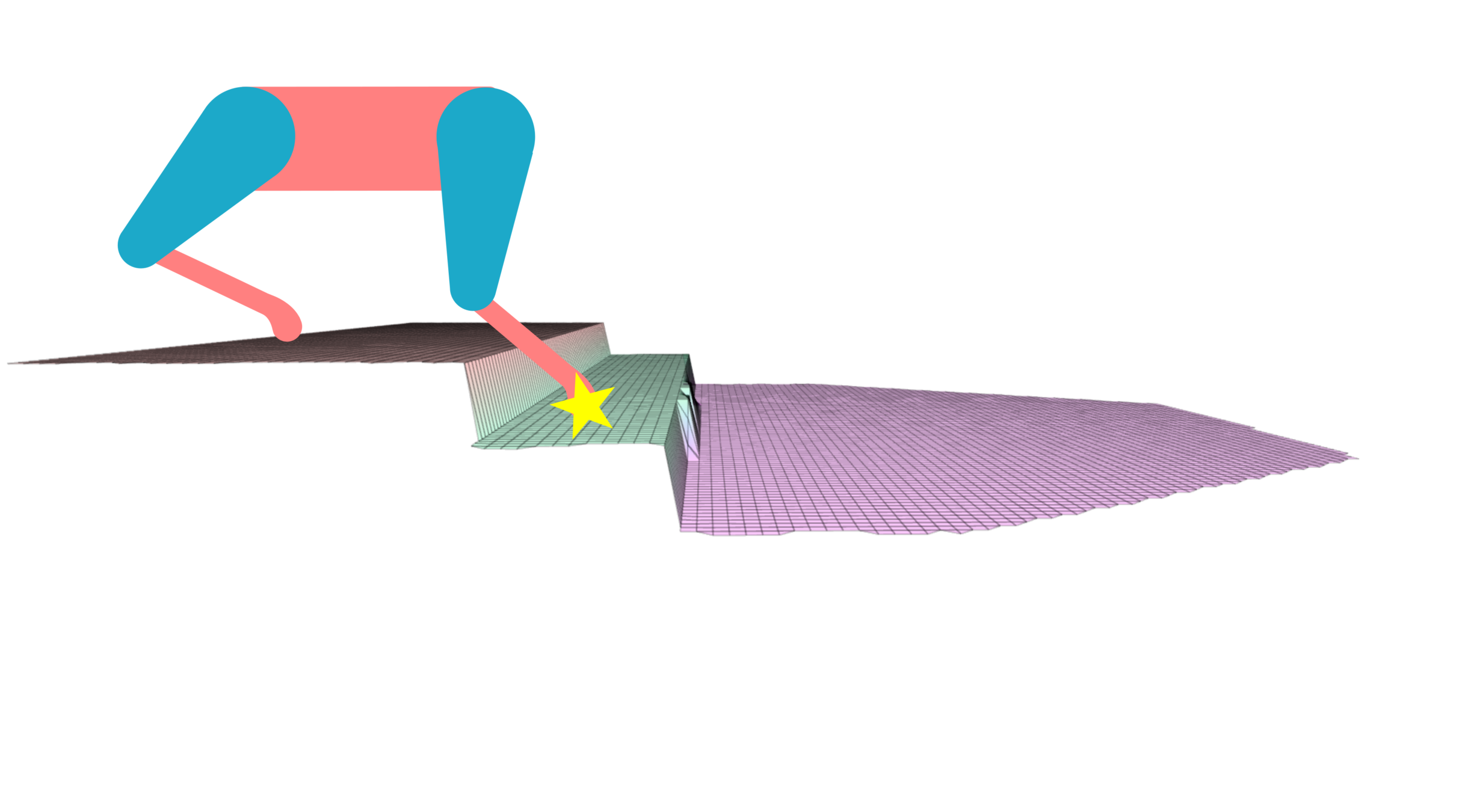

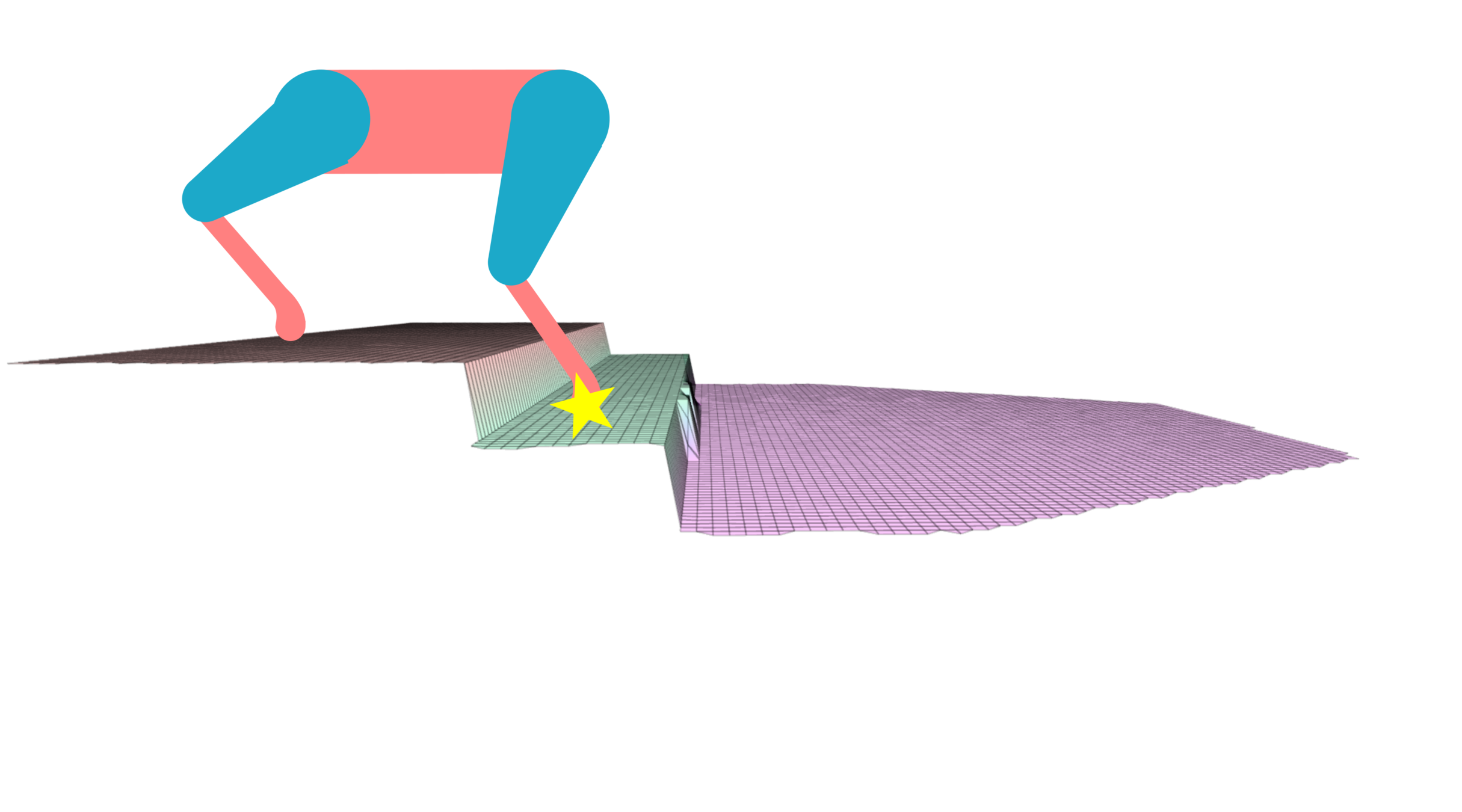

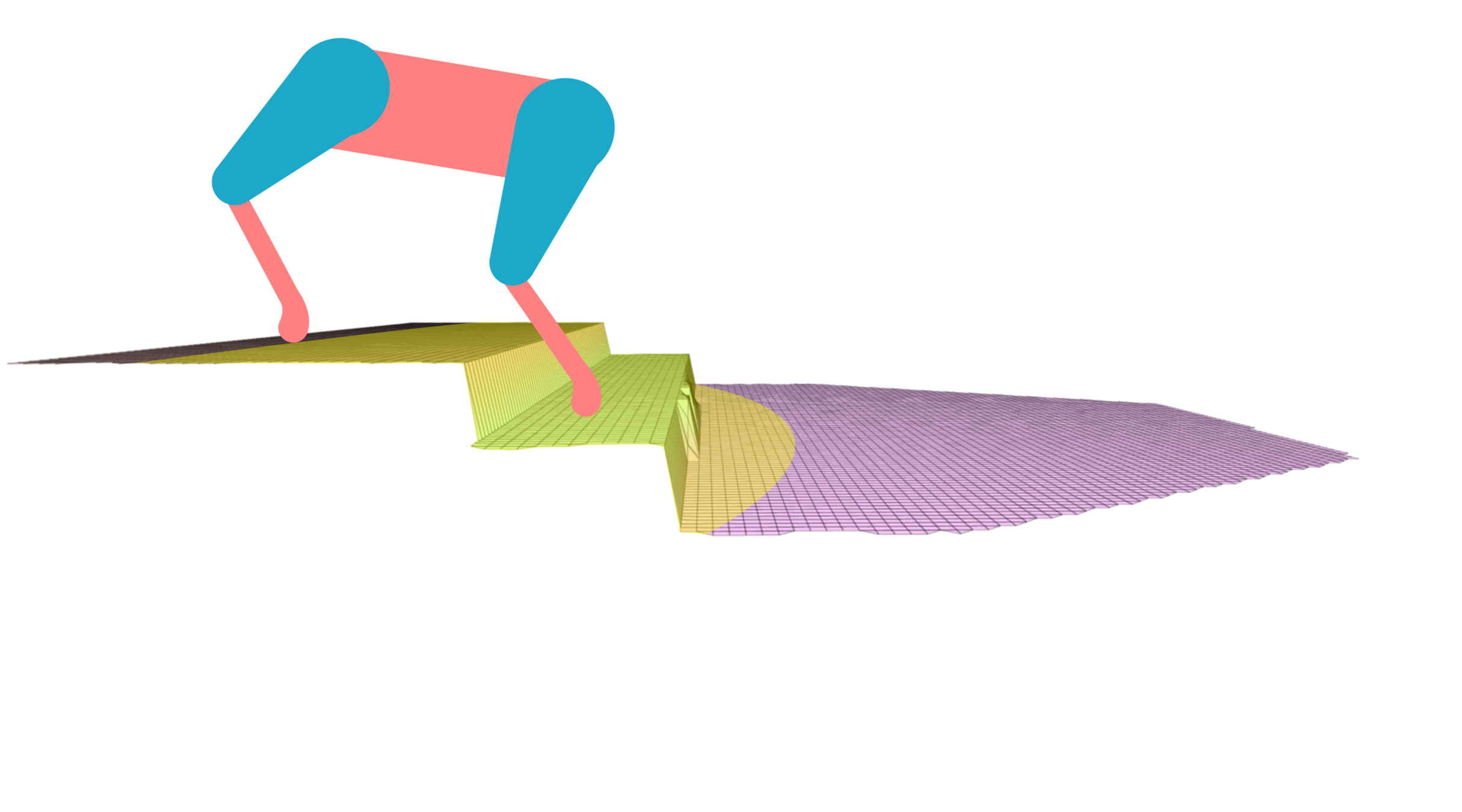

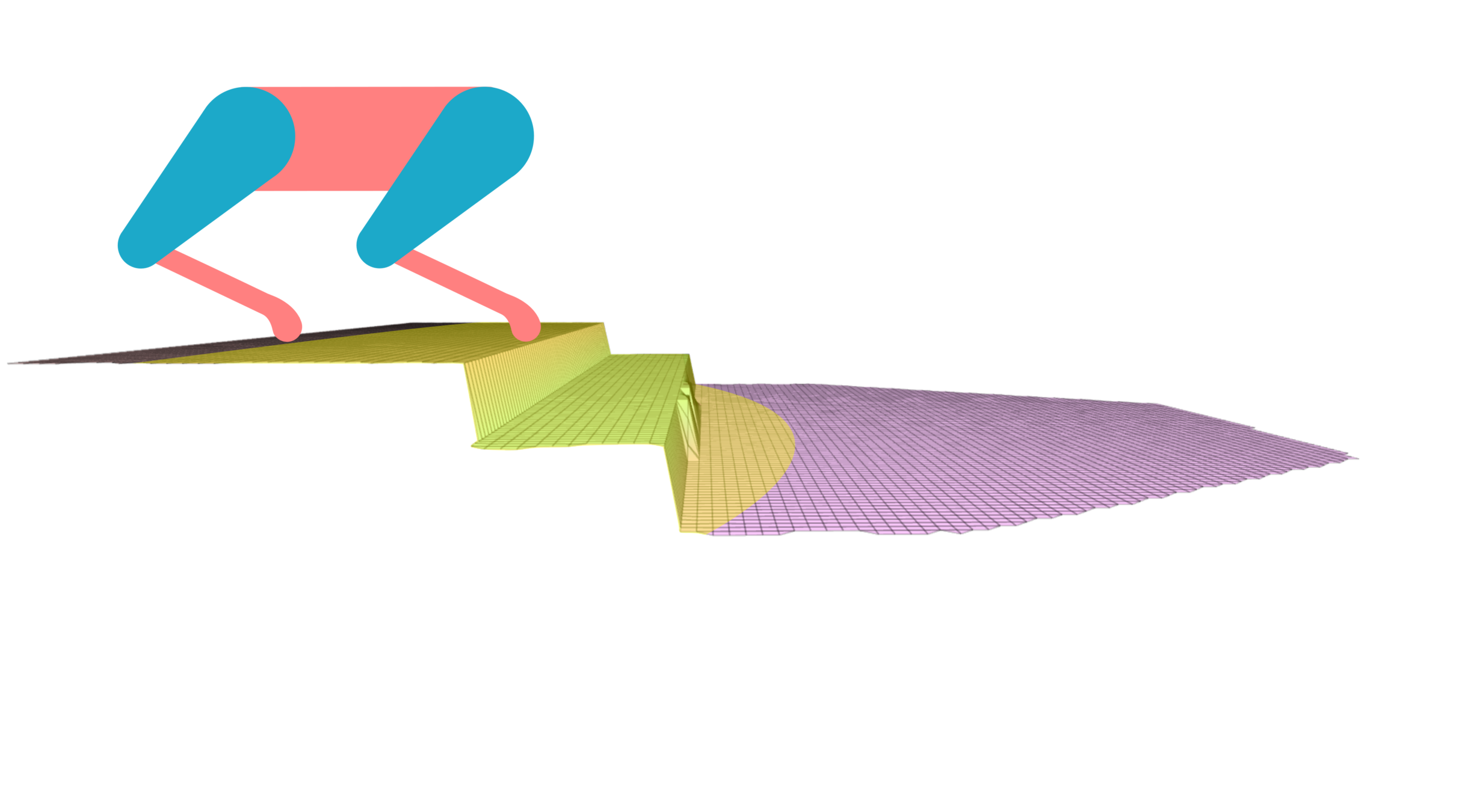

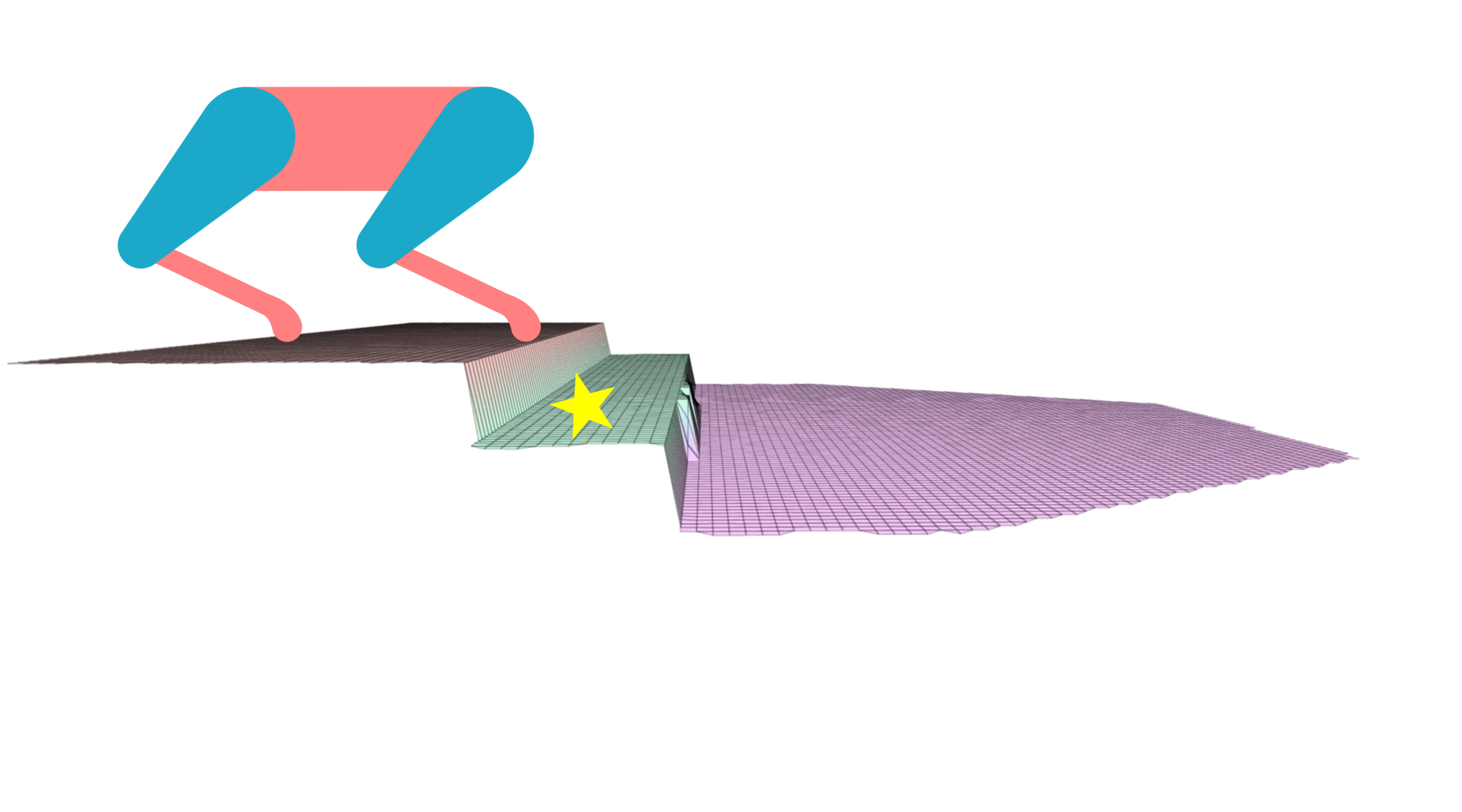

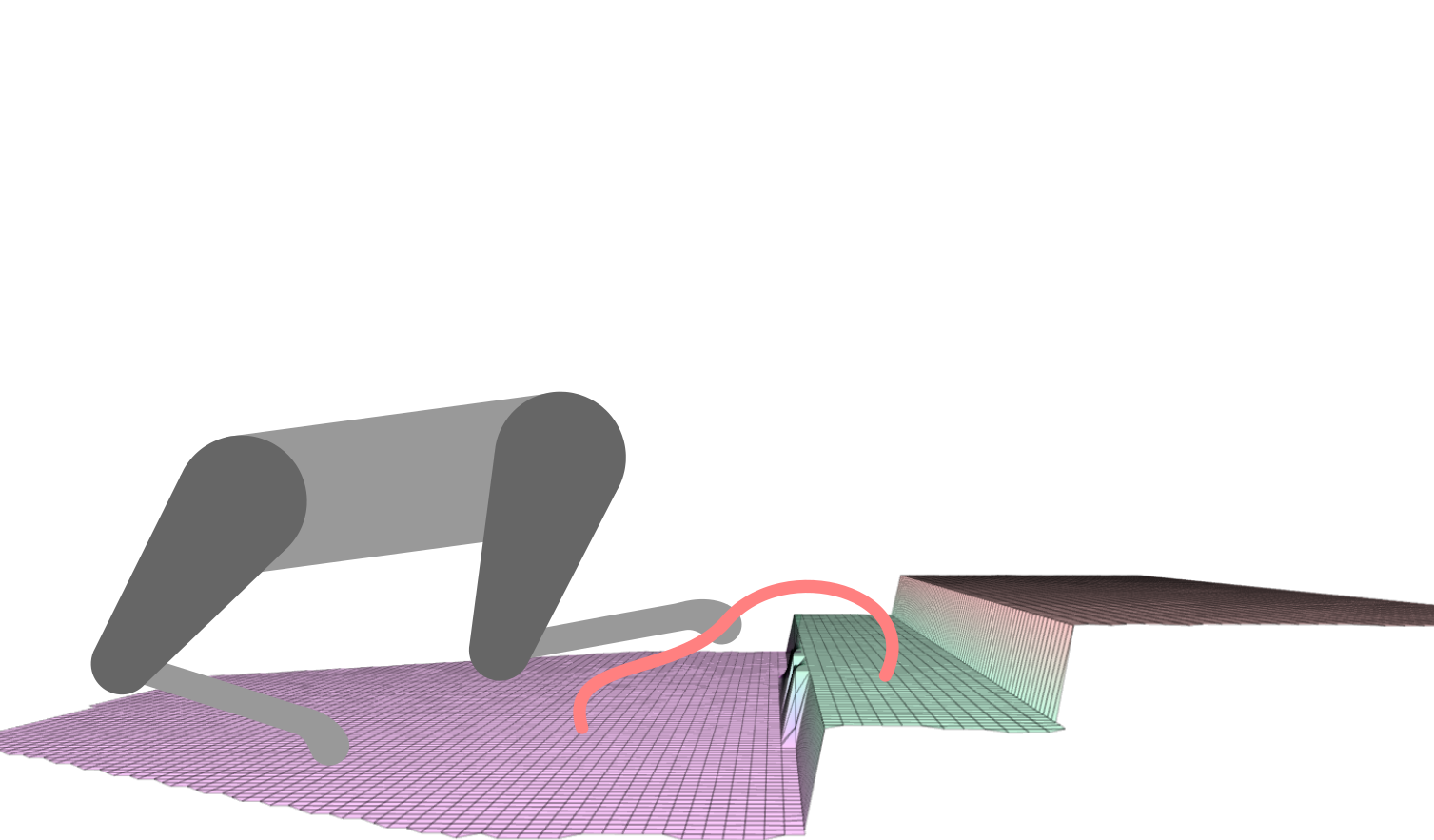

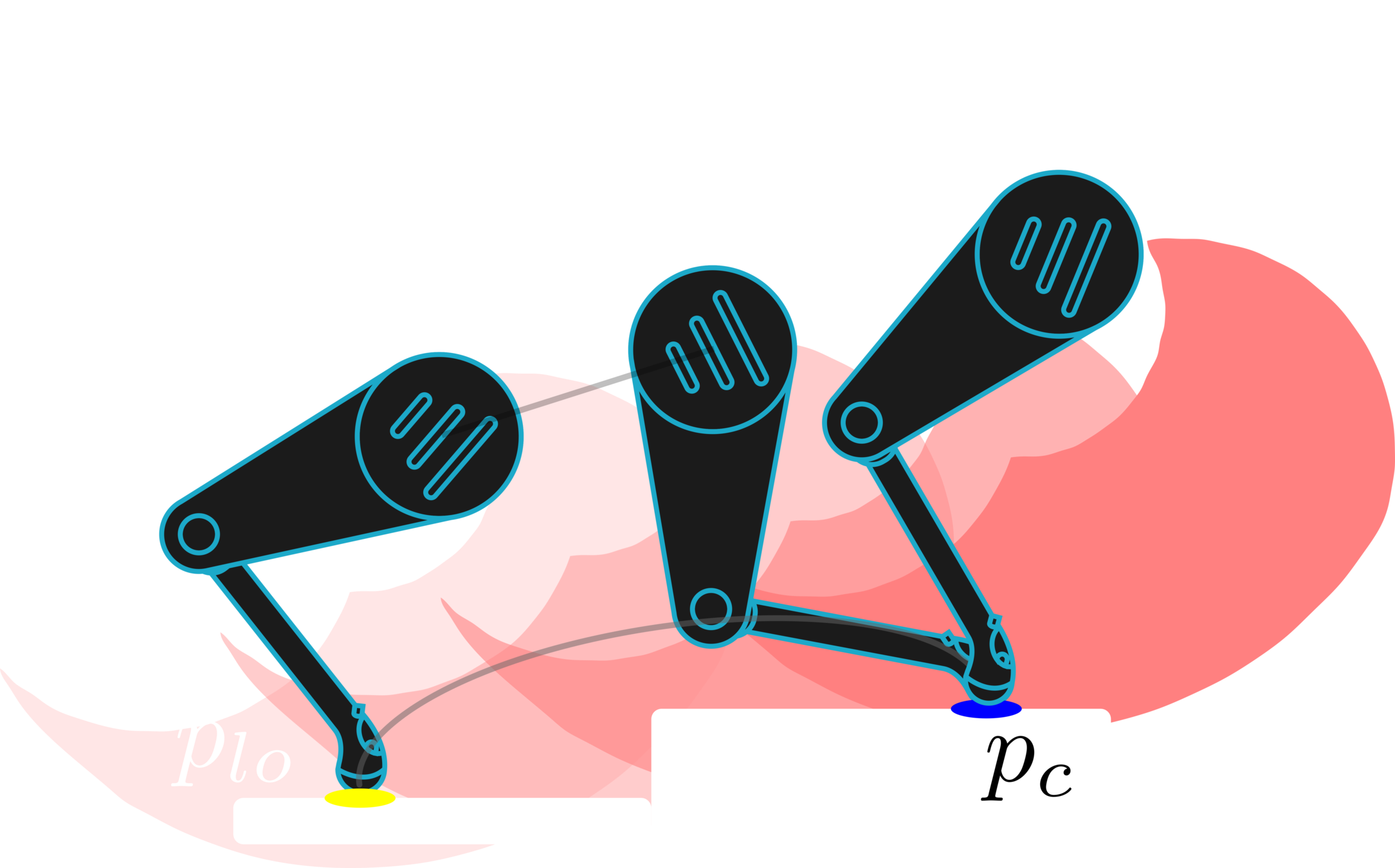

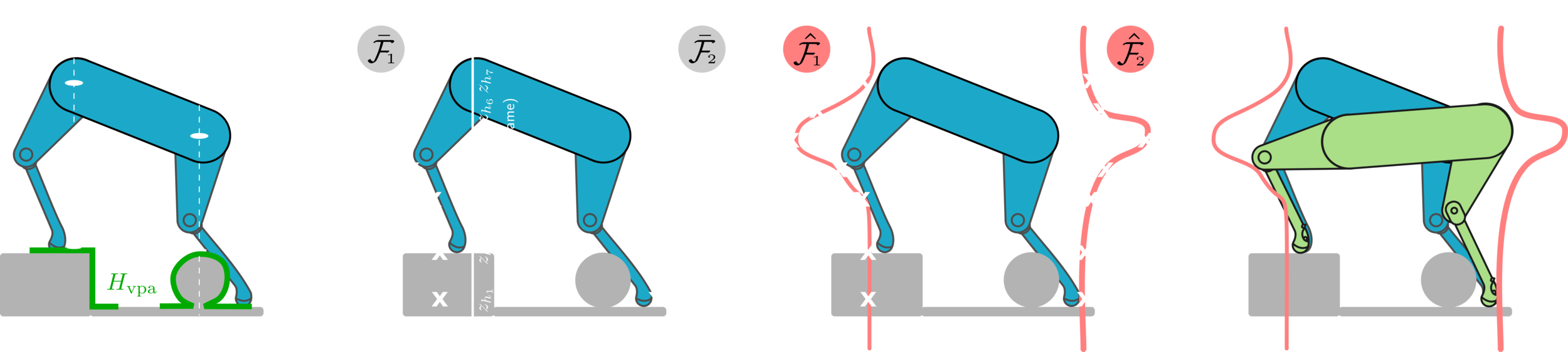

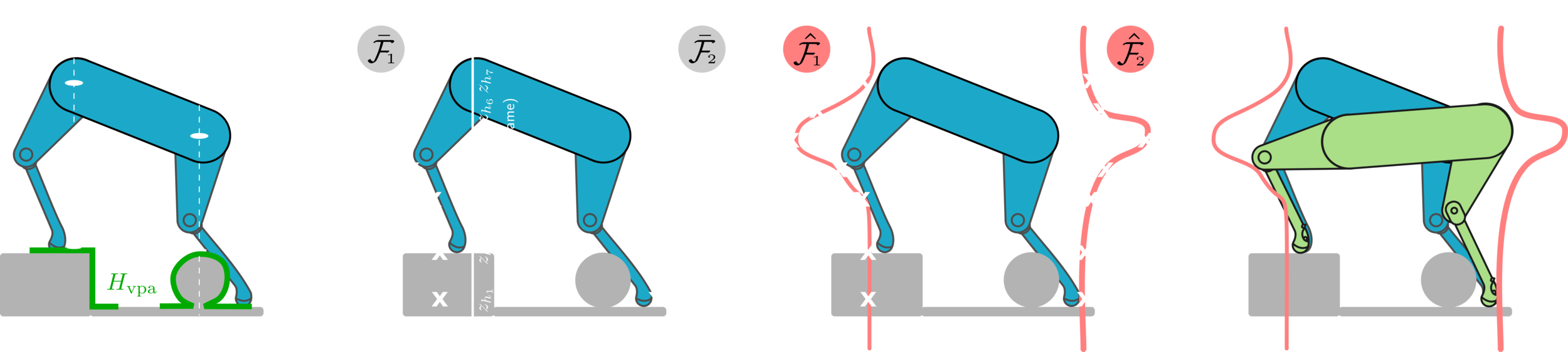

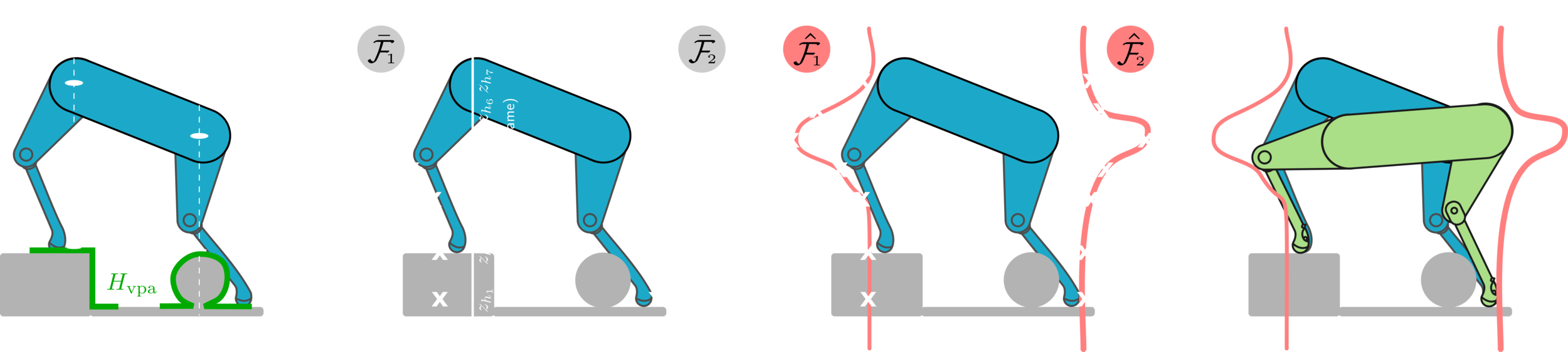

The Problem with Decoupled Planning

- Current decoupled planning strategies focus on finding one optimal solution based on given selected footholds.
  - First, the footholds are selected.
  - Then, the pose is optimized based on them.
- If the selected footholds are not reached (e.g., the robot gets disturbed), the robot may end up in a state with no reachable safe footholds.
- We propose a different paradigm.
  - Instead of finding body poses that are optimal w.r.t. given footholds, we find body poses that maximize the chances of the robot reaching safe footholds.
  - Put the robot in a state such that, if it gets disturbed, it remains around a set of solutions that are still safe & reachable.
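One way to read "maximize the chances of reaching safe footholds" is to prefer the pose that leaves the largest number of safe footholds around the nominal one. The sketch below uses that count as a proxy over a small set of candidate poses. The proxy, the discrete candidate set, and all names are assumptions for illustration rather than the method presented here.

```python
def count_safe(feasibility):
    """Number of safe cells in a boolean grid of candidate footholds."""
    return sum(1 for row in feasibility for cell in row if cell)


def select_pose(candidates):
    """Pick the pose whose grid contains the most safe footholds.

    candidates: list of (pose, feasibility_grid) pairs, where pose can be any
    label (here a hypothetical hip height) and the grid marks safe footholds.
    Ties are resolved in favour of the earliest candidate.
    """
    best_pose, _ = max(candidates, key=lambda item: count_safe(item[1]))
    return best_pose


if __name__ == "__main__":
    grids = [
        (0.45, [[True, False], [False, False]]),
        (0.50, [[True, True], [True, False]]),
        (0.55, [[False, True], [False, False]]),
    ]
    print(select_pose(grids))
```

With more safe options nearby, a disturbance that pushes the foot away from its target is more likely to land it on another safe foothold.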

How to Solve This Problem?

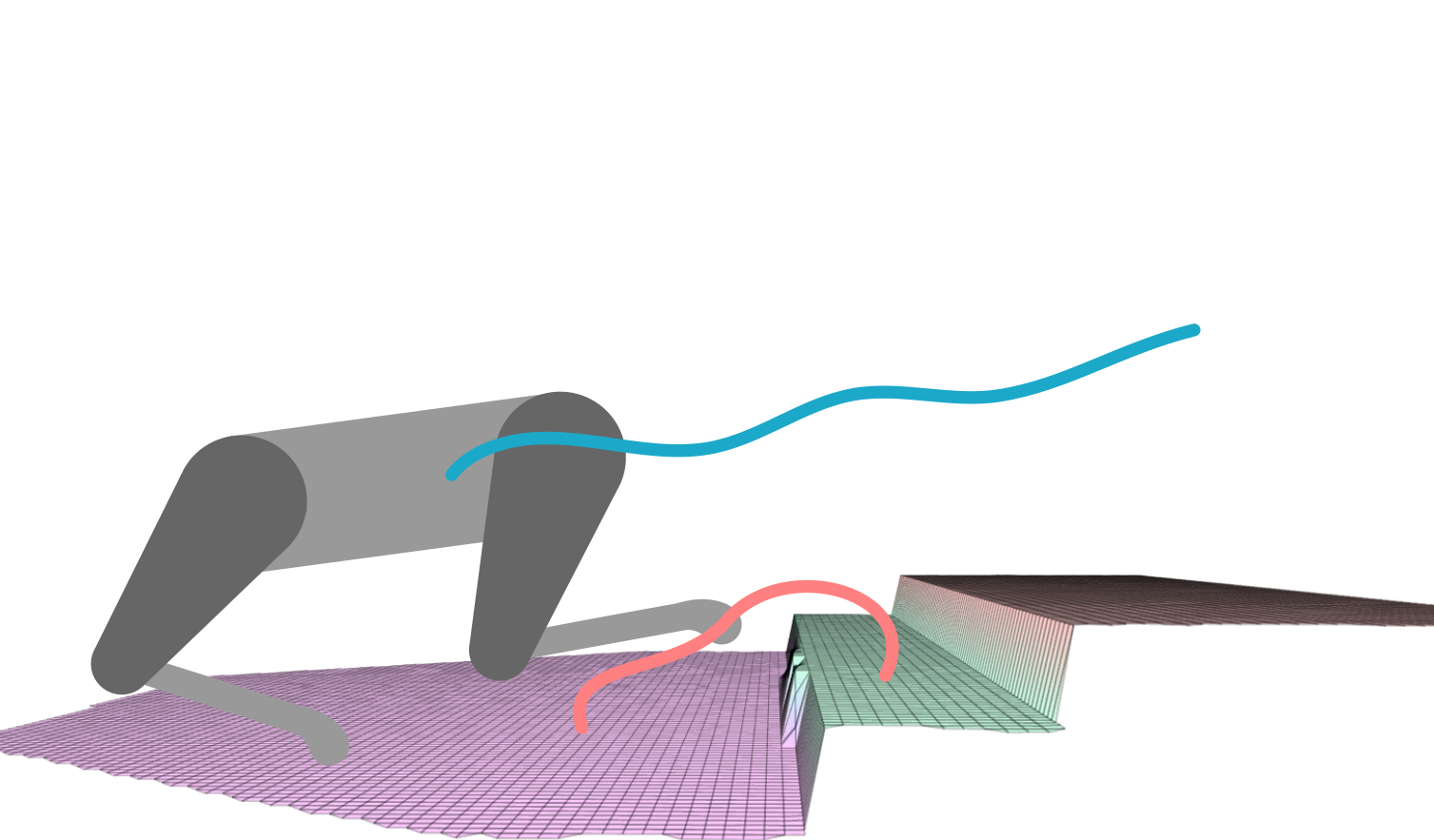

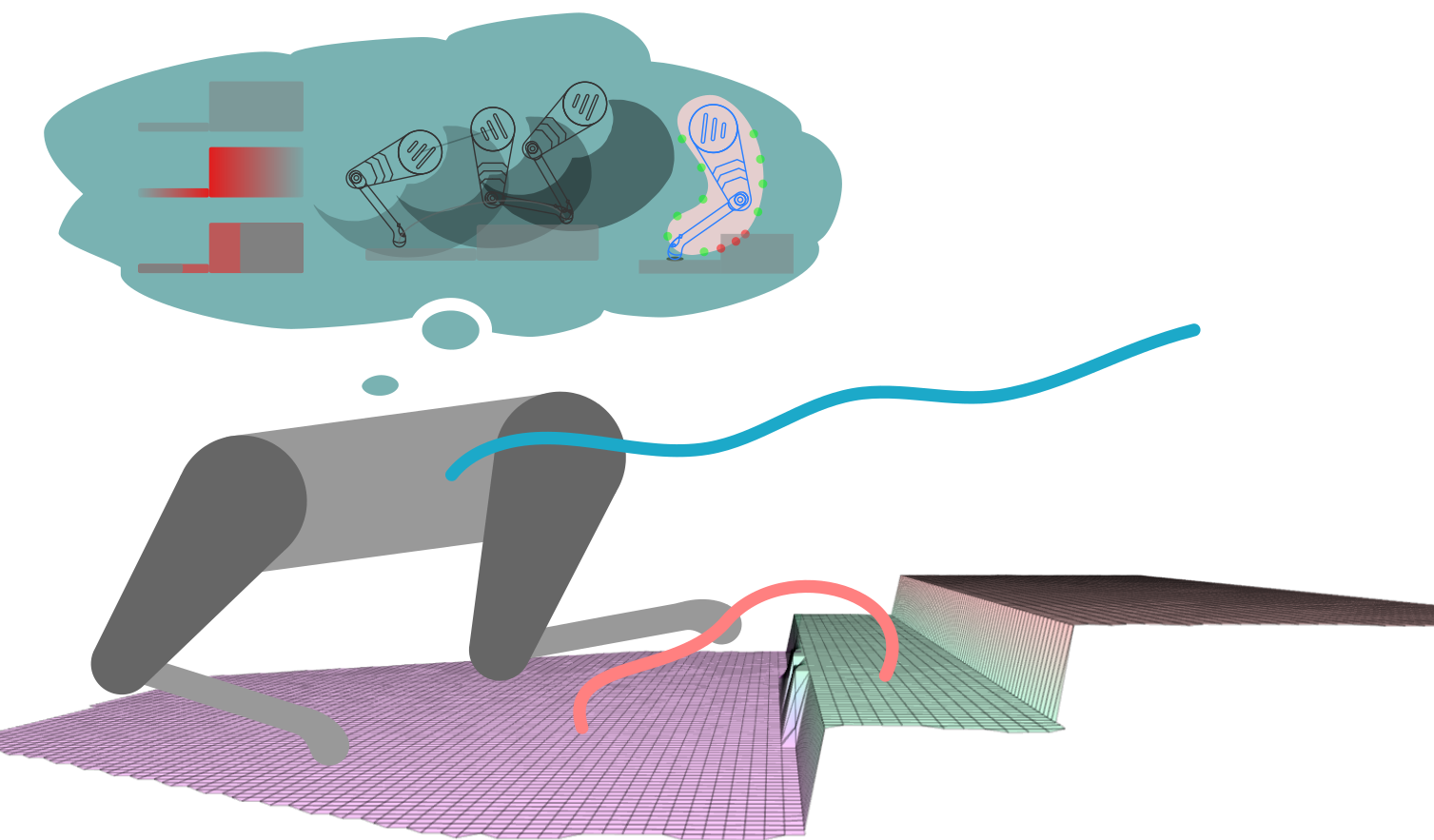

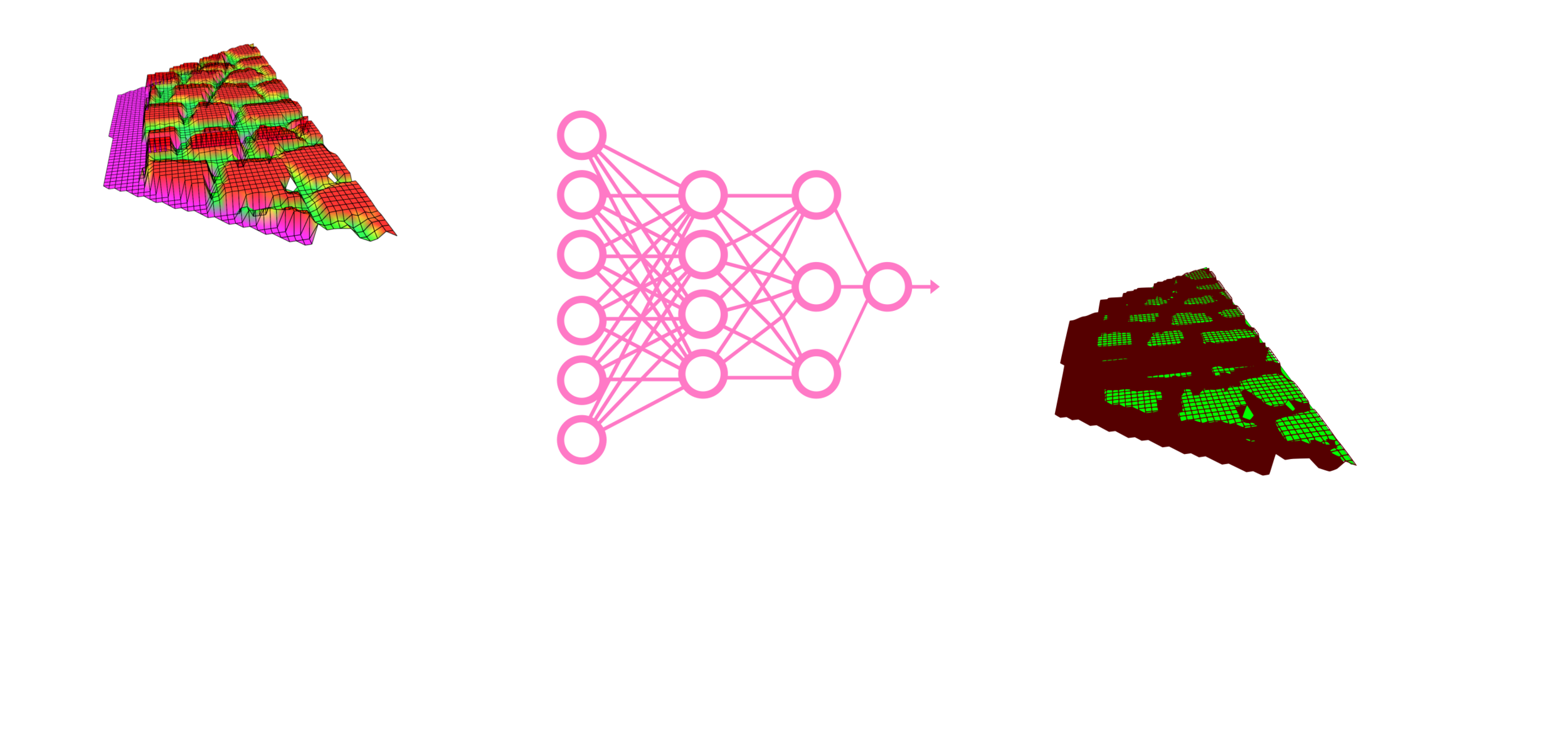

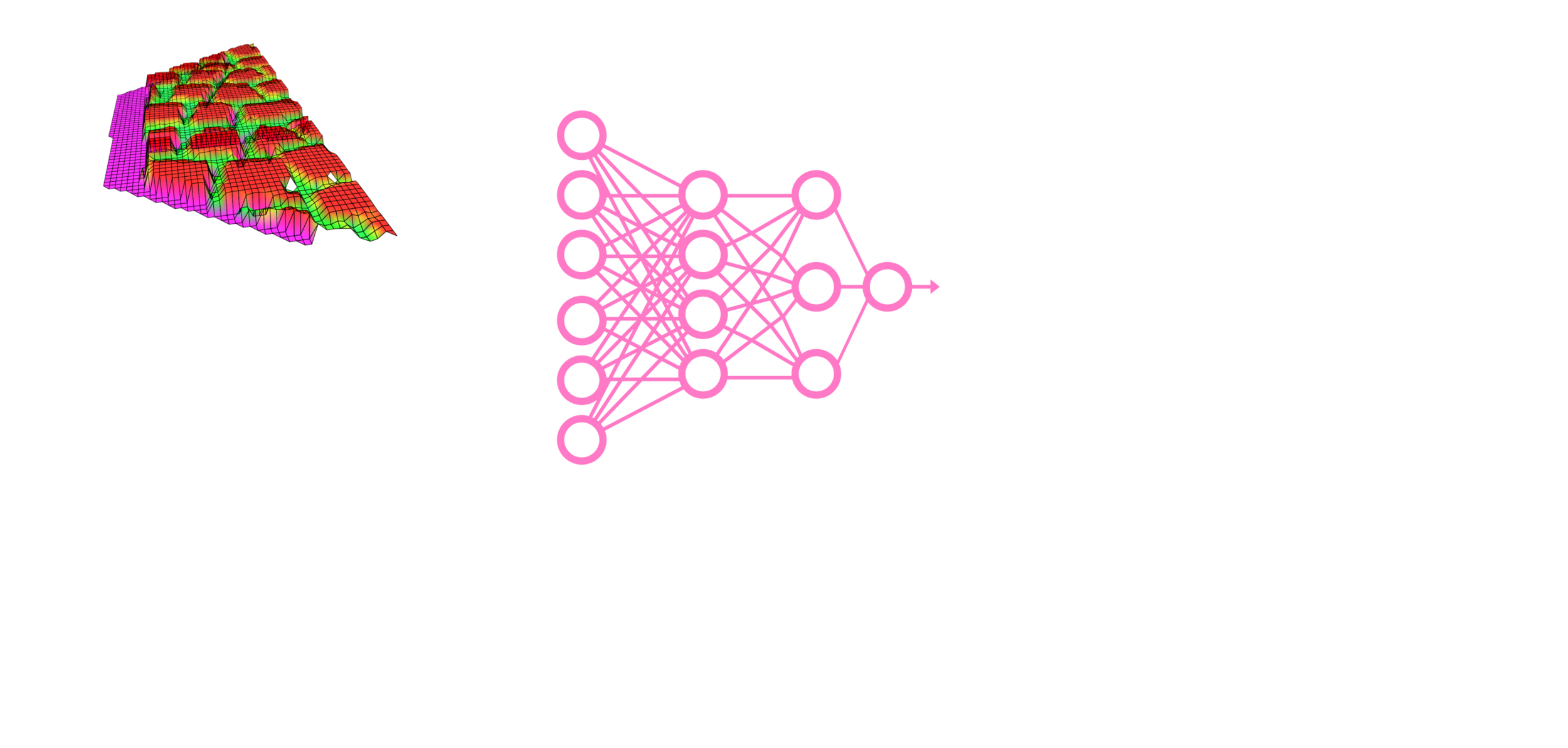

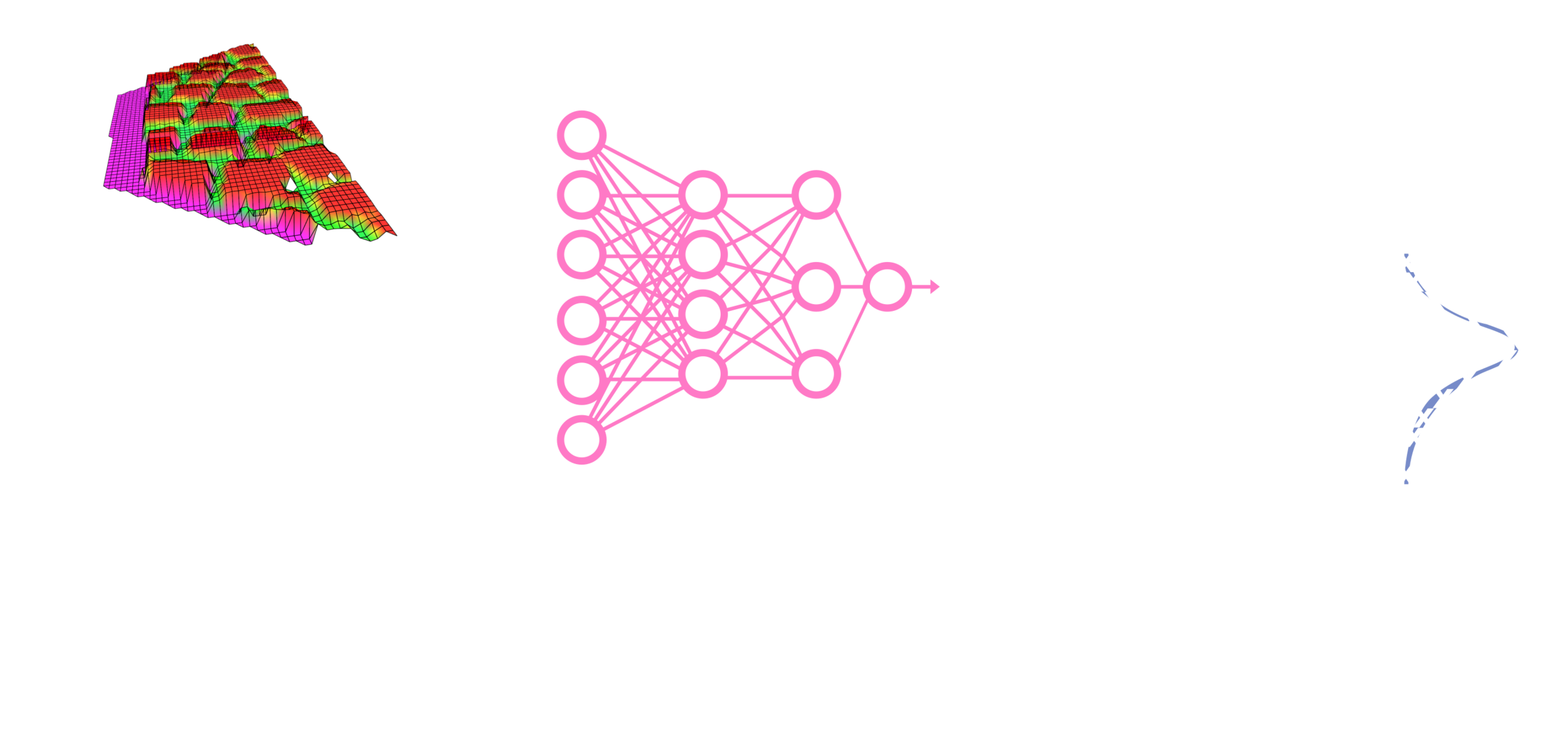

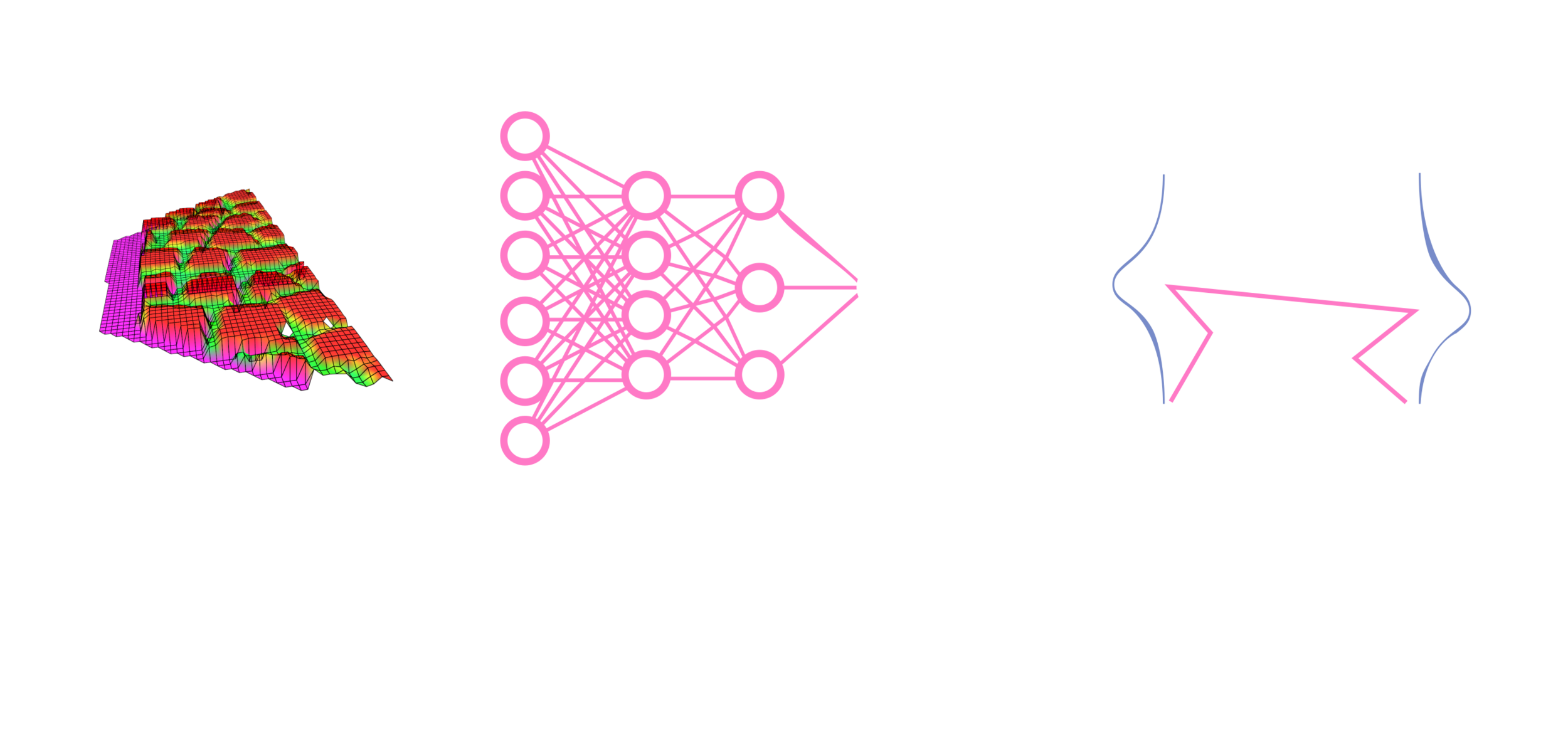

Vision-Based Terrain-Aware Locomotion (ViTAL)

- ViTAL is an online vision-based locomotion planning strategy.

- ViTAL selects footholds based on the robot's skills.

- ViTAL also finds the robot pose that maximizes the chances of success in reaching these footholds.

- The notion of safety & success emerges from skills that characterize the robot's capabilities.

Vision-Based Foothold Adaptation (VFA)

Vision-Based Pose Adaptation (VPA)

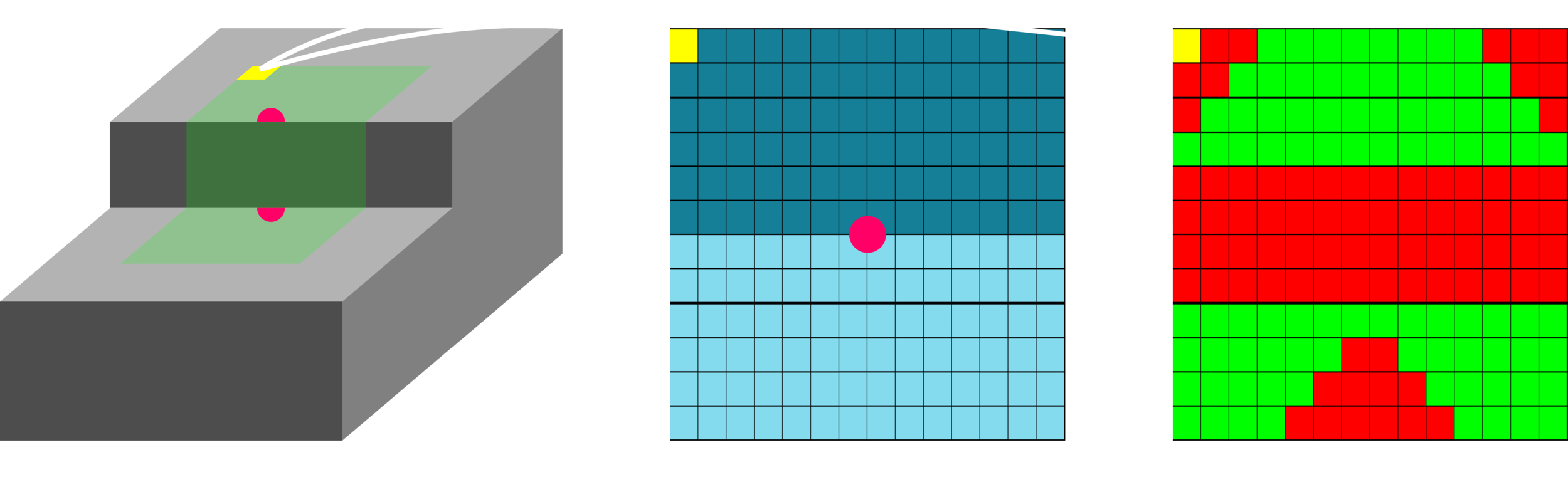

Foothold Evaluation Criteria (FEC)

What is the robot capable of doing?

- Avoid edges, corners, gaps, etc.

- Remain within the workspace of the legs.

- Avoid colliding with the terrain.

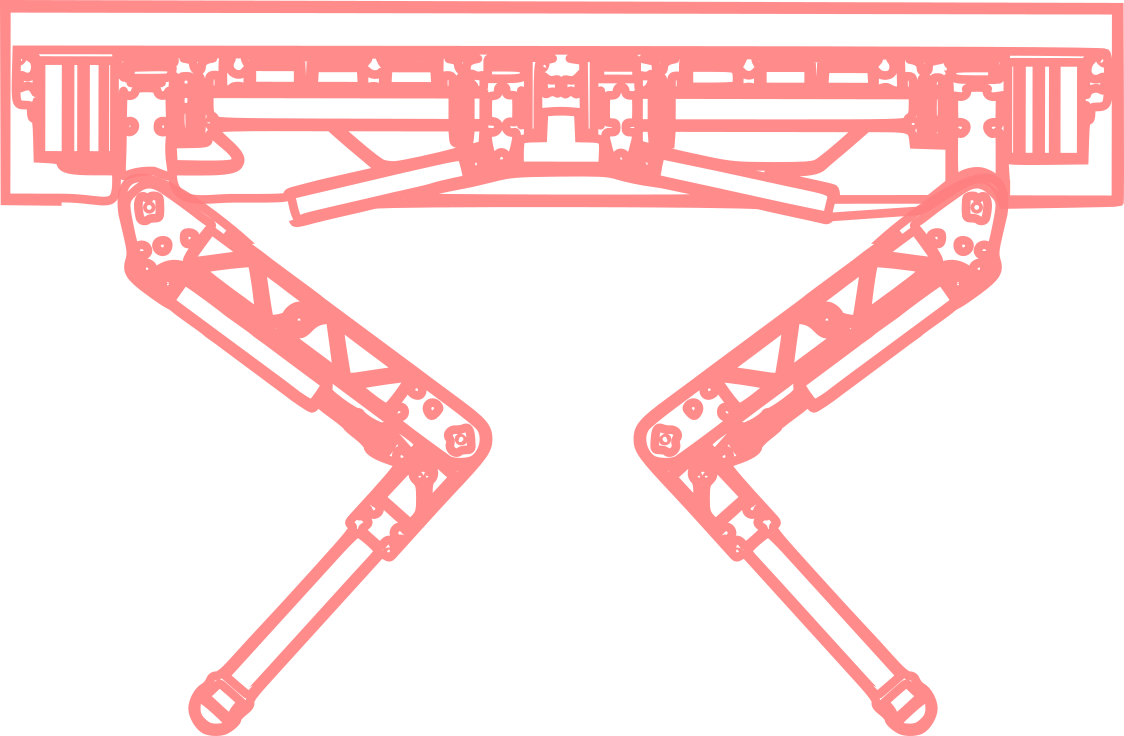

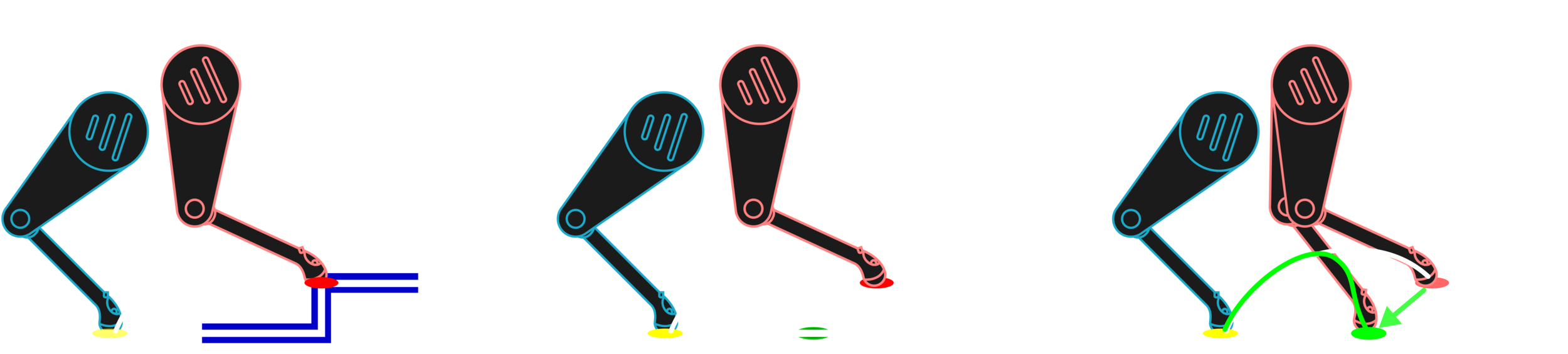

Foothold Evaluation Criteria (FEC)

The boolean matrix characterizes which pixel (location) in the heightmap is feasible (safe) or not based on the robot's capabilities (the criteria).
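Given such a boolean matrix, a foothold still has to be picked from it. The sketch below picks the feasible cell closest to the nominal foothold. That selection rule, and the function name, are assumptions for illustration; the slide only states what the matrix encodes.

```python
def nearest_safe_foothold(feasible, nominal):
    """Return the (row, col) of the feasible cell closest to the nominal cell.

    feasible : boolean matrix, True where the heightmap pixel is a safe foothold
    nominal  : (row, col) of the foothold the robot would take on flat ground
    Returns None when no cell is feasible.
    """
    best, best_d2 = None, None
    for r, row in enumerate(feasible):
        for c, ok in enumerate(row):
            if not ok:
                continue
            # Squared pixel distance is enough for ranking candidates.
            d2 = (r - nominal[0]) ** 2 + (c - nominal[1]) ** 2
            if best_d2 is None or d2 < best_d2:
                best, best_d2 = (r, c), d2
    return best
```

If the nominal cell is itself feasible, it is returned unchanged, so the foothold is only adapted when it needs to be.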

Foothold Evaluation Criteria (FEC)

- Foot Trajectory Collision (FTC): rejects footholds that cause foot trajectory collision.

- Kinematic Feasibility (KF): rejects footholds that are outside the workspace during the entire gait phase.

- Leg Collision (LC): rejects footholds that cause leg collision with the terrain.

- Terrain Roughness (TR): rejects footholds that are near holes, spikes, edges, and corners.
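The sketch below shows how criteria like these could each produce a boolean matrix and then be combined into one. It assumes the combination is an element-wise AND, and it uses a crude height-difference test as a stand-in for terrain roughness. Both choices, and the threshold name `max_step`, are illustrative assumptions.

```python
def terrain_roughness_ok(heightmap, max_step):
    """Accept a cell when it differs from each 4-neighbour by at most max_step."""
    rows, cols = len(heightmap), len(heightmap[0])
    ok = [[True] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                inside = 0 <= nr < rows and 0 <= nc < cols
                if inside and abs(heightmap[r][c] - heightmap[nr][nc]) > max_step:
                    ok[r][c] = False  # too close to an edge, hole, or spike
    return ok


def combine_criteria(*criteria):
    """Element-wise AND: a foothold is safe only if every criterion accepts it."""
    rows, cols = len(criteria[0]), len(criteria[0][0])
    return [[all(m[r][c] for m in criteria) for c in range(cols)] for r in range(rows)]


if __name__ == "__main__":
    heights = [[0.0, 0.0, 0.3], [0.0, 0.0, 0.3]]
    reachable = [[True, True, True], [False, True, True]]  # hypothetical KF result
    print(combine_criteria(terrain_roughness_ok(heights, 0.1), reachable))
```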

Vision-Based Foothold Adaptation (VFA)

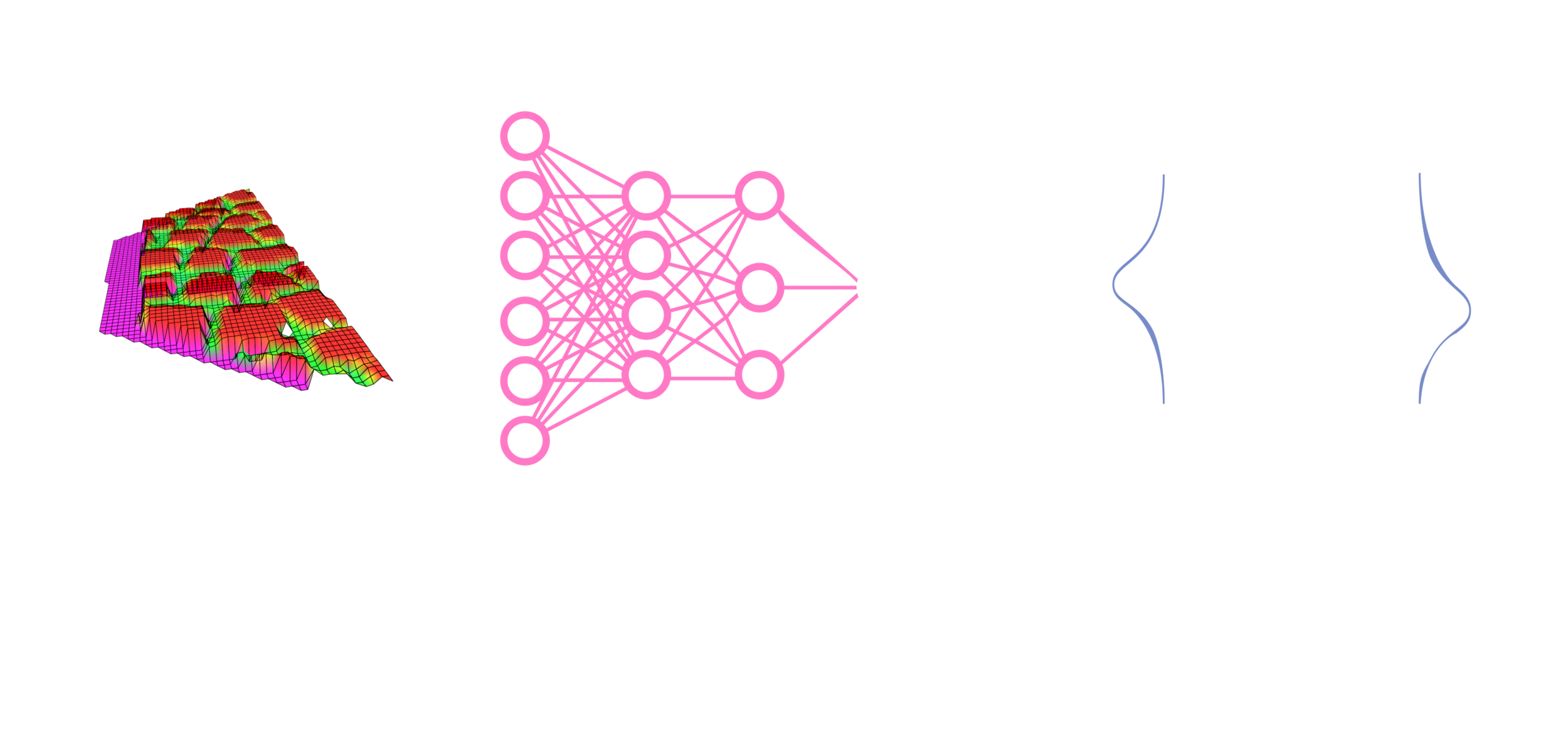

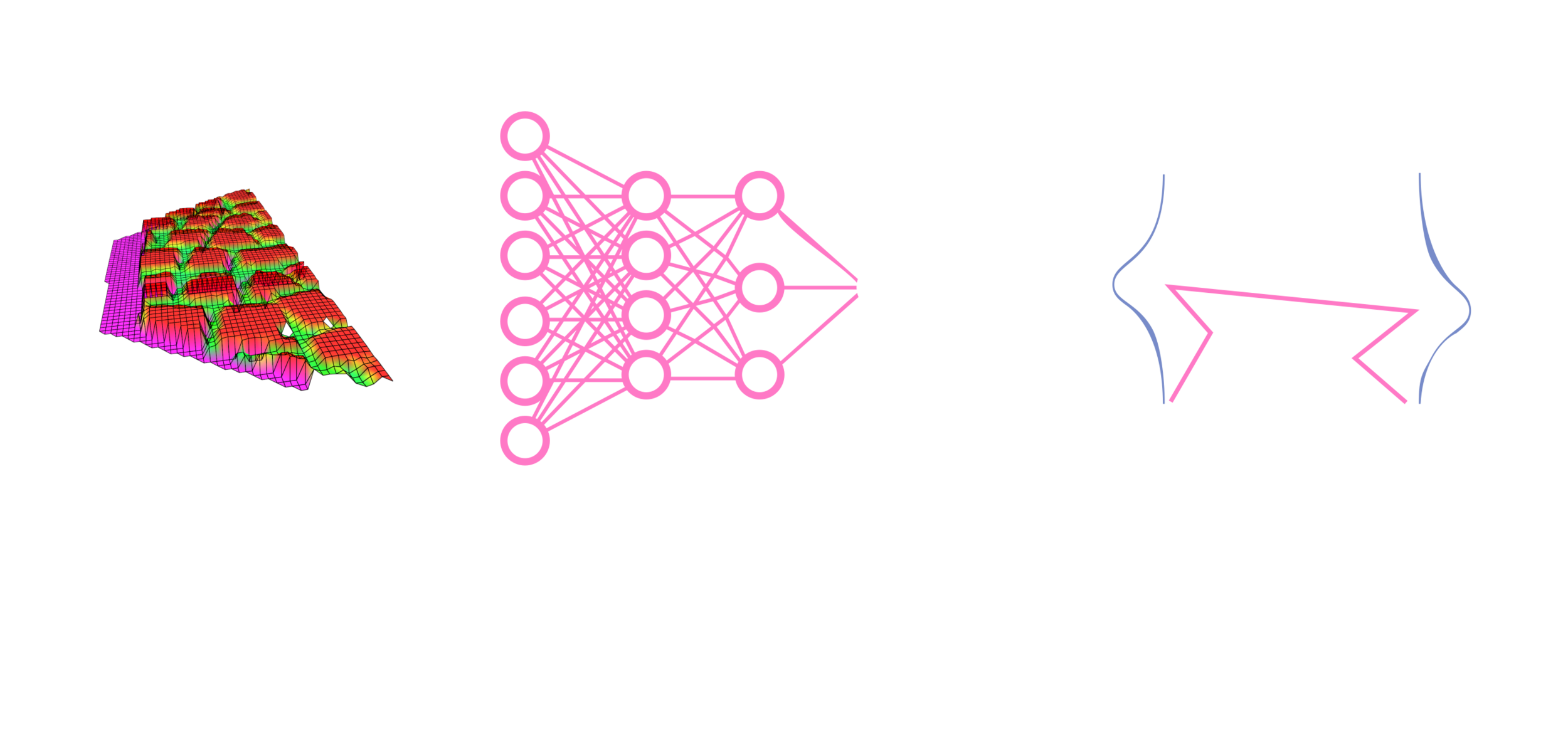

From the VFA to the VPA

Vision-Based Pose Adaptation (VPA)

front hips rising up

front hips lowering down

hind hips rising up

hind hips lowering down

Published In:

S. Fahmi, V. Barasuol, D. Esteban, O. Villarreal, and C. Semini, “ViTAL: Vision-Based Terrain-Aware Locomotion for Legged Robots,” IEEE Trans. Robot. (T-RO), Nov. 2022, doi: 10.1109/TRO.2022.3222958.

Model-Based vs. Reinforcement Learning

- Model-Based Control
  - Interpretable
  - Based on physics
  - Easier to tune & design
- Reinforcement Learning
  - Model-free
  - Harder to interpret, design, & tune
  - Less sensitive to modeling & state estimation inaccuracies
- Can we combine the best of both worlds?
  - Train an RL policy that tracks (imitates) reference trajectories from model-based optimal controllers?

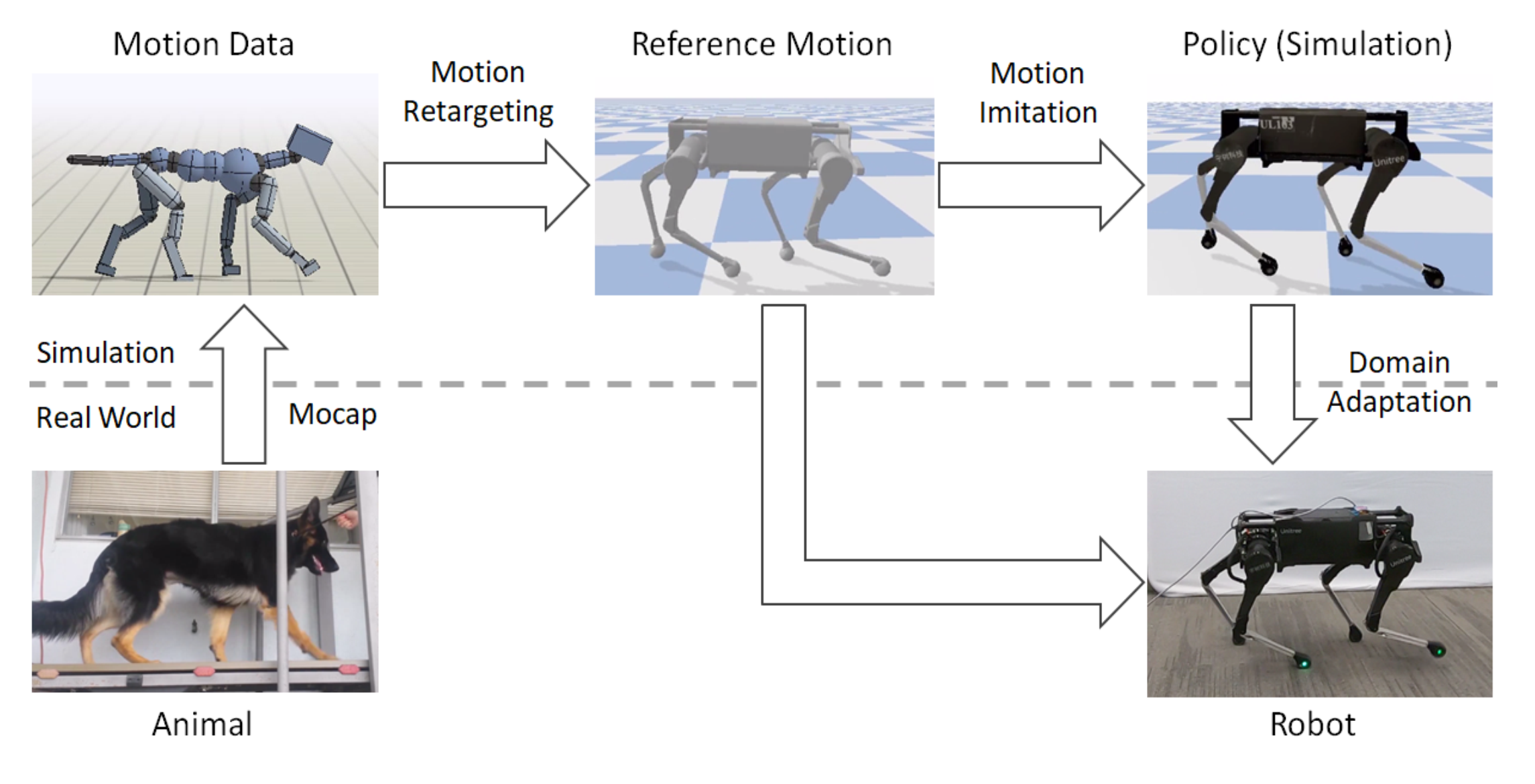

RL for Legged Robots

- Heuristic Rewards [1-3]
  - Hand-crafted
  - Requires tedious tuning
- Motion Imitation [4,5]
  - Uses MoCap (or video clips) ⇒ hard to get
  - Motion retargeting ⇒ kinematically inaccurate

[1] N. Rudin et al., Learning to walk in minutes using massively parallel deep reinforcement learning, CoRL, 2021.

[2] G. Ji et al., Concurrent training of a control policy and a state estimator for dynamic and robust legged locomotion, RA-L, 2022.

[3] J. Siekmann et al., Sim-to-real learning of all common bipedal gaits via periodic reward composition, ICRA, 2021.

[4] X. B. Peng et al., Learning agile robotic locomotion skills by imitating animals, RSS, 2020.

[5] X. B. Peng et al., DeepMimic: Example-guided deep reinforcement learning of physics-based character skills, ACM Trans. Graphics, 2018.

-

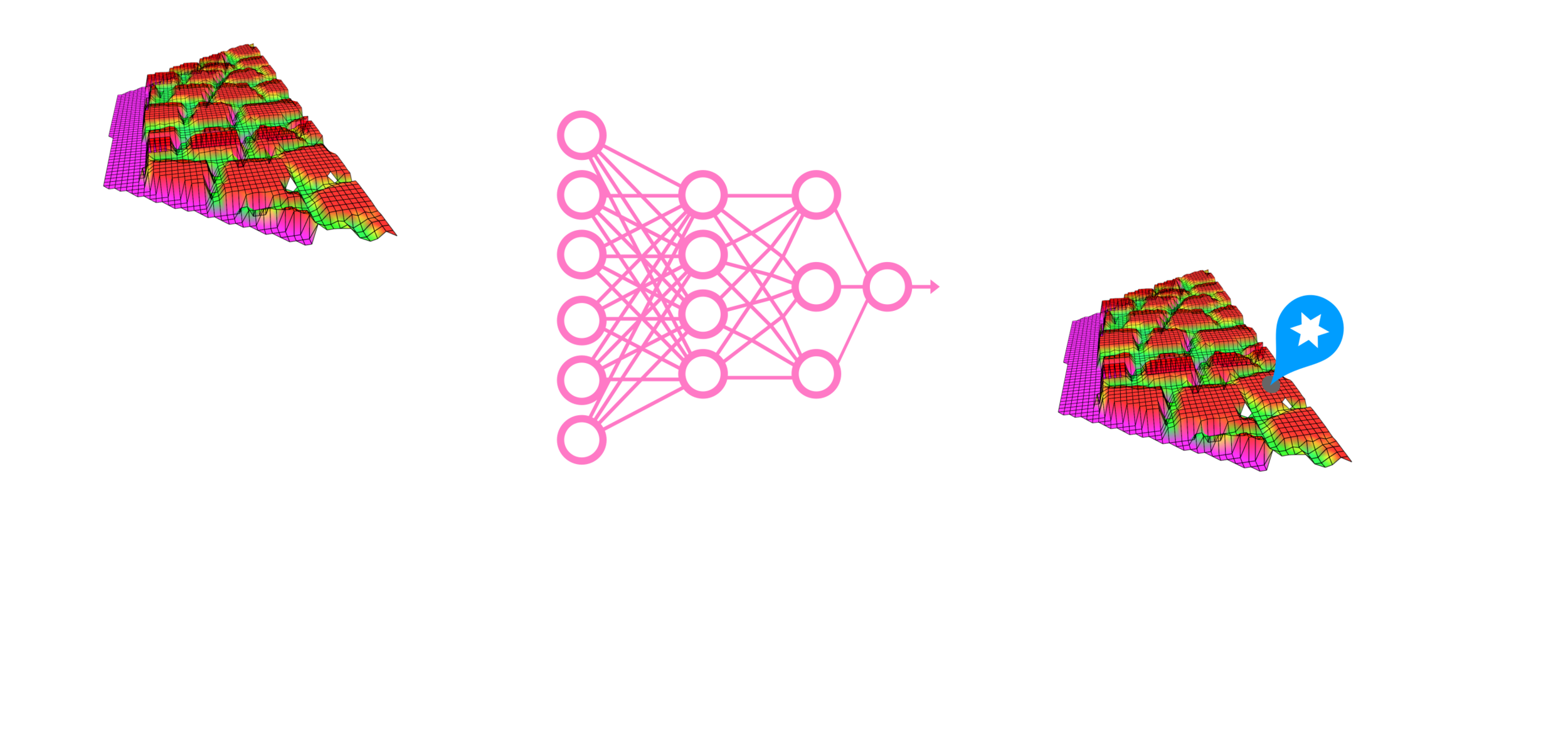

Proposed Approach

MIMOC: Motion Imitation from Model-based Optimal Control

- MIMOC is a locomotion controller that learns from model-based optimal controllers.
- This is an RL approach via motion imitation.
- Instead of MoCap, we rely on reference trajectories from model-based optimal controllers.

MIMOC's Contributions

- Unlike RL via motion imitation (MoCap), our references
  - Consider the robot's whole-body dynamics ⇒ dynamically feasible
  - Do not need motion retargeting (robot-specific) ⇒ kinematically feasible
  - Include torques and GRFs which are crucial for balance
- As a result, compared to RL via motion imitation
  - The sim-to-real gap is significantly reduced
  - No fine-tuning stage and no tedious reward tuning were needed
- Unlike model-based controllers
  - There were no issues with running the policy in real-time
  - The policy is less sensitive to state estimation noise and model inaccuracies

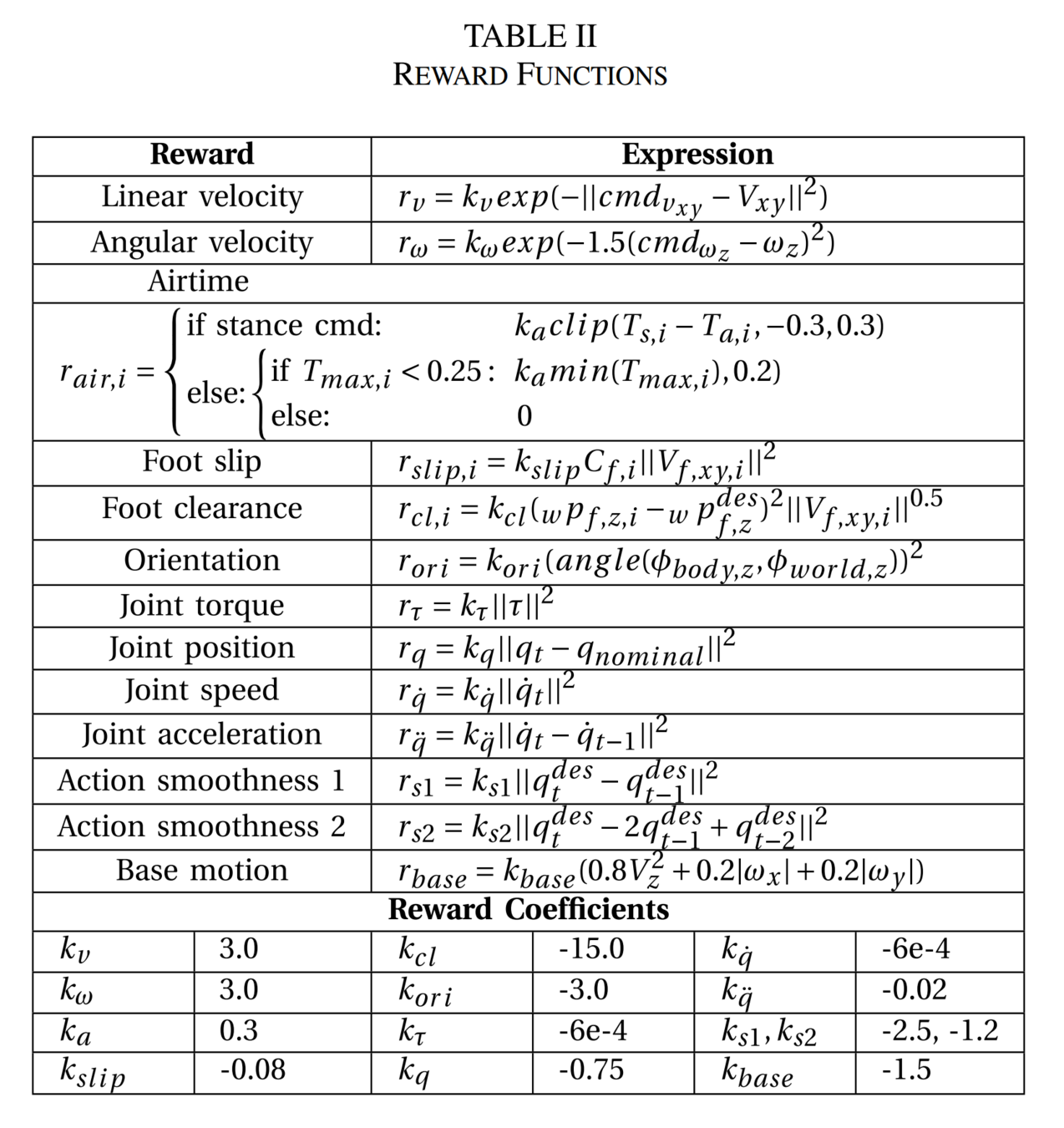

MIMOC's RL Framework

- Inputs: commands, phase (clock), and (some) robot observations
- Actions: joint set-points going through a PD controller at 100 Hz
- The references are only used to calculate the rewards
- The rewards are only tracking rewards, without hand-tuning
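The two mechanisms named above can be sketched in a few lines: a PD law that turns the policy's joint set-points into torques, and a tracking reward that compares the robot's state with the reference. The exponential kernel is a common choice in motion-imitation work and is assumed here. The slides do not give the reward's form, and the gains and `sigma` are hypothetical.

```python
import math


def pd_torques(q_des, q, qd, kp, kd):
    """Joint torques from set-points: tau = kp * (q_des - q) - kd * qd."""
    return [kp * (qs - qi) - kd * qdi for qs, qi, qdi in zip(q_des, q, qd)]


def tracking_reward(value, reference, sigma):
    """Assumed exponential tracking reward in (0, 1].

    Equals 1 when value matches the reference and decays with the squared error.
    sigma sets how quickly the reward drops off.
    """
    err2 = sum((v - r) ** 2 for v, r in zip(value, reference))
    return math.exp(-err2 / sigma ** 2)


if __name__ == "__main__":
    # One hypothetical joint: policy asks for 0.5 rad, joint is at 0.3 rad.
    print(pd_torques([0.5], [0.3], [1.0], kp=20.0, kd=0.5))
    print(tracking_reward([0.3], [0.5], sigma=0.25))
```

Because the reference only enters through the reward, the trained policy does not need the model-based controller at run time.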

MIMOC's RL Framework

- We used an MPC + WBC for the Mini-Cheetah and the MIT Humanoid.
- We used Isaac Gym with the Legged Gym implementation.
- (Did not talk about the policy training, rewards, observations, etc.)

D. Kim et al., Highly dynamic quadruped locomotion via whole-body impulse control and model predictive control, arXiv, 2019.

N. Rudin et al., Learning to walk in minutes using massively parallel deep reinforcement learning, CoRL, 2021.

Published In:

A.J. Miller*, S. Fahmi*, M. Chignoli, and S. Kim, "Reinforcement Learning for Legged Robots: Motion Imitation from Model-Based Optimal Control," under review, in IEEE Int. Conf. Robot. Automat. (ICRA), 2023.

*equal contribution

Final Thoughts

- Why the AI Institute?
- My expectations
- Why am I a good fit?

Let's Talk About

- WBC vs. MPC
- Hierarchical WBC (priority-based WBC)
- Models in Optimization-Based Control (SRBD, Floating-Base Models, Full Model, etc.)
- QP Solvers
- Contact Models
- WBC for Humanoids: does it differ from WBC for quadrupeds?
- RL-Based Control vs. Model-Based Control
- State Estimation for Legged Robots
- Vision-Based Planning
- Learning for Legged Robots
