Gaze behavior in online and in-person concert and film viewing

Shreshth Saxena, Maya B. Flannery, Joshua L. Schlichting, and Lauren K. Fink

Department of Psychology, Neuroscience, and Behaviour

McMaster University, Canada

Eye tracking in music and film

- Index spatiotemporal visual attention

- AND auditory attention

- Investigate multimodal interactions (audio, visual, text, etc.)

- Investigate links between behavioural and physiological responses

- Inform cinematic and aesthetic choices

1. Fink LK, Hurley BK, Geng JJ, Janata P. A linear oscillator model predicts dynamic temporal attention and pupillary entrainment to rhythmic patterns. J Eye Mov Res. 2018 Nov 20;11(2). doi: 10.16910/jemr.11.2.12. PMID: 33828695; PMCID: PMC7898576.

2. Alreja A, Ward MJ, Ma Q, et al. A new paradigm for investigating real-world social behavior and its neural underpinnings. Behav Res. 2023;55:2333–2352. https://doi.org/10.3758/s13428-022-01882-9

The Problem

- Eye tracking hardware restricts naturalistic experimentation

- Studies and findings have limited ecological validity

image source: https://www.ed.ac.uk/ppls/psychology

Scaling eye-tracking to naturalistic real-world settings

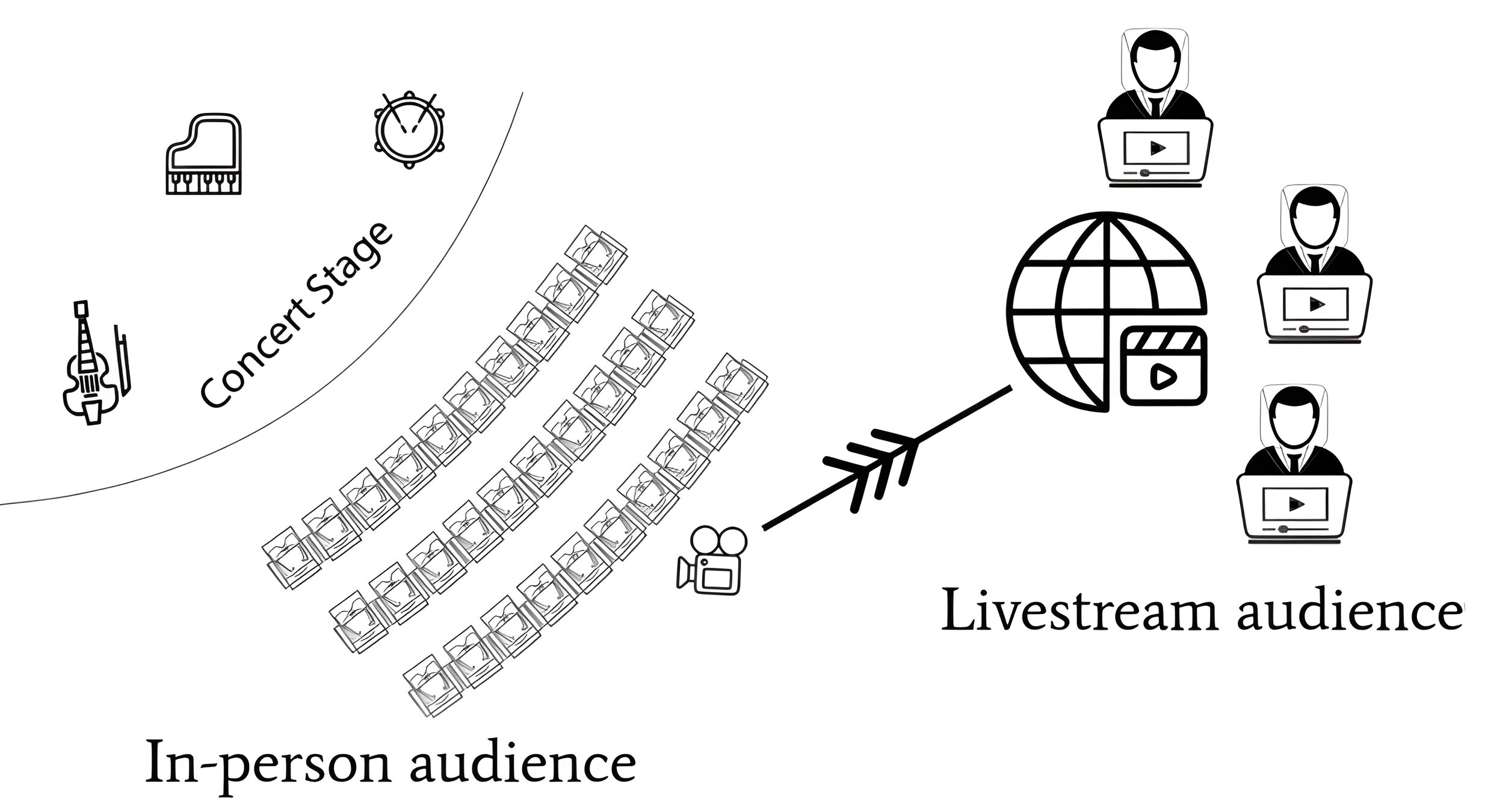

In-person events

Online streaming

Image source: Unsplash

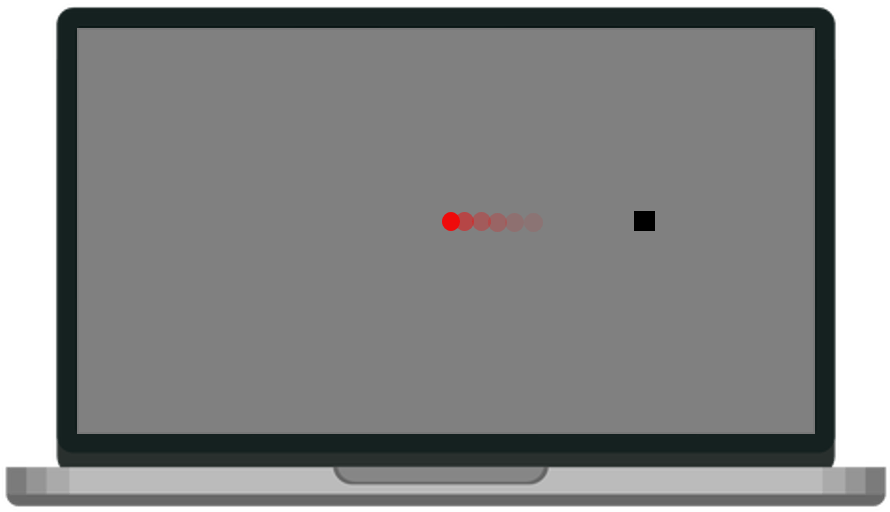

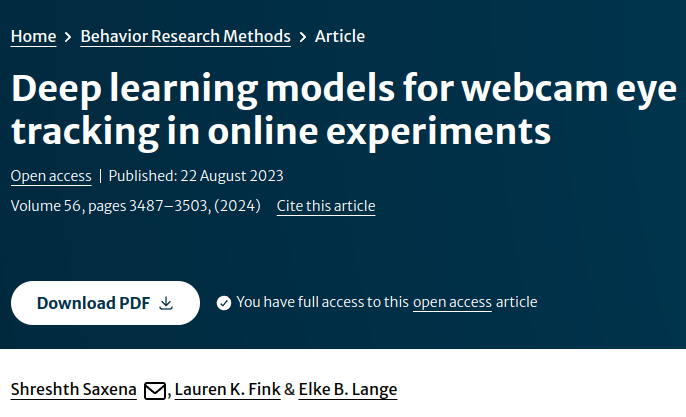

Saxena, Lange, & Fink. 2022. Towards efficient calibration for webcam eye-tracking in online experiments. ACM Symposium on Eye Tracking Research and Applications (ETRA '22). doi

Saxena, Fink, & Lange. 2023. Deep learning models for webcam eye tracking in online experiments. Behavior Research Methods. doi

- Gaze prediction in unrestricted home environments with 2.4° accuracy

- Significant improvement over the existing state-of-the-art WebGazer (3.8°), with support for longer-duration trials

- Analysis tools to measure gaze fixations, saccades, blinks, scanpaths, heatmaps, regions of interest, etc.

- Calibration techniques to further improve accuracy based on the experimental setup

- Open-source pipeline split into online data collection and offline analysis
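The accuracy figures above are in degrees of visual angle. As a rough sketch of how an on-screen error relates to that unit, the snippet below converts a pixel error to degrees given a viewing distance and pixel density. Both parameters and all function names are illustrative assumptions; this is not taken from the cited toolbox.

```python
import math


def pixel_error_to_degrees(error_px, px_per_cm, viewing_distance_cm):
    """Convert an on-screen gaze error in pixels to degrees of visual angle.

    Assumes the error segment is centred on the line of sight and the
    screen is perpendicular to it (a common simplification).
    """
    error_cm = error_px / px_per_cm
    return math.degrees(2 * math.atan(error_cm / (2 * viewing_distance_cm)))


def mean_accuracy_deg(predicted, targets, px_per_cm, viewing_distance_cm):
    """Mean angular error between predicted gaze points and known targets."""
    errors = [
        pixel_error_to_degrees(math.dist(p, t), px_per_cm, viewing_distance_cm)
        for p, t in zip(predicted, targets)
    ]
    return sum(errors) / len(errors)
```

In home environments the viewing distance is usually not controlled, so any such conversion depends on how that distance is estimated.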

In-person events

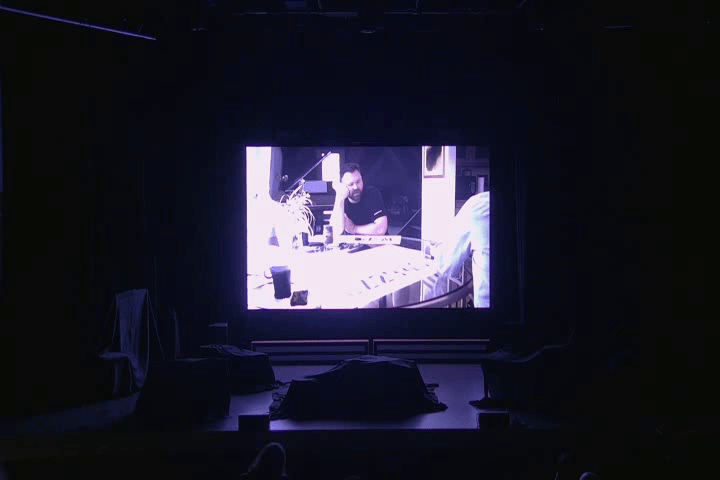

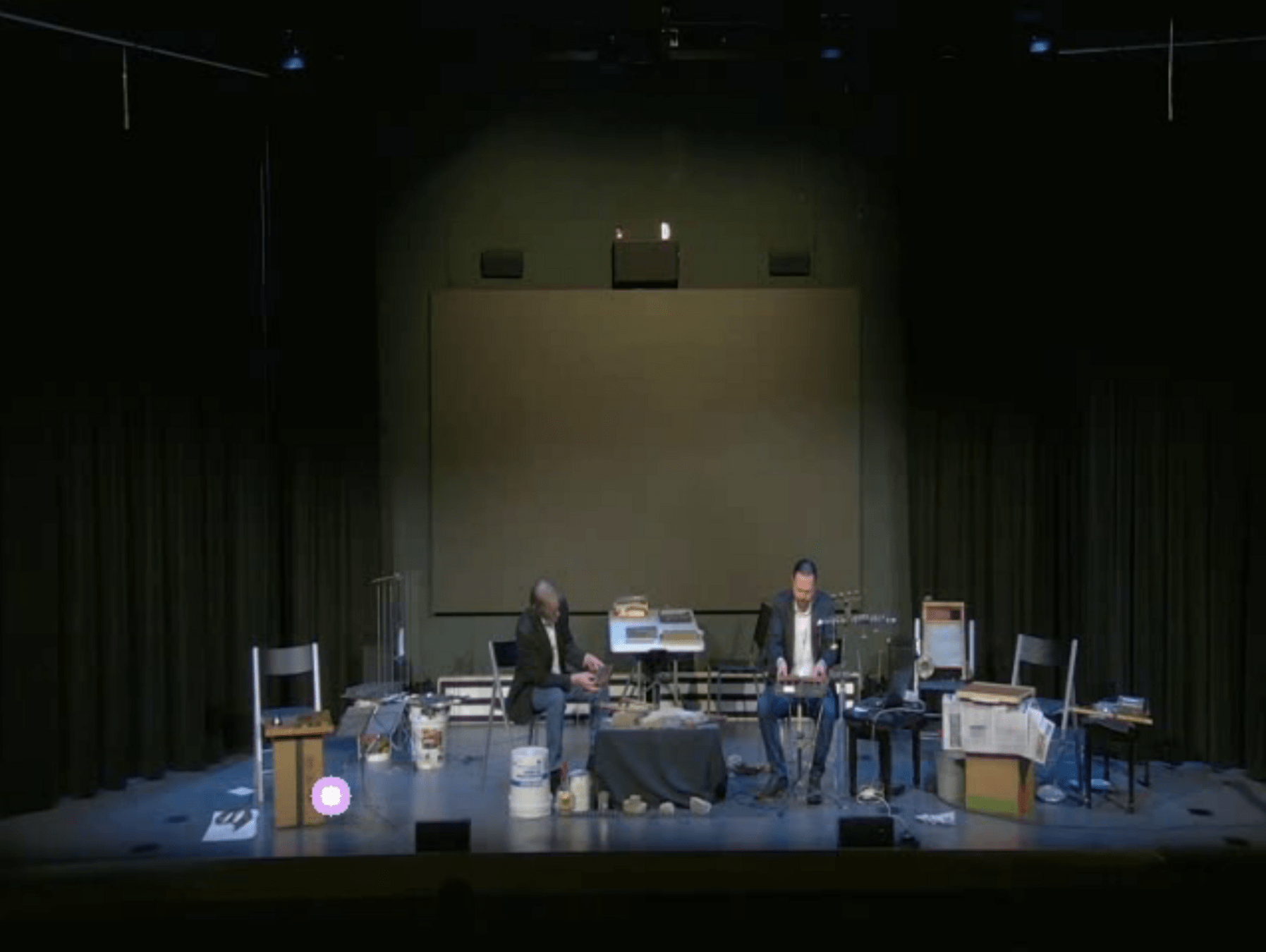

- Research concert hall

- 106-seat capacity

- Customizable room acoustics and spatial audio

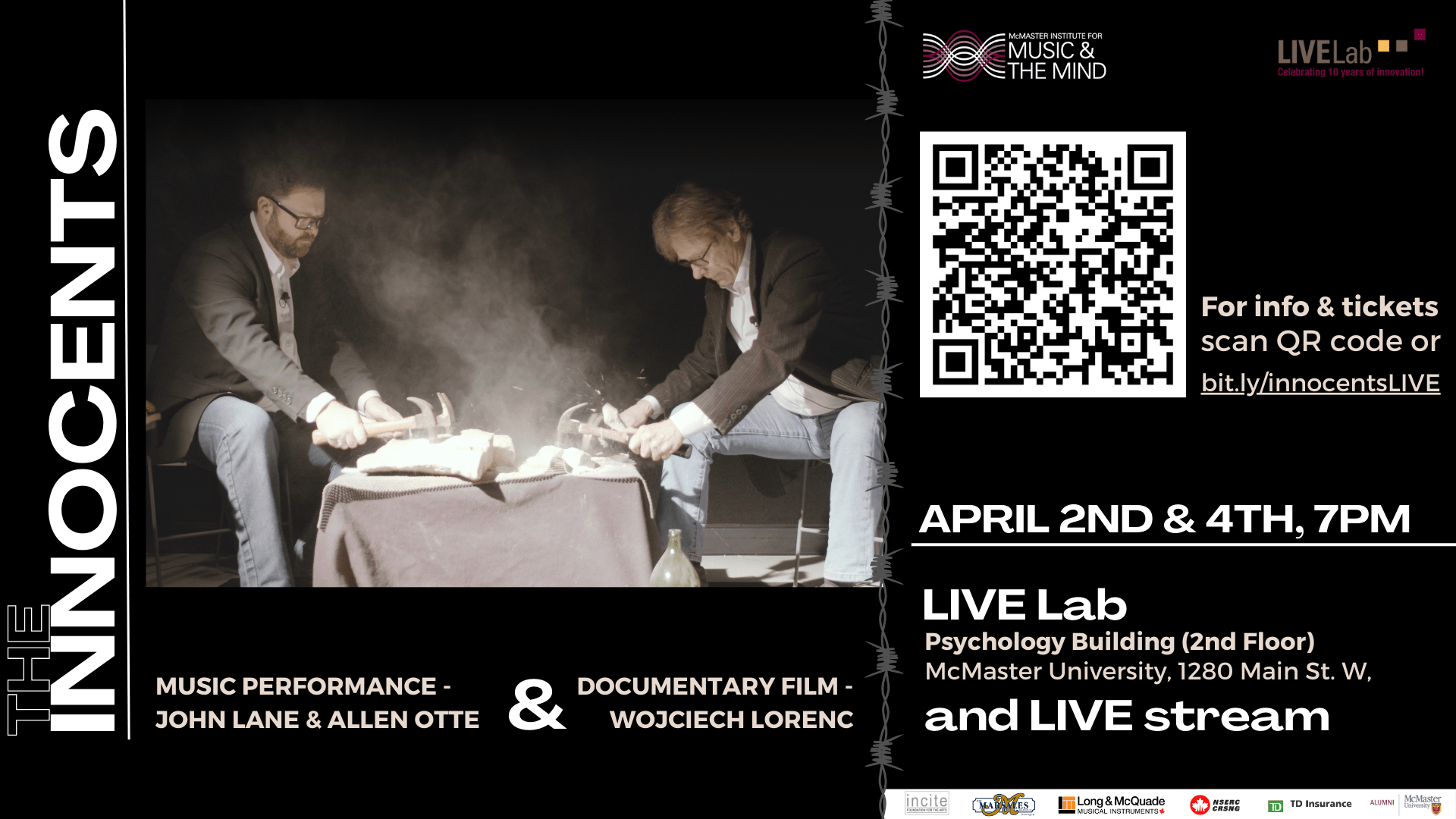

Current Study

(N=59)

(N=20)

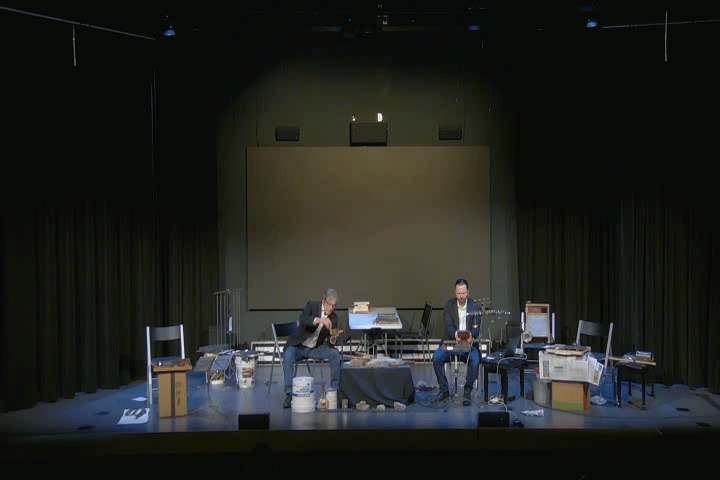

Day 1: Film -> Performance

Day 2: Performance -> Film

Film duration: 1 hour 20 minutes

Performance duration: ~1 hour

Set-up

N = 60 participants (30 each day)

- Recruitment from university newsletters and public advertising

- 38 self-identified as women

- Mean age = 34 years, range = 16 to 82

- 1 dropout on day 1 after the film screening session

Participants equipped with Pupil Labs Neon eye tracking glasses

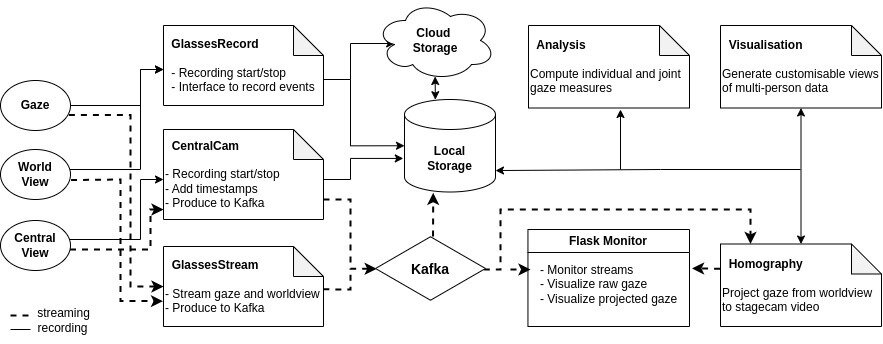

Methods: Multi-Person Eye Tracking

Challenges

- Multi-device management

- Time synchronisation

- Multi-perspective gaze data

- Analysis and visualisation tools

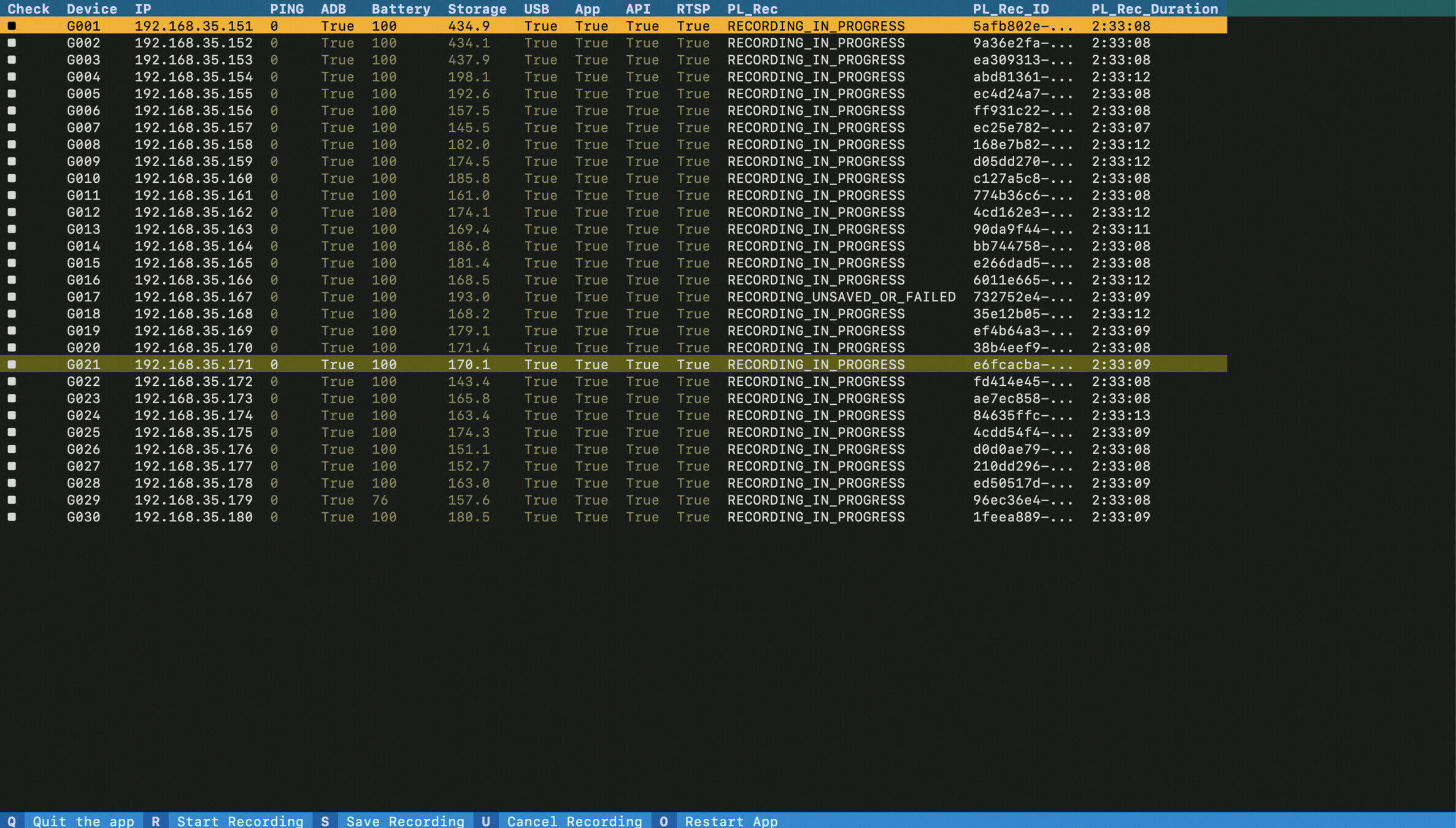

Multi-device management

- Excessive time and research assistants required to trigger events on each device

- Interference during film/concert

- Continuous monitoring

- Remote troubleshooting

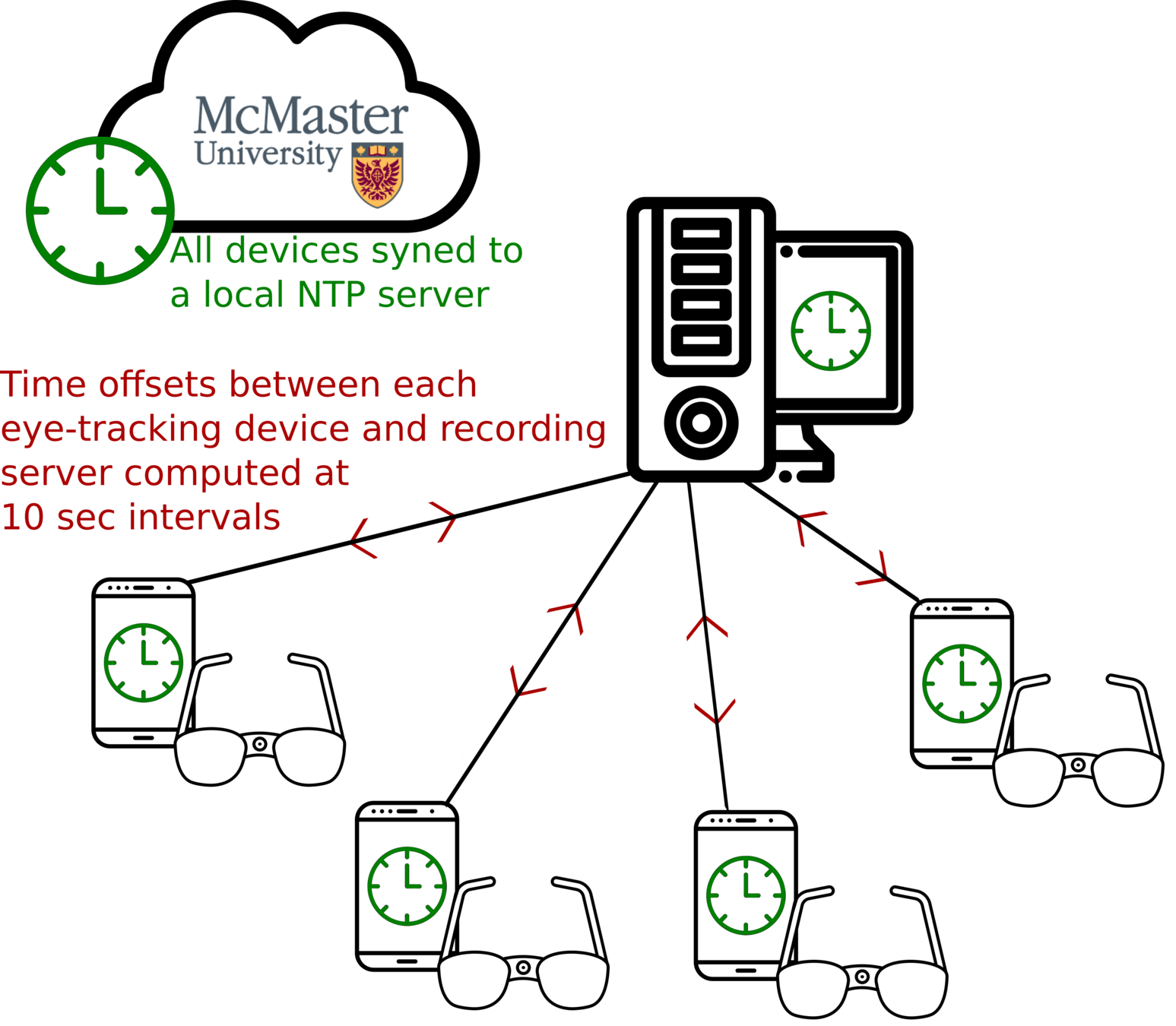

Time synchronisation

- Network Time Protocol (NTP) used to synchronise all devices to a locally hosted master clock

- Time offsets recorded periodically to correct for drift in each device's internal clock
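The two steps above can be sketched as follows: estimate a clock offset from one request/response exchange using the standard NTP offset formula, then interpolate between periodically recorded offsets to map device timestamps onto the master clock. This is a minimal sketch under assumed data layouts; the function names are hypothetical and not the API of the framework described here.

```python
from bisect import bisect_right


def ntp_offset(t1, t2, t3, t4):
    """Offset of the master clock relative to the device clock.

    t1: device send time, t2: master receive time,
    t3: master reply time, t4: device receive time.
    Assumes the network delay is symmetric in both directions.
    """
    return ((t2 - t1) + (t3 - t4)) / 2.0


def interpolate_offset(t, offset_samples):
    """Linearly interpolate the offset at device time t.

    offset_samples: list of (device_time, offset) pairs sorted by time.
    Outside the sampled range, the nearest recorded offset is used.
    """
    times = [s[0] for s in offset_samples]
    if t <= times[0]:
        return offset_samples[0][1]
    if t >= times[-1]:
        return offset_samples[-1][1]
    i = bisect_right(times, t)
    (t0, o0), (t1, o1) = offset_samples[i - 1], offset_samples[i]
    return o0 + (o1 - o0) * (t - t0) / (t1 - t0)


def to_master_time(device_timestamps, offset_samples):
    """Map device timestamps onto the master clock."""
    return [t + interpolate_offset(t, offset_samples) for t in device_timestamps]
```

Linear interpolation is one simple way to account for drift between offset measurements; the interval between measurements determines how much residual drift remains.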

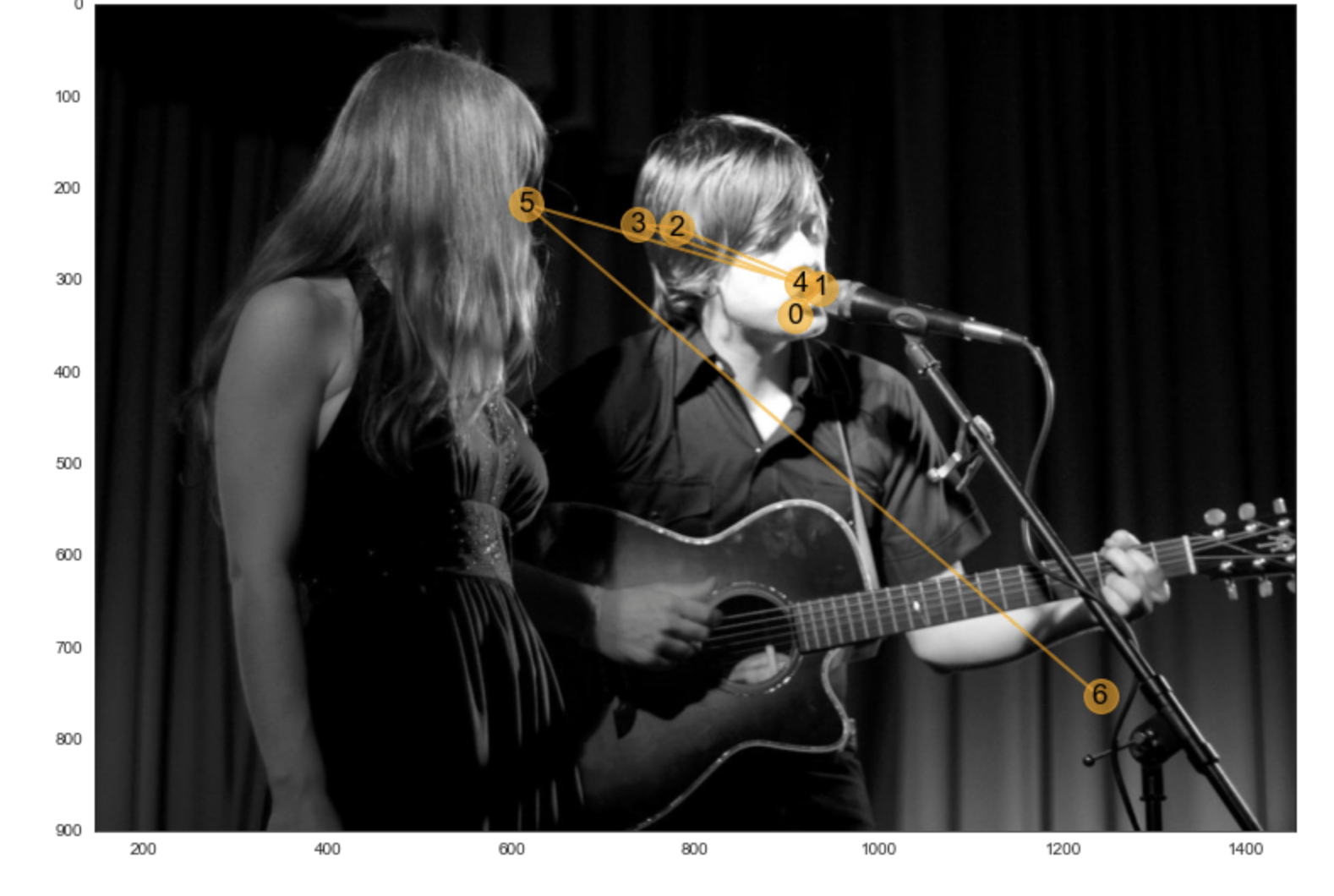

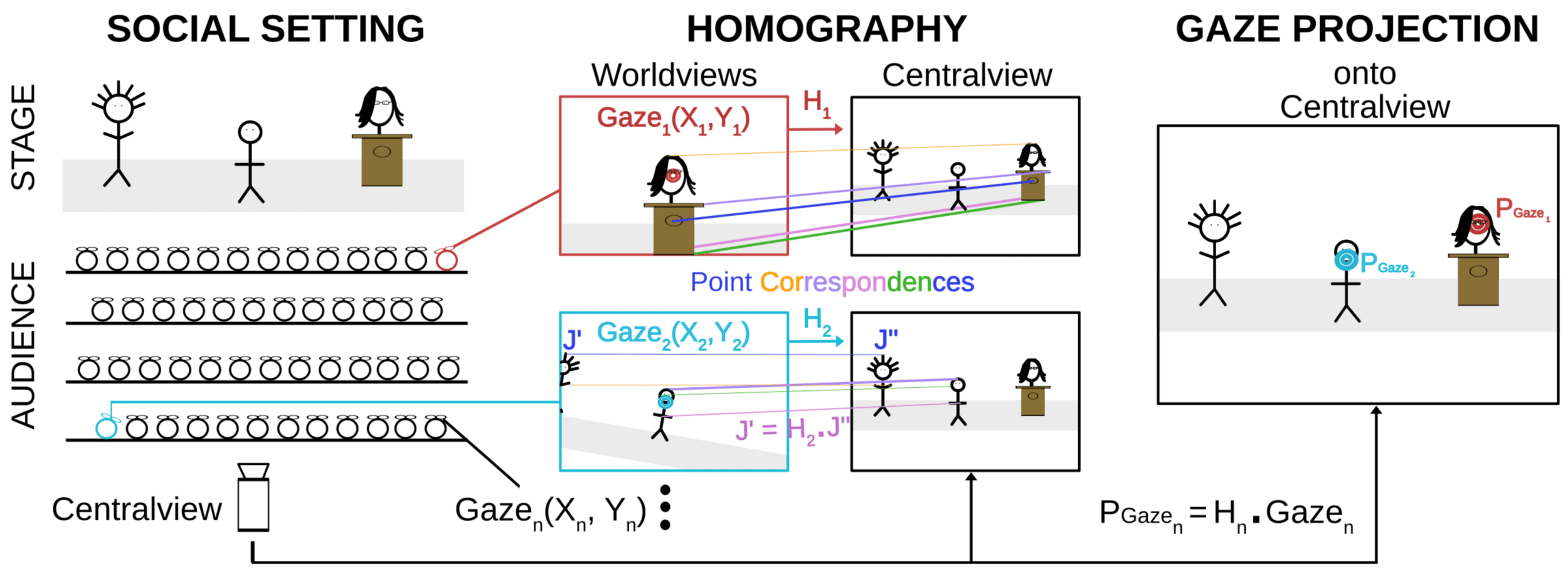

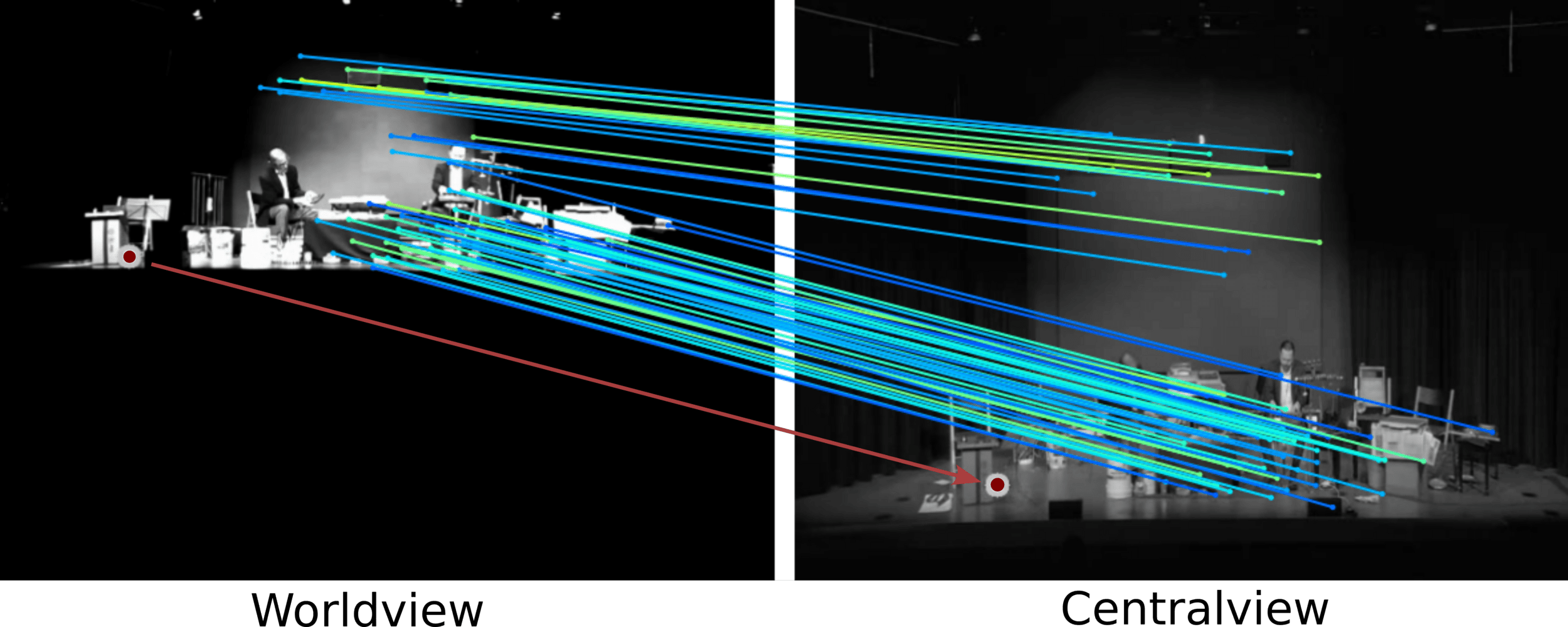

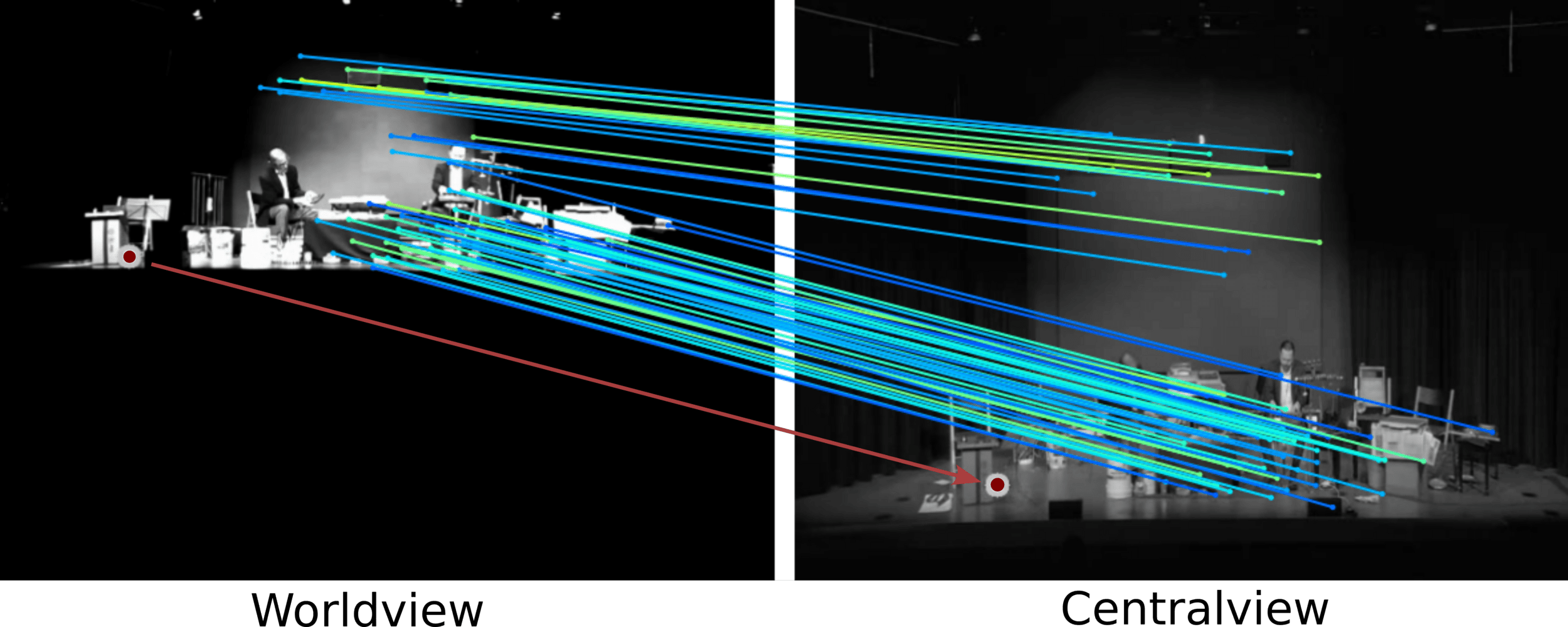

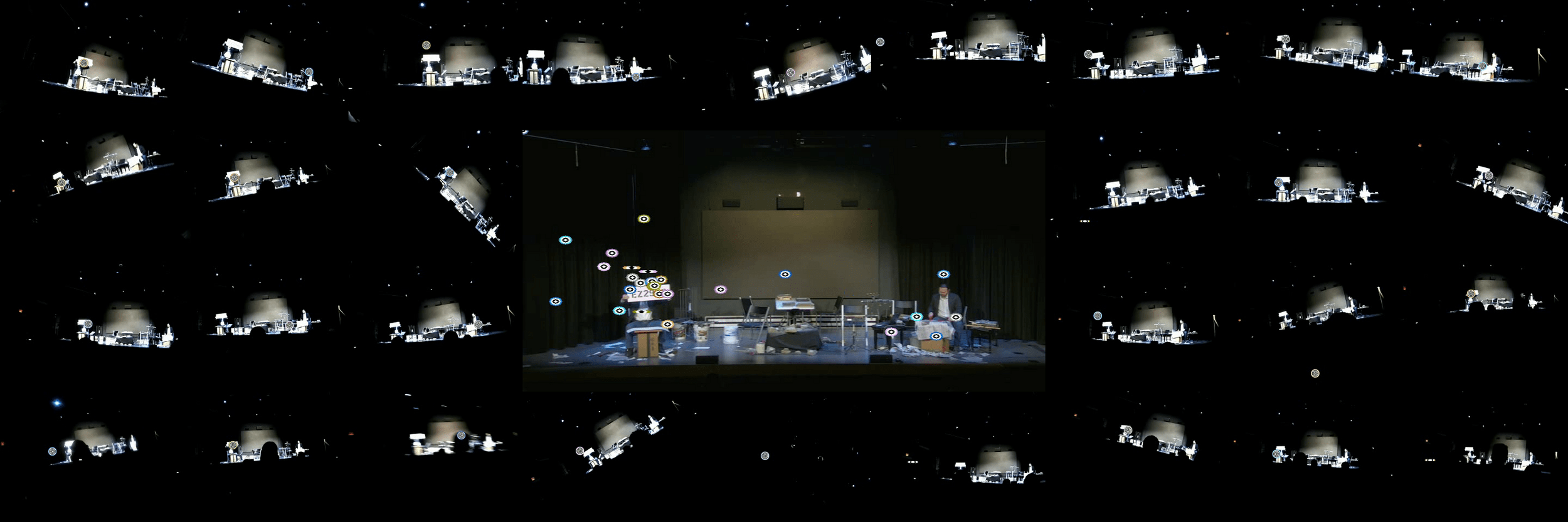

Multi-perspective gaze data

Worldviews

Analysis and visualization tools

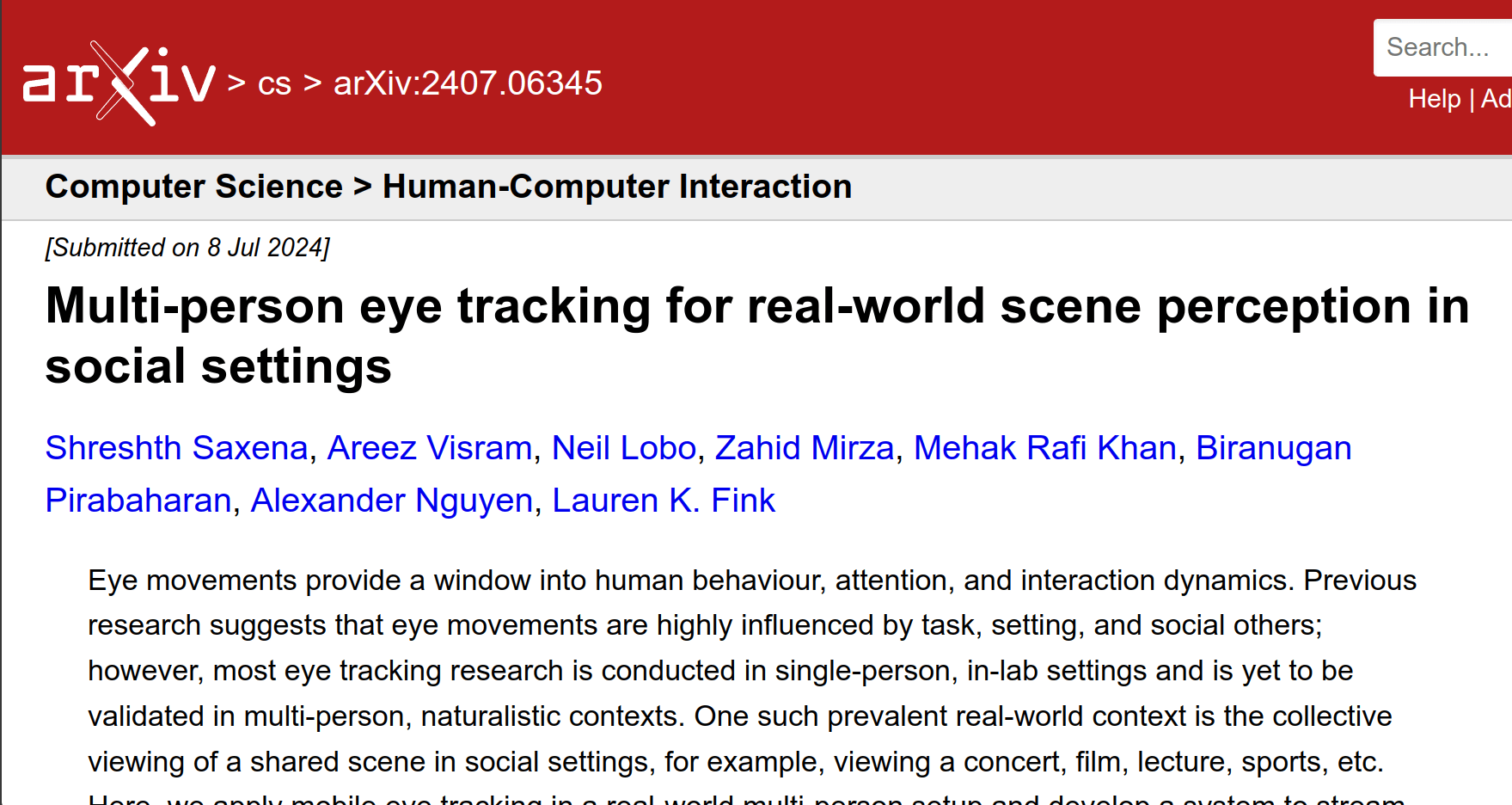

Software Framework

Saxena, Visram, Lobo, Mirza, Khan, Pirabaharan, Nguyen, & Fink. (2024). Multi-person eye tracking for real-world scene perception in social settings. arXiv preprint arXiv:2407.06345 https://doi.org/10.48550/arXiv.2407.06345

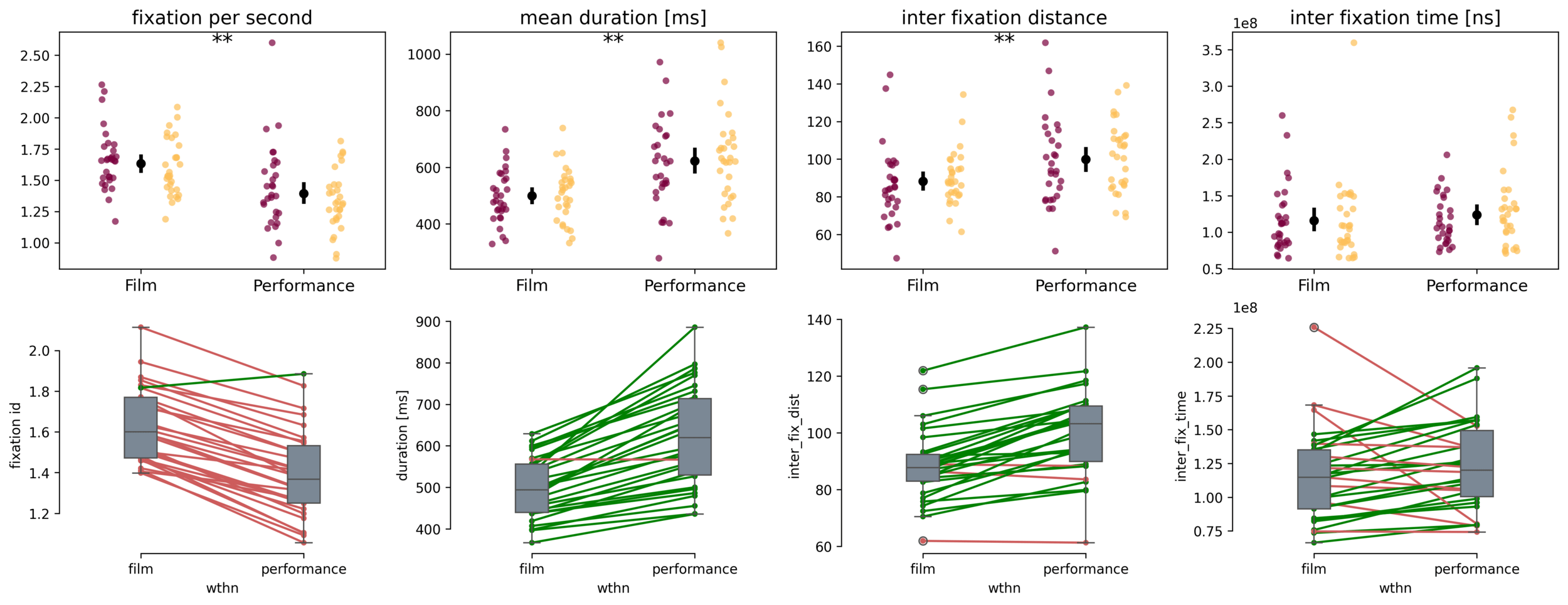

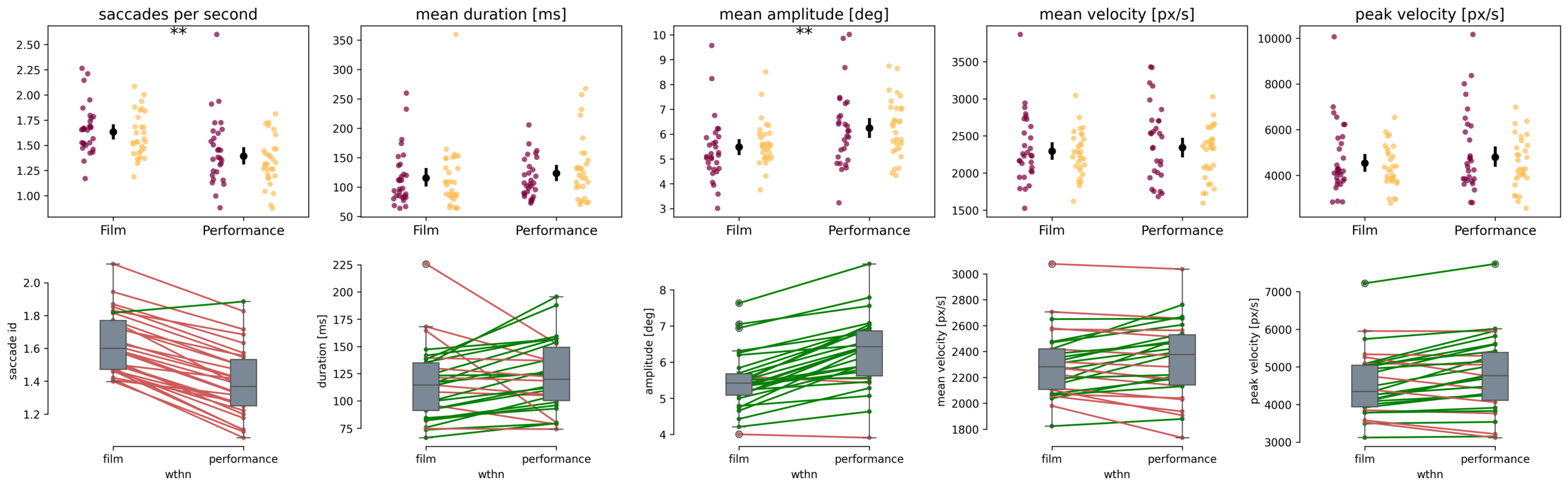

How do eye movements differ between (in-person) film and concert viewing?

- Fewer fixations and saccades during performance viewing.

- Longer fixations have been linked to more mental processing, higher interest, informativeness, and uncertainty,

- but also to daydreaming, disinterest, or mind-wandering.

- Larger saccade amplitude suggests broader spatial exploration.

Fixations and Saccades

Group 1

Group 2

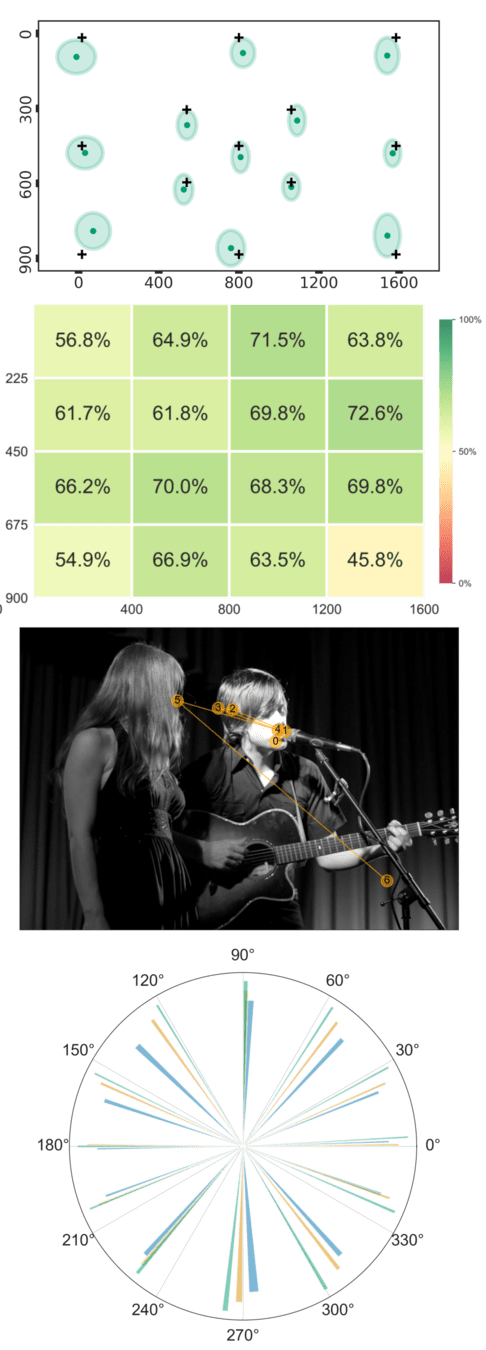

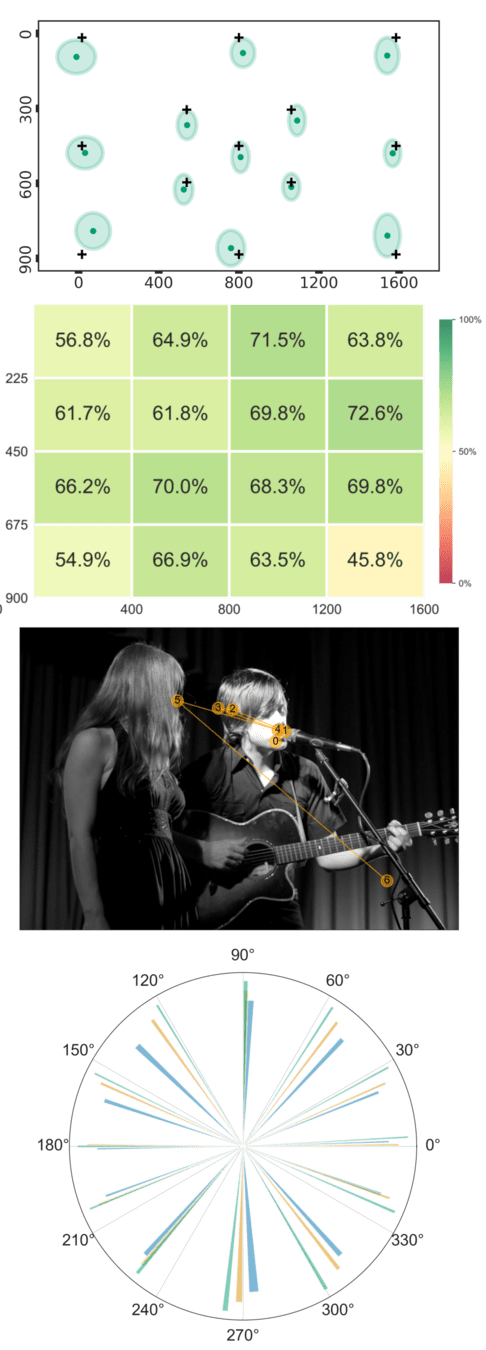

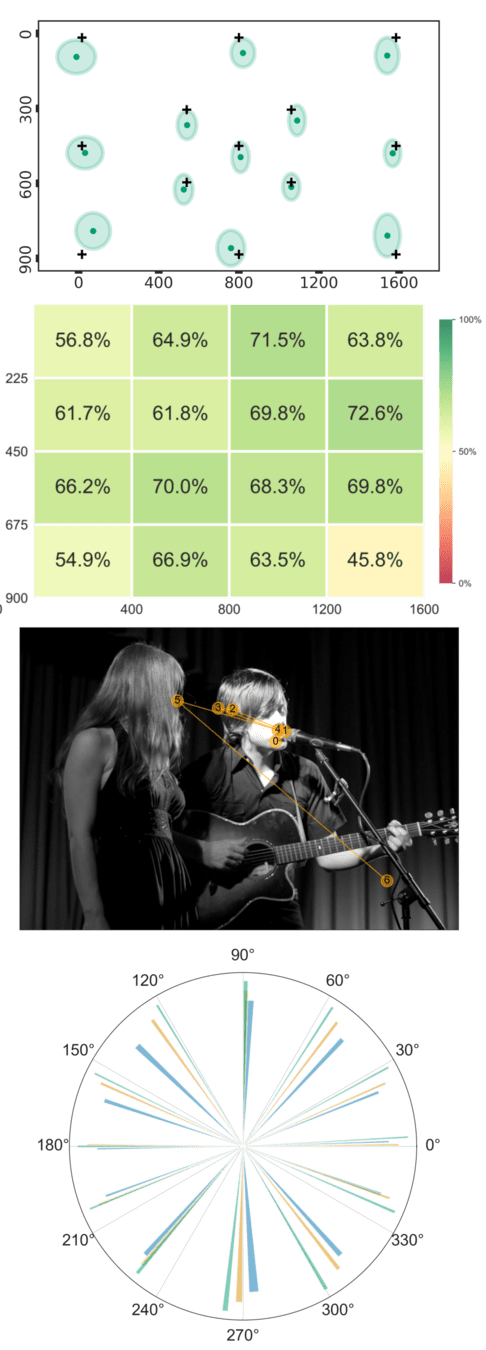

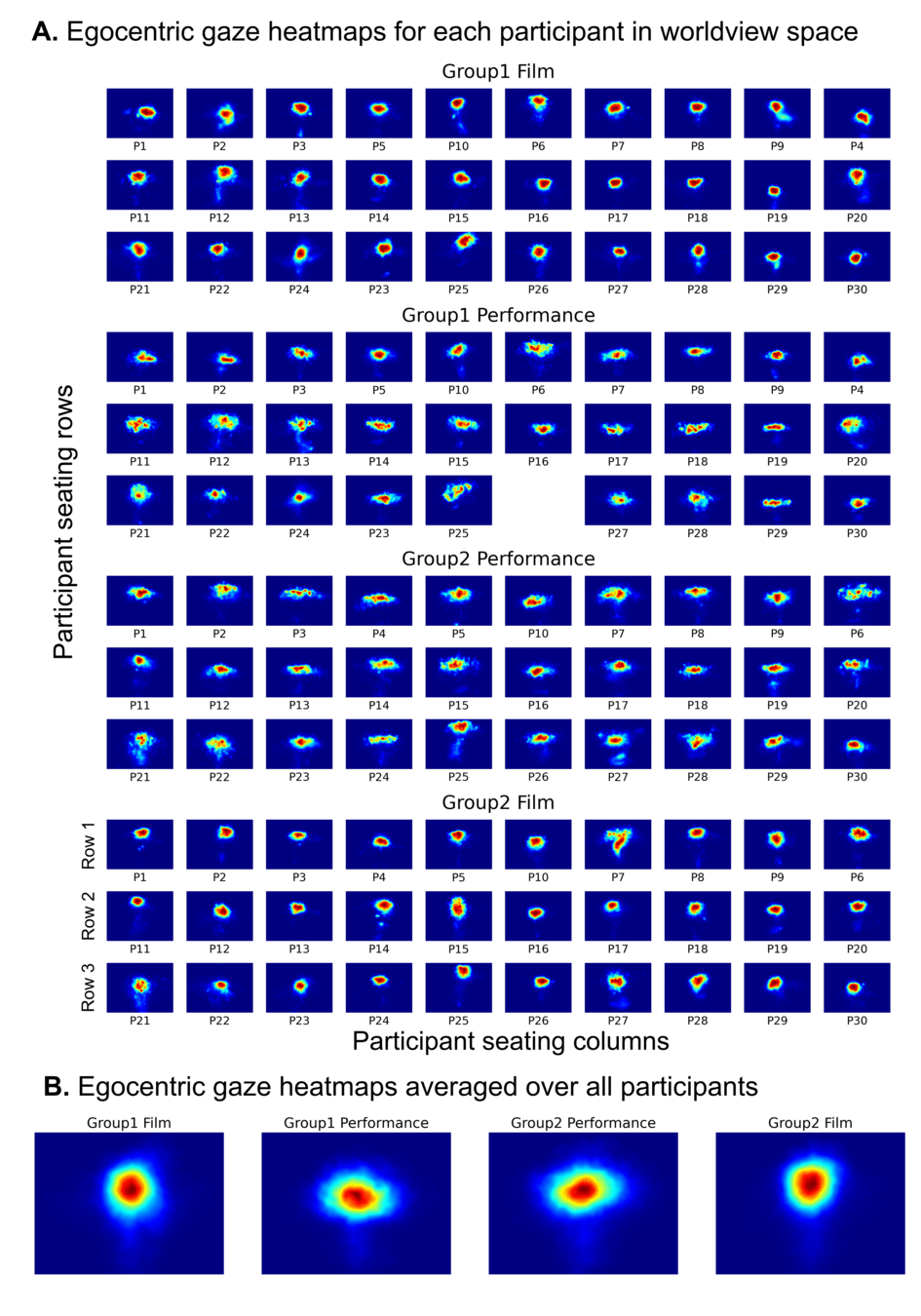

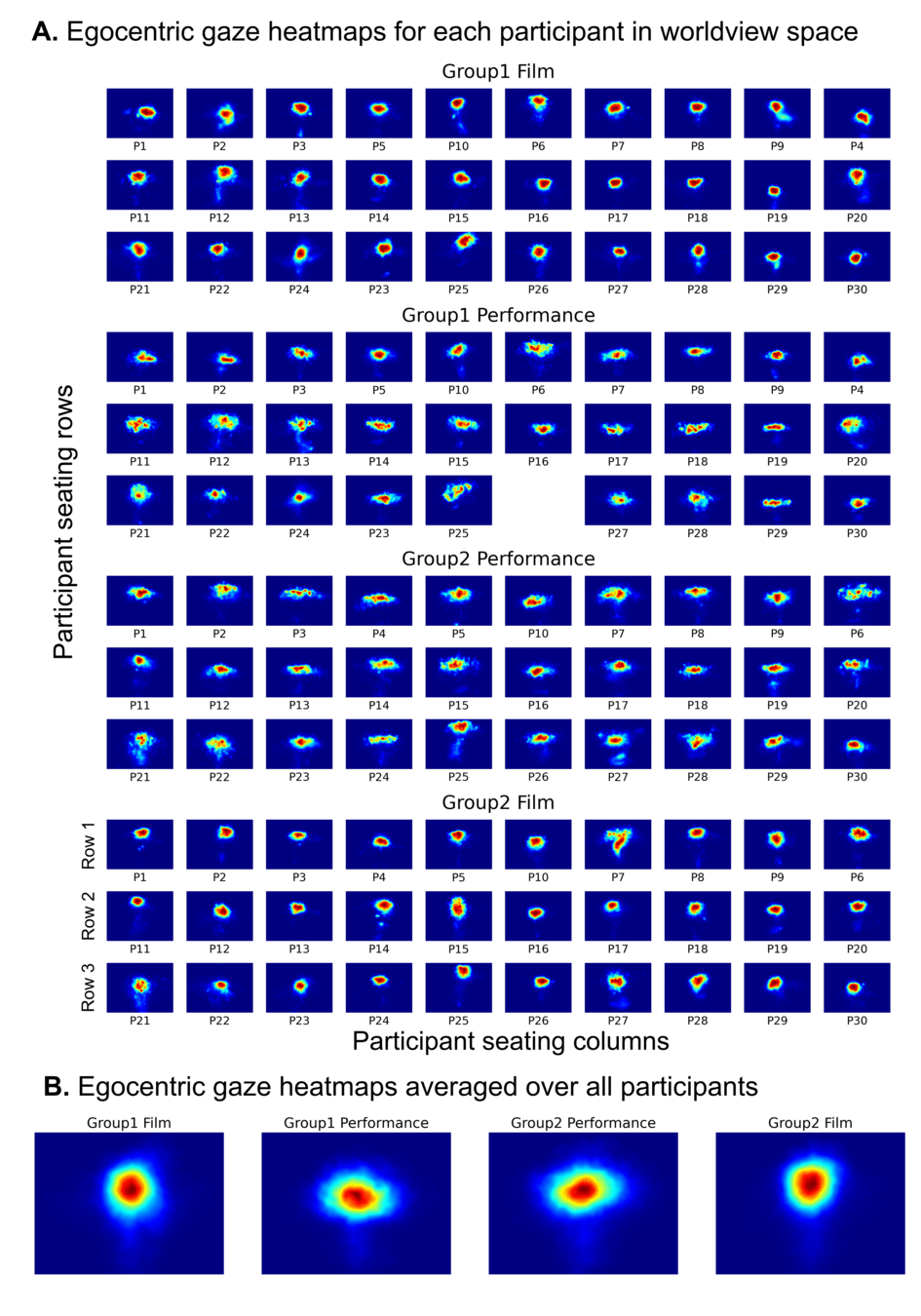

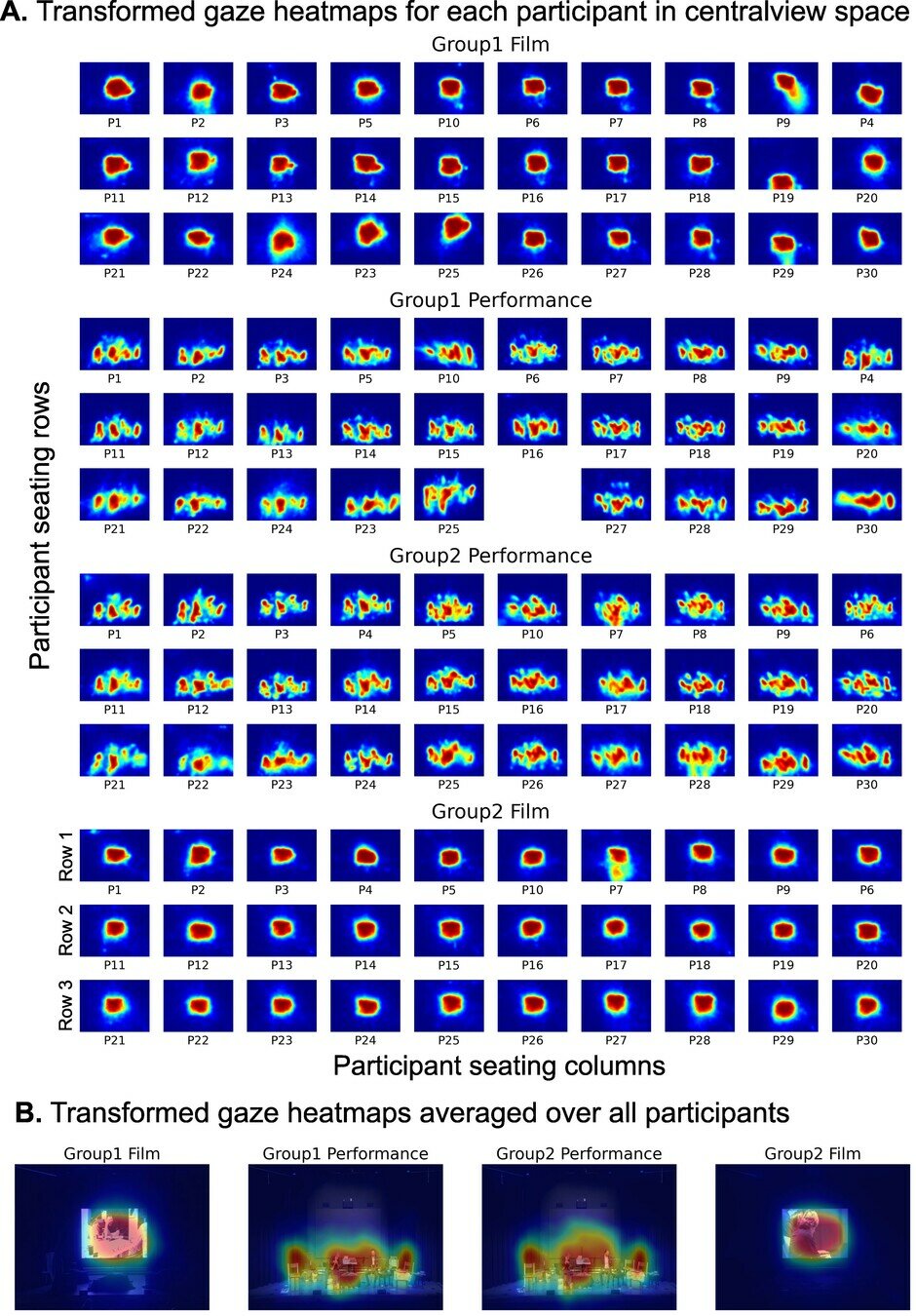

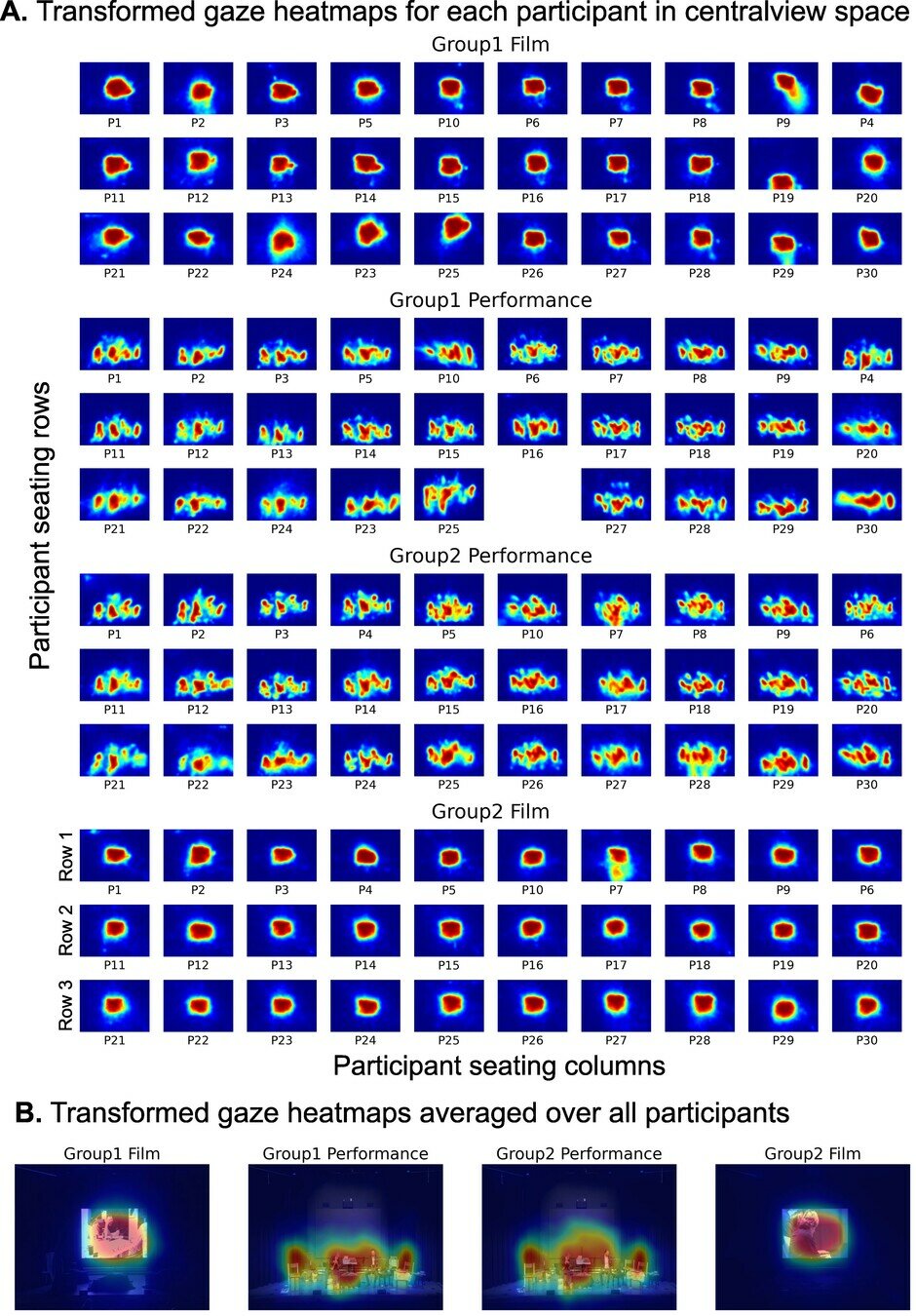

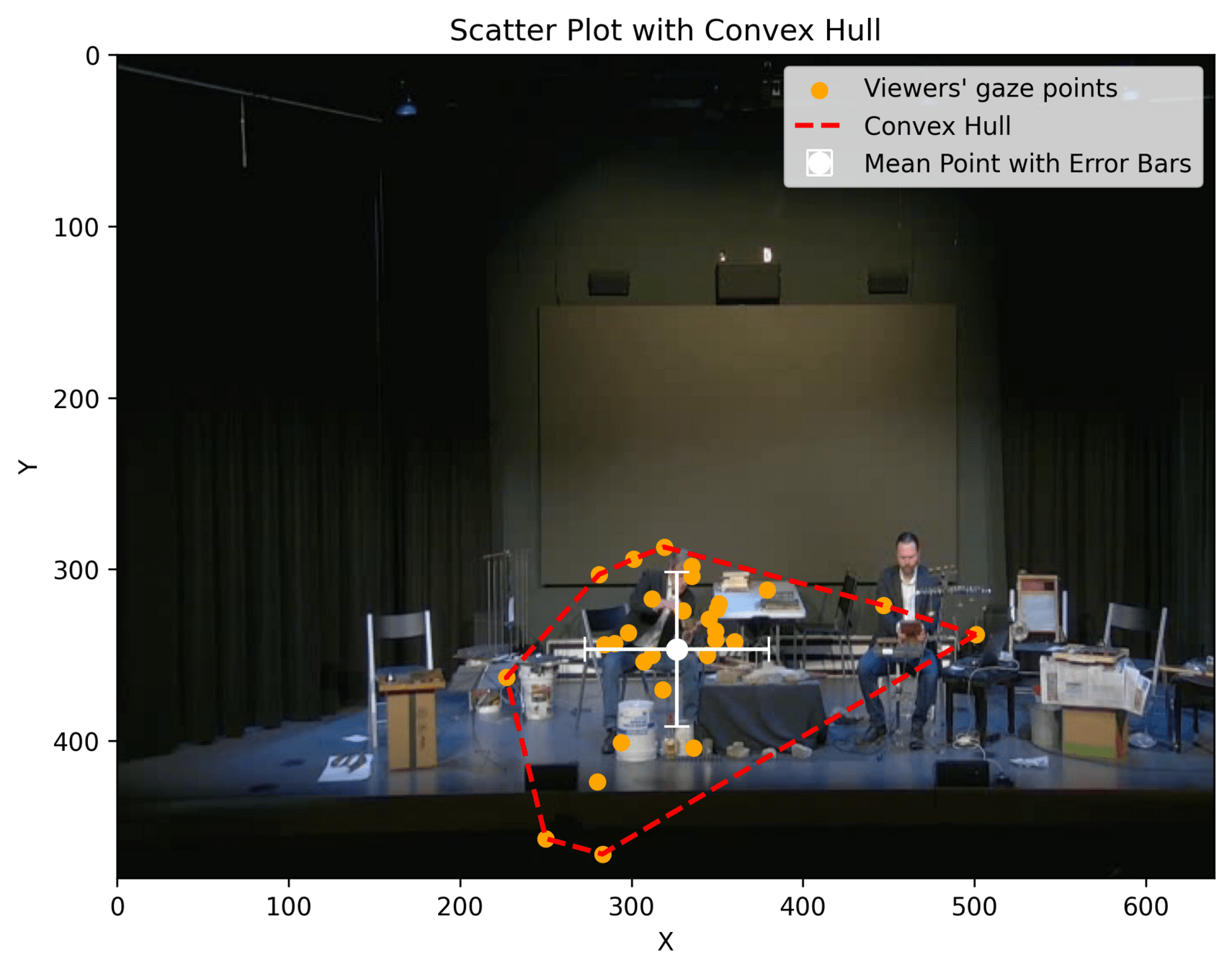

Spatial gaze analysis

(Gaze needs to be transformed to a static reference frame: the centralview)
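One common way to map gaze from a moving head-mounted camera view onto a fixed reference image is a per-frame planar homography. The sketch below only applies such a transform; it assumes a 3x3 matrix `H` has already been estimated for each worldview frame (how that is done is not specified here), and the function names are hypothetical.

```python
def apply_homography(H, point):
    """Map a 2D point through a 3x3 homography given as nested lists."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    # Divide by the homogeneous coordinate to return to 2D.
    return (u / w, v / w)


def to_centralview(gaze_points, homographies):
    """Transform one gaze point per frame using that frame's homography."""
    return [apply_homography(H, p) for H, p in zip(homographies, gaze_points)]
```

A homography is exact only for a planar scene or a purely rotating camera, so for a stage with depth it should be treated as an approximation.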

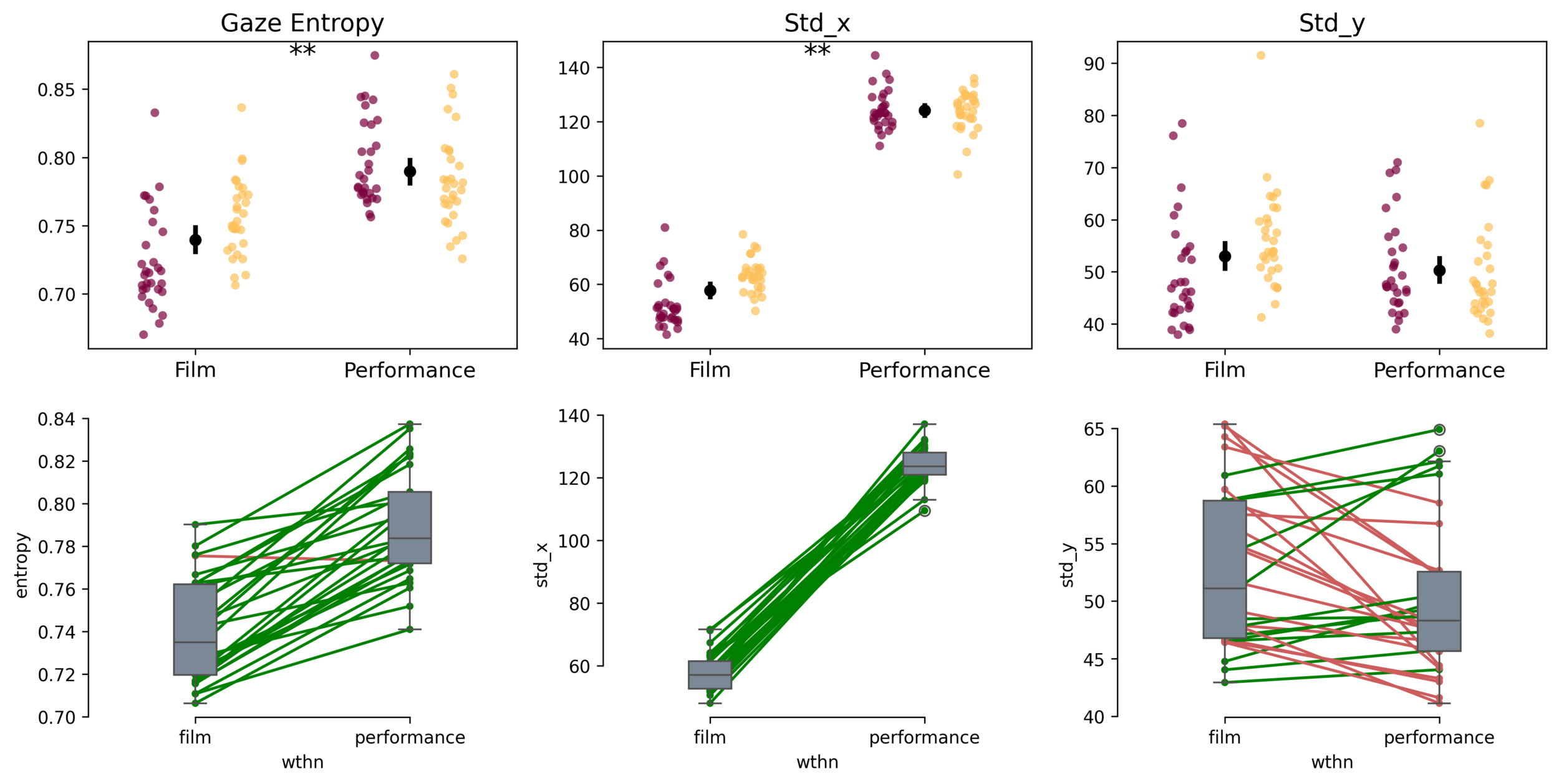

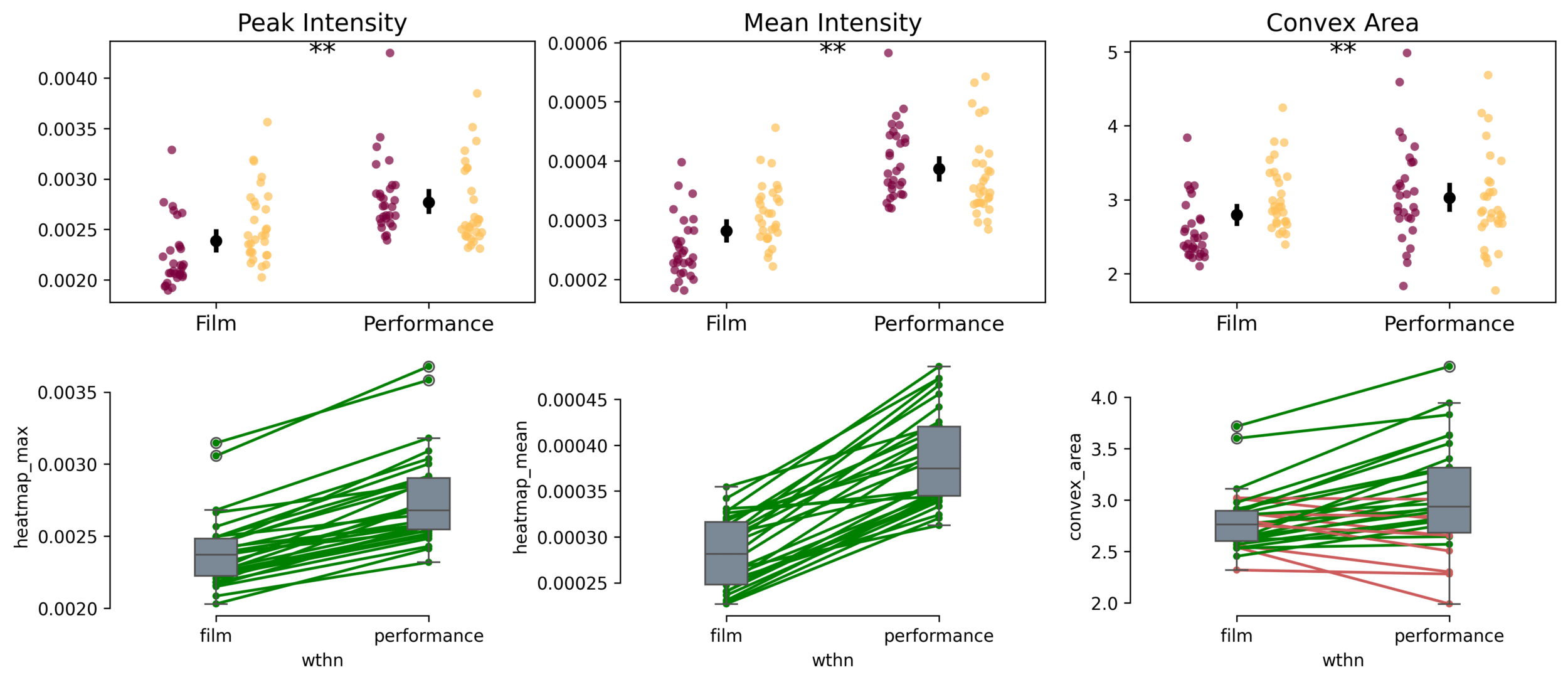

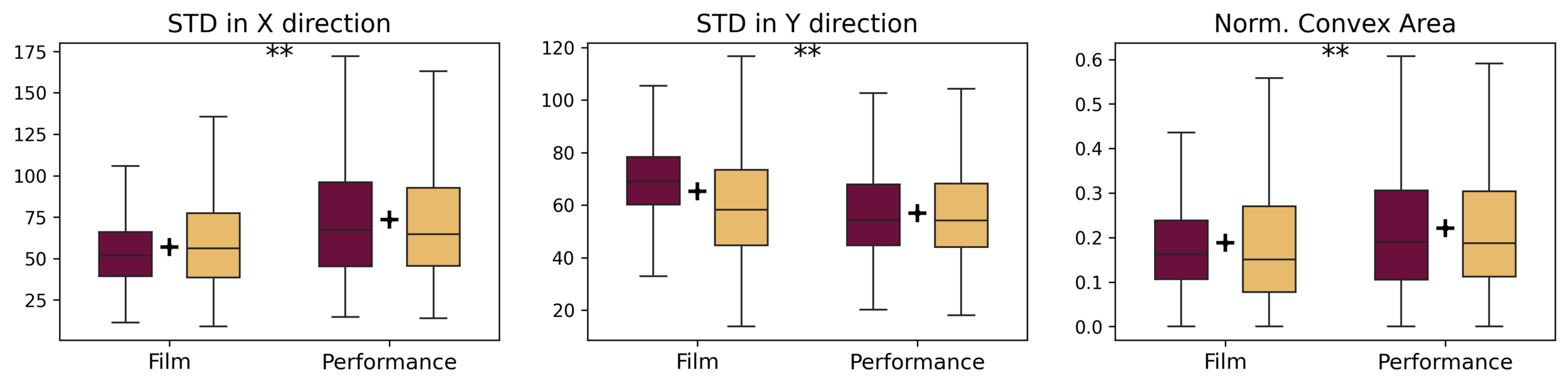

Gaze dispersion

- Transformed gaze allows between-participant spatial comparisons.

- Heatmaps can be further quantified to analyse gaze dispersion in the two mediums.

- Gaze dispersion over the viewing space (centralview) is higher during performance viewing.

- Screen size could be an important factor here.

- The effect is only observed in the horizontal direction (x-axis).
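As an illustration of how a heatmap can be quantified per axis, the sketch below bins transformed gaze points into a normalised 2D histogram and computes the spread of its mass along x and y. The binning scheme and the use of a weighted standard deviation are assumptions made for this example, not necessarily the measure used in the study.

```python
import math


def gaze_heatmap(points, width, height, bins_x, bins_y):
    """Normalised 2D histogram of gaze points (rows = y bins, columns = x bins).

    Points outside the reference frame are ignored.
    """
    grid = [[0.0] * bins_x for _ in range(bins_y)]
    n = 0
    for x, y in points:
        if 0 <= x < width and 0 <= y < height:
            col = int(x / width * bins_x)
            row = int(y / height * bins_y)
            grid[row][col] += 1.0
            n += 1
    if n == 0:
        raise ValueError("no gaze points inside the reference frame")
    return [[c / n for c in row] for row in grid]


def axis_dispersion(heatmap):
    """Standard deviation of heatmap mass along x and y, in bin units."""
    bins_y, bins_x = len(heatmap), len(heatmap[0])
    px = [sum(heatmap[r][c] for r in range(bins_y)) for c in range(bins_x)]
    py = [sum(row) for row in heatmap]

    def weighted_std(p):
        mean = sum(i * w for i, w in enumerate(p))
        return math.sqrt(sum(w * (i - mean) ** 2 for i, w in enumerate(p)))

    return weighted_std(px), weighted_std(py)
```

Reporting the two axes separately makes it possible to see whether a difference between media is confined to one direction.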

Gaze dispersion

Group 1

Group 2

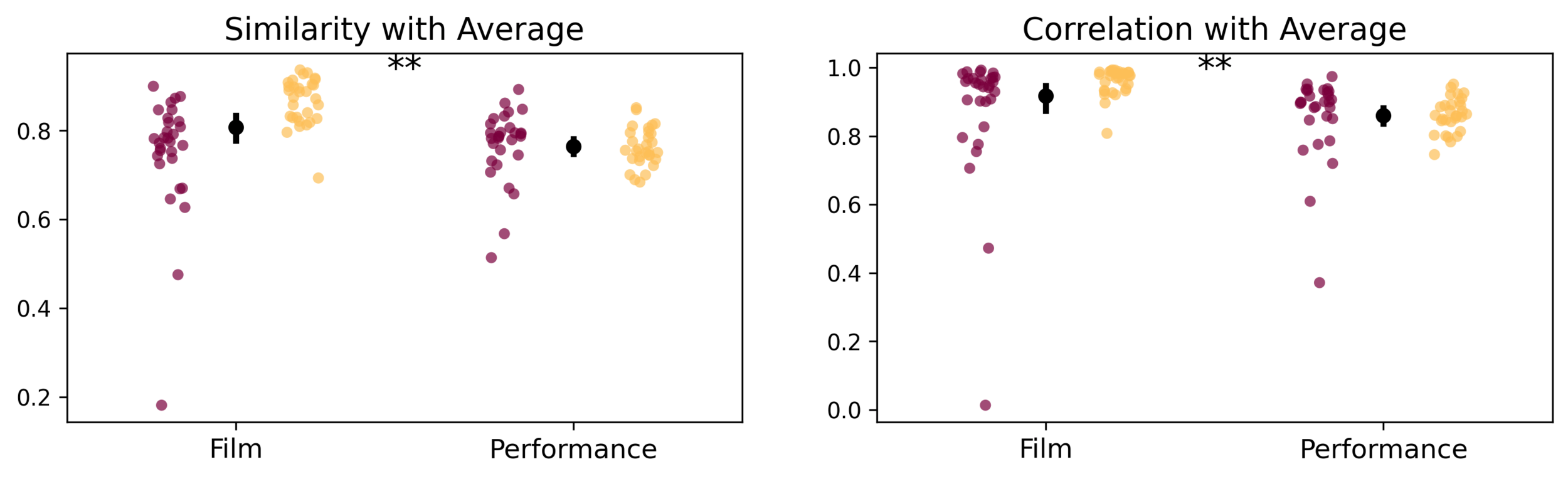

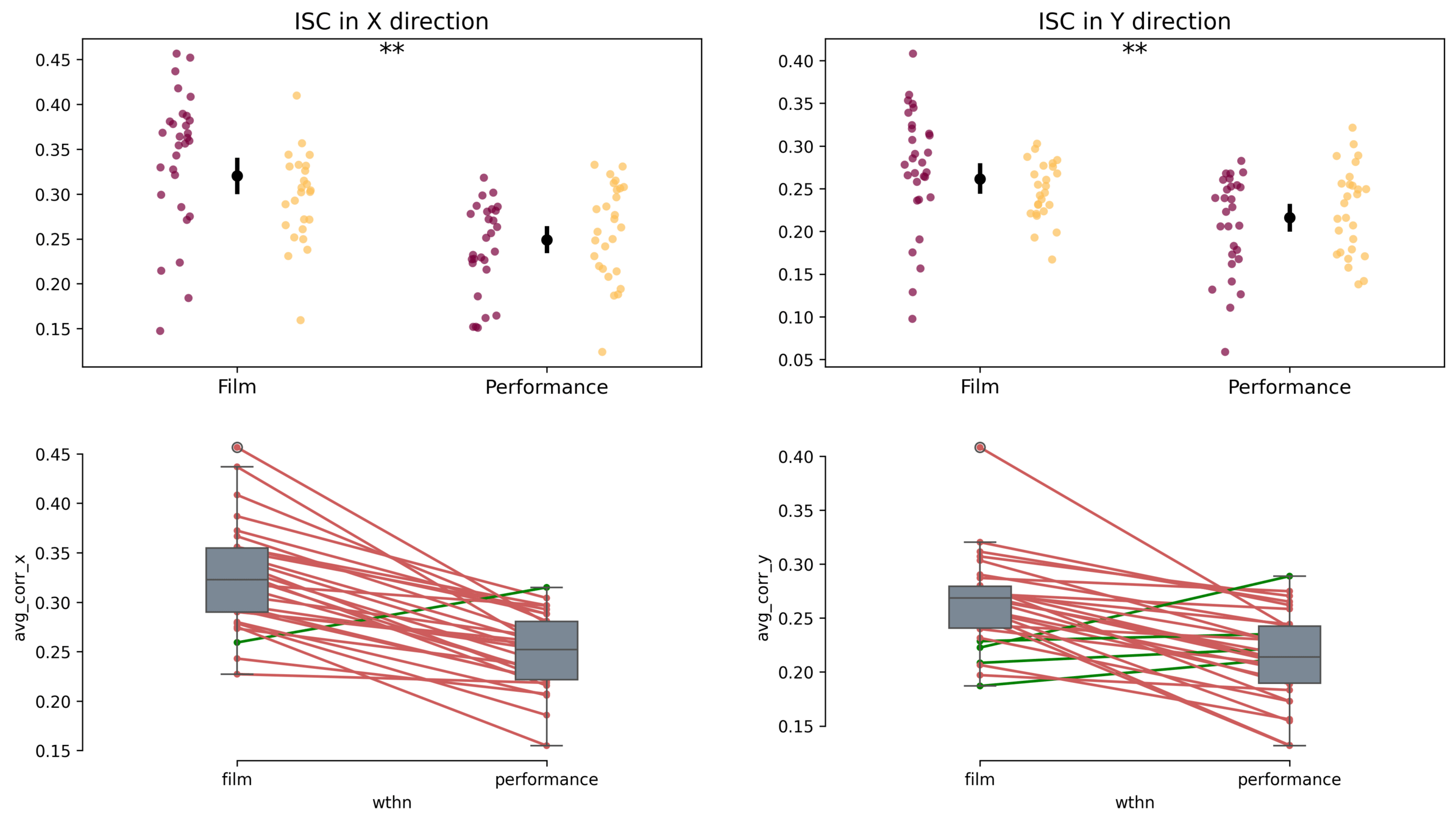

Inter-Subject Similarity and Correlation

- Similarity = heatmap intersection; Correlation = Pearson's r

- Spatial distribution of audience gaze was more similar during film viewing.

- Temporal coordination is important to consider as well.
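The two measures named above can be sketched for a pair of normalised heatmaps as follows. Averaging over all participant pairs is an assumption for this example (a leave-one-out scheme would be another option), and the function names are hypothetical.

```python
import math
from itertools import combinations


def heatmap_intersection(a, b):
    """Histogram intersection: sum of element-wise minima of two normalised heatmaps."""
    return sum(min(x, y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def pearson_r(a, b):
    """Pearson correlation between the flattened cells of two heatmaps."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return float("nan")  # undefined for a constant heatmap
    return cov / (sx * sy)


def mean_pairwise(heatmaps, metric):
    """Average a pairwise metric over all unordered participant pairs."""
    pairs = list(combinations(heatmaps, 2))
    return sum(metric(a, b) for a, b in pairs) / len(pairs)
```

Intersection ranges from 0 (no overlap) to 1 (identical distributions), while Pearson's r is sensitive to the shape of the distribution rather than to shared mass alone.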

Spatial Similarity and Correlation

Group 1

Group 2

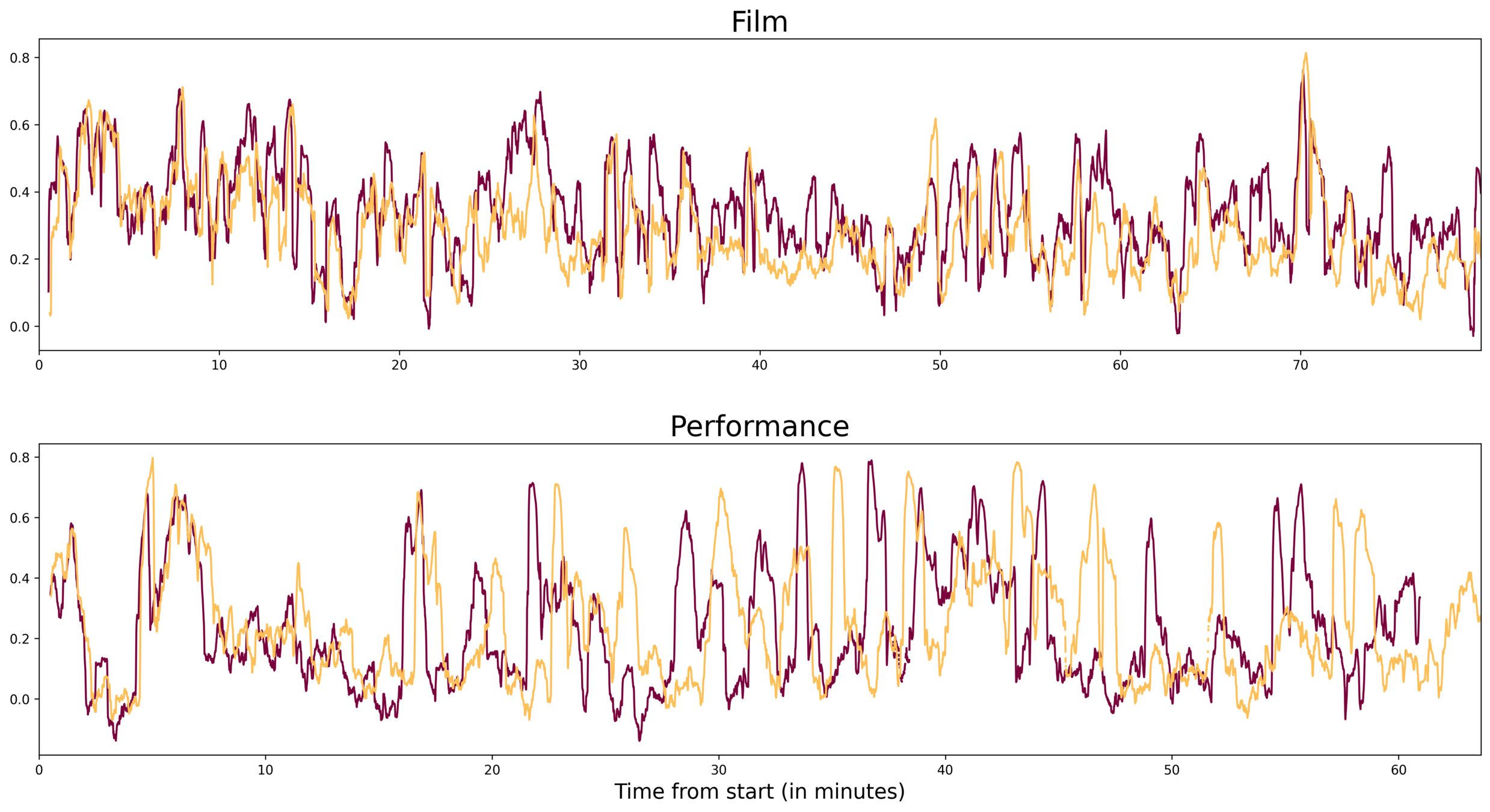

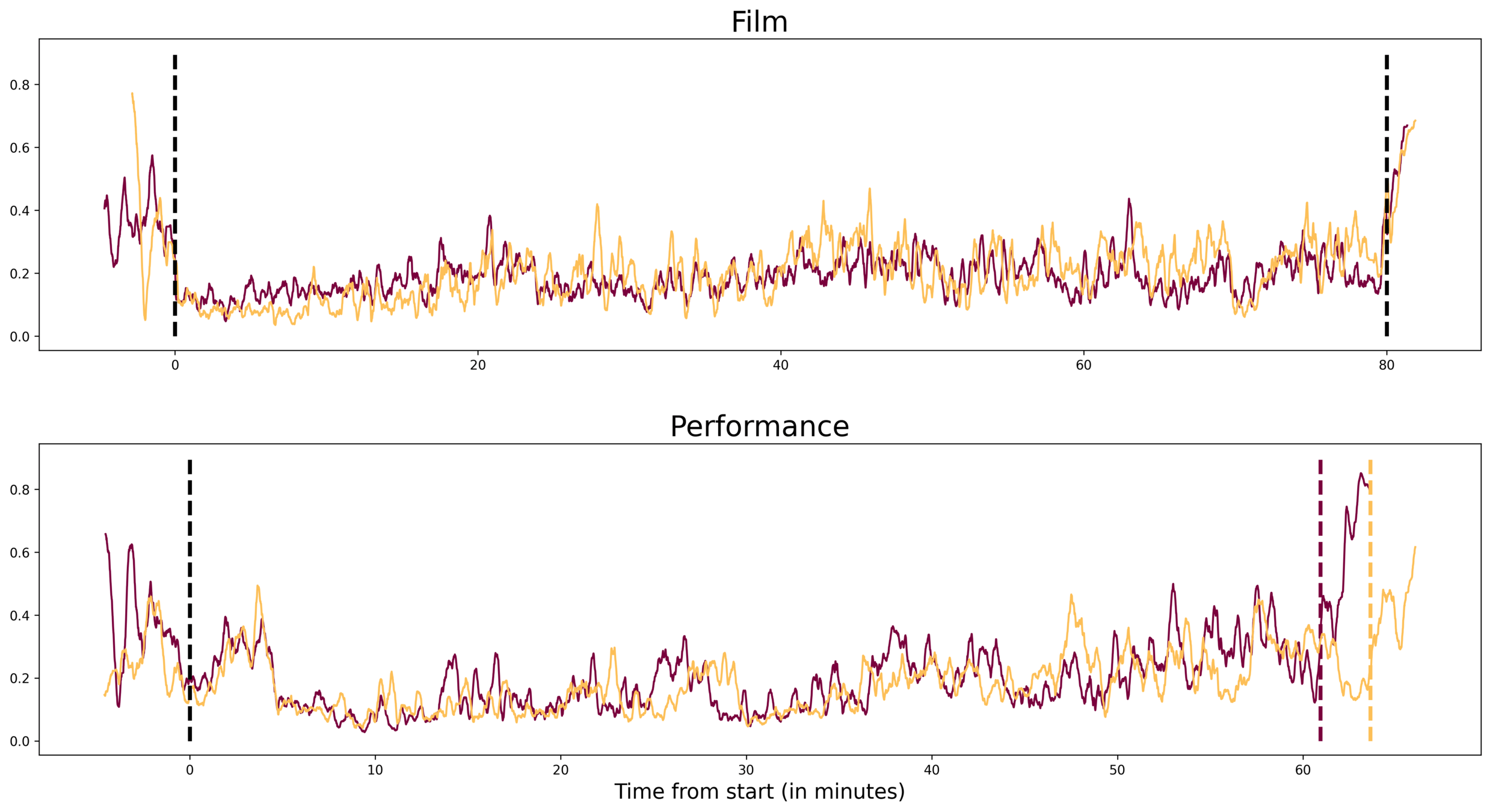

- Gaze synchrony in both mediums is tightly linked in the temporal domain

Inter-Subject Correlation over time

Group 1

Group 2

Short time frame: rolling mean ISC over a 30-second window

Long time frame: mean ISC over entire duration
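The two time frames can be illustrated with a simple rolling mean over an ISC time series. The sketch assumes the ISC values have already been computed at a fixed sampling rate; the function names and the trailing-window convention are assumptions for this example.

```python
def rolling_mean(values, window):
    """Mean of each run of `window` consecutive samples (full windows only)."""
    if window <= 0 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    running = sum(values[:window])
    out = [running / window]
    for i in range(window, len(values)):
        # Slide the window by one sample: add the new value, drop the oldest.
        running += values[i] - values[i - window]
        out.append(running / window)
    return out


def isc_summaries(isc_series, sample_rate_hz, window_s=30.0):
    """Return (short time frame rolling mean, long time frame overall mean)."""
    window = int(round(window_s * sample_rate_hz))
    overall = sum(isc_series) / len(isc_series)
    return rolling_mean(isc_series, window), overall
```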

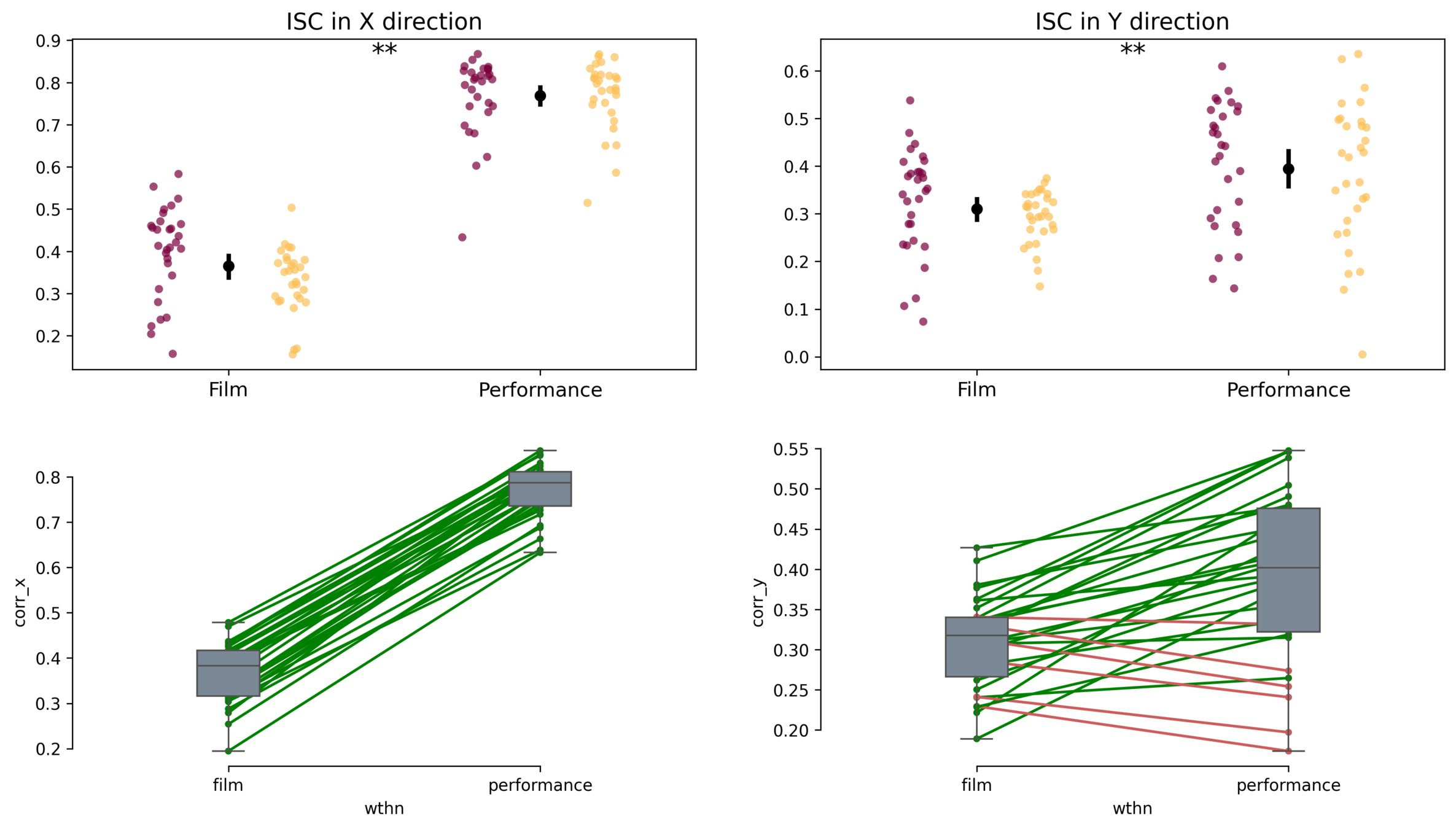

Joint gaze behaviour

Convex Hull, Std_x, Std_y
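For a single time point, the three measures listed above can be computed from the audience's transformed gaze positions. The sketch below uses Andrew's monotone chain for the hull and the shoelace formula for its area; the use of population standard deviation and the function names are assumptions for this example.

```python
import statistics


def convex_hull(points):
    """Convex hull vertices in counter-clockwise order (Andrew's monotone chain)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]


def polygon_area(vertices):
    """Area of a simple polygon via the shoelace formula."""
    n = len(vertices)
    if n < 3:
        return 0.0
    s = sum(
        vertices[i][0] * vertices[(i + 1) % n][1]
        - vertices[(i + 1) % n][0] * vertices[i][1]
        for i in range(n)
    )
    return abs(s) / 2.0


def joint_gaze_dispersion(points):
    """Hull area and per-axis spread of all participants' gaze at one time point."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return {
        "hull_area": polygon_area(convex_hull(points)),
        "std_x": statistics.pstdev(xs),
        "std_y": statistics.pstdev(ys),
    }
```

Hull area is sensitive to single outlying gaze points, whereas the per-axis standard deviations are less so, which is one reason to report both.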

Joint Gaze Dispersion

Overall higher joint gaze dispersion during Performance

Group 1

Group 2

Joint Gaze Dispersion

- Joint gaze dynamics in both mediums are tightly linked across the two days in the temporal domain

Group 1

Group 2

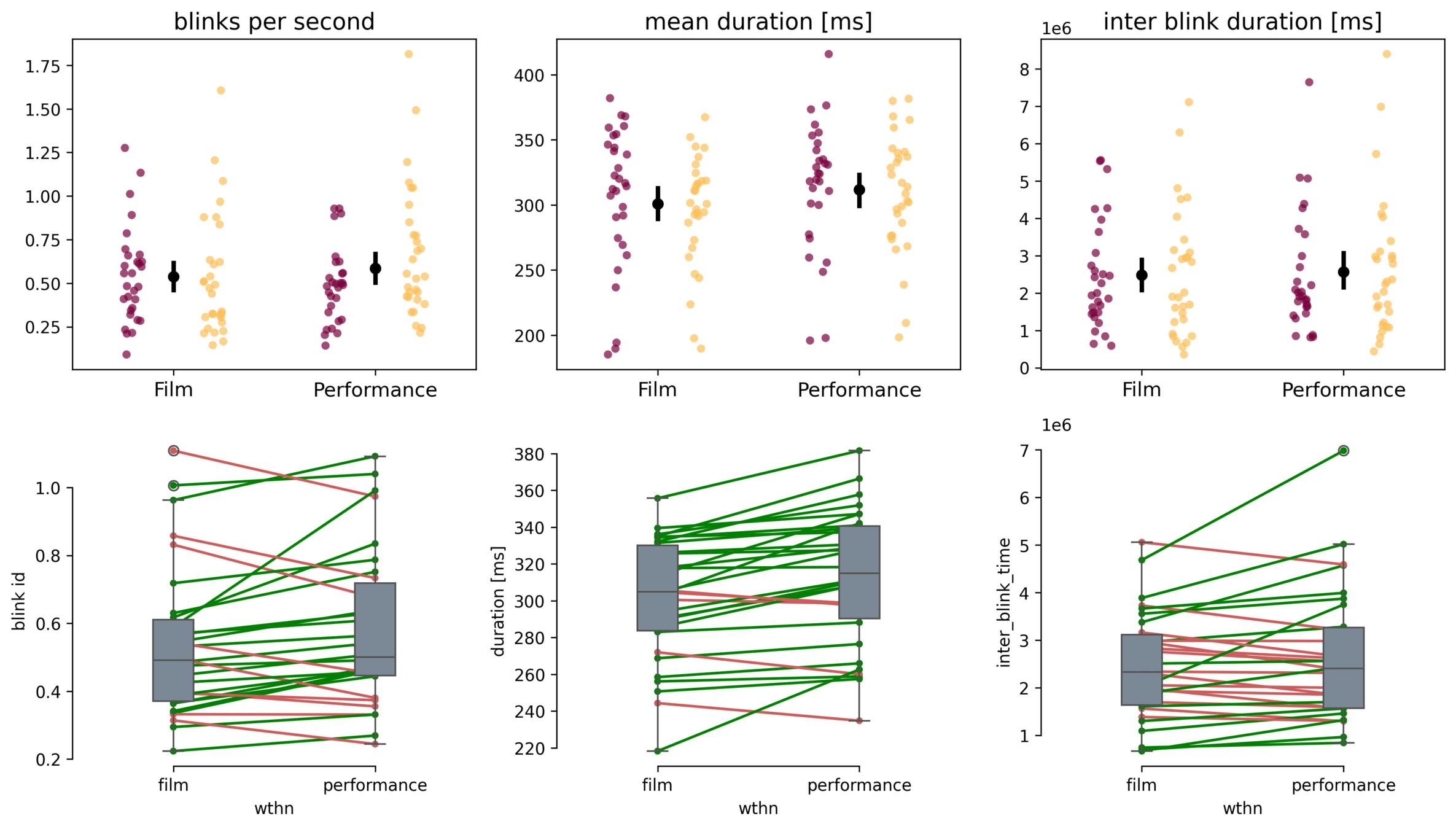

Additional measures

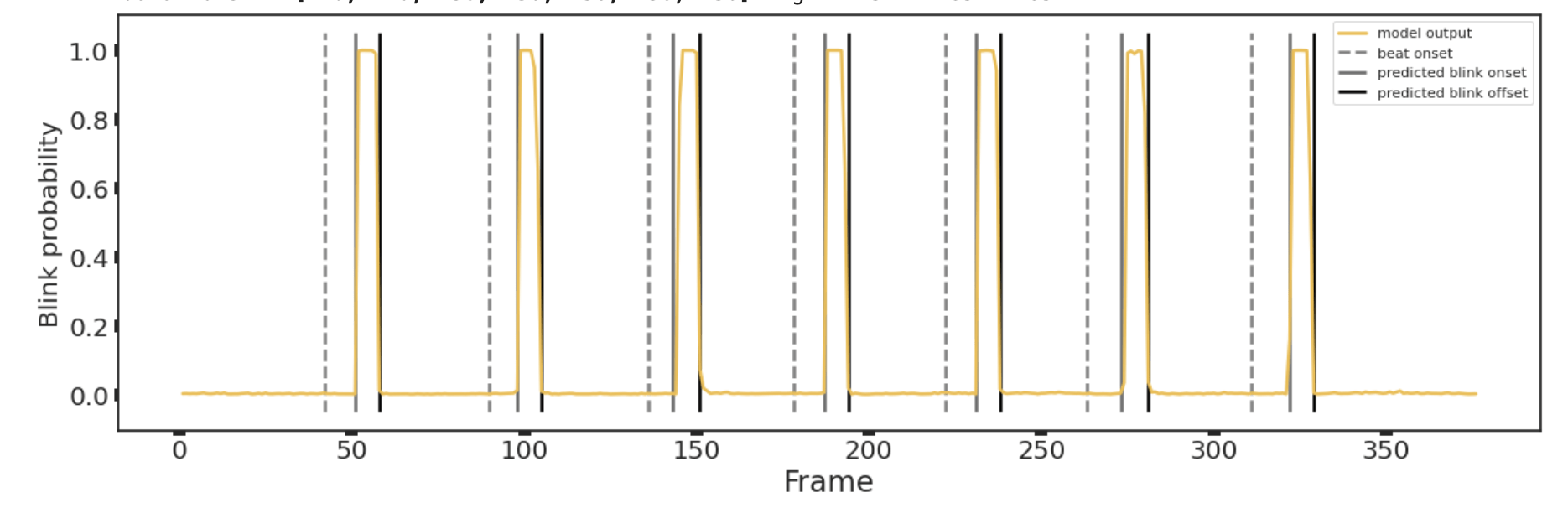

Blinks

- No significant differences in blink activity over entire durations

- However, temporal dynamics are not considered here.

Group 1

Group 2

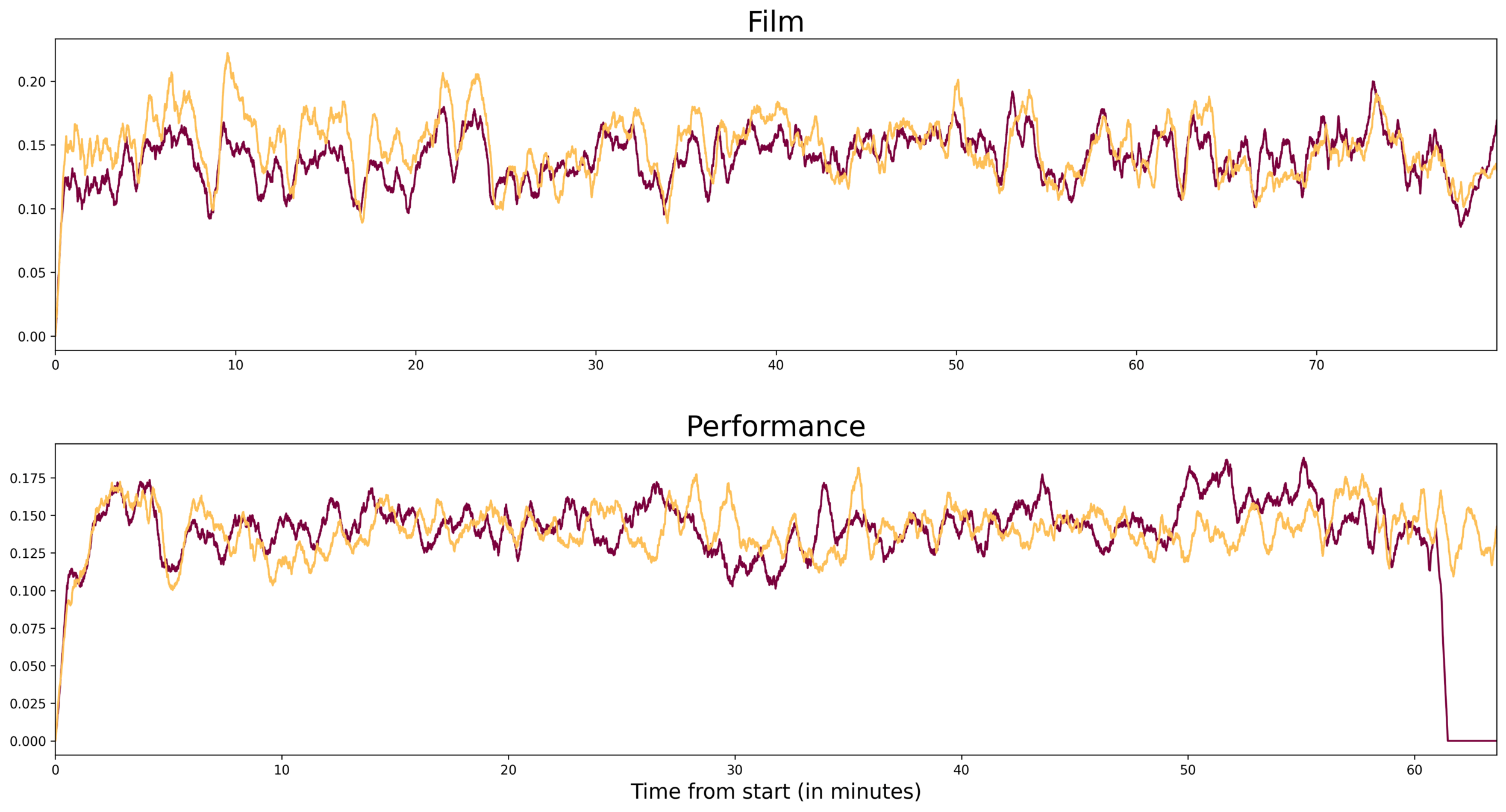

Blink Probability

- As with the temporal gaze measures, blink probability appears highly similar across both days
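One plausible definition of blink probability, used here only for illustration, is the proportion of participants who blink within each time bin. The input layout (per-participant lists of blink start and end times in seconds) and the function name are assumptions.

```python
import math


def blink_probability(blinks_by_participant, duration_s, bin_s):
    """Fraction of participants with at least one blink in each time bin.

    blinks_by_participant: one list of (start_s, end_s) intervals per participant.
    A blink counts towards every bin from the one containing its start
    to the one containing its end.
    """
    n = len(blinks_by_participant)
    if n == 0:
        raise ValueError("at least one participant is required")
    n_bins = int(math.ceil(duration_s / bin_s))
    counts = [0] * n_bins
    for intervals in blinks_by_participant:
        active = set()
        for start, end in intervals:
            first = max(0, int(start // bin_s))
            last = min(n_bins - 1, int(end // bin_s))
            active.update(range(first, last + 1))
        # Each participant contributes at most once per bin.
        for b in active:
            counts[b] += 1
    return [c / n for c in counts]
```

The resulting time series can then be compared across days or groups in the same way as the gaze-based measures.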

Group 1

Group 2

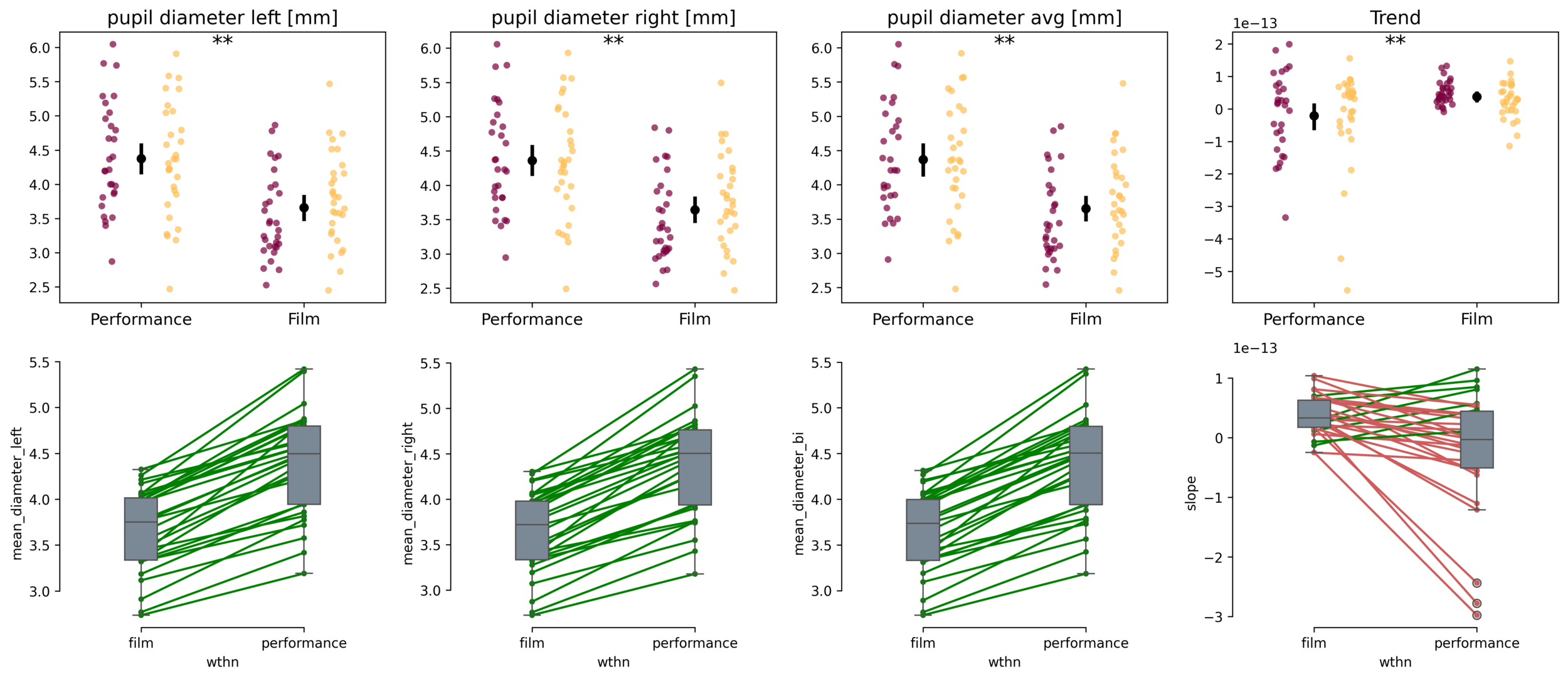

Pupil size

- Mean pupil size was lower during film viewing; however, a stronger negative trend was observed during the performance.

- Larger pupil diameter has been linked to higher mental workload, emotional arousal, and anticipation, while a decrease in size can suggest drowsiness and fatigue.

- Screen brightness is an important factor to consider.
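A trend in pupil size over a session can be summarised by the slope of a least-squares line. This is a minimal sketch of that idea with a hypothetical function name; it does not correct for luminance, which would be needed before interpreting the slope.

```python
def linear_trend(times, values):
    """Ordinary least-squares slope and intercept of values against times."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("times must contain at least two distinct values")
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t
```

A more negative slope corresponds to a stronger decrease in pupil size over the session.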

Group 1

Group 2

How do eye movements differ between online and in-person viewing?

Future Directions

- Analyse and compare the proposed eye-tracking measures from online participants

- For the in-person audience, pupil activity is recorded in addition to gaze and blinks. Temporal pupil activity has been linked to audiovisual attention in short-duration trials; the collected data can be used to study long-duration dynamics

- Evaluate the influence of auditory vs. visual stimulation on gaze, blinks, and pupil time series

Conclusions

- Tools to scale eye-tracking to realistic multi-person setups with large audiences

- Analysis methods and preliminary results from a real-world study

- Spatiotemporal differences in eye movements when viewing a pre-recorded film vs. a live event

- Comparison of in-person and online audience

- Future analysis linking eye-tracking to stimuli for audiovisual scene understanding

Thank you for your attention

saxens17@mcmaster.ca
