ShuffleNet

How do we reduce the computational complexity of CNNs without losing accuracy, so that they run well on mobile and edge devices?

Introduces two new operations:

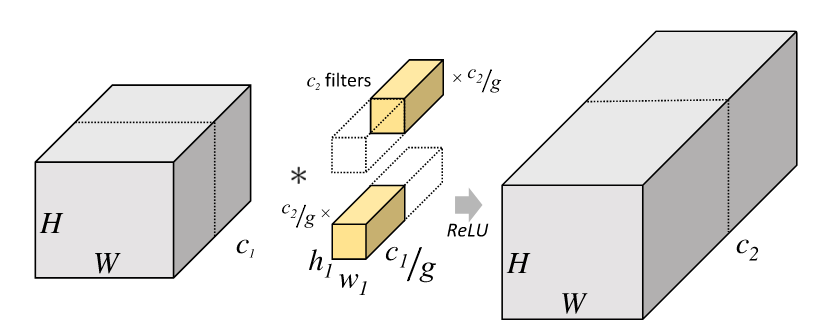

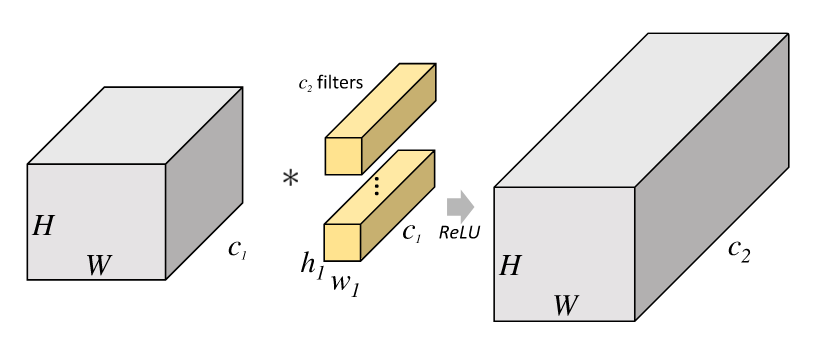

- Pointwise Group Convolutions

- Shuffle Operations

https://tinyurl.com/shufflenet

Current Solutions

- Increase depth (GoogLeNet)

- Residual Networks (ResNet)

- Depthwise separable convolutions (Xception, MobileNet)

- Group convolutions (AlexNet, ResNeXt)

- Architecture search with reinforcement learning (NASNet)

- Model compression and acceleration: pruning, quantisation, DFT/FFT-based convolution

Problem With Group Convolutions

- In ResNeXt, only the 3 × 3 layers use group convolutions. As a result, the dense pointwise (1 × 1) convolutions account for 93.4% of the multiply-adds in each residual unit (with cardinality 32)

- If multiple group convolutions are stacked, there is a side effect: the outputs of a given channel are derived from only a small fraction of the input channels

- This blocks information flow between channel groups and weakens the representation
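Both points above can be checked with a few lines of arithmetic. This is a minimal sketch, not the paper's code: it assumes the first-stage ResNeXt-50 (32×4d) bottleneck (256 input/output channels, a 128-channel bottleneck, cardinality 32), which is a configuration we chose because it reproduces the 93.4% figure. Costs are counted per output spatial position, so the h × w factor cancels. The helper names `conv_madds` and `input_groups_seen` are hypothetical.

```python
def conv_madds(c_in: int, c_out: int, k: int = 1, groups: int = 1) -> int:
    """Multiply-adds per output spatial position of a k x k (group) convolution."""
    return k * k * (c_in // groups) * c_out


# Assumed ResNeXt-50 (32x4d) unit: 256 -> 128 (1x1) -> 128 (3x3, 32 groups) -> 256 (1x1).
pw_reduce = conv_madds(256, 128)                  # dense 1x1
gconv_3x3 = conv_madds(128, 128, k=3, groups=32)  # grouped 3x3
pw_expand = conv_madds(128, 256)                  # dense 1x1

total = pw_reduce + gconv_3x3 + pw_expand
pointwise_share = (pw_reduce + pw_expand) / total
print(f"pointwise share of multiply-adds: {pointwise_share:.1%}")  # -> 93.4%


def input_groups_seen(num_layers: int, groups: int, channels: int) -> list:
    """Track which input groups each output channel depends on after stacking
    `num_layers` group convolutions that all use the same grouping (no shuffle)."""
    per_group = channels // groups
    # Before any layer, channel c carries information only from its own group.
    deps = [{c // per_group} for c in range(channels)]
    for _ in range(num_layers):
        new_deps = []
        for c in range(channels):
            g = c // per_group
            # A group conv output in group g reads only the input channels of group g.
            sources = range(g * per_group, (g + 1) * per_group)
            new_deps.append(set().union(*(deps[s] for s in sources)))
        deps = new_deps
    return deps


print(input_groups_seen(num_layers=3, groups=3, channels=9))
```

However many layers are stacked, every output channel still depends on exactly one input group, which is the information-flow problem described above.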

Solution

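- Apply group convolutions to the 1 × 1 (pointwise) layers as well, and shuffle channels between stacked group convolutions so that information can flow across groups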

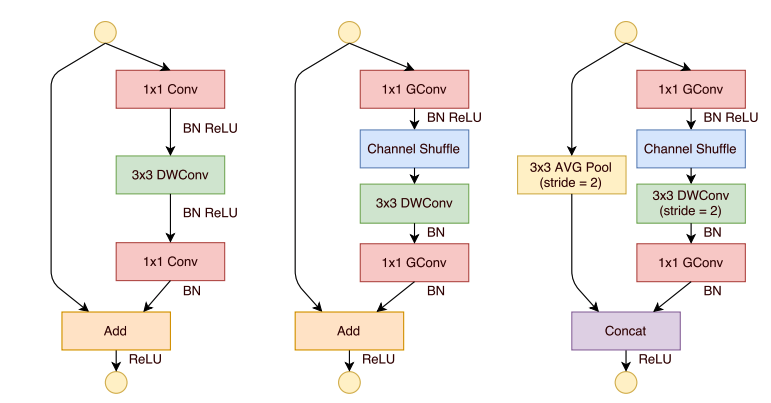

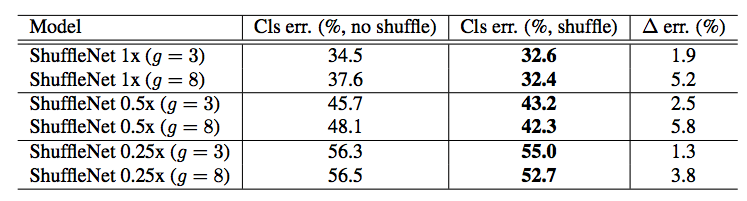

Channel Shuffle for Group Convolutions

- If a group convolution is allowed to take input data from different groups, its input and output channels become fully related

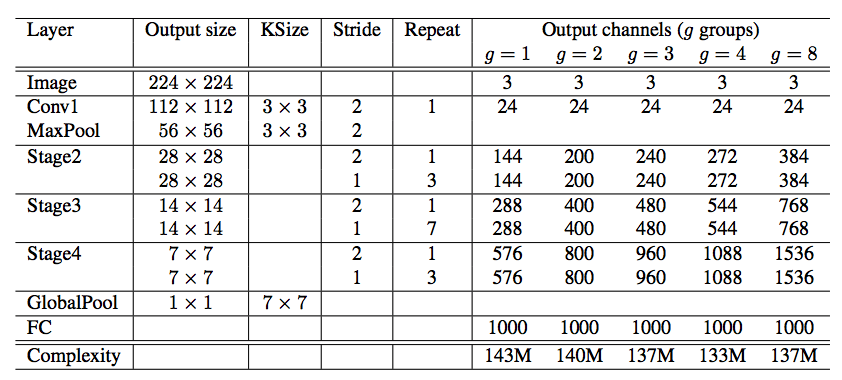
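The channel shuffle itself is a fixed permutation: view the C channels as a (groups, C / groups) grid, transpose it, and flatten it back. In tensor frameworks this is usually written as a reshape, transpose, and flatten; the minimal sketch below does the same on a plain list of channels so it runs without dependencies. The function name `channel_shuffle` is our own choice for illustration.

```python
def channel_shuffle(x: list, groups: int) -> list:
    """Channel shuffle on a 1-D list of channels.

    Equivalent to viewing the channels as a (groups, n) grid, transposing it
    to (n, groups), and flattening, where n = len(x) // groups.
    """
    c = len(x)
    if c % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    n = c // groups
    # Output position i * groups + j takes input channel j * n + i.
    return [x[j * n + i] for i in range(n) for j in range(groups)]


groups, n = 3, 3
labels = [c // n for c in range(groups * n)]  # input group of each channel: [0,0,0,1,1,1,2,2,2]
shuffled = channel_shuffle(labels, groups)
# Split the shuffled channels into `groups` contiguous groups for the next group conv.
next_groups = [shuffled[g * n:(g + 1) * n] for g in range(groups)]
print(next_groups)  # -> [[0, 1, 2], [0, 1, 2], [0, 1, 2]]
```

After the shuffle, every group fed to the next group convolution contains channels from all of the previous groups, so the stacked layers are no longer confined to a single group.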

Architecture

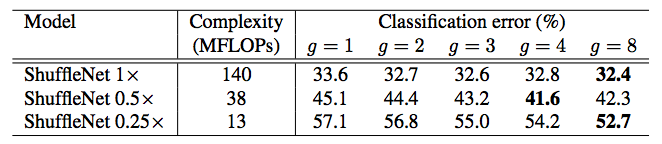
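To compare the building block in the architecture with its predecessors, the ShuffleNet paper gives the FLOPs of one bottleneck unit with input size c × h × w and m bottleneck channels as hw(2cm + 9m²) for ResNet, hw(2cm + 9m²/g) for ResNeXt, and hw(2cm/g + 9m) for ShuffleNet. The sketch below only evaluates these formulas; the example sizes (c = 240, m = 60, g = 3, a 28 × 28 feature map) are illustrative assumptions, and the function names are hypothetical.

```python
def resnet_unit_flops(c: int, m: int, h: int, w: int) -> float:
    # Dense 1x1 (c -> m), dense 3x3 (m -> m), dense 1x1 (m -> c).
    return h * w * (2 * c * m + 9 * m * m)


def resnext_unit_flops(c: int, m: int, h: int, w: int, g: int) -> float:
    # Dense 1x1 layers; the 3x3 layer is a group convolution with g groups.
    return h * w * (2 * c * m + 9 * m * m / g)


def shufflenet_unit_flops(c: int, m: int, h: int, w: int, g: int) -> float:
    # Both 1x1 layers are group convolutions with g groups; the 3x3 is depthwise.
    return h * w * (2 * c * m / g + 9 * m)


c, m, h, w, g = 240, 60, 28, 28, 3  # illustrative sizes
for name, flops in [
    ("ResNet", resnet_unit_flops(c, m, h, w)),
    ("ResNeXt", resnext_unit_flops(c, m, h, w, g)),
    ("ShuffleNet", shufflenet_unit_flops(c, m, h, w, g)),
]:
    print(f"{name:<10} {flops:>12,.0f}")
```

With the same input and bottleneck width, the ShuffleNet unit is several times cheaper, which means that under a fixed budget it can afford wider feature maps.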

Result

The scaling factor s multiplies the number of filters in every layer of ShuffleNet 1× by s, so the overall complexity is roughly s² times that of ShuffleNet 1×.
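The s² rule follows from where the cost sits. A 1 × 1 group convolution costs about h · w · (c_in / g) · c_out, and scaling the filter count by s multiplies both c_in and c_out by s. The 3 × 3 depthwise layers grow only linearly in s, which is why the overall factor is "roughly" rather than exactly s². This is a minimal sketch on a single assumed layer (240 channels, 3 groups, 28 × 28 feature map); the helper names are hypothetical.

```python
def pointwise_group_conv_madds(c_in: int, c_out: int, groups: int, h: int, w: int) -> int:
    """Multiply-adds of a 1x1 group convolution on an h x w feature map."""
    return h * w * (c_in // groups) * c_out


def depthwise_3x3_madds(c: int, h: int, w: int) -> int:
    """Multiply-adds of a 3x3 depthwise convolution (one filter per channel)."""
    return h * w * 9 * c


# Illustrative (assumed) layer: 240 channels, 3 groups, 28 x 28 feature map.
C, G, H, W = 240, 3, 28, 28
base_pw = pointwise_group_conv_madds(C, C, G, H, W)
base_dw = depthwise_3x3_madds(C, H, W)

for s in (0.5, 1.0, 2.0):
    c = int(C * s)  # "ShuffleNet s x": s times as many filters in the layer
    pw_ratio = pointwise_group_conv_madds(c, c, G, H, W) / base_pw
    dw_ratio = depthwise_3x3_madds(c, H, W) / base_dw
    print(f"s={s}: pointwise x{pw_ratio:.2f} (s^2={s * s:.2f}), depthwise x{dw_ratio:.2f}")
```

Because the pointwise group convolutions dominate the cost of a ShuffleNet unit, the whole network scales close to s².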


By Soham Chatterjee
