Spanner: Google’s Globally-Distributed Database

Fernanda Mora

Luis Román

Corbett, James C., et al. "Spanner: Google’s globally distributed database." ACM Transactions on Computer Systems (TOCS) 31.3 (2013): 8.

Content

- Introduction

- Key ideas

- Implementation

- Concurrency control

- Evaluation

- Future work & Conclusions

Introduction

Storage now has multiple requirements

- Scalability

- Responsiveness

- Durability and consistency

- Fault tolerance

- Easy to use

Previous attempts

- Relational DBMS: MySQL, MS SQL, Oracle RDB

- Rich features (ACID)

- Difficult to scale

- NoSQL: BigTable, Dynamo, Cassandra

- Highly scalable

- Limited API

Motivation

Spanner: Time will never be the same again

-Gustavo Fring

To build a transactional storage system replicated globally

What is the main idea behind Spanner?

How?

- Main enabler is introducing a global "proper" notion of time

Key ideas

- Relational data model with SQL and general purpose transactions

- External consistency: transactions can be ordered by their commit time, and commit times correspond to real world notions of time

- Paxos-based replication, with number of replicas and distance to replicas controllable

- Data partitioned across thousands of servers

Basic Diagram

Diagram: Data center 1, Data center 2, and Data center 3, each containing multiple spanservers.

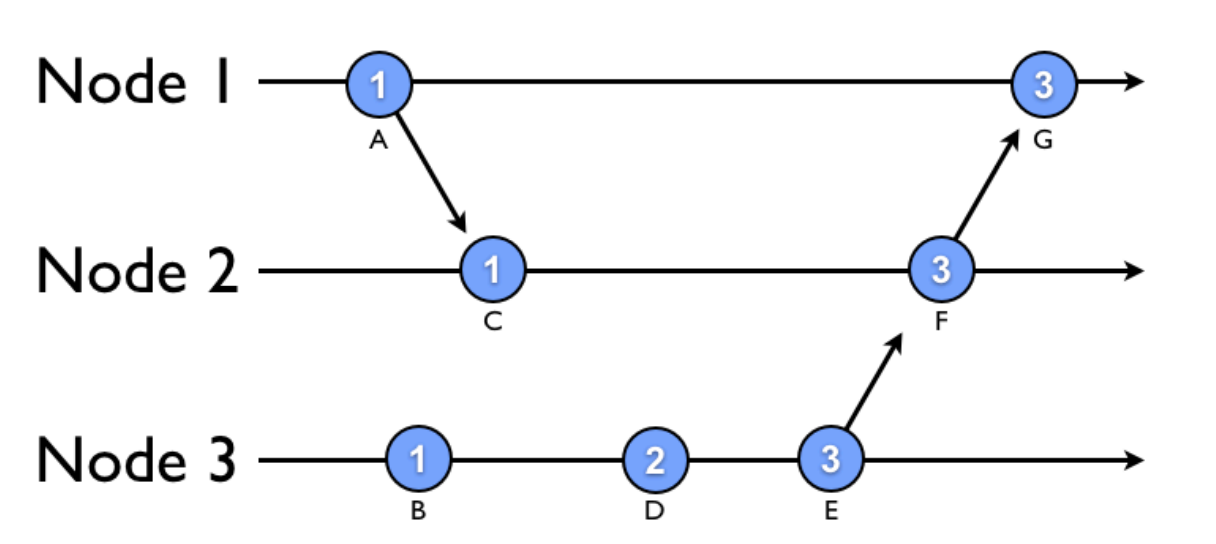

Replication

Millions of nodes, hundreds of datacenters, trillions of database rows

Implementation

Server organization

Storage data model: tablets

- Each spanserver has 100-1000 tablets

- Optimized data structure to track data by row/column and location (stored in Colossus)

- Table mapping: (key:string, timestamp:int64) -> string

- Tablet's state is tracked and stored

Storage data model
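The tablet mapping `(key:string, timestamp:int64) -> string` can be illustrated with a small in-memory multi-version map. This is only a sketch of the idea: the `Tablet` class and its `write` and `read` methods are hypothetical names, and nothing here reflects how Spanner actually stores tablets on Colossus.

```python
import bisect
from typing import Dict, List, Optional


class Tablet:
    """Toy multi-version map: (key, timestamp) -> value. Hypothetical, in-memory only."""

    def __init__(self) -> None:
        # Per key: timestamps in ascending order, with values in the same order.
        self._timestamps: Dict[str, List[int]] = {}
        self._values: Dict[str, List[str]] = {}

    def write(self, key: str, timestamp: int, value: str) -> None:
        """Store a new version of `key` at `timestamp`."""
        ts = self._timestamps.setdefault(key, [])
        vals = self._values.setdefault(key, [])
        idx = bisect.bisect_right(ts, timestamp)
        ts.insert(idx, timestamp)
        vals.insert(idx, value)

    def read(self, key: str, timestamp: int) -> Optional[str]:
        """Return the newest value written at or before `timestamp`, if any."""
        ts = self._timestamps.get(key, [])
        idx = bisect.bisect_right(ts, timestamp)
        return self._values[key][idx - 1] if idx > 0 else None
```

Keeping every version, rather than overwriting, is what lets a read at an older timestamp see a consistent snapshot.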

- Each tablet has a Paxos state machine that maintains its log and state

- Paxos has long-lived leaders

- Paxos keeps replicas consistent: writes must be initiated at the leader, while reads can be served by any replica that is sufficiently up to date
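The routing rule above (writes go through the leader, reads go to any replica that is recent enough) can be sketched as follows. This is not Paxos: there is no log, quorum, or leader election. `Replica`, `PaxosGroup`, `applied_ts`, and the `lagging` parameter are hypothetical names used only to show which replicas may serve a read at a given timestamp.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class Replica:
    name: str
    is_leader: bool = False
    applied_ts: int = 0  # timestamp of the last write this replica has applied
    data: Dict[str, str] = field(default_factory=dict)


class PaxosGroup:
    """Toy replica group; real replication and consensus are deliberately omitted."""

    def __init__(self, replicas: List[Replica]) -> None:
        leaders = [r for r in replicas if r.is_leader]
        if len(leaders) != 1:
            raise ValueError("exactly one leader is required")
        self.leader = leaders[0]
        self.replicas = replicas

    def write(self, key: str, value: str, ts: int, lagging: Iterable[str] = ()) -> None:
        """Apply a write at the leader and at every replica not listed in `lagging`."""
        behind = set(lagging)
        for r in self.replicas:
            if r is self.leader or r.name not in behind:
                r.data[key] = value
                r.applied_ts = ts

    def readable_replicas(self, read_ts: int) -> List[str]:
        """Names of replicas that have applied all writes up to `read_ts`."""
        return [r.name for r in self.replicas if r.applied_ts >= read_ts]
```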

Paxos Group

Storage data model

- Each leader has a lock table with state for two-phase locking, mapping ranges of keys to lock states

- Each leader also has a transaction manager that supports distributed transactions: one participant group is chosen as coordinator, and its leader coordinates two-phase commit among the participant groups
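A lock table that maps key ranges to lock states might look like the sketch below. The `LockTable` class, its method names, and the half-open string ranges are assumptions made for illustration; deadlock handling and lock upgrades are left out.

```python
from typing import List, Tuple


class LockTable:
    """Toy lock table for two-phase locking over half-open key ranges [start, end)."""

    def __init__(self) -> None:
        # Each entry is (start_key, end_key, mode, txn_id).
        self._locks: List[Tuple[str, str, str, str]] = []

    def acquire(self, txn: str, start: str, end: str, mode: str) -> bool:
        """Try to lock [start, end) in 'shared' or 'exclusive' mode for `txn`."""
        if mode not in ("shared", "exclusive"):
            raise ValueError("mode must be 'shared' or 'exclusive'")
        for s, e, m, owner in self._locks:
            overlaps = start < e and s < end
            conflicts = mode == "exclusive" or m == "exclusive"
            if overlaps and conflicts and owner != txn:
                return False  # another transaction holds a conflicting lock
        self._locks.append((start, end, mode, txn))
        return True

    def release_all(self, txn: str) -> None:
        """Shrinking phase: drop every lock held by `txn`, typically at commit."""
        self._locks = [lock for lock in self._locks if lock[3] != txn]
```

In two-phase locking a transaction only acquires locks until it commits, then releases them all at once, which is why the sketch offers `release_all` rather than a per-range release.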

Data movement

- Directories: the smallest units of data placement (sets of contiguous keys that share a common prefix)

- Data that is similar or accessed together is placed close together

So how does Spanner distribute data?

External consistency

- All writes in a transaction are globally ordered by timestamp

- If a transaction T2 starts after a transaction T1 commits, then T2's commit timestamp must be greater than T1's

- We need synchronized clocks to determine the most recent write to each object
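The external consistency condition can be written as a small checker over a recorded history. The `Txn` record and the `violations` function are hypothetical; the sketch assumes we somehow know the absolute start and commit times, which a real system does not.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Txn:
    name: str
    start: float      # absolute time at which the transaction started
    commit: float     # absolute time at which the commit completed
    timestamp: float  # commit timestamp assigned by the system


def violations(txns: List[Txn]) -> List[Tuple[str, str]]:
    """Pairs (T1, T2) where T2 started after T1 committed but did not get a larger timestamp."""
    return [
        (a.name, b.name)
        for a in txns
        for b in txns
        if b.start > a.commit and not b.timestamp > a.timestamp
    ]
```

An empty result means the assigned timestamps respect the real-time order of the non-overlapping transactions in that history.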

More difficulties!

- Synchronization algorithms

- Implementation

- Practical use

- Global scale

First option: Lamport timestamps

- But timestamps alone can't tell us whether two events are concurrent or causally ordered!
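A minimal Lamport clock shows the limitation. The `LamportClock` class and its method names are illustrative choices, not taken from the slides.

```python
class LamportClock:
    """Logical clock: a counter that advances on local events and on message receipt."""

    def __init__(self) -> None:
        self.time = 0

    def tick(self) -> int:
        """Record a local event."""
        self.time += 1
        return self.time

    def send(self) -> int:
        """Sending is an event; the returned timestamp travels with the message."""
        return self.tick()

    def receive(self, msg_time: int) -> int:
        """Jump past the sender's timestamp so the receive is ordered after the send."""
        self.time = max(self.time, msg_time) + 1
        return self.time


a, b = LamportClock(), LamportClock()
e_a = a.tick()    # event on node A
e_b1 = b.tick()   # independent event on node B
e_b2 = b.tick()   # second independent event on node B
msg = a.send()    # A sends a message to B
e_recv = b.receive(msg)
```

Here `e_a < e_b2` even though the two events never communicated, so a smaller timestamp does not imply that one event happened before the other.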

Another option: Vector clocks

- Only a partial order: what about concurrent events?!
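The partial order can be made concrete with a small vector clock sketch. The function names and the dict-based representation are assumptions for illustration; missing entries are treated as zero.

```python
from typing import Dict

VectorClock = Dict[str, int]


def increment(clock: VectorClock, node: str) -> VectorClock:
    """Return a copy of `clock` with `node`'s counter advanced by one."""
    new = dict(clock)
    new[node] = new.get(node, 0) + 1
    return new


def merge(a: VectorClock, b: VectorClock) -> VectorClock:
    """Element-wise maximum, applied when a message is received."""
    return {n: max(a.get(n, 0), b.get(n, 0)) for n in set(a) | set(b)}


def happened_before(a: VectorClock, b: VectorClock) -> bool:
    """True if a <= b on every node and a < b on at least one node."""
    nodes = set(a) | set(b)
    return (all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
            and any(a.get(n, 0) < b.get(n, 0) for n in nodes))


def concurrent(a: VectorClock, b: VectorClock) -> bool:
    """True if the clocks differ and neither happened before the other."""
    differ = any(a.get(n, 0) != b.get(n, 0) for n in set(a) | set(b))
    return differ and not happened_before(a, b) and not happened_before(b, a)
```

Vector clocks can detect that two events are concurrent, but they give no rule for ordering them, which is the gap the slide points at.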

How do we ensure that all nodes have consistent clock values?

- Use time synchronization (GPS + atomic clocks on some nodes)

- Plus network time synchronization protocols (where nodes exchange times with each other and adjust their clocks accordingly)

Can't there still be small differences between clocks on nodes?

- Yes: the TrueTime API estimates the uncertainty of a node's clock. TT.now() returns an interval [earliest, latest] that is guaranteed to contain the absolute time
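As a rough sketch of the interval idea, the class below wraps a local clock with a fixed error bound. The method names `now`, `after`, and `before` follow the TrueTime API described in the Spanner paper; the constant `epsilon` and the injectable `clock` parameter are simplifying assumptions, since the real bound varies over time.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class TTInterval:
    earliest: float
    latest: float


class TrueTime:
    """Toy TrueTime: local clock reading plus or minus an assumed error bound."""

    def __init__(self, epsilon: float, clock: Callable[[], float] = time.time) -> None:
        self.epsilon = epsilon  # assumed bound on clock error, in seconds
        self._clock = clock

    def now(self) -> TTInterval:
        """Interval assumed to contain the absolute time."""
        t = self._clock()
        return TTInterval(t - self.epsilon, t + self.epsilon)

    def after(self, t: float) -> bool:
        """True only if `t` has definitely passed."""
        return self.now().earliest > t

    def before(self, t: float) -> bool:
        """True only if `t` has definitely not arrived yet."""
        return self.now().latest < t
```

For example, `TrueTime(epsilon=0.005).now()` yields an interval 10 ms wide around the local reading; `after` and `before` both return False for any time inside that interval.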

How does this function?

- Suppose T1 commits at time t1 on N1, and T2 commits at time t2 on N2. We want to ensure that T1 is assigned a commit time that is before T2's. How do we do this?

- We could do it trivially by ensuring that T2 doesn't commit until after t1.latest, i.e., that t2.earliest > t1.latest. But then N1 and N2 would have to know about all of each other's transactions.

- Instead, if T1 simply holds its locks until t1.latest has definitely passed (commit wait), then T1 is guaranteed to commit before T2.
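The lock-holding rule can be simulated with a fake clock. This sketch assumes a single shared notion of true time, the same fixed uncertainty `epsilon` on every node, and a polling loop; `FakeClock`, `tt_now`, and `commit_with_wait` are hypothetical names. It follows the commit-wait rule from the Spanner paper: choose the commit timestamp as the latest bound, then wait until the earliest bound has passed it.

```python
from typing import Tuple


class FakeClock:
    """Deterministic stand-in for true time; sleeping just advances the counter."""

    def __init__(self, start: float = 0.0) -> None:
        self.t = start

    def now(self) -> float:
        return self.t

    def sleep(self, dt: float) -> None:
        self.t += dt


def tt_now(clock: FakeClock, epsilon: float) -> Tuple[float, float]:
    """Return (earliest, latest) around the current clock reading."""
    t = clock.now()
    return (t - epsilon, t + epsilon)


def commit_with_wait(clock: FakeClock, epsilon: float, step: float = 0.001) -> float:
    """Pick a commit timestamp, then hold locks until it has definitely passed."""
    _, s = tt_now(clock, epsilon)           # commit timestamp s = latest bound
    while not tt_now(clock, epsilon)[0] > s:
        clock.sleep(step)                   # locks are still held during this wait
    return s                                # locks would be released at this point
```

With these assumptions the wait lasts roughly twice `epsilon`, and any transaction that starts after the locks are released picks a strictly larger timestamp without the two nodes exchanging any information.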

How is TrueTime implemented?

- Set of time master machines per data center

- Timeslave daemon per machine

GPS receivers

Atomic clocks
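Between polls of the time masters, a daemon's uncertainty has to grow to cover possible local clock drift. The sketch below uses figures reported in the Spanner paper (an applied drift rate of 200 microseconds per second, a 30-second poll interval, and about 1 ms of uncertainty right after a poll); the function name and the linear model are simplifying assumptions.

```python
def epsilon_ms(seconds_since_sync: float,
               base_ms: float = 1.0,
               drift_us_per_s: float = 200.0) -> float:
    """Assumed uncertainty bound in milliseconds, growing linearly since the last poll."""
    if seconds_since_sync < 0:
        raise ValueError("seconds_since_sync must be non-negative")
    return base_ms + drift_us_per_s * seconds_since_sync / 1000.0


# Uncertainty right after a poll and just before the next one, 30 s later.
just_synced = epsilon_ms(0.0)
before_next_poll = epsilon_ms(30.0)
```

Under this model the bound follows a sawtooth between about 1 ms and 7 ms, which is the shape the paper describes.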

Concurrency control


Evaluation

Microbenchmarks

Distribution of TrueTime values, sampled right after timeslave daemon polls the time masters. 90th, 99th and 99.9th percentiles

Scalability

2PC scalability. Mean and standard deviation over 10 runs

Availability

Effect of killing servers on throughput

TrueTime

Distribution of TrueTime values (percentiles), sampled right after timeslave daemon polls the time masters.

Future Work & Conclusions

Future work

- Doing reads in parallel: non-trivial

- Support direct changes to Paxos configurations

- Reduce TrueTime uncertainty to below 1 ms

- Improve poor single-node performance

- Automatically move client-application processes between datacenters

Summary

- Replica consistency: using Paxos

- Concurrency control: using two-phase locking

- Transaction coordinator: using 2PC

- Timestamps for transactions and data items

Global scale database with strict transactional guarantees

Conclusions

Easy-to-use

Semi-relational interface

SQL-based query language

Scalability

Automatic sharding

Fault tolerance

Consistent replication

External consistency

Wide-area distribution
