Machines Reading Maps

Machines Reading Maps Summit April 20-21, 2023

- Invite-only Stakeholder Introduction to MRM

- Public Introduction to MRM

- Invite-only Community-building discussion

- MRM/Recogito Workshop

Who

Machines Reading Maps (MRM) is a collaborative project of

- University of Southern California Digital Library

- Computer Science & Engineering Department at the University of Minnesota (US)

- The Alan Turing Institute (UK)

- The David Rumsey Map Collection (davidrumsey.com)

The project is funded by the

- United States’ National Endowment for the Humanities (NEH)

- United Kingdom’s Arts and Humanities Research Council (AHRC) under the first round of NEH/AHRC New Directions for Digital Scholarship.

- David & Abby Rumsey

What is the goal?

The goal of MRM is to make the content of scanned historical maps easily searchable, to support complex queries, and to create a generalizable machine learning (ML) pipeline that uses human collaboration to:

- Process printed text on scanned maps

- Enrich the printed text

- Convert the printed text to structured data

Metadata search is insufficient

Why let Machines Read Maps?

There are millions of scanned maps available, publicly, now.

The infrastructure that those maps are served from is well suited to this work

Modern data is insufficient

Existing spatial data sources only contain information about the present (modern placenames), but even those are incomplete...

Source: GNS, National Geospatial-Intelligence Agency

How does MRM work?

Image Cropping

Text Spotting

- MRM uses TExt Spotting TRansformers (TESTR), a generic end-to-end text spotting framework that uses Transformers for text detection and recognition in the wild.

- TESTR is particularly effective on curved text boxes, where traditional bounding-box representations need special care to adapt.

- Unlike OCR, which is adept at extracting separate words, the goal here is to extract FULL LOCATION PHRASES.

Human Annotations

SynMap+ Training Data

- Use a generative adversarial style transfer network (CycleGAN) to convert an OpenStreetMap (OSM) image to the historical style

- Associate the font, style and placement strategy with the underlying geographical features

- Use "rule-based" labeling from the QGIS PAL API to place the text labels on the synthetic map background

- Automatically generate the polygon, centerline and local height annotations for the text labels
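
To make the last annotation step concrete, here is a minimal sketch of how a centerline and local heights could be derived from a label polygon. It is not the SynMap+ implementation. It assumes (hypothetically) that each polygon lists its top boundary points left to right, followed by the same number of bottom boundary points right to left; the function name `centerline_and_heights` is made up for this example.

```python
import math


def centerline_and_heights(polygon):
    """Derive centerline points and local heights from a text-label polygon.

    Assumption (not from the source): `polygon` is a list of (x, y) tuples,
    top boundary left-to-right, then bottom boundary right-to-left, with the
    same number of points on each boundary.
    """
    n = len(polygon)
    if n < 4 or n % 2 != 0:
        raise ValueError("expected an even number of points, at least 4")

    half = n // 2
    top = polygon[:half]
    # Reverse the bottom boundary so bottom[i] lies under top[i].
    bottom = polygon[half:][::-1]

    # Centerline: midpoint of each top/bottom pair.
    centerline = [((tx + bx) / 2, (ty + by) / 2)
                  for (tx, ty), (bx, by) in zip(top, bottom)]
    # Local height: distance between each top/bottom pair.
    heights = [math.hypot(tx - bx, ty - by)
               for (tx, ty), (bx, by) in zip(top, bottom)]
    return centerline, heights


# A straight 10 x 4 label box as the simplest possible case.
line, heights = centerline_and_heights([(0, 0), (10, 0), (10, 4), (0, 4)])
```

For curved labels, the same pairing applies with more points per boundary, so the centerline follows the curve of the text.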

SynthText Training Data

PatchTextSpotter & PatchtoMapMerging
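
As a rough illustration of the patch idea, the sketch below tiles a large map image into overlapping patches and shifts a polygon detected in one patch back into full-map pixel coordinates. The patch size, overlap, and function names are assumptions for this example, not MRM's actual settings, and merging duplicate detections from overlapping patches is left out.

```python
def patch_origins(width, height, patch=1000, overlap=200):
    """Return the top-left (x, y) origin of each patch covering the image.

    `patch` and `overlap` are illustrative values in pixels (assumptions).
    """
    step = patch - overlap
    xs = list(range(0, max(width - patch, 0) + 1, step))
    ys = list(range(0, max(height - patch, 0) + 1, step))
    # Add a final patch flush with the edge if the regular grid falls short.
    if xs[-1] + patch < width:
        xs.append(width - patch)
    if ys[-1] + patch < height:
        ys.append(height - patch)
    return [(x, y) for y in ys for x in xs]


def to_map_coords(polygon, origin):
    """Shift patch-local polygon points into full-map pixel coordinates."""
    ox, oy = origin
    return [(x + ox, y + oy) for x, y in polygon]


origins = patch_origins(2000, 1000)
shifted = to_map_coords([(5, 5), (10, 5)], origins[1])
```

The overlap exists so that a label cut by one patch boundary appears whole in a neighboring patch.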

Coordinate Converter
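
One common way to convert pixel positions to geographic coordinates is a six-parameter affine transform, sketched below. This assumes the map is already georeferenced and that such a transform is available; the parameter order and the name `pixel_to_geo` are choices made for this example, not a description of how MRM's converter works.

```python
def pixel_to_geo(col, row, transform):
    """Map a pixel (col, row) to map coordinates with an affine transform.

    `transform` is (a, b, c, d, e, f), an ordering assumed for this sketch:
        x = a * col + b * row + c
        y = d * col + e * row + f
    """
    a, b, c, d, e, f = transform
    x = a * col + b * row + c
    y = d * col + e * row + f
    return x, y


# Hypothetical transform: 0.5 map units per pixel, y decreasing downward.
example_transform = (0.5, 0.0, 10.0, 0.0, -0.5, 20.0)
x, y = pixel_to_geo(4, 6, example_transform)
```

Applying the function to every vertex of a detected text polygon yields that label's footprint in map coordinates.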

PostOCR & Entity Linker
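
The sketch below shows one simple way a misread label could be corrected and linked to a gazetteer entry, using fuzzy string matching from Python's standard library. It stands in for the idea only: the gazetteer contents, the identifiers, and the `link_label` function are hypothetical, and MRM's own post-OCR and linking methods may differ.

```python
import difflib

# Hypothetical gazetteer: place name -> placeholder identifier.
GAZETTEER = {
    "London": "example:1",
    "Paris": "example:2",
    "Minneapolis": "example:3",
}


def link_label(text, gazetteer=GAZETTEER, cutoff=0.6):
    """Return (name, identifier) for the closest gazetteer name, or None.

    Matching is case-insensitive; `cutoff` is the minimum similarity ratio
    accepted by difflib.get_close_matches.
    """
    by_lower = {name.lower(): name for name in gazetteer}
    matches = difflib.get_close_matches(
        text.lower(), list(by_lower), n=1, cutoff=cutoff
    )
    if not matches:
        return None
    name = by_lower[matches[0]]
    return name, gazetteer[name]


result = link_label("Lundon")
```

A production linker would also weigh the label's location on the map, since many places share a name.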

"This is unreasonably cool, and so visually pleasing, it nearly tickles. It also feels like I’m looking at one of those moments where everything changes."

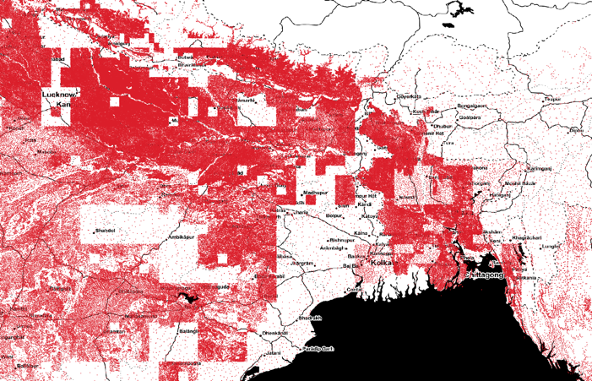

The Data

Next Steps: Build Community

More Info:

Machines Reading Maps

By Stace Maples
