Decision Tree Classifiers

Suchin Gururangan

Today

• Decision Tree Construction

• Decision Tree Prediction

• Advantages and Issues

Yesterday

• Bias-Variance Tradeoff

A brief history

- CART (Classification and Regression Trees) was developed by Breiman, Friedman, Olshen, and Stone in the early 1980s.
- It introduced tree-based modeling into the statistical mainstream.
- Many variants:
  - CART: the classic; scikit-learn uses an optimized version of it
  - CHAID
  - C5.0
  - ID3

Will Jane ride her bike to work today?

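| Day | Raining? | Temp | Humidity | Rode bike (label) |
|---|---|---|---|---|
| D1 | Yes | 67 | Low | Yes |
| D2 | No | 61 | Med | Yes |
| D3 | Yes | 54 | High | No |
| D4 | No | 84 | High | Yes |
| D5 | Yes | 46 | Low | No |
| D6 | No | 75 | Med | Yes |
| D7 | Yes | 65 | High | No |
| D8 | Yes | 79 | High | Yes |
| Today | Yes | 65 | Low | ? |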

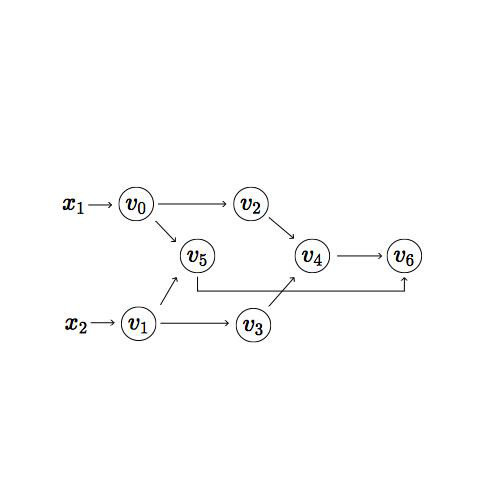

High level approach

Training:

- Pick a feature and use it to split the training samples into subsets
- Measure the purity of each subset
- If a subset is pure (or we stop splitting it), make it a leaf labeled with its majority class
- Otherwise, repeat the process on that subset

Prediction:

1. Use the new sample's feature values to find which subset (leaf) it falls into
2. Assign the new sample that leaf's majority class
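The training and prediction steps above can be sketched in Python. This is a minimal illustration, not the CART implementation: it assumes categorical features only (so Temp is left out), splits multiway on every value of the chosen feature, and scores candidate splits by sample-weighted Gini impurity. `build_tree`, `predict`, and the `(feature, children, fallback)` node layout are hypothetical names.

```python
from collections import Counter


def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def majority(labels):
    """Most common label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]


def partition(rows, labels, feature):
    """Group rows and their labels by the value of one categorical feature."""
    groups = {}
    for row, y in zip(rows, labels):
        rs, ys = groups.setdefault(row[feature], ([], []))
        rs.append(row)
        ys.append(y)
    return groups


def build_tree(rows, labels, features):
    """Recursive partitioning. A leaf is a label; an internal node is
    (feature, {value: subtree}, fallback_label)."""
    # Stop when the subset is pure or no features remain: leaf = majority class.
    if len(set(labels)) == 1 or not features:
        return majority(labels)

    def weighted_gini(f):
        groups = partition(rows, labels, f)
        return sum(len(ys) / len(labels) * gini(ys) for _, ys in groups.values())

    best = min(features, key=weighted_gini)  # the purest split wins
    remaining = [f for f in features if f != best]
    children = {value: build_tree(rs, ys, remaining)
                for value, (rs, ys) in partition(rows, labels, best).items()}
    return (best, children, majority(labels))


def predict(tree, row):
    """Follow the sample's feature values down to a leaf."""
    while isinstance(tree, tuple):
        feature, children, fallback = tree
        # A value never seen during training falls back to the node's majority class.
        tree = children.get(row[feature], fallback)
    return tree


# Usage on the categorical columns of Jane's table (D1..D8)
rows = [{"Raining?": r, "Humidity": h} for r, h in [
    ("Yes", "Low"), ("No", "Med"), ("Yes", "High"), ("No", "High"),
    ("Yes", "Low"), ("No", "Med"), ("Yes", "High"), ("Yes", "High")]]
labels = ["Yes", "Yes", "No", "Yes", "No", "Yes", "No", "Yes"]
tree = build_tree(rows, labels, ["Raining?", "Humidity"])
print(tree)
```

On these two columns the sketch picks Raining? for the root, because its weighted Gini impurity (0.30) is lower than Humidity's (0.375).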

Recursive Partitioning

- Raining? = Yes: 2 Yes / 3 No
  - Temp < 60: 0 Yes / 2 No
  - Temp > 60: 2 Yes / 1 No
    - Humidity High: 1 Yes / 1 No
    - Humidity Low: 1 Yes / 0 No
- Raining? = No: 3 Yes / 0 No
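Each count in this tree comes from filtering the training table one test at a time: a node's subset is its parent's subset restricted by one more condition. A short Python sketch that rebuilds the counts; the tuple layout and the names `DATA` and `counts` are our own.

```python
from collections import Counter

# Jane's training table: (day, raining, temp, humidity, rode_bike)
DATA = [
    ("D1", "Yes", 67, "Low", "Yes"),
    ("D2", "No", 61, "Med", "Yes"),
    ("D3", "Yes", 54, "High", "No"),
    ("D4", "No", 84, "High", "Yes"),
    ("D5", "Yes", 46, "Low", "No"),
    ("D6", "No", 75, "Med", "Yes"),
    ("D7", "Yes", 65, "High", "No"),
    ("D8", "Yes", 79, "High", "Yes"),
]


def counts(rows):
    """Format the label counts of a subset as 'k Yes / m No'."""
    c = Counter(row[4] for row in rows)
    return f"{c['Yes']} Yes / {c['No']} No"


# Each node's subset is its parent's subset filtered by one more test.
rain_yes = [r for r in DATA if r[1] == "Yes"]
rain_no = [r for r in DATA if r[1] == "No"]
cold = [r for r in rain_yes if r[2] < 60]
warm = [r for r in rain_yes if r[2] > 60]
warm_high = [r for r in warm if r[3] == "High"]
warm_low = [r for r in warm if r[3] == "Low"]

for name, subset in [("Raining? = Yes", rain_yes), ("  Temp < 60", cold),
                     ("  Temp > 60", warm), ("    Humidity High", warm_high),
                     ("    Humidity Low", warm_low), ("Raining? = No", rain_no)]:
    print(f"{name}: {counts(subset)}")
```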

Prediction

| Day | Raining? | Temp | Humidity | Rode bike |
|---|---|---|---|---|
| Today | Yes | 65 | Low | ? |

Path through the tree: Raining? = Yes → Temp > 60 → Humidity Low → leaf with 1 Yes / 0 No, so the prediction is Yes.
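Prediction walks from the root to a leaf, making one test per level. The sketch below hard-codes the tree above as nested `if` statements; `route` and `majority` are hypothetical helper names, and treating the humidity test as "High" vs. anything else is an assumption, since the tree only shows High and Low branches.

```python
def route(raining, temp, humidity):
    """Walk the tree from the root for one sample.

    Returns (number_of_tests_made, (yes_count, no_count) at the leaf reached).
    """
    tests = 1                      # test 1: Raining?
    if raining == "No":
        return tests, (3, 0)
    tests += 1                     # test 2: Temp < 60? (exactly 60 goes to the Temp > 60 side here)
    if temp < 60:
        return tests, (0, 2)
    tests += 1                     # test 3: Humidity High? (anything else takes the Low branch)
    if humidity == "High":
        return tests, (1, 1)
    return tests, (1, 0)


def majority(yes, no):
    """Leaf prediction: the majority label, or 'tie' when the counts are equal."""
    if yes == no:
        return "tie"
    return "Yes" if yes > no else "No"


tests, (yes, no) = route("Yes", 65, "Low")   # Today's sample
print(tests, majority(yes, no))              # 3 Yes
```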

Question: What's the complexity of prediction on a binary decision tree?

How do we decide what attribute to split on?

- Split on Raining?: Yes → 2 Yes / 3 No; No → 3 Yes / 0 No
- Split on Humidity: High → 2 Yes / 2 No; Med → 2 Yes / 0 No; Low → 1 Yes / 1 No

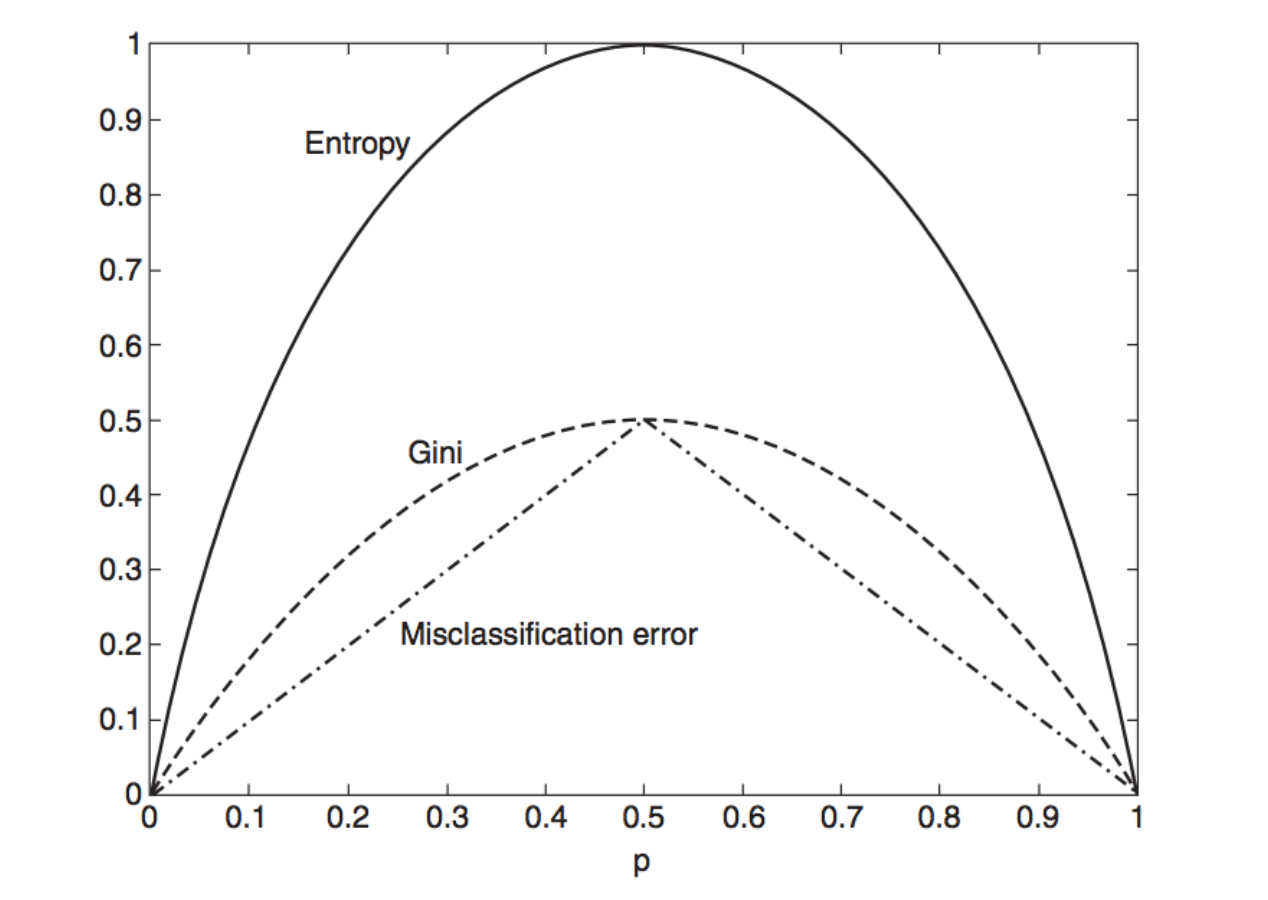

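By the purity (homogeneity) of the subsets the split produces; in practice we pick the split that minimizes an impurity measure.

Purity is a measure of confidence in the classification: the purer a leaf, the more confident its prediction.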

Gini Impurity

Cross-Entropy Impurity

Misclassification Impurity
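For reference, the standard definitions of these three measures for a node whose samples fall into classes $k$ with proportions $p_k$ are below (the notation is ours, not the deck's), followed by a worked example on the Raining? = Yes subset (2 Yes / 3 No). All three are 0 for a pure node.

```latex
\begin{aligned}
\text{Gini:}\quad & I_G = 1 - \sum_k p_k^2 \\
\text{Cross-entropy:}\quad & I_H = -\sum_k p_k \log p_k \\
\text{Misclassification:}\quad & I_M = 1 - \max_k p_k \\[4pt]
\text{Raining? = Yes } \left(p_{\text{Yes}} = \tfrac{2}{5},\ p_{\text{No}} = \tfrac{3}{5}\right):\quad
  & I_G = 1 - \left(\tfrac{2}{5}\right)^2 - \left(\tfrac{3}{5}\right)^2 = \tfrac{12}{25} = 0.48 \\
  & I_H = -\tfrac{2}{5}\log_2\tfrac{2}{5} - \tfrac{3}{5}\log_2\tfrac{3}{5} \approx 0.971 \text{ bits} \\
  & I_M = 1 - \tfrac{3}{5} = 0.4
\end{aligned}
```

The log base only rescales $I_H$; the exercise totals later in the deck are consistent with base-10 logarithms.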

Exercise

| A | B | Label |
|---|---|---|
| T | F | Yes |
| T | T | Yes |
| T | T | Yes |
| T | F | No |
| T | T | Yes |
| F | F | No |
| F | F | No |
| F | F | No |
| T | T | No |
| T | F | No |

Calculate the Gini, cross-entropy, and misclassification impurities of splitting on A and of splitting on B. Which feature is better to split on?

Split on A

- A = T: 4 Yes / 3 No
- A = F: 0 Yes / 3 No

| | A = T | A = F |
|---|---|---|
| Gini | | |
| Cross Entropy | | |
| Misclassification | | |

Split on B

- B = T: 3 Yes / 1 No
- B = F: 1 Yes / 5 No

| | B = T | B = F |
|---|---|---|
| Gini | | |
| Cross Entropy | | |
| Misclassification | | |

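Total Impurities (sum of the two child impurities; cross-entropy computed with base-10 logarithms)

| | A | B |
|---|---|---|
| Gini | 0.49 | 0.65 |
| Cross Entropy | 0.30 | 0.44 |
| Misclassification | 0.43 | 0.42 |

Split on feature A with Gini or cross-entropy impurity.

With misclassification impurity the totals are nearly tied (A 0.43, B 0.42): B wins only marginally, so this measure barely distinguishes the two features.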
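The exercise totals can be recomputed in a few lines of Python. This sketch follows the deck's convention of summing child impurities unweighted; the `weighted` flag (our addition) instead weights each child by its share of the samples, as CART-style implementations do. All function names are hypothetical.

```python
import math
from collections import Counter

# The exercise table as (A, B, Label) rows
ROWS = [
    ("T", "F", "Yes"), ("T", "T", "Yes"), ("T", "T", "Yes"), ("T", "F", "No"),
    ("T", "T", "Yes"), ("F", "F", "No"), ("F", "F", "No"), ("F", "F", "No"),
    ("T", "T", "No"), ("T", "F", "No"),
]


def proportions(labels):
    """Class proportions p_k within one child node."""
    n = len(labels)
    return [c / n for c in Counter(labels).values()]


def gini(labels):
    return 1 - sum(p * p for p in proportions(labels))


def cross_entropy(labels, base=10):
    # Base 10 reproduces the deck's cross-entropy totals; base 2 (bits) is also common.
    return -sum(p * math.log(p, base) for p in proportions(labels))


def misclassification(labels):
    return 1 - max(proportions(labels))


def split_impurity(feature, impurity, weighted=False):
    """Total impurity of the children produced by splitting ROWS on column `feature`.

    weighted=False: plain sum of child impurities (the deck's convention).
    weighted=True: each child weighted by its share of the samples (CART-style).
    """
    children = {}
    for row in ROWS:
        children.setdefault(row[feature], []).append(row[2])
    return sum((len(ys) / len(ROWS) if weighted else 1.0) * impurity(ys)
               for ys in children.values())


for name, fn in [("Gini", gini), ("Cross Entropy", cross_entropy),
                 ("Misclassification", misclassification)]:
    a, b = split_impurity(0, fn), split_impurity(1, fn)
    print(f"{name:<17} A={a:.2f}  B={b:.2f}")
```

It prints A / B totals of 0.49 / 0.65 (Gini), 0.30 / 0.44 (cross-entropy), and 0.43 / 0.42 (misclassification). With `weighted=True`, Gini (0.34 vs. 0.32) and misclassification (0.30 vs. 0.20) favor B while cross-entropy still favors A, so the answer depends on the weighting convention.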

What if we chose day as our feature split?

Decision trees tend to overfit the training data.

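Split on Day:

- D1: 1 Yes / 0 No
- D2: 1 Yes / 0 No
- D3: 0 Yes / 1 No
- D4: 1 Yes / 0 No
- D5: 0 Yes / 1 No
- D6: 1 Yes / 0 No
- D7: 0 Yes / 1 No
- D8: 1 Yes / 0 No

Every leaf holds exactly one training sample and is therefore pure, so any impurity measure scores this split as perfect; yet a new day such as Today matches none of the branches.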
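A purity-driven search will happily pick such a split. The sketch below scores the Day split and the slide's Raining? split with weighted Gini impurity (the helper names are hypothetical): the Day split gets a perfect 0.00 while Raining? gets 0.30, so the criterion alone prefers the split that merely memorizes the training days.

```python
from collections import Counter

# Label (Rode bike) and Raining? for each training day in Jane's table
RODE = {"D1": "Yes", "D2": "Yes", "D3": "No", "D4": "Yes",
        "D5": "No", "D6": "Yes", "D7": "No", "D8": "Yes"}
RAIN = {"D1": "Yes", "D2": "No", "D3": "Yes", "D4": "No",
        "D5": "Yes", "D6": "No", "D7": "Yes", "D8": "Yes"}


def gini(labels):
    n = len(labels)
    return 1 - sum((c / n) ** 2 for c in Counter(labels).values())


def weighted_split_gini(key):
    """Weighted Gini impurity after grouping the training days by key(day)."""
    groups = {}
    for day, label in RODE.items():
        groups.setdefault(key(day), []).append(label)
    return sum(len(g) / len(RODE) * gini(g) for g in groups.values())


day_split = weighted_split_gini(lambda day: day)         # one leaf per day
rain_split = weighted_split_gini(lambda day: RAIN[day])  # the slide's root split
print(f"Day split: {day_split:.2f}, Raining? split: {rain_split:.2f}")
```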

Next class:

- How do we avoid overfitting?

- How do we avoid unnecessary leaves and branches?

- Cost complexity pruning

- Cross-validation
