Poking with Cuda

Disclaimer

Cuda program

// test.cu

#include <iostream>

__global__ void inc(int *x) { x[0] = 42; }

int main() {

int *gpu_data;

cudaMalloc(&gpu_data, sizeof(int));

inc<<<1, 1>>>(gpu_data);

int cpu_data;

cudaMemcpy(&cpu_data, gpu_data, sizeof(int), cudaMemcpyDeviceToHost);

std::cout << cpu_data << '\n';

}

$ nvcc -l cudart inc.cu -o ./test

$ ./test

> 42Data crunching: SIMT

#include <iostream>

#include <vector>

#include <numeric>

__global__ void inc(int *x) {

int i = blockIdx.x * blockDim.x + threadIdx.x;

++x[i];

}

int main() {

std::vector<int> cpu(1024);

std::iota(cpu.begin(), cpu.end(), 1);

int *gpu;

cudaMalloc(&gpu, sizeof(int) * cpu.size());

cudaMemcpy(gpu, cpu.data(), sizeof(int) * cpu.size(), cudaMemcpyHostToDevice);

inc<<<4, 256>>>(gpu);

cudaMemcpy(cpu.data(), gpu, sizeof(int) * cpu.size(), cudaMemcpyDeviceToHost);

for (int x : cpu) { std::cout << x << ' '; }

}

$ nvcc -l cudart parallel.cu -o parallel

$ ./test

> 1 2 3 4 ... 1025disassembly

0000000000400c92 <main>:

...

400d0f: e8 cc fd ff ff call 400ae0 <__cudaPushCallConfiguration@plt>

400d14: 85 c0 test eax,eax

400d16: 75 0c jne 400d24 <main+0x92>

400d18: 48 8b 45 d8 mov rax,QWORD PTR [rbp-0x28]

400d1c: 48 89 c7 mov rdi,rax

400d1f: e8 db 01 00 00 call 400eff <inc(int*)>

...

0000000000400eff <inc(int*)>:

400eff: 55 push rbp

400f00: 48 89 e5 mov rbp,rsp

400f03: 48 83 ec 10 sub rsp,0x10

400f07: 48 89 7d f8 mov QWORD PTR [rbp-0x8],rdi

400f0b: 48 8b 45 f8 mov rax,QWORD PTR [rbp-0x8]

400f0f: 48 89 c7 mov rdi,rax

400f12: e8 b8 fe ff ff call 400dcf <__device_stub__Z3incPi(int*)>

400f17: 90 nop

400f18: c9 leave

400f19: c3 ret

0000000000400dcf <__device_stub__Z3incPi(int*)>:

...

400ea4: e8 2d 01 00 00 call 400fd6 <cudaError cudaLaunchKernel<char>(char const*, dim3, dim3, void**, unsigned long, CUstream_st*)>

...objdump -Mintel -d a.out | c++filtdisassembly

0000000000400c92 <main>:

...

400d0f: e8 cc fd ff ff call 400ae0 <__cudaPushCallConfiguration@plt>

400d14: 85 c0 test eax,eax

400d16: 75 0c jne 400d24 <main+0x92>

400d18: 48 8b 45 d8 mov rax,QWORD PTR [rbp-0x28]

400d1c: 48 89 c7 mov rdi,rax

400d1f: e8 db 01 00 00 call 400eff <inc(int*)>

...

0000000000400eff <inc(int*)>:

400eff: 55 push rbp

400f00: 48 89 e5 mov rbp,rsp

400f03: 48 83 ec 10 sub rsp,0x10

400f07: 48 89 7d f8 mov QWORD PTR [rbp-0x8],rdi

400f0b: 48 8b 45 f8 mov rax,QWORD PTR [rbp-0x8]

400f0f: 48 89 c7 mov rdi,rax

400f12: e8 b8 fe ff ff call 400dcf <__device_stub__Z3incPi(int*)>

400f17: 90 nop

400f18: c9 leave

400f19: c3 ret

0000000000400dcf <__device_stub__Z3incPi(int*)>:

...

400ea4: e8 2d 01 00 00 call 400fd6 <cudaError cudaLaunchKernel<char>(char const*, dim3, dim3, void**, unsigned long, CUstream_st*)>

...objdump -Mintel -d a.out | c++filt

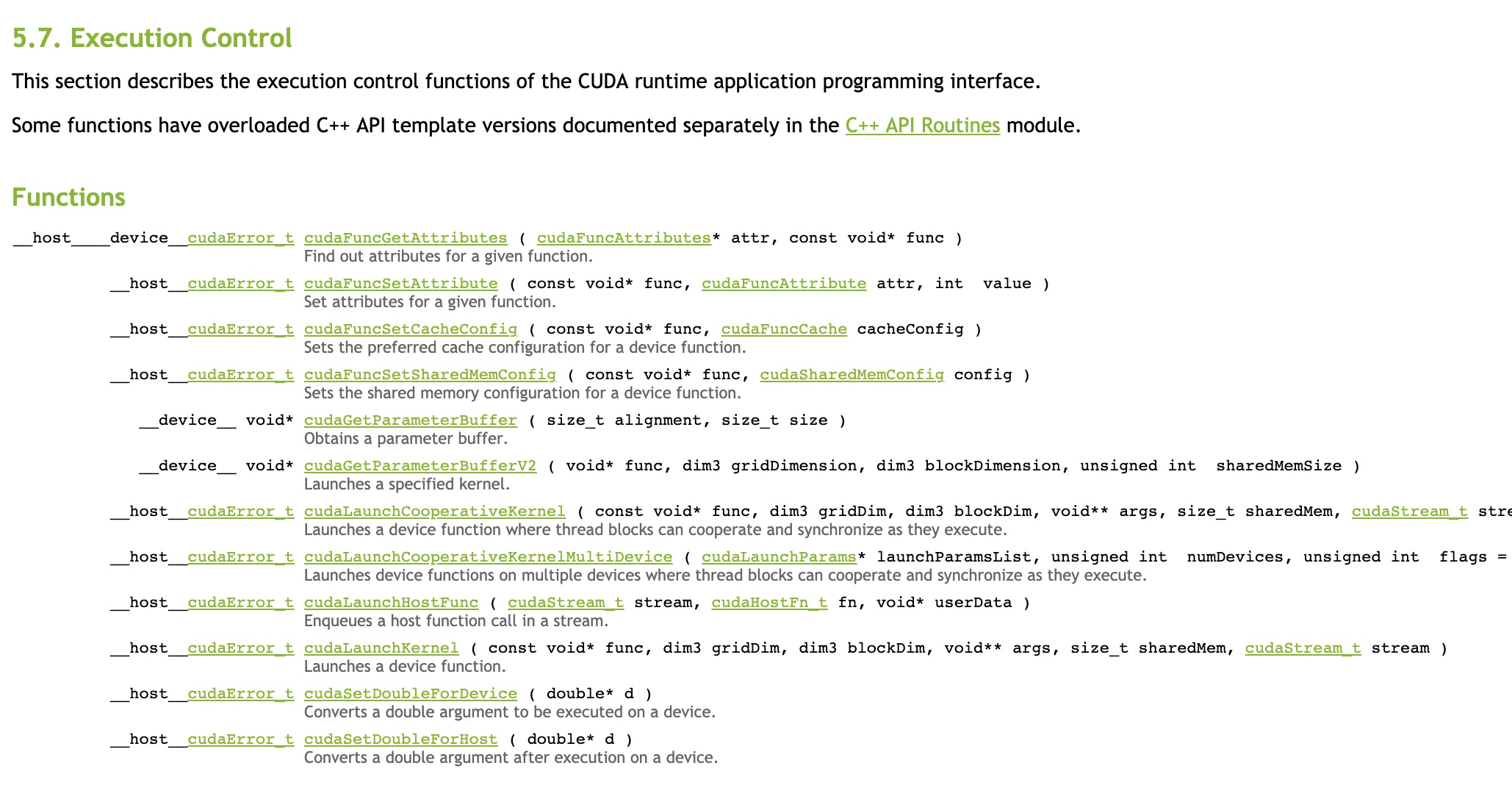

https://docs.nvidia.com/cuda/cuda-runtime-api/group__CUDART__EXECUTION.html

Hooking into it

Simple preload

// hook.so

#include <iostream>

extern "C" {

int cudaLaunchKernel() {

std::cout << "cudaLaunchKernel\n";

return 0;

}

}

$ c++ -shared -o hook.so

$ LD_PRELOAD=./hook ./test

> cudaLaunchKernel

> 0

Program broken

Try intercept zed

#include <cassert>

#include <sl/Camera.hpp>

int main(int argc, char** argv)

{

sl::InitParameters init_parameters;

sl::Camera camera;

init_parameters.input.setFromSVOFile("test.svo");

sl::ERROR_CODE result = camera.open(init_parameters);

assert(result == sl::ERROR_CODE::SUCCESS);

sl::Mat left;

result = camera.grab();

assert(result == sl::ERROR_CODE::SUCCESS);

result = camera.retrieveImage(left, sl::VIEW::LEFT);

assert(result == sl::ERROR_CODE::SUCCESS);

left.write("left.png");

std::cout << "done\n";

}$ c++ zed.cc -I/usr/local/cuda/include -I/usr/local/zed/include -L/usr/local/zed/lib -lsl_zed \

-o zed

$ LD_PRELOAD=./hook ./zed

> doneTry intercept zed

gdb ./zed(gdb) r

(gdb) info shared

From To Syms Read Shared Object Library

0x00007ffff7dd7ac0 0x00007ffff7df57c0 Yes /lib64/ld-linux-x86-64.so.2

0x00007fffef80a130 0x00007ffff068c039 Yes (*) /usr/local/zed/lib/libsl_zed.so

0x00007fffef3c36e0 0x00007fffef4b0e19 Yes (*) /usr/lib/x86_64-linux-gnu/libstdc++.so.6

0x00007fffef114b60 0x00007fffef124d1d Yes (*) /lib/x86_64-linux-gnu/libgcc_s.so.1

0x00007fffeed678b0 0x00007fffeeebabc4 Yes /lib/x86_64-linux-gnu/libc.so.6

0x00007fffeeb30a30 0x00007fffeeb3da51 Yes /lib/x86_64-linux-gnu/libpthread.so.0

0x00007fffee927da0 0x00007fffee92898e Yes /lib/x86_64-linux-gnu/libdl.so.2

0x00007fffee721100 0x00007fffee7241df Yes /lib/x86_64-linux-gnu/librt.so.1

0x00007fffed691a80 0x00007fffed9870c8 Yes (*) /usr/lib/x86_64-linux-gnu/libcuda.so.1

0x00007fffed3a6a70 0x00007fffed3bf363 Yes (*) /lib/x86_64-linux-gnu/libpng12.so.0

0x00007fffed18adb0 0x00007fffed19b500 Yes (*) /lib/x86_64-linux-gnu/libz.so.1

0x00007fffecf33d50 0x00007fffecf6de60 Yes (*) /usr/lib/x86_64-linux-gnu/libjpeg.so.8

0x00007fffeccd1360 0x00007fffecd147e0 Yes (*) /usr/lib/x86_64-linux-gnu/libturbojpeg.so.0

0x00007fffecabad00 0x00007fffecac6cb6 Yes (*) /lib/x86_64-linux-gnu/libusb-1.0.so.0

0x00007fffec5b42d0 0x00007fffec69b238 Yes (*) /usr/lib/nvidia-418/libnvcuvid.so.1

0x00007fffec29f600 0x00007fffec310d2a Yes /lib/x86_64-linux-gnu/libm.so.6

0x00007fffec06bc30 0x00007fffec08edd9 Yes (*) /usr/lib/x86_64-linux-gnu/libgomp.so.1

0x00007fffebe1ab80 0x00007fffebe4a6c8 Yes (*) /usr/lib/nvidia-418/libnvidia-fatbinaryloader.so.418.56

0x00007ffff7fb6750 0x00007ffff7fc7eca Yes (*) /lib/x86_64-linux-gnu/libudev.so.1Back to ./test

gdb ./test(gdb) r

(gdb) info shared

From To Syms Read Shared Object Library

0x00007ffff7dd7ac0 0x00007ffff7df57c0 Yes /lib64/ld-linux-x86-64.so.2

0x00007ffff7b69060 0x00007ffff7bbcca8 Yes (*) /usr/local/cuda-10.0/.../lib/libcudart.so.10.0

0x00007ffff78136e0 0x00007ffff7900e19 Yes (*) /usr/lib/x86_64-linux-gnu/libstdc++.so.6

0x00007ffff7564b60 0x00007ffff7574d1d Yes (*) /lib/x86_64-linux-gnu/libgcc_s.so.1

0x00007ffff71b78b0 0x00007ffff730abc4 Yes /lib/x86_64-linux-gnu/libc.so.6

0x00007ffff6f94da0 0x00007ffff6f9598e Yes /lib/x86_64-linux-gnu/libdl.so.2

0x00007ffff6d7ca30 0x00007ffff6d89a51 Yes /lib/x86_64-linux-gnu/libpthread.so.0

0x00007ffff6b71100 0x00007ffff6b741df Yes /lib/x86_64-linux-gnu/librt.so.1

0x00007ffff686b600 0x00007ffff68dcd2a Yes /lib/x86_64-linux-gnu/libm.so.6

0x00007ffff57d8a80 0x00007ffff5ace0c8 Yes (*) /usr/lib/x86_64-linux-gnu/libcuda.so.1

0x00007ffff54c6b80 0x00007ffff54f66c8 Yes (*) /usr/lib/nvidia-418/libnvidia-fatbinaryloader.so.418.56

Driver vs toolkit

Toolkit (SDK)

libcuda.so

user mode driver for GPU

kernel mode driver for GPU

Driver vs toolkit

$ dpkg -S /usr/local/cuda-10.0/targets/x86_64-linux/lib/libcudart.so.10.0.130

> cuda-cudart-10-0: /usr/local/cuda-10.0/targets/x86_64-linux/lib/libcudart.so.10.0.130$ dpkg -S /usr/lib/x86_64-linux-gnu/libcuda.so

> libcuda1-418: /usr/lib/x86_64-linux-gnu/libcuda.sohttps://docs.nvidia.com/cuda/cuda-driver-api/index.html

https://docs.nvidia.com/cuda/cuda-runtime-api/index.html

$ apt-cache show nvidia-418

Package: nvidia-418

Source: nvidia-graphics-drivers-418

Version: 418.56-0ubuntu0~gpu16.04.1

Recommends: nvidia-settings (>= 331.20), nvidia-prime (>= 0.5) | bumblebee, libcuda1-418, nvidia-opencl-icd-418

Replaces: nvidia-persistenced, nvidia-smi, xorg-driver-binary

Provides: nvidia-driver-binary, nvidia-persistenced, nvidia-smi, xorg-driver-binary, xorg-driver-video

Depends: x11-common (>= 1:7.0.0), make, sed (>> 3.0), dkms, linux-libc-dev, libc6-dev, patch, acpid, lib32gcc1, libc6-i386, passwd, adduser, xserver-xorg-legacy, libc6 (>= 2.7), libgcc1 (>= 1:4.2), libgl1, libwayland-client0 (>= 1.11.0), libwayland-server0 (>= 1.2.0), libx11-6, libxext6, xorg-video-abi-11 | xorg-video-abi-12 | xorg-video-abi-13 | xorg-video-abi-14 | xorg-video-abi-15 | xorg-video-abi-18 | xorg-video-abi-19 | xorg-video-abi-20 | xorg-video-abi-23 | xorg-video-abi-24, xserver-xorg-core

[...]libcuda.so.1

$ nm -D --defined-only /usr/lib/x86_64-linux-gnu/libcuda.so.1

0000000000262740 T cuMemcpyDtoHAsync

00000000002666d0 T cuMemcpyDtoHAsync_v2

000000000024c830 T cuMemcpyDtoHAsync_v2_ptsz

0000000000264fa0 T cuMemcpyDtoH_v2

000000000024af70 T cuMemcpyDtoH_v2_ptds

00000000002617b0 T cuMemcpyHtoA

0000000000261d30 T cuMemcpyHtoAAsync

0000000000265c90 T cuMemcpyHtoAAsync_v2

000000000024cbf0 T cuMemcpyHtoAAsync_v2_ptsz

00000000002656e0 T cuMemcpyHtoA_v2

000000000024b6b0 T cuMemcpyHtoA_v2_ptds

0000000000260ef0 T cuMemcpyHtoD

0000000000262570 T cuMemcpyHtoDAsync

00000000002664f0 T cuMemcpyHtoDAsync_v2

000000000024c650 T cuMemcpyHtoDAsync_v2_ptsz

0000000000264de0 T cuMemcpyHtoD_v2

000000000024adb0 T cuMemcpyHtoD_v2_ptds

0000000000267ca0 T cuMemcpyPeer

0000000000267e90 T cuMemcpyPeerAsync

000000000024c440 T cuMemcpyPeerAsync_ptsz

000000000024abc0 T cuMemcpyPeer_ptds

000000000024aa00 T cuMemcpy_ptds

[...]

000000000024a230 T cuIpcOpenMemHandle

0000000000255a60 T cuLaunch

000000000026b1d0 T cuLaunchCooperativeKernel

0000000000254ca0 T cuLaunchCooperativeKernelMultiDevice

00000000002549f0 T cuLaunchCooperativeKernel_ptsz

0000000000255be0 T cuLaunchGrid

0000000000255d90 T cuLaunchGridAsync

000000000026a180 T cuLaunchHostFunc

0000000000254e50 T cuLaunchHostFunc_ptsz

0000000000269e90 T cuLaunchKernel

0000000000254700 T cuLaunchKernel_ptsz

Hook libcuda.so.1

.globl cuMemsetD2D16_v2_ptds

.type cuMemsetD2D16_v2_ptds, @function

cuMemsetD2D16_v2_ptds:

sub rsp, 8

push rcx

push rdx

push rsi

push rdi

push r8

push r9

push r10

push r11

lea rdi, [rip + text_cuMemsetD2D16_v2_ptds]

call cuda_hook@plt

pop r11

pop r10

pop r9

pop r8

pop rdi

pop rsi

pop rdx

pop rcx

add rsp, 8

jmp rax

cuMemcpy2D_v2:

... repeat

... repeat for all symbolsCannot link libcuda.so.1 statically.

Python script to generate trampolines

// hook.cc

void* cuda_hook(const char* name) {

spdlog::info("[{}]", name);

return hook.resolve<void*>(name);

} class Hook {

public:

explicit Hook(const std::filesystem::path& filepath)

: filepath_(filepath) {}

template<typename T>

T resolve(const char* name)

{

static_assert(std::is_pointer_v<T>);

if (handle_ == nullptr) {

init();

}

void* fnc_addr = dlsym(handle_, name);

if (fnc_addr == nullptr) {

throw runtime_error("Could not find symbol {} in {}", name, filepath_);

}

return reinterpret_cast<T>(fnc_addr);

}

template<typename Ret, typename... Args>

Ret call(const char* name, Args... args)

{

using T = Ret(Args...);

auto f = resolve<T*>(name);

return f(args...);

}

private:

void init()

{

handle_ = dlopen(filepath_.string().c_str(), RTLD_GLOBAL | RTLD_NOW);

if (handle_ == nullptr) {

throw runtime_error("Could not load {}", filepath_);

}

}

std::filesystem::path filepath_;

void* handle_;

};Run test w/ hook

$ LD_LIBRARY_PATH=$PWD ./test [64/1802]

[2021-01-06 22:22:24.802] [info] [cuDriverGetVersion]

[2021-01-06 22:22:24.803] [info] [cuInit]

[2021-01-06 22:22:25.211] [info] [cuGetExportTable]

[2021-01-06 22:22:25.211] [info] [cuGetExportTable]

[2021-01-06 22:22:25.211] [info] [cuDeviceGetCount]

[2021-01-06 22:22:25.211] [info] [cuDeviceGet]

[2021-01-06 22:22:25.211] [info] [cuDeviceGetName]

[2021-01-06 22:22:25.211] [info] [cuDeviceTotalMem_v2]

[2021-01-06 22:22:25.211] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.211] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.211] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.211] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.211] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.211] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.211] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.211] [info] [cuDeviceGetAttribute]

...

[2021-01-06 22:22:25.212] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.212] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.212] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.212] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.212] [info] [cuDeviceGetAttribute]

[2021-01-06 22:22:25.212] [info] [cuDeviceGetUuid]

[2021-01-06 22:22:25.212] [info] [cuGetExportTable]

[2021-01-06 22:22:25.212] [info] [cuGetExportTable]

[2021-01-06 22:22:25.212] [info] [cuCtxGetCurrent]

[2021-01-06 22:22:25.212] [info] [cuCtxSetCurrent]

[2021-01-06 22:22:25.212] [info] [cuDevicePrimaryCtxRetain]

[2021-01-06 22:22:25.276] [info] [cuCtxGetCurrent]

[2021-01-06 22:22:25.276] [info] [cuCtxGetDevice]

[2021-01-06 22:22:25.276] [info] [cuModuleGetFunction]

[2021-01-06 22:22:25.277] [info] [cuMemAlloc_v2]

[2021-01-06 22:22:25.277] [info] [cuLaunchKernel]

[2021-01-06 22:22:25.277] [info] [cuMemcpyDtoH_v2]

42Code not broken

Run zed w/ hook

$ LD_LIBRARY_PATH=$PWD ./zed [64/1802]

[2021-01-06 22:28:47.144] [info] [cuDriverGetVersion]

[2021-01-06 22:28:47.144] [info] [cuInit]

[2021-01-06 22:28:47.512] [info] [cuGetExportTable]

[2021-01-06 22:28:47.512] [info] [cuGetExportTable]

[2021-01-06 22:28:47.512] [info] [cuDeviceGetCount]

[2021-01-06 22:28:47.512] [info] [cuDeviceGet]

[2021-01-06 22:28:47.512] [info] [cuDeviceGetName]

[2021-01-06 22:28:47.512] [info] [cuDeviceTotalMem_v2]

[2021-01-06 22:28:47.513] [info] [cuDeviceGetAttribute]

[2021-01-06 22:28:47.513] [info] [cuDeviceGetAttribute]

[2021-01-06 22:28:47.513] [info] [cuDeviceGetAttribute]

[2021-01-06 22:28:47.513] [info] [cuDeviceGetAttribute]

[2021-01-06 22:28:47.513] [info] [cuDeviceGetAttribute]

...

[2021-01-06 22:28:59.918] [info] [cuLaunchKernel] _Z12nv12_to_uyvyPKhjPhjjj

...

[2021-01-06 22:29:00.031] [info] [cuLaunchKernel] _ZN2sl6device7k_setToIfEEvT_PS2_jjj

...

[2021-01-06 22:29:00.372] [info] [cuLaunchKernel] _ZN15disparityFusion14PsfYJsyZMXAkkcEPfjjjfffff

...

[2021-01-06 22:29:00.373] [info] [cuMemHostAlloc]

[2021-01-06 22:29:00.374] [info] [cuMemAlloc_v2]

[2021-01-06 22:29:00.372] [info] [cuStreamCreate]

[2021-01-06 22:29:00.372] [info] [cuEventCreate]

...

[2021-01-06 22:29:00.378] [info] [cuMemcpy2DAsync_v2]

...

Extend hook

CUresult cuModuleGetFunction(CUfunction* hfunc, CUmodule hmod, const char* name)

{

std::lock_guard<std::mutex> lock(global_mutex);

auto result = call("cuModuleGetFunction", hfunc, hmod, name);

spdlog::info("[cuModuleGetFunction]: {}", name);

name_by_function[*hfunc] = name;

return result;

}

CUresult cuLaunchKernel(CUfunction f, unsigned int gridDimX, unsigned int gridDimY, unsigned int gridDimZ,

unsigned int blockDimX, unsigned int blockDimY, unsigned int blockDimZ,

unsigned int sharedMemBytes, CUstream hStream, void** kernelParams, void** extra)

{

std::lock_guard<std::mutex> lock(global_mutex);

if (name_by_function.count(f) == 0) {

spdlog::critical("{:p} not found", static_cast<void*>(f));

std::abort();

}

const std::string& name = name_by_function[f];

spdlog::info("[cuLaunchKernel]: {}", name);

}Remove trampolines and add signatures

$ LD_LIBRARY_PATH=$PWD ./test

[2021-01-06 22:39:33.820] [info] [cuCtxGetDevice]

[2021-01-06 22:39:33.820] [info] [cuModuleGetFunction]: _Z3incPi

[2021-01-06 22:39:33.820] [info] [cuMemAlloc_v2]

[2021-01-06 22:39:33.820] [info] [cuLaunchKernel]: _Z3incPi

[2021-01-06 22:39:33.820] [info] [cuMemcpyDtoH_v2]

42We see all input / outputs and are in control of the flow

Get the function

$ nvcc test.cu -o test

$ cuobjdump ./test

Fatbin elf code:

================

arch = sm_30

code version = [1,7]

producer = <unknown>

host = linux

compile_size = 64bit

Fatbin elf code:

================

arch = sm_30

code version = [1,7]

producer = cuda

host = linux

compile_size = 64bit

Fatbin ptx code:

================

arch = sm_30

code version = [6,3]

producer = cuda

host = linux

compile_size = 64bit

compressed

SASS

SASS

PTX

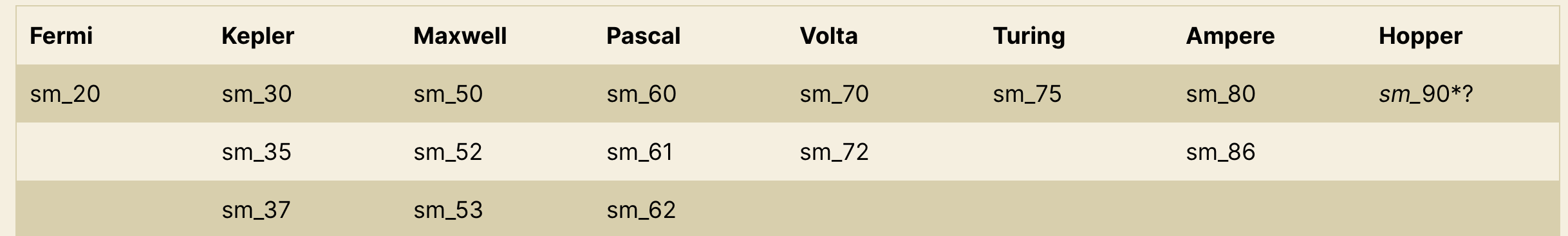

Digress: GPU Architectures

Certain features only supported by certain architectures:

works on any CUDA-capable GPU compatible with CUDA 9. Specifically, that means Kepler and later GPUs (Compute Capability 3.0+).

Find out compute

$ /usr/local/cuda/samples/1_Utilities/deviceQuery/deviceQuery

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "GeForce GTX 1080 Ti"

CUDA Driver Version / Runtime Version 10.1 / 10.0

CUDA Capability Major/Minor version number: 6.1

Total amount of global memory: 11176 MBytes (11719409664 bytes)

(28) Multiprocessors, (128) CUDA Cores/MP: 3584 CUDA Cores

GPU Max Clock rate: 1633 MHz (1.63 GHz)

Memory Clock rate: 5505 Mhz

Memory Bus Width: 352-bit

L2 Cache Size: 2883584 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 2048

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: No

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 1 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 10.1, CUDA Runtime Version = 10.0, NumDevs = 1

Result = PASS

PTX

- Targets to a specific virtual architecture

is forward-compatible, not backwards-compatible - "Comparable" to LLVM IR

Specify SASS

nvcc test.cu -gencode=arch=compute_XX,code=compute_XX -o testarch=compute_xx / code=sm_xx: Virtual architecture, aka ptx level

Build for different archs

for arch in 30 35 37 50 52 53 60 61 62 75; do

printf "${arch}: "

nvcc test.cu -gencode=arch=compute_${arch},code=sm_${arch} -o test

./test

done

30: 42

35: 42

37: 42

50: 42

52: 42

53: 42

60: 42

61: 42

62: 0

75: 0Show ptx

$ nvcc test.cu -gencode=arch=compute_60,code=sm_60 -o test

$ cuobjdump -ptx test

Fatbin ptx code:

================

arch = sm_60

code version = [6,3]

producer = cuda

host = linux

compile_size = 64bit

compressed

.version 6.3

.target sm_60

.address_size 64

.visible .entry _Z3incPi(

.param .u64 _Z3incPi_param_0

)

{

.reg .b32 %r<2>;

.reg .b64 %rd<3>;

ld.param.u64 %rd1, [_Z3incPi_param_0];

cvta.to.global.u64 %rd2, %rd1;

mov.u32 %r1, 42;

st.global.u32 [%rd2], %r1;

ret;

}Running ptx

#include <cuda.h>

#include <filesystem>

#include <iostream>

#include <memory>

#include <vector>

void checkCuda(int status) {

if (status != 0) {

std::abort();

}

}

struct CuContextDeleter {

void operator()(CUcontext ctx) { cuCtxDestroy_v2(ctx); }

};

using UniqueCUcontext = std::unique_ptr<CUctx_st, CuContextDeleter>;

void safeCuInit() {

if (auto status = cuInit(0); status != 0) {

std::cerr << "cuInit failed with status " << status << ". Asan?\n";

std::abort();

}

}

UniqueCUcontext context() {

safeCuInit();

auto cuDevice = 0;

CUcontext cuContext;

checkCuda(cuCtxCreate_v2(&cuContext, 0, cuDevice));

return UniqueCUcontext(cuContext);

}

struct CuModuleDeleter {

void operator()(CUmodule module) { cuModuleUnload(module); }

};

using UniqueCUmodule = std::unique_ptr<CUmod_st, CuModuleDeleter>;

UniqueCUmodule load_module(const std::string& ptx) {

CUmodule cuModule;

int status = cuModuleLoadData(&cuModule, ptx.c_str());

if (status != 0) {

throw std::runtime_error("Could not load: " + ptx +

". Error: " + std::to_string(status));

}

return UniqueCUmodule(cuModule);

}

int main() {

const auto filepath = "test.ptx";

const auto kernel_mangled_name = "test";

const auto grid_dim_x = 1;

const auto grid_dim_y = 1;

const auto grid_dim_z = 1;

const auto block_dim_x = 1;

const auto block_dim_y = 1;

const auto block_dim_z = 1;

const auto shared_memory_bytes = 0;

CUdeviceptr d_ptr;

std::vector<int> result(1);

const auto cuContext = context();

const auto ptx = R"(

.version 6.3

.target sm_61

.address_size 64

.visible .entry test(

.param .u64 param_0

)

{

.reg .b32 %r<2>;

.reg .b64 %rd<2>;

ld.param.u64 %rd1, [param_0];

cvta.to.global.u64 %rd1, %rd1;

mov.s32 %r1, 20;

st.global.s32 [%rd1], %r1;

ret;

})";

const auto module = load_module(ptx);

checkCuda(cuMemAlloc_v2(&d_ptr, result.size() * sizeof(int)));

std::vector<void *> arg_pointers{&d_ptr};

CUfunction cu_function;

auto status =

cuModuleGetFunction(&cu_function, module.get(), kernel_mangled_name);

const auto status_launch = cuLaunchKernel(

cu_function, grid_dim_x, grid_dim_y, grid_dim_z, block_dim_x, block_dim_y,

block_dim_z, shared_memory_bytes, nullptr, arg_pointers.data(), nullptr);

cuCtxSynchronize();

checkCuda(cuMemcpyDtoH_v2(result.data(), d_ptr, result.size() * sizeof(int)));

std::cout << "result: " << result[0] << std::endl;

}

c++ -std=c++17 test.cc -I/usr/local/cuda/include -lcudart -L/usr/local/cuda/lib64 -lcuda -g3SASS

- Targeted to a specific real architecture

neither forward- nor backwards compatible - "Comparable" to x86 assembly

Specify SASS

nvcc test.cu -gencode=arch=compute_XX,code=sm_YY -o testarch=compute_xx: Virtual architecture, aka ptx level

- A temporary PTX code will be generated from your source code, and it will use cc3.0 PTX.

- From that PTX, the ptxas tool will generate cc5.2-compliant SASS code.

- The SASS code will be embedded in your executable.

- The PTX code will be discarded.

code=sm_YY: Actual hardware architecture

Usually XX=YY

-gencode arch=compute_30,code=sm_52Can differ, example

Build for different archs

for arch in 30 35 37 50 52 53 60 61 62 75; do

printf "${arch}: "

nvcc test.cu -gencode=arch=compute_${arch},code=sm_${arch} -o test

./test

done

30: 0

35: 0

37: 0

50: 0

52: 0

53: 0

60: 42

61: 42

62: 0

75: 0Show sass

$ nvcc test.cu -gencode=arch=compute_60,code=sm_60 -o test

$ cuobjdump -sass test

Fatbin elf code:

================

arch = sm_60

code version = [1,7]

producer = <unknown>

host = linux

compile_size = 64bit

code for sm_60

Fatbin elf code:

================

arch = sm_60

code version = [1,7]

producer = cuda

host = linux

compile_size = 64bit

code for sm_60

Function : _Z3incPi

.headerflags @"EF_CUDA_SM60 EF_CUDA_PTX_SM(EF_CUDA_SM60)"

/* 0x001fc400fe2007f6 */

/*0008*/ MOV R1, c[0x0][0x20] ; /* 0x4c98078000870001 */

/*0010*/ MOV32I R0, 0x2a ; /* 0x0100000002a7f000 */

/*0018*/ MOV R2, c[0x0][0x140] ; /* 0x4c98078005070002 */

/* 0x001fbc00fe2007f2 */

/*0028*/ MOV R3, c[0x0][0x144] ; /* 0x4c98078005170003 */

/*0030*/ STG.E [R2], R0 ; /* 0xeedc200000070200 */

/*0038*/ NOP ; /* 0x50b0000000070f00 */

/* 0x001ffc00fd0007ef */

/*0048*/ NOP ; /* 0x50b0000000070f00 */

/*0050*/ NOP ; /* 0x50b0000000070f00 */

/*0058*/ EXIT ; /* 0xe30000000007000f */

/* 0x001f8000fc0007ff */

/*0068*/ BRA 0x60 ; /* 0xe2400fffff07000f */

/*0070*/ NOP; /* 0x50b0000000070f00 */

/*0078*/ NOP; /* 0x50b0000000070f00 */

...................

Control codes

$ nvcc test.cu -gencode=arch=compute_60,code=sm_60 -o test

$ cuobjdump -sass test

Fatbin elf code:

================

arch = sm_60

code version = [1,7]

producer = <unknown>

host = linux

compile_size = 64bit

code for sm_60

Fatbin elf code:

================

arch = sm_60

code version = [1,7]

producer = cuda

host = linux

compile_size = 64bit

code for sm_60

Function : _Z3incPi

.headerflags @"EF_CUDA_SM60 EF_CUDA_PTX_SM(EF_CUDA_SM60)"

/* 0x001fc400fe2007f6 */

/*0008*/ MOV R1, c[0x0][0x20] ; /* 0x4c98078000870001 */

/*0010*/ MOV32I R0, 0x2a ; /* 0x0100000002a7f000 */

/*0018*/ MOV R2, c[0x0][0x140] ; /* 0x4c98078005070002 */

/* 0x001fbc00fe2007f2 */

/*0028*/ MOV R3, c[0x0][0x144] ; /* 0x4c98078005170003 */

/*0030*/ STG.E [R2], R0 ; /* 0xeedc200000070200 */

/*0038*/ NOP ; /* 0x50b0000000070f00 */

/* 0x001ffc00fd0007ef */

/*0048*/ NOP ; /* 0x50b0000000070f00 */

/*0050*/ NOP ; /* 0x50b0000000070f00 */

/*0058*/ EXIT ; /* 0xe30000000007000f */

/* 0x001f8000fc0007ff */

/*0068*/ BRA 0x60 ; /* 0xe2400fffff07000f */

/*0070*/ NOP; /* 0x50b0000000070f00 */

/*0078*/ NOP; /* 0x50b0000000070f00 */

...................

Starting with the Kepler architecture Nvidia has been moving some control logic off of the chip and into kernel instructions which are determined by the assembler. This makes sense since it cuts down on die space and power usage, plus the assembler has access to the whole program and can make more globally optimal decisions about things like scheduling and other control aspects.

Describe:

- Stall Counts

- Yield Hint Flag

- Write Dependency Barriers

- Read Dependency Barriers

- Wait Dependency Barrier Flags

https://github.com/NervanaSystems/maxas/wiki/Control-Codes

Evolution of CC

$ nvcc test.cu -gencode=arch=compute_30,code=sm_30 -gencode=arch=compute_60,code=sm_60 -gencode=arch=compute_75,code=sm_75 -o test

$ cuobjdump -sass test

...

code for sm_30

Function : _Z3incPi

.headerflags @"EF_CUDA_SM30 EF_CUDA_PTX_SM(EF_CUDA_SM30)"

/* 0x2002e04282004307 */

/*0008*/ MOV R1, c[0x0][0x44]; /* 0x2800400110005de4 */

/*0010*/ MOV32I R4, 0x2a; /* 0x18000000a8011de2 */

/*0018*/ MOV R2, c[0x0][0x140]; /* 0x2800400500009de4 */

/*0020*/ MOV R3, c[0x0][0x144]; /* 0x280040051000dde4 */

/*0028*/ ST.E [R2], R4; /* 0x9400000000211c85 */

/*0030*/ EXIT; /* 0x8000000000001de7 */

/*0038*/ BRA 0x38; /* 0x4003ffffe0001de7 */

...................

...

code for sm_60

Function : _Z3incPi

.headerflags @"EF_CUDA_SM60 EF_CUDA_PTX_SM(EF_CUDA_SM60)"

/* 0x001fc400fe2007f6 */

/*0008*/ MOV R1, c[0x0][0x20] ; /* 0x4c98078000870001 */

/*0010*/ MOV32I R0, 0x2a ; /* 0x0100000002a7f000 */

/*0018*/ MOV R2, c[0x0][0x140] ; /* 0x4c98078005070002 */

/* 0x001fbc00fe2007f2 */

/*0028*/ MOV R3, c[0x0][0x144] ; /* 0x4c98078005170003 */

/*0030*/ STG.E [R2], R0 ; /* 0xeedc200000070200 */

/*0038*/ NOP ; /* 0x50b0000000070f00 */

/* 0x001ffc00fd0007ef */

/*0048*/ NOP ; /* 0x50b0000000070f00 */

/*0050*/ NOP ; /* 0x50b0000000070f00 */

/*0058*/ EXIT ; /* 0xe30000000007000f */

/* 0x001f8000fc0007ff */

/*0068*/ BRA 0x60 ; /* 0xe2400fffff07000f */

/*0070*/ NOP; /* 0x50b0000000070f00 */

/*0078*/ NOP; /* 0x50b0000000070f00 */

...................

...

code for sm_75

Function : _Z3incPi

.headerflags @"EF_CUDA_SM75 EF_CUDA_PTX_SM(EF_CUDA_SM75)"

/*0000*/ MOV R1, c[0x0][0x28] ; /* 0x00000a0000017a02 */

/* 0x000fd00000000f00 */

/*0010*/ MOV R0, 0x2a ; /* 0x0000002a00007802 */

/* 0x000fe20000000f00 */

/*0020*/ ULDC.64 UR4, c[0x0][0x160] ; /* 0x0000580000047ab9 */

/* 0x000fce0000000a00 */

/*0030*/ STG.E.SYS [UR4], R0 ; /* 0x00000000ff007986 */

/* 0x000fe2000c10e904 */

/*0040*/ EXIT ; /* 0x000000000000794d */

/* 0x000fea0003800000 */

/*0050*/ BRA 0x50; /* 0xfffffff000007947 */

/* 0x000fc0000383ffff */

/*0060*/ NOP; /* 0x0000000000007918 */

/* 0x000fc00000000000 */

/*0070*/ NOP; /* 0x0000000000007918 */

Kepler, no CC

Pascal, 1:3 CC

Turing, 1:1 CC

Random notes

- Hobby sass assembler for maxwell architecture

https://github.com/NervanaSystems/maxas - Hand-writing sass code for optimization

https://github.com/NervanaSystems/maxas/wiki/SGEMM - TensorRT comes with hand-tuned kernels

- Paper on reversing cuda architectures:

Dissecting the NVIDIA Volta GPU Architecture via Microbenchmarking

https://arxiv.org/pdf/1804.06826.pdf

sass / ptx

| PTX | SASS | |

|---|---|---|

| Tooling | assembler ptxas | no assembler |

| Readability | higher level | low level |

| Documentation | documented | propiertary |

| Compatibility | forward | none |

| Loading | JIT | machine code |

Intercepting sample kernel

$ LD_LIBRARY_PATH=$PWD ./zed | grep cuLaunchKernel | c++filt

[2021-01-07 01:55:27.151] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.172] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.185] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.198] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.211] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.224] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.236] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.249] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.262] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.274] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.285] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.297] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.313] [info] [cuLaunchKernel] nv12_to_uyvy(unsigned char const*, unsigned int, unsigned char*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.340] [info] [cuLaunchKernel] void device::FrP0k5607tPV<(sl::YUV_CONVENTION)2>(uchar4*, int, device::uchar8*, device::uchar8*, int, int, int, float)

[2021-01-07 01:55:27.374] [info] [cuLaunchKernel] device::mZd2jMuKVE2EgUg(unsigned long long, unsigned long long, uchar4*, uchar4*, unsigned int, int, int, float, float)

[2021-01-07 01:55:27.375] [info] [cuLaunchKernel] device::njBh4Ku5x07U(unsigned long long, unsigned long long, device::uchar8*, device::uchar8*, uchar2*, uchar2*, unsigned int, unsigned int, float2*, float2*, unsigned int, int, int)

[2021-01-07 01:55:27.375] [info] [cuLaunchKernel] device::arwkBu8amrxx7Fc(unsigned long long, unsigned long long, uchar4*, uchar4*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.375] [info] [cuLaunchKernel] device::VEErEQPsQe7AAdQ(unsigned long long, unsigned long long, uchar4*, uchar4*, uchar1*, uchar1*, unsigned int, unsigned int, unsigned int, unsigned int, float, float)

[2021-01-07 01:55:27.375] [info] [cuLaunchKernel] device::hajPGXg8md5PxtQ(unsigned long long, unsigned long long, uchar4*, uchar4*, unsigned int, uchar1*, uchar1*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.375] [info] [cuLaunchKernel] device::hajPGXg8md5PxtQ(unsigned long long, unsigned long long, uchar4*, uchar4*, unsigned int, uchar1*, uchar1*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.375] [info] [cuLaunchKernel] device::hajPGXg8md5PxtQ(unsigned long long, unsigned long long, uchar4*, uchar4*, unsigned int, uchar1*, uchar1*, unsigned int, unsigned int, unsigned int)

[2021-01-07 01:55:27.375] [info] [cuLaunchKernel] device::cQjrpMDEt9aYJFc(unsigned long long, unsigned long long, unsigned char*, unsigned char*, unsigned int, unsigned int, int, int)

[2021-01-07 01:55:27.375] [info] [cuLaunchKernel] device::cQjrpMDEt9aYJFc(unsigned long long, unsigned long long, unsigned char*, unsigned char*, unsigned int, unsigned int, int, int)

[2021-01-07 01:55:27.375] [info] [cuLaunchKernel] device::cQjrpMDEt9aYJFc(unsigned long long, unsigned long long, unsigned char*, unsigned char*, unsigned int, unsigned int, int, int)Hardcode hook

CUresult cuLaunchKernel(CUfunction f, unsigned int gridDimX, unsigned int gridDimY, unsigned int gridDimZ,

unsigned int blockDimX, unsigned int blockDimY, unsigned int blockDimZ, unsigned int sharedMemBytes,

CUstream hStream, void** kernelParams, void** extra)

{

std::lock_guard<std::mutex> lock(global_mutex);

if (name_by_function.count(f) == 0) {

spdlog::critical("{:p} not found", static_cast<void*>(f));

std::abort();

}

const std::string& name = name_by_function[f];

spdlog::info("[cuLaunchKernel] {}", name);

CUresult result = call("cuLaunchKernel", f, gridDimX, gridDimY, gridDimZ,

blockDimX, blockDimY, blockDimZ, sharedMemBytes,

hStream, kernelParams, extra);

if (name == "_ZN6device12FrP0k5607tPVILN2sl14YUV_"

"CONVENTIONE2EEEvP6uchar4iPNS_6uchar8ES6_iiif") {

for (int i : {0, 2, 3}) {

CUdeviceptr ptr = *reinterpret_cast<CUdeviceptr*>(kernelParams[i]);

absl::StatusOr<MemoryDescription> memory =

cuda.memory_description(ptr);

if (memory.ok()) {

AllocPitch* alloc = std::get_if<AllocPitch>(&*memory);

assert(alloc != nullptr);

const std::string filename = fmt::format("result_{}.bin", i++);

absl::Status status = dump(filename, *alloc);

assert(status.ok());

spdlog::error("Is okay? {}", memory.ok());

}

}

}

return result;

}

_ZN6device12FrP0k5607tPVILN2sl14YUV_CONVENTIONE2EEEvP6uchar4iPNS_6uchar8ES6_iiif

void device::FrP0k5607tPV<(sl::YUV_CONVENTION)2>(uchar4*, int, device::uchar8*, device::uchar8*, int, int, int, float)

Hook allocation

absl::Status dump(const AllocPitch& alloc, std::vector<std::byte>& out) {

...

if (auto result = call("cuMemcpy2DUnaligned_v2", &p_copy); result != 0) {

return absl::InternalError(

fmt::format("Could not download, status: {}", result));

}

return absl::OkStatus();

} CUresult CUDAAPI cuMemAlloc_v2(CUdeviceptr* dptr, size_t bytesize)

{

std::lock_guard<std::mutex> lock(global_mutex);

auto result = call("cuMemAlloc_v2", dptr, bytesize);

spdlog::info("cuMemAlloc_v2 {:x}", *dptr);

cuda.register_alloc(Alloc{*dptr, bytesize, static_cast<int>(bytesize)});

return result;

}

Result

void device::FrP0k5607tPV<(sl::YUV_CONVENTION)2>(uchar4*, int, device::uchar8*, device::uchar8*, int, int, int, float)

In

Out1

Out2

In

Out1

Out2

deck

By technology

deck

- 95