reactive stream processing

w/ kafka-rx

Thomas Omans

Greetings and introductions...

nice to meet you

Kafka+ReactiveX

```scala
tweetStream
  .groupBy(hashTag)
  .flatMap { case (tag, stream) =>
    process(stream, tag)
      .saveToKafka(kafkaProducer)
      .map { record =>
        record.commit()
      }
  }
```

a reactive api for kafka producers and consumers
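The pipeline above relies on kafka-rx and RxScala operators. As a rough, library-free sketch of the same shape, the code below uses plain Scala collections: `Tweet`, `process`, and the in-memory `sink` are hypothetical stand-ins, and a finite `List` stands in for the unbounded tweet stream.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical record type: a tweet carrying a single hashtag.
final case class Tweet(hashTag: String, text: String)

object TweetPipeline {
  // Hypothetical per-tag step: emit (tag, tweet count) for the group.
  def process(stream: List[Tweet], tag: String): List[(String, Int)] =
    List(tag -> stream.size)

  // groupBy the hashtag, flatMap each group through process, and append the
  // results to an in-memory sink that stands in for saveToKafka(kafkaProducer).
  def run(tweets: List[Tweet], sink: ListBuffer[(String, Int)]): List[(String, Int)] =
    tweets
      .groupBy(_.hashTag)
      .toList
      .sortBy(_._1) // Map iteration order is unspecified; sort for a deterministic result
      .flatMap { case (tag, stream) =>
        val results = process(stream, tag)
        sink ++= results
        results
      }
}
```

One difference matters for real streams: a collection's `groupBy` must see the whole input before returning, while Rx's `groupBy` emits each group as soon as its first key appears, which is what makes it usable on an unbounded stream.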

why did we write kafka-rx?

kafka is a great way to decouple systems

rx is a great way to transform streams

existing clients lacked desired behavior

building a new generation of data infrastructure

data & debt

both grow with time

continuous evolution, continuous refactoring

how do we make any sense of it?

shifting landscapes, mistakes and failures

how do we make the right decisions?

use simple solutions

If you want to solve big problems

terms & definitions

streams in practice

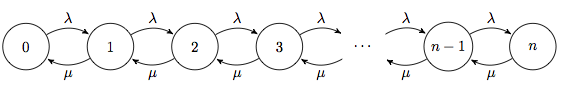

streams in theory

talk syllabus

Stream Processing

continuous directed transformations

https://stat.duke.edu/~cr173/Sta523_Fa14/hw/hw4.html

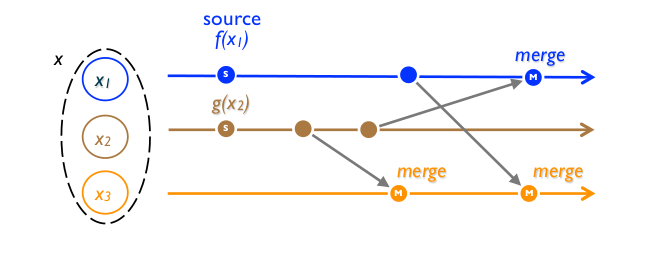
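A minimal sketch of "continuous directed transformations", assuming a pull-based Scala `Iterator` as a stand-in for a stream (Rx pushes values instead, but the chain has the same shape). The source never ends; each stage is applied lazily as elements are requested.

```scala
object DirectedTransformations {
  // An unbounded source: Iterator.from yields 1, 2, 3, ... forever.
  def source: Iterator[Int] = Iterator.from(1)

  // A directed chain of transformations; nothing is computed until
  // elements are pulled from the resulting iterator.
  def pipeline(in: Iterator[Int]): Iterator[Int] =
    in.map(_ * 3)         // transform each element
      .filter(_ % 2 == 0) // keep even results
      .map(_ + 1)         // transform again

  def main(args: Array[String]): Unit =
    println(pipeline(source).take(4).toList) // prints List(7, 13, 19, 25)
}
```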

Concurrent Streams

what does it mean to be concurrent?

Kafka

a system for distributing concurrent streams

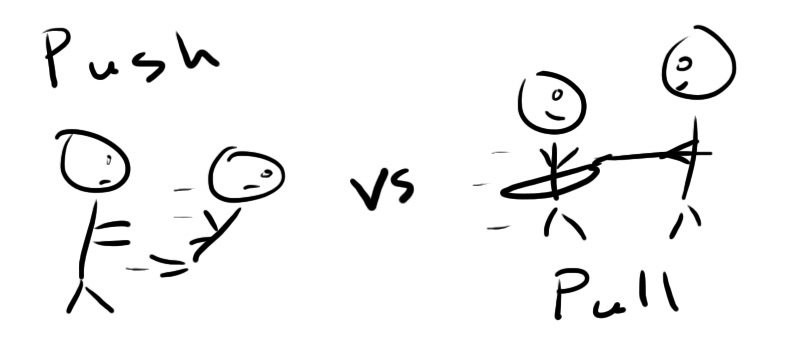
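Kafka distributes a topic as several partitions, and messages with the same key go to the same partition, which is how order is kept per key while partitions are consumed concurrently. The sketch below illustrates the idea with a simplified hash-mod partitioner; `KeyedMessage` and `Partitioning` are hypothetical, and Kafka's actual default partitioner hashes the serialized key bytes (murmur2), so real assignments differ.

```scala
// Hypothetical keyed message.
final case class KeyedMessage(key: String, value: String)

object Partitioning {
  // Simplified partitioner: non-negative hash of the key modulo the partition count.
  def partitionFor(key: String, numPartitions: Int): Int =
    Math.floorMod(key.hashCode, numPartitions)

  // Split one logical stream into per-partition sub-streams.
  // groupBy keeps the original relative order inside each partition.
  def distribute(msgs: Seq[KeyedMessage], numPartitions: Int): Map[Int, Seq[KeyedMessage]] =
    msgs.groupBy(m => partitionFor(m.key, numPartitions))
}
```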

Reactive Programming

events are pushed at you, forcing you to react

Functional Programming

is programming w/ functions

function composition

sequence operations

value expressions

immutable data

no side effects

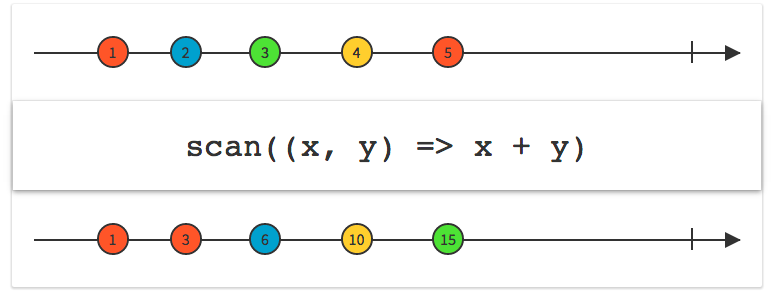

Introducing ReactiveX

ReactiveX is a library for composing asynchronous and event-based programs

Observable Streams

an interface for async sequences

```java
// pull: the consumer asks the source for the next value
public interface Iterable<T> {
    Iterator<T> iterator();
}

public interface Iterator<T> {
    boolean hasNext();
    T next();
}

// push: the source hands each value to the observer
public interface IObservable<T> {
    Subscription subscribe(IObserver<T> o);
}

public interface IObserver<T> {
    void onNext(T value);
    void onCompleted();
    void onError(Exception error);
}
```
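The two pairs of interfaces above are duals: an iterator is pulled by its consumer, while an observable pushes into its observer. The following is a minimal sketch of the push side using only the Scala standard library; `Observer`, `Observable`, `map`, `filter`, and `from` are illustrative names, not RxJava's or RxScala's actual API, and `subscribe` returns `Unit` rather than a `Subscription`, so there is no way to unsubscribe.

```scala
// Push-based observer: receives values, then completion or an error.
trait Observer[T] {
  def onNext(value: T): Unit
  def onCompleted(): Unit
  def onError(error: Throwable): Unit
}

trait Observable[T] { self =>
  def subscribe(o: Observer[T]): Unit

  // map: push each transformed value downstream.
  def map[U](f: T => U): Observable[U] = new Observable[U] {
    def subscribe(o: Observer[U]): Unit = self.subscribe(new Observer[T] {
      def onNext(value: T): Unit = o.onNext(f(value))
      def onCompleted(): Unit = o.onCompleted()
      def onError(error: Throwable): Unit = o.onError(error)
    })
  }

  // filter: push only the values that satisfy p.
  def filter(p: T => Boolean): Observable[T] = new Observable[T] {
    def subscribe(o: Observer[T]): Unit = self.subscribe(new Observer[T] {
      def onNext(value: T): Unit = if (p(value)) o.onNext(value)
      def onCompleted(): Unit = o.onCompleted()
      def onError(error: Throwable): Unit = o.onError(error)
    })
  }
}

object Observable {
  // Turn a pull-based Iterable into a push-based Observable.
  def from[T](items: Iterable[T]): Observable[T] = new Observable[T] {
    def subscribe(o: Observer[T]): Unit =
      try { items.foreach(o.onNext); o.onCompleted() }
      catch { case e: Exception => o.onError(e) }
  }
}
```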

iterable

observable

filter, select, and combine

streams of values

streams as signals

define behavior in terms of streams

react to changes in the system

join two or more streams together
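A small sketch of combining streams, assuming each input is already ordered by timestamp: the two inputs are merged lazily into one ordered stream, which can then be filtered and projected like any other. `TimedEvent` and `mergeByTime` are hypothetical names chosen for illustration.

```scala
// Hypothetical event type: a timestamp, the name of its source stream, a value.
final case class TimedEvent(ts: Long, source: String, value: Int)

object CombineStreams {
  // Merge two timestamp-ordered streams into one ordered stream.
  // Works lazily on iterators, so it also applies to unbounded inputs.
  def mergeByTime(a: Iterator[TimedEvent], b: Iterator[TimedEvent]): Iterator[TimedEvent] = {
    val left = a.buffered
    val right = b.buffered
    new Iterator[TimedEvent] {
      def hasNext: Boolean = left.hasNext || right.hasNext
      def next(): TimedEvent =
        // take from the left while it has the earlier (or equal) timestamp
        if (!right.hasNext || (left.hasNext && left.head.ts <= right.head.ts)) left.next()
        else right.next()
    }
  }
}
```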

streams & the unix philosophy

do one thing and do it well

pipe inputs and outputs

composition of small tools

let kafka be the buffer between your programs
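Between separate processes, a Kafka topic plays the role of the pipe. Inside one process, the same idea is plain function composition. A minimal sketch, where `program1`, `program2`, and `program3` are hypothetical small tools that each transform one stream into another:

```scala
object Pipes {
  // Each "program" is a small transformation from one stream to another.
  type Program[A, B] = Iterator[A] => Iterator[B]

  val program1: Program[String, String] = _.map(_.trim.toLowerCase) // normalize lines
  val program2: Program[String, String] = _.filter(_.nonEmpty)      // drop blank lines
  val program3: Program[String, Int]    = _.map(_.length)           // measure each line

  // program1 | program2 | program3, written as function composition.
  val pipeline: Program[String, Int] = program1 andThen program2 andThen program3
}
```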

program1 | program2 | program3

thinking with

distributed streams

did my message get delivered?

are my messages in order?

have I seen this before?
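With at-least-once delivery, a consumer that restarts can be handed records it already processed. One way to answer "have I seen this before?" is sketched below, assuming offsets increase within a partition and a redelivery replays earlier offsets: remember the highest offset processed per partition and skip anything at or below it. `OffsetRecord` and `Dedup` are hypothetical names.

```scala
// Hypothetical consumed record: partition number, offset within it, payload.
final case class OffsetRecord(partition: Int, offset: Long, value: String)

object Dedup {
  // Keep only records whose offset is above the highest one already seen
  // for their partition.
  def dedupe(records: Seq[OffsetRecord]): Seq[OffsetRecord] = {
    val (_, kept) = records.foldLeft((Map.empty[Int, Long], Vector.empty[OffsetRecord])) {
      case ((seen, acc), r) =>
        if (seen.get(r.partition).exists(_ >= r.offset)) (seen, acc) // already processed
        else (seen.updated(r.partition, r.offset), acc :+ r)
    }
    kept
  }
}
```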

keeping consistent

once an operation is complete, it is visible everywhere

causal consistency & atomic broadcast

eventual consistency

so long as everyone receives the same set of operations, everyone will be in the same state

just use a join semilattice

https://hal.inria.fr/file/index/docid/555588/filename/techreport.pdf -- Shapiro et al

CRDTs & distributed merge

ensure your results are monotonically increasing and your operations are commutative, associative, and idempotent
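A grow-only counter (G-Counter) is a standard example of a state-based CRDT and shows these properties concretely. The sketch below is an illustration under that assumption, not code from kafka-rx: each replica increments only its own slot, and merge takes the element-wise max, which is the join of the semilattice.

```scala
object GCounter {
  // One slot per replica; each slot only ever increases.
  type GCounter = Map[String, Long]

  def increment(c: GCounter, replica: String, by: Long = 1L): GCounter = {
    require(by >= 0, "a grow-only counter cannot decrease")
    c.updated(replica, c.getOrElse(replica, 0L) + by)
  }

  // Join: element-wise max. Max is commutative, associative, and idempotent,
  // so replicas converge regardless of delivery order or duplicates.
  def merge(a: GCounter, b: GCounter): GCounter =
    (a.keySet ++ b.keySet).map { k =>
      k -> math.max(a.getOrElse(k, 0L), b.getOrElse(k, 0L))
    }.toMap

  // The counter's value is the sum over all replica slots.
  def value(c: GCounter): Long = c.values.sum
}
```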

merge(new, old) ==

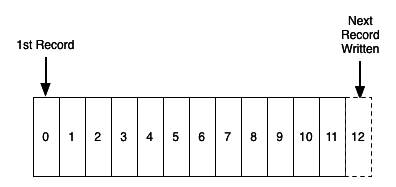

merge(old, merge(old, new))stream models

micro batch or one record at a time?

local state or distributed state?

deterministic or non-deterministic?

bounded or unbounded?

synchronized or coordination-free?
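To make the first question concrete, here is a sketch contrasting one-record-at-a-time processing with micro batches in fixed, non-overlapping (tumbling) windows. It assumes each record carries its own timestamp and windows by that timestamp over a bounded batch; `Timed`, `perRecord`, and `tumbling` here are hypothetical helpers, not kafka-rx's `.tumbling` operator.

```scala
// Hypothetical record carrying its own timestamp in milliseconds.
final case class Timed[T](tsMillis: Long, value: T)

object Windows {
  // One record at a time: apply f to each record as it arrives.
  def perRecord[T, U](in: Iterator[Timed[T]])(f: T => U): Iterator[U] =
    in.map(r => f(r.value))

  // Micro batch: assign each record to a tumbling window of windowMillis,
  // keyed by the window's start time.
  def tumbling[T](in: Seq[Timed[T]], windowMillis: Long): Map[Long, Seq[T]] =
    in.groupBy(r => r.tsMillis - Math.floorMod(r.tsMillis, windowMillis))
      .map { case (start, rs) => start -> rs.map(_.value) }
}
```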

which way is right for me?

often techniques need to be mixed and matched

streaming solutions need to compose

different systems need different solutions

total confusion - tamra davisson

a different way

push integration to the edges

program against value transformations

expose simple abstractions

treat separate concerns separately

a simple consumer

```scala
import com.cj.kafka.rx._
import scala.concurrent.duration._

new RxConsumer("zookeeper:2181", "group")
  .getMessageStream("topic")
  .tumbling(5.seconds) // group messages into consecutive 5-second windows
  .map(process)
```

unit testing w/ kafka-rx

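```scala
import org.apache.kafka.clients.producer.MockProducer
import rx.lang.scala.Observable
// toFakeRecord and MyConsumer are defined by the test; `should be` comes from ScalaTest matchers

val fakeProducer = new MockProducer()

val fakeStream =
  Observable.from(1 to 10).map(toFakeRecord)

val results =
  new MyConsumer(fakeStream)
    .produce(fakeProducer)

results.toBlocking.toList.length should be(10)
fakeProducer.history().size should be(10) // history() returns a java.util.List
```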
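The test above uses kafka-rx, RxScala, Kafka's `MockProducer`, and ScalaTest. The same idea works without any of them: if a consumer takes its input stream and its output function as parameters, a test can hand it an in-memory stream and a fake producer. `MyConsumer` and `FakeProducer` below are hypothetical stand-ins, not kafka-rx classes.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical consumer that depends only on an input stream and a send
// function, so a test can supply fakes instead of Kafka clients.
final class MyConsumer(input: Iterator[Int]) {
  def transform(n: Int): String = s"record-$n"

  // Process every input record and hand each result to `send`.
  def produce(send: String => Unit): List[String] =
    input.map(transform).map { out => send(out); out }.toList
}

// In-memory stand-in for a producer that records everything it was given.
final class FakeProducer {
  val history: ListBuffer[String] = ListBuffer.empty
  def send(record: String): Unit = history += record
}
```

Keeping Kafka at the edges like this means the transformation logic can be tested as ordinary values, with no broker or ZooKeeper running.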

abstractions

across domains

it doesn't matter who creates your data

the only thing that matters is the abstraction

it doesn't matter where it lives

systems come and go

how about distribution?

does it run on mesos? yarn? docker? aws?

concurrency is an application concern

run on one server or one hundred

fibers, futures, actors

you decide!
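As one example of leaving concurrency to the application, the sketch below processes each partition's records on its own `Future`; swapping the `ExecutionContext` changes how much runs in parallel without touching the processing code. `processPartition` is a hypothetical placeholder for real per-partition work.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

object ConcurrentPartitions {
  // Hypothetical per-partition work: sum the values in one partition.
  def processPartition(values: Seq[Int]): Int = values.sum

  // Run each partition on its own Future; the ExecutionContext decides
  // how many actually run at the same time.
  def processAll(partitions: Map[Int, Seq[Int]]): Map[Int, Int] = {
    val futures = partitions.toSeq.map { case (p, vs) =>
      Future(p -> processPartition(vs))
    }
    Await.result(Future.sequence(futures), 10.seconds).toMap
  }
}
```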

I need exactly once processing

do you really? ... well okay

```scala
val stream = ....

stream.foreach { bucket =>
  process(bucket)
  // commit the offset of the last message only after the whole bucket is processed
  bucket.last.commit(merge)
}
```

calls to commit synchronize across the consumer group

a merge callback can be provided for offset reconciliation

use idempotency first, coordination as a last resort
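A sketch of what an offset-reconciliation merge might look like. The function signature here is an assumption for illustration; kafka-rx's actual callback type may differ. It reconciles the offsets already committed for the group with the offsets this consumer proposes, taking the max per partition so a late or repeated commit never moves an offset backwards. That makes the merge idempotent, in the spirit of "idempotency first".

```scala
object OffsetMerge {
  // Offsets keyed by partition number.
  type Offsets = Map[Int, Long]

  // Hypothetical merge callback: keep the highest offset per partition.
  // Applying it again with the same proposal leaves the result unchanged.
  def merge(committed: Offsets, proposed: Offsets): Offsets =
    (committed.keySet ++ proposed.keySet).map { p =>
      p -> math.max(committed.getOrElse(p, -1L), proposed.getOrElse(p, -1L))
    }.toMap
}
```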

DEMO

TIME

natural design

relearning nature's lessons

good designs fit our needs so well that the design is invisible

― Donald A. Norman,

The Design of Everyday Things

lists + time = streams

abstractions need to compose

find faithful abstractions and try to plus them

kafka is a way to distribute streams

rx is a way to transform streams

push unbounded lists in and out of your programs

tools should help us think clearly

constraints foster creativity

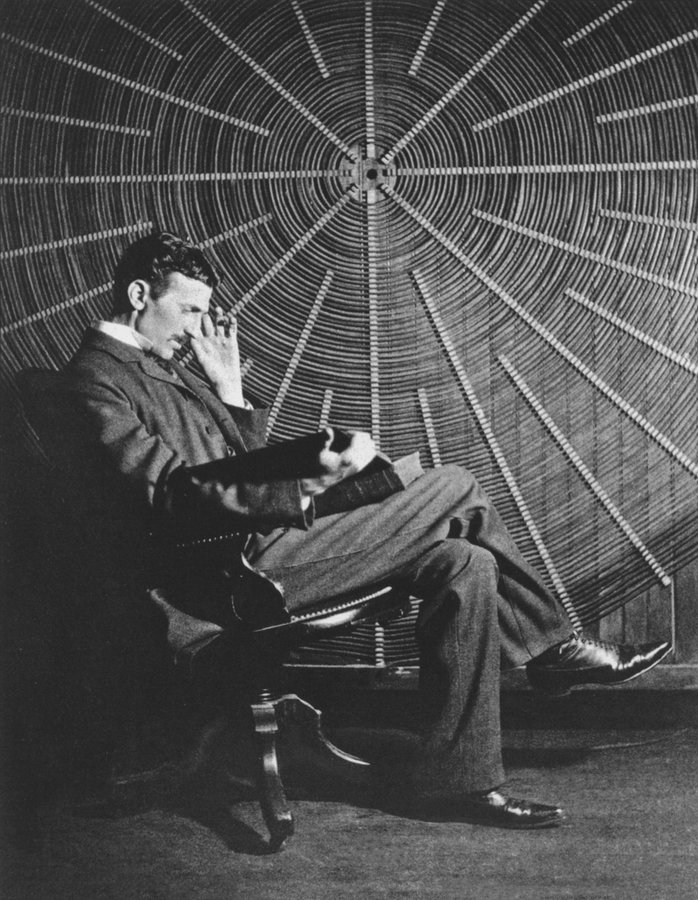

The scientists of today think deeply instead of clearly. One must be sane to think clearly, but one can think deeply and be quite insane

— Nikola Tesla

System architecture should support the addition of any feature at any time.

freedom to change

— Henrik Kniberg

Lean From the Trenches

Future Work

port to java & kafka 0.9's java consumer

rx & curator in kafka core?

api cleanup

mesos/yarn layer for consumer scaling

Questions???

Reactive Stream Processing w/ kafka-rx

By Thomas Omans
