Oplog Tailing

Our app helps test all the kids in the state for fall, winter, and spring benchmarks.

Initial Testing / August Training

- CPU high on a single Mongo instance

- Sharding required

- Sharding alleviated write locks

Initial Version: paged subscriptions with a reactive Router.page() for server-side collection paging.

This did not scale, even under a relatively small load.

Fall Benchmarks

- Complete rewrite of server-side pagination

- Subscribe to all publications at the school level

RESULT:

Using 3 shards and poll-and-diff, we got through 10% of the IA pilot schools with relatively good load times (~30 seconds on average).

BUT: there was a relatively small amount of data in the database.
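Poll-and-diff is Meteor's fallback when oplog tailing isn't available: the server re-runs each published query periodically and diffs the fresh results against the previous ones to work out what to send to clients. The Python sketch below models that idea only; `run_query` is a hypothetical stand-in for the Mongo query, and results are keyed by `_id`. Note that every poll transfers the full result set, whether or not anything changed, which is why cost grows with the amount of data.

```python
from typing import Callable, Dict, List, Tuple

Doc = Dict[str, object]


def diff_results(old: Dict[str, Doc], new: Dict[str, Doc]) -> Tuple[List[str], List[str], List[str]]:
    """Compare two result sets keyed by _id; return (added, changed, removed) ids."""
    added = [i for i in new if i not in old]
    removed = [i for i in old if i not in new]
    changed = [i for i in new if i in old and new[i] != old[i]]
    return added, changed, removed


def poll_once(run_query: Callable[[], List[Doc]], cache: Dict[str, Doc]):
    """One poll: re-run the whole (hypothetical) query, diff against the cache, refresh it."""
    fresh = {str(doc["_id"]): doc for doc in run_query()}  # full result set every time
    result = diff_results(cache, fresh)
    cache.clear()
    cache.update(fresh)
    return result


cache: Dict[str, Doc] = {}
db = [{"_id": 1, "score": 10}, {"_id": 2, "score": 20}]
print(poll_once(lambda: db, cache))  # (['1', '2'], [], [])
db = [{"_id": 1, "score": 15}, {"_id": 3, "score": 5}]
print(poll_once(lambda: db, cache))  # (['3'], ['1'], ['2'])
```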

Winter Benchmarks

- A lot more data (significantly more schools added)

- Upped to 4 shards

We started to get huge queueing problems.

Mongo was operating at 2-3K operations per second.

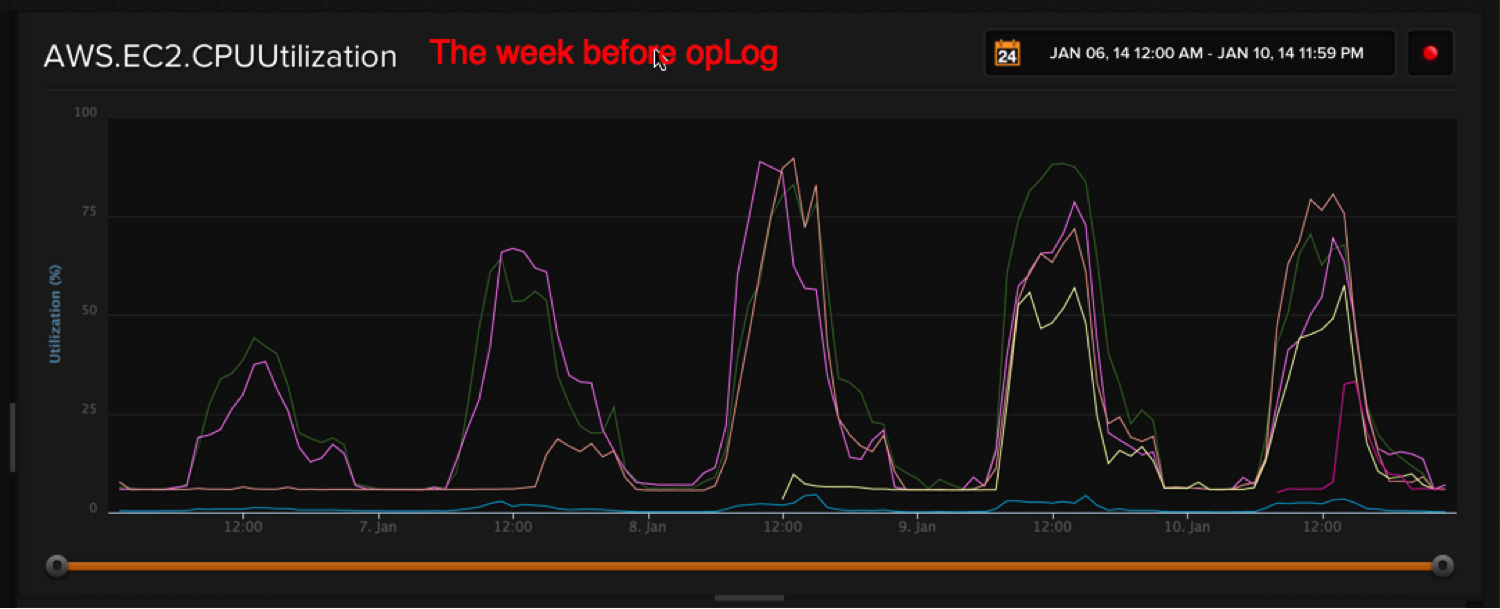

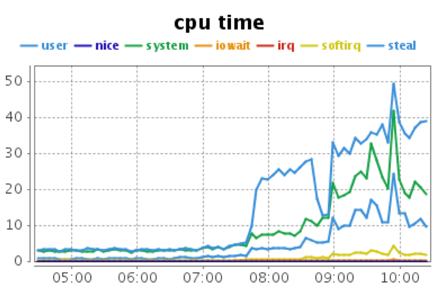

1st WEEK - One week before oplog - 4 x c1.xlarge

Web Tier

90% of users loaded sites in 154.55 seconds or less

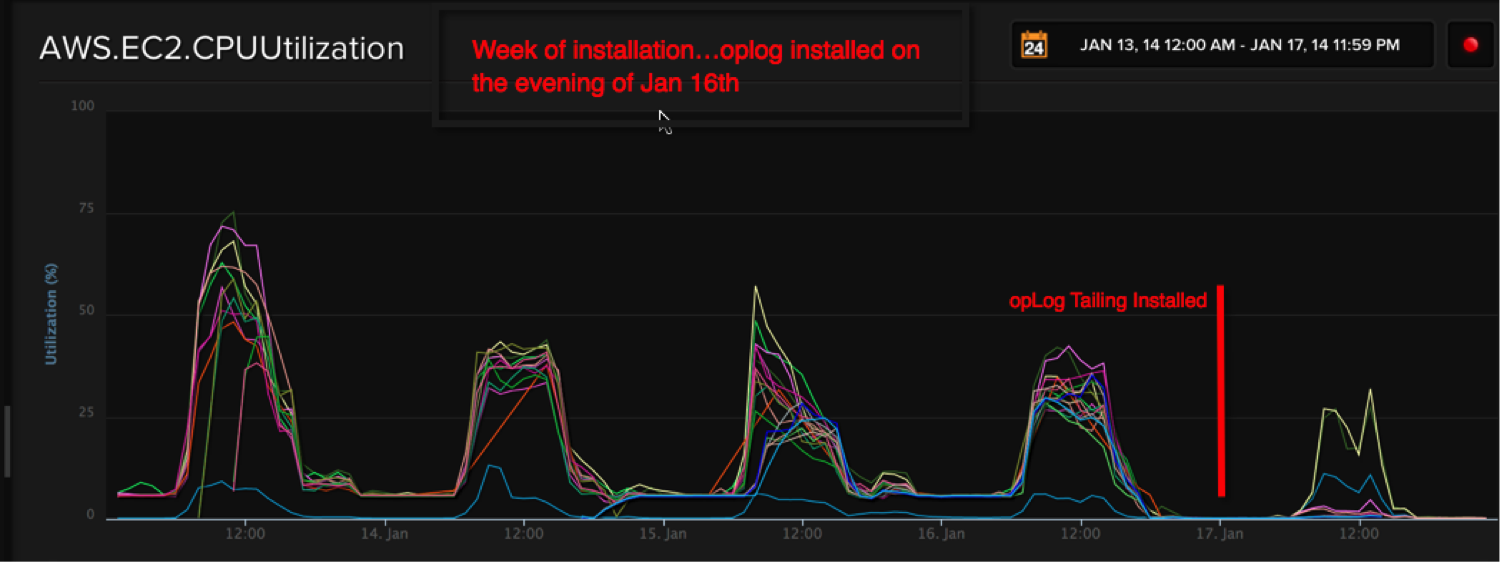

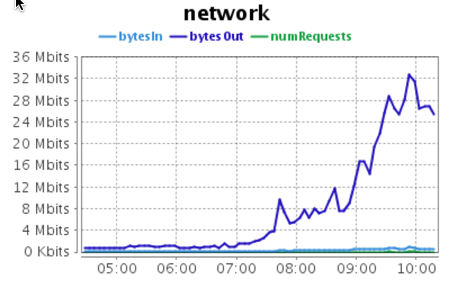
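"90% of users loaded sites in N seconds or less" is a 90th-percentile load time. The slides don't say which tool or percentile method produced these numbers; as an illustration only, here is the nearest-rank method in Python, run over made-up sample load times rather than the benchmark data.

```python
import math
from typing import Sequence


def nearest_rank_percentile(samples: Sequence[float], pct: float) -> float:
    """Smallest sample such that at least pct% of samples are <= it (nearest-rank method)."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank into the sorted samples
    return ordered[rank - 1]


# Made-up page-load times in seconds (illustrative, not the benchmark data).
load_times = [12.0, 15.5, 18.2, 20.1, 22.7, 25.0, 27.3, 30.8, 41.9, 160.4]
print(nearest_rank_percentile(load_times, 90))  # 41.9
```

A single very slow load (160.4 s here) doesn't move the 90th percentile, which is one reason it is a steadier headline number than the mean.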

Install WEEK - oplog tailing installed on Jan 17th

Jan 16th: 90% of users loaded their site in 293.12 seconds or less

Jan 17th: 90% of users loaded their site in 34.46 seconds or less

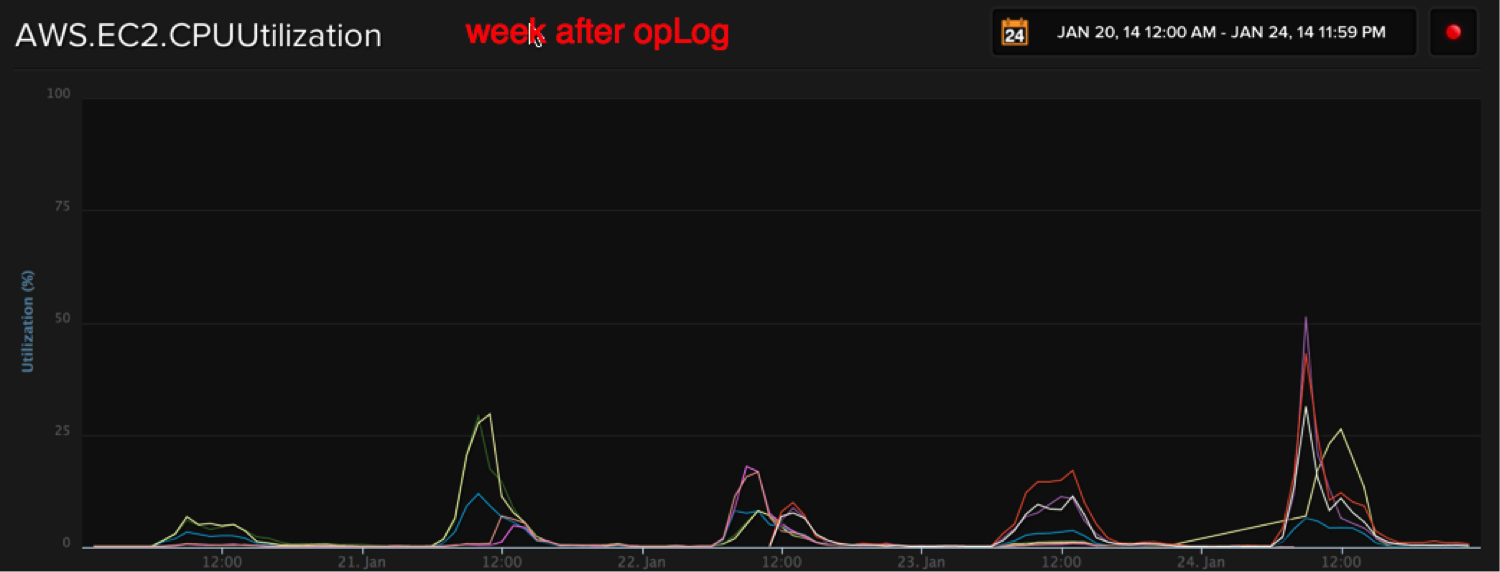
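Oplog tailing replaces repeated queries with a stream of changes: Meteor follows the MongoDB replica set's oplog and applies each insert, update, or delete to the documents it is already observing, so idle queries no longer re-fetch their results. The Python sketch below is a simplified model, not Meteor's driver. The entry fields loosely mirror MongoDB oplog fields (`op`, `ns`, `o`, `o2`), but it assumes updates carry the full replacement document, observes a whole collection with no query selector, and uses a hypothetical `app.scores` namespace.

```python
from typing import Dict, List, Tuple

Doc = Dict[str, object]


def apply_oplog(cache: Dict[object, Doc], entries: List[dict], ns: str) -> List[Tuple[str, object]]:
    """Apply simplified oplog entries for one namespace to an in-memory cache.

    Assumed (simplified, hypothetical) entry shapes:
      insert: {"op": "i", "ns": ns, "o": full_doc}
      update: {"op": "u", "ns": ns, "o2": {"_id": id}, "o": full_new_doc}
      delete: {"op": "d", "ns": ns, "o": {"_id": id}}
    Returns the change events that would be pushed to subscribed clients.
    """
    events: List[Tuple[str, object]] = []
    for entry in entries:
        if entry.get("ns") != ns:
            continue  # entries for other collections are skipped without any query
        op = entry["op"]
        if op == "i":
            doc = entry["o"]
            cache[doc["_id"]] = doc
            events.append(("added", doc["_id"]))
        elif op == "u":
            doc_id = entry["o2"]["_id"]
            if doc_id in cache:
                cache[doc_id] = entry["o"]  # full replacement, per the simplification above
                events.append(("changed", doc_id))
        elif op == "d":
            doc_id = entry["o"]["_id"]
            if cache.pop(doc_id, None) is not None:
                events.append(("removed", doc_id))
    return events


cache: Dict[object, Doc] = {}
sample_oplog = [
    {"op": "i", "ns": "app.scores", "o": {"_id": 1, "score": 10}},
    {"op": "i", "ns": "app.other", "o": {"_id": 9}},
    {"op": "u", "ns": "app.scores", "o2": {"_id": 1}, "o": {"_id": 1, "score": 12}},
    {"op": "d", "ns": "app.scores", "o": {"_id": 1}},
]
print(apply_oplog(cache, sample_oplog, "app.scores"))
# [('added', 1), ('changed', 1), ('removed', 1)]
```

Compared with the poll-and-diff model, the work here scales with the number of changes rather than with the size of every result set.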

WEEK AFTER OPLOG - Web tier up to 4 x c1.xlarge

90% of users loaded sites in 28.04 seconds or less

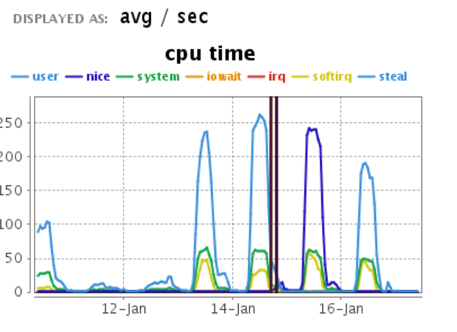

MONGO STATS

Before: ONE SSD shard at 200-250%. After: the oplog SSD replica set.

CPU down from 200-250% (on just ONE shard of four!) to 50% total.

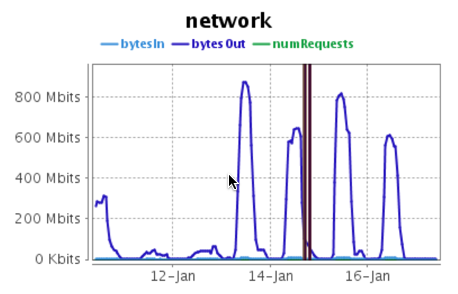

MONGO STATS

Max network throughput: 850 Mbit with poll-and-diff vs. 36 Mbit with oplog tailing.

The network proved to be the bottleneck: the NIC was capped at 1 gigabit.
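A quick back-of-the-envelope check of those numbers, assuming the Mbit figures are per-second peak rates and taking the 1 gigabit NIC cap as 1000 Mbit/s: poll-and-diff was using most of the link, while oplog tailing used a few percent of it.

```python
NIC_CAP_MBIT = 1000        # 1 gigabit NIC cap, assumed to mean 1000 Mbit/s
POLL_DIFF_PEAK_MBIT = 850  # peak with poll-and-diff (from the slide)
OPLOG_PEAK_MBIT = 36       # peak with oplog tailing (from the slide)


def utilization(mbit: float, cap: float = NIC_CAP_MBIT) -> float:
    """Fraction of the NIC's capacity used at a given throughput."""
    return mbit / cap


print(f"poll-and-diff: {utilization(POLL_DIFF_PEAK_MBIT):.1%} of NIC")  # 85.0% of NIC
print(f"oplog:         {utilization(OPLOG_PEAK_MBIT):.1%} of NIC")      # 3.6% of NIC
print(f"reduction:     {POLL_DIFF_PEAK_MBIT / OPLOG_PEAK_MBIT:.1f}x")   # 23.6x
```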

Oplog Tailing with Meteor

By timh
