Spring Cloud Stream

A new Rick & Morty adventure

I want to welcome you

to the Microverse

Spring Cloud Stream

- framework for building message-driven microservices

- builds upon Spring Boot

- based on Spring Integration

- opinionated configuration of message brokers

- persistent pub/sub semantics

- supports different middleware vendors:

  - Kafka

  - RabbitMQ

  - ActiveMQ

  - Redis

  - AWS Kinesis

Basic example

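```java
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.stream.annotation.EnableBinding;
import org.springframework.cloud.stream.annotation.StreamListener;
import org.springframework.cloud.stream.messaging.Sink;

@SpringBootApplication
@EnableBinding(Sink.class) // binds the predefined "input" channel of the Sink interface
public class VoteRecordingSinkApplication {

    // Vote and VotingService are application classes, not shown on this slide
    @Autowired
    private VotingService votingService;

    public static void main(String[] args) {
        SpringApplication.run(VoteRecordingSinkApplication.class, args);
    }

    // Invoked for every message on the input channel; the payload is converted to a Vote
    @StreamListener(Sink.INPUT)
    public void processVote(Vote vote) {
        votingService.recordVote(vote);
    }
}
```

Spring Cloud Stream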

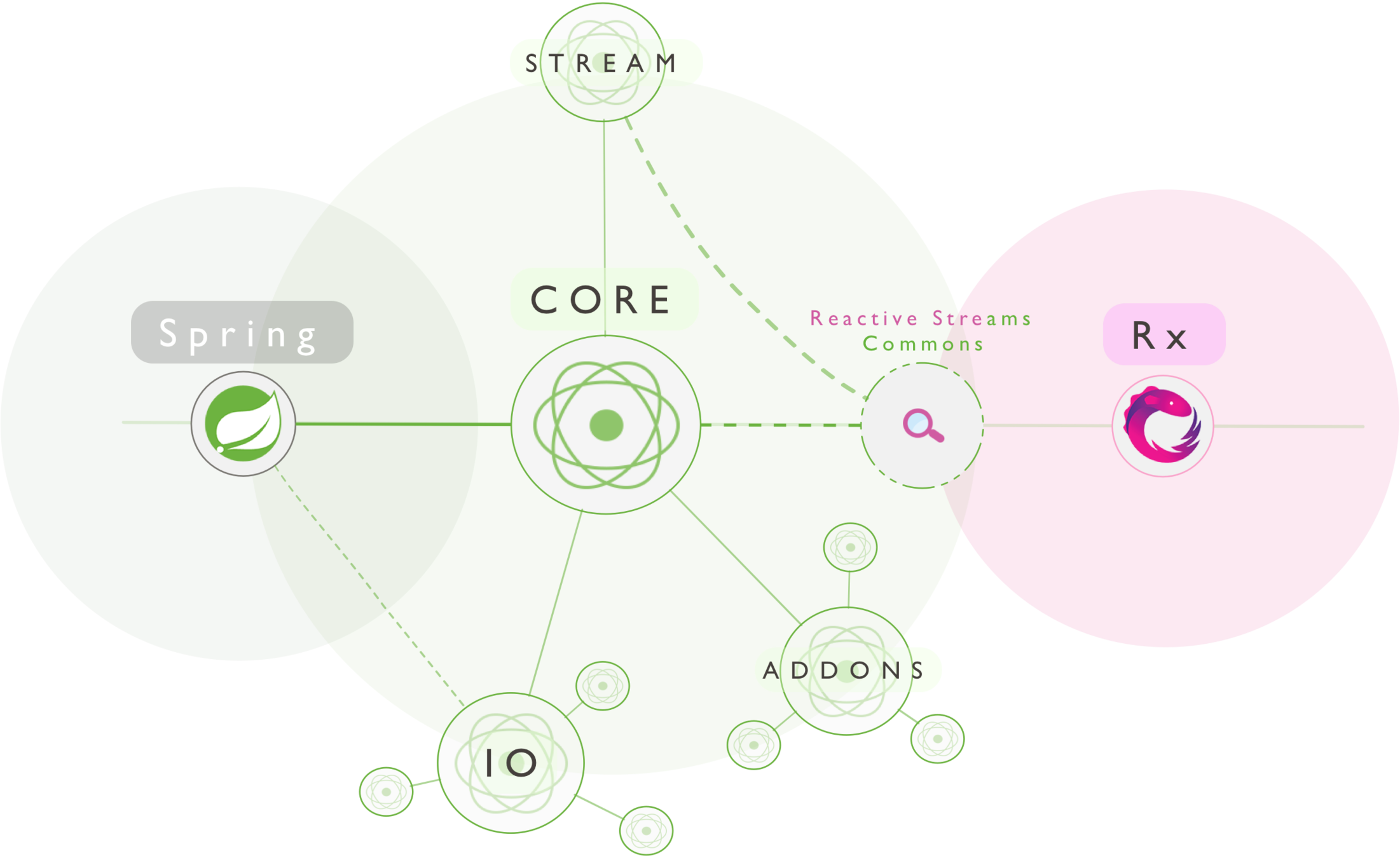

- Has reactive programming support through Reactor or RxJava

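```java
import org.springframework.boot.autoconfigure.EnableAutoConfiguration;
import org.springframework.cloud.stream.annotation.EnableBinding;
import org.springframework.cloud.stream.annotation.Input;
import org.springframework.cloud.stream.annotation.Output;
import org.springframework.cloud.stream.annotation.StreamListener;
import org.springframework.cloud.stream.messaging.Processor;
import reactor.core.publisher.Flux;

// Reactive @StreamListener methods need the spring-cloud-stream-reactive module on the classpath
@EnableBinding(Processor.class)
@EnableAutoConfiguration
public class UppercaseTransformer {

    // Receives the whole input stream as a Flux; the returned Flux is sent to the output channel
    @StreamListener
    @Output(Processor.OUTPUT)
    public Flux<String> receive(@Input(Processor.INPUT) Flux<String> input) {
        return input.map(s -> s.toUpperCase());
    }
}
```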

Example: web application with a RabbitMQ output binder

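```java
import org.springframework.cloud.stream.annotation.Output;
import org.springframework.messaging.MessageChannel;

public interface MyChannels {

    // Output binding named "some-output"; by default the broker destination has the same name
    @Output("some-output")
    MessageChannel output();
}
```

```xml
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-stream-rabbit</artifactId>
</dependency>

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>
```

When using JMS:

```properties
spring.jms.pub-sub-domain=true
```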

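```java
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.stream.annotation.EnableBinding;
import org.springframework.cloud.stream.annotation.StreamListener;
import org.springframework.messaging.support.MessageBuilder;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
@SpringBootApplication
@EnableBinding(MyChannels.class)
public class SpringCloudStreamDemoApplication {

    public static void main(String[] args) {
        SpringApplication.run(SpringCloudStreamDemoApplication.class, args);
    }

    @Autowired
    private MyChannels myChannels;

    // A GET request with a "message" query parameter publishes its value to "some-output"
    @GetMapping(params = "message")
    public void sendMessage(@RequestParam("message") String message) {
        this.myChannels.output().send(MessageBuilder.withPayload(message).build());
    }

    // Subscribes to the channel bound as "some-output" and prints each payload
    @StreamListener("some-output")
    public void receiveMessage(String message) {
        System.out.println(message);
    }
}
```

Web application with a RabbitMQ output binder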

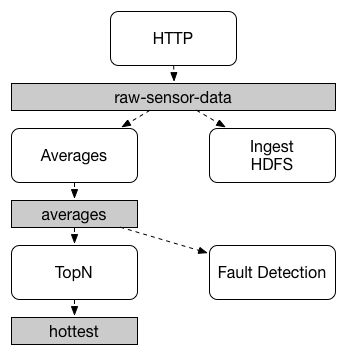

Advantages

of pub-sub style communication

- data is broadcast through shared topics

- reduces complexity for both producers and consumers

- new consumers can easily be added without disturbing existing ones

- reduces coupling between microservices

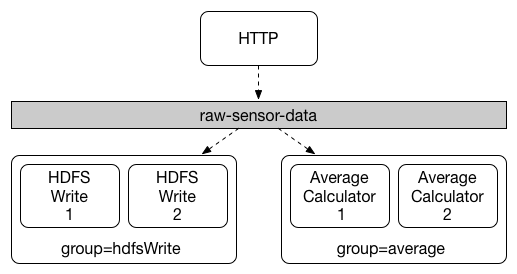
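To make the broadcast idea concrete, here is a toy in-memory topic in plain Java. It is not the Spring Cloud Stream or RabbitMQ API. `InMemoryTopic` and `PubSubDemo` are hypothetical names used only for illustration. The sketch shows that a consumer added later simply starts receiving messages, and neither the producer nor the existing consumer has to change.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical toy topic (illustration only, not a broker or binder API):
// every subscriber receives every message published after it subscribed.
class InMemoryTopic<T> {
    private final List<Consumer<T>> subscribers = new ArrayList<>();

    // Adding a subscriber needs no change to the producer or to other subscribers
    void subscribe(Consumer<T> subscriber) {
        subscribers.add(subscriber);
    }

    // Broadcast: each subscriber gets its own copy of the message
    void publish(T message) {
        for (Consumer<T> subscriber : subscribers) {
            subscriber.accept(message);
        }
    }
}

class PubSubDemo {
    public static void main(String[] args) {
        InMemoryTopic<String> votes = new InMemoryTopic<>();
        List<String> recorder = new ArrayList<>();
        List<String> auditor = new ArrayList<>();

        votes.subscribe(recorder::add);
        votes.publish("vote-1");

        // A second consumer joins later; the publishing code stays the same
        votes.subscribe(auditor::add);
        votes.publish("vote-2");

        System.out.println(recorder); // [vote-1, vote-2]
        System.out.println(auditor);  // [vote-2]
    }
}
```

A real broker adds persistence and delivery guarantees on top of this. The decoupling shown here, where the producer never needs to know who is listening, is the part the slide emphasises.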

Consumer groups

splitting up the workload

- similar to Kafka consumer groups

- every group that subscribes to a given destination receives a copy of the published data

- only one member of each group receives a given message

- default behaviour: every instance is an anonymous, single-member consumer group
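The consumer-group rules above can be modelled in a few lines of plain Java. This is a simplified sketch and not how the binder is implemented. `GroupedTopic` is a hypothetical class. Members of a group take turns (round-robin) here for simplicity, whereas the real distribution is decided by the broker, for example Kafka partition assignment or competing consumers on a RabbitMQ queue.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical toy model of consumer groups (illustration only):
// every group gets a copy of each message, but only one member per group handles it.
class GroupedTopic {
    // group name -> member names, in join order
    private final Map<String, List<String>> groups = new LinkedHashMap<>();
    // group name -> counter used to pick the next member (round-robin)
    private final Map<String, Integer> next = new LinkedHashMap<>();
    private int anonymousCount = 0;

    void join(String group, String member) {
        groups.computeIfAbsent(group, g -> new ArrayList<>()).add(member);
        next.putIfAbsent(group, 0);
    }

    // Default behaviour: an instance without a group becomes its own single-member group
    void joinAnonymous(String member) {
        join("anonymous." + (anonymousCount++), member);
    }

    // Delivers the message to exactly one member of every group; returns "member<-message" entries
    List<String> publish(String message) {
        List<String> deliveries = new ArrayList<>();
        for (Map.Entry<String, List<String>> entry : groups.entrySet()) {
            List<String> members = entry.getValue();
            int i = next.get(entry.getKey());
            deliveries.add(members.get(i % members.size()) + "<-" + message);
            next.put(entry.getKey(), i + 1);
        }
        return deliveries;
    }
}

class ConsumerGroupDemo {
    public static void main(String[] args) {
        GroupedTopic topic = new GroupedTopic();
        topic.join("meeseeks", "meeseeks-1");   // hypothetical group and instance names
        topic.join("meeseeks", "meeseeks-2");
        topic.joinAnonymous("rick");

        System.out.println(topic.publish("open jar"));   // [meeseeks-1<-open jar, rick<-open jar]
        System.out.println(topic.publish("golf swing")); // [meeseeks-2<-golf swing, rick<-golf swing]
    }
}
```

In Spring Cloud Stream itself, an instance joins a named group through the binding's `group` property (`spring.cloud.stream.bindings.<channelName>.group`). Leaving it unset gives the anonymous, single-member behaviour.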

Use case: Szechuan sauce

Rick

Genius. Grandpa. Asshole.

- Morty's grandfather

- inventor of:

  - the Microverse

  - interdimensional travel

  - a butter-passing robot

- wants more Szechuan sauce

- too lazy to look for it himself


Diagram: Rick microservice connected through the RabbitMQ binder to RabbitMQ (microverse, meeseeks, rick)

Meeseeks

Existence is pain.

- invented by Rick

- summoned by Meeseeks Box

- magical creature

- spawned for a single purpose

- disappears once task is complete


Diagram: Meeseeks microservice connected through the RabbitMQ binder to RabbitMQ (microverse, rick, mcdonalds, meeseeks)

McDonalds

One in five hundred chance to win.

Diagram: McDonalds microservice connected through the RabbitMQ binder to RabbitMQ (meeseeks, mcdonalds)

Morty

The perfect sidekick.

Diagram: Morty microservice connected through the RabbitMQ binder to RabbitMQ (rick, meeseeks, mcdonalds, microverse)

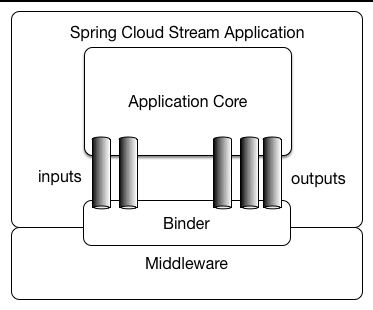

Architecture

RabbitMQ

Scale up/down

The Microverse

Time for action

Spring Cloud Stream 2.0

What's new?

Polled consumers

consume messages when you feel like it
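The pull model on this slide can be illustrated without any Spring dependency. `ToyPollableSource` is a hypothetical stand-in that mirrors the idea behind Spring Cloud Stream's `PollableMessageSource.poll(handler)`. The application decides when to ask for a message, and `poll` returns `false` when nothing is waiting. This is a sketch, not the binder's implementation.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.Consumer;

// Hypothetical pull-style source (illustration only, not the Spring API):
// messages wait in a queue until the consumer chooses to poll.
class ToyPollableSource<T> {
    private final Queue<T> pending = new ArrayDeque<>();

    // Stands in for the broker delivering a message to the destination
    void offer(T message) {
        pending.add(message);
    }

    // Hands at most one message to the handler; returns false if none was available
    boolean poll(Consumer<T> handler) {
        T message = pending.poll();
        if (message == null) {
            return false; // nothing to consume right now
        }
        handler.accept(message);
        return true;
    }
}

class PollingDemo {
    public static void main(String[] args) {
        ToyPollableSource<String> source = new ToyPollableSource<>();
        List<String> handled = new ArrayList<>();
        source.offer("recharge portal gun");

        System.out.println(source.poll(handled::add)); // true
        System.out.println(source.poll(handled::add)); // false: nothing left to consume
        System.out.println(handled);                   // [recharge portal gun]
    }
}
```

Because the consumer only fetches when it polls, it can apply its own back-pressure, for example by polling only while it has spare capacity.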

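```java
import org.springframework.cloud.stream.annotation.Input;
import org.springframework.cloud.stream.annotation.Output;
import org.springframework.cloud.stream.binder.PollableMessageSource;
import org.springframework.messaging.MessageChannel;

public interface PolledConsumer {

    // Pollable input: the application calls poll(handler) whenever it wants the next message
    @Input
    PollableMessageSource destIn();

    @Output
    MessageChannel destOut();
}
```

Micrometer support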

export all metrics to any Micrometer-compatible registry, such as Prometheus

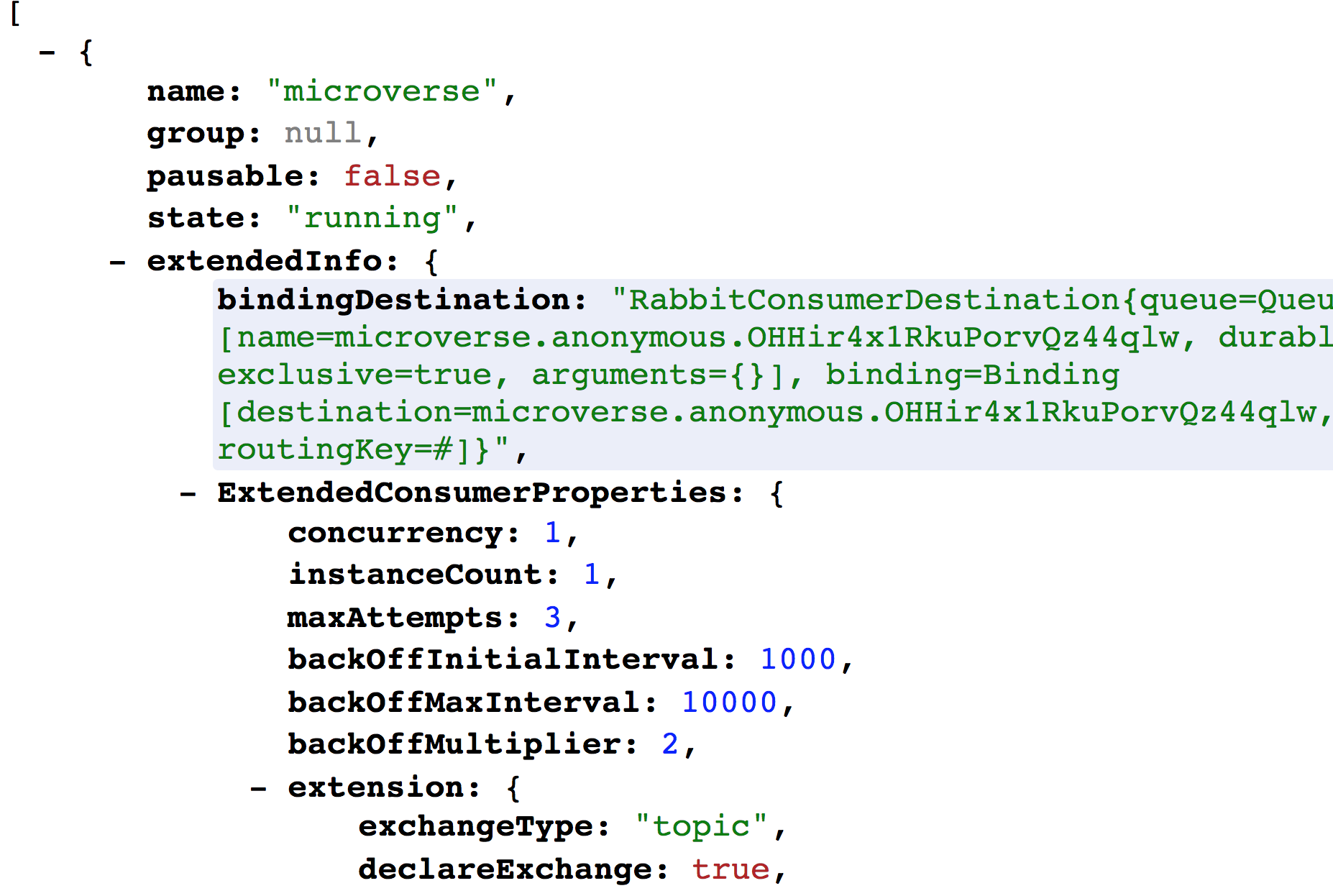

Actuator binding controls

allow both the visualisation and control of the bindings lifecycle

Actuator and web dependencies are now optional

run your streaming microservice simply as a process

Enhanced support for Kafka Streams

- enhanced interop between Kafka Streams and regular Kafka bindings

- multiple Kafka Streams types (KStream, KTable) as bindable components

- interactive-query support
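The word-count processor on this slide counts words in 5-second tumbling windows (`TimeWindows.of(5000)`). Here is a rough plain-Java sketch of that windowing arithmetic, with no Kafka Streams involved. `TumblingWordCount` is a hypothetical name. Each record is assigned to the window starting at `timestamp - timestamp % 5000`, and counts are kept per (window, word). Late records, retention and state stores are ignored.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical plain-Java illustration of a tumbling-window word count (not Kafka Streams)
class TumblingWordCount {
    static final long WINDOW_MS = 5000;

    // timestamps[i] (epoch millis) belongs to lines[i]; result key is "windowStart|word"
    static Map<String, Long> count(long[] timestamps, String[] lines) {
        Map<String, Long> counts = new TreeMap<>();
        for (int i = 0; i < lines.length; i++) {
            // Tumbling windows are aligned to multiples of WINDOW_MS: [0, 5000), [5000, 10000), ...
            long windowStart = timestamps[i] - (timestamps[i] % WINDOW_MS);
            for (String word : lines[i].toLowerCase().split("\\W+")) {
                if (word.isEmpty()) {
                    continue; // split() yields a leading empty token when a line starts with a non-word char
                }
                counts.merge(windowStart + "|" + word, 1L, Long::sum);
            }
        }
        return counts;
    }
}

class WindowDemo {
    public static void main(String[] args) {
        Map<String, Long> counts = TumblingWordCount.count(
                new long[] {1000, 4000, 6000},
                new String[] {"Wubba lubba", "wubba dub", "Wubba"});
        System.out.println(counts); // {0|dub=1, 0|lubba=1, 0|wubba=2, 5000|wubba=1}
    }
}
```

The records at 1000 ms and 4000 ms share the window starting at 0, so "wubba" is counted twice there. The record at 6000 ms opens the next window.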

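```java
import java.util.Arrays;
import java.util.Date;

import org.apache.kafka.streams.KeyValue;
import org.apache.kafka.streams.kstream.KStream;
import org.apache.kafka.streams.kstream.Materialized;
import org.apache.kafka.streams.kstream.TimeWindows;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.stream.annotation.EnableBinding;
import org.springframework.cloud.stream.annotation.StreamListener;
import org.springframework.cloud.stream.binder.kafka.streams.annotations.KStreamProcessor;
import org.springframework.messaging.handler.annotation.SendTo;

@SpringBootApplication
@EnableBinding(KStreamProcessor.class)
public class WordCountProcessorApplication {

    // WordCount is a simple value class (word, count, window start, window end), not shown on this slide
    @StreamListener("input")
    @SendTo("output")
    public KStream<?, WordCount> process(KStream<?, String> input) {
        return input
                // split each line into lowercase words
                .flatMapValues(value -> Arrays.asList(value.toLowerCase().split("\\W+")))
                // re-key by word so that equal words are counted together
                .groupBy((key, value) -> value)
                // 5-second tumbling windows
                .windowedBy(TimeWindows.of(5000))
                // count per word and window, materialized in the "WordCounts-multi" state store
                .count(Materialized.as("WordCounts-multi"))
                .toStream()
                .map((key, value) -> new KeyValue<>(null, new WordCount(key.key(), value,
                        new Date(key.window().start()), new Date(key.window().end()))));
    }

    public static void main(String[] args) {
        SpringApplication.run(WordCountProcessorApplication.class, args);
    }
}
```

Links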

Questions?

Copy of Spring Cloud Stream - A new Rick & Morty adventure

By Dieter Hubau


My presentation on Spring Cloud Stream for Ordina JOIN event
