Coding Guideline

Niels & Qiang

Purpose

HIVE8

PEP8

Zen of Python

- Readability counts.

- Beautiful is better than ugly.

- Explicit is better than implicit.

Best point

- PR

- Always changes

- Doc

- Quality - Debt - Redo

Niels

Qiang

Structure

- Imports

- Naming conventions

- Break long lines

- Comments

- Miscellaneous

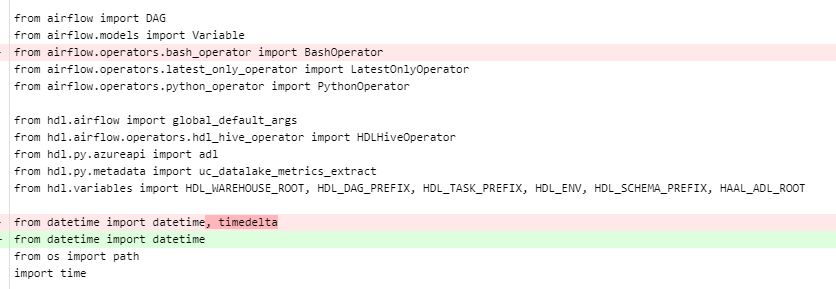

Imports

- Grouping imports

- Order alphabetically

- Break long lines

Not recommended

import pendulum from airflow import DAG from hdl.airflow import global_default_args from datetime import datetime

Recommended

from datetime import datetime from airflow import DAG import pendulum from hdl.airflow import global_default_args

Grouping imports

Not recommended

from hdl.variables import HDL_DAG_PREFIX, ... from hdl.variables import HAAL_ADL_ROOT from hdl.airflow.sensors.adf_activity_run_sensor import ADFActivityRunSensor from hdl.py.dl_raw import partitioned from hdl.airflow.operators.hdl_hive_operator import HDLHiveOperator from hdl.airflow.sensors.hdl_table_source_file_is_ready_sensor import HDLTableSourceFileIsReadySensor from hdl.py.dl_raw import unpartitioned from hdl.airflow.operators.hdl_access_rights_operator import HDLAccessRightsOperator from hdl.airflow.operators.hdl_load_status_operator import HDLLoadStatusOperator from hdl.airflow.operators.hdl_compute_stats_operator import HDLComputeStatsOperator from hdl.airflow import global_default_args

Recommended

from hdl.airflow import global_default_args from hdl.airflow.operators.hdl_access_rights_operator import HDLAccessRightsOperator from hdl.airflow.operators.hdl_compute_stats_operator import HDLComputeStatsOperator from hdl.airflow.operators.hdl_hive_operator import HDLHiveOperator from hdl.airflow.operators.hdl_load_status_operator import HDLLoadStatusOperator from hdl.airflow.sensors.adf_activity_run_sensor import ADFActivityRunSensor from hdl.airflow.sensors.hdl_table_source_file_is_ready_sensor import HDLTableSourceFileIsReadySensor from hdl.py.dl_raw import partitioned from hdl.py.dl_raw import unpartitioned from hdl.variables import HDL_DAG_PREFIX, ...

Order alphabetically

Not recommended

from hdl.variables import HDL_DAG_PREFIX, HDL_SCHEMA_PREFIX, HDL_WAREHOUSE_ROOT, DATA_FACTORY_V2, \ DATA_FACTORY_V1, HDL_TASK_PREFIX, HDL_INGRESS_ROOT, HDL_ENV_NAME, LOCAL_TZ

Recommended

from hdl.variables import ( DATA_FACTORY_V1, DATA_FACTORY_V2, HDL_DAG_PREFIX, HDL_INGRESS_ROOT, HDL_SCHEMA_PREFIX, HDL_TASK_PREFIX, HDL_WAREHOUSE_ROOT, LOCAL_TZ, )

Break long lines

Naming Conventions

- Constants

- Avoid using abbreviations

Not recommended

tuple_fields = "hdl_target_schema_name ..."

Recommended

TUPLE_FIELDS = "hdl_target_schema_name ..."

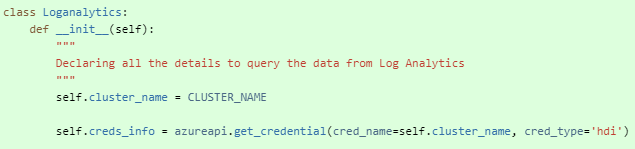

Constants

Not recommended

self.creds_info = azureapi.get_credential(cred_name=self.cluster_name, cred_type="hdi")

Recommended

self.credential_info = azureapi.get_credential( credential_name=self.cluster_name, credential_type="hdi", )

Avoid using abbreviations

Break long lines

- Strings

- Arrays

- Parameters

- List comprehensions

Not recommended

PartitionedTable = collections.namedtuple("PartitionedTable", ("hdl_target_schema_name hdl_target_table_name adf_activity_name adf_name " "adf_pipeline_name do_not_deduplicate primary_key_columns_list " "primary_key_sort_columns_list on_insert_sort_by min_adf_completion_count"))

Recommended

PartitionedTable = collections.namedtuple( "PartitionedTable", " ".join([ "adf_name", "adf_activity_name", "adf_pipeline_name", "do_not_deduplicate", "hdl_target_schema_name", "hdl_target_table_name", "min_adf_completion_count", "on_insert_sort_by", "primary_key_columns_list", "primary_key_sort_colums_list", ]) )

Strings

Not recommended

my_array = [element1,

element2,

element3,

]

Recommended

my_array = [ element1, element2, # ... element4, ]

my_array = [ element1, element2, # ... element4]

Arrays

Not recommended

def my_methods(param1, param2, param3, ):

Recommended

def my_methods( param1, param2, param3, ):

def my_methods( param1, param2, param3):

def my_methods(param1, param2, ):

Parameters

Not recommended

articles_ids = [ articles["id"] for articles in articles_list if articles["type"] == "A" ]

Recommended

articles_ids = [ articles["headid"] for articles in articles_list if articles["type"] == "A" ]

List Comprehensions

Comments

- Write complete sentences

- One-line Docstrings

- Multi-line Docstrings

- None return or parameters

Not recommended

# defining skip function def skip_fn(*args, **kwargs): ...

Recommended

# Define a skip function. def skip_fn(*args, **kwargs): ...

Write complete sentences

Not recommended

def skip_fn(*args, **kwargs): """ Define a placeholder function for the skip operator. """ return True

Recommended

def skip_fn(*args, **kwargs): """Define a placeholder function for the skip operator.""" return True

One-line Docstrings

Not recommended

def compute_params(context): """ Passing date and environment parameters to ADF Pipeline :param context: ... :return: pDayPath, pMonthPath, pYearPath, pDatePath

Recommended

def compute_params(context): """Pass the date and environment parameters to ADF Pipeline. :param context: ... :return: pDayPath, pMonthPath, pYearPath, pDatePath

Multi-line Docstrings

Not recommended

def skip_fn(*args, **kwargs): """Placeholder function for the skip operator. :param args: :param kwargs: """ return True

Recommended

def skip_fn(*args, **kwargs): """Placeholder function for the skip operator.""" return True

None return or parameters

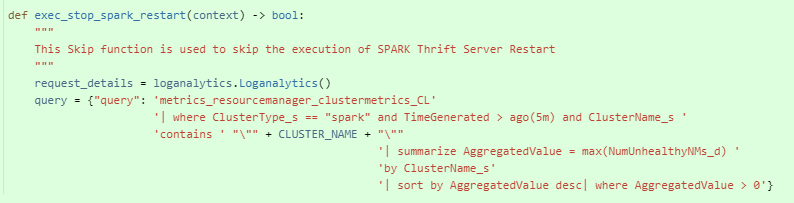

Miscellaneous

- Mix of single and double quote

- Magic number

Not recommended

query = {"query": 'metrics_resourcemanager_clustermetrics_CL' '| where ClusterType_s == "spark" and TimeGenerated > ago(5m) and ClusterName_s ' 'contains ' "\"" + CLUSTER_NAME + "\"" '| sort by AggregatedValue desc| where AggregatedValue > 0'}

Recommended

query = """metrics_resourcemanager_clustermetrics_CL | where ClusterType_s == "spark" and TimeGenerated > ago(5m) and ClusterName_s contains "{CLUSTER_NAME}" | sort by AggregatedValue desc| where AggregatedValue > 0 """.format(CLUSTER_NAME=CLUSTER_NAME)

query = {"query": '...' '(contains ' "\"" + CLUSTER_NAME_A + "\"" ' or ' "\"" + CLUSTER_NAME_B + "\"" ' or' "\"" + CLUSTER_NAME_C + "\")"

'...'}

query = """metrics_resourcemanager_clustermetrics_CL | where ClusterType_s == "spark" and TimeGenerated > ago(5m) | and ( ClusterName_s contains "{}" or "{}" or"{}" ) | sort by AggregatedValue desc| where AggregatedValue > 0 """.format( CLUSTER_NAME_A,

CLUSTER_NAME_B,

CLUSTER_NAME_C,

)

Mix of single and double quote

Not recommended

LOAD = HDLPartitionedDecentralizedDeltaInsertOperator( num_partitions_per_batch=100, max_partitions_in_total=600, megabytes_per_reducer_on_finalize=128, )

Recommended

PARTITIONED_TABLE = PartitionedTable( num_partitions_per_batch=100, max_partitions_in_total=600, megabytes_per_reducer_on_finalize=128, )

LOAD = HDLPartitionedDecentralizedDeltaInsertOperator( num_partitions_per_batch=PARTITIONED_TABLE.num_partitions_per_batch, max_partitions_in_total=PARTITIONED_TABLE.max_partitions_in_total, megabytes_per_reducer_on_finalize=PARTITIONED_TABLE.megabytes_per_reducer_on_finalize, )

Magic number

Materials

- PPT

- Github

- HAAL doc

Questions?

Import

Avoid using abbreviations

Mix single/double quote

PEP8

By Qiang MENG

PEP8

- 4