Speech Project

Week-6 Progress Report

B02901054 方為

B02901085 徐瑞陽

Kaldi Project

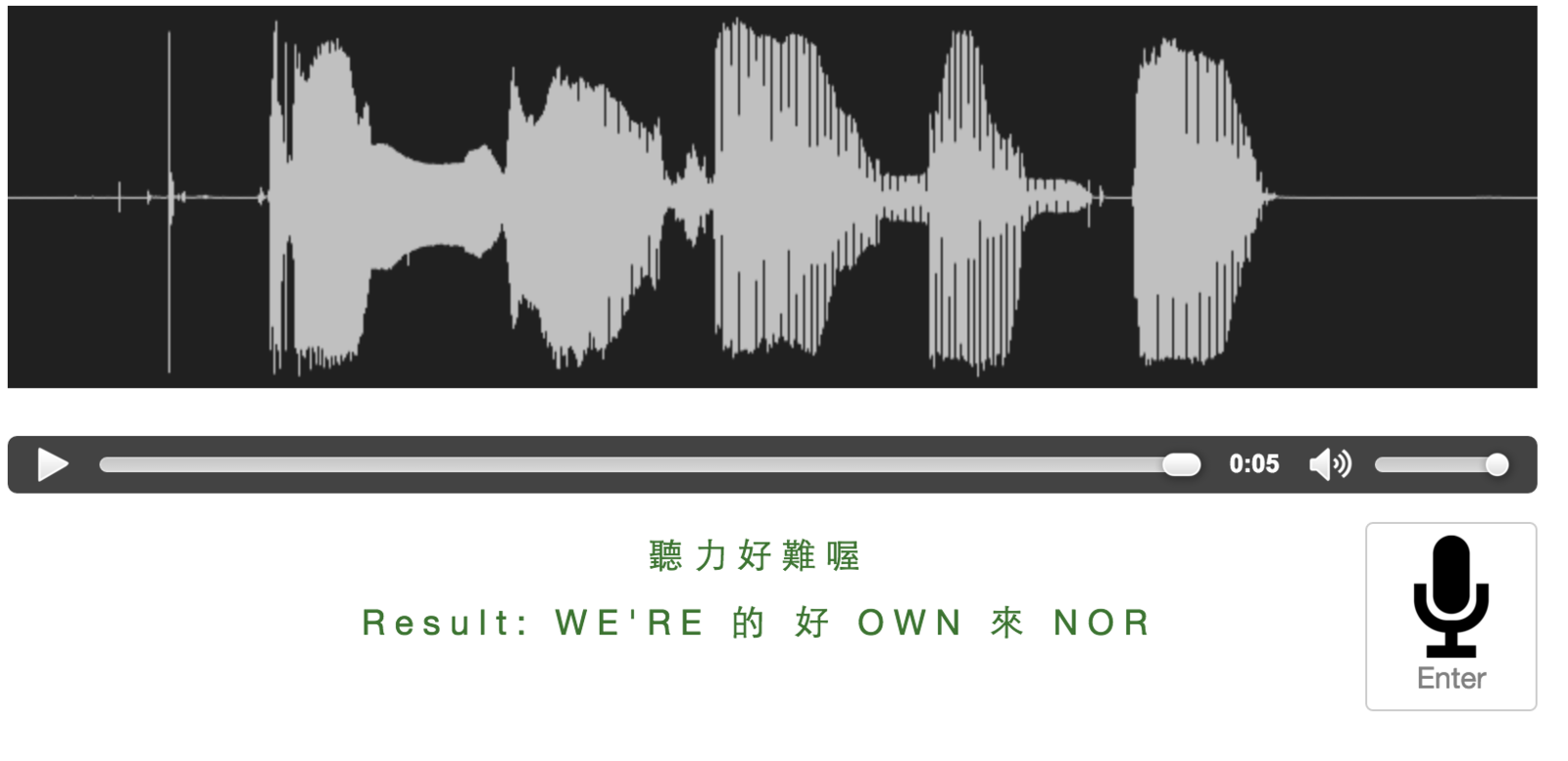

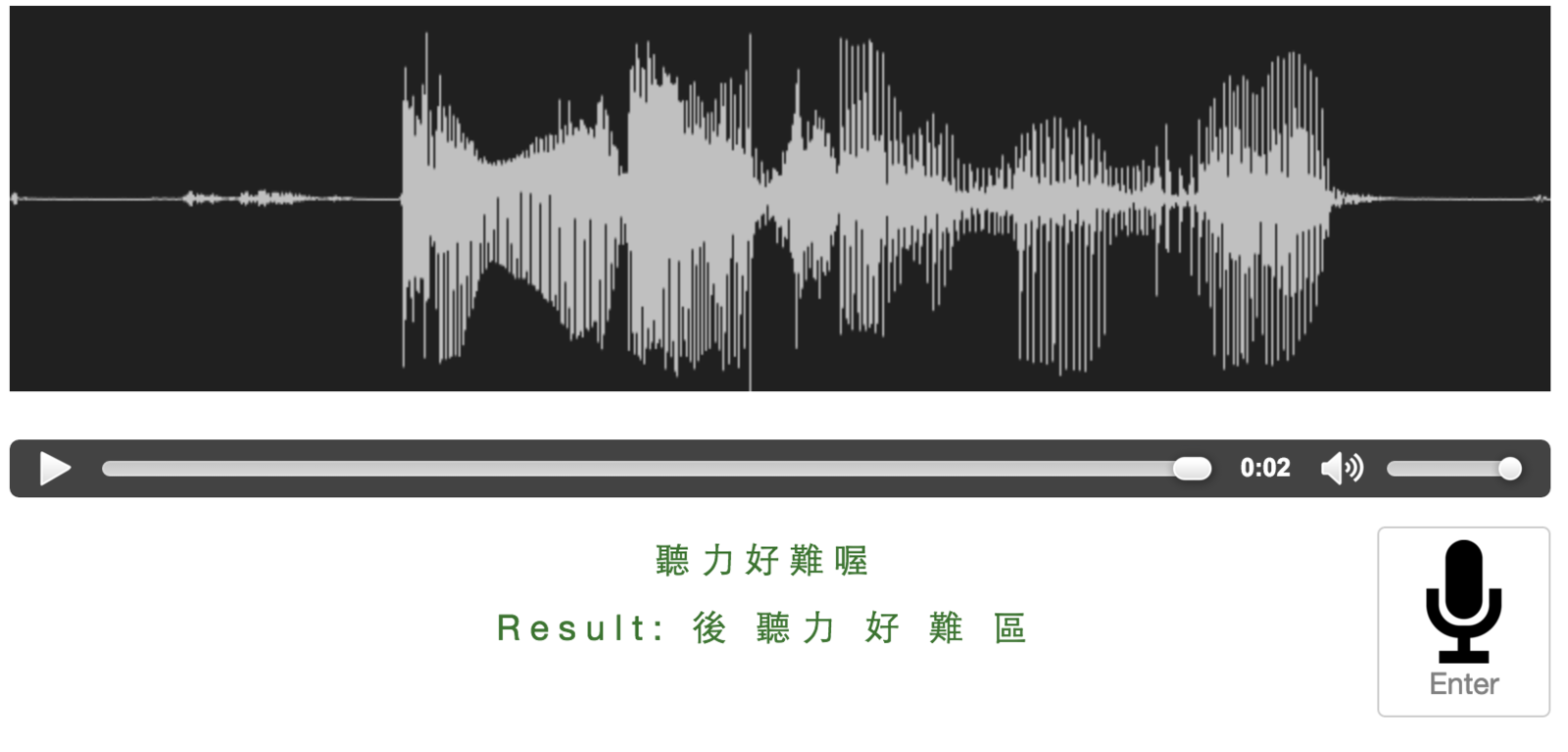

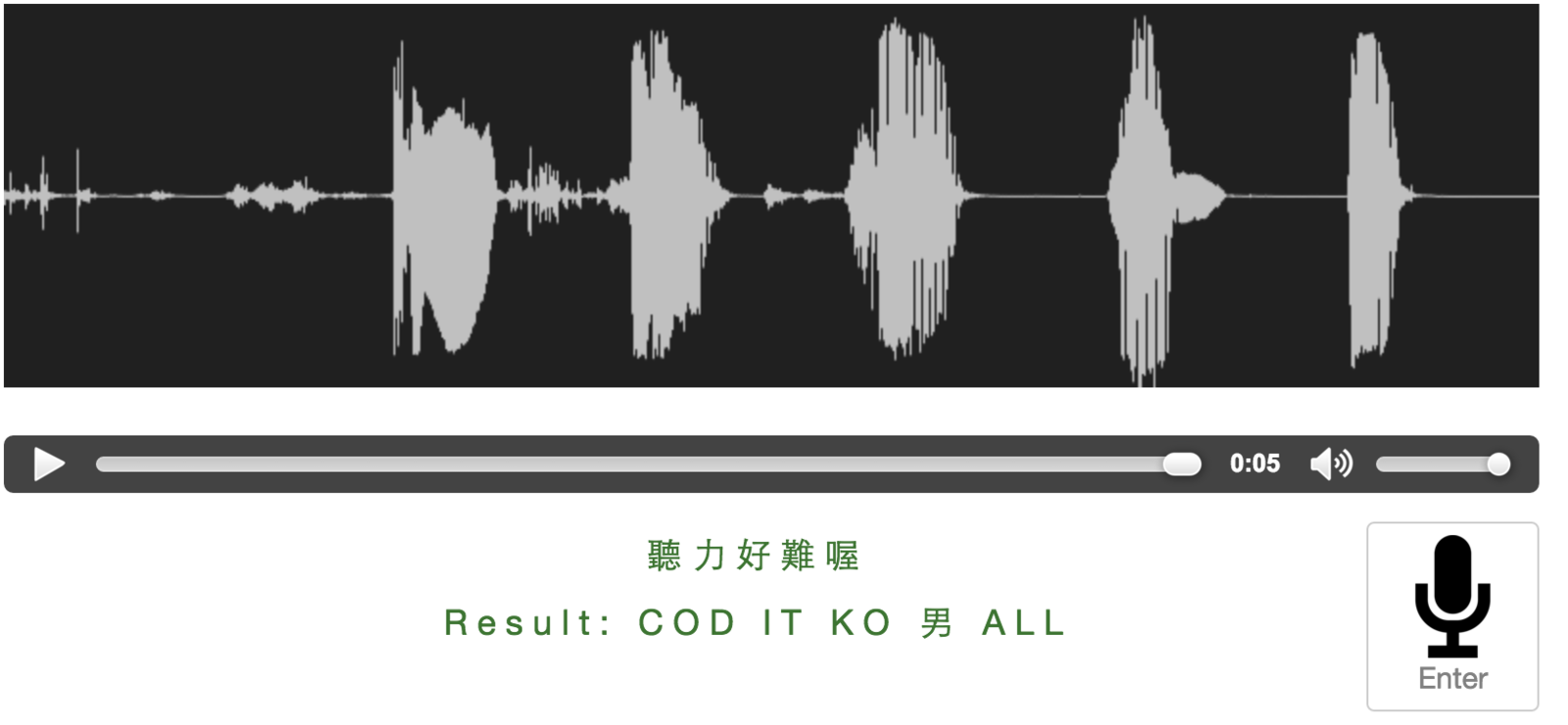

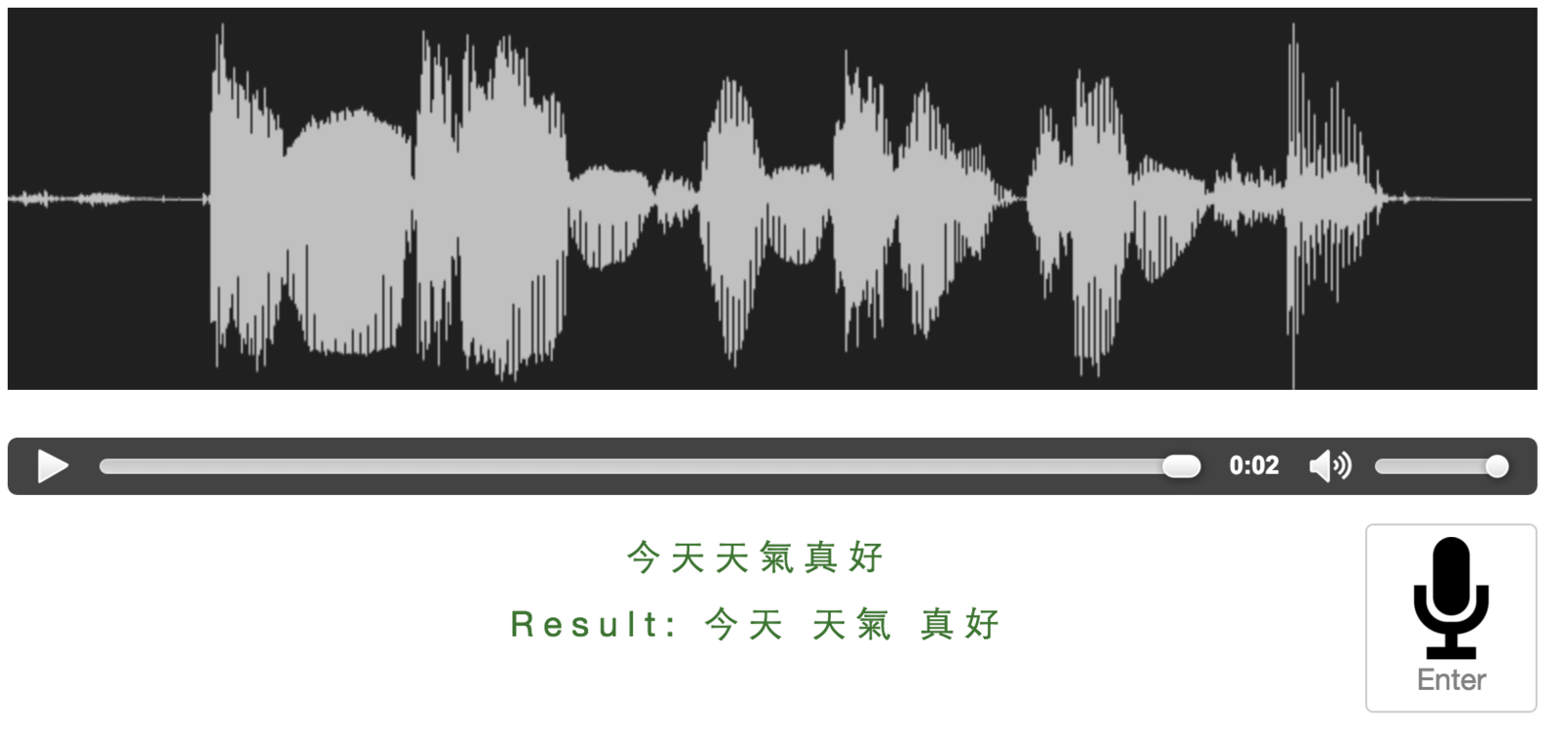

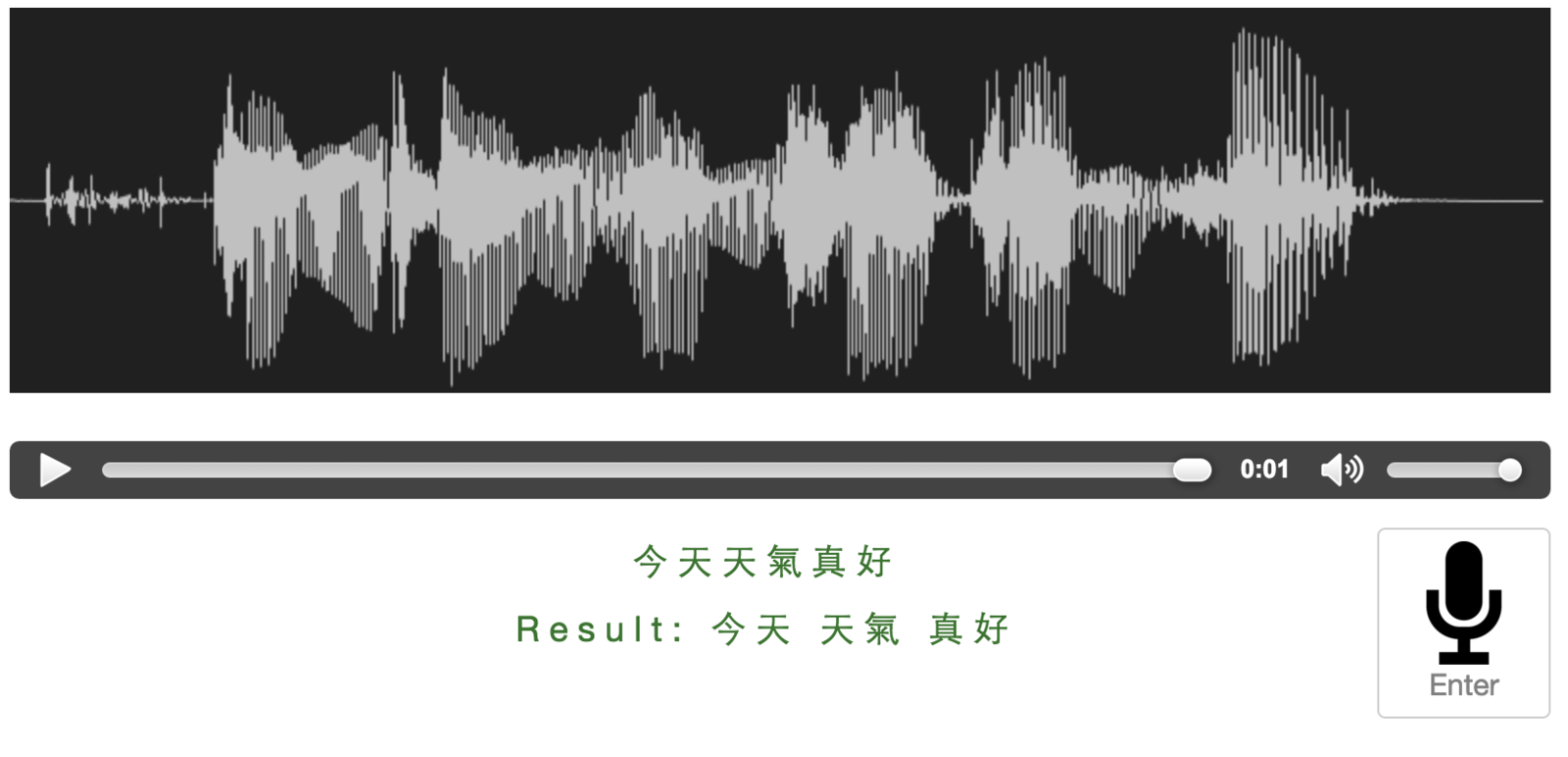

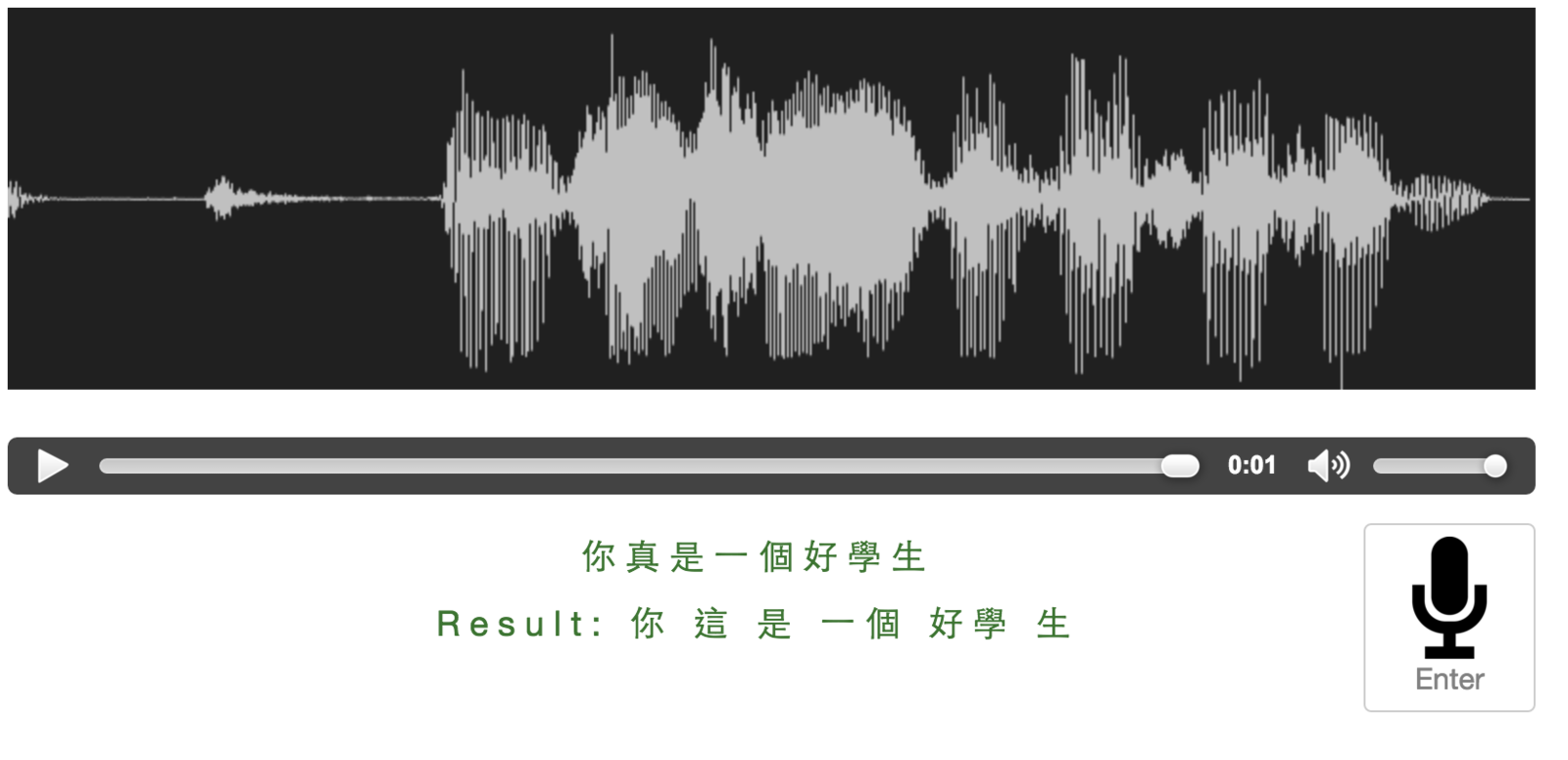

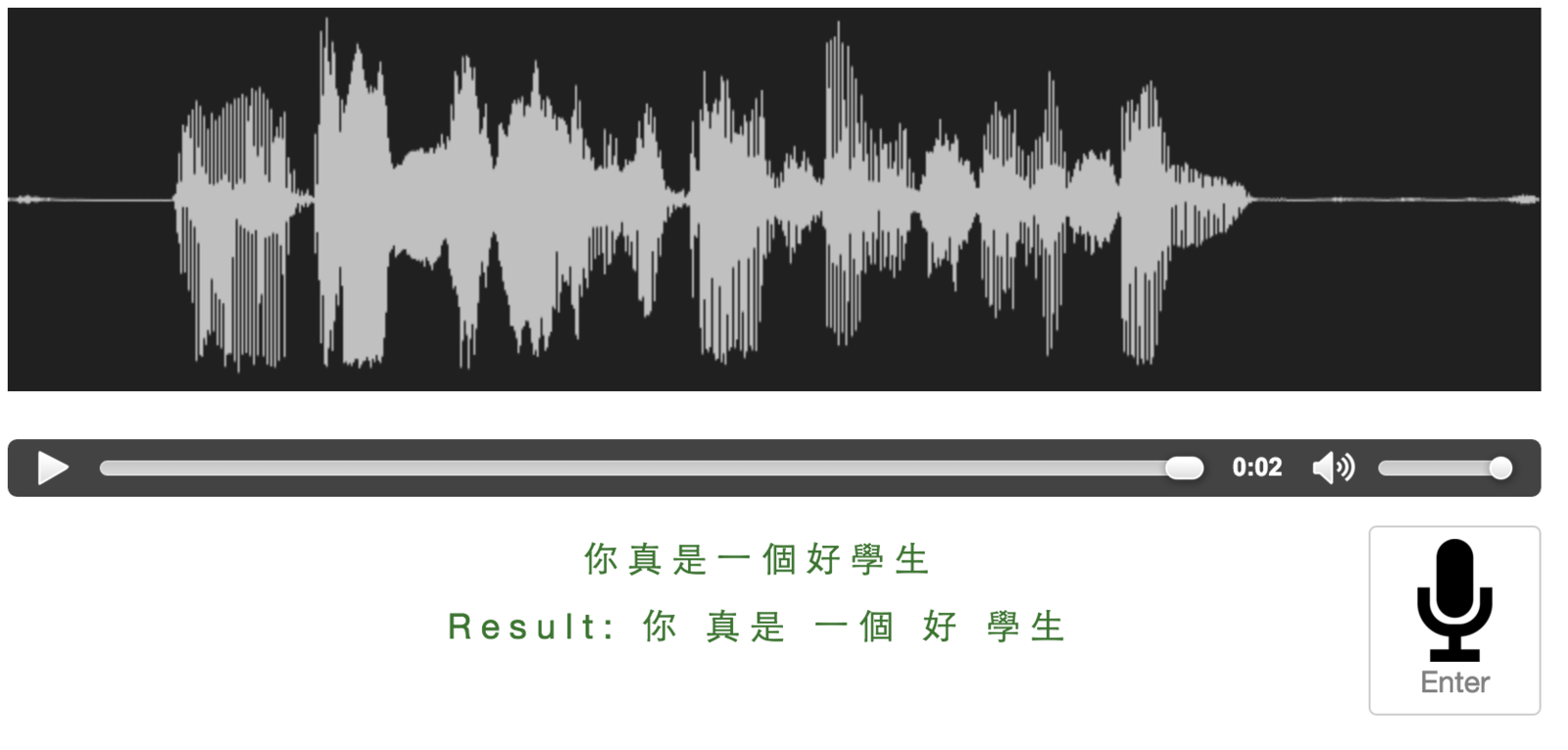

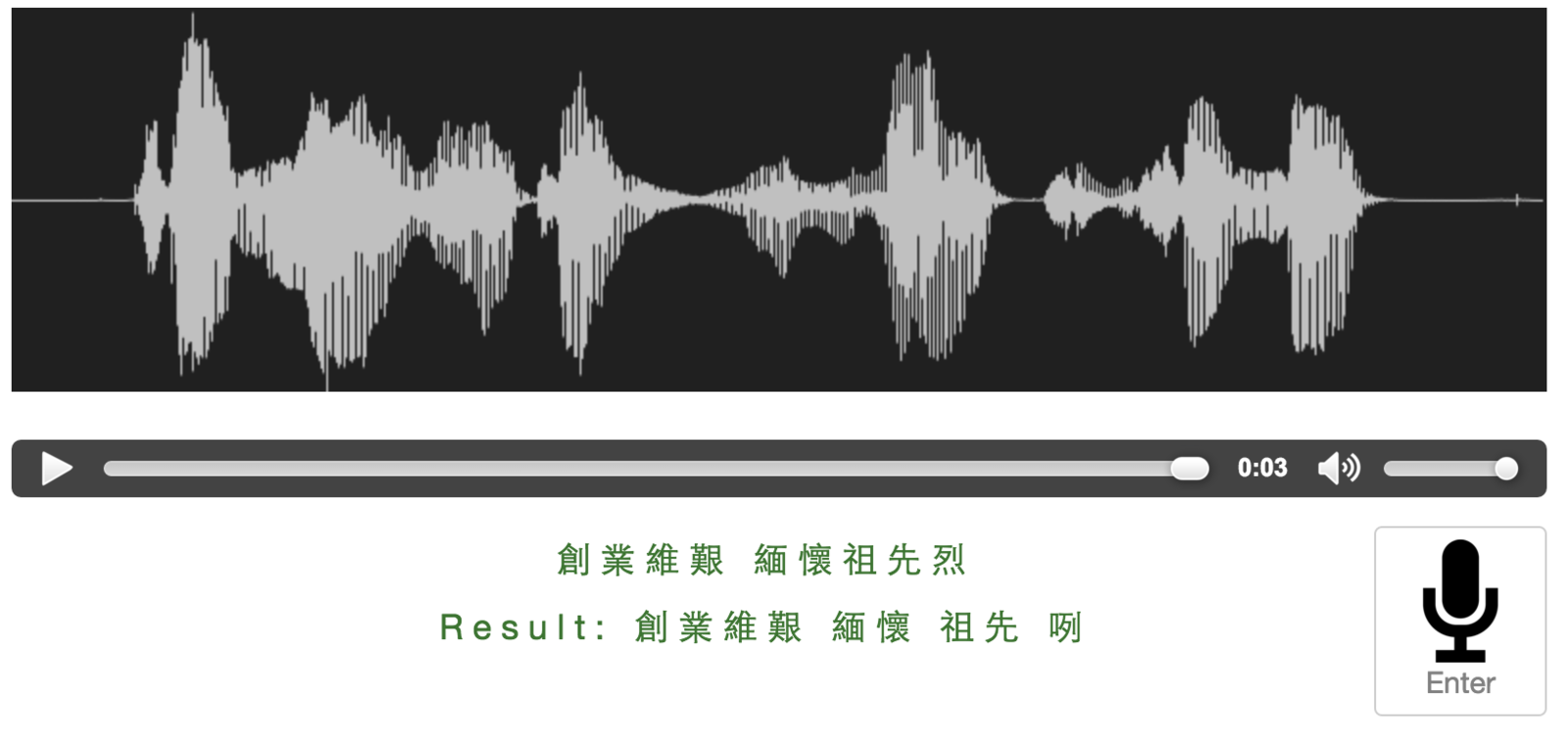

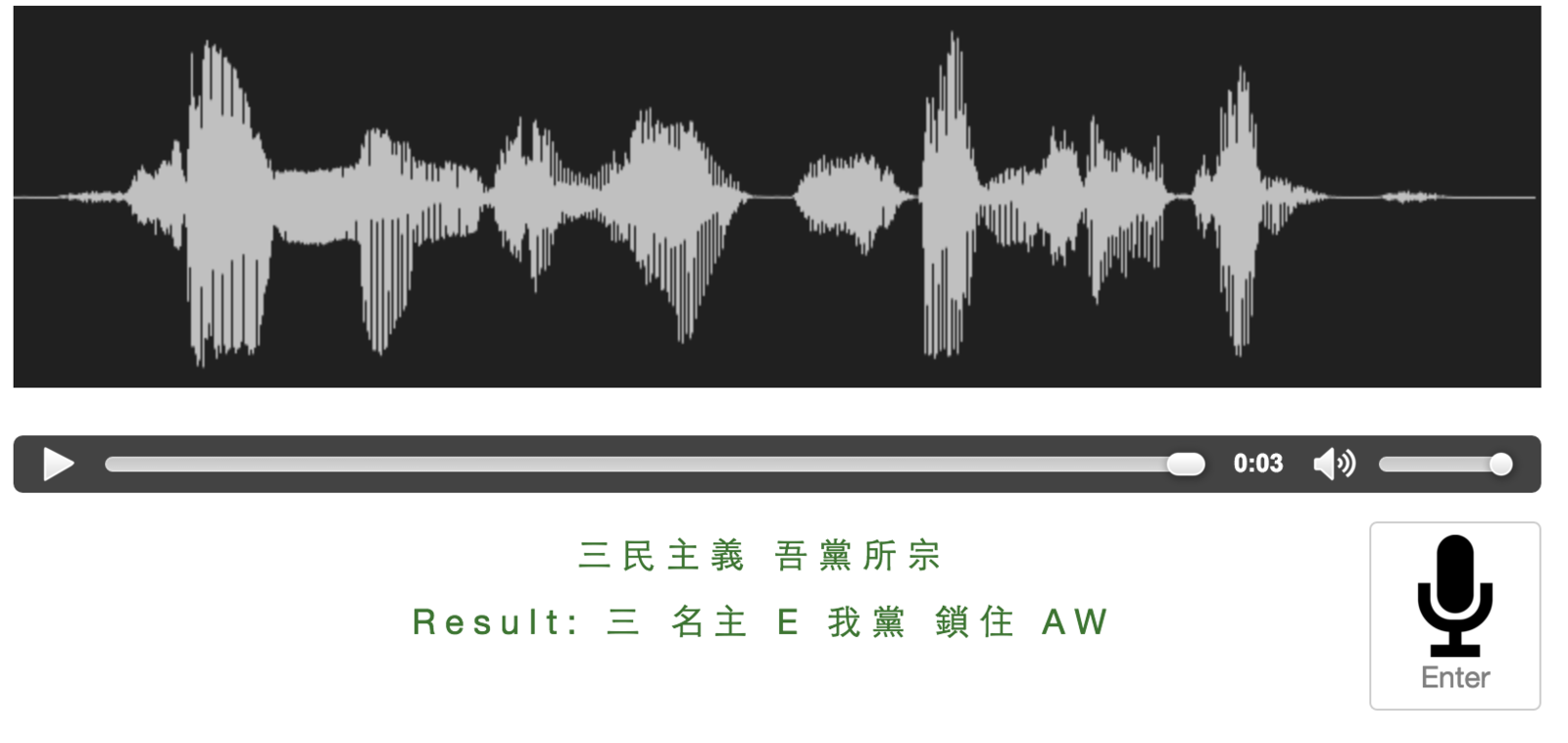

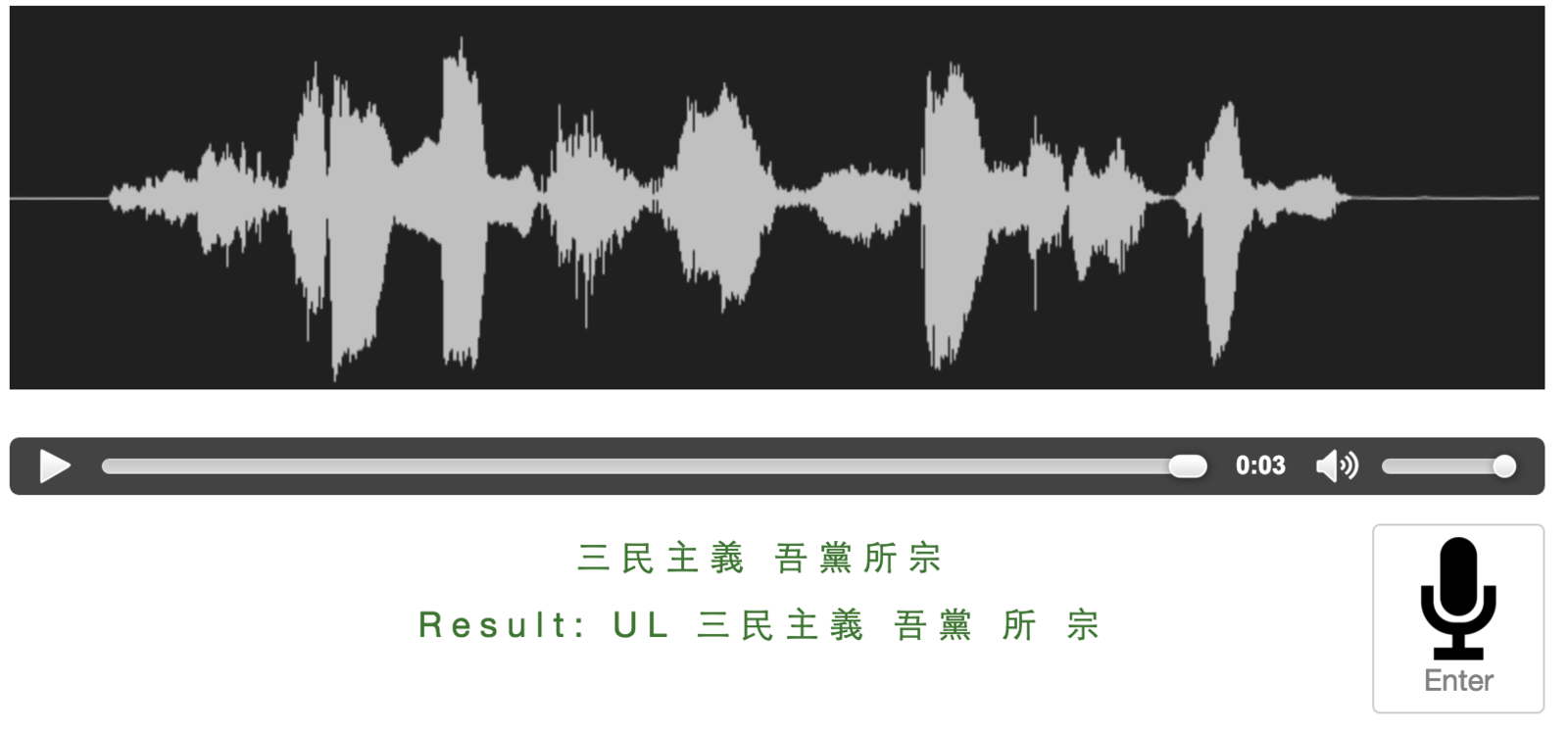

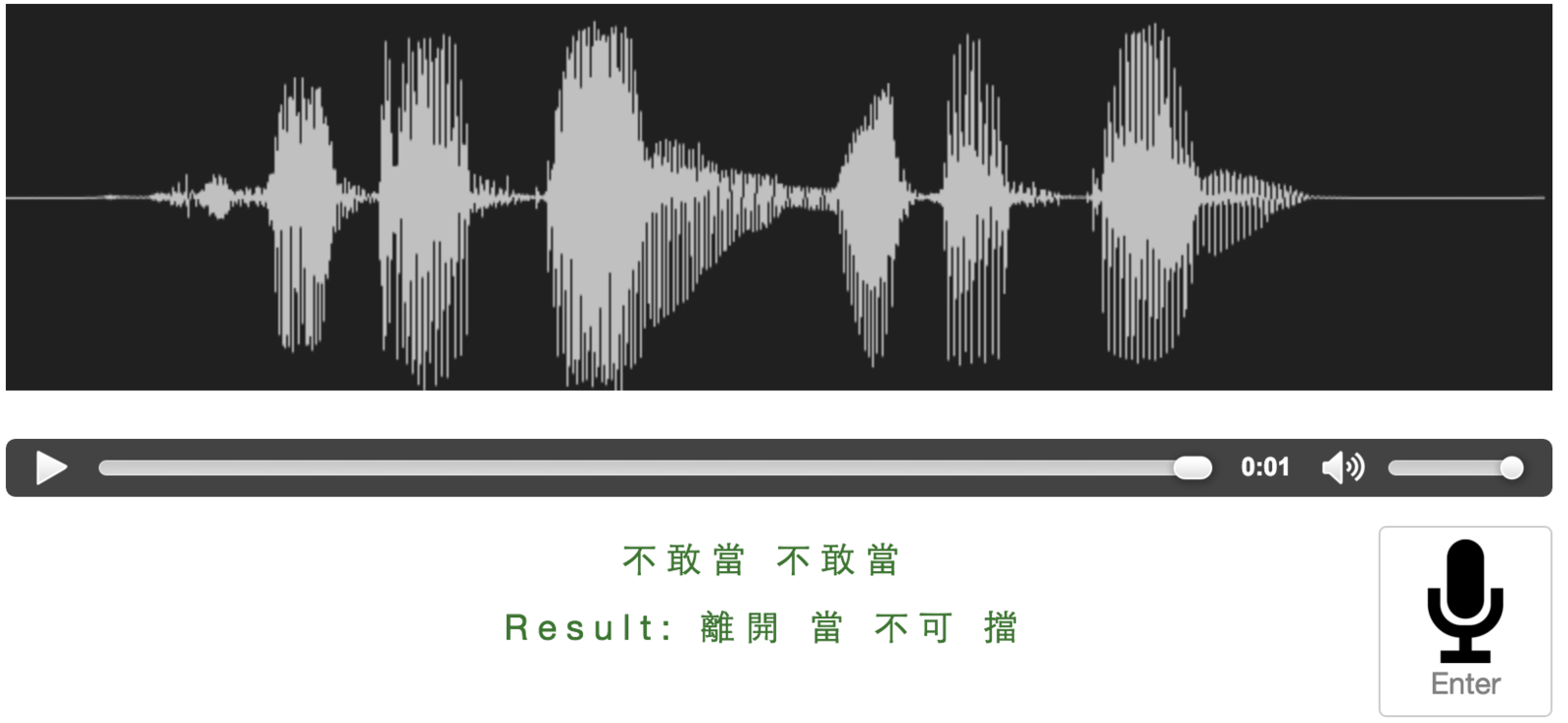

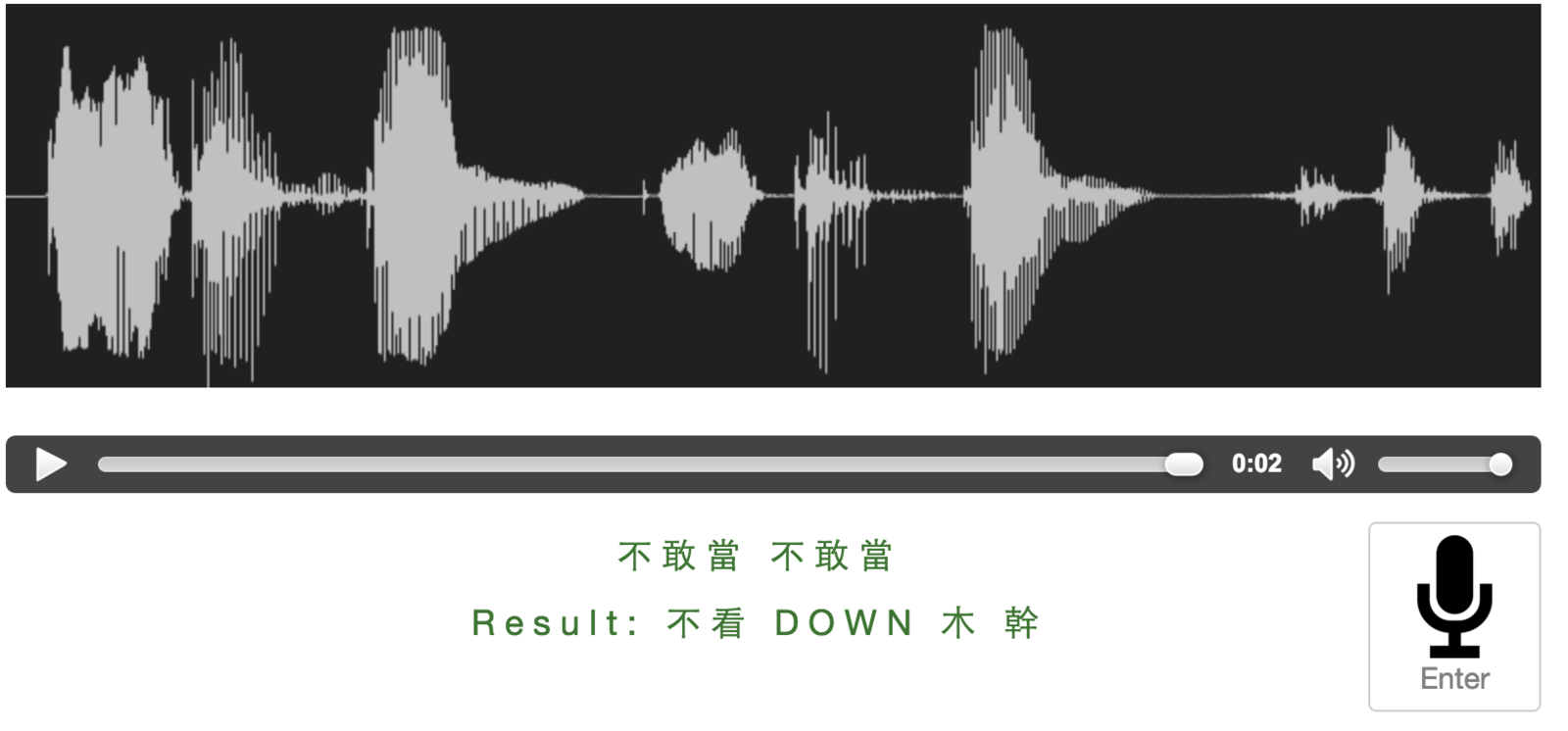

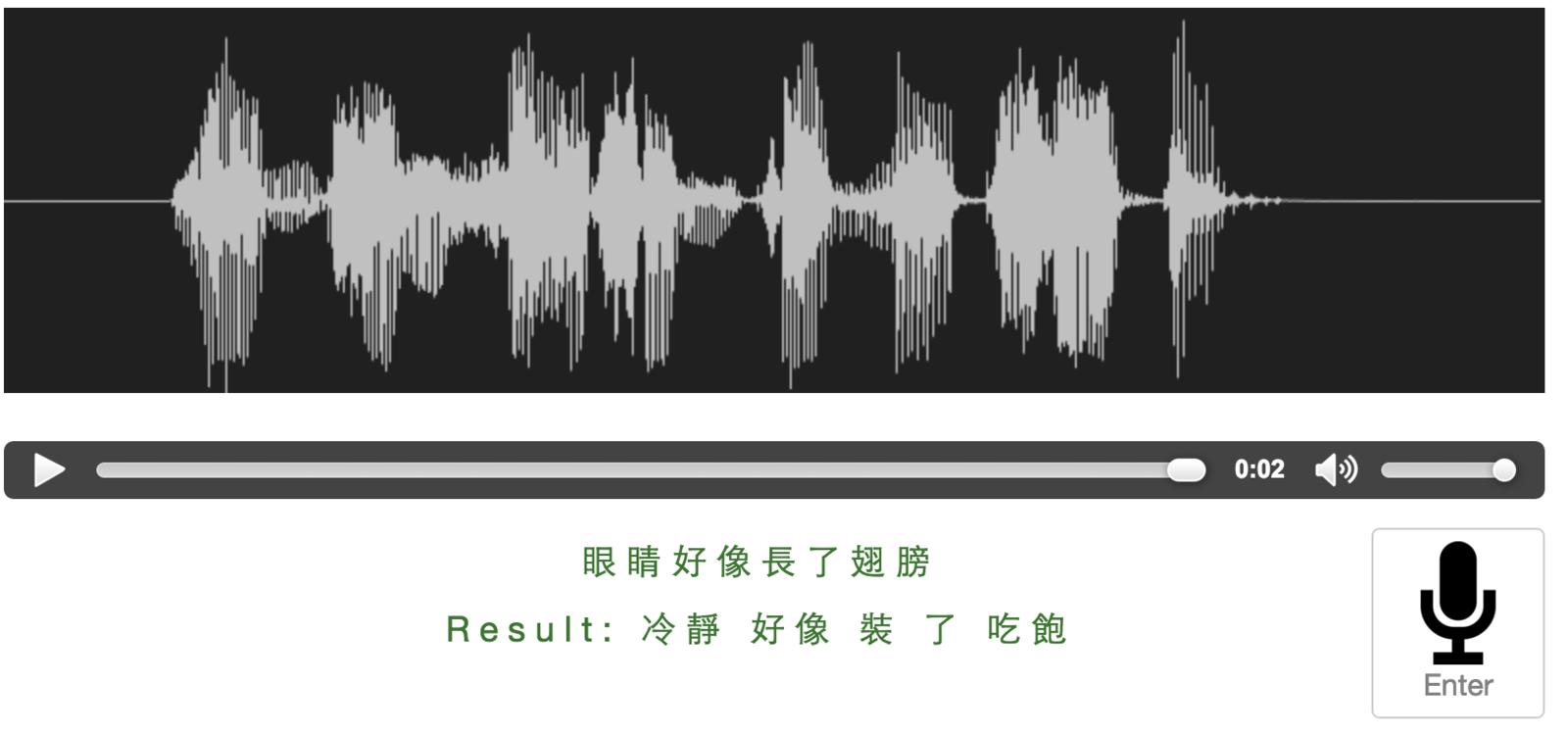

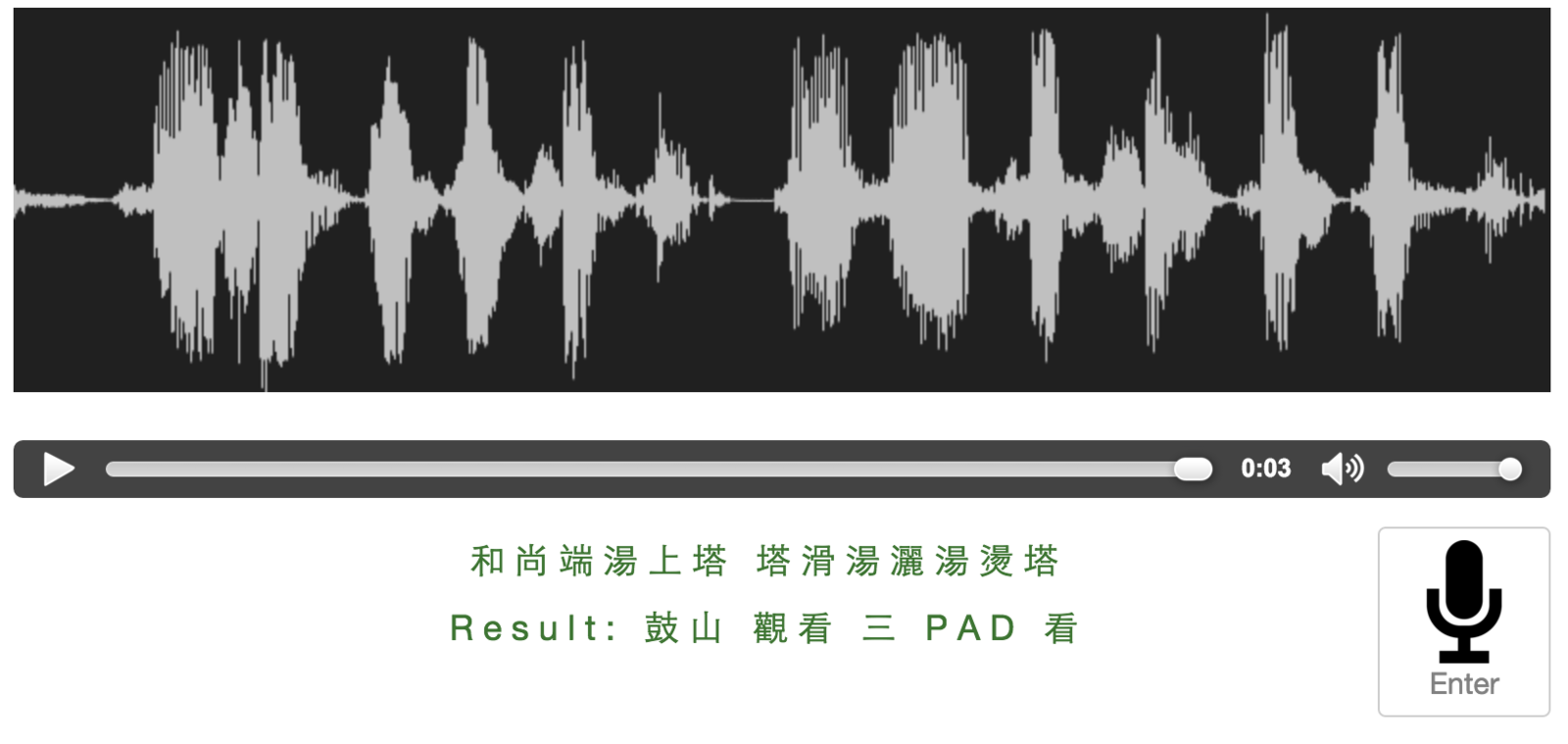

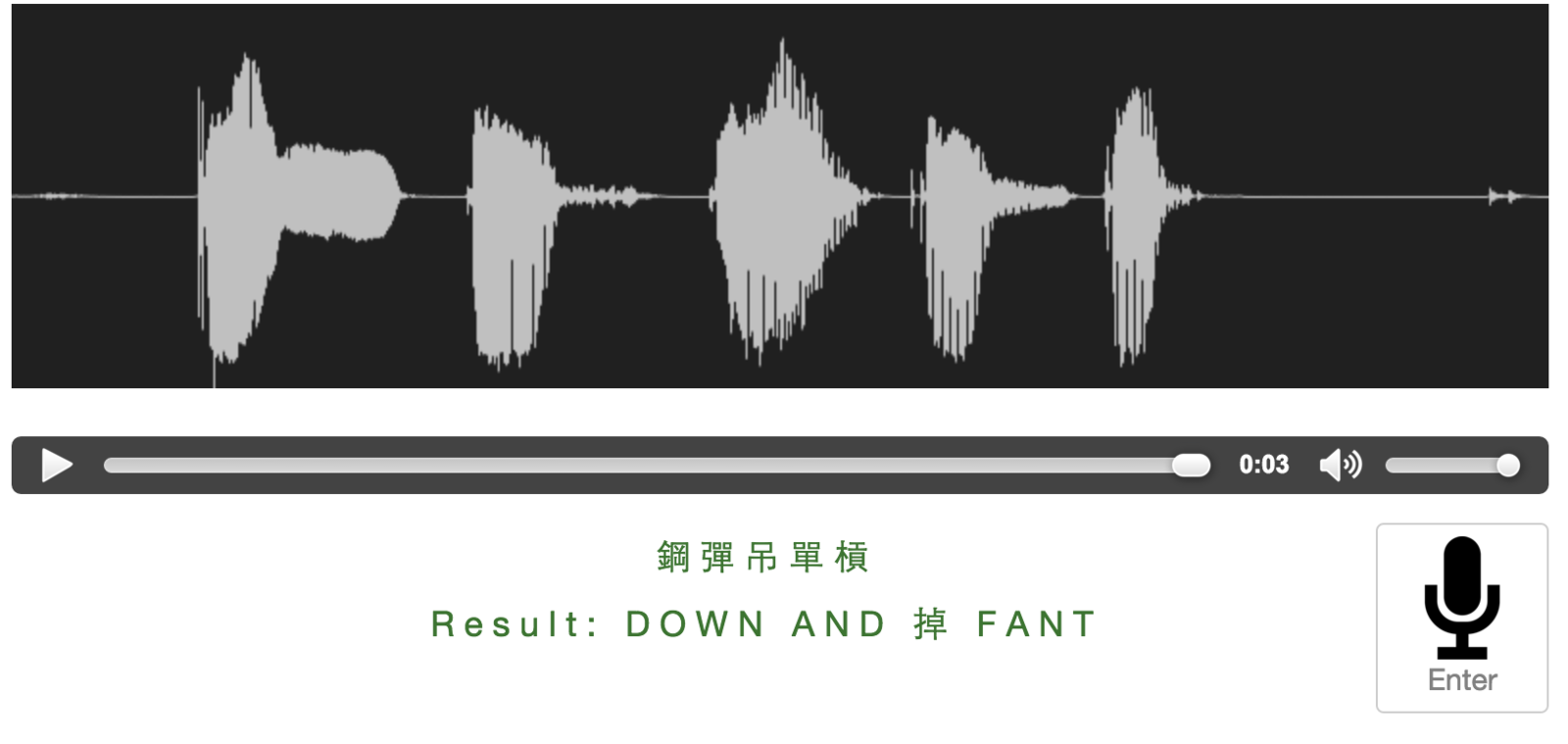

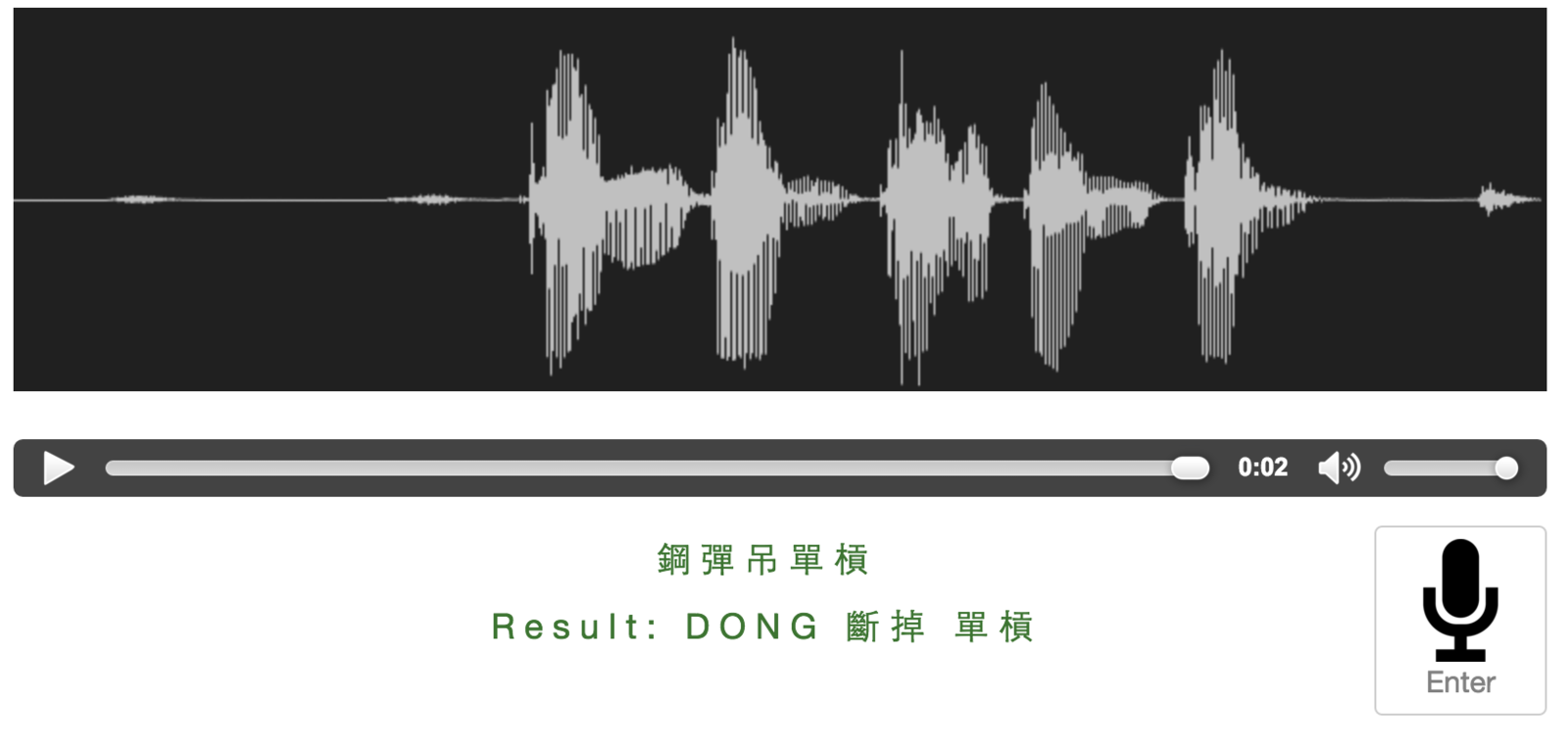

Live Demo

Obstacles (not solved yet...)

- Cannot predict with uploaded files, including the default files provided by the TA

- Can only use the basic model

Slow

Fast

Interrupted

Interrupted

Fast

Fast

Slow

Slow

Special Project

2nd stage

Natural Language Processing

Recursive Neural Network

Evolution

Meaning of Word

1-of-N encoding
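As a rough illustration of 1-of-N encoding, the sketch below maps each word to a vector with a single 1. The vocabulary and the helper name `one_hot` are hypothetical, picked only for this example.

```python
import numpy as np

# Hypothetical toy vocabulary, chosen only for illustration.
vocab = ["john", "saw", "bill", "yesterday"]
word_to_index = {word: i for i, word in enumerate(vocab)}

def one_hot(word):
    """Return the 1-of-N vector for `word`: all zeros except a single 1."""
    vec = np.zeros(len(vocab))
    vec[word_to_index[word]] = 1.0
    return vec

saw_vec = one_hot("saw")
bill_vec = one_hot("bill")

# Any two distinct words are orthogonal, so this encoding by itself
# says nothing about how similar two words are.
similarity = float(saw_vec @ bill_vec)
```

Because every pair of distinct words has zero dot product, the encoding carries no notion of meaning on its own; that has to be learned on top of it.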

Then train a neural network for each application

Neighbor word

Middle word

prob.

e.g.

Word2Vec

Projects each word into a real-valued vector space

This space also has many nice properties

Text corpus

A set of vectors

Meaning of sentence

sentence ≈ word sequence

Bag of words

Without considering the order

But order matters @@"
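A small sketch of why word order matters: two sentences with opposite meanings collapse to the same bag of words. The sentences and the helper `bag_of_words` are made up for illustration.

```python
from collections import Counter

def bag_of_words(sentence):
    """Count the words in a sentence, discarding their order."""
    return Counter(sentence.lower().split())

# Hypothetical example sentences: same words, different meaning.
a = "John saw Bill"
b = "Bill saw John"

# The two bags are identical even though the sentences differ.
same_bag = bag_of_words(a) == bag_of_words(b)
```

Here `same_bag` is `True`, so any model that only sees the bag cannot tell who saw whom.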

Sequential Model

Tree Model

Implementation

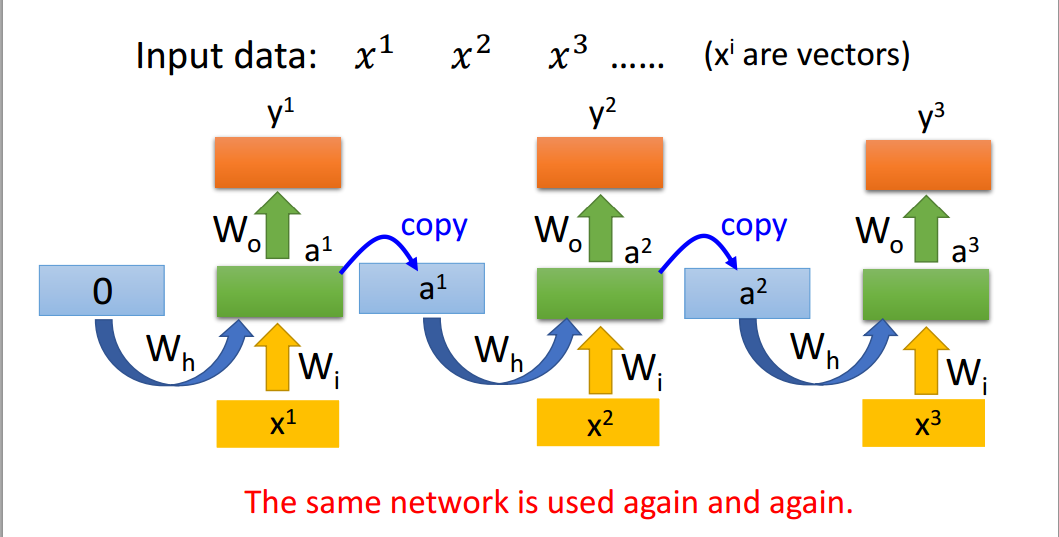

RNN

Recurrent Neural Network

Cons

- Can't capture the meaning of long sentences

- Exploding or vanishing gradients
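The gradient problem can be seen in a stripped-down setting. The sketch below assumes a purely linear recurrence (no nonlinearity, which is a simplification) whose weight matrix is a scaled orthogonal matrix, so each backward step multiplies the gradient norm by exactly `scale`. The function name and all sizes are hypothetical.

```python
import numpy as np

def backprop_norm(scale, steps=50, size=8, seed=0):
    """Norm of a gradient after `steps` backward steps through h_t = W h_{t-1}."""
    rng = np.random.default_rng(seed)
    # A random orthogonal matrix preserves norms; scaling it sets the growth rate.
    q, _ = np.linalg.qr(rng.standard_normal((size, size)))
    w = scale * q
    grad = np.ones(size)
    for _ in range(steps):
        grad = w.T @ grad  # one step of backpropagation through time
    return float(np.linalg.norm(grad))

vanishing = backprop_norm(0.5)  # shrinks by a factor of 0.5 per step
exploding = backprop_norm(1.5)  # grows by a factor of 1.5 per step
```

After 50 steps the first gradient is numerically negligible and the second is enormous, which is why long-range dependencies are hard to learn with a plain RNN.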

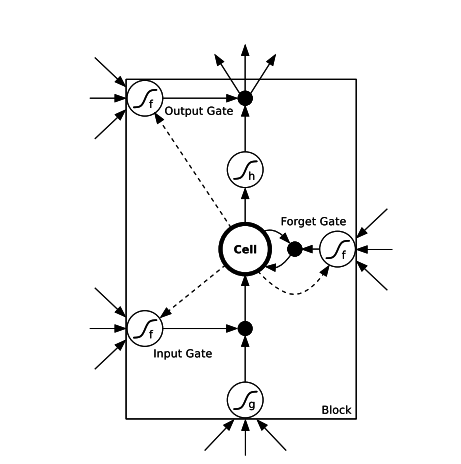

LSTM

Long Short-Term Memory

An enhanced neuron layer

- Input gate: the fraction of the memory cell to update from the input

- Output gate: the fraction of the memory cell to expose in the output

- Forget gate: the fraction of the memory cell to keep

The derivatives are complicated...

Luckily we have Theano ^.<

\frac{\partial O^{r} }{\partial c_{t-1}} \approx f_t \odot \frac{\partial O^{r} }{\partial c_t}

c_t = i_t \odot g + f_t \odot c_{t-1}

Simplified error-signal backpropagation

All parameters related to the memory cell
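A minimal NumPy sketch of one LSTM step built around the cell update c_t = i_t ⊙ g + f_t ⊙ c_{t-1}. It is written in plain NumPy rather than Theano so it runs standalone. The parameter layout (`W`, `U`, `b` per gate), the tanh candidate `g`, and the absence of peephole connections are assumptions, not something the slides specify.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step. `params` maps a gate name to (W, U, b) (assumed layout)."""
    def pre(name):
        W, U, b = params[name]
        return W @ x + U @ h_prev + b
    i = sigmoid(pre("i"))   # input gate
    f = sigmoid(pre("f"))   # forget gate
    o = sigmoid(pre("o"))   # output gate
    g = np.tanh(pre("g"))   # candidate cell content
    c = i * g + f * c_prev  # memory cell update
    h = o * np.tanh(c)      # exposed output
    return h, c, f

# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
n_in, n_hid = 3, 4
params = {name: (rng.standard_normal((n_hid, n_in)),
                 rng.standard_normal((n_hid, n_hid)),
                 np.zeros(n_hid))
          for name in ("i", "f", "o", "g")}
x = rng.standard_normal(n_in)
h_prev = rng.standard_normal(n_hid)
c_prev = rng.standard_normal(n_hid)
h, c, f = lstm_step(x, h_prev, c_prev, params)

# Finite-difference check: nudging every entry of c_prev by eps
# moves c by f * eps, i.e. the direct path has dc_t/dc_{t-1} = f_t.
eps = 1e-6
_, c_shifted, _ = lstm_step(x, h_prev, c_prev + eps, params)
dc_dcprev = (c_shifted - c) / eps
```

Since the error signal along the memory cell is scaled only by the forget gate at each step, a forget gate close to 1 lets it survive many time steps.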

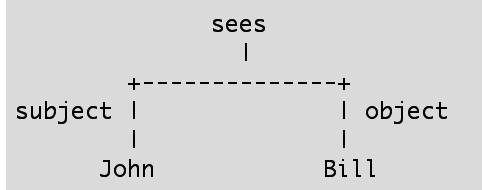

Tree-LSTM

Child-Sum Tree-LSTM

Dependency Tree

\tilde{h}_{see} = h_{John} + h_{Bill}

\tilde{h}_{j} = \sum_{k \in Child(j)} h_k

f_{jk} = \sigma\left ( W^{(f)}x_j + U^{(f)}h_k + b^{(f)}\right )

c_j = i_j \odot g + \sum_{k \in Child(j)} f_{jk} \odot c_k
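The Child-Sum equations can be sketched as a single node update in NumPy. The parameter layout, the tanh candidate `g`, the output gate, and the toy "see" node with children "John" and "Bill" using random embeddings are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def child_sum_node(x, child_h, child_c, params):
    """Child-Sum Tree-LSTM update for one node; params[name] = (W, U, b)."""
    n_hid = params["i"][2].shape[0]
    h_tilde = sum(child_h, np.zeros(n_hid))  # sum of the children's hidden states

    def gate(name, h, squash=sigmoid):
        W, U, b = params[name]
        return squash(W @ x + U @ h + b)

    i = gate("i", h_tilde)
    o = gate("o", h_tilde)
    g = gate("g", h_tilde, np.tanh)
    c = i * g
    for h_k, c_k in zip(child_h, child_c):
        c = c + gate("f", h_k) * c_k         # one forget gate f_jk per child
    return o * np.tanh(c), c

# Hypothetical sizes, weights, and word vectors.
rng = np.random.default_rng(0)
n_in, n_hid = 3, 4
params = {name: (rng.standard_normal((n_hid, n_in)),
                 rng.standard_normal((n_hid, n_hid)),
                 np.zeros(n_hid))
          for name in ("i", "f", "o", "g")}
x_john, x_bill, x_see = rng.standard_normal((3, n_in))

h_john, c_john = child_sum_node(x_john, [], [], params)  # leaf: no children
h_bill, c_bill = child_sum_node(x_bill, [], [], params)
h_see, c_see = child_sum_node(x_see, [h_john, h_bill], [c_john, c_bill], params)

# Child-Sum ignores child order: swapping the children gives the same result.
h_swapped, _ = child_sum_node(x_see, [h_bill, h_john], [c_bill, c_john], params)
```

The order-insensitivity shown at the end is what makes this variant a natural fit for dependency trees, where a head can have any number of unordered dependents.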

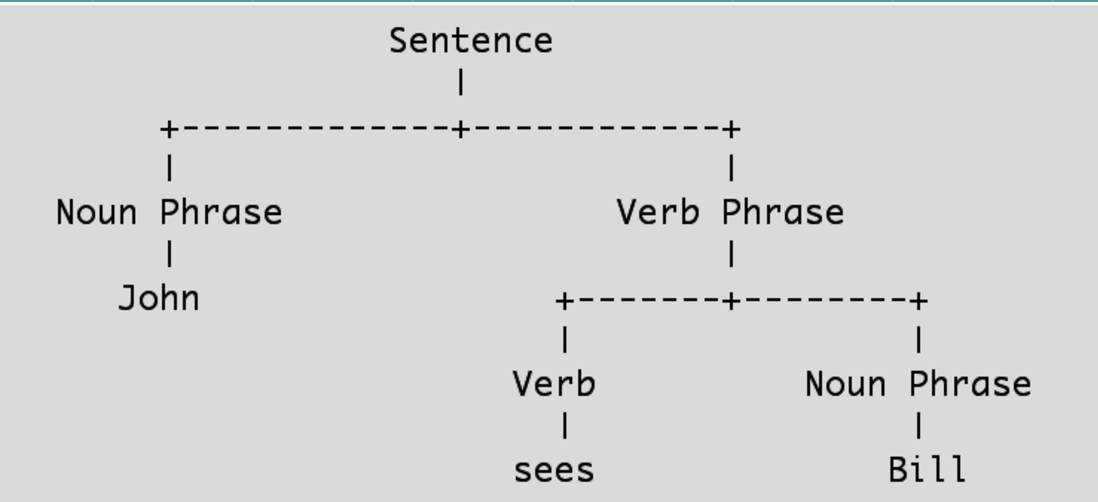

N-ary Tree-LSTM

Constituency Tree

i_j = \sigma\left ( W^{(i)}x_j +\sum_{l=1}^{N} U_{l}^{(i)} h_{jl} + b^{(i)}\right )

o_j , f_j follow the same pattern

Only leaf nodes have an input x_j

c_j = i_j \odot g + \sum_{l=1}^{N} f_{jl} \odot c_{jl}
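A hedged sketch of an N-ary node update with N = 2. Each child position gets its own `U` matrix, and internal nodes pass `x=None` because only leaves carry an input. Giving the forget gate of child k its own set of matrices (`Uf[k][l]`) is one common formulation and an assumption here, as are all names, sizes, and weights.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def make_params(n_in, n_hid, n_ary, rng):
    """Random illustrative weights; the layout is an assumption."""
    def mat(rows, cols):
        return 0.5 * rng.standard_normal((rows, cols))
    return {
        "W": {k: mat(n_hid, n_in) for k in "iofg"},
        "b": {k: np.zeros(n_hid) for k in "iofg"},
        # One U matrix per child position for the i, o, g pre-activations.
        "U": {k: [mat(n_hid, n_hid) for _ in range(n_ary)] for k in "iog"},
        # Forget gate of child k has its own matrices over all positions l.
        "Uf": [[mat(n_hid, n_hid) for _ in range(n_ary)] for _ in range(n_ary)],
    }

def nary_node(x, child_h, child_c, p):
    """N-ary Tree-LSTM update; pass x=None for internal (non-leaf) nodes."""
    def pre(name, u_list):
        z = p["b"][name].copy()
        if x is not None:
            z += p["W"][name] @ x
        for u_l, h_l in zip(u_list, child_h):
            z += u_l @ h_l
        return z
    i = sigmoid(pre("i", p["U"]["i"]))
    o = sigmoid(pre("o", p["U"]["o"]))
    g = np.tanh(pre("g", p["U"]["g"]))
    c = i * g
    for k, c_k in enumerate(child_c):
        c = c + sigmoid(pre("f", p["Uf"][k])) * c_k
    return o * np.tanh(c), c

rng = np.random.default_rng(0)
p = make_params(n_in=3, n_hid=4, n_ary=2, rng=rng)
left = nary_node(rng.standard_normal(3), [], [], p)   # leaf
right = nary_node(rng.standard_normal(3), [], [], p)  # leaf
h_root, c_root = nary_node(None, [left[0], right[0]], [left[1], right[1]], p)

# Unlike Child-Sum, child order matters: swapping children changes the output.
h_swapped, _ = nary_node(None, [right[0], left[0]], [right[1], left[1]], p)
```

Position-specific weights let the model distinguish a left child from a right child, which suits binarized constituency trees.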

How to find that structure

SpeechProject-week6

By Wei Fang
