a defence against adversarial examples

Alvin Chan

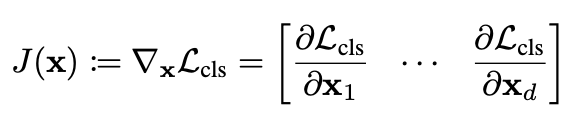

Jacobian

JARN:

Adversarially Regularized Networks

Outline

- Introduction

- Target Domains

- Attacks

- Defenses

- Challenges & Discussion

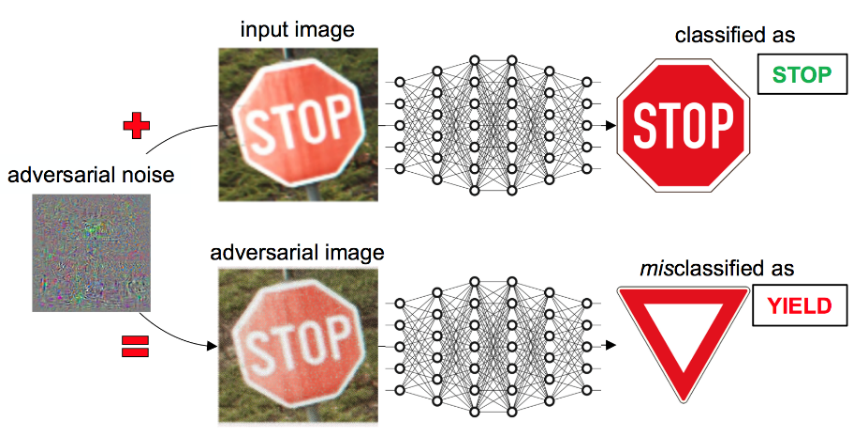

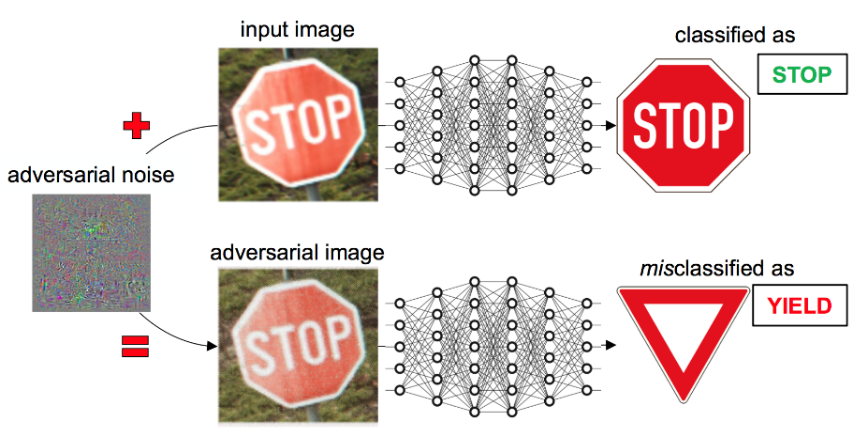

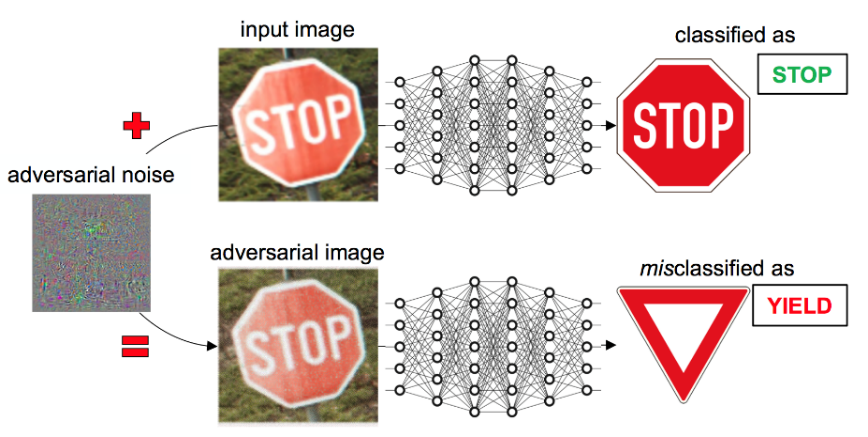

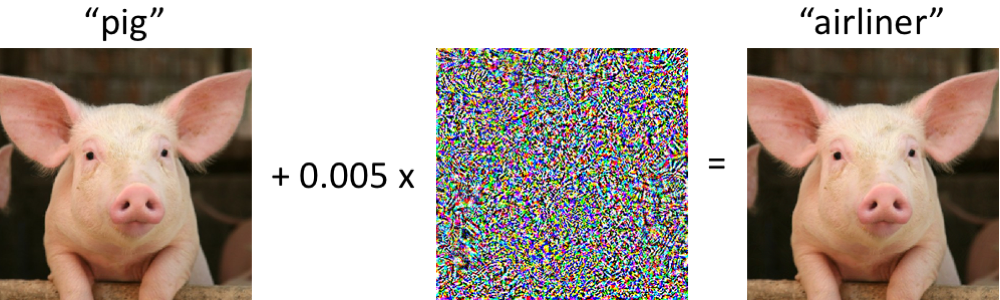

Adversarial Attacks

Figure labels: stop sign, 90 km/h

Introduction

- Deep Learning models are still vulnerable to adversarial attacks despite new defenses

- Adversarial attacks can be imperceptible to humans

Computer Vision

- Misclassification of image recognition

- Face recognition

- Object detection

- Image segmentation

- Reinforcement learning

Gradient-based Attacks

- Mostly used in the Computer Vision domain

- Use the gradient of the target model to directly perturb pixel values
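To make the idea of perturbing pixels along the model's gradient concrete, here is a minimal sketch of a single signed-gradient step (the fast gradient sign method). It is an illustration under assumptions, not the setup from the slides: the "target model" is a hypothetical logistic regression over four pixel values, and the names `predict`, `input_gradient`, `fgsm`, and `eps` are made up for this example. It uses plain Python so it runs without dependencies.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a toy logistic-regression target model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def input_gradient(w, b, x, y):
    """Gradient of the cross-entropy loss with respect to the input pixels.

    For logistic regression this has the closed form (p - y) * w.
    """
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(g):
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, eps):
    """One signed-gradient step of size eps, clipped to the pixel range [0, 1]."""
    grad = input_gradient(w, b, x, y)
    return [min(1.0, max(0.0, xi + eps * sign(gi))) for xi, gi in zip(x, grad)]


# Hypothetical weights and a 4-pixel input whose true label is 1.
w, b = [2.0, -3.0, 1.5, -1.0], 0.0
x, y = [0.6, 0.4, 0.5, 0.5], 1

x_adv = fgsm(w, b, x, y, eps=0.1)
print("clean prediction:      ", predict(w, b, x))
print("adversarial prediction:", predict(w, b, x_adv))
```

In this toy setting every pixel moves by at most `eps`, yet the predicted probability for the true class drops below 0.5. For a deep network the same step would need automatic differentiation in place of the closed-form gradient.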

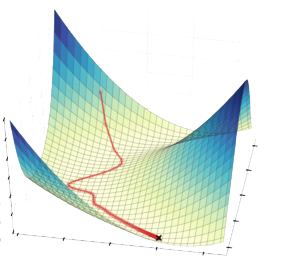

Gradient-based Attacks

- Optimizing two components:

  - Distance between the clean and adversarial inputs

  - The model's label prediction for the adversarial image
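One common way to combine the two components into a single objective is sketched below. The notation is an assumption made for illustration and does not come from the slides: $x$ is the clean input, $\delta$ the perturbation, $f$ the target model, $t$ the label the attacker wants predicted, $\mathcal{L}$ a classification loss, and $c > 0$ a trade-off weight.

$$
\min_{\delta}\ \lVert \delta \rVert_p + c \cdot \mathcal{L}\bigl(f(x + \delta),\, t\bigr)
\quad \text{subject to} \quad x + \delta \in [0, 1]^n
$$

The first term keeps the adversarial input close to the clean one, and the second pushes the prediction toward the attacker's label. An alternative form fixes a budget $\lVert \delta \rVert_p \le \epsilon$ and only maximizes the loss on the true label.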

Gradient-based Attacks

- White-box: Access to architecture & hyperparameters

- Black-box: Access to target model’s prediction

- Transfer attacks from a single substitute model or an ensemble of substitute models
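A transfer attack can be sketched as follows: the adversarial example is crafted with white-box access to substitute models only, and is then submitted to the target model, which is queried for its prediction alone. Everything here is hypothetical and chosen for illustration (the logistic-regression models, their weights, and the function name `fgsm_on_ensemble`); real substitutes would be trained to imitate the target.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(model, x):
    """Probability of class 1 for a (weights, bias) logistic-regression model."""
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g):
    return (g > 0) - (g < 0)


def fgsm_on_ensemble(substitutes, x, y, eps):
    """Signed-gradient step using the input gradient averaged over the substitutes."""
    grad = [0.0] * len(x)
    for model in substitutes:
        w, _ = model
        p = predict(model, x)
        for i, wi in enumerate(w):
            grad[i] += (p - y) * wi / len(substitutes)
    return [min(1.0, max(0.0, xi + eps * sign(gi))) for xi, gi in zip(x, grad)]


# The attacker never reads the target's weights; it only calls predict() on it.
target = ([2.0, -3.0, 1.5, -1.0], 0.0)
substitutes = [
    ([1.5, -2.5, 1.0, -0.5], 0.1),
    ([2.5, -2.0, 2.0, -1.5], -0.1),
]

x, y = [0.6, 0.4, 0.5, 0.5], 1
x_adv = fgsm_on_ensemble(substitutes, x, y, eps=0.1)
print("target on clean input:      ", predict(target, x))
print("target on transferred input:", predict(target, x_adv))
```

The example transfers here because the substitutes' gradients point in the same direction as the target's, which is the property a transfer attack relies on.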

Adversarial Training

- Training on adversarial examples

- The attack used during training affects the defense's effectiveness
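A minimal sketch of adversarial training, under stated assumptions: the model is a toy logistic regression on two features, the training attack is projected gradient descent with 7 steps inside an L-infinity ball, and the data, learning rate, and function names are all invented for this example. Each update is computed on the adversarial version of the sample rather than on the clean sample.

```python
import math


def sigmoid(z):
    # Clamp the argument so math.exp cannot overflow.
    return 1.0 / (1.0 + math.exp(-max(-30.0, min(30.0, z))))


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def pgd(w, b, x, y, eps, step, iters):
    """Iterated signed-gradient ascent on the loss, projected onto the eps-ball around x."""
    x_adv = list(x)
    for _ in range(iters):
        p = predict(w, b, x_adv)
        grad = [(p - y) * wi for wi in w]  # d(loss) / d(input)
        x_adv = [xa + step * ((g > 0) - (g < 0)) for xa, g in zip(x_adv, grad)]
        # Projection: keep every coordinate within eps of the clean input.
        x_adv = [min(xi + eps, max(xi - eps, xa)) for xi, xa in zip(x, x_adv)]
    return x_adv


def adversarial_train(data, eps, epochs=200, lr=0.5):
    """Stochastic gradient descent where each sample is first replaced by its PGD version."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = pgd(w, b, x, y, eps, step=eps / 4, iters=7)
            p = predict(w, b, x_adv)
            w = [wi - lr * (p - y) * xa for wi, xa in zip(w, x_adv)]
            b -= lr * (p - y)
    return w, b


# Hypothetical, well-separated two-class data.
data = [
    ([1.0, 0.8], 1), ([0.9, 1.2], 1), ([1.1, 1.0], 1), ([0.8, 0.9], 1),
    ([-1.0, -0.9], 0), ([-0.8, -1.1], 0), ([-1.2, -1.0], 0), ([-0.9, -0.8], 0),
]

w, b = adversarial_train(data, eps=0.3)
print("trained weights:", w, "bias:", b)
```

The choice of training attack is the main knob: swapping `pgd` for a weaker single-step attack, or changing `eps` and `iters`, changes which perturbations the trained model has seen.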

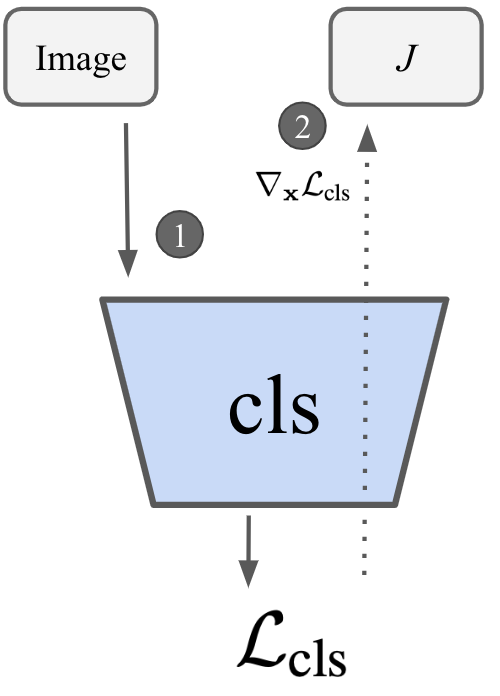

Robustness & Saliency

Figure columns: Image, Standard, PGD7

Robustness & Saliency

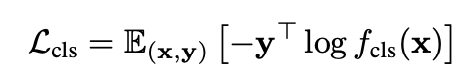

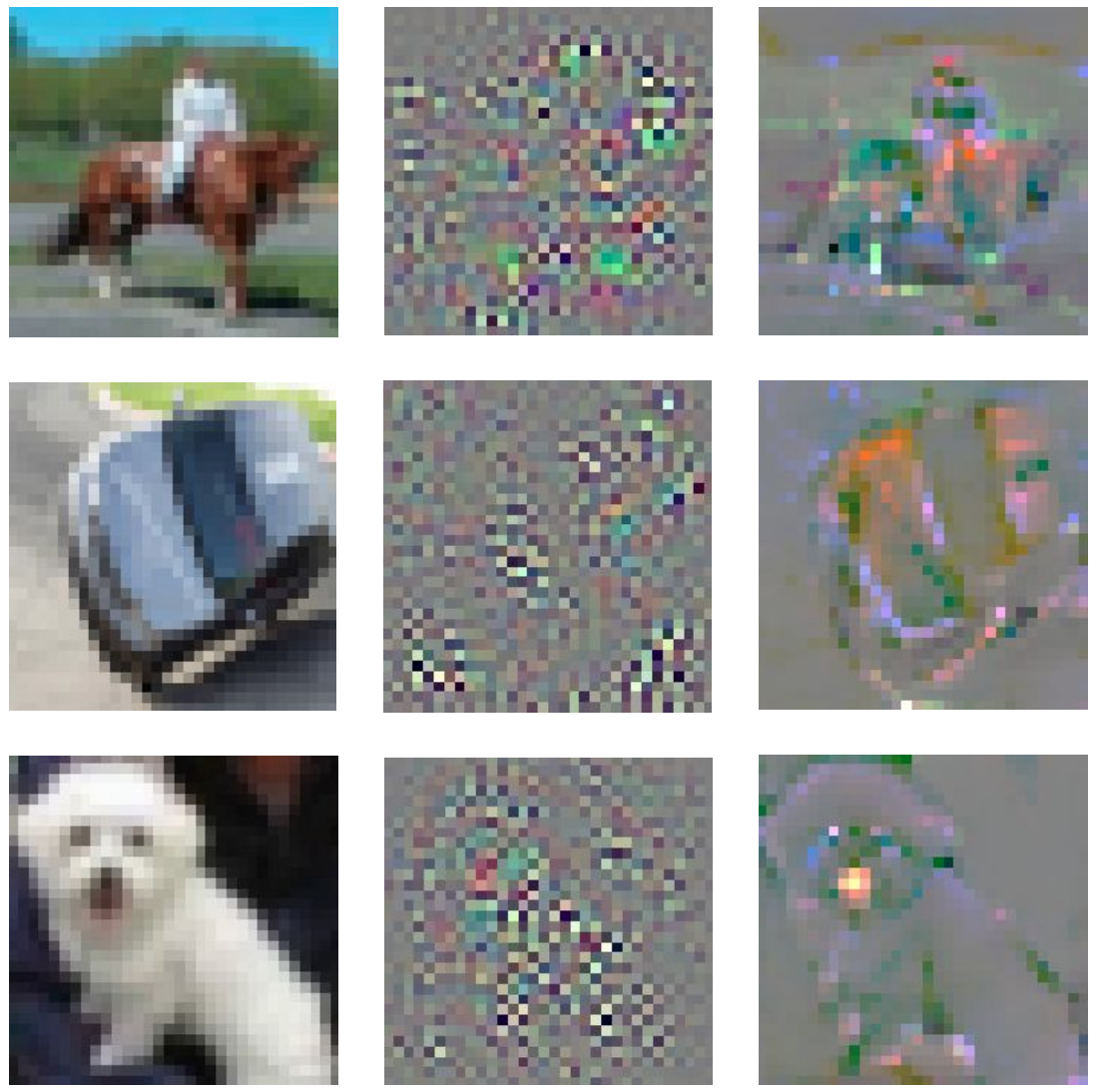

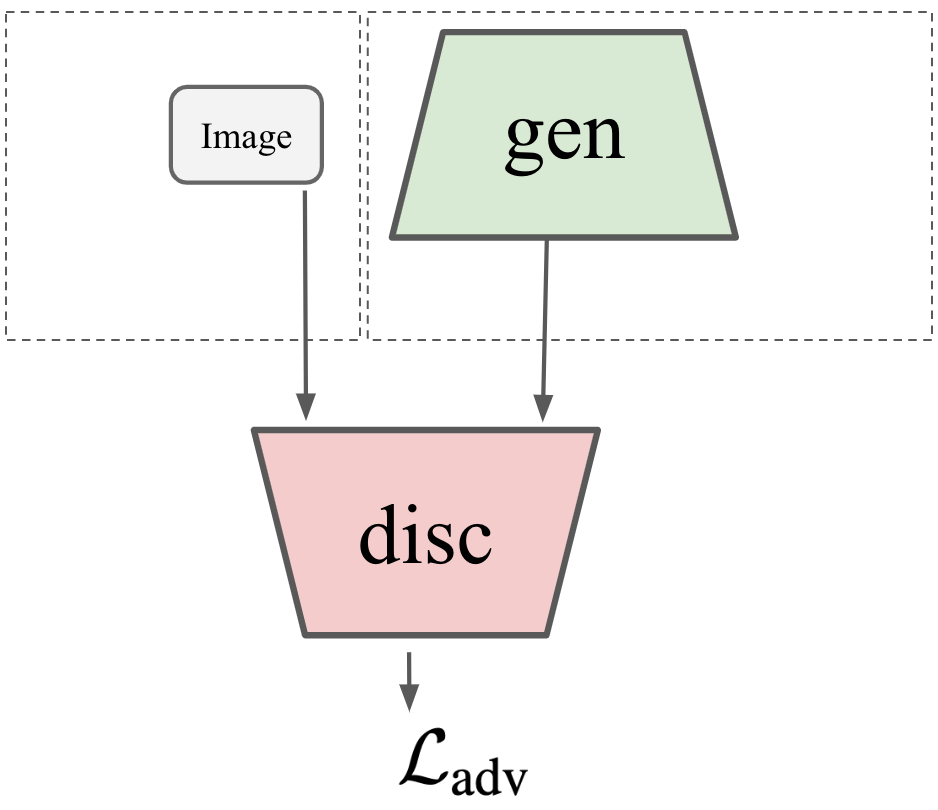

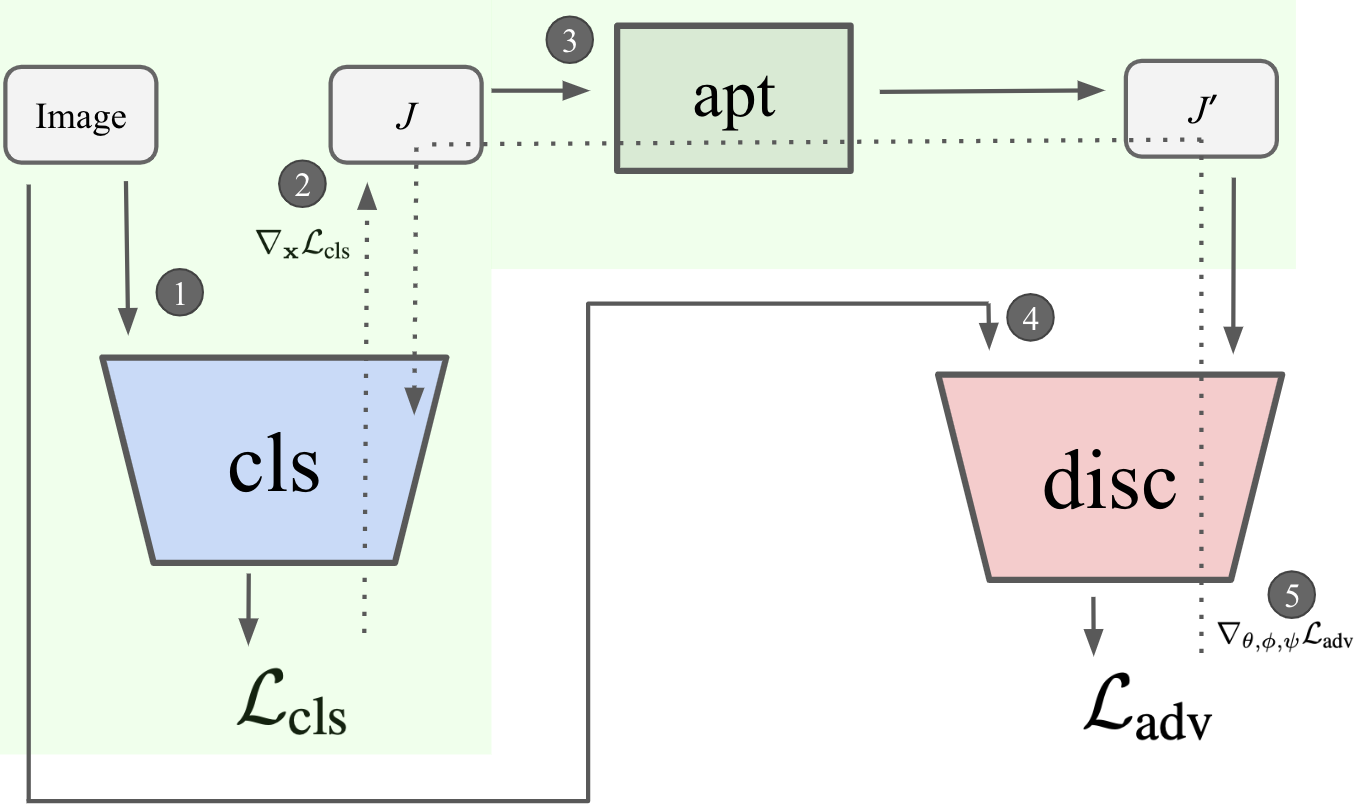

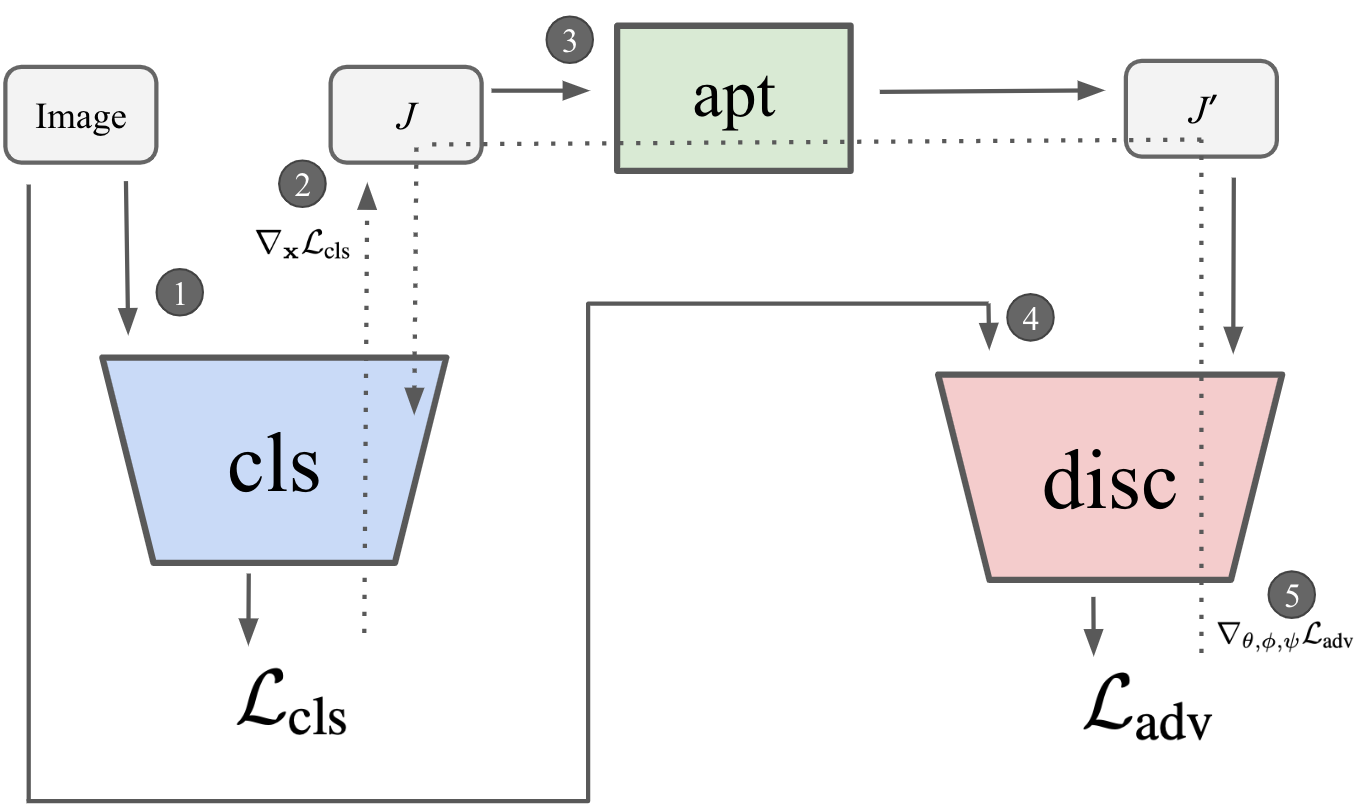

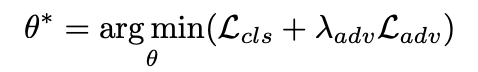

Jacobian Adversarially Regularized Networks

Figure labels: Real, Fake

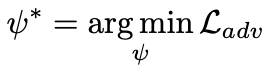
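The Real and Fake labels suggest a GAN-style setup, which can be sketched roughly as follows. This is an illustration under assumptions, not the architecture from the slides: the classifier is a toy linear softmax model, the "Jacobian" is taken to be the gradient of the cross-entropy loss with respect to the input, the discriminator is a single logistic unit, and the weight `lam` and all function names are hypothetical. The discriminator is trained to score a clean image as real and the classifier's Jacobian as fake, while the classifier gets an extra loss term that rewards fooling the discriminator.

```python
import math


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def logits(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def cross_entropy(W, x, y):
    return -math.log(softmax(logits(W, x))[y])


def input_jacobian(W, x, y):
    """Gradient of the cross-entropy loss w.r.t. the input: W^T (p - onehot(y))."""
    p = softmax(logits(W, x))
    err = [pk - (1.0 if k == y else 0.0) for k, pk in enumerate(p)]
    return [sum(err[k] * W[k][j] for k in range(len(W))) for j in range(len(x))]


def discriminator(u, c, v):
    """Toy logistic discriminator: probability that v is a real image."""
    return 1.0 / (1.0 + math.exp(-(sum(ui * vi for ui, vi in zip(u, v)) + c)))


def discriminator_loss(u, c, x, jac):
    """Low when the image is scored as real and the Jacobian as fake."""
    return -math.log(discriminator(u, c, x)) - math.log(1.0 - discriminator(u, c, jac))


def classifier_loss(W, u, c, x, y, lam):
    """Cross-entropy plus lam times a term that shrinks as the Jacobian fools the discriminator."""
    jac = input_jacobian(W, x, y)
    return cross_entropy(W, x, y) + lam * math.log(1.0 - discriminator(u, c, jac))


# Hypothetical 2-class classifier over a 3-pixel "image".
W = [[0.5, -0.2, 0.1], [-0.3, 0.4, 0.2]]
x, y = [0.9, 0.1, 0.5], 0
u, c = [1.0, -1.0, 0.5], 0.0

jac = input_jacobian(W, x, y)
print("discriminator loss:", discriminator_loss(u, c, x, jac))
print("classifier loss:   ", classifier_loss(W, u, c, x, y, lam=1.0))
```

In a full training loop the two losses would be minimized in alternation, each with respect to its own parameters. With deep networks, the classifier's term requires differentiating through the input gradient, which automatic differentiation libraries support.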

Jacobian Adversarially Regularized Networks

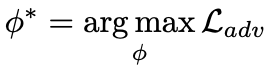

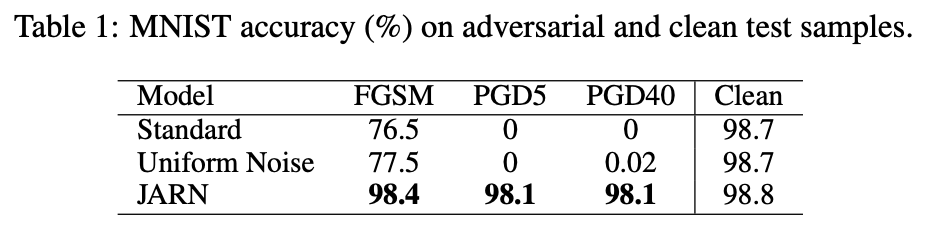

Results

Cheers!

https://slides.com/alvinchan/jarn_dso

JARN @ DSO

By Alvin Chan
