Making Look-Ahead Active Learning Strategies Feasible with Neural Tangent Kernels

Mohamad Amin Mohamadi*, Wonho Bae*, Danica J. Sutherland

The University of British Columbia

NeurIPS 2022

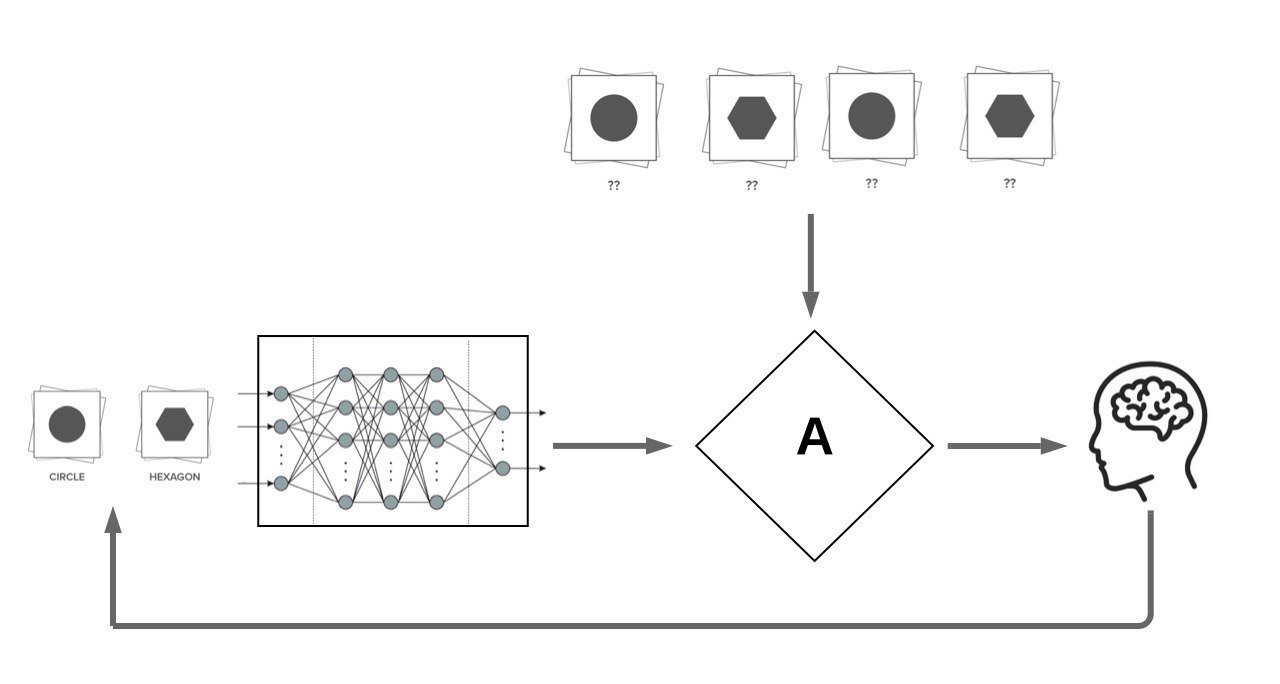

Active Learning

Active learning: reducing the amount of labelled data needed to train ML models by allowing the model to "actively request annotation of specific datapoints".

We focus on pool-based active learning.
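The pool-based setting can be sketched as a generic loop: train on the labelled set, score every unlabelled candidate in the pool, query labels for the top candidates, and retrain. The sketch below is illustrative only; `train`, `acquire`, and `oracle` are hypothetical callables, and the 1-D threshold classifier is a toy stand-in rather than anything from the talk.

```python
def pool_based_active_learning(labelled, pool, oracle, train, acquire,
                               rounds, batch_size):
    """Generic pool-based loop: train, score the pool, query labels, repeat."""
    labelled = list(labelled)   # [(x, y), ...]
    pool = list(pool)           # [x, ...] unlabelled candidates
    model = train(labelled)
    for _ in range(rounds):
        if not pool:
            break
        # Rank candidates by acquisition score, highest first.
        ranked = sorted(pool, key=lambda x: acquire(model, x), reverse=True)
        for x in ranked[:batch_size]:
            pool.remove(x)
            labelled.append((x, oracle(x)))   # ask the annotator for a label
        model = train(labelled)               # retrain on the enlarged set
    return model, labelled, pool


# Toy stand-ins (assumptions for illustration): a 1-D threshold classifier.
def train(labelled):
    neg = [x for x, y in labelled if y == 0]
    pos = [x for x, y in labelled if y == 1]
    return (max(neg) + min(pos)) / 2.0        # the "model" is a threshold


def acquire(threshold, x):
    return -abs(x - threshold)                # closest to the boundary first


def oracle(x):
    return int(x >= 0.62)                     # hidden ground-truth labelling


candidates = [i / 20 for i in range(1, 20)]
model, labelled, remaining = pool_based_active_learning(
    [(0.0, 0), (1.0, 1)], candidates, oracle, train, acquire,
    rounds=4, batch_size=1)
```

With only four queries the learned threshold ends up close to the hidden boundary, which is the point of letting the model choose what gets labelled.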

AL Acquisition Functions

Most acquisition functions proposed for deep active learning fall into two branches:

- Uncertainty-based: Maximum Entropy, BALD

- Representation-based: BADGE, LL4AL
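As a concrete instance of the uncertainty-based branch, here is a minimal sketch of maximum-entropy acquisition: score each pool point by the entropy of its predicted class distribution and query the highest. The probabilities in `pool_probs` are made-up values for illustration, and the function names are hypothetical.

```python
import math


def predictive_entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def max_entropy_query(pool_probs, k=1):
    """Indices of the k pool points whose predictions are most uncertain."""
    ranked = sorted(range(len(pool_probs)),
                    key=lambda i: predictive_entropy(pool_probs[i]),
                    reverse=True)
    return ranked[:k]


# Hypothetical softmax outputs for three unlabelled points.
pool_probs = [
    [0.98, 0.01, 0.01],   # confident prediction
    [0.40, 0.35, 0.25],   # close to uniform, so most uncertain
    [0.70, 0.20, 0.10],
]
chosen = max_entropy_query(pool_probs, k=1)
```

Note that this score only needs the current model's predictions; it never asks how the model would change if the point were labelled, which is what look-ahead criteria do.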

Our motivation: making look-ahead acquisition functions feasible in deep active learning.

[Figure: retraining engine]

Contributions

-

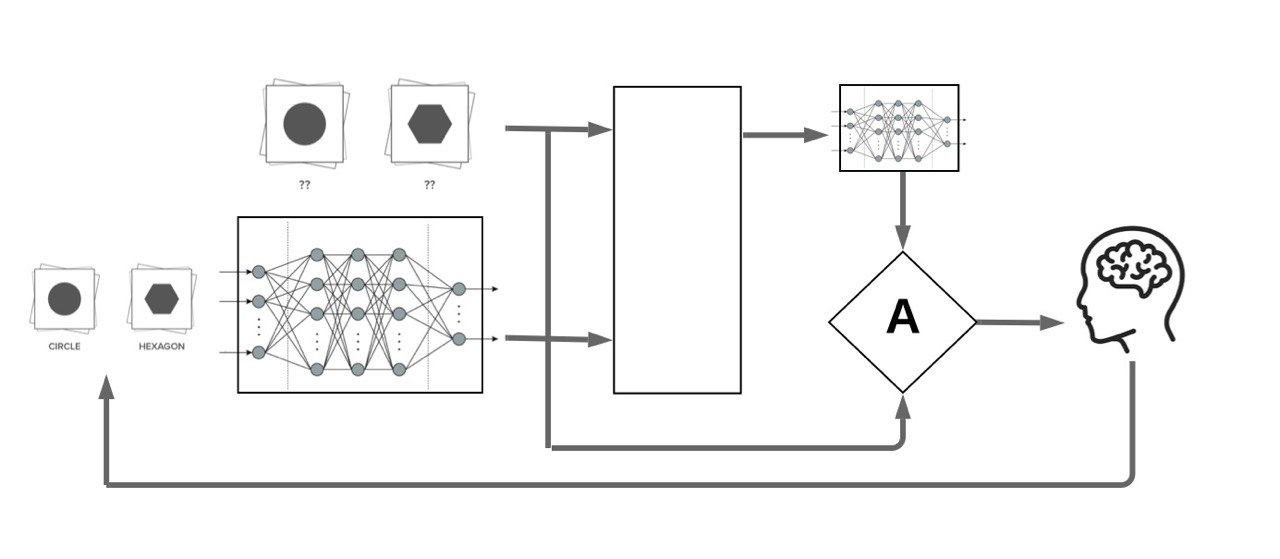

Problem: Retraining the neural network with every unlabelled datapoint in the pool using SGD is practically infeasible.

-

Solution: We propose to use a proxy model based on the first-order Taylor expansion of the trained model to approximate this retraining.

-

Contributions:

- We prove that this approximation is asymptotically exact for ultra wide networks.

- Our method achieves similar or improved performance than best prior pool-based AL methods on several datasets.

-

Our proxy model can be used to perform fast Sequential Active Learning (no SGD needed)!

Approximation of Retraining

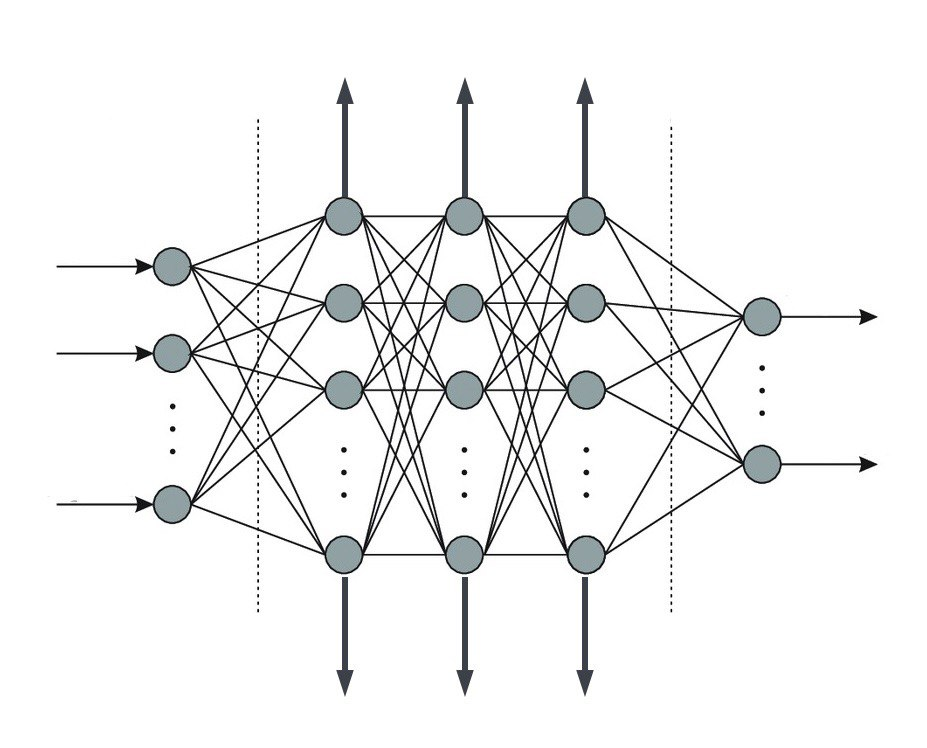

- Jacot et al.: the training dynamics of suitably initialized, infinitely wide neural networks can be captured by the Neural Tangent Kernel (NTK).

- Lee et al.: the first-order Taylor expansion of a neural network around its initialization has training dynamics that converge to those of the neural network as the width grows.

- Idea: approximate the neural network retrained on a new datapoint using the first-order Taylor expansion of the network around the current model.

- We prove that this approximation is asymptotically exact for ultra-wide networks and is empirically comparable to SGD for finite-width networks.
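The idea above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses a small one-hidden-layer tanh network with a scalar output, squared loss, and the well-known closed form for a linearized model trained to convergence, f(x) + K(x, X) K(X, X)^{-1} (y - f(X)), where K is the empirical NTK built from the Jacobian at the current parameters. All names (`init_params`, `jacobian`, `linearized_retrain`) are hypothetical, and random parameters stand in for a trained model.

```python
import numpy as np


def init_params(rng, d_in, width):
    return {
        "W": rng.normal(size=(width, d_in)) / np.sqrt(d_in),
        "b": np.zeros(width),
        "v": rng.normal(size=width) / np.sqrt(width),
    }


def forward(params, X):
    H = np.tanh(X @ params["W"].T + params["b"])    # (n, width)
    return H @ params["v"]                           # (n,)


def jacobian(params, X):
    """Rows are gradients of f(x) w.r.t. all parameters, ordered [W, b, v]."""
    H = np.tanh(X @ params["W"].T + params["b"])    # (n, width)
    dH = (1.0 - H ** 2) * params["v"]               # v_i * tanh'(h_i), also df/db
    dW = dH[:, :, None] * X[:, None, :]             # (n, width, d_in)
    return np.concatenate([dW.reshape(len(X), -1), dH, H], axis=1)


def linearized_retrain(params, X_train, y_train):
    """Proxy for retraining: fit the first-order Taylor expansion around
    `params` to (X_train, y_train) under squared loss, in closed form."""
    f_train = forward(params, X_train)
    J_train = jacobian(params, X_train)
    K = J_train @ J_train.T                          # empirical NTK on the train set
    coef = np.linalg.solve(K, y_train - f_train)

    def predict(X):
        return forward(params, X) + jacobian(params, X) @ J_train.T @ coef

    return predict


rng = np.random.default_rng(0)
params = init_params(rng, d_in=2, width=64)          # stands in for the current model
X_lab = rng.normal(size=(5, 2))
y_lab = np.sin(X_lab[:, 0])
x_new, y_new = rng.normal(size=(1, 2)), np.array([0.3])

X_all = np.vstack([X_lab, x_new])
y_all = np.append(y_lab, y_new)
proxy = linearized_retrain(params, X_all, y_all)
```

No gradient steps are taken: the "retrained" predictor costs one Jacobian evaluation and one small linear solve, which is why such a proxy can be evaluated for many candidate points.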


Look-Ahead Active Learning

- We employ the proposed retraining approximation in a well-suited acquisition function, which we call the Most Likely Model Output Change (MLMOC).

- Although our experiments in the pool-based AL setup all used MLMOC, the proposed retraining approximation is general.

- We hope that this enables new directions in deep active learning using look-ahead criteria.
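A look-ahead score in the spirit of MLMOC can be sketched as follows. This is an assumption-laden illustration: the exact MLMOC definition is not reproduced in these slides, so the code assumes that a candidate is pseudo-labelled with the current model's most likely class and scored by the summed L2 change in model outputs over the pool after approximate retraining. An RBF kernel ridge regressor stands in for the NTK-based proxy so that the block stays self-contained; all function names are hypothetical.

```python
import numpy as np


def rbf_kernel(A, B, gamma=1.0):
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * d2)


def fit_kernel_model(X, Y, ridge=1e-6):
    """Stand-in 'retraining engine': closed-form kernel ridge regression."""
    alpha = np.linalg.solve(rbf_kernel(X, X) + ridge * np.eye(len(X)), Y)
    return lambda Z: rbf_kernel(Z, X) @ alpha


def look_ahead_scores(predict, approx_retrain, pool):
    """Score each candidate by how much the outputs on the pool would change
    if it were added with its most likely (pseudo) label."""
    base = predict(pool)                              # (n, n_classes)
    n_classes = base.shape[1]
    scores = np.empty(len(pool))
    for i in range(len(pool)):
        y_hat = np.eye(n_classes)[np.argmax(base[i])]   # one-hot pseudo-label
        retrained = approx_retrain(pool[i:i + 1], y_hat[None, :])
        scores[i] = np.linalg.norm(retrained(pool) - base, axis=1).sum()
    return scores


# Toy data: two labelled points, two unlabelled candidates.
X_lab = np.array([[0.0, 0.0], [1.0, 1.0]])
Y_lab = np.eye(2)                                     # one-hot labels
pool = np.array([[0.1, 0.0], [3.0, 3.0]])

current = fit_kernel_model(X_lab, Y_lab)
retrain = lambda x, y: fit_kernel_model(np.vstack([X_lab, x]),
                                        np.vstack([Y_lab, y]))
scores = look_ahead_scores(current, retrain, pool)
query = int(np.argmax(scores))
```

The candidate far from the labelled data changes the model's outputs the most and is queried first. The loop calls the retraining routine once per candidate, which is exactly the step that is infeasible with SGD and cheap with a closed-form proxy.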

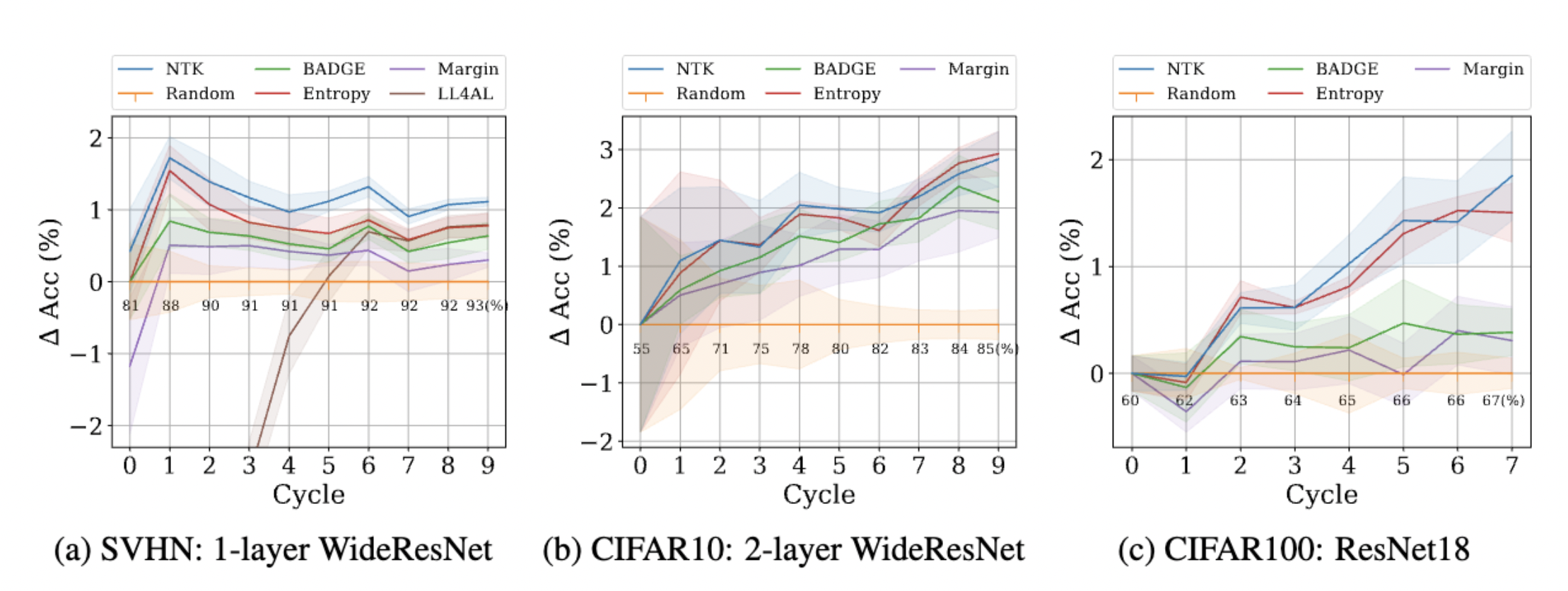

Experiments

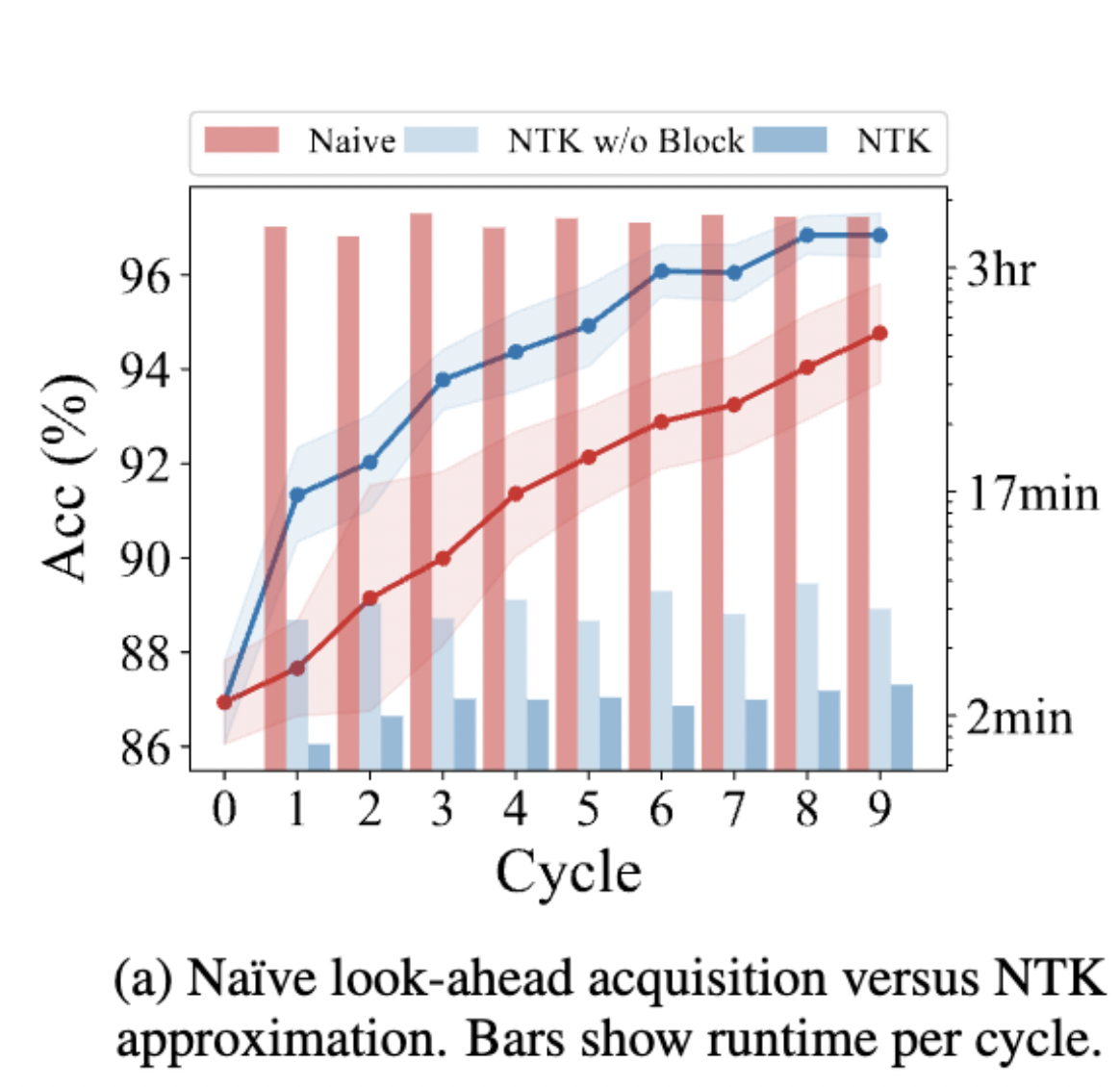

Retraining Time: The proposed retraining approximation is much faster than SGD.

Experiments: The proposed querying strategy attains performance similar to or better than the best prior pool-based AL methods on several datasets.

Thanks!

arXiv URL

GitHub URL
