Probabilistic Ensembles of Zero- and Few-Shot Learning Models for Emotion Classification

Angelo Basile

Guillermo Perez-Torro

Marc Franco-Salvador

angelo.basile@symanto.com

guillermo.perez@symanto.com

marc.franco@symanto.com

The angry wolf ate the happy boy

Building affective corpora is hard.

Can we at least tackle the existing datasets using few or no annotated instances?

Research Question

Emotion Classification as Entailment

I loved the pizza!

This person expressed a feeling of pleasure.

This person feels sad.

Premise

Hypothesis

This person feels [...].

- Entailment

- Contradiction

- Neutral

Natural Language Inference (NLI)

JOY

SADNESS
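The entailment framing above can be sketched in a few lines: each candidate emotion is turned into a hypothesis, and the label whose hypothesis is most strongly entailed by the premise wins. This is only an illustrative sketch. `toy_entailment_score` is a hypothetical keyword-based stand-in for a real NLI model, and the label words in `LABEL_WORDS` are assumptions, not the wording used in the experiments.

```python
from typing import Callable, Dict, Tuple

# Hypothesis template from the slide; the label words are assumptions.
TEMPLATE = "This person feels {}."
LABEL_WORDS: Dict[str, str] = {"JOY": "joyful", "SADNESS": "sad"}


def toy_entailment_score(premise: str, hypothesis: str) -> float:
    """Hypothetical stand-in for an NLI model's entailment probability."""
    cues = {"joyful": ("loved", "great"), "sad": ("cried", "lost")}
    premise_lc, hypothesis_lc = premise.lower(), hypothesis.lower()
    for word, triggers in cues.items():
        if word in hypothesis_lc and any(t in premise_lc for t in triggers):
            return 0.9
    return 0.1


def classify(
    premise: str,
    score: Callable[[str, str], float],
    template: str = TEMPLATE,
) -> Tuple[str, Dict[str, float]]:
    """Score one hypothesis per emotion and return the best-scoring label."""
    scores = {
        label: score(premise, template.format(word))
        for label, word in LABEL_WORDS.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


label, scores = classify("I loved the pizza!", toy_entailment_score)
print(label, scores)
```

In practice the scoring function would wrap a pretrained NLI model; only the mapping from emotion labels to hypotheses is specific to this task.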

What NLI dataset?

What PLM?

What hypothesis?

???

ANLI, FEVER, MNLI, or XNLI?

BERT, BART, RoBERTa?

This person feels [...], This person is feeling [...]?
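Each combination of these three choices yields a different zero-shot classifier. As a rough sketch, the candidate configurations can be enumerated as a cross product. Whether the full grid was actually used is an assumption made here for illustration, and the dictionary keys are hypothetical names.

```python
from itertools import product

# Options listed on the slide.
nli_datasets = ["ANLI", "FEVER", "MNLI", "XNLI"]
plms = ["BERT", "BART", "RoBERTa"]
templates = ["This person feels {}.", "This person is feeling {}."]

# One configuration per (NLI dataset, PLM, hypothesis template) triple.
configs = [
    {"nli_dataset": d, "plm": p, "template": t}
    for d, p, t in product(nli_datasets, plms, templates)
]

print(len(configs))
print(configs[0])
```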

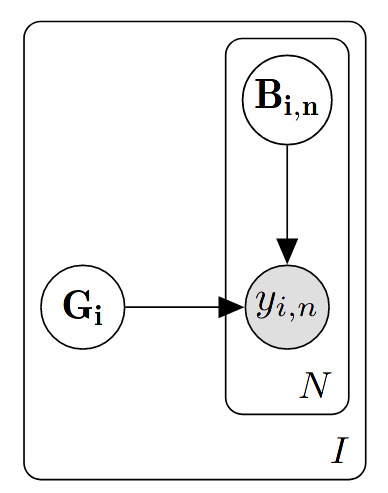

Our Proposal

Build many different models and infer the best possible label from their predictions.

MACE (Hovy et al., 2013)

The angry wolf ate the happy boy

Model 1

Model 2

...

Model N

JOY

SADNESS

SADNESS

...

Final Label

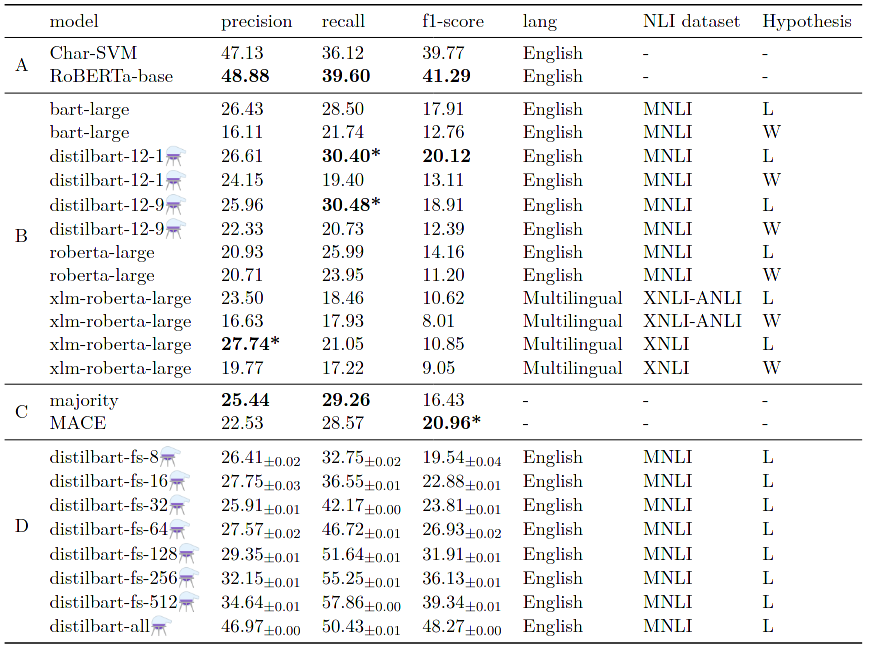

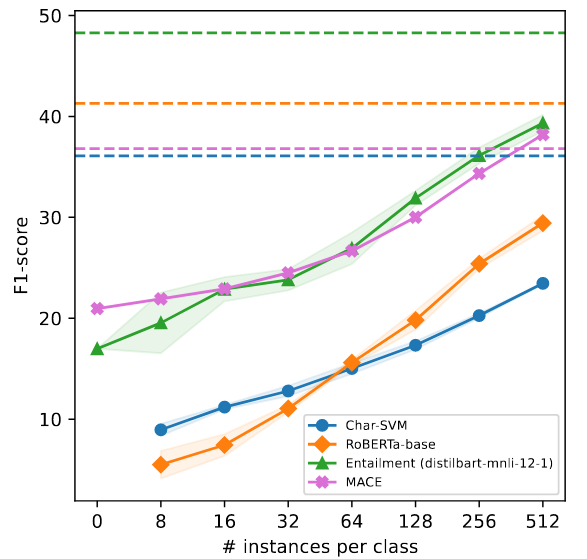
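As a point of comparison, the simplest way to turn N model predictions into one final label is majority voting. The sketch below shows that baseline only. It is not MACE, which additionally models how reliable each predictor is. Breaking ties alphabetically is an arbitrary assumption.

```python
from collections import Counter
from typing import Sequence


def majority_vote(predictions: Sequence[str]) -> str:
    """Return the most frequent label; ties are broken alphabetically."""
    counts = Counter(predictions)
    top = max(counts.values())
    return sorted(label for label, c in counts.items() if c == top)[0]


# Predictions of Model 1 ... Model N for one sentence, as in the diagram.
print(majority_vote(["JOY", "SADNESS", "SADNESS"]))
```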

Experiments

Unify Emotion

(Bostan and Klinger, 2018)

- Zero-shot models provide modest performance

- A model of aggregation helps

- Few-shot NLI models are almost as good as fully-supervised models

The benefits of a model of aggregation

- confidence value for each instance

- an estimate of each model's performance

- usually better than majority voting

- integrate labeled data, if available

- merge rule-based and deep learning systems

(see Passonneau and Carpenter, 2014)
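The first two benefits can be illustrated with a deliberately simplified aggregation model fitted with EM. This is a sketch under strong assumptions, not MACE itself: every model is assumed to have a single accuracy, to err uniformly over the other labels, and the label prior is uniform. The function name `aggregate_em` and its parameters are hypothetical.

```python
from typing import List, Sequence, Tuple


def aggregate_em(
    votes: Sequence[Sequence[str]],
    labels: Sequence[str],
    n_iter: int = 20,
    init_acc: float = 0.7,
) -> Tuple[List[List[float]], List[float]]:
    """votes[i][j] is the label predicted by model j for instance i.

    Returns per-instance label posteriors and per-model accuracy estimates.
    """
    n_models, k = len(votes[0]), len(labels)
    acc = [init_acc] * n_models
    posteriors: List[List[float]] = []
    for _ in range(n_iter):
        # E-step: posterior over the true label of each instance.
        posteriors = []
        for row in votes:
            weights = []
            for y in labels:
                w = 1.0
                for j, v in enumerate(row):
                    w *= acc[j] if v == y else (1.0 - acc[j]) / (k - 1)
                weights.append(w)
            total = sum(weights)
            posteriors.append([w / total for w in weights])
        # M-step: expected fraction of instances each model labeled correctly.
        for j in range(n_models):
            expected = sum(
                post[labels.index(row[j])] for row, post in zip(votes, posteriors)
            )
            acc[j] = min(max(expected / len(votes), 0.01), 0.99)
    return posteriors, acc


labels = ["JOY", "SADNESS"]
votes = [
    ["JOY", "JOY", "SADNESS"],
    ["SADNESS", "SADNESS", "SADNESS"],
    ["JOY", "JOY", "JOY"],
    ["SADNESS", "SADNESS", "JOY"],
]
posteriors, acc = aggregate_em(votes, labels)
final = [labels[p.index(max(p))] for p in posteriors]
confidence = [max(p) for p in posteriors]
print(final, confidence, acc)
```

The maximum posterior serves as a per-instance confidence value, and `acc` gives an unsupervised estimate of how much each model can be trusted.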

RANLP 2021

By Angelo
