Node.js

Getting Started

- Installation via nvm

- Executing scripts

- Installing packages

- Reading Environment Variables

- package.json

What is Node.js?

- Open source & cross-platform project.

- Built on Chrome's V8 JavaScript engine.

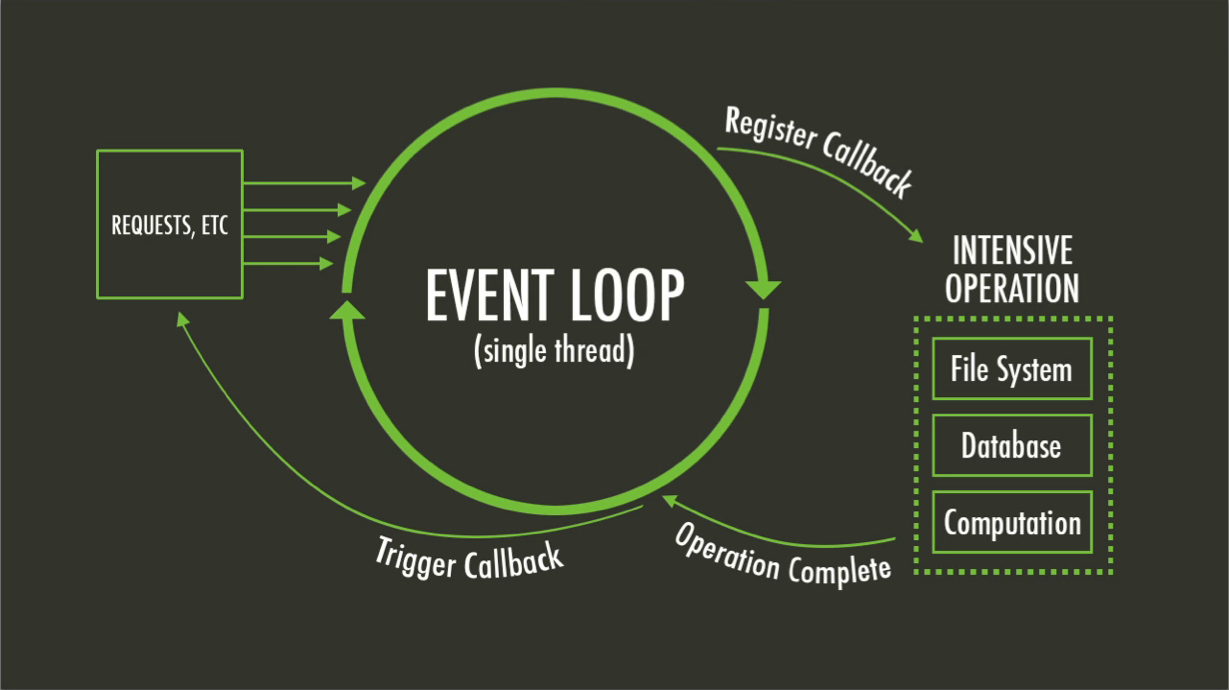

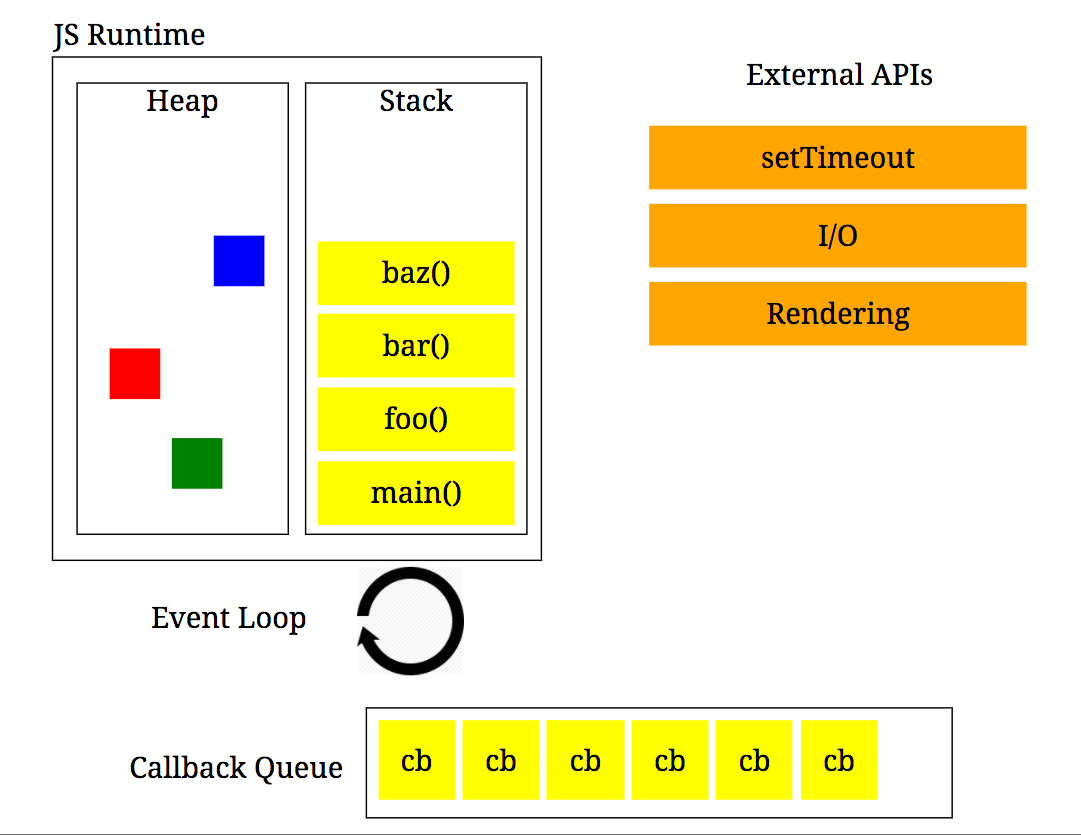

- Event-driven & non-blocking I/O.

- Well suited to real-time apps.

- Single threaded.

- Built-in async I/O, HTTP/HTTPS, file system, etc.

- Lets you run JavaScript on the server.

- Can act as a web server.

- Executes JavaScript

- Event Driven Concurrency

- Emits Events

- Listens for Responses

Simple Server
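A minimal sketch of such a server, using only the built-in http module. The reply text is arbitrary, and the sketch listens on port 0 (the OS picks any free port) so it cannot clash with another process; a real app would usually pass a fixed port such as 3000.

```javascript
const http = require('http');

// Every request, whatever its path, gets the same plain-text reply.
const server = http.createServer((req, res) => {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end('Hello from Node.js\n');
});

// Port 0 asks the OS for a free port; a real app would use e.g. 3000.
server.listen(0, () => {
  const { port } = server.address();
  console.log(`Server listening on http://localhost:${port}/`);
});
```

Opening the printed URL in a browser (or with curl) should show the greeting.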

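What Node.js Is Not

- NOT written purely in JS

- IS a C/C++ runtime that embeds the V8 JS engine (much of its standard library is written in JavaScript)

- NOT a framework; more like a runtime that exposes a set of APIs

- NOT multi-threaded, as far as your code is concerned

- IS single threaded: your JavaScript runs on one main thread, using events and callbacks (libuv keeps a thread pool for some I/O behind the scenes)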

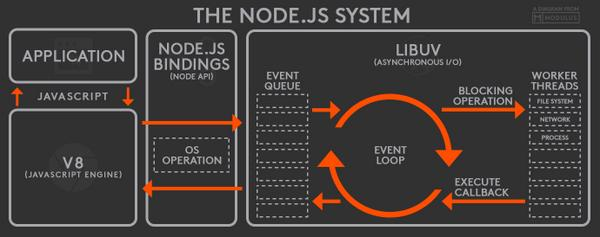

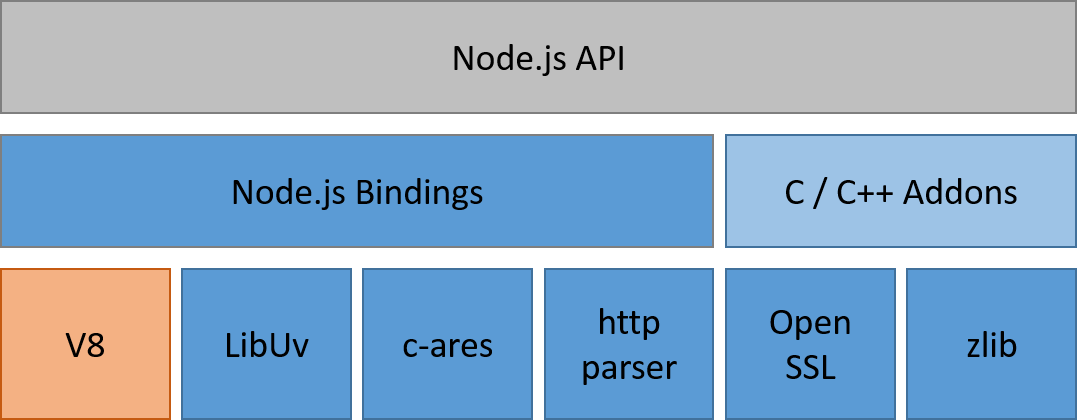

Node.js Architecture

- V8 - Google's open source JavaScript engine that resides in Chrome/Chromium browsers.

- libuv - a C library, originally developed for Node.js, that provides asynchronous I/O: asynchronous TCP & UDP sockets, the (famous) event loop, asynchronous DNS resolution, file system reads/writes, etc.

- Other Low-Level Components - such as c-ares, an HTTP parser, OpenSSL and zlib, mostly written in C/C++.

- Application - your code, your modules and Node.js' built-in modules, written in JavaScript (or compiled to JS from TypeScript, CoffeeScript, etc.).

Core APIs

Events

The event model used in the browser comes from the DOM rather than from JavaScript itself.

EventEmitter

Because the event model is tied to the DOM in browsers, Node provides the EventEmitter class (in the events module) for basic event functionality.

EventEmitter has a handful of methods, the main two being on and emit. The on method registers a listener for an event:

server.on('event', function(a, b, c) {

// do things

});

The on method takes two parameters: the name of the event to listen for and the function to call when that event is emitted.
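A minimal, runnable sketch of on and emit together. The event name 'greet' and its arguments are made up for illustration; the sketch also shows that emit() returns true only when the event had listeners.

```javascript
const EventEmitter = require('events');

const emitter = new EventEmitter();
const greetings = [];

// on(eventName, listener): register a listener for the made-up 'greet' event.
emitter.on('greet', (name, punctuation) => {
  greetings.push(`Hello, ${name}${punctuation}`);
});

// emit(eventName, ...args): synchronously call every 'greet' listener with these arguments.
const hadListeners = emitter.emit('greet', 'Node', '!');
const noListeners = emitter.emit('unknown-event');

console.log(greetings[0]); // Hello, Node!
console.log(hadListeners); // true
console.log(noListeners);  // false
```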

Custom emitters inherit from EventEmitter (with util.inherits() in older code, or with class ... extends EventEmitter) and are instantiated with the new keyword:

const util = require('util');

const EventEmitter = require('events');

function MyStream() {

EventEmitter.call(this);

}

util.inherits(MyStream, EventEmitter);

MyStream.prototype.write = function(data) {

this.emit('data', data);

};

const stream = new MyStream();

console.log(stream instanceof EventEmitter); // true

console.log(MyStream.super_ === EventEmitter); // true

stream.on('data', (data) => {

console.log(`Received data: "${data}"`);

});

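stream.write('It works!'); // Received data: "It works!"

The Node.js docs discourage util.inherits() in favor of ES6 class syntax, which gives the same result:

const EventEmitter = require('events');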

class MyStream extends EventEmitter {

write(data) {

this.emit('data', data);

}

}

const stream = new MyStream();

stream.on('data', (data) => {

console.log(`Received data: "${data}"`);

});

stream.write('With ES6'); // Received data: "With ES6"

Passing arguments and this to listeners

- The eventEmitter.emit() method allows an arbitrary set of arguments to be passed to the listener functions.

- It is important to keep in mind that when an ordinary listener function is called, the standard this keyword is intentionally set to reference the EventEmitter instance to which the listener is attached.

const EventEmitter = require('events');

class MyEmitter extends EventEmitter {}

const myEmitter = new MyEmitter();

myEmitter.on('event', function(a, b) {

console.log(a, b, this, this === myEmitter);

// Prints something like this (internal fields vary by Node.js version):

// a b MyEmitter {

// domain: null,

// _events: { event: [Function] },

// _eventsCount: 1,

// _maxListeners: undefined } true

});

myEmitter.emit('event', 'a', 'b');

It is possible to use ES6 arrow functions as listeners; however, when doing so, the this keyword will no longer reference the EventEmitter instance:

const myEmitter = new MyEmitter();

myEmitter.on('event', (a, b) => {

console.log(a, b, this);

// Prints: a b {}

});

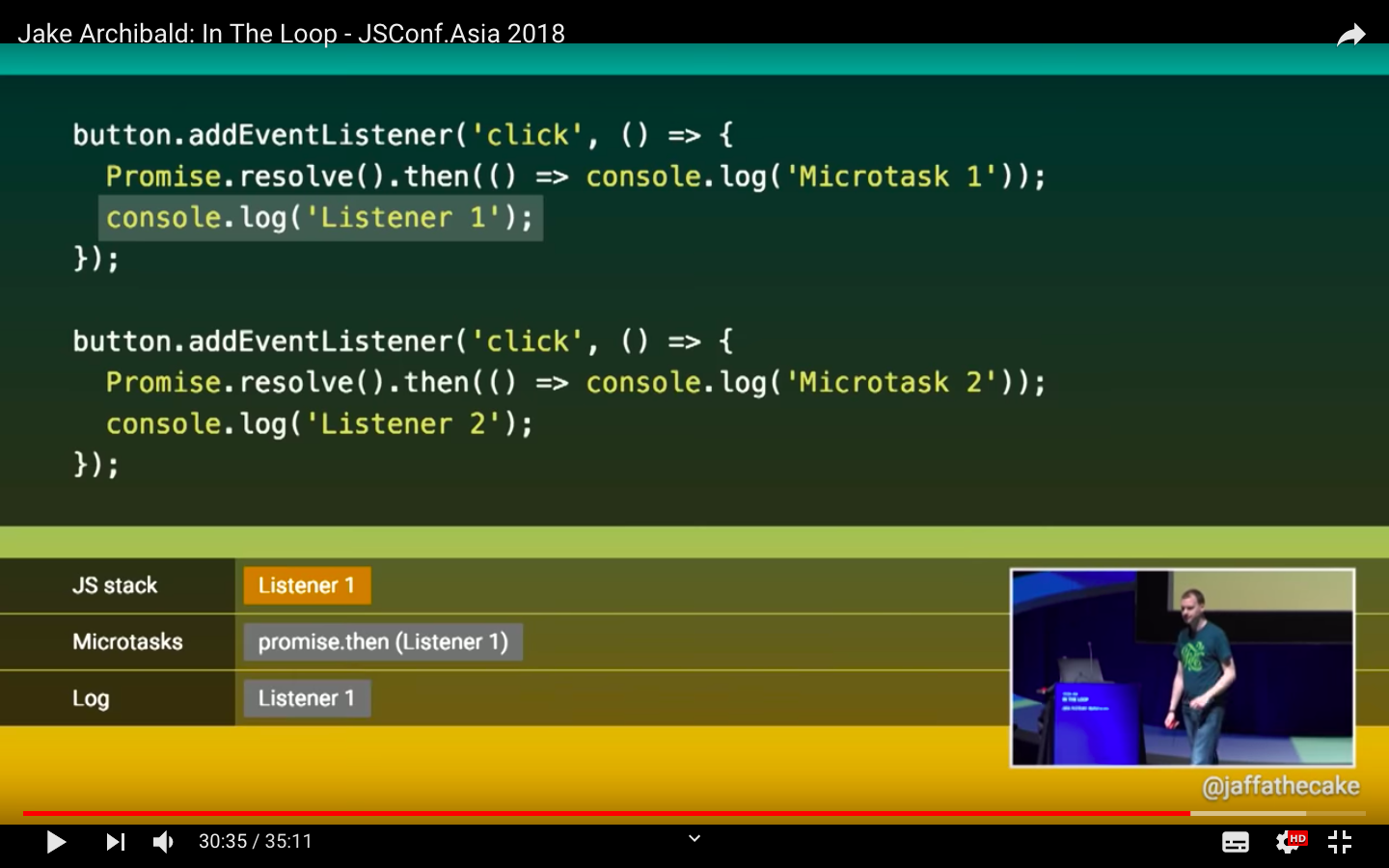

myEmitter.emit('event', 'a', 'b');Asynchronous vs. Synchronous

- The EventEmitter calls all listeners synchronously in the order in which they were registered.

- This ensures the proper sequencing of events and helps avoid race conditions and logic errors.

- When appropriate, a listener can switch to asynchronous operation with setImmediate() or process.nextTick():

const myEmitter = new MyEmitter();

myEmitter.on('event', (a, b) => {

setImmediate(() => {

console.log('this happens asynchronously');

});

});

myEmitter.emit('event', 'a', 'b');

Handling events only once

When a listener is registered using the eventEmitter.on() method, that listener will be invoked every time the named event is emitted.

Using the eventEmitter.once() method, it is possible to register a listener that is called at most once for a particular event. Once the event is emitted, the listener is unregistered and then called.

const myEmitter = new MyEmitter();

let m = 0;

myEmitter.once('event', () => {

console.log(++m);

});

myEmitter.emit('event');

// Prints: 1

myEmitter.emit('event');

// Ignored
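For contrast, a small sketch that emits the same event three times on two emitters, one listener registered with on() and one with once(). The two separate instances only keep the cases apart.

```javascript
const EventEmitter = require('events');

class MyEmitter extends EventEmitter {}

const withOn = new MyEmitter();
const withOnce = new MyEmitter();
let onCount = 0;
let onceCount = 0;

withOn.on('event', () => { onCount += 1; });       // stays registered
withOnce.once('event', () => { onceCount += 1; }); // removed before its first call

for (let i = 0; i < 3; i++) {
  withOn.emit('event');
  withOnce.emit('event');
}

console.log(onCount, onceCount);              // 3 1
console.log(withOnce.listenerCount('event')); // 0
```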

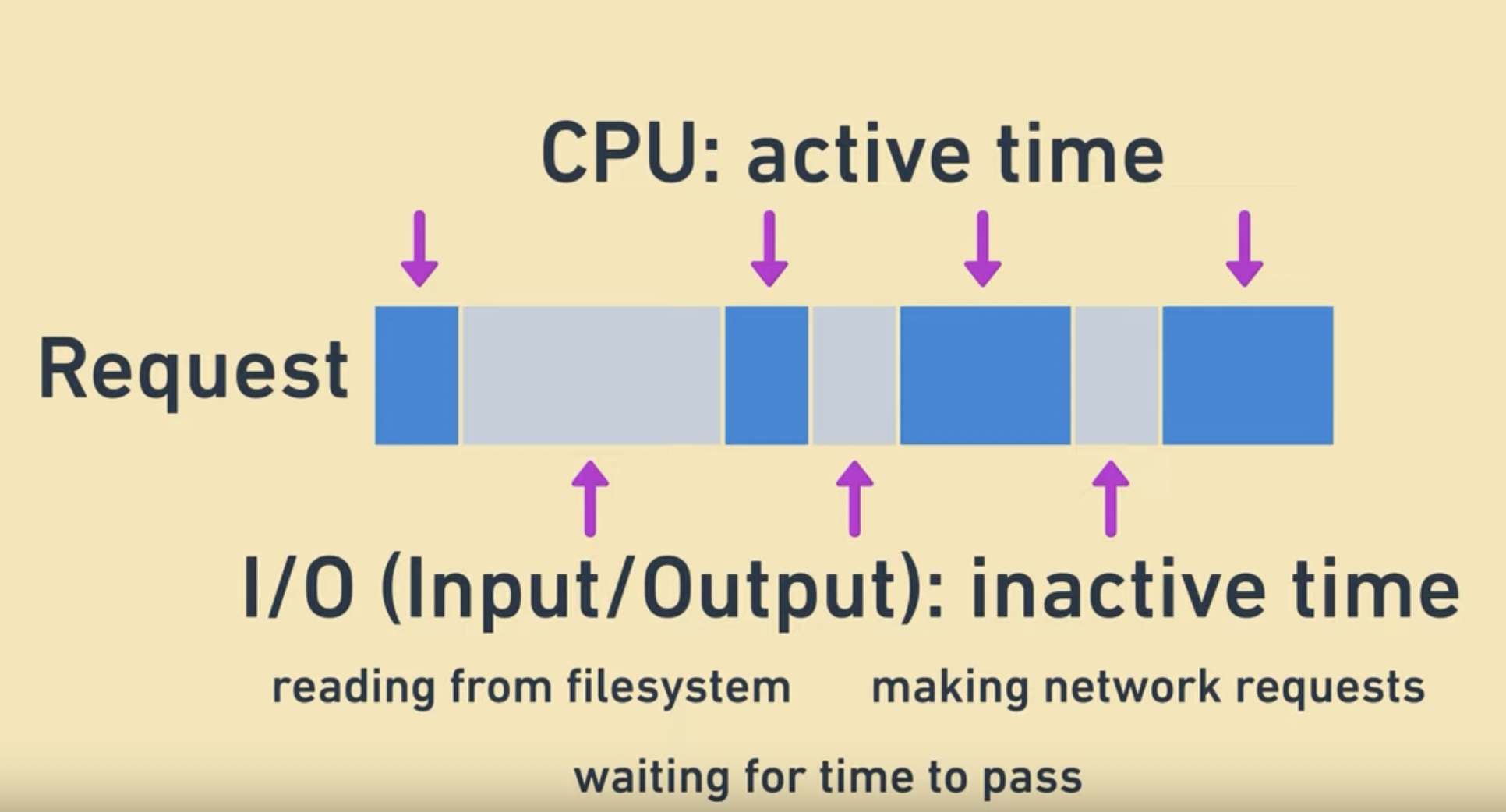

Blocking vs Non-Blocking

- Blocking is when the execution of additional JavaScript in the Node.js process must wait until a non-JavaScript operation completes.

- This happens because the event loop is unable to continue running JavaScript while a blocking operation is occurring.

- Synchronous methods in the Node.js standard library that use libuv are the most commonly used blocking operations.

Blocking methods execute synchronously and non-blocking methods execute asynchronously.
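A sketch contrasting the two forms of the same read. The scratch file name is hypothetical and is created in the OS temp directory only so the example does not depend on any existing file. The synchronous read holds up the next line until it finishes, while the line after fs.readFile() runs before its callback.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file so the sketch runs anywhere.
const file = path.join(os.tmpdir(), 'blocking-demo.txt');
fs.writeFileSync(file, 'some file contents');

const order = [];

// Blocking: nothing below this line runs until the whole file has been read.
const contents = fs.readFileSync(file, 'utf8');
order.push(`readFileSync returned ${contents.length} chars`);

// Non-blocking: readFile() returns at once; its callback runs on a later loop turn.
fs.readFile(file, 'utf8', (err, data) => {
  if (err) throw err;
  order.push(`readFile callback got ${data.length} chars`);
  console.log(order.join('\n'));
  fs.unlinkSync(file); // clean up
});

order.push('code after readFile() ran before its callback');
```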

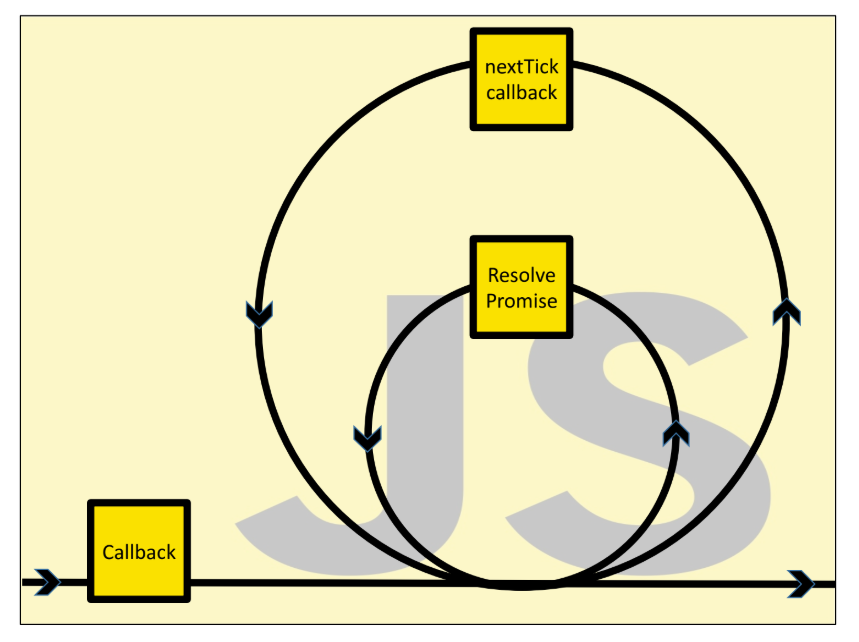

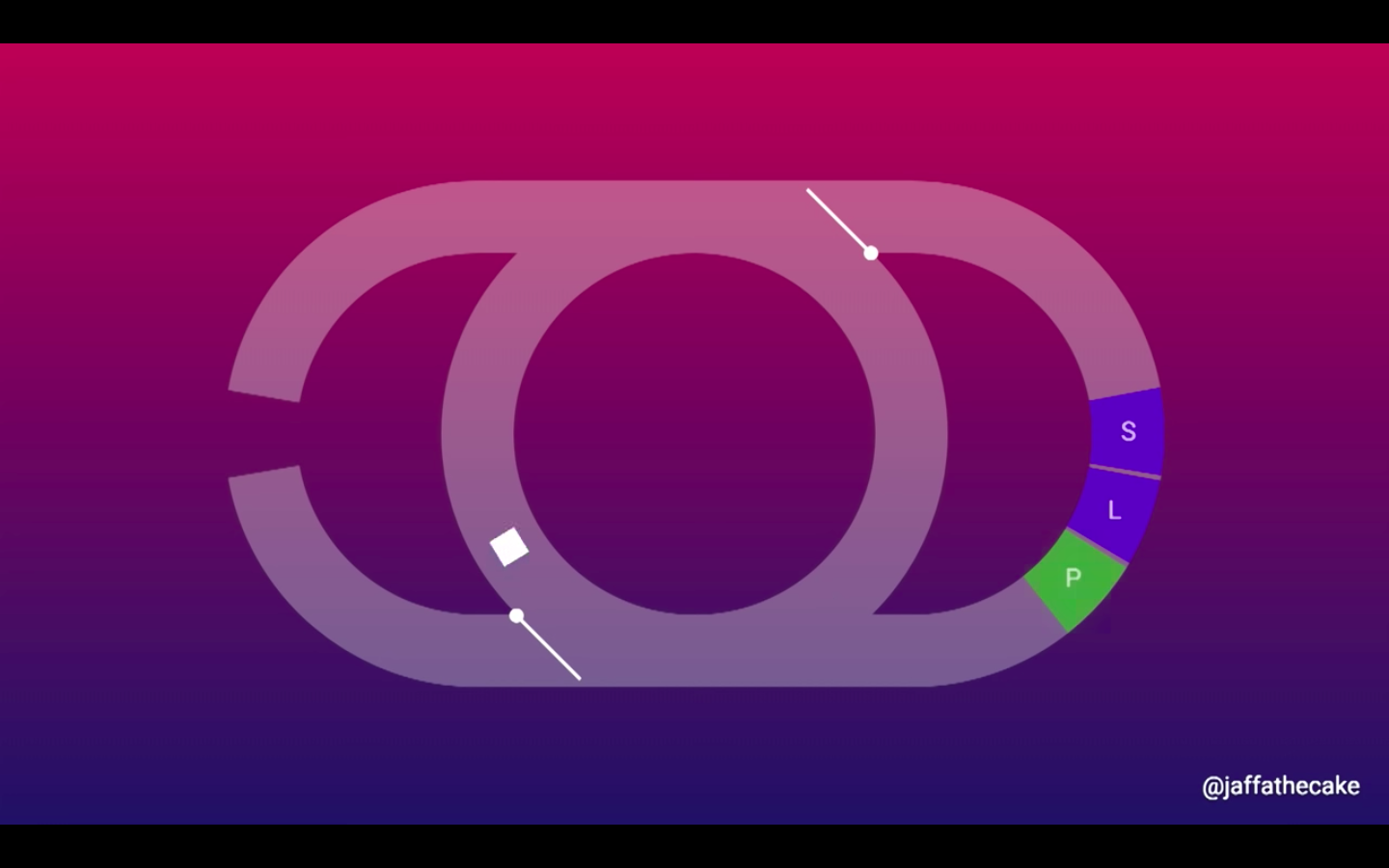

What is the Event Loop?

- The event loop is what allows Node.js to perform non-blocking I/O operations — despite the fact that JavaScript is single-threaded — by offloading operations to the system kernel whenever possible.

- Since most modern kernels are multi-threaded, they can handle multiple operations executing in the background.

- When one of these operations completes, the kernel tells Node.js so that the appropriate callback may be added to the poll queue to eventually be executed.

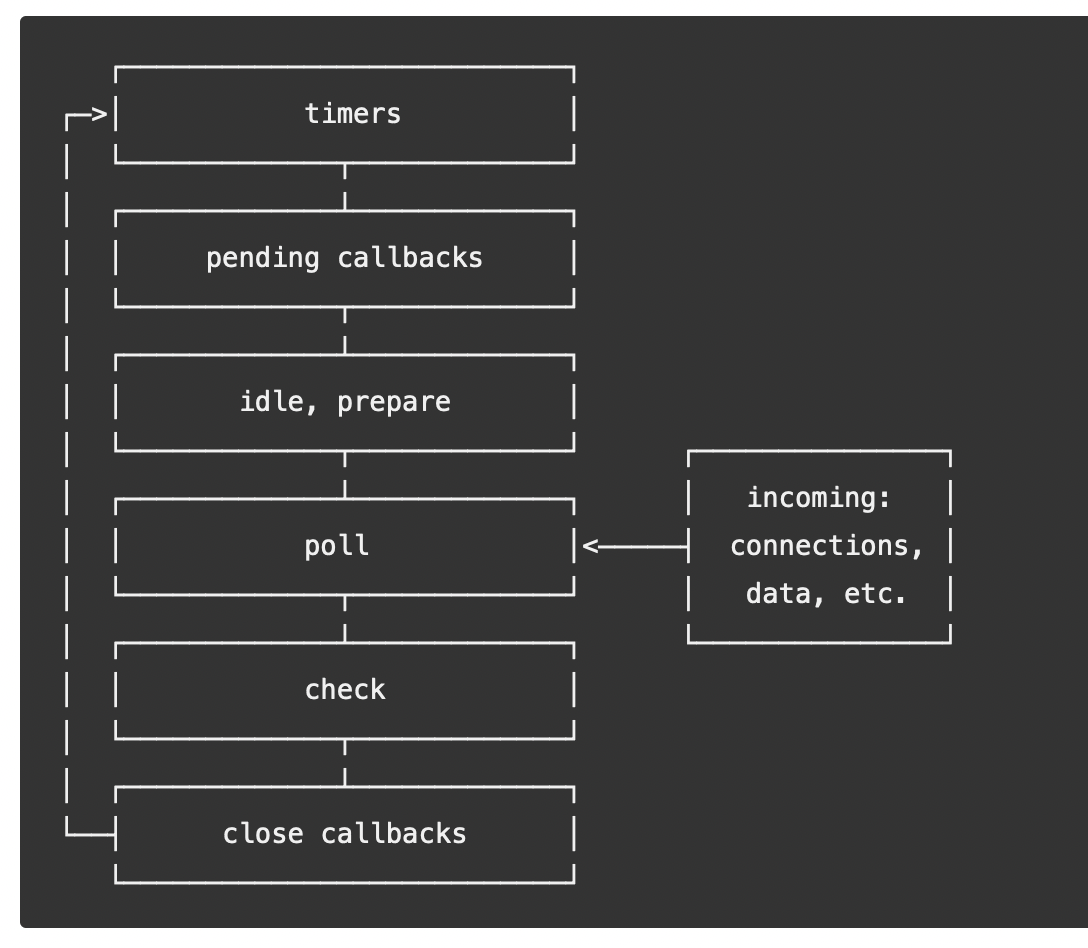

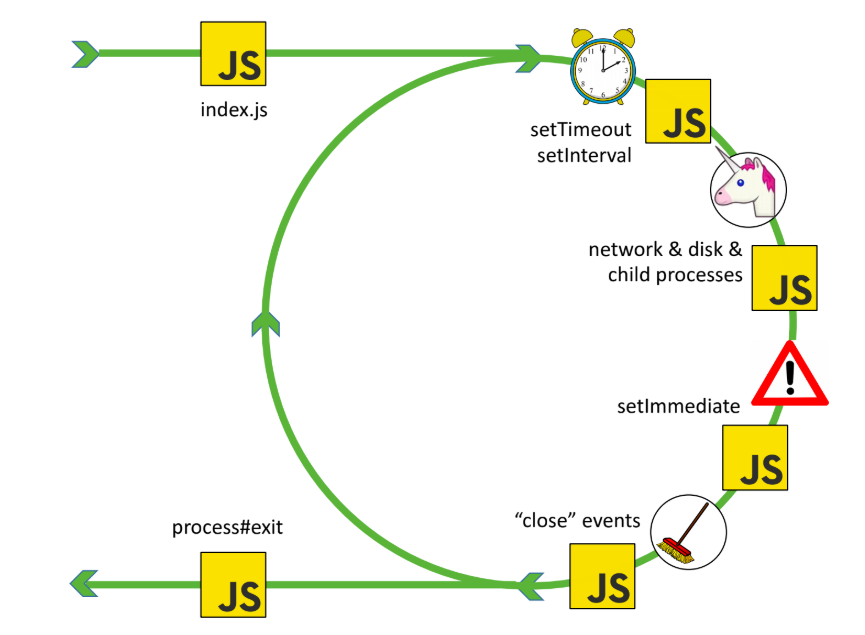

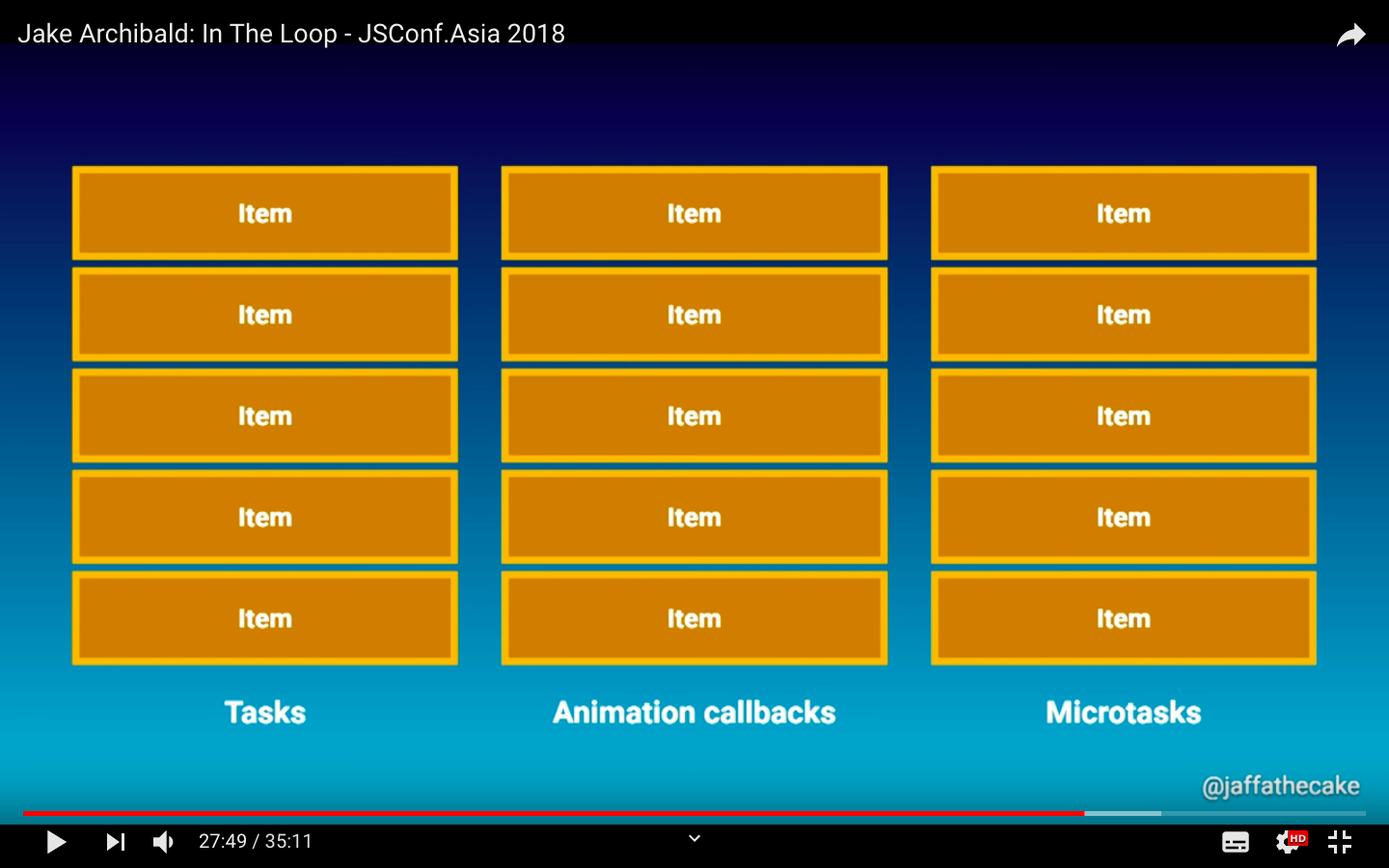

Event Loop

Phases Overview

- timers: this phase executes callbacks scheduled by setTimeout() and setInterval().

- pending callbacks: executes I/O callbacks deferred to the next loop iteration.

- idle, prepare: only used internally.

- poll: retrieve new I/O events; execute I/O related callbacks (almost all with the exception of close callbacks, the ones scheduled by timers, and setImmediate()); node will block here when appropriate.

- check: setImmediate() callbacks are invoked here.

- close callbacks: some close callbacks, e.g. socket.on('close', ...).

- Each phase has a FIFO queue of callbacks to execute.

- While each phase is special in its own way, generally, when the event loop enters a given phase, it will perform any operations specific to that phase, then execute callbacks in that phase's queue until the queue has been exhausted or the maximum number of callbacks has executed.

- When the queue has been exhausted or the callback limit is reached, the event loop will move to the next phase, and so on.

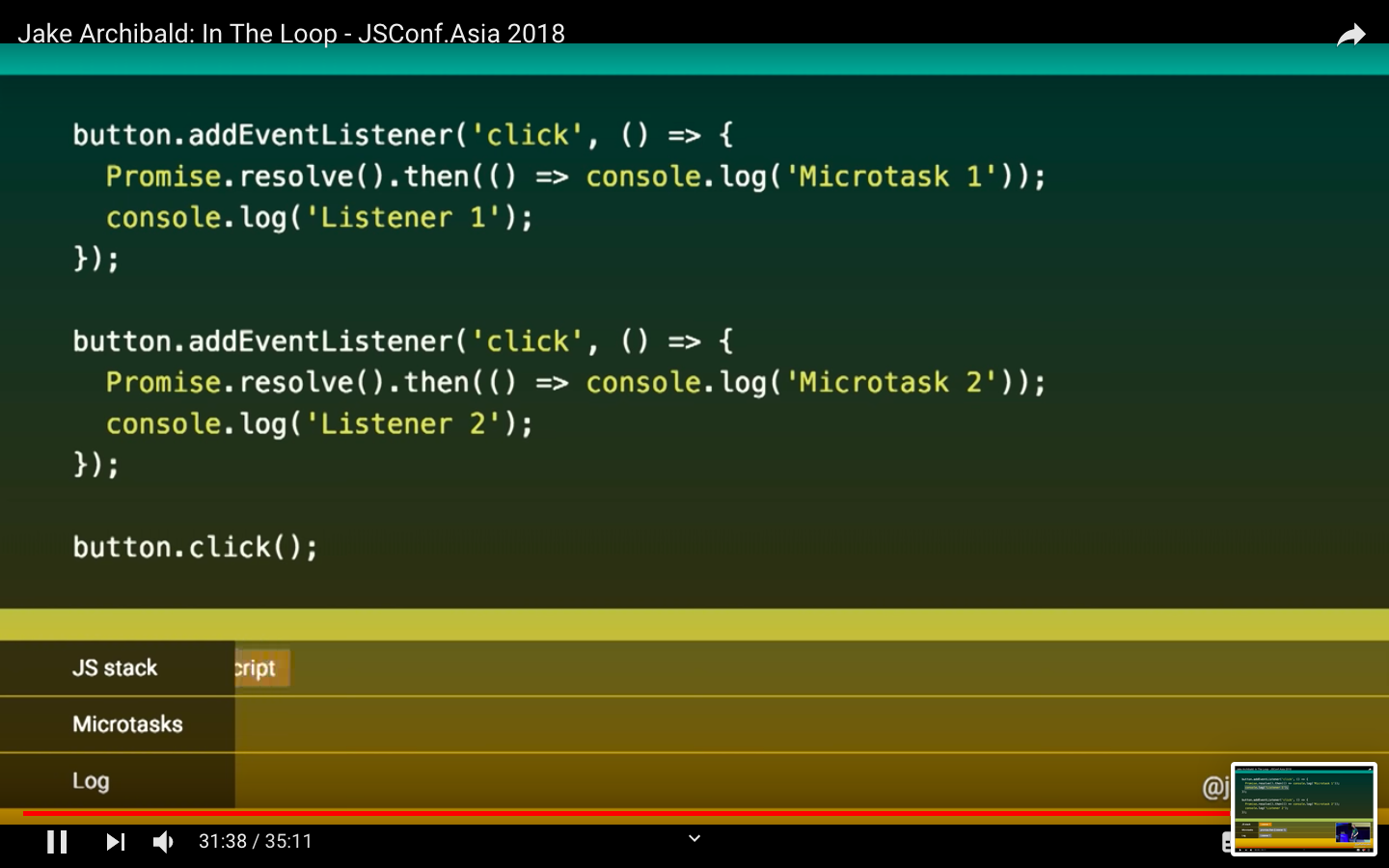

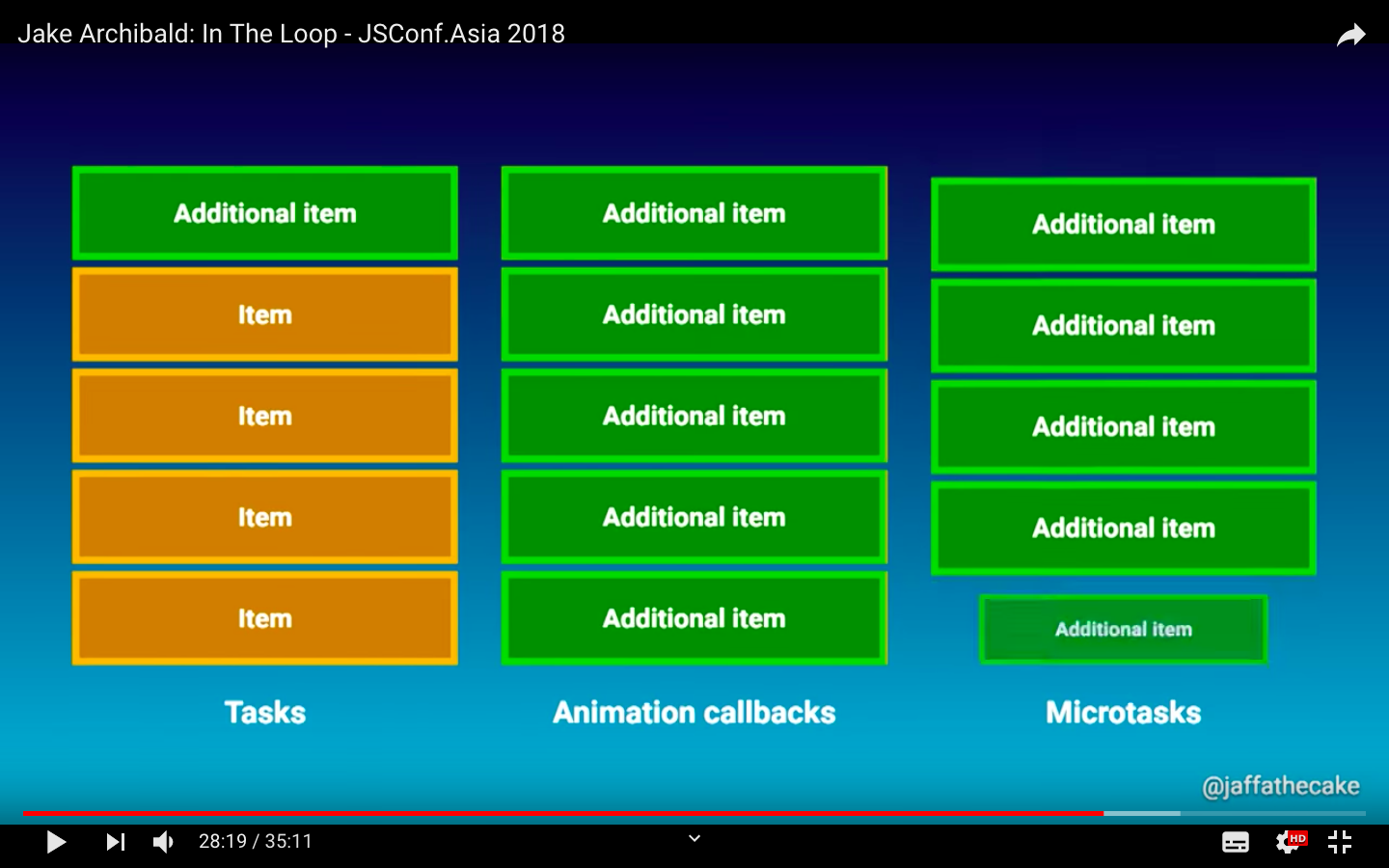

Microtask queue

In Node, there are also two special microtask queues that can have callbacks added to them while a phase is running.

- The first microtask queue handles callbacks that have been registered using process.nextTick().

- The second microtask queue handles promises that reject or resolve.

- Callbacks in the microtask queues take priority over callbacks in the phase’s normal queue, and callbacks in the next tick microtask queue run before callbacks in the promise microtask queue.

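// Caution: every .then() queues another microtask before the queue drains,

// so f() keeps the microtask queue busy forever and starves the event loop

// (timers, I/O and setImmediate() callbacks never get a turn).

function f() {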

return Promise.resolve()

.then(() => {

console.log(Date.now());

})

.then(f);

}

f();

A separate exercise: in what order are these lines printed?

console.log('1. Begin..');

setTimeout(() => console.log('2. setTimeout'), 0);

new Promise((resolve) => resolve()).then(

() => console.log('3. in a promise')

).then(

() => console.log('4. then of a then in promise')

);

setImmediate(() => console.log('5. setImmediate'));

const runThis = () => {

process.nextTick(() => console.log('6. process.nextTick'));

console.log('7. runThis function')

}

new Promise((res) => res()).then(

() => {

new Promise((res) => res()).then(

() => console.log('8. Promise inside a then')

)

}

);

console.log('9. Top level console');

runThis();

Output?

console.log('script start');

setTimeout(function() {

console.log('setTimeout');

}, 0);

Promise.resolve().then(function() {

console.log('promise1');

}).then(function() {

console.log('promise2');

});

console.log('script end');

const fs = require('fs');

setImmediate(() => console.log(1));

Promise.resolve().then(() => console.log(2));

process.nextTick(() => console.log(3));

fs.readFile(__filename, () => {

console.log(4);

setTimeout(() => console.log(5));

setImmediate(() => console.log(6));

process.nextTick(() => console.log(7));

});

console.log(8);

setImmediate() vs setTimeout()

setImmediate() and setTimeout() are similar, but behave in different ways depending on when they are called.

- setImmediate() is designed to execute a script once the current poll phase completes.

- setTimeout() schedules a script to be run after a minimum threshold in ms has elapsed.

- The order in which the timers are executed will vary depending on the context in which they are called.

- Since any of these operations may schedule more operations and new events processed in the poll phase are queued by the kernel, poll events can be queued while polling events are being processed.

- As a result, long running callbacks can allow the poll phase to run much longer than a timer's threshold.

Between each run of the event loop, Node.js checks if it is waiting for any asynchronous I/O or timers and shuts down cleanly if there are not any.

// timeout_vs_immediate.js

setTimeout(() => {

console.log('timeout');

}, 0);

setImmediate(() => {

console.log('immediate');

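});

Run from the main module, the order of the two callbacks is non-deterministic (it depends on how busy the process is):

$ node timeout_vs_immediate.js

timeout

immediate

$ node timeout_vs_immediate.js

immediate

timeout

However, if you move the two calls within an I/O cycle, the immediate callback is always executed first:

const fs = require('fs');

fs.readFile(__filename, () => {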

setTimeout(() => {

console.log('timeout');

}, 0);

setImmediate(() => {

console.log('immediate');

});

});

process.nextTick()

- process.nextTick() is not technically part of the event loop.

- Instead, the nextTickQueue will be processed after the current operation completes, regardless of the current phase of the event loop.

Why would that be allowed?

Part of it is a design philosophy where an API should always be asynchronous even where it doesn't have to be. Take this code snippet for example:

function apiCall(arg, callback) {

if (typeof arg !== 'string')

return process.nextTick(callback,

new TypeError('argument should be string'));

// otherwise: do the real work, then call callback(null, result)

}

process.nextTick() vs setImmediate()

We have two calls that are similar as far as users are concerned, but their names are confusing.

- process.nextTick() fires immediately on the same phase

- setImmediate() fires on the following iteration or 'tick' of the event loop

In essence, the names should be swapped. process.nextTick() fires more immediately than setImmediate(), but this is an artifact of the past which is unlikely to change.

We recommend developers use setImmediate() in all cases because it's easier to reason about (and it leads to code that's compatible with a wider variety of environments, like browser JS.)
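A quick way to see the naming quirk: even though setImmediate() is called first in this sketch, the process.nextTick() callback runs before it, right after the current synchronous code finishes.

```javascript
const order = [];

setImmediate(() => {
  order.push('setImmediate');
  console.log(order.join(' -> '));
  // synchronous code -> process.nextTick -> setImmediate
});
process.nextTick(() => order.push('process.nextTick'));
order.push('synchronous code');
```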

File System

The fs module provides an API for interacting with the file system in a manner closely modeled around standard POSIX functions.

All file system operations have synchronous, callback-based and promise-based forms.
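The promise-based API lives in fs/promises (also reachable as require('fs').promises) and pairs naturally with async/await. A minimal sketch of deleting a file that way; the scratch file name is hypothetical and the file is created first only so there is something to delete.

```javascript
const fsPromises = require('fs/promises');
const os = require('os');
const path = require('path');

async function deleteFile(file) {
  try {
    await fsPromises.unlink(file);
    console.log(`successfully deleted ${file}`);
  } catch (err) {
    // handle the error (e.g. err.code === 'ENOENT' if the file is missing)
    console.error(err);
  }
}

// Hypothetical scratch file in the OS temp directory.
const file = path.join(os.tmpdir(), 'hello-promises.txt');
const done = fsPromises.writeFile(file, 'hello').then(() => deleteFile(file));
```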

const fs = require('fs');

fs.unlink('/tmp/hello', (err) => {

if (err) throw err;

console.log('successfully deleted /tmp/hello');

});

The synchronous form throws on failure, so errors are handled with try...catch:

const fs = require('fs');

try {

fs.unlinkSync('/tmp/hello');

console.log('successfully deleted /tmp/hello');

} catch (err) {

// handle the error

}

File paths

Most fs operations accept filepaths that may be specified in the form of a string, a Buffer, or a URL object using the file: protocol.

const fs = require('fs');

fs.open('/open/some/file.txt', 'r', (err, fd) => {

if (err) throw err;

fs.close(fd, (err) => {

if (err) throw err;

});

});

Relative paths are resolved against the current working directory, as returned by process.cwd():

fs.open('file.txt', 'r', (err, fd) => {

if (err) throw err;

fs.close(fd, (err) => {

if (err) throw err;

});

});

Besides 'r' (open for reading only), common flags include:

- r+ open the file for reading and writing; an error occurs if the file does not exist.

- w+ open the file for reading and writing, positioning the stream at the beginning of the file. The file is created if it does not exist, or truncated if it does.

- a open the file for writing, positioning the stream at the end of the file. The file is created if it does not exist.

- a+ open the file for reading and writing, positioning the stream at the end of the file. The file is created if it does not exist.
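A small sketch of these flags, using the synchronous variants to keep it short (fs.open() accepts the same flag strings). It writes with the default 'w' flag, appends with 'a', reads through 'r+', and shows that 'r+' refuses to create a missing file. The scratch file name is hypothetical.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file in the OS temp directory.
const file = path.join(os.tmpdir(), 'flags-demo.txt');

fs.writeFileSync(file, 'first line\n');                 // default flag 'w': create or truncate
fs.writeFileSync(file, 'second line\n', { flag: 'a' }); // 'a': append at the end

// 'r+': read and write an existing file; here we only read its first 5 bytes.
const fd = fs.openSync(file, 'r+');
const head = Buffer.alloc(5);
fs.readSync(fd, head, 0, 5, 0);
fs.closeSync(fd);

const contents = fs.readFileSync(file, 'utf8');
console.log(head.toString()); // first
console.log(contents);        // first line, then second line

fs.unlinkSync(file);

// 'r+' does not create files, so opening the deleted file now fails.
let missingCode = null;
try {
  fs.openSync(file, 'r+');
} catch (err) {
  missingCode = err.code;
}
console.log(missingCode); // ENOENT
```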

File details

Every file comes with a set of details that we can inspect using Node.js.

const fs = require('fs')

fs.stat('test.txt', (err, stats) => {

if (err) {

console.error(err)

return

}

//we have access to the file stats in `stats`

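})

Among other things, the stats object tells us:

- if the file is a directory or a file, using stats.isFile() and stats.isDirectory()

- if the file is a symbolic link, using stats.isSymbolicLink() (only meaningful with fs.lstat(), because fs.stat() follows links)

- the file size in bytes, using stats.size.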
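A sketch of the stat vs lstat difference for symbolic links, using the synchronous variants and a throwaway directory with hypothetical file names. Creating symlinks may require extra privileges on Windows.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Throwaway directory with one file and one symlink pointing at it.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'stats-demo-'));
const target = path.join(dir, 'test.txt');
const link = path.join(dir, 'link.txt');
fs.writeFileSync(target, 'hello'); // 5 bytes
fs.symlinkSync(target, link);

const viaStat = fs.statSync(link);   // describes test.txt (the link is followed)
const viaLstat = fs.lstatSync(link); // describes the link itself

console.log(viaStat.isFile(), viaStat.isDirectory(), viaStat.isSymbolicLink()); // true false false
console.log(viaLstat.isSymbolicLink()); // true
console.log(viaStat.size);              // 5

fs.rmSync(dir, { recursive: true }); // clean up
```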

File Paths

Every file in the system has a path.

There's a module 'path' for dealing with path-related things.

const path = require("path");

const notes = "/Users/arfatsalman/Desktop/node/filetoread.txt";

console.log(path.dirname(notes)); // /Users/arfatsalman/Desktop/node

console.log(path.basename(notes)); // filetoread.txt

console.log(path.extname(notes)); // .txt

console.log(path.basename(notes, path.extname(notes))); // filetoread

Reading and Writing files

- fs.readFile

- fs.readFileSync

- fs.promises.readFile (promise version)

- fs.writeFile

- fs.writeFileSync

- fs.promises.writeFile (promise version)

- fs.appendFile

- fs.appendFileSync

- fs.promises.appendFile (promise version)
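A sketch that exercises each style on one scratch file (hypothetical name in the OS temp directory): synchronous calls block and throw, callback calls report errors as the first argument, and the promise versions from fs/promises work with await. The callback call is wrapped in a Promise only so the steps run in order.

```javascript
const fs = require('fs');
const fsPromises = require('fs/promises');
const os = require('os');
const path = require('path');

const file = path.join(os.tmpdir(), 'read-write-demo.txt');

async function main() {
  // Synchronous: blocks until done, throws on error.
  fs.writeFileSync(file, 'one\n');
  fs.appendFileSync(file, 'two\n');

  // Callback: errors arrive as the first callback argument.
  await new Promise((resolve, reject) => {
    fs.appendFile(file, 'three\n', (err) => (err ? reject(err) : resolve()));
  });

  // Promise: await the result (or use .then()).
  await fsPromises.appendFile(file, 'four\n');
  const text = await fsPromises.readFile(file, 'utf8');

  await fsPromises.unlink(file); // clean up
  return text;
}

const result = main();
result.then((text) => console.log(text)); // one, two, three, four on separate lines
```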

Folders

- fs.access: to check if the folder exists and Node.js can access it with its permissions.

- fs.mkdir / fs.mkdirSync: to create a new folder.

- fs.readdir / fs.readdirSync: to read the contents of a directory.

- fs.existsSync: to check if a file / folder exists.

- fs.rename / fs.renameSync: to rename a folder.

- fs.rmdir / fs.rmdirSync: to remove an empty folder (fs.rm with { recursive: true } removes a folder and its contents).
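A synchronous sketch of these folder operations inside a throwaway temp directory; 'notes' and 'archive' are hypothetical folder names.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Throwaway base directory for the demo.
const base = fs.mkdtempSync(path.join(os.tmpdir(), 'folders-demo-'));
const folder = path.join(base, 'notes');
const renamed = path.join(base, 'archive');

if (!fs.existsSync(folder)) {
  fs.mkdirSync(folder); // create the folder
}
// Throws if the folder is not readable and writable by this process.
fs.accessSync(folder, fs.constants.R_OK | fs.constants.W_OK);

fs.writeFileSync(path.join(folder, 'a.txt'), 'a');
const listing = fs.readdirSync(folder); // [ 'a.txt' ]

fs.renameSync(folder, renamed); // rename the folder
const existsAfterRename = [fs.existsSync(folder), fs.existsSync(renamed)]; // [ false, true ]

fs.unlinkSync(path.join(renamed, 'a.txt')); // rmdir only removes empty folders
fs.rmdirSync(renamed);
fs.rmdirSync(base);

console.log(listing, existsAfterRename);
```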

OS

The os module provides a number of operating system-related utility methods.

os.EOL

os.arch()

os.constants

os.cpus()

os.endianness()

os.freemem()

os.homedir()

os.hostname()

os.loadavg()

os.networkInterfaces()
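A short sketch calling several of these methods. The printed values depend entirely on the machine it runs on.

```javascript
const os = require('os');

const info = {
  arch: os.arch(),                                   // e.g. 'x64' or 'arm64'
  cpuCount: os.cpus().length,
  freeMemMB: Math.round(os.freemem() / 1024 / 1024),
  homedir: os.homedir(),
  hostname: os.hostname(),
  loadavg: os.loadavg(),                             // 1, 5 and 15 minute averages ([0, 0, 0] on Windows)
  endianness: os.endianness(),                       // 'BE' or 'LE'
};

console.log(info);

// os.EOL is the platform's line ending: '\n' on POSIX, '\r\n' on Windows.
const twoLines = ['first', 'second'].join(os.EOL);
console.log(twoLines);
```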

Process

- The process object is a global that provides information about, and control over, the current Node.js process.

- As a global, it is always available to Node.js applications without using require().

Process Events

The process object is an instance of EventEmitter.

Event: 'beforeExit'

- The 'beforeExit' event is emitted when Node.js empties its event loop and has no additional work to schedule.

- Normally, the Node.js process will exit when there is no work scheduled, but a listener registered on the 'beforeExit' event can make asynchronous calls, and thereby cause the Node.js process to continue.

- The 'beforeExit' event is not emitted for conditions causing explicit termination, such as calling process.exit() or uncaught exceptions.

- 'beforeExit' should not be used as an alternative to the 'exit' event unless the intention is to schedule additional work.
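A sketch of a 'beforeExit' listener that schedules more work twice and then lets the process exit; the limit of two extra rounds is arbitrary. Each scheduled timer refills the event loop, so 'beforeExit' fires again once that timer has run.

```javascript
let extraRounds = 0;

process.on('beforeExit', () => {
  // The loop is empty; scheduling async work here keeps the process alive.
  if (extraRounds < 2) {
    extraRounds += 1;
    setTimeout(() => console.log(`extra round ${extraRounds}`), 10);
  }
  // On the third 'beforeExit' nothing is scheduled, so the process exits.
});

process.on('exit', (code) => {
  console.log(`exit with code ${code} after ${extraRounds} extra rounds`);
});
```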

Event: 'exit'

The 'exit' event is emitted when the Node.js process is about to exit as a result of either:

- The process.exit() method being called explicitly;

- The Node.js event loop no longer having any additional work to perform.

There is no way to prevent the exiting of the event loop at this point, and once all 'exit' listeners have finished running the Node.js process will terminate. Listeners must therefore only perform synchronous operations: any asynchronous work they schedule is abandoned.

Event: 'uncaughtException'

- The 'uncaughtException' event is emitted when an uncaught JavaScript exception bubbles all the way back to the event loop.

- By default, Node.js handles such exceptions by printing the stack trace to stderr and exiting.

- Adding a handler for the 'uncaughtException' event overrides this default behavior.

const fs = require('fs');

process.on('uncaughtException', (err) => {

fs.writeSync(1, `Caught exception: ${err}\n`);

});

setTimeout(() => {

console.log('This will still run.');

}, 500);

// Intentionally cause an exception, but don't catch it.

nonexistentFunc();

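console.log('This will not run.');

It is not safe to resume normal operation after 'uncaughtException': the process may be in an undefined state. Use the handler for synchronous cleanup (closing resources, logging) and then exit.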

Event: 'unhandledRejection'

- The 'unhandledRejection' event is emitted whenever a Promise is rejected and no error handler is attached to the promise within a turn of the event loop.

- When programming with Promises, exceptions are encapsulated as "rejected promises".

- Rejections can be caught and handled using promise.catch() and are propagated through a Promise chain.

process.on('unhandledRejection', (reason, p) => {

console.log('Unhandled Rejection at:', p, 'reason:', reason);

// application specific logging, throwing an error, or other logic here

});

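// Hypothetical stand-ins so the snippet runs on its own:

const somePromise = Promise.resolve('{"ok": true}');

const reportToUser = (value) => console.log(value);

somePromise.then((res) => {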

return reportToUser(JSON.pasre(res)); // note the typo (`pasre`)

}); // no `.catch` or `.then`

process.argv

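- The process.argv property returns an array containing the command line arguments passed when the Node.js process was launched.

- The first element will be process.execPath, the second the path to the JavaScript file being executed, and the rest any additional command-line arguments.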

// print process.argv

process.argv.forEach((val, index) => {

console.log(`${index}: ${val}`);

});

process.env

The process.env property returns an object containing the user environment.
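A sketch of reading environment variables with fallbacks. PORT and NODE_ENV are conventional names used here as examples; values set in the shell, e.g. PORT=8080 NODE_ENV=production node app.js (app.js being a placeholder script name), show up in process.env as strings.

```javascript
// Values in process.env are always strings (or undefined when unset).
const port = Number(process.env.PORT) || 3000;      // fall back to 3000
const mode = process.env.NODE_ENV || 'development'; // fall back to 'development'
const isProduction = mode === 'production';

console.log({ port, mode, isProduction });
```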

process.exit([code])

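- The process.exit() method instructs Node.js to terminate the process synchronously with an exit status of code.

- If code is omitted, exit uses either the 'success' code 0 or the value of process.exitCode if it has been set.

- process.exit() forces the process to exit as soon as possible, even if asynchronous operations (such as pending writes to process.stdout) are still in progress. Setting process.exitCode and letting the process exit naturally is usually safer.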

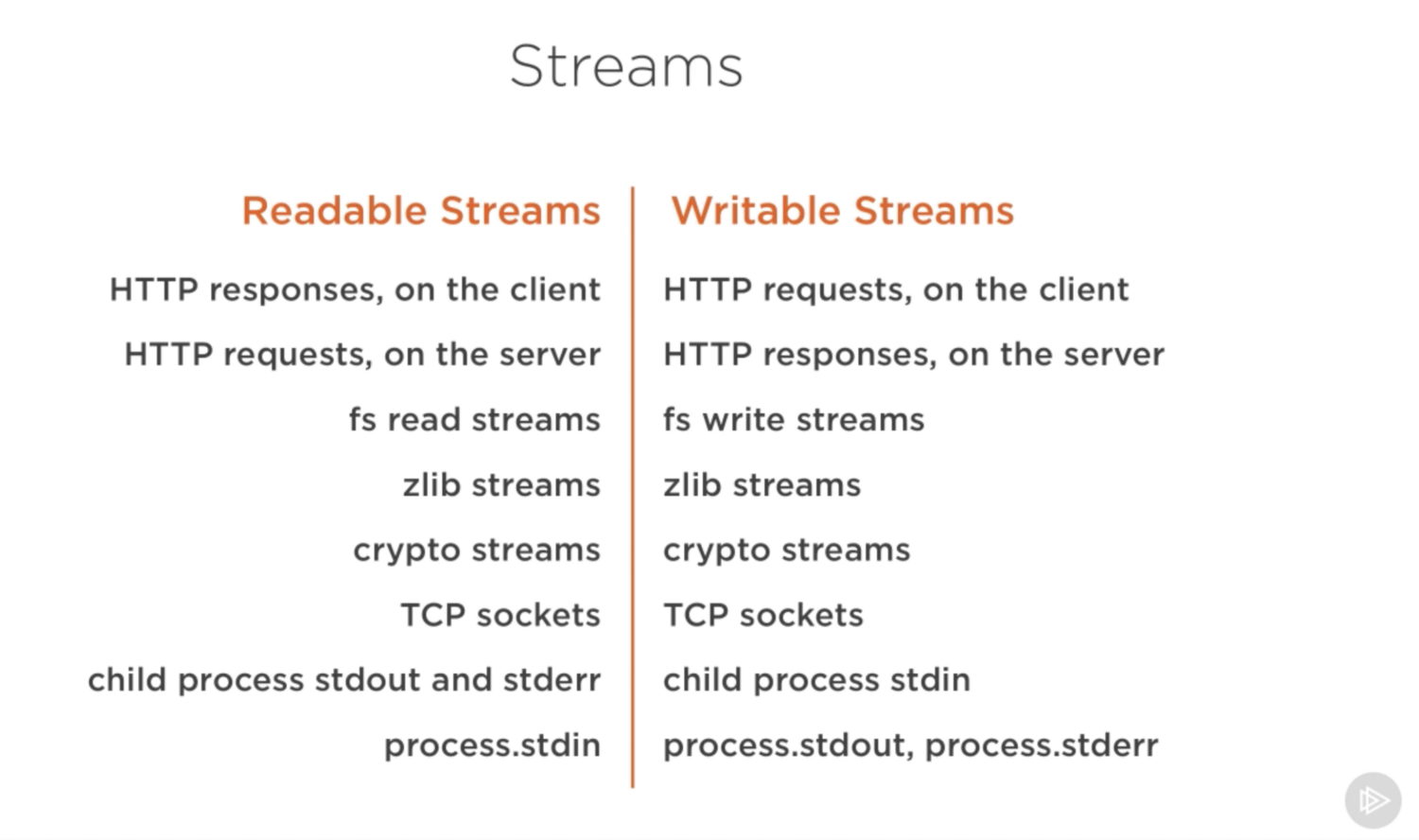

Streams

- Streams are collections of data — just like arrays or strings.

- The difference is that streams might not be available all at once, and they don’t have to fit in memory.

- This makes streams really powerful when working with large amounts of data, or data that’s coming from an external source one chunk at a time.

- They also give us the power of composability in our code.

There are four fundamental stream types in Node.js: Readable, Writable, Duplex, and Transform streams.

- A readable stream is an abstraction for a source from which data can be consumed. An example of that is the fs.createReadStream method.

- A writable stream is an abstraction for a destination to which data can be written. An example of that is the fs.createWriteStream method.

- A duplex stream is both Readable and Writable. An example of that is a TCP socket.

- A transform stream is basically a duplex stream that can be used to modify or transform the data as it is written and read. An example of that is the zlib.createGzip stream to compress the data using gzip. You can think of a transform stream as a function where the input is the writable stream part and the output is readable stream part.


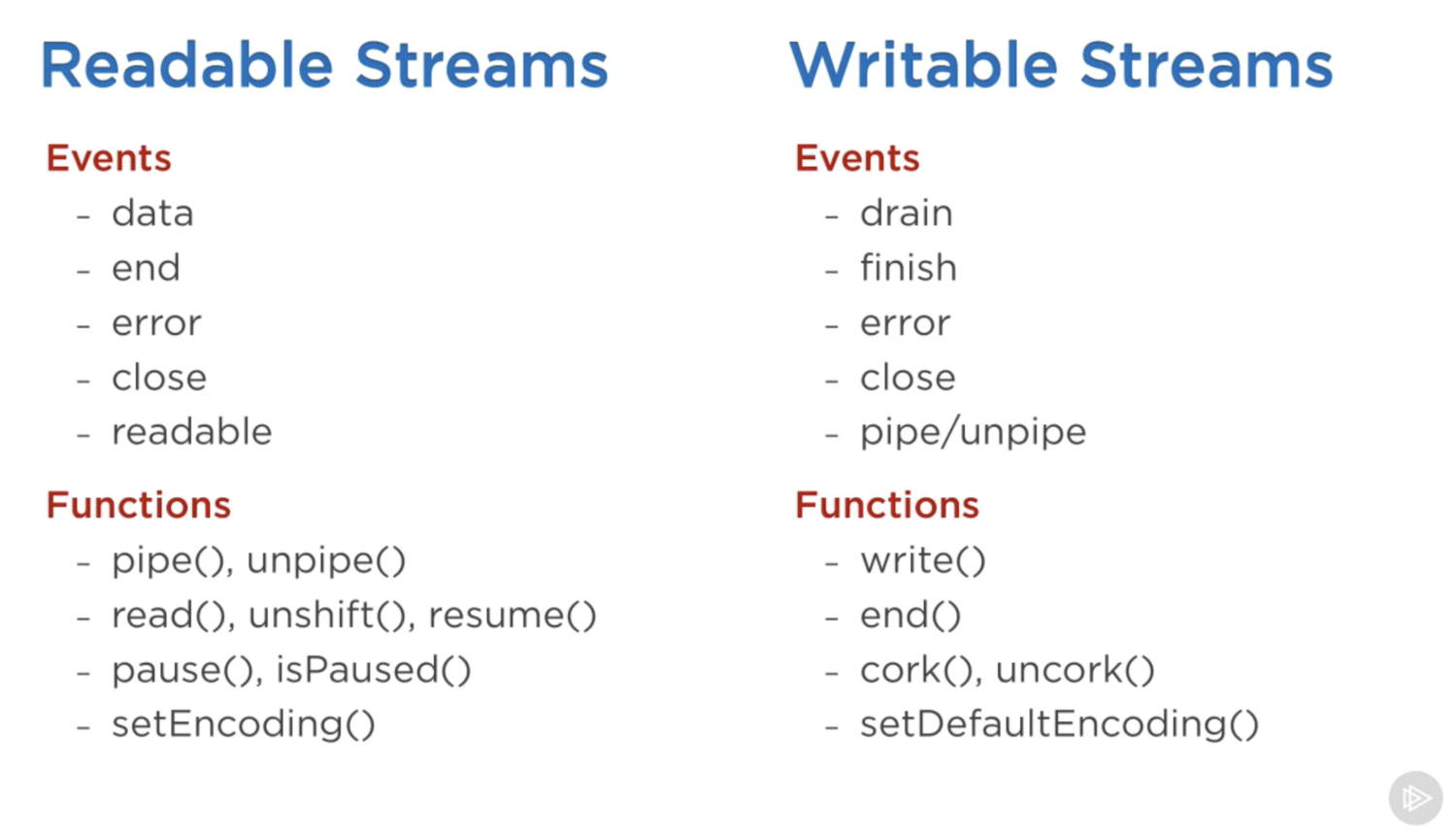

All streams are instances of EventEmitter. They emit events that can be used to read and write data.
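A sketch of a transform stream built with the stream module's Transform class: whatever is written to its writable side comes out upper-cased on its readable side, and the consumer listens for its 'data' and 'end' events like any other EventEmitter. The input chunks are made up; Readable.from() just turns them into a readable stream.

```javascript
const { Readable, Transform } = require('stream');

const upperCase = new Transform({
  // Called once per chunk written to the writable side.
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase()); // push the result to the readable side
  },
});

const output = [];
Readable.from(['hello ', 'streams'])
  .pipe(upperCase)
  .on('data', (chunk) => output.push(chunk.toString()))
  .on('end', () => console.log(output.join(''))); // HELLO STREAMS
```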

The pipe method

readableSrc.pipe(writableDest)

The pipe method returns the destination stream, which is what makes chaining possible.

For streams a (readable), b and c (duplex), and d (writable), we can:

a.pipe(b).pipe(c).pipe(d)

// Which is equivalent to:

a.pipe(b)

b.pipe(c)

c.pipe(d)

// Which, in Linux, is equivalent to:

// $ a | b | c | d

Stream events

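- Besides reading from a readable stream source and writing to a writable destination, the pipe method automatically manages a few things along the way.

- For example, it handles end-of-file and the cases when one stream is slower or faster than the other (backpressure). It does not forward errors, though: an error on one stream does not close the other, which is why stream.pipeline() is often preferred.

// Roughly what readable.pipe(writable) does (ignoring backpressure):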

readable.on('data', (chunk) => {

writable.write(chunk);

});

readable.on('end', () => {

writable.end();

});

The most important events on a readable stream are:

- The data event, which is emitted whenever the stream passes a chunk of data to the consumer

- The end event, which is emitted when there is no more data to be consumed from the stream.

The most important events on a writable stream are:

- The drain event, which is a signal that the writable stream can receive more data.

- The finish event, which is emitted when all data has been flushed to the underlying system.
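To see data, end, drain and finish working together, here is a sketch of roughly what pipe() does including backpressure: stop reading when write() returns false, resume on 'drain', and end the writable on 'end'. The slow destination with a 4-byte buffer is hypothetical, chosen only so that backpressure kicks in immediately.

```javascript
const { Readable, Writable } = require('stream');

const received = [];

// Hypothetical slow sink: tiny buffer, and each write takes a few milliseconds.
const writable = new Writable({
  highWaterMark: 4, // bytes buffered before write() starts returning false
  write(chunk, encoding, callback) {
    received.push(chunk.toString());
    setTimeout(callback, 5);
  },
});

const readable = Readable.from(['aaaa', 'bbbb', 'cccc', 'dddd']);

readable.on('data', (chunk) => {
  // write() returns false when the sink's buffer is full...
  if (!writable.write(chunk)) {
    readable.pause();                                // ...so stop reading
    writable.once('drain', () => readable.resume()); // until the sink has drained
  }
});

readable.on('end', () => writable.end()); // no more data: close the sink
writable.on('finish', () => console.log(received.join(''))); // everything flushed
```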

Paused and Flowing Modes of Readable Streams

Readable streams have two main modes that affect the way we can consume them:

- They can be either in the paused mode

- Or in the flowing mode

- All readable streams start in the paused mode by default but they can be easily switched to flowing and back to paused when needed.

- When a readable stream is in the paused mode, we can use the read() method to read from the stream on demand.

- In the flowing mode, data can actually be lost if no consumers are available to handle it.

- This is why, when we have a readable stream in flowing mode, we need a data event handler.
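A sketch of consuming a readable stream in paused mode: attaching a 'readable' listener (rather than a 'data' listener) keeps the stream paused, and read() pulls the buffered chunks on demand until it returns null. The input chunks are made up.

```javascript
const { Readable } = require('stream');

const readable = Readable.from(['a', 'b', 'c']);
const pulled = [];

readable.on('readable', () => {
  let chunk;
  // read() returns null once the internal buffer is empty for now.
  while ((chunk = readable.read()) !== null) {
    pulled.push(chunk);
  }
});

readable.on('end', () => console.log(pulled)); // [ 'a', 'b', 'c' ]
```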

Implementing Streams

Working with streams involves two different tasks:

- The task of consuming them.

- The task of implementing the streams.

Implementing a Writable Stream

const { Writable } = require('stream');

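// One option: extend the Writable class (body omitted here).

class myWritableStream extends Writable {

}

// A simpler option: pass a write() implementation to the Writable constructor.

const outStream = new Writable({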

write(chunk, encoding, callback) {

console.log(chunk.toString());

callback();

}

});

process.stdin.pipe(outStream); // echoes whatever is typed into stdin

This write method takes three arguments.

- The chunk is usually a buffer unless we configure the stream differently.

- The encoding argument is needed in that case, but usually we can ignore it.

- The callback is a function that we need to call after we’re done processing the data chunk. It’s what signals whether the write was successful or not. To signal a failure, call the callback with an error object.
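A sketch of the class-based option, showing both outcomes of the callback. When extending Writable, the method to implement is _write(). The CollectingStream class and its rule of rejecting blank chunks are hypothetical, made up for illustration.

```javascript
const { Writable } = require('stream');

// Hypothetical sink: collects text chunks and rejects blank ones.
class CollectingStream extends Writable {
  constructor(options) {
    super(options);
    this.lines = [];
  }

  _write(chunk, encoding, callback) {
    const text = chunk.toString();
    if (text.trim() === '') {
      callback(new Error('blank chunk')); // failure: the stream emits 'error'
      return;
    }
    this.lines.push(text);
    callback(); // success: ready for the next chunk
  }
}

const sink = new CollectingStream();
let failure = null;
sink.on('error', (err) => { failure = err.message; });

sink.write('first');
sink.write('second');
sink.write('   ');

setTimeout(() => console.log(sink.lines, failure), 10);
// [ 'first', 'second' ] blank chunk
```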

Implement a Readable Stream

To implement a readable stream, we require the Readable interface, and construct an object from it, and implement a read() method in the stream’s configuration parameter:

const { Readable } = require('stream');

const inStream = new Readable({

read() {}

});

inStream.push('ABCDEFGHIJKLM');

inStream.push('NOPQRSTUVWXYZ');

inStream.push(null); // No more data

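inStream.pipe(process.stdout);

Instead of pushing all the data up front, we can push it on demand, when the consumer asks for it, by implementing read():

const inStream = new Readable({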

read(size) {

this.push(String.fromCharCode(this.currentCharCode++));

if (this.currentCharCode > 90) {

this.push(null);

}

}

});

inStream.currentCharCode = 65;

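inStream.pipe(process.stdout);

Streams make a big difference with large files. First, generate one (about a million lines of text):

const fs = require('fs');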

const file = fs.createWriteStream('./big.file');

for(let i=0; i<= 1e6; i++) {

file.write('Lorem ipsum dolor sit amet, consectetur adipisicing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat. Duis aute irure dolor in reprehenderit in voluptate velit esse cillum dolore eu fugiat nulla pariatur. Excepteur sint occaecat cupidatat non proident, sunt in culpa qui officia deserunt mollit anim id est laborum.\n');

}

file.end();

Serving that file with fs.readFile() loads the whole file into memory before sending anything:

const fs = require('fs');

const server = require('http').createServer();

server.on('request', (req, res) => {

fs.readFile('./big.file', (err, data) => {

if (err) throw err;

res.end(data);

});

});

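server.listen(8000);

Piping a read stream into the response instead sends the file chunk by chunk, so memory use stays low:

const fs = require('fs');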

const server = require('http').createServer();

server.on('request', (req, res) => {

const src = fs.createReadStream('./big.file');

src.pipe(res);

});

server.listen(8000);

HTTP

The HTTP interfaces in Node.js are designed to support many features of the protocol which have been traditionally difficult to use, in particular large, possibly chunk-encoded, messages.

Class: http.Agent

An Agent is responsible for managing connection persistence and reuse for HTTP clients.

const http = require('http');

http.get({

hostname: 'localhost',

port: 80,

path: '/',

agent: false // create a new agent just for this one request

}, (res) => {

// Do stuff with response

});

By Arfat Salman
