10 Reasons Why I Use (and Love) Julia

Arnau Quera-Bofarull

Theory Lunch 29/03/2021

Julia is...

... a Fast, Dynamic, Reproducible, Composable, General, and Open source programming language.

Developed at MIT ~ 9 years ago

Jeff Bezanson, Alan Edelman, Stefan Karpinski, Viral B. Shah

Reason 1

Speed

[Figure: speed comparison of C, Julia and Python]

- Benchmarking is complicated

- Bottom line: C-like speeds are fairly easy to get (in my experience)

- What makes a language fast?

C

```c
int sum(int a, int b)
{
    return a + b;
}

int main()
{
    int a = 2;
    int b = 3;
    int c = sum(a, b);
}
```

Python

```python
def sum(a, b):
    return a + b

>>> sum(1, 2)
3
>>> sum("hello", " world")
'hello world'
```

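For speed you want static types: when the types are known before the code runs, the compiler can emit specialized machine code, whereas Python's `sum` has to check the types of `a` and `b` at run time on every call.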

How to make Python fast

- Use C / Fortran libraries (numpy, scipy, etc.)

- JIT compilation (Numba)

- Call C functions (ctypes)

- Specify types (Cython)

- etc.

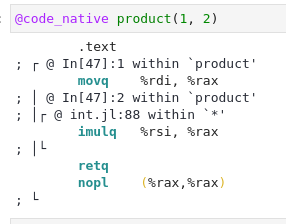

Why is Julia fast?

- JIT compilation: every function is compiled to specialized machine code for each combination of argument types it is called with

```julia
function product(a, b)
    return a * b
end
```
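A minimal sketch of this specialization with a `product` function like the one above: each new combination of argument types triggers a separate compilation. `@code_llvm` (from the `InteractiveUtils` standard library) prints the code generated for the integer case; its exact output depends on the Julia version and the CPU.

```julia
using InteractiveUtils  # provides @code_llvm outside the REPL

function product(a, b)
    return a * b
end

# The first call with each new combination of argument types compiles a
# version of `product` specialized for exactly those types.
r_int   = product(2, 3)        # compiled for (Int, Int); Int is Int64 on 64-bit machines
r_float = product(2.0, 3.0)    # compiled for (Float64, Float64)
r_str   = product("ab", "cd")  # `*` on strings concatenates them

# Print the LLVM IR generated for the integer version
@code_llvm product(2, 3)
```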

Reason 2

Multiple Dispatch

Came for the speed, stayed for the syntax

What is multiple dispatch?

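Dispatch: mapping argument types to a function

| Language | Argument types used to pick the function |
|---|---|
| C | 0 |
| Python | 1 (the class of the object) |
| Julia | n (all arguments) |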

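Dispatch: the arguments select which method of a function runs. The function `+` has many methods: for numbers it computes `a + b`, for arrays it computes `a[i] + b[i]` for every index `i`.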

```julia
>>> a = 1
>>> b = 2
>>> @which a * b
*(x::T, y::T) where T<:Union{Int128, Int16, Int32, Int64, Int8, UInt128, UInt16, UInt32, UInt64, UInt8} in Base at int.jl:88

>>> a = "hello"
>>> b = " world!"
>>> @which a * b
*(s1::Union{AbstractChar, AbstractString}, ss::Union{AbstractChar, AbstractString}...) in Base at strings/basic.jl:260
```
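The same mechanism works for your own functions. A sketch with a hypothetical function `combine` (not from the talk) whose method is picked from the types of both arguments, not only the first:

```julia
# Hypothetical function: one generic function, four methods.
combine(a::Number, b::Number) = a + b
combine(a::AbstractString, b::AbstractString) = a * b
combine(a::AbstractVector, b::AbstractVector) = a .+ b   # element by element
combine(a::Number, b::AbstractVector) = a .+ b           # mixed argument types

r1 = combine(1, 2)               # (Number, Number)
r2 = combine("hello", " world")  # (AbstractString, AbstractString)
r3 = combine([1, 2], [10, 20])   # (AbstractVector, AbstractVector)
r4 = combine(1, [10, 20])        # (Number, AbstractVector)

# `which` returns the method selected for the given argument types
m = which(combine, (Int, Vector{Int}))
```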

How many methods does `*` have? (Julia 1.6.0-rc3 here; the count depends on the Julia version and the loaded packages.)

```julia
>>> all_methods = collect(methods(*))
>>> length(all_methods)
336
>>> all_methods[19]
*(A::Union{LinearAlgebra.LowerTriangular, LinearAlgebra.UnitLowerTriangular}, B::LinearAlgebra.Bidiagonal) in LinearAlgebra at /home/arnau/opt/julia-1.6.0-rc3/share/julia/stdlib/v1.6/LinearAlgebra/src/bidiag.jl:658
```
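This list is open: any package (or user) can add methods to `*`. A sketch with a hypothetical `Meters` type, counting the methods before and after the extension (the absolute counts vary, only the difference matters):

```julia
# Hypothetical unit type, used only to illustrate extending `*`
struct Meters
    value::Float64
end

n_before = length(methods(*))

# Add one new method to Base's `*` for the argument types (Meters, Real)
Base.:*(d::Meters, k::Real) = Meters(d.value * k)

n_after = length(methods(*))

doubled = Meters(1.5) * 2   # dispatches to the new method
```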

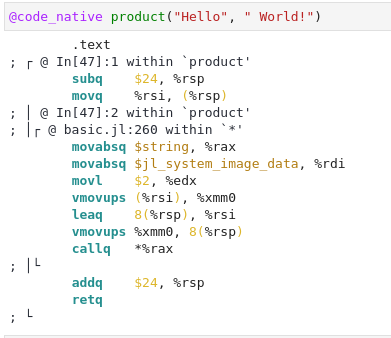

Type hierarchy

Two kinds of types: abstract and concrete

Values are always instances of a concrete type; abstract types (such as `Real`) only organize the hierarchy
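These properties can be queried directly. A small sketch using the built-in number types:

```julia
# Abstract types organize the hierarchy; only concrete types have instances.
@show isabstracttype(Real)       # true
@show isconcretetype(Float64)    # true
@show supertype(Float64)         # AbstractFloat
@show Float64 <: Real            # true: Float64 is a subtype of Real
@show typeof(1.0)                # Float64: the type of a value is always concrete
@show 1.0 isa Real               # true: a value also belongs to its abstract supertypes
```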

Astronomy Example

```julia
abstract type CelestialBody end

struct Star <: CelestialBody
    M
end

struct Planet <: CelestialBody
    M
end

struct Galaxy <: CelestialBody
    M_bulge
    M_disc
end
```

```julia
mass(body::CelestialBody) = body.M

>>> methods(mass)
# 1 method for generic function mass:
[1] mass(body::CelestialBody) in ...

>>> sun = Star(1)
>>> mass(sun)
1
```

```julia
>>> milky_way = Galaxy(1e10, 1e12)
>>> mass(milky_way)
ERROR: type Galaxy has no field M

mass(galaxy::Galaxy) = galaxy.M_bulge + galaxy.M_disc

>>> methods(mass)
# 2 methods for generic function mass:
[1] mass(galaxy::Galaxy) in ...
[2] mass(body::CelestialBody) in ...

>>> mass(milky_way)
1.01e12
```

Julia always calls the most specific method that matches the argument types.

```julia
mass(object) = error("This function only works for Celestial objects")

>>> mass("test")
ERROR: This function only works for Celestial objects
```

If no type is specified, the method accepts any input (like a Python function); here it acts as the fallback for everything that is not a `CelestialBody`.
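The astronomy example dispatches on the type of a single argument. A sketch of dispatch on two arguments, with a hypothetical `orbits` function (not from the talk, and physically simplified):

```julia
abstract type CelestialBody end
struct Star   <: CelestialBody; M; end
struct Planet <: CelestialBody; M; end

# Hypothetical function: the method depends on the types of BOTH arguments
orbits(a::CelestialBody, b::CelestialBody) = false  # generic fallback
orbits(a::Planet, b::Star) = true                   # simplified: planets orbit stars

sun   = Star(1.989e30)
earth = Planet(5.972e24)

orbits(earth, sun)    # most specific match: (Planet, Star)
orbits(sun, earth)    # only the fallback matches: (CelestialBody, CelestialBody)
```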

Multiple Dispatch "Feels" Natural

Data

- Vectors

- Integers

Functions

- Sum

- Product

Reason 3

Generic Code

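```python
# Python
G = 6.674e-11  # gravitational constant [m^3 kg^-1 s^-2]
C = 2.998e8    # speed of light [m/s]

class BlackHole:
    def __init__(self, M):
        self.M = M

    def compute_schwarzschild_radius(self):
        # Schwarzschild radius r_s = 2 G M / c^2
        return 2 * G * self.M / C**2
```

How can we expand the class?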

- Edit the source code (not always possible)

- Class inheritance

```python
class NewBlackHole(BlackHole):
    def new_method(self):
        ...
```

Painful

- In Python it is hard to reuse code
  - This ends up with huge packages
    - SciPy
    - NumPy
    - Astropy
    - etc.

- In Julia there is a lot of code reusability
  - This ends up with lots of small packages (which can be grouped)
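In Julia, types and functions are defined separately, so code is reused by writing functions against types instead of inside classes. A sketch, assuming approximate SI values for the constants and a parametric variant `BlackHole{T}` of the Julia type on this slide (run it in a fresh session, since it redefines `BlackHole`): one generic function serves several number types, and a hypothetical extra function `mass_in_solar_masses` is added without editing the type.

```julia
const G = 6.674e-11     # gravitational constant [m^3 kg^-1 s^-2] (approximate)
const C = 2.998e8       # speed of light [m/s] (approximate)
const M_SUN = 1.989e30  # solar mass [kg] (approximate)

# Parametric type: the mass can be any real number type
struct BlackHole{T<:Real}
    M::T
end

# Written once, works for every T: r_s = 2 G M / c^2
compute_schwarzschild_radius(bh::BlackHole) = 2 * G * bh.M / C^2

# Hypothetical extra function, which could live in a different package
mass_in_solar_masses(bh::BlackHole) = bh.M / M_SUN

bh  = BlackHole(M_SUN)            # Float64 mass
bhb = BlackHole(big"1.989e30")    # BigFloat mass, same code

r    = compute_schwarzschild_radius(bh)    # about 2.95 km for one solar mass
rbig = compute_schwarzschild_radius(bhb)   # computed in BigFloat precision
```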

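```julia
const G = 6.674e-11  # gravitational constant [m^3 kg^-1 s^-2]
const C = 2.998e8    # speed of light [m/s]

struct BlackHole
    M
end

function compute_schwarzschild_radius(bh::BlackHole)
    # Schwarzschild radius r_s = 2 G M / c^2
    return 2 * G * bh.M / C^2
end

# New functions can be added anywhere, without touching BlackHole
new_method(bh::BlackHole) = nothing  # placeholder for new functionality
```

Reason 4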

Parallelism

Julia natively supports parallelism on CPUs (multithreading and distributed processes); GPUs are supported through packages such as CUDA.jl

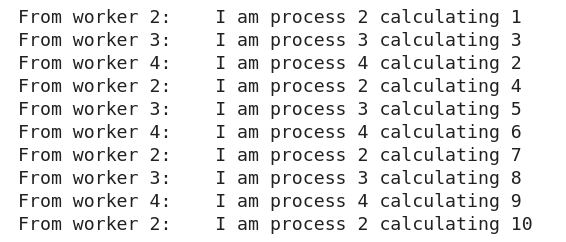

```julia
using Distributed

addprocs(3)  # I want 3 CPU workers

@everywhere function expensive_function(i)
    println("I am process $(myid()) calculating $i")
    sleep(2)
end

pmap(expensive_function, 1:10)
```
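Besides separate processes, Julia (1.3 and later) can run tasks on several threads of one process that share memory. A sketch, assuming Julia is started with several threads (e.g. `julia -t 4`); with a single thread it still runs, just serially. `parallel_sum_of_squares` is a hypothetical example function:

```julia
function parallel_sum_of_squares(xs; ntasks = Threads.nthreads())
    # Split the input into roughly equal chunks, one task per chunk
    chunk_size = cld(length(xs), ntasks)
    chunks = Iterators.partition(xs, chunk_size)
    # Each task may be scheduled on a different thread
    tasks = [Threads.@spawn sum(abs2, chunk) for chunk in chunks]
    # Wait for every task and combine the partial sums
    return sum(fetch.(tasks))
end

total = parallel_sum_of_squares(1:1_000)
```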

You can spawn processes through Slurm!

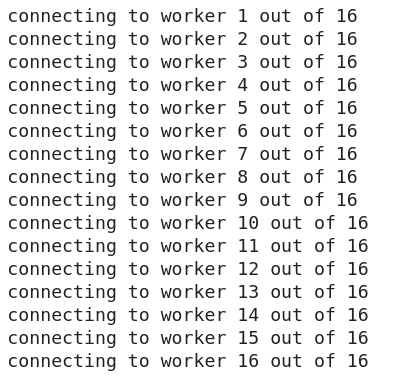

```julia
using ClusterManagers

# 16 workers; the keywords are passed to Slurm (account, partition, time limit)
addprocs_slurm(16, A="durham", p="cosma", t="01:00:00")
```

- Easy(er) to debug parallel code.

- Can run interactively in Jupyter

Bonus: Revise.jl

Revise.jl automatically recompiles code in interactive sessions.

Similar to the `%autoreload` magic in IPython.

Reason 5

The Package Manager

- Integrated Package Manager

- Very portable / reproducible

- Integrated testing

- Great dependency / compatibility management
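A sketch of the per-project workflow behind this reproducibility, using the standard `Pkg` library. `MyAnalysis` is a hypothetical project name, and the calls that need network access are left as comments:

```julia
using Pkg

# Create a project skeleton: Project.toml and src/MyAnalysis.jl
path = joinpath(mktempdir(), "MyAnalysis")
Pkg.generate(path)

# Activate it: packages added from now on are recorded in this project's
# Project.toml, and the exact resolved versions in Manifest.toml
Pkg.activate(path)

# Pkg.add("DataFrames")   # needs network access
# Pkg.test()              # runs the project's test/runtests.jl

# On another machine, with the same Project.toml and Manifest.toml:
# Pkg.activate(path); Pkg.instantiate()   # installs the same versions
```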

Reason 6

Language Interoperability

Very easy to call Python, C and Fortran code from Julia
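For C, no wrapper package is needed: the built-in `ccall` calls a compiled function directly. A sketch calling `strlen` from the C standard library that is already loaded in the Julia process (this works on Linux and macOS; on Windows the C runtime library may differ):

```julia
# ccall(function, return type, tuple of argument types, arguments...)
n = ccall(:strlen, Csize_t, (Cstring,), "hello")

# The same call with the @ccall macro (Julia 1.5 and later)
m = @ccall strlen("hello"::Cstring)::Csize_t
```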

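```julia
using PyCall

scipy = pyimport("scipy")
pyimport("scipy.interpolate")  # older SciPy versions do not load submodules automatically
# now we can use all scipy functions!

x = collect(range(1, 100, length=50))
y = x .^ 2

interp = scipy.interpolate.interp1d(x, y)

>>> interp([1, 2, 3, 4])
...
```

Reason 7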

Code syntax

Matlab-like dot syntax

```julia
>>> f(x) = x^2
>>> vector = [1, 2, 3, 4, 5]
>>> f.(vector)
[1, 4, 9, 16, 25]
```

Equivalent to `map(f, vector)`
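Dots also fuse: several dotted operations in one expression are compiled into a single loop, without temporary arrays for the intermediate results. A sketch, where the `@.` macro adds the dots automatically:

```julia
f(x) = x^2
vector = [1, 2, 3, 4, 5]

squares = f.(vector)             # same result as map(f, vector)

# One fused loop computing 2x + x^2 for every element
y = 2 .* vector .+ f.(vector)

# @. puts a dot on every call and operator in the expression
z = @. 2 * vector + f(vector)
```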

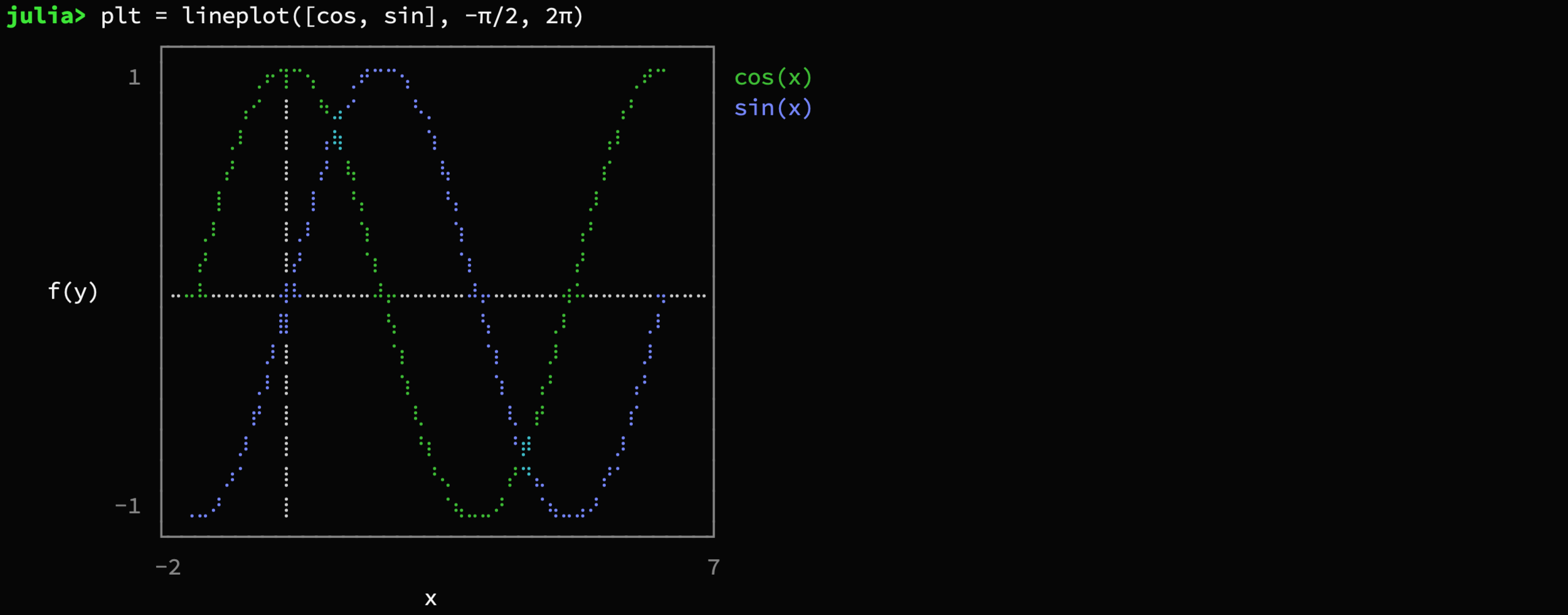

Unicode support

This is not LaTeX, it is legit Julia code!
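A few examples of Unicode in plain Julia source. In the REPL (and most editors with Julia support) these symbols are typed with LaTeX-like tab completion, e.g. `\sigma<TAB>`:

```julia
σ(x) = 1 / (1 + exp(-x))     # Unicode function name (logistic function)
x₀ = 2                       # subscripts are allowed in names
r = √2                       # √ is the square-root function
inside = x₀ ∈ [1, 2, 3]      # ∈ means `in`
is_close = 0.1 + 0.2 ≈ 0.3   # ≈ means `isapprox`
area = π * r^2               # π is a built-in constant
```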

Even plots in Unicode!

Reason 8

Julia is (mostly) written in Julia
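Even basic integer arithmetic is defined in Julia source files that you can read. A small sketch using the built-in `which` function, which returns the method a call dispatches to (in the REPL, `@less 1 + 2` shows the source directly):

```julia
# The method used for adding two Ints
m = which(+, (Int, Int))

println(m.module)   # the module that defines it
println(m.file)     # the Julia source file it comes from
```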

Reason 9

Active community

Reason 10

Open source

Summary

I encourage you to try Julia if:

- Performance is key

- Curious about multiple dispatch

- Tired of Numba / Cython etc.

- Interested in new programming languages

If your main focus is data analysis with no algorithm development, Python is the best option (for now).

Slides: slides.com/astrobyte/julia

Why I use Julia

By arnauqb
