Web Speech: Past, Present, Future and Opportunity

Interesting historical note

There's a history of us trying to make machines that 'speak' or 'listen' going back to at least the 1700s

Bell Labs demonstrated electronic voice synthesis (the Voder) at the 1939 New York World's Fair

If you find this interesting, I wrote a whole piece on The History of Speech

Who remembers this movie?

1983

Me in 1983:

Me...

... I was easily distracted.

The Web: Early 90s - the web really brought me back to computers

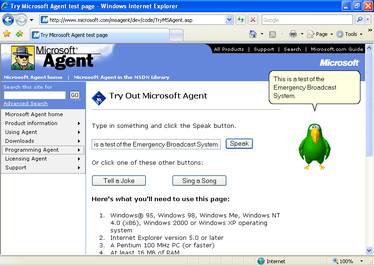

<object ID="AgentControl" width="0" height="0" CLASSID="clsid:D45FD31B-5C6E-11D1-9EC1-00C04FD7081F" CODEBASE="http://server/path/msagent.exe#VERSION=2,0,0,0">

</object>

1997!!!

Me in 1997:

Web Speech

The path to the current state of affairs...

1998: CSS2 Aural Style Sheets

March 1999: AT&T Corporation, IBM, Lucent, and Motorola formed the VoiceXML Forum

Handed over to the W3C in 2000

Ask Jeeves, AT&T, Avaya, BT, Canon, Cisco, France Telecom, General Magic, Hitachi, HP, IBM, isSound, Intel, Locus Dialogue, Lucent, Microsoft, Mitre, Motorola, Nokia, Nortel, Nuance, Philips, PipeBeach, SpeechWorks, Sun, Telecom Italia, TellMe.com, and Unisys

Mayyyybbbeeee?

Like HTML, but for... wait... I'm confused.

So many XMLs

For the next decade...

2010: HTML Speech XG (Incubator Group)

Let's sidestep this mess...

Competing draft proposals from:

- Google

- Microsoft

- Mozilla

- Voxeo

Stir....

~ kinda a spec

Next stop:

Working Group

LOL jk

2012: Another Community Group!

~"Here's what we're shipping"

Buuuuuttt....

The Web: Present Day

Almost :(


- Parts of it work almost everywhere (better than many 'standards' in history)

- No RECs here, not even earlier maturity stages. It's not on the standards track

- The actual de facto standard isn't written down

- Lots of partial successes

- Lots of interest from developers!

- No one is prioritizing this

I wrote some posts: Web Speech (series)

- Intro: The History and State of Speech

- Part I: Greetings, Professor Falken

- Part II: You Don't Say

- Part III: Listen up

- Part IV: Thoughts on Voice Recognition

- It is historically weird (like MathML)

- Here's how it works (and doesn't)

- It needs attention to resolve its weirdness and architecture... but nobody is doing it

- The world is quite a bit different now, too

- I have some thoughts about how to resolve this

We should ultimately have a well-explained, layered architecture with escape hatches:

- A promise-based API

- Feature detection

- A sensible voice model for TTS, like fonts

- Constructors/a replaceable service provider
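A minimal TypeScript sketch of how those pieces might fit together, layered on top of today's callback-style synthesis API. Everything named here (`VoiceLike`, `UtteranceLike`, `SpeechProvider`, `browserProvider`, `pickVoice`, `speak`) is hypothetical and not from any spec or proposal. Only `speechSynthesis.getVoices()`, `speechSynthesis.speak()`, and `SpeechSynthesisUtterance` with its `voice`, `onend`, and `onerror` properties are real browser API. The provider is passed in as a plain argument as a stand-in for a constructor, and voice choice walks a fallback list the way CSS walks `font-family`.

```typescript
// Structural types covering only the parts of the Web Speech synthesis API
// used here. Real SpeechSynthesisVoice / SpeechSynthesisUtterance objects
// satisfy them. These interface names are hypothetical.
interface VoiceLike {
  name: string;
  lang: string;
}

interface UtteranceLike {
  text: string;
  voice: VoiceLike | null;
  onend: ((ev: unknown) => void) | null;
  onerror: ((ev: unknown) => void) | null;
}

// Hypothetical replaceable service provider: the browser's engine, an OS
// engine, a cloud service, or a test fake could all implement this.
interface SpeechProvider {
  getVoices(): VoiceLike[];
  createUtterance(text: string): UtteranceLike;
  speak(utterance: UtteranceLike): void;
}

// Feature detection: look for the globals instead of sniffing user agents.
// Returns null where the synthesis API is unavailable (e.g. in Node).
function browserProvider(): SpeechProvider | null {
  const g = globalThis as any;
  if (!g.speechSynthesis || typeof g.SpeechSynthesisUtterance !== "function") {
    return null;
  }
  return {
    getVoices: () => g.speechSynthesis.getVoices(),
    createUtterance: (text) => new g.SpeechSynthesisUtterance(text),
    speak: (utterance) => g.speechSynthesis.speak(utterance),
  };
}

// Font-like voice matching: try each preference in order, matching an exact
// voice name, an exact language tag, or a language prefix ("en" -> "en-GB").
// null means "let the provider use its default voice".
function pickVoice(voices: VoiceLike[], preferences: string[]): VoiceLike | null {
  for (const pref of preferences) {
    const p = pref.toLowerCase();
    const match = voices.find((v) => {
      const lang = v.lang.toLowerCase();
      return v.name.toLowerCase() === p || lang === p || lang.startsWith(p + "-");
    });
    if (match) return match;
  }
  return null;
}

// Promise-based wrapper over the event-style onend/onerror callbacks.
function speak(provider: SpeechProvider, text: string, voicePrefs: string[] = []): Promise<void> {
  return new Promise<void>((resolve, reject) => {
    const utterance = provider.createUtterance(text);
    utterance.voice = pickVoice(provider.getVoices(), voicePrefs);
    utterance.onend = () => resolve();
    utterance.onerror = (ev) => reject(ev);
    provider.speak(utterance);
  });
}
```

In a page this might be used as `const p = browserProvider(); if (p) await speak(p, "You're due for an oil change soon", ["en-US"]);`. One caveat: in some browsers `getVoices()` can return an empty list until the `voiceschanged` event fires, so a real provider would likely need to wait for voices before matching.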

Lots of service APIs

Even at the OS level!

Lots of discussion, but no driver.

So what's holding it up?

It lacks a driver, especially one without an interest in both ends of it

A few proposals.

Basic support for Linux would be great for WebKit in general :(

Opportunity and advantage for Igalia?

Voice notifications?

Hands-free assistance? (keep that screen clean)

"Play Frozen for the kids in the back"

"Turn on/off/up the AC/heat"

Voice notifications?

"You're due for an oil change soon"

WPE could have an advantage with speech.

Igalia could position itself as a strong driver in fixing historical problems...

Lots of potential funding strategies here.

Thanks.

Web Speech APIs now

By Brian Kardell
