An introduction to Graph Neural Networks

Carolina Cuesta-Lazaro

25th November 2021 - IAC-Deep

Toy problem: Learn to compute the center of mass

Input: a set of particles \vec{x}_1, \vec{x}_2, \vec{x}_3, \vec{x}_4, each with \vec{x}_n = [M_n, x_n, y_n, z_n]

Output: f(\vec{x}_1, \vec{x}_2, \vec{x}_3, \vec{x}_4) = \vec{y} = \frac{\sum_n M_n (x_n, y_n, z_n)}{\sum_n M_n}
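As a reference point, the target f can be written down directly. This is a minimal sketch, assuming each particle is stored as a tuple (M_n, x_n, y_n, z_n); the name `center_of_mass` and the example values are illustrative, not from the talk.

```python
from typing import Sequence, Tuple

Particle = Tuple[float, float, float, float]  # (M_n, x_n, y_n, z_n)


def center_of_mass(particles: Sequence[Particle]) -> Tuple[float, float, float]:
    """Mass-weighted mean position: sum_n M_n (x_n, y_n, z_n) / sum_n M_n."""
    total_mass = sum(m for m, _, _, _ in particles)
    cx = sum(m * x for m, x, _, _ in particles) / total_mass
    cy = sum(m * y for m, _, y, _ in particles) / total_mass
    cz = sum(m * z for m, _, _, z in particles) / total_mass
    return (cx, cy, cz)


# Illustrative particles (M, x, y, z).
particles = [
    (1.0, 0.0, 0.0, 0.0),
    (1.0, 2.0, 0.0, 0.0),
    (2.0, 0.0, 4.0, 0.0),
    (4.0, 0.0, 0.0, 8.0),
]
print(center_of_mass(particles))  # (0.25, 1.0, 4.0)
```

Reversing or shuffling `particles` gives the same result: the target itself does not care about the order of the inputs.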

The particles have no natural order, so f must return the same \vec{y} for every ordering of its inputs:

f(\vec{x}_1, \vec{x}_2, \vec{x}_3, \vec{x}_4) = f(\vec{x}_2, \vec{x}_3, \vec{x}_1, \vec{x}_4) = f(\vec{x}_1, \vec{x}_2, \vec{x}_4, \vec{x}_3) = \vec{y}

Impose symmetries!
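Why the symmetry has to be imposed: a generic model that concatenates the four particles into one 16-number vector treats each input slot differently. The sketch below assumes a plain linear map with hypothetical, hand-picked weights (not a trained network); swapping the particles changes its output, even though the physical answer is unchanged.

```python
from typing import List, Sequence, Tuple

Particle = Tuple[float, float, float, float]  # (M_n, x_n, y_n, z_n)


def flatten(particles: Sequence[Particle]) -> List[float]:
    """Concatenate [M, x, y, z] of every particle into one vector (order matters!)."""
    return [value for p in particles for value in p]


def naive_model(particles: Sequence[Particle], weights: Sequence[float]) -> float:
    """Hypothetical linear model on the flattened input: one weight per input slot."""
    return sum(w * v for w, v in zip(weights, flatten(particles)))


weights = [float(k) for k in range(16)]  # hypothetical weights, 16 slots

particles = [
    (1.0, 0.0, 0.0, 0.0),
    (1.0, 2.0, 0.0, 0.0),
    (2.0, 0.0, 4.0, 0.0),
    (4.0, 0.0, 0.0, 8.0),
]
# The reordering (x_2, x_3, x_1, x_4) from the slide.
swapped = [particles[1], particles[2], particles[0], particles[3]]

print(naive_model(particles, weights))  # 238.0
print(naive_model(swapped, weights))    # 210.0
```

Learning the symmetry from data would require showing the model many reorderings of every example; building it into the architecture avoids that.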

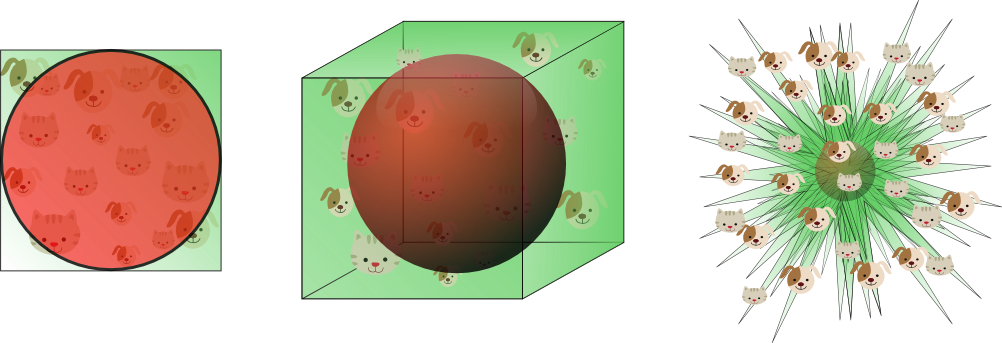

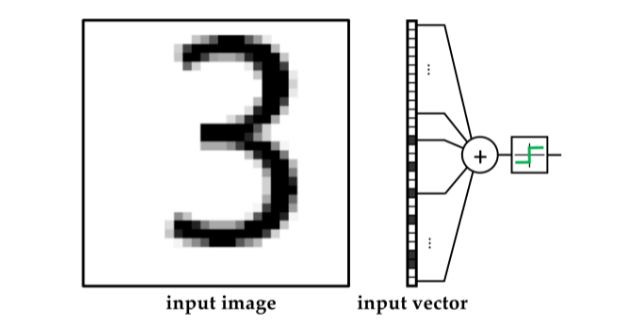

The Curse of Dimensionality

Convolutions: Reduce parameters + Translational Equivariance

f(Tx) = Tf(x), where T is a translation
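A 1D sketch of f(Tx) = Tf(x): one small kernel is shared across all positions (3 weights, independent of the input length). We assume circular (wrap-around) boundaries so that the shift T is exact; with zero padding the identity only holds away from the edges. The kernel and signal values are arbitrary.

```python
from typing import List, Sequence


def circular_conv1d(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Shared-kernel cross-correlation (the usual deep-learning 'convolution'), wrap-around edges."""
    n = len(signal)
    return [
        sum(kernel[j] * signal[(i + j) % n] for j in range(len(kernel)))
        for i in range(n)
    ]


def shift(signal: Sequence[float], s: int) -> List[float]:
    """Translation operator T: circular shift to the right by s positions."""
    n = len(signal)
    return [signal[(i - s) % n] for i in range(n)]


x = [0.0, 1.0, 3.0, 0.0, 2.0, 0.0]
kernel = [1.0, -2.0, 1.0]  # arbitrary shared weights

lhs = circular_conv1d(shift(x, 2), kernel)  # f(Tx)
rhs = shift(circular_conv1d(x, kernel), 2)  # T f(x)
print(lhs == rhs)  # True
```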

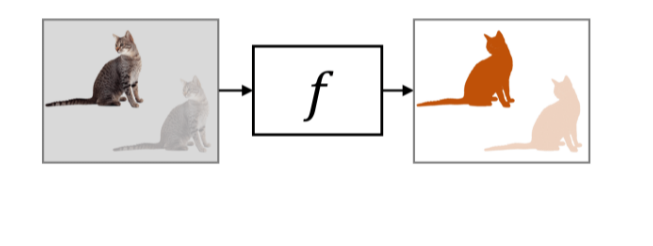

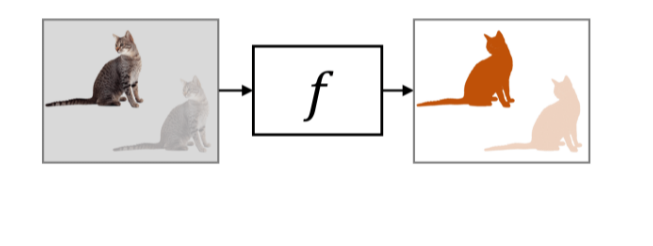

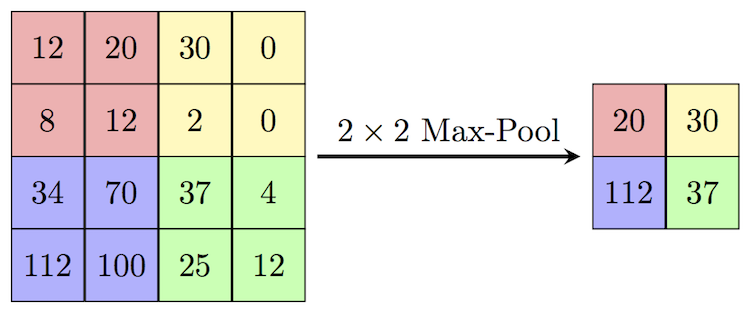

Pooling: Translational Invariance

f(Tx) = f(x)

(Figure: f classifies the image as "CAT", using 2x2 Max Pooling)
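A sketch of how pooling turns equivariance into invariance, under the same circular-boundary assumption as a 1D convolution: taking the max over all positions (global max pooling) after the convolution gives exactly the same output for every circular translation of the input. Local 2x2 max pooling, as in the figure, only discards position within each window, so on its own it gives at most approximate invariance to small shifts. All values are illustrative.

```python
from typing import List, Sequence


def circular_conv1d(signal: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Shared-kernel cross-correlation with wrap-around boundaries."""
    n = len(signal)
    return [sum(kernel[j] * signal[(i + j) % n] for j in range(len(kernel))) for i in range(n)]


def shift(signal: Sequence[float], s: int) -> List[float]:
    """Translation T: circular shift to the right by s positions."""
    n = len(signal)
    return [signal[(i - s) % n] for i in range(n)]


def global_max_pool(features: Sequence[float]) -> float:
    """Max over all positions: keeps the strongest response, forgets where it occurred."""
    return max(features)


def max_pool_2x2(image: Sequence[Sequence[float]]) -> List[List[float]]:
    """2x2 max pooling with stride 2 (height and width assumed even)."""
    return [
        [max(image[r][c], image[r][c + 1], image[r + 1][c], image[r + 1][c + 1])
         for c in range(0, len(image[0]), 2)]
        for r in range(0, len(image), 2)
    ]


x = [0.0, 1.0, 3.0, 0.0, 2.0, 0.0]
kernel = [1.0, -2.0, 1.0]  # arbitrary shared weights
pooled = global_max_pool(circular_conv1d(x, kernel))

# Every circular translation of the input gives the same pooled output: f(Tx) = f(x).
for s in range(len(x)):
    assert global_max_pool(circular_conv1d(shift(x, s), kernel)) == pooled
print(pooled)  # 5.0

image = [[1, 2, 0, 0],
         [3, 0, 0, 1],
         [0, 0, 5, 0],
         [0, 4, 0, 0]]
print(max_pool_2x2(image))  # [[3, 1], [4, 5]]
```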

Sets: Permutation Invariance

f(Px) = f(x), for any permutation P of the elements \vec{x}_1, \vec{x}_2, \vec{x}_3, \vec{x}_4

y = \bigoplus(\vec{h}_1, \vec{h}_2, \vec{h}_3, \vec{h}_4) = \bigoplus(\vec{h}_2, \vec{h}_3, \vec{h}_1, \vec{h}_4)

\bigoplus: a permutation-invariant function, e.g. Sum, Mean, Max, ...

Deep Sets (Zaheer et al., NeurIPS'17), with f and g neural nets:

y = g \left(\bigoplus_{i \in V} f(\vec{x}_i)\right)
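The center of mass from the toy problem fits this template exactly, if we allow hand-crafted (not learned) f and g: f maps each particle to [M_n, M_n x_n, M_n y_n, M_n z_n], \bigoplus is the sum, and g divides the summed moments by the total mass. In Deep Sets, f and g would be neural nets trained to approximate such maps; this sketch only illustrates the structure.

```python
from typing import List, Sequence, Tuple

Particle = Tuple[float, float, float, float]  # (M_n, x_n, y_n, z_n)


def f(p: Particle) -> List[float]:
    """Per-element map (hand-crafted here; a neural net in Deep Sets)."""
    m, x, y, z = p
    return [m, m * x, m * y, m * z]


def aggregate(hs: Sequence[List[float]]) -> List[float]:
    """Permutation-invariant aggregation: element-wise sum over the set."""
    return [sum(components) for components in zip(*hs)]


def g(summed: List[float]) -> Tuple[float, float, float]:
    """Set-level map: summed mass moments divided by the total mass."""
    total_mass, mx, my, mz = summed
    return (mx / total_mass, my / total_mass, mz / total_mass)


def deep_set(particles: Sequence[Particle]) -> Tuple[float, float, float]:
    """y = g(sum_i f(x_i))."""
    return g(aggregate([f(p) for p in particles]))


particles = [
    (1.0, 0.0, 0.0, 0.0),
    (1.0, 2.0, 0.0, 0.0),
    (2.0, 0.0, 4.0, 0.0),
    (4.0, 0.0, 0.0, 8.0),
]
print(deep_set(particles))  # (0.25, 1.0, 4.0)
```

Because the only interaction between elements happens inside the sum, any reordering of `particles` gives the same output by construction.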

The same f is applied to every element: \vec{h}_i = f(\vec{x}_i), mapping \vec{x}_1, \vec{x}_2, \vec{x}_3, \vec{x}_4 to \vec{h}_1, \vec{h}_2, \vec{h}_3, \vec{h}_4

Node Level: Permutation Equivariance

f(Px) = Pf(x)

y_i = f(\vec{x}_i)

Set Level: Permutation Invariance

f(Px) = f(x)

y = g \left( \bigoplus_{i \in V} f(\vec{x}_i) \right)
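A quick numerical check of both properties, assuming a hypothetical shared map f(x) = 2x + 1 on scalar features, Sum as \bigoplus and g the identity: applying f node by node commutes with the permutation (equivariance), while the pooled output ignores it (invariance).

```python
from typing import List, Sequence


def permute(values: Sequence[float], perm: Sequence[int]) -> List[float]:
    """Apply P: output position i takes input element perm[i]."""
    return [values[j] for j in perm]


def node_level(xs: Sequence[float]) -> List[float]:
    """y_i = f(x_i) with a hypothetical shared f(x) = 2x + 1."""
    return [2.0 * x + 1.0 for x in xs]


def set_level(xs: Sequence[float]) -> float:
    """y = g(sum_i f(x_i)) with g the identity."""
    return sum(node_level(xs))


x = [0.5, -1.0, 3.0, 2.0]
perm = [1, 2, 0, 3]  # the reordering (x_2, x_3, x_1, x_4)

print(node_level(permute(x, perm)) == permute(node_level(x), perm))  # True: f(Px) = P f(x)
# Exact here because all values are small binary fractions; in general floating-point
# sums are only invariant up to rounding.
print(set_level(permute(x, perm)) == set_level(x))  # True: f(Px) = f(x)
```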

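Nodes \vec{x}_1, \vec{x}_2, \vec{x}_3, \vec{x}_4, with edges encoded in the Adjacency Matrix

A = \begin{pmatrix}
0 & 0 & 0 & 1\\
0 & 0 & 1 & 0\\
0 & 1 & 0 & 1\\
1 & 0 & 1 & 0
\end{pmatrix}

Relabelling the nodes with a permutation P reorders both the rows and the columns of A, so A becomes P A P^T.

Permutation Invariance:

f(Px, P A P^T) = f(x, A)

Permutation Equivariance:

f(Px, P A P^T) = Pf(x, A)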
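To check f(Px, P A P^T) = P f(x, A) numerically, here is a minimal sum-aggregation layer, h = x + A x (each node adds its own feature to the sum of its neighbours'), on the 4-node graph above. The layer, the scalar feature values and the permutation are illustrative assumptions; P is built explicitly as a permutation matrix.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def matmul(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Sequence[Sequence[float]]) -> Matrix:
    return [list(row) for row in zip(*a)]


def layer(x: Matrix, adj: Sequence[Sequence[float]]) -> Matrix:
    """h = x + A x: own feature plus the sum of the neighbours' features."""
    ax = matmul(adj, x)
    return [[xi + axi for xi, axi in zip(rx, rax)] for rx, rax in zip(x, ax)]


A = [[0, 0, 0, 1],
     [0, 0, 1, 0],
     [0, 1, 0, 1],
     [1, 0, 1, 0]]
x = [[1.0], [2.0], [4.0], [8.0]]  # one scalar feature per node, as a column

perm = [1, 2, 0, 3]  # row i of P has its 1 in column perm[i]
P = [[1 if j == perm[i] else 0 for j in range(4)] for i in range(4)]

lhs = layer(matmul(P, x), matmul(matmul(P, A), transpose(P)))  # f(Px, P A P^T)
rhs = matmul(P, layer(x, A))                                   # P f(x, A)
print(lhs == rhs)  # True
```

Summing the node outputs (a graph-level readout) would in addition satisfy the invariance condition f(Px, P A P^T) = f(x, A).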

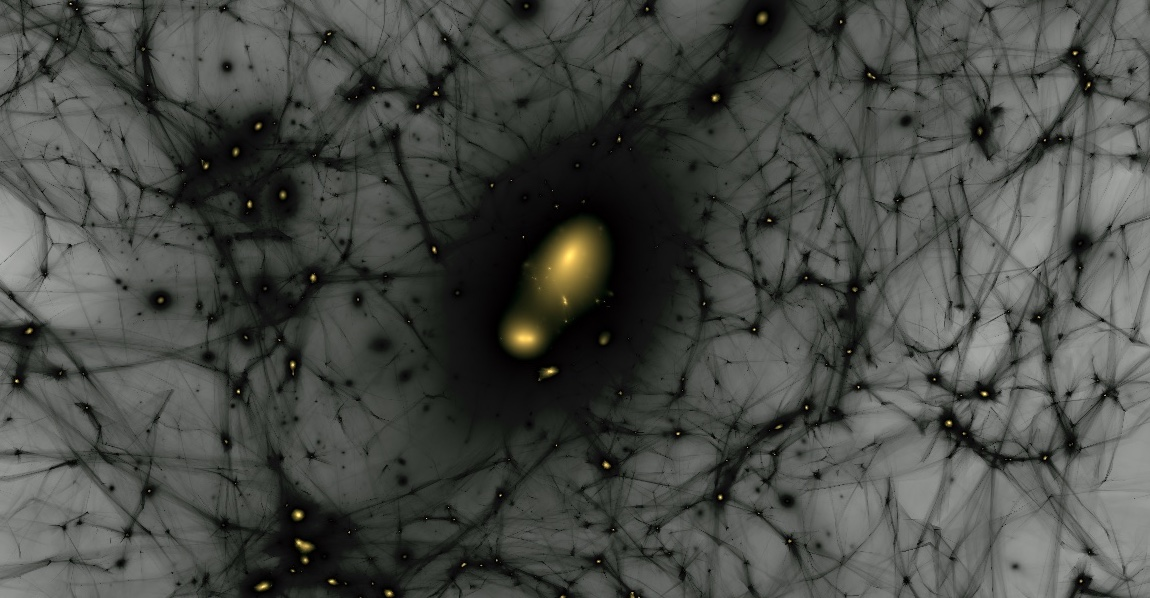

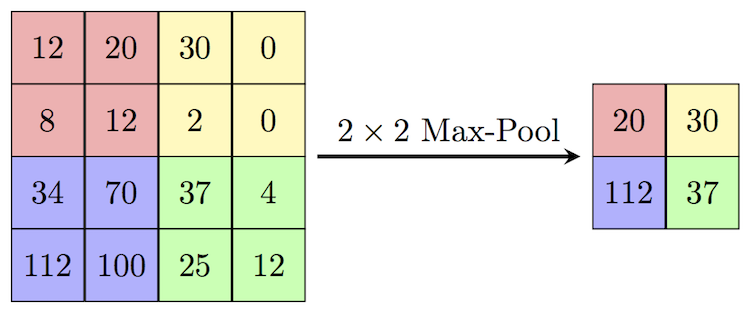

Aggregate features from local neighbourhood

f: Input Graph (x, A) → Learned Graph (h, A)

\vec{h}_3 = \bigoplus(\vec{x}_2, \vec{x}_4), since nodes 2 and 4 are the neighbours of node 3 in A
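Reading the neighbourhood off the adjacency matrix: row 3 of A has ones in columns 2 and 4, so node 3 aggregates the features of nodes 2 and 4. A sketch with illustrative scalar features and three common choices of \bigoplus; `neighbours` is our own helper name, and indices are 0-based in code.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence

A = [[0, 0, 0, 1],
     [0, 0, 1, 0],
     [0, 1, 0, 1],
     [1, 0, 1, 0]]


def neighbours(adj: Sequence[Sequence[int]], i: int) -> List[int]:
    """Indices j with A_ij = 1 (0-based)."""
    return [j for j, a_ij in enumerate(adj[i]) if a_ij]


x = [1.0, 2.0, 4.0, 8.0]  # illustrative scalar features of nodes 1..4

aggregators: Dict[str, Callable[[List[float]], float]] = {"sum": sum, "mean": mean, "max": max}

node = 2  # 0-based index of node 3
msgs = [x[j] for j in neighbours(A, node)]  # features of nodes 2 and 4
for name, agg in aggregators.items():
    print(name, agg(msgs))  # sum 10.0, mean 5.0, max 8.0
```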

Learned Graph (h, A): node embeddings \vec{h}_1, \vec{h}_2, \vec{h}_3, \vec{h}_4, computed from the Input Graph (x, A)

Node regression/classification

z_i = g(\vec{h}_i)

Graph regression/classification

z_G = f\left(\bigoplus_{i \in V} \vec{h}_i\right)

Link prediction

z_{ij} = f(\vec{h}_i, \vec{h}_j, e_{ij}), where e_{ij} are the features of the edge between i and j
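Given learned embeddings, the three tasks differ only in what is read out. A sketch assuming hypothetical 2D embeddings, a hypothetical linear node head g, sum pooling followed by a hypothetical linear graph head f, and a dot-product link score that ignores e_{ij} (a common simplification, not the general form).

```python
from typing import List, Sequence

Vector = List[float]

# Hypothetical learned embeddings h_1..h_4 (2 features each).
h: List[Vector] = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]


def linear(w: Sequence[float], v: Sequence[float], b: float = 0.0) -> float:
    return sum(wi * vi for wi, vi in zip(w, v)) + b


def node_head(h_i: Vector) -> float:
    """z_i = g(h_i), with g a hypothetical linear map."""
    return linear([0.5, -0.5], h_i)


def graph_head(hs: Sequence[Vector]) -> float:
    """z_G = f(sum_i h_i): sum-pool over nodes, then a hypothetical linear map."""
    pooled = [sum(col) for col in zip(*hs)]
    return linear([1.0, 1.0], pooled)


def link_score(h_i: Vector, h_j: Vector) -> float:
    """z_ij as a dot product of the two embeddings (edge features ignored)."""
    return linear(h_i, h_j)


print([node_head(hi) for hi in h])  # [0.5, -0.5, 0.0, 1.5]
print(graph_head(h))                # 5.0
print(link_score(h[2], h[3]))       # 1.0
```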

Three flavours of GNN layer, from least to most general:

CONVOLUTIONAL

\vec{h}_i = g\left(\vec{x}_i, \bigoplus_{j \in N_i} c_{ij} f(\vec{x}_j)\right)

c_{ij}: fixed weights that depend only on the graph structure

ATTENTIONAL

\vec{h}_i = g\left(\vec{x}_i, \bigoplus_{j \in N_i} a(\vec{x}_i, \vec{x}_j) f(\vec{x}_j)\right)

a(\vec{x}_i, \vec{x}_j): learned weights that depend on the features of nodes i and j

MESSAGE PASSING

\vec{h}_i = g\left(\vec{x}_i, \bigoplus_{j \in N_i} f(\vec{x}_i, \vec{x}_j)\right)

f(\vec{x}_i, \vec{x}_j): a learned message computed from both endpoints

Transformers = Fully connected Graph Nets (attentional)! Every node attends to all nodes, i.e. N_i = V in the attentional formula.
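The three flavours differ only in how neighbour j's contribution is weighted. A sketch on the 4-node graph, with scalar features, Sum as \bigoplus, and hypothetical choices throughout: f(x_j) = 2 x_j, g(x_i, m) = x_i + m, c_{ij} = 1/|N_i|, attention weights given by a softmax over N_i of the logits x_i x_j, and a general message x_j - x_i. Passing a fully connected adjacency gives a Transformer-style self-attention, minus the learned queries, keys, values and multiple heads that are omitted here.

```python
import math
from typing import List, Sequence

Adjacency = Sequence[Sequence[int]]

A = [[0, 0, 0, 1],
     [0, 0, 1, 0],
     [0, 1, 0, 1],
     [1, 0, 1, 0]]
x = [1.0, 2.0, 4.0, 8.0]  # illustrative scalar features of nodes 1..4


def nbrs(adj: Adjacency, i: int) -> List[int]:
    """N_i: indices j with A_ij = 1 (every node is assumed to have at least one)."""
    return [j for j, a_ij in enumerate(adj[i]) if a_ij]


def f(x_j: float) -> float:
    return 2.0 * x_j  # hypothetical feature map


def g(x_i: float, m: float) -> float:
    return x_i + m  # hypothetical update from the aggregated message


def convolutional(x: Sequence[float], adj: Adjacency) -> List[float]:
    """Fixed weights c_ij = 1/|N_i| that depend only on the graph."""
    out = []
    for i in range(len(x)):
        n = nbrs(adj, i)
        out.append(g(x[i], sum(f(x[j]) / len(n) for j in n)))
    return out


def attentional(x: Sequence[float], adj: Adjacency) -> List[float]:
    """Feature-dependent weights a(x_i, x_j): softmax over N_i of x_i * x_j."""
    out = []
    for i in range(len(x)):
        n = nbrs(adj, i)
        logits = [x[i] * x[j] for j in n]
        top = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(z - top) for z in logits]
        total = sum(exps)
        out.append(g(x[i], sum(e / total * f(x[j]) for e, j in zip(exps, n))))
    return out


def message_passing(x: Sequence[float], adj: Adjacency) -> List[float]:
    """General message f(x_i, x_j) = x_j - x_i, computed from both endpoints."""
    return [g(x[i], sum(x[j] - x[i] for j in nbrs(adj, i))) for i in range(len(x))]


print(convolutional(x, A))    # [17.0, 10.0, 14.0, 13.0]
print(message_passing(x, A))  # [8.0, 4.0, 6.0, -3.0]
print(attentional(x, A))

# Transformer-like case: every node attends to every node (N_i = V).
fully_connected = [[1] * len(x) for _ in x]
print(attentional(x, fully_connected))
```

Nodes 1 and 2 have a single neighbour, so their softmax weight is 1 and the attentional output coincides with the convolutional one; the flavours only differ where a node has to weigh several neighbours.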

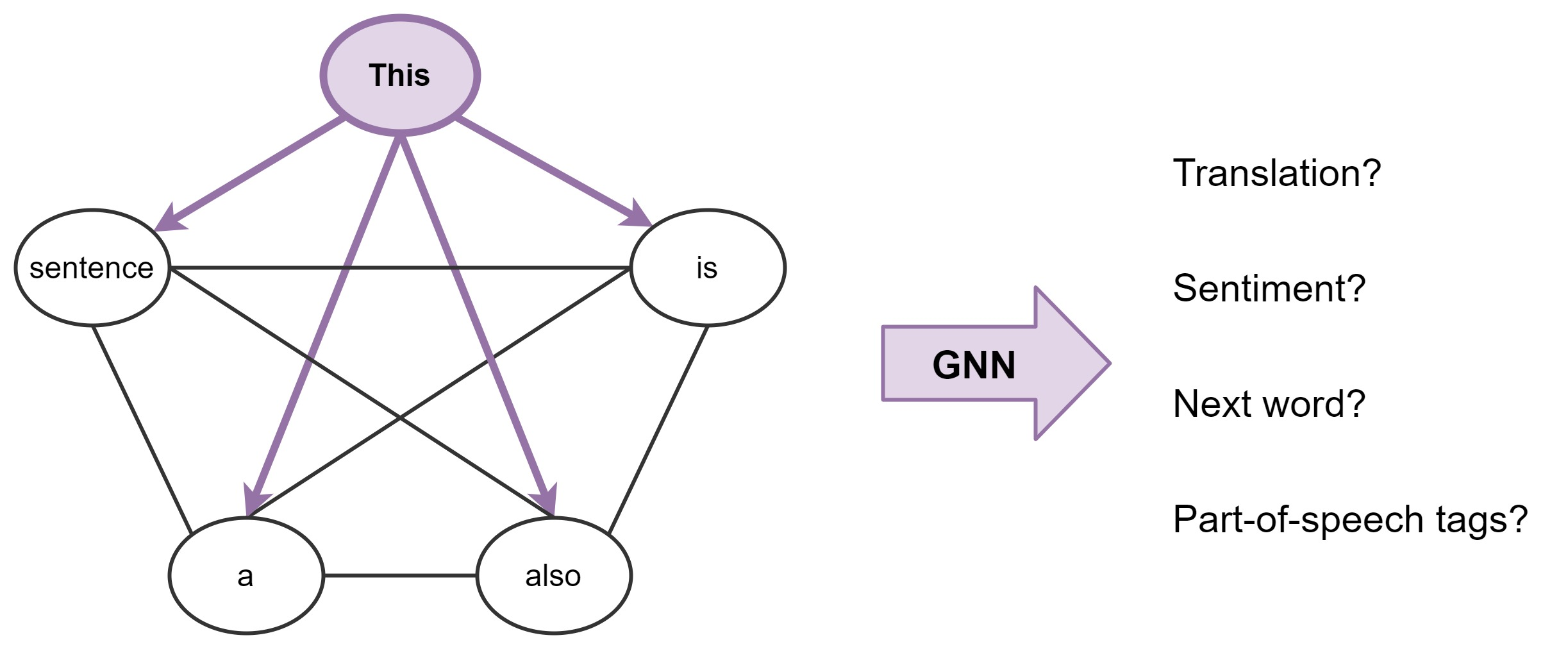

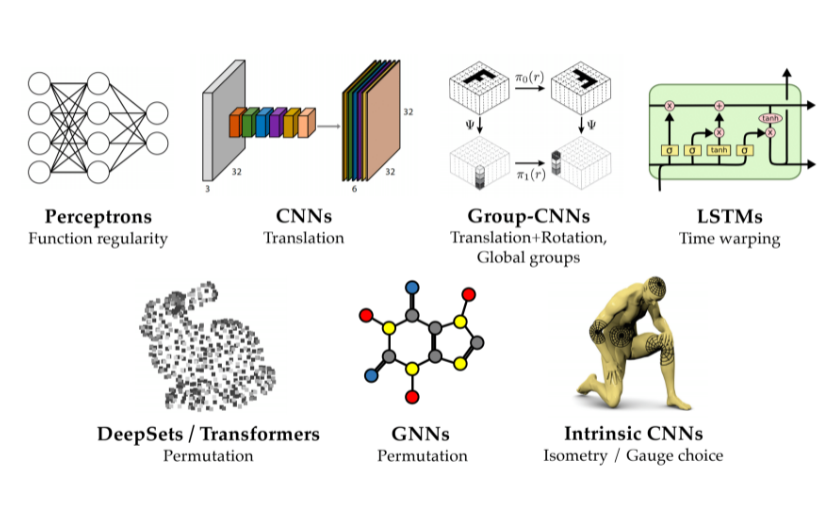

Image Credit + More Info https://arxiv.org/abs/2104.13478

Geometric Deep Learning
