Cosmology's Midlife Crisis

IAIFI Fellow, MIT

Carolina Cuesta-Lazaro

Art: "A Bar at the Folies-Bergère" by Édouard Manet

Embracing the Machine Learning Makeover

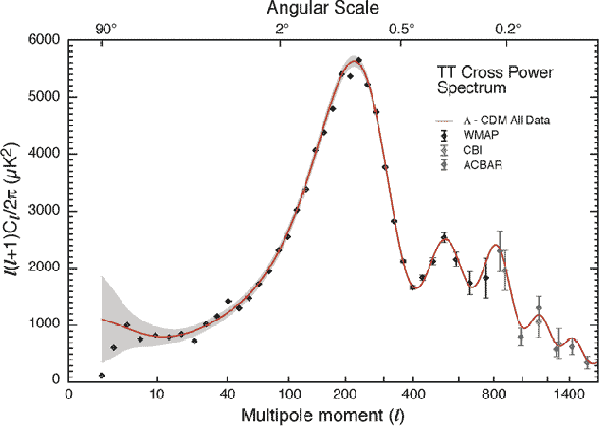

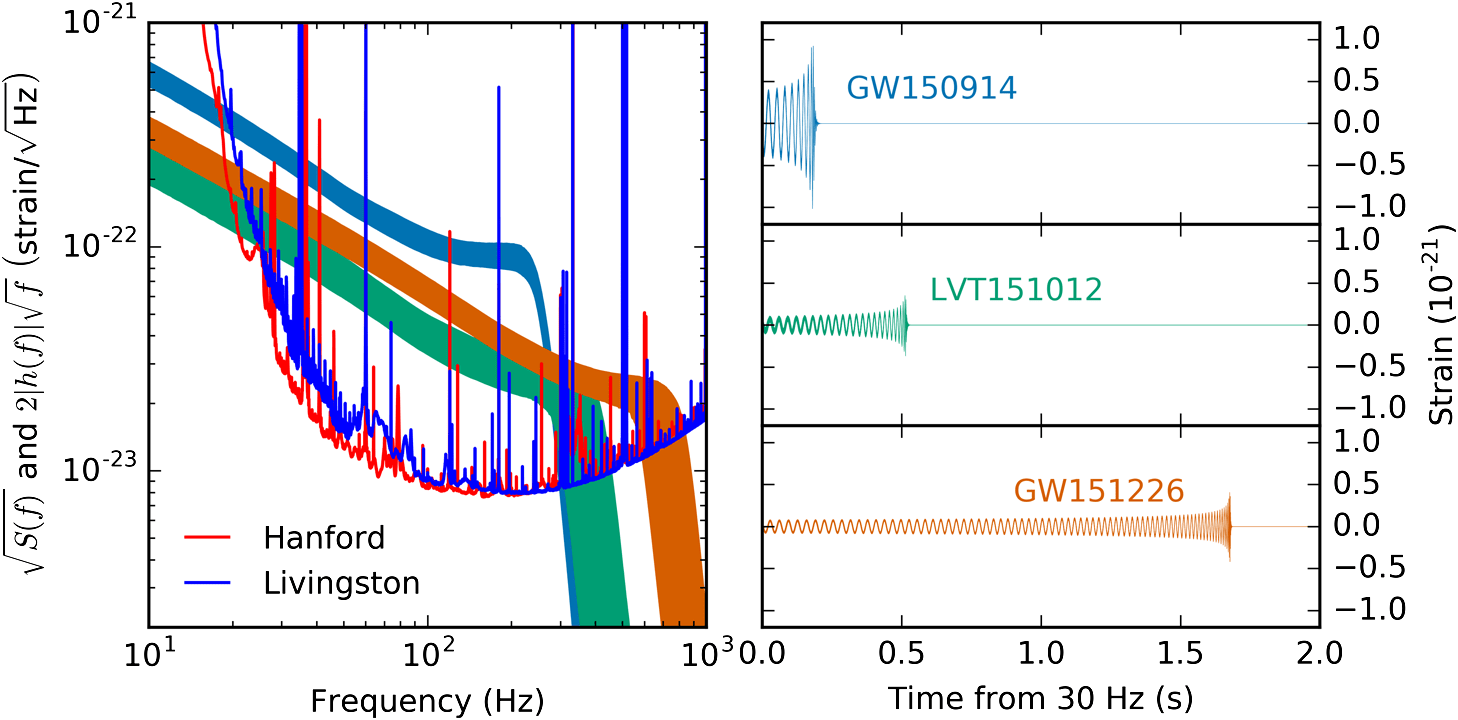

["DESI 2024 VI: Cosmological Constraints from the Measurements of Baryon Acoustic Oscillations" arXiv:2404.03002]

What role did Machine Learning play?

Dark Energy is constant over time

DESI's Dark Energy constraints

Carolina Cuesta-Lazaro IAIFI/MIT @ University of Tokyo 2024

1-Dimensional

Machine Learning

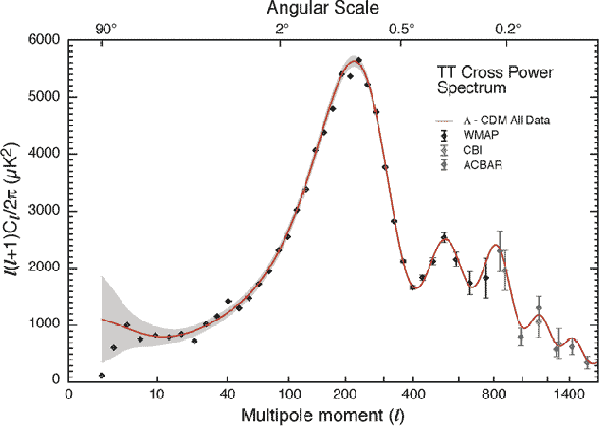

Secondary anisotropies

Galaxy formation

Intrinsic alignments

DESI, DESI-II, Spec-S5

Euclid / LSST

Simons Observatory

CMB-S4

LIGO

Einstein Telescope

The era of Big Data Cosmology

xAstrophysics

5-Dimensional


Dataset Size = 1

Can't poke it in the lab

Simulations

Bayesian statistics

Cosmology is hard


Astrophysics dominates Simulation-based Inference


Unicorn land: The promise of ML for Cosmology

Reality check: Roadblocks & Bottlenecks

Outline of this talk

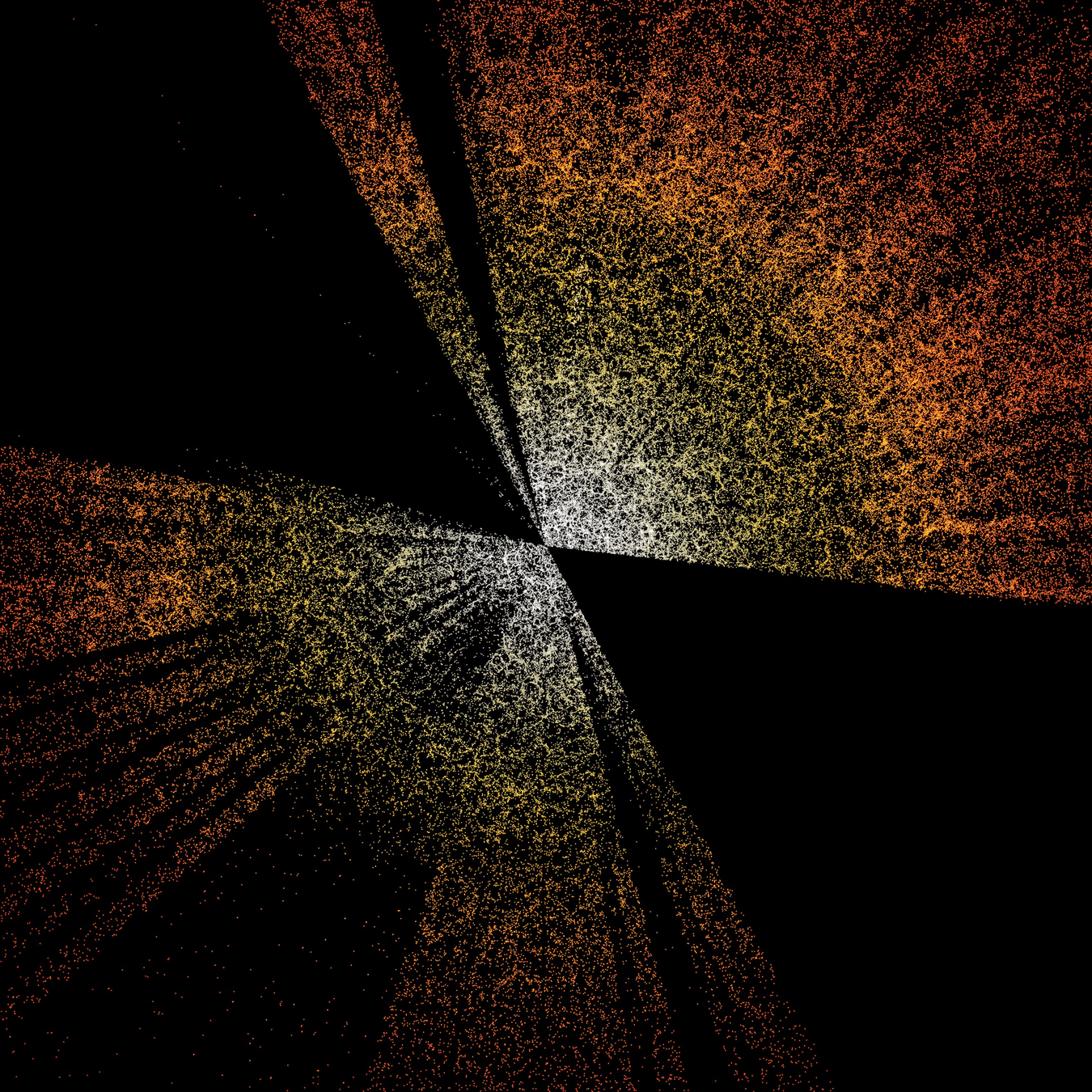

Mapping dark matter

Reverting gravitational evolution

Learning to represent baryonic feedback

Data-driven hybrid simulators

Unsupervised problems

Fast Emulators + Compression + Field Level Inference


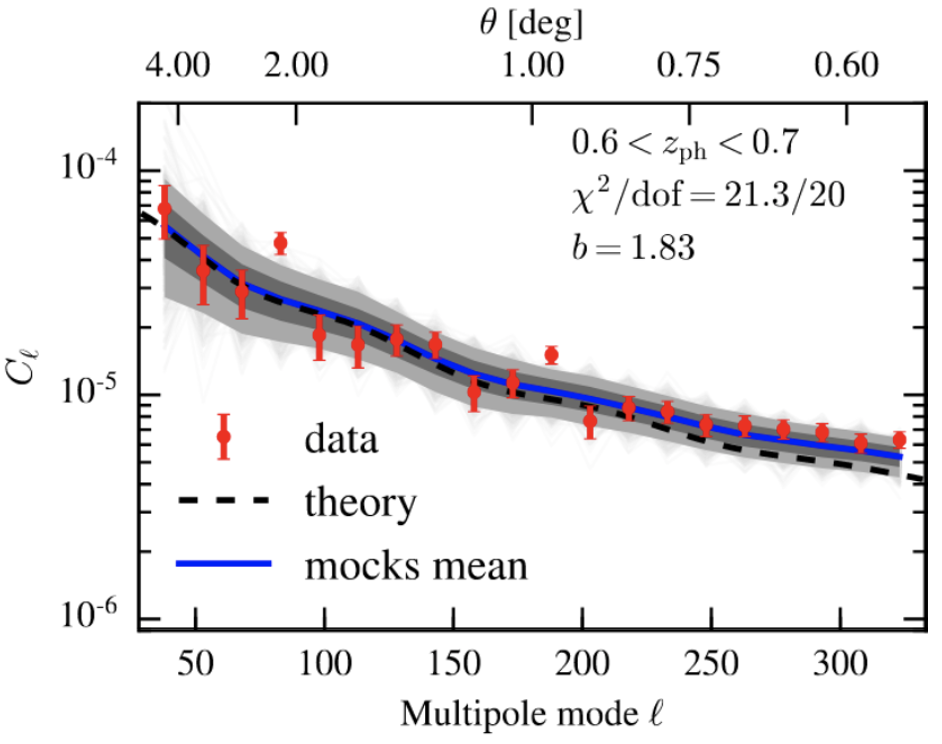

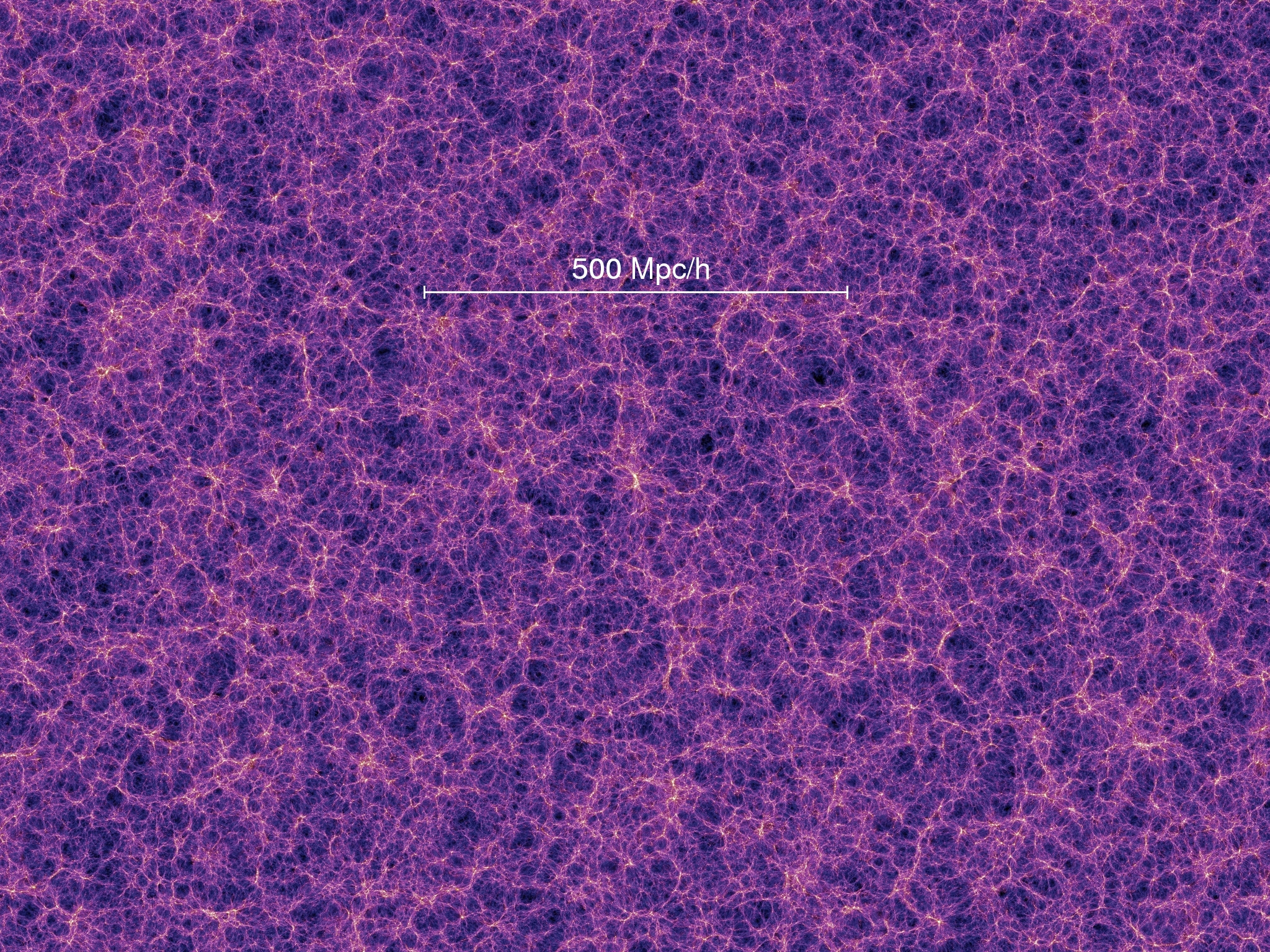

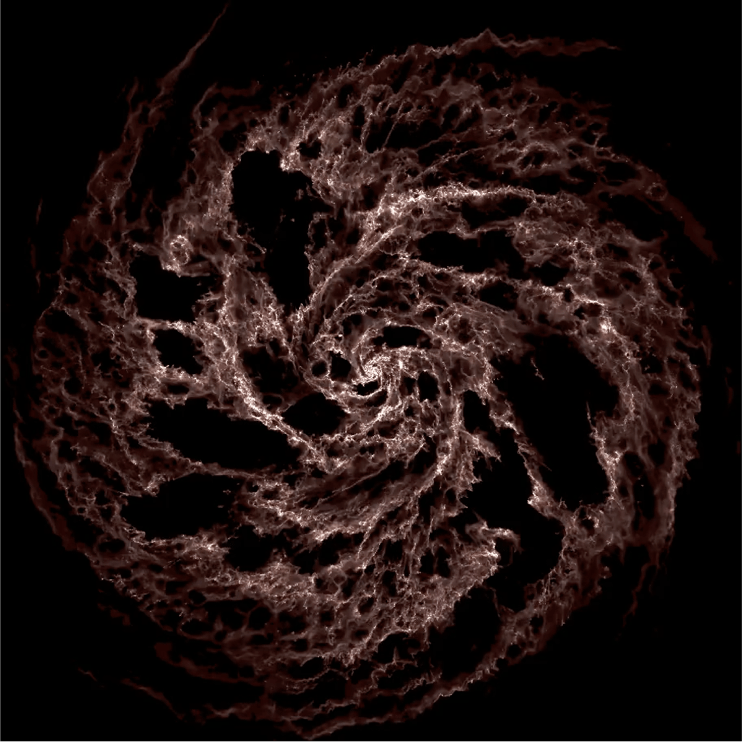

[Image Credit: Claire Lamman (CfA/Harvard) / DESI Collaboration]


The cost of cosmological simulations

AbacusSummit

330 billion particles in a 2 Gpc/h volume

60 trillion particles

~8 TB per simulation

15M CPU hours

(TNG50: ~100M CPU hours)
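A back-of-the-envelope check of the ~8 TB figure. It assumes (our assumption, not stated on the slide) that each particle stores only three float32 positions and three float32 velocities, with no IDs and no compression:

```python
# Rough size of one particle snapshot for a 330-billion-particle box.
# Assumption: 3 float32 positions + 3 float32 velocities per particle, nothing else.
n_particles = 330e9
bytes_per_float32 = 4
floats_per_particle = 6  # x, y, z, vx, vy, vz

snapshot_bytes = n_particles * floats_per_particle * bytes_per_float32
print(f"{snapshot_bytes / 1e12:.2f} TB per snapshot")  # prints "7.92 TB per snapshot"
```

Under these assumptions a single snapshot lands at roughly 7.9 TB, the same order as the quoted ~8 TB; real outputs also carry IDs, halo catalogs and compression, so the agreement should not be over-read.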

ML Requirements

WANTED

Fast field level emulators

Compression methods

Likelihood estimators

Simulation efficient methods


Model

Training Samples

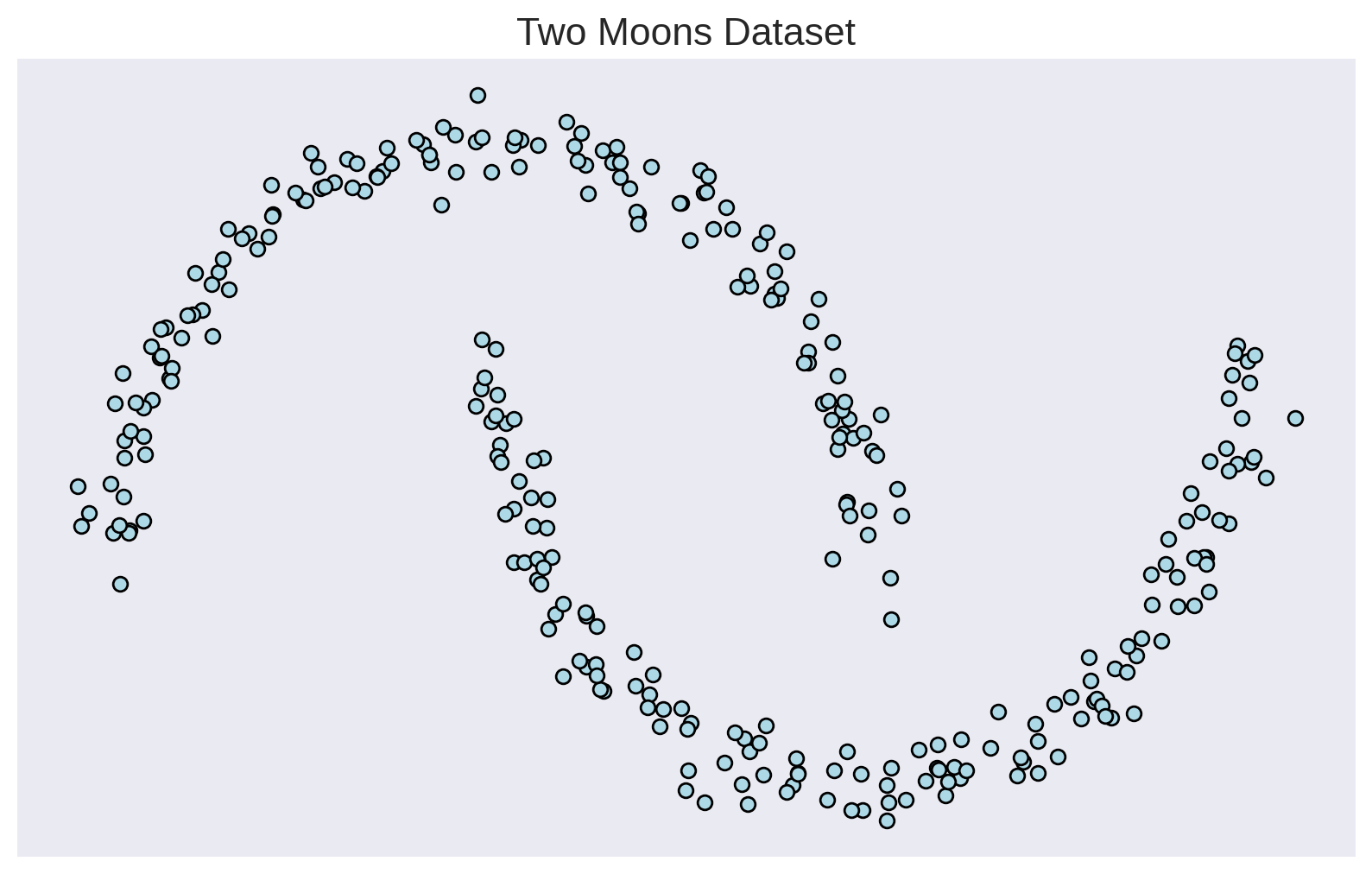

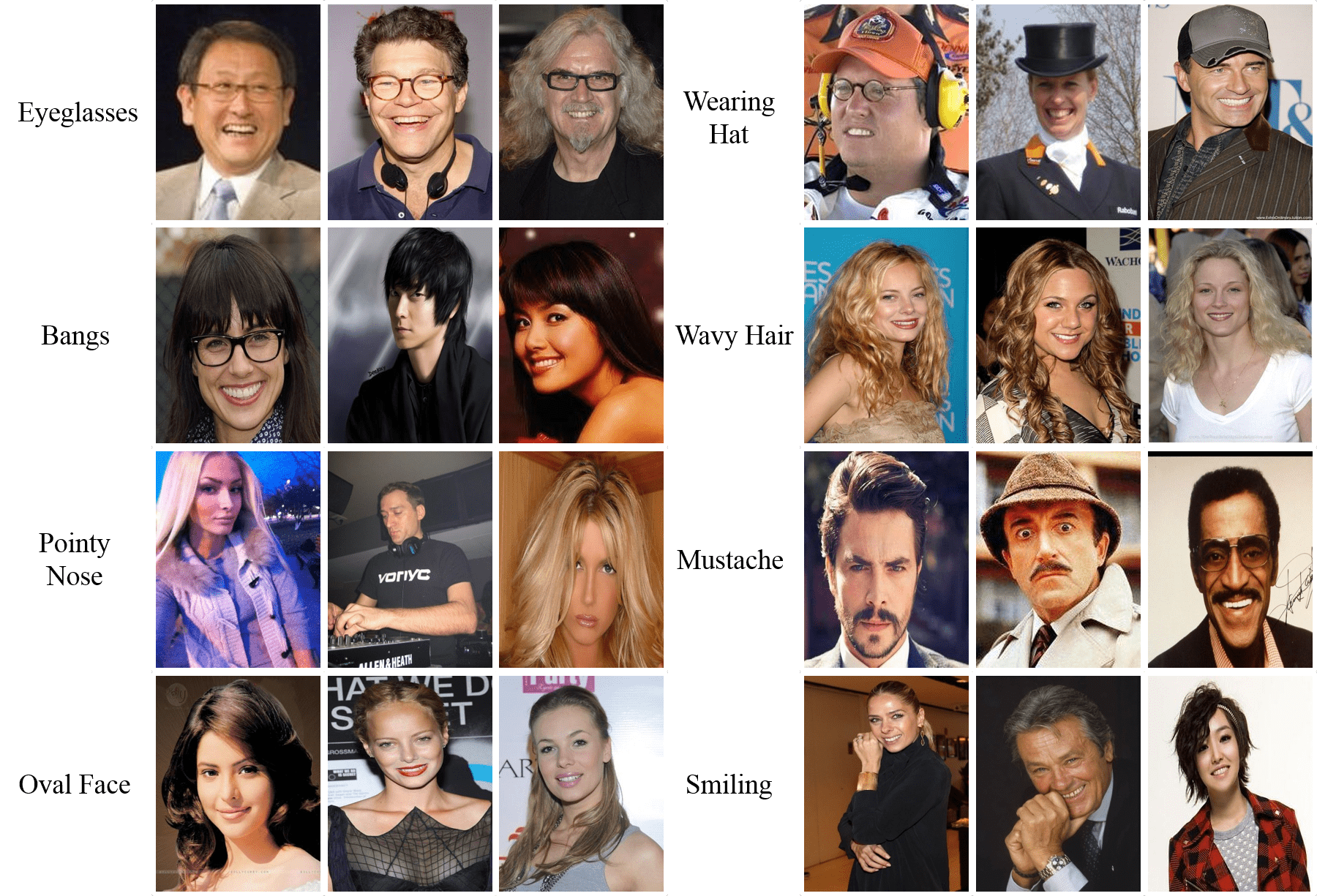

Generative Models 101

Evaluate probabilities

Low p(x)

High p(x)

Generate Novel Samples


Probability mass conserved locally

Inference a la gradient descent

1) Tractable

2) f maximally expressive

Loss = maximize the likelihood of the training data
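The two requirements above (a tractable inverse and Jacobian, and an expressive f) can be seen in the simplest possible flow: a 1D affine map x = f(z) = mu + exp(log_s) z with a standard normal base, trained by gradient ascent on the log-likelihood of the training data. This is a toy sketch, not the architecture used in the talk; the data, learning rate and iteration count are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)
data = 2.0 + 0.5 * rng.standard_normal(5_000)  # hypothetical training samples

# Affine flow x = f(z) = mu + exp(log_s) * z, base distribution z ~ N(0, 1).
mu, log_s = 0.0, 0.0

def log_prob(x, mu, log_s):
    """Change of variables: log p(x) = log N(f^-1(x); 0, 1) + log|d f^-1 / dx|."""
    z = (x - mu) * np.exp(-log_s)                     # f^-1(x)
    log_base = -0.5 * z**2 - 0.5 * np.log(2 * np.pi)  # log N(z; 0, 1)
    return log_base - log_s                           # log-Jacobian of the inverse map

lr = 0.05
for _ in range(2_000):
    z = (data - mu) * np.exp(-log_s)
    grad_mu = np.mean(z) * np.exp(-log_s)  # d/dmu of the mean log-likelihood
    grad_log_s = np.mean(z**2) - 1.0       # d/dlog_s of the mean log-likelihood
    mu += lr * grad_mu                     # gradient *ascent*: maximize likelihood
    log_s += lr * grad_log_s

# The same map evaluates probabilities and generates novel samples.
samples = mu + np.exp(log_s) * rng.standard_normal(1_000)
```

For this affine flow the maximum-likelihood solution is simply the sample mean and standard deviation; expressive flows stack many invertible layers whose log-Jacobians add up in the same way.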


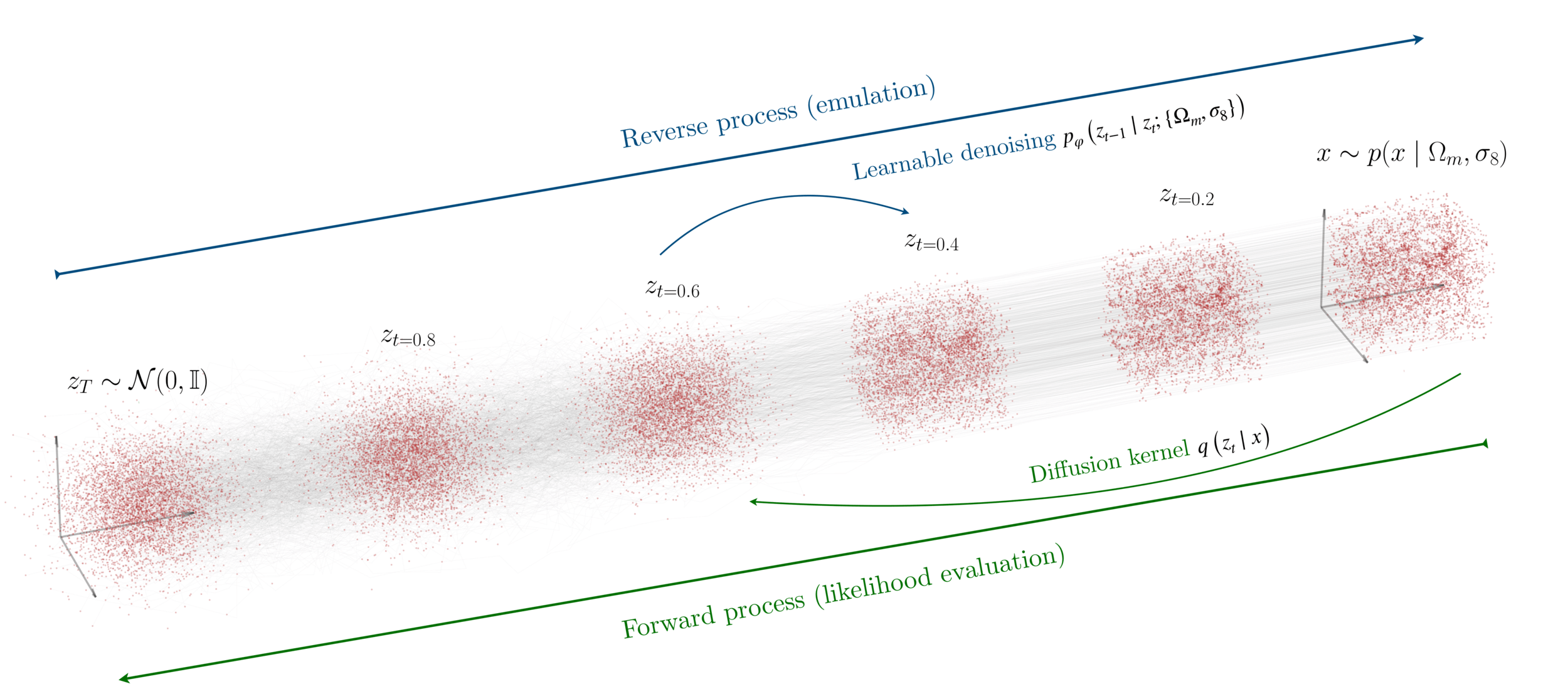

In continuous time

Continuity Equation

Loss requires solving an ODE!

Diffusion, Flow matching, Interpolants... All ways to avoid this at training time

[Image Credit: "Understanding Deep Learning" Simon J.D. Prince]
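To see why maximum-likelihood training in continuous time is expensive, the sketch below evaluates a single log-likelihood by integrating the continuity equation along a trajectory, using d log p_t(x_t)/dt = -div v(x_t, t). Every training example needs its own ODE solve (and, in more than 1D, a divergence). The velocity field is a hypothetical closed-form stand-in for a neural network, chosen so the answer is known exactly.

```python
import numpy as np

def velocity(x, t):
    # Hypothetical time-dependent linear velocity field v(x, t) = (1 - t) x.
    return (1.0 - t) * x

def log_likelihood_via_ode(x0, n_steps=1_000, h=1e-4):
    """Push x0 ~ N(0, 1) from t = 0 to t = 1 with Euler steps, tracking log p_t.

    Continuity equation => d log p_t(x_t) / dt = -div v(x_t, t).
    """
    dt = 1.0 / n_steps
    x = x0
    log_p = -0.5 * x0**2 - 0.5 * np.log(2 * np.pi)  # log N(x0; 0, 1)
    for i in range(n_steps):
        t = i * dt
        div = (velocity(x + h, t) - velocity(x - h, t)) / (2 * h)  # 1D divergence
        log_p -= dt * div
        x = x + dt * velocity(x, t)
    return x, log_p

x1, log_p1 = log_likelihood_via_ode(1.3)
```

Here the flow maps N(0, 1) to N(0, e), so x1 should be close to 1.3 e^{1/2} and log_p1 close to the log-density of N(0, e) at x1; diffusion, flow matching and interpolants avoid this per-sample integration at training time.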


Can we regress the velocity field directly?

Turned maximum likelihood into a regression problem!

Interpolant

Stochastic Interpolant

Expectation over all possible paths that go through x_t

["Stochastic Interpolants: A Unifying framework for flows and diffusion" Albergo et al arXiv:2303.08797]
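Regressing the velocity field can be illustrated in 1D. The sketch below uses the plain linear interpolant x_t = (1 - t) x0 + t x1 (the stochastic version adds a noise term, omitted here), fits b(t, x) = E[x1 - x0 | x_t = x] by least squares in each time bin, and then generates samples by integrating dx/dt = b. The Gaussian base and target, the linear-in-x model and the binning in t are all simplifying assumptions that make the exact velocity available for comparison.

```python
import numpy as np

rng = np.random.default_rng(0)
m, s = 3.0, 0.5                            # hypothetical target N(m, s^2)
n_steps, n_train = 100, 20_000
ts = (np.arange(n_steps) + 0.5) / n_steps  # midpoints of the time bins

def fit_velocity(t):
    """Least-squares regression of dx_t/dt on x_t for the linear interpolant."""
    x0 = rng.standard_normal(n_train)          # base samples
    x1 = m + s * rng.standard_normal(n_train)  # target samples (independent pairs)
    xt = (1 - t) * x0 + t * x1
    target = x1 - x0                           # time derivative of the interpolant
    A = np.stack([np.ones(n_train), xt], axis=1)  # linear model b(t, x) = a + c x
    coef, *_ = np.linalg.lstsq(A, target, rcond=None)
    return coef

coefs = np.array([fit_velocity(t) for t in ts])  # one (a, c) pair per time bin

def exact_velocity(t, x):
    # E[x1 - x0 | x_t = x], known in closed form because everything is Gaussian.
    var_t = (1 - t) ** 2 + t**2 * s**2
    return m + (t * s**2 - (1 - t)) / var_t * (x - t * m)

# Sampling: Euler-integrate dx/dt = b(t, x) from base samples at t = 0 to t = 1.
x = rng.standard_normal(10_000)
for a, c in coefs:
    x = x + (a + c * x) / n_steps
```

The regression target x1 - x0 is noisy for any single pair, but its conditional mean given x_t is exactly the velocity that transports the base distribution onto the target, which is why a squared-error loss suffices.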


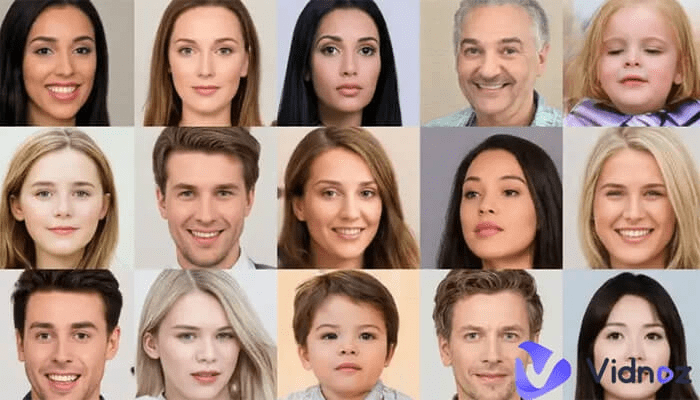

Diffusion Models

Reverse diffusion: Denoise previous step

Forward diffusion: Add Gaussian noise (fixed)

Prompt

A person half Yoda half Gandalf

Denoising = Regression

Fixed base distribution:

Gaussian
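"Denoising = Regression" can be checked on a toy example: add Gaussian noise with a fixed forward process, then fit the clean sample from the noisy one by least squares. For Gaussian data the optimal denoiser E[x0 | x_t] is linear, so a linear fit should recover it. The data distribution and the single noise level alpha_bar are hypothetical choices; real diffusion models use a neural network, many noise levels, and often predict the noise rather than x0 (an equivalent reparametrization).

```python
import numpy as np

rng = np.random.default_rng(1)
mu, sigma = 2.0, 0.7  # hypothetical 1D data distribution N(mu, sigma^2)
alpha_bar = 0.3       # hypothetical noise level (fraction of signal variance kept)
n = 50_000

x0 = mu + sigma * rng.standard_normal(n)                      # clean data
eps = rng.standard_normal(n)                                  # Gaussian noise
xt = np.sqrt(alpha_bar) * x0 + np.sqrt(1 - alpha_bar) * eps  # forward diffusion (fixed)

# Denoising as regression: least-squares fit of x0 from xt with a linear model.
A = np.stack([np.ones(n), xt], axis=1)
(intercept_fit, slope_fit), *_ = np.linalg.lstsq(A, x0, rcond=None)

# For Gaussian data the optimal denoiser E[x0 | xt] is linear and known exactly.
var_t = alpha_bar * sigma**2 + (1 - alpha_bar)
slope_exact = np.sqrt(alpha_bar) * sigma**2 / var_t
intercept_exact = mu - slope_exact * np.sqrt(alpha_bar) * mu
```

Running a learned denoiser of this kind backwards, noise level by noise level, starting from the fixed Gaussian base distribution, is what turns the regression into a generator.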


["A point cloud approach to generative modeling for galaxy surveys at the field level"

Cuesta-Lazaro and Mishra-Sharma

ICML AI4Astro 2023, arXiv:2311.17141]

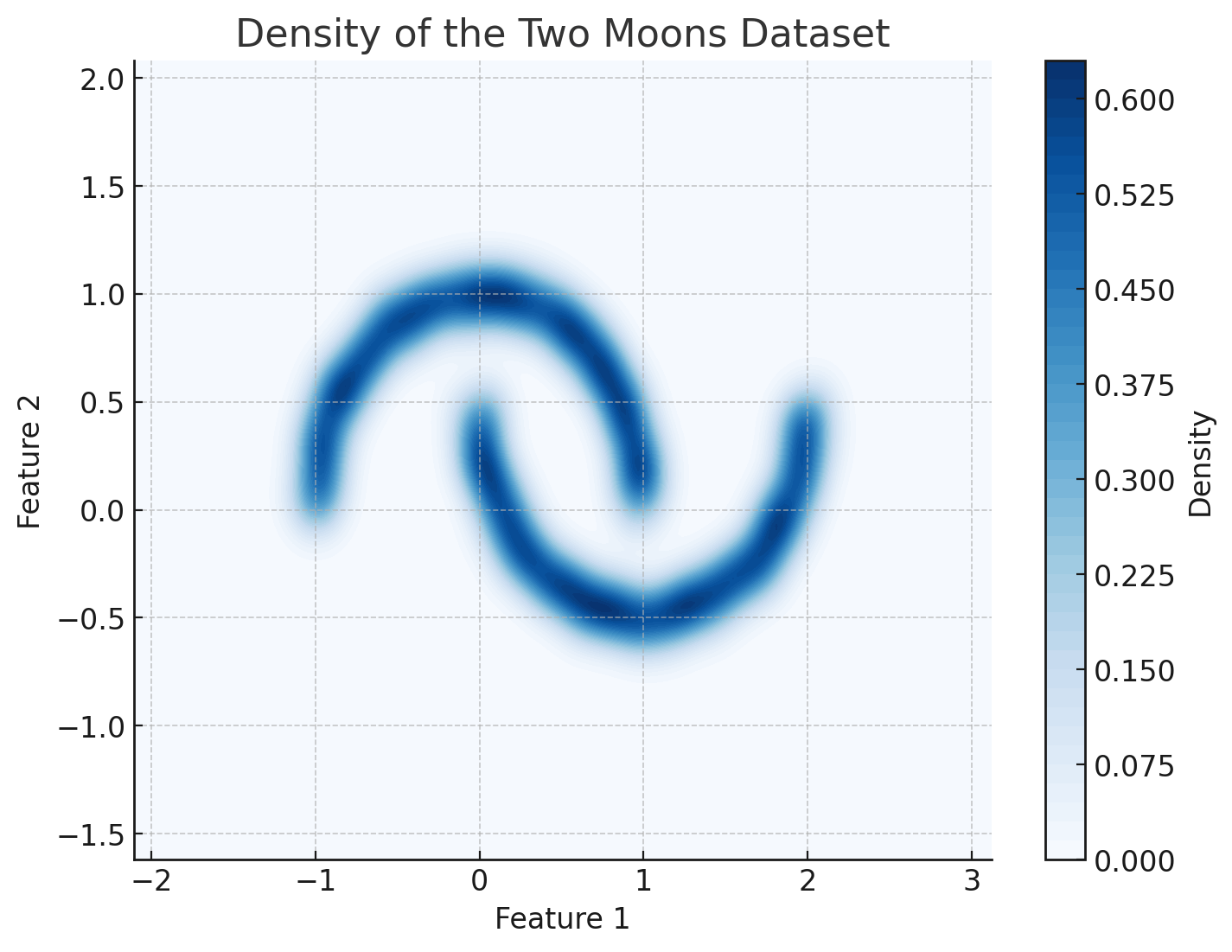

Base Distribution

Target Distribution

- Sample

- Evaluate

Long range correlations

Huge point clouds (20M)

Homogeneity and isotropy

Siddharth Mishra-Sharma
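Homogeneity and isotropy mean that statistics of the generated point cloud should not change under translations and rotations of the volume. One cheap way to respect this, sketched below for a periodic simulation box, is to build model inputs only from quantities that are already invariant, such as minimum-image pair distances. This is an illustrative assumption about inputs, not the architecture of the cited paper; in a periodic cube only discrete rotations and reflections (axis permutations and sign flips) are exact symmetries.

```python
import numpy as np

def min_image_distances(pos, box):
    """Pairwise distances in a periodic box using the minimum-image convention."""
    d = pos[:, None, :] - pos[None, :, :]
    d -= box * np.round(d / box)  # wrap separations into [-box/2, box/2]
    return np.sqrt((d**2).sum(-1))

rng = np.random.default_rng(0)
box = 100.0                                # hypothetical box size (Mpc/h)
pos = rng.uniform(0, box, size=(50, 3))    # toy point cloud

shift = rng.uniform(0, box, size=3)
translated = (pos + shift) % box           # homogeneity: random periodic translation
rotated = (pos[:, [1, 2, 0]] * np.array([1, -1, 1])) % box  # axis permutation + reflection
```

All three point clouds give the same distance matrix, so any model that only sees these distances is automatically invariant to these transformations; the price is that pairwise inputs scale quadratically with the number of points.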


Fixed Initial Conditions / Varying Cosmology


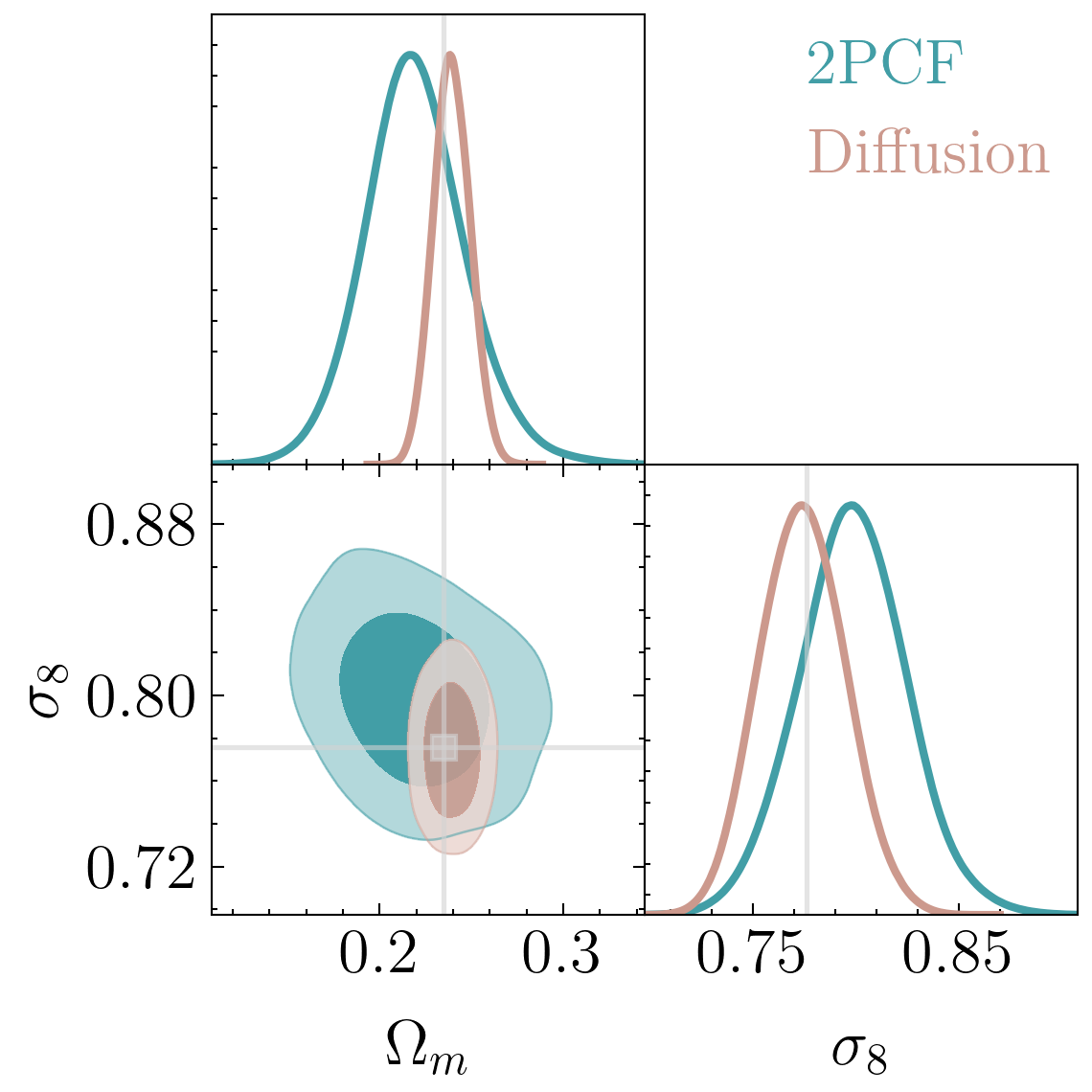

Diffusion model


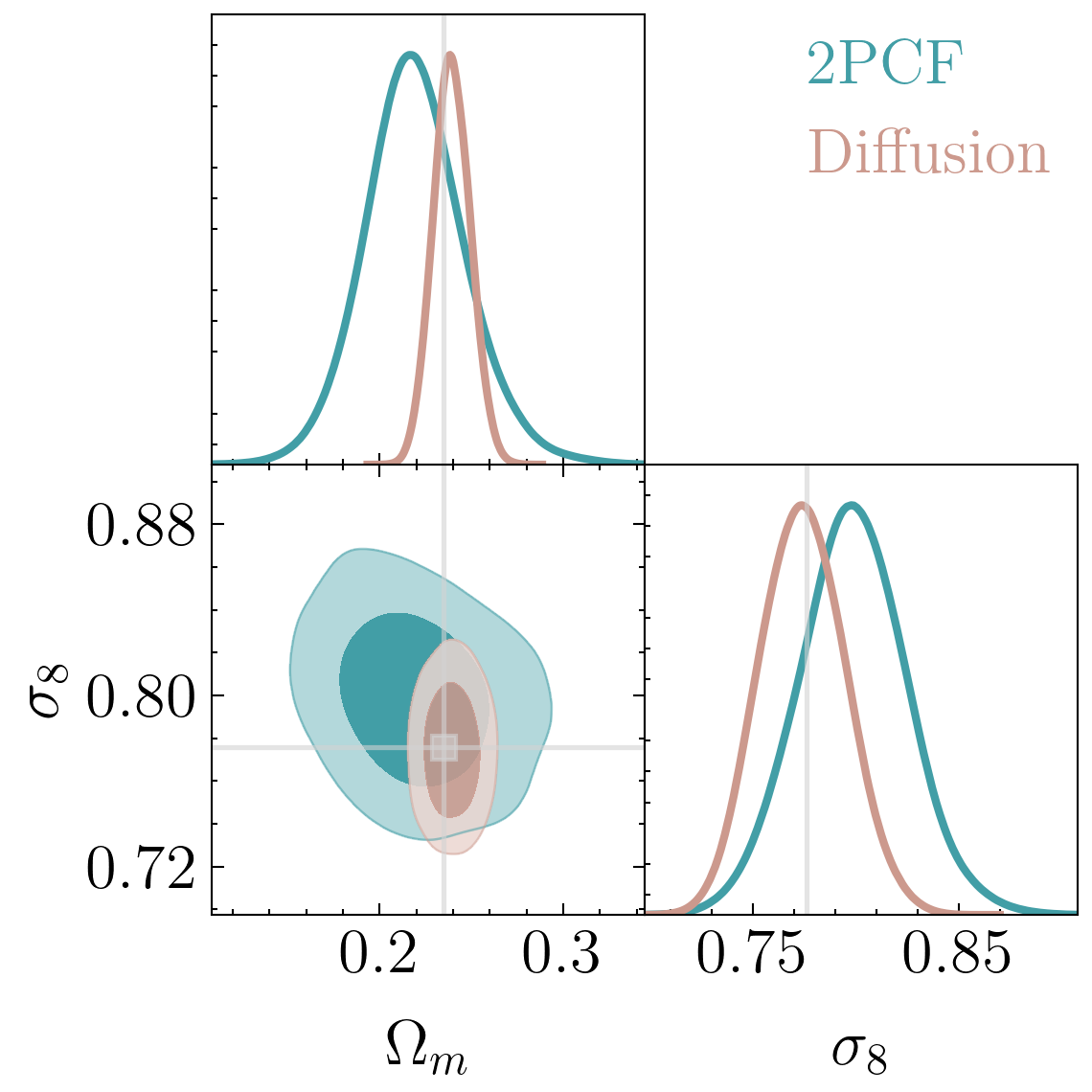

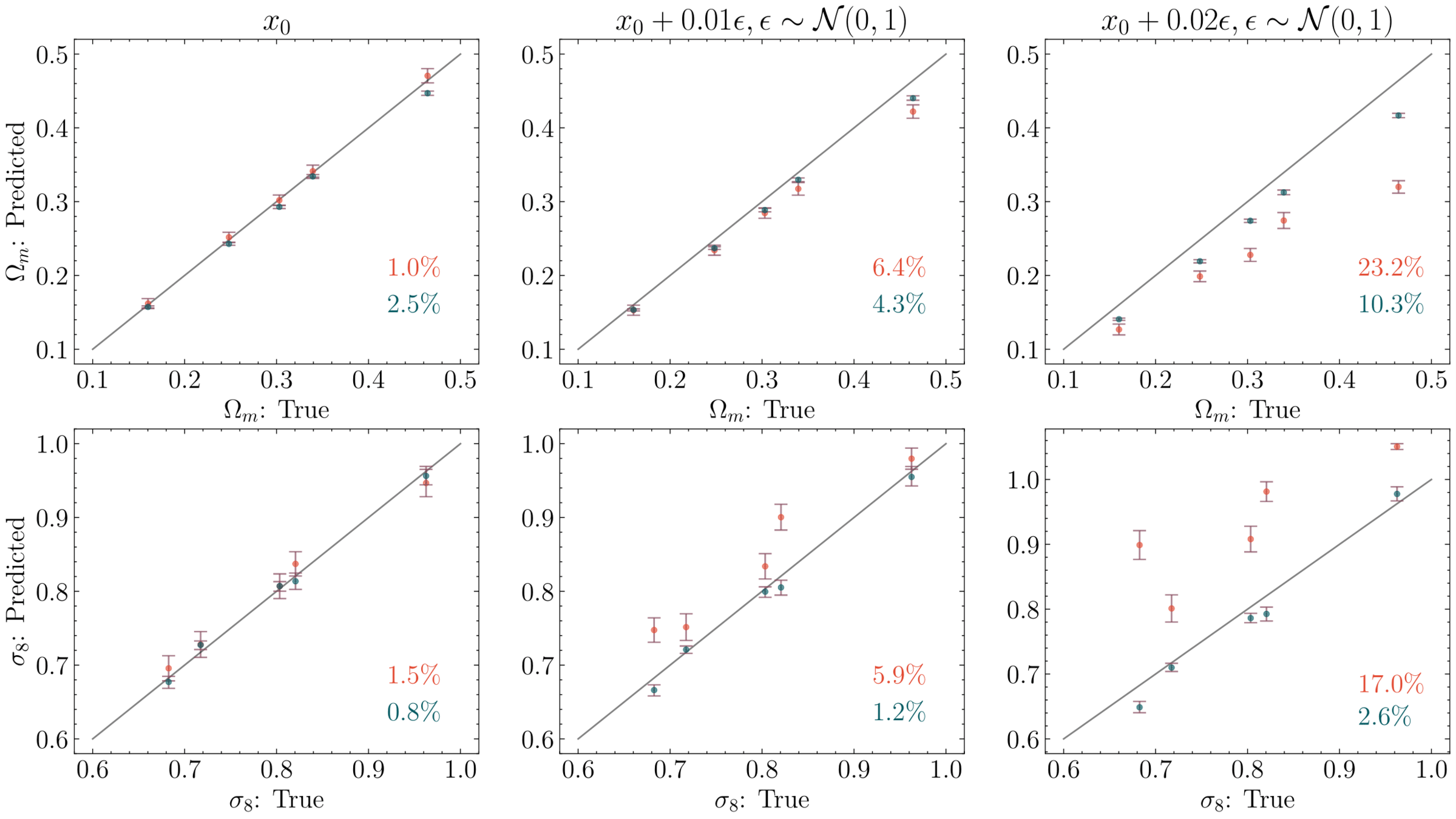

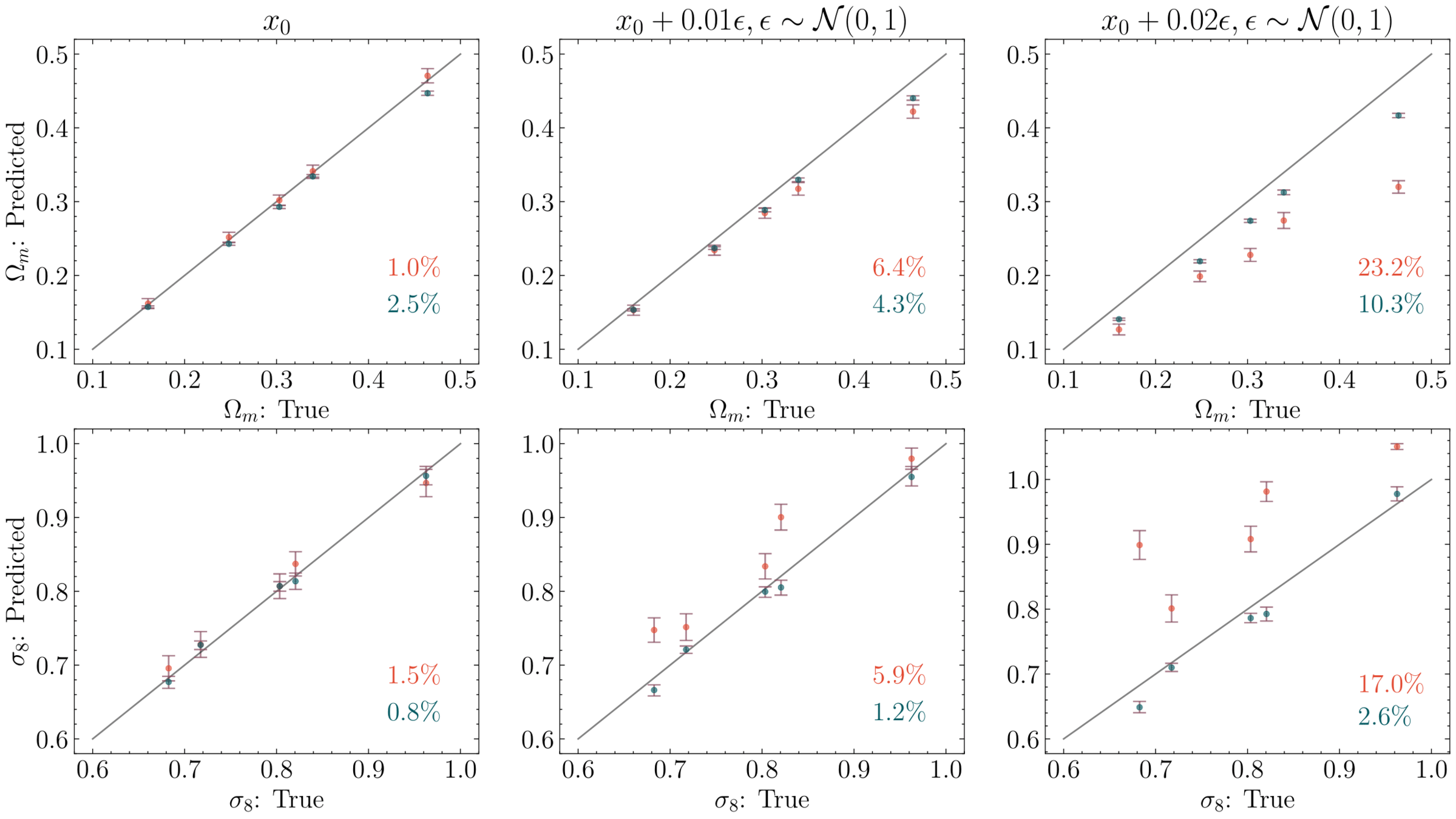

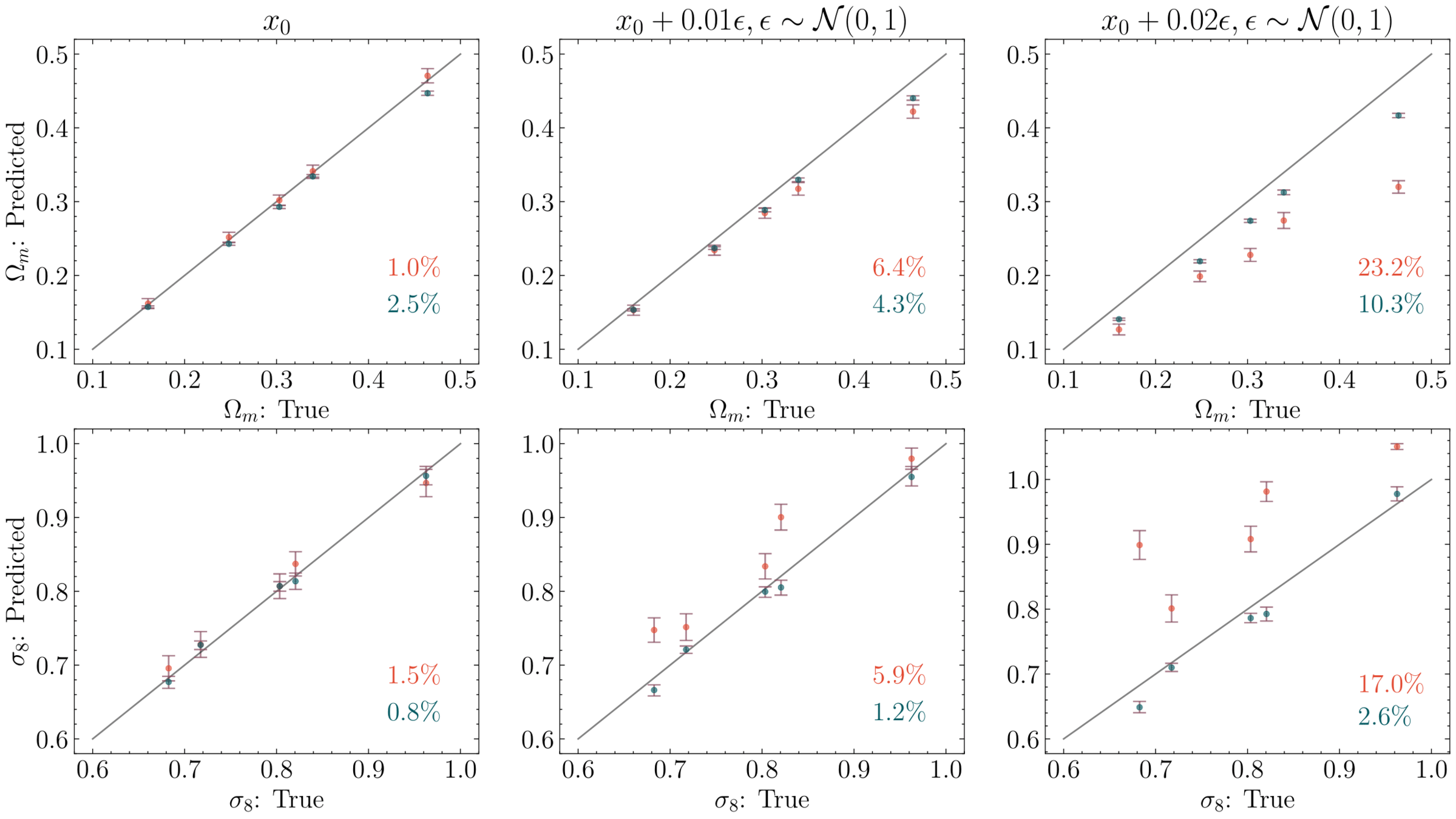

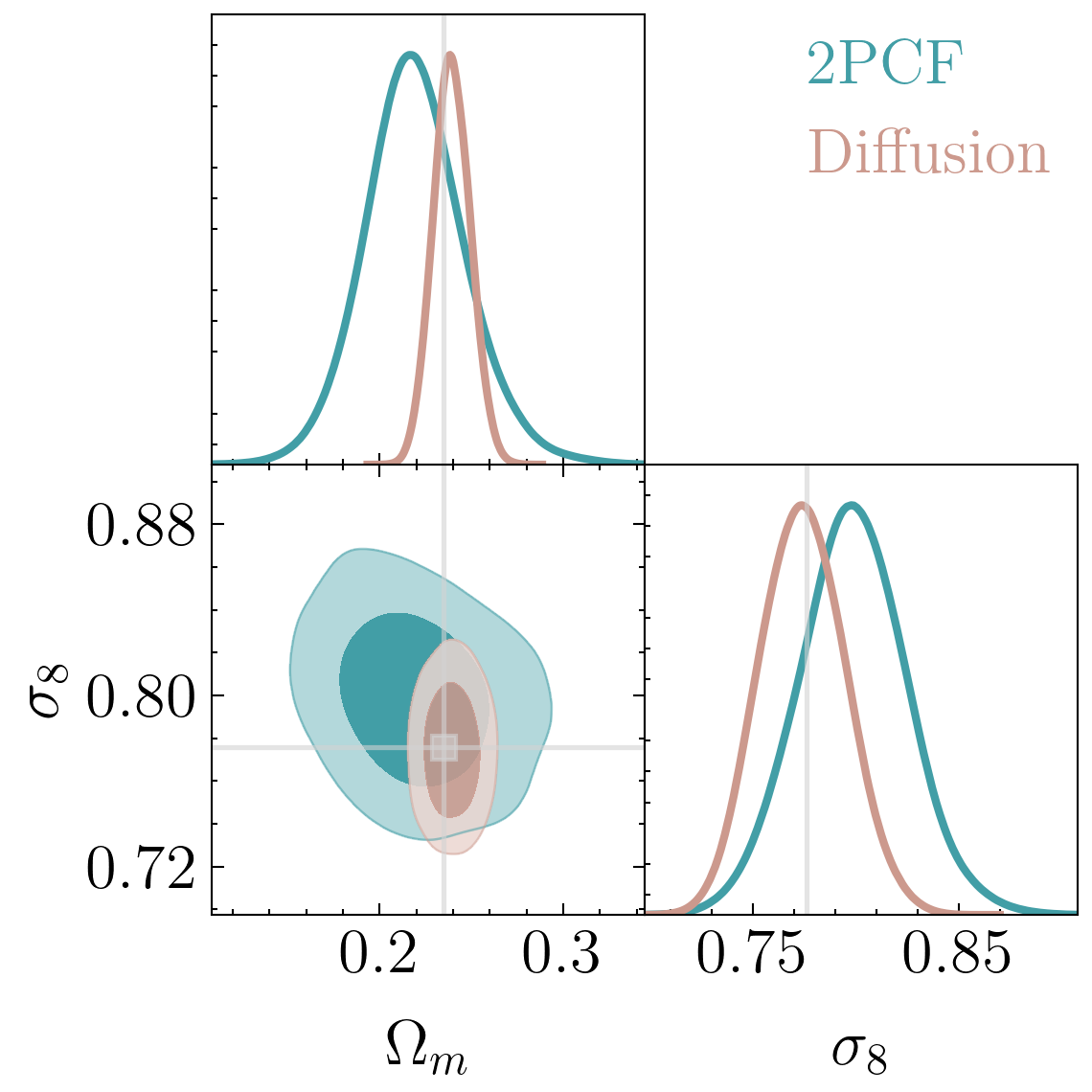

[Figure: CNN vs. Diffusion, with increasing noise]

["Diffusion-HMC: Parameter Inference with Diffusion Model driven Hamiltonian Monte Carlo" Mudur, Cuesta-Lazaro and Finkbeiner NeurIPS 2023 ML for the Physical Sciences, arXiv:2405.05255]

Nayantara Mudur
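As its title suggests, Diffusion-HMC plugs a diffusion-model likelihood into Hamiltonian Monte Carlo. The sampler itself is standard; a minimal version is sketched below. A made-up Gaussian log-posterior over two cosmological parameters stands in for the diffusion-model likelihood, so computing that likelihood and its gradient is exactly the part this sketch does not attempt. Step size, trajectory length and chain length are arbitrary choices tuned to the toy widths.

```python
import numpy as np

def hmc(log_prob, grad_log_prob, theta0, n_samples=3_000, step=0.004, n_leapfrog=10, seed=0):
    """Plain Hamiltonian Monte Carlo with a leapfrog integrator and unit mass matrix."""
    rng = np.random.default_rng(seed)
    theta = np.array(theta0, dtype=float)
    chain = []
    for _ in range(n_samples):
        p0 = rng.standard_normal(theta.shape)  # resample momentum
        th, p = theta.copy(), p0 + 0.5 * step * grad_log_prob(theta)  # half momentum step
        for i in range(n_leapfrog):
            th = th + step * p                 # full position step
            if i < n_leapfrog - 1:
                p = p + step * grad_log_prob(th)
        p = p + 0.5 * step * grad_log_prob(th)  # final half momentum step
        # Metropolis correction on H(theta, p) = -log_prob(theta) + |p|^2 / 2
        log_accept = (log_prob(th) - 0.5 * p @ p) - (log_prob(theta) - 0.5 * p0 @ p0)
        if np.log(rng.uniform()) < log_accept:
            theta = th
        chain.append(theta.copy())
    return np.array(chain)

# Hypothetical stand-in for a diffusion-model likelihood: a Gaussian posterior
# over (Omega_m, sigma_8) with made-up mean and widths.
mean = np.array([0.3, 0.8])
width = np.array([0.02, 0.03])

def log_prob(th):
    return -0.5 * np.sum(((th - mean) / width) ** 2)

def grad_log_prob(th):
    return -(th - mean) / width**2

chain = hmc(log_prob, grad_log_prob, theta0=[0.25, 0.85])[500:]  # drop burn-in
```

HMC needs the gradient of the log-likelihood with respect to the parameters, which is why a differentiable (neural) likelihood is a natural fit for it.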



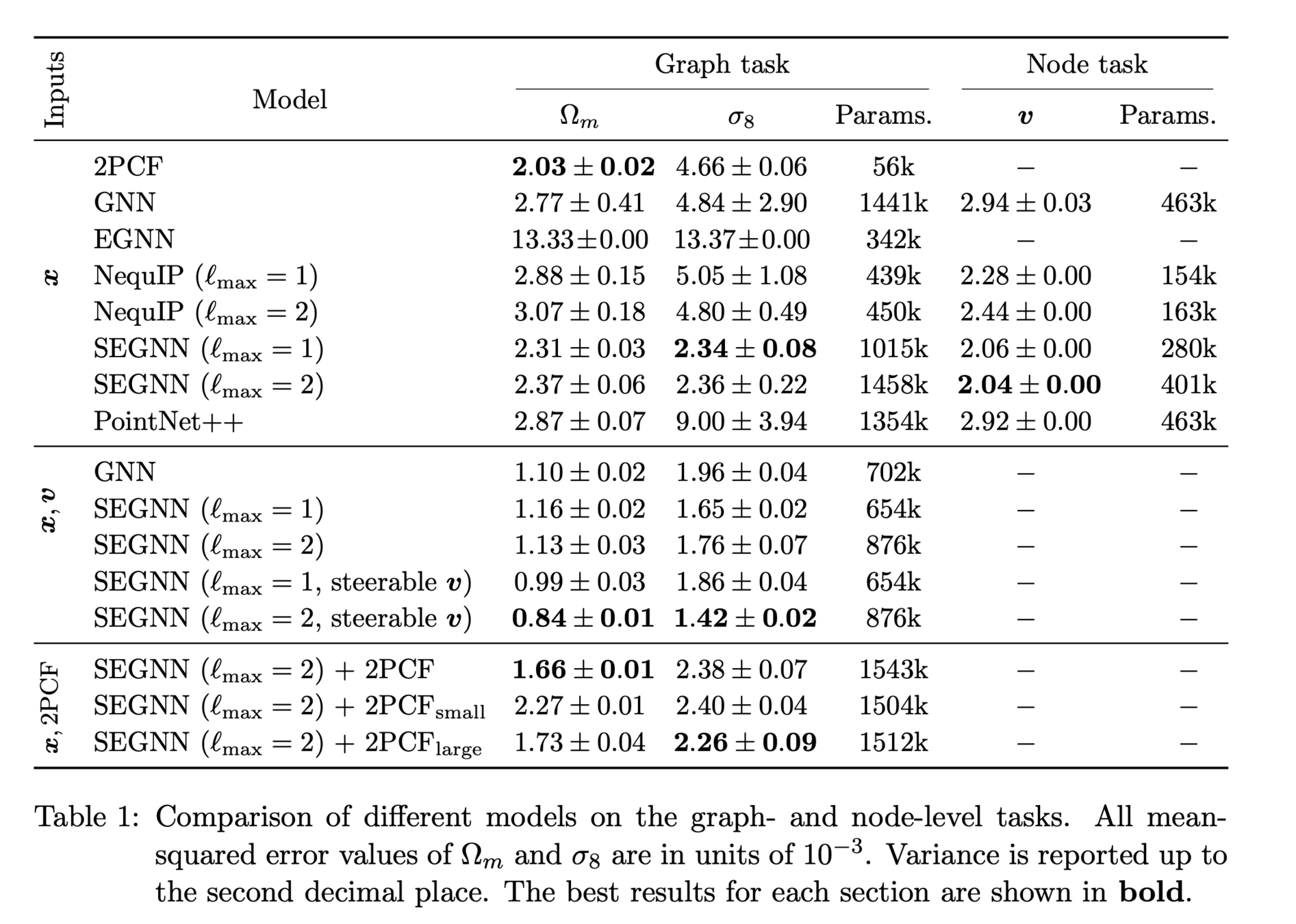

["A Cosmic-Scale Benchmark for Symmetry-Preserving Data Processing"

Balla, Mishra-Sharma, Cuesta-Lazaro et al

NeurIPS 2024 NeurReps arXiv:2410.20516]

E(3) Equivariant architectures

Benchmark models

["Geometric and Physical Quantities Improve E(3) Equivariant Message Passing" Brandstetter et al arXiv:2110.02905]

Symmetry-preserving ML
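The property these architectures share, E(3) equivariance, can be checked numerically. Below is a minimal coordinate update in the style of EGNN (Satorras et al. 2021), a simpler relative of the steerable SEGNN in the cited Brandstetter et al. paper, with random hypothetical weights. Rotating or reflecting and translating the input point cloud rotates/reflects and translates the output in the same way, because the learned part only sees pairwise distances.

```python
import numpy as np

rng = np.random.default_rng(0)
W1 = rng.standard_normal((1, 16))  # hypothetical weights of a tiny edge MLP
W2 = rng.standard_normal((16, 1))

def phi(d2):
    """Scalar edge function of squared distances (E(3)-invariant input)."""
    return np.tanh(d2[..., None] @ W1) @ W2  # shape (n, n, 1)

def egnn_coordinate_update(x):
    """One EGNN-style update: x_i + mean_j (x_i - x_j) * phi(|x_i - x_j|^2)."""
    diff = x[:, None, :] - x[None, :, :]  # (n, n, 3) relative positions
    d2 = (diff**2).sum(-1)                # (n, n) squared distances
    return x + (diff * phi(d2)).mean(axis=1)
```

Because equivariance holds by construction, the network never has to learn it from augmented training data, which is one way symmetries reduce the number of simulations needed.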

Carolina Cuesta-Lazaro IAIFI/MIT @ IPMU 2024

"The Bitter Lesson" by Rich Sutton

The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin. [...]

methods that continue to scale with increased computation even as the available computation becomes very great. [...]

We want AI agents that can discover like we can, not which contain what we have discovered.


Memory scaling: point clouds and voxels

Graph

Nodes

Edges

3D Mesh

Voxels

Both data representations scale badly with increasing resolution
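To make the scaling concrete, the sketch below counts bytes for a dense voxel grid and for a k-nearest-neighbour graph built on one point per voxel. The choices of k = 16, float32 values and int64 edge indices are illustrative assumptions; the point is that both grow linearly with the number of elements, i.e. with the cube of the linear resolution, so doubling the resolution costs a factor of 8.

```python
def voxel_bytes(n_per_side, n_channels=1, bytes_per_value=4):
    """Memory of a dense n^3 voxel grid of float32 values."""
    return n_per_side**3 * n_channels * bytes_per_value

def knn_graph_bytes(n_nodes, k=16, bytes_per_index=8, node_features=3, bytes_per_value=4):
    """Memory of a k-nearest-neighbour graph: int64 edge list + float32 node features."""
    edges = n_nodes * k * 2 * bytes_per_index  # (source, target) index pairs
    nodes = n_nodes * node_features * bytes_per_value
    return edges + nodes

for n in (128, 256, 512, 1024):
    print(f"{n}^3 voxels: {voxel_bytes(n) / 2**30:8.2f} GiB | "
          f"kNN graph on {n**3:,} points: {knn_graph_bytes(n**3) / 2**30:8.2f} GiB")
```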


Representing Continuous Fields

Continuous in space and time

x500 Compression!
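A continuous field representation stores a function that can be queried at any coordinate, instead of a grid of values. The sketch below uses a fixed, truncated Fourier basis fitted by least squares as a simple stand-in for a learned (e.g. neural) field. The toy field, the frequency cutoff K and the resulting ~100x compression are illustrative only; they are not the x500 quoted above, which depends on the real data and model, and real fields are not exactly band-limited, so in practice the compression is lossy.

```python
import numpy as np

K = 4  # hypothetical maximum integer frequency per axis
freqs = np.array([(kx, ky) for kx in range(-K, K + 1) for ky in range(-K, K + 1)])

def features(xy):
    """Real Fourier features cos/sin(2*pi*k.x) at coordinates xy of shape (n, 2)."""
    phase = 2 * np.pi * xy @ freqs.T
    return np.concatenate([np.cos(phase), np.sin(phase)], axis=1)

def field(xy):
    # Toy "true" field; in practice this would be a simulation snapshot.
    x, y = xy[:, 0], xy[:, 1]
    return np.sin(2 * np.pi * x) * np.cos(4 * np.pi * y) + 0.5 * np.sin(6 * np.pi * (x + y))

n = 128
g = np.arange(n) / n
grid = np.stack(np.meshgrid(g, g, indexing="ij"), axis=-1).reshape(-1, 2)  # n*n points

coef, *_ = np.linalg.lstsq(features(grid), field(grid), rcond=None)  # fit coefficients
compression = grid.shape[0] / coef.size  # stored grid values / stored coefficients

# The fitted representation can be queried anywhere, not only on the grid.
query = np.array([[0.123, 0.456], [0.9, 0.01]])
prediction = features(query) @ coef
```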


Recap

More than fast emulators:

Robust field-level likelihood models

Need to compress data:

Continuous fields ~1000?

Need to improve data efficiency:

Incorporating symmetries helps


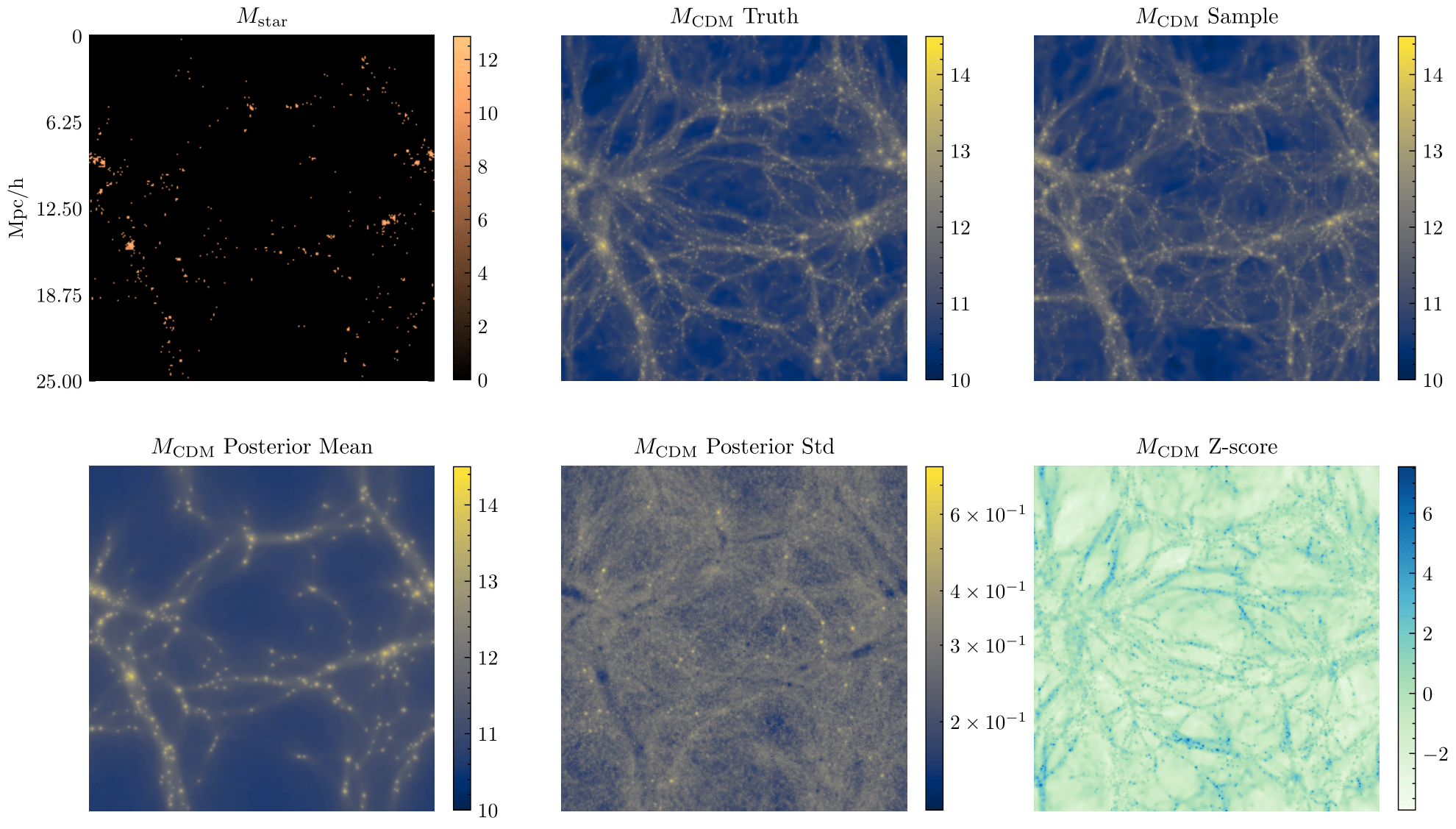

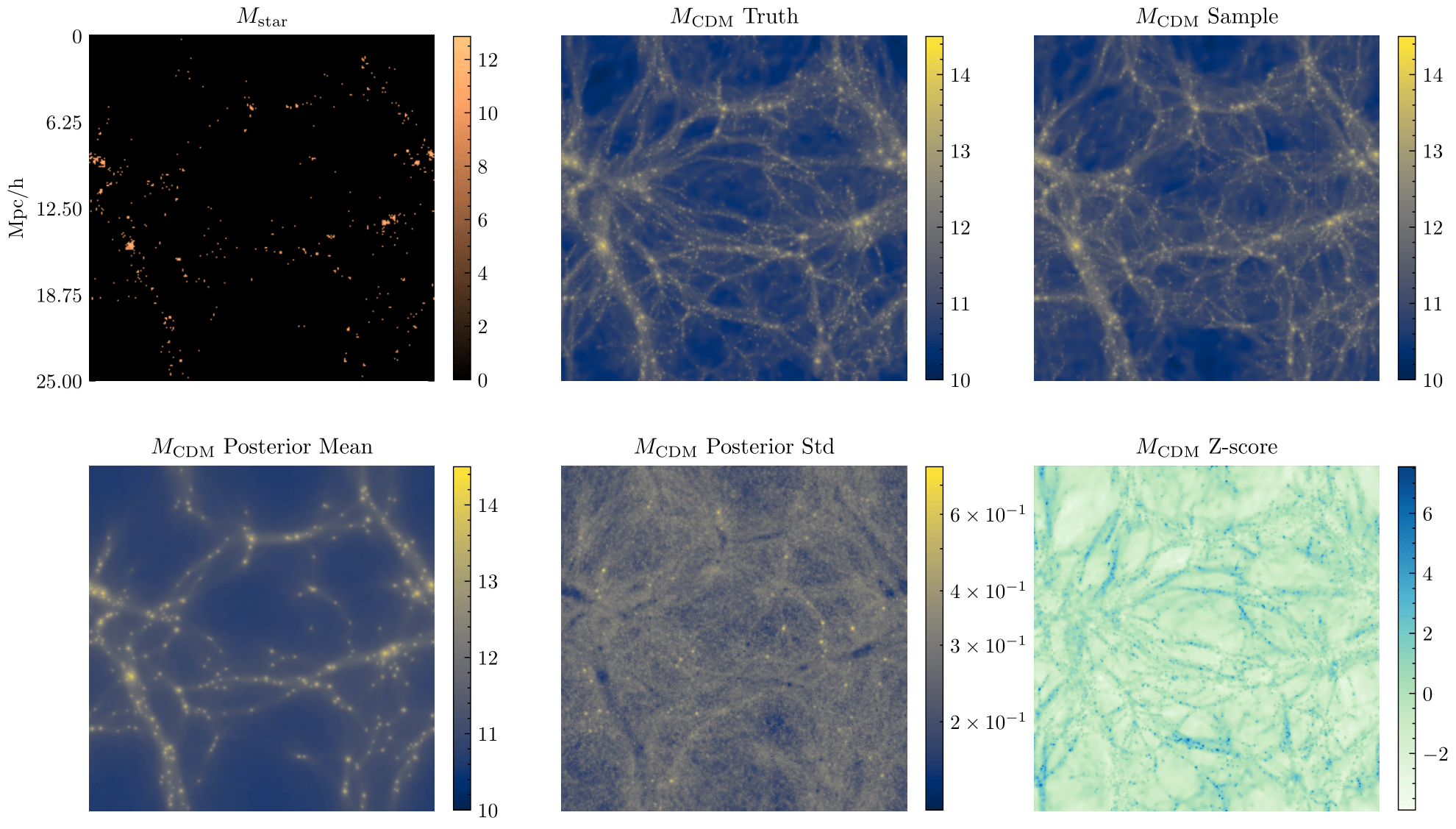

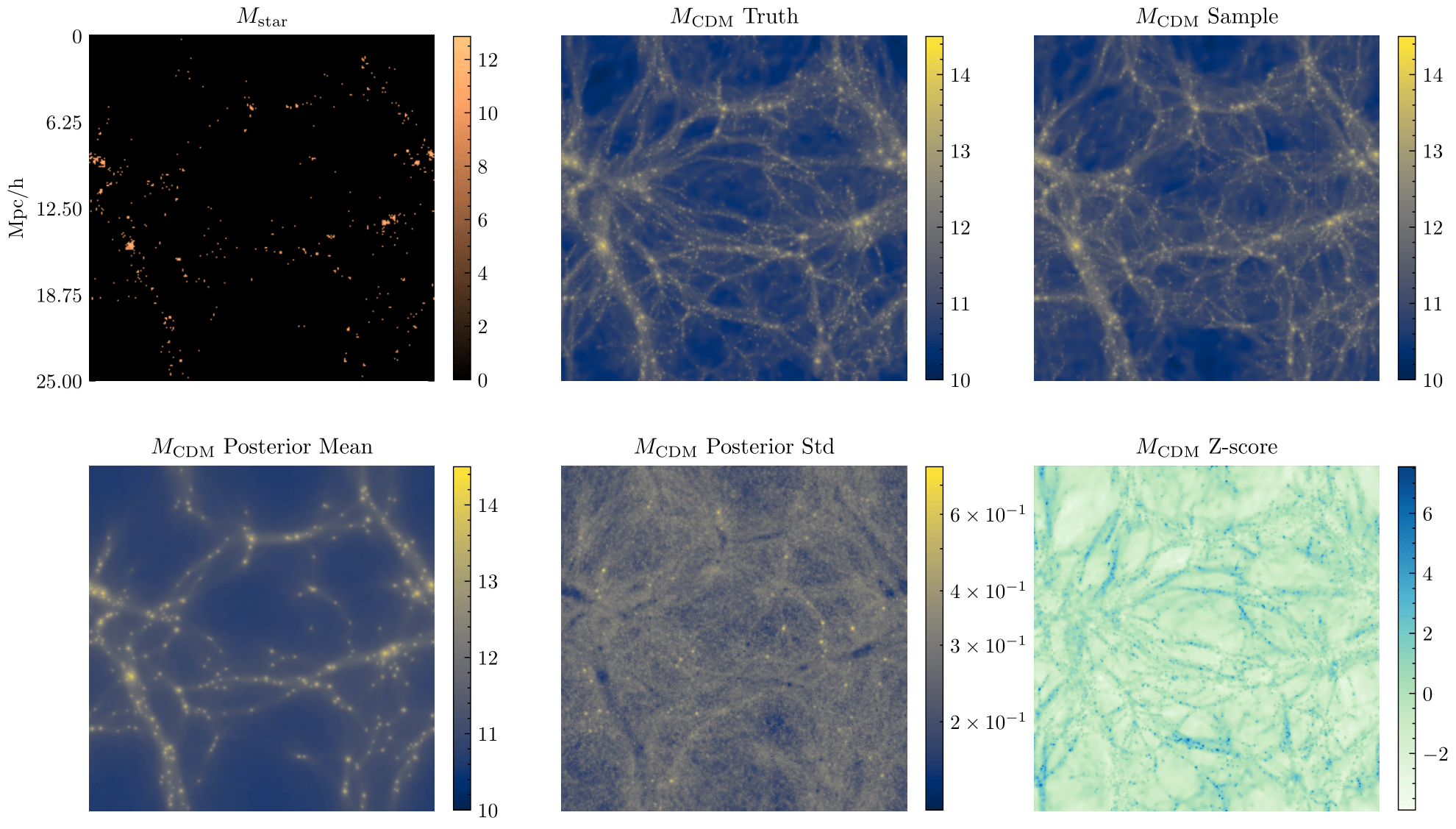

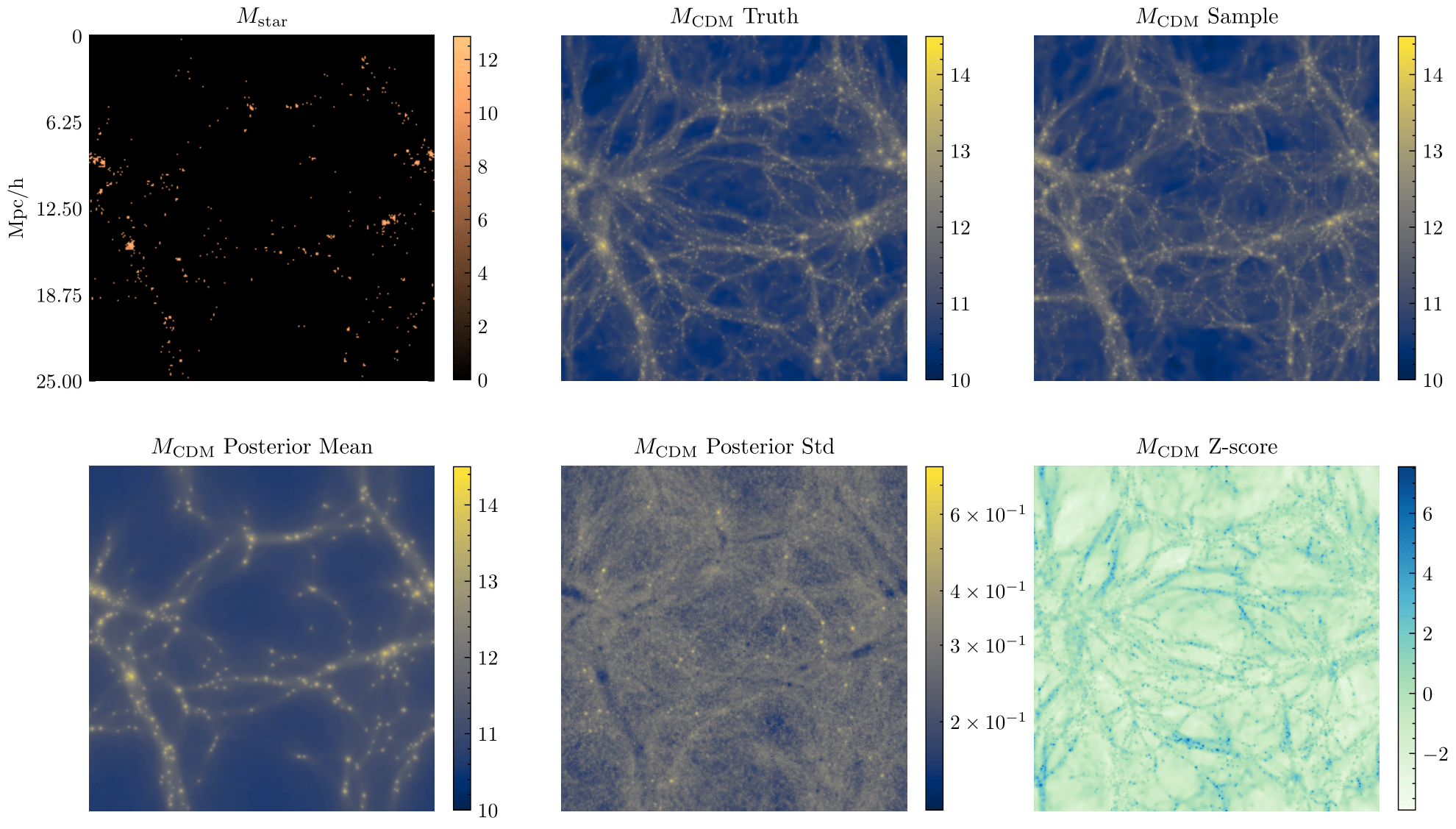

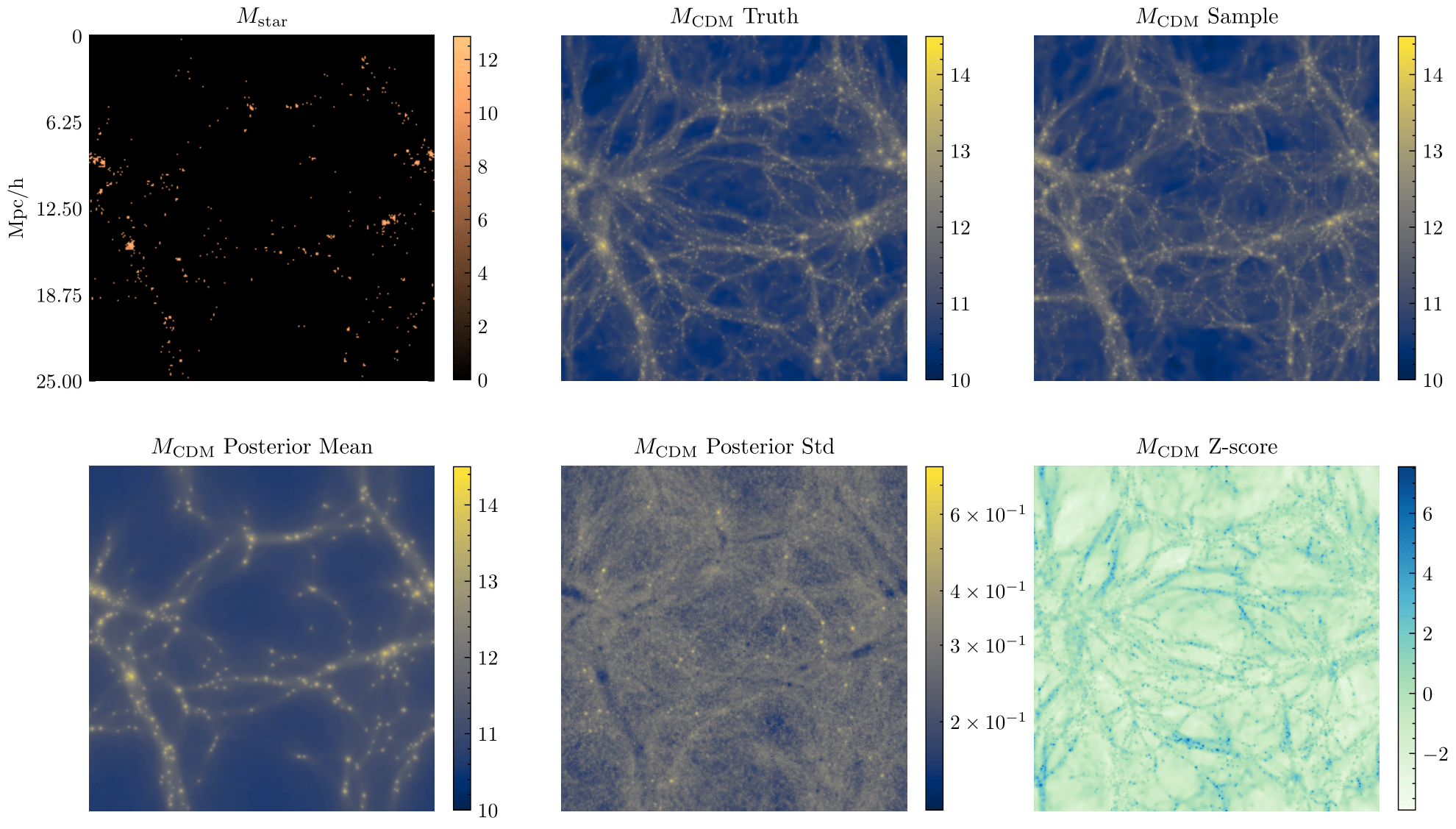

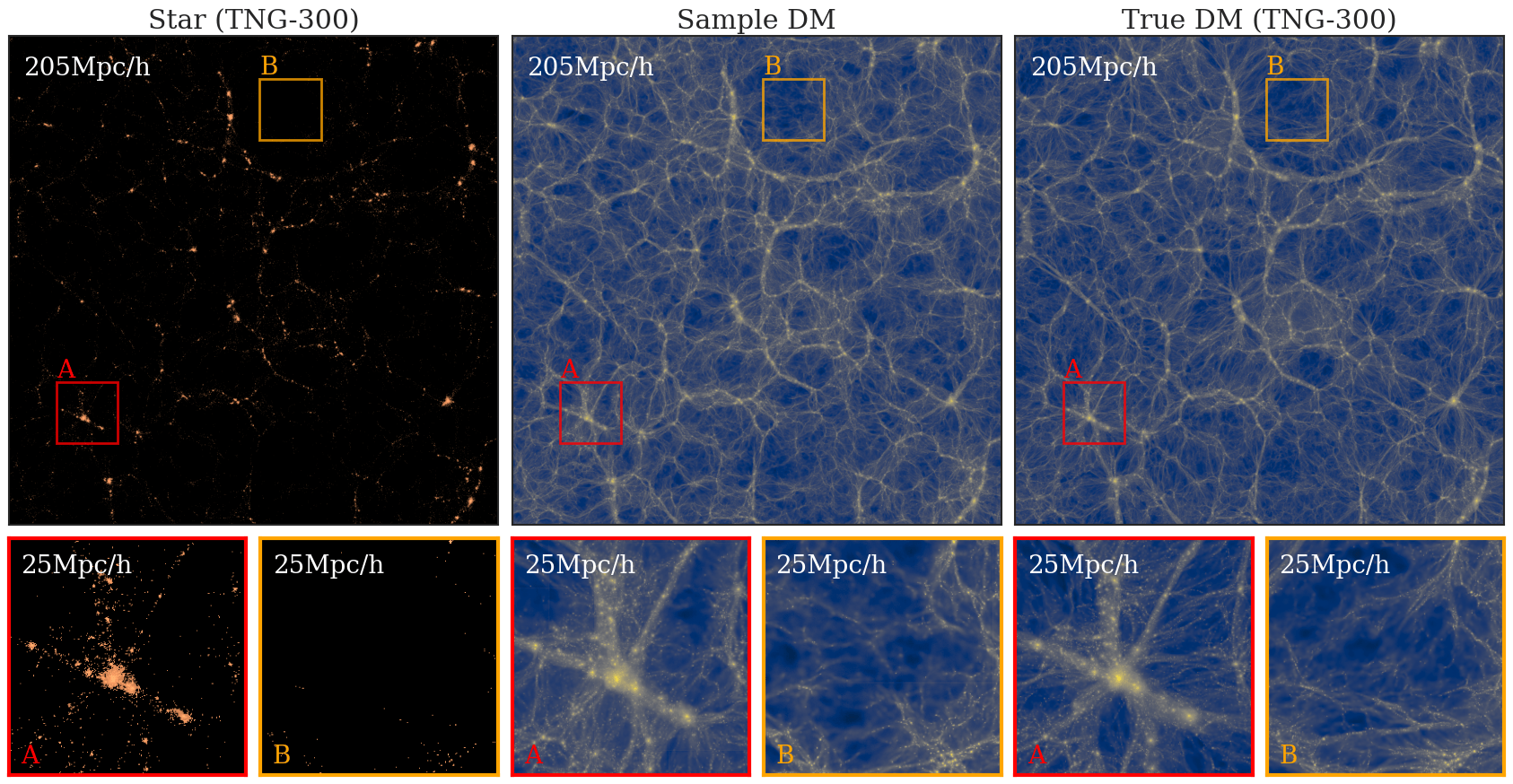

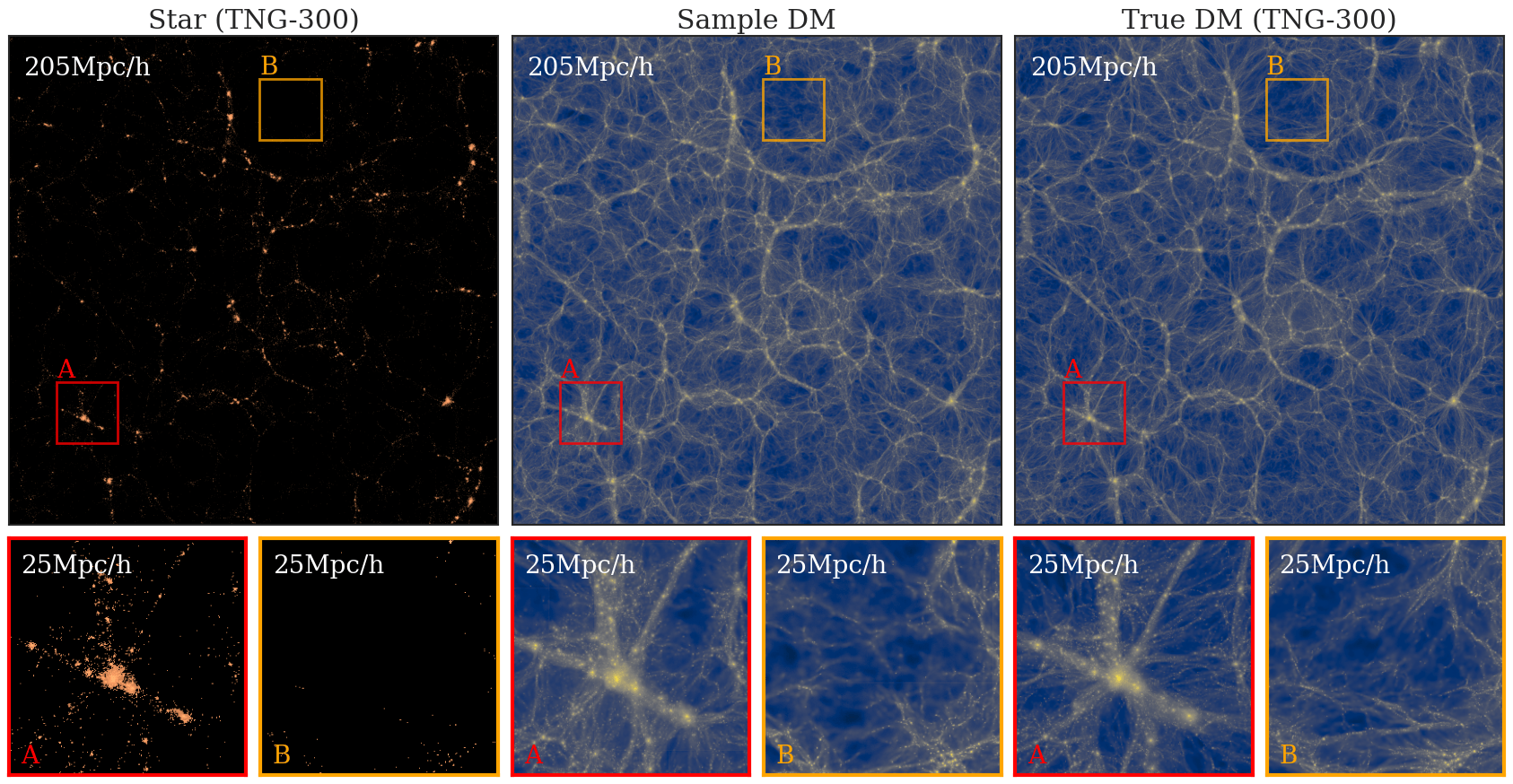

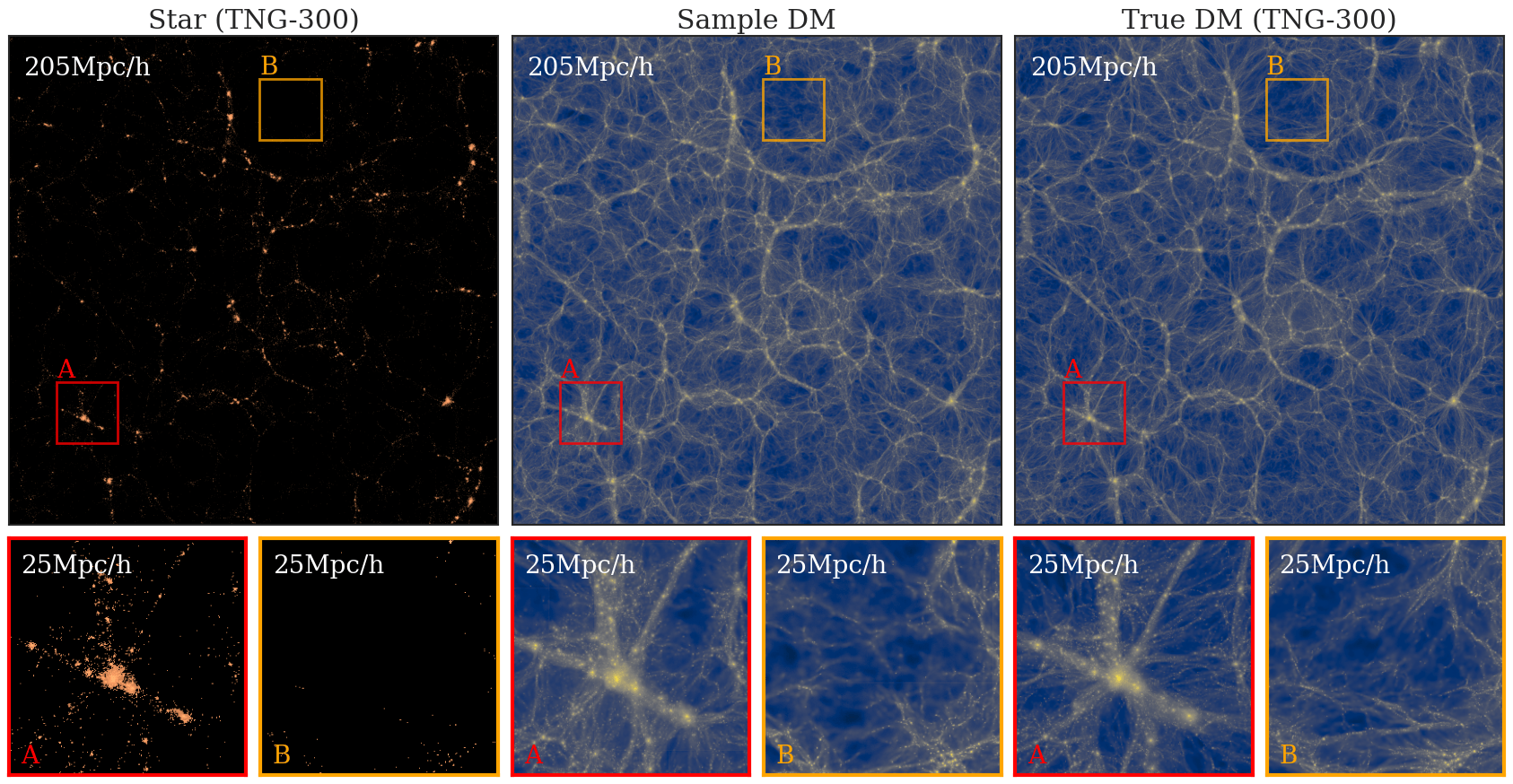

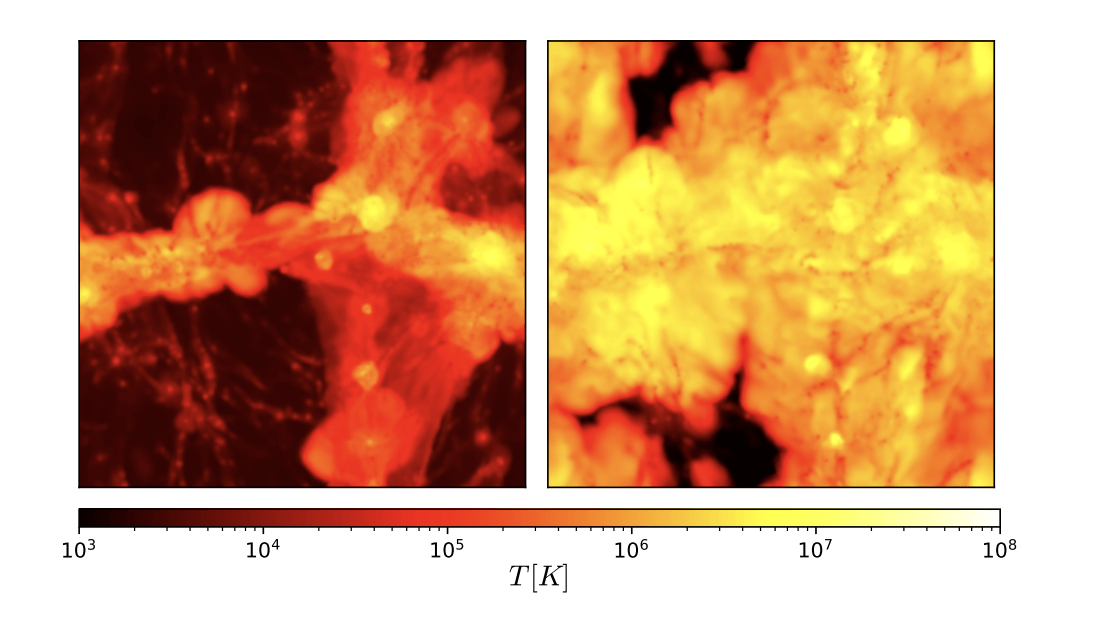

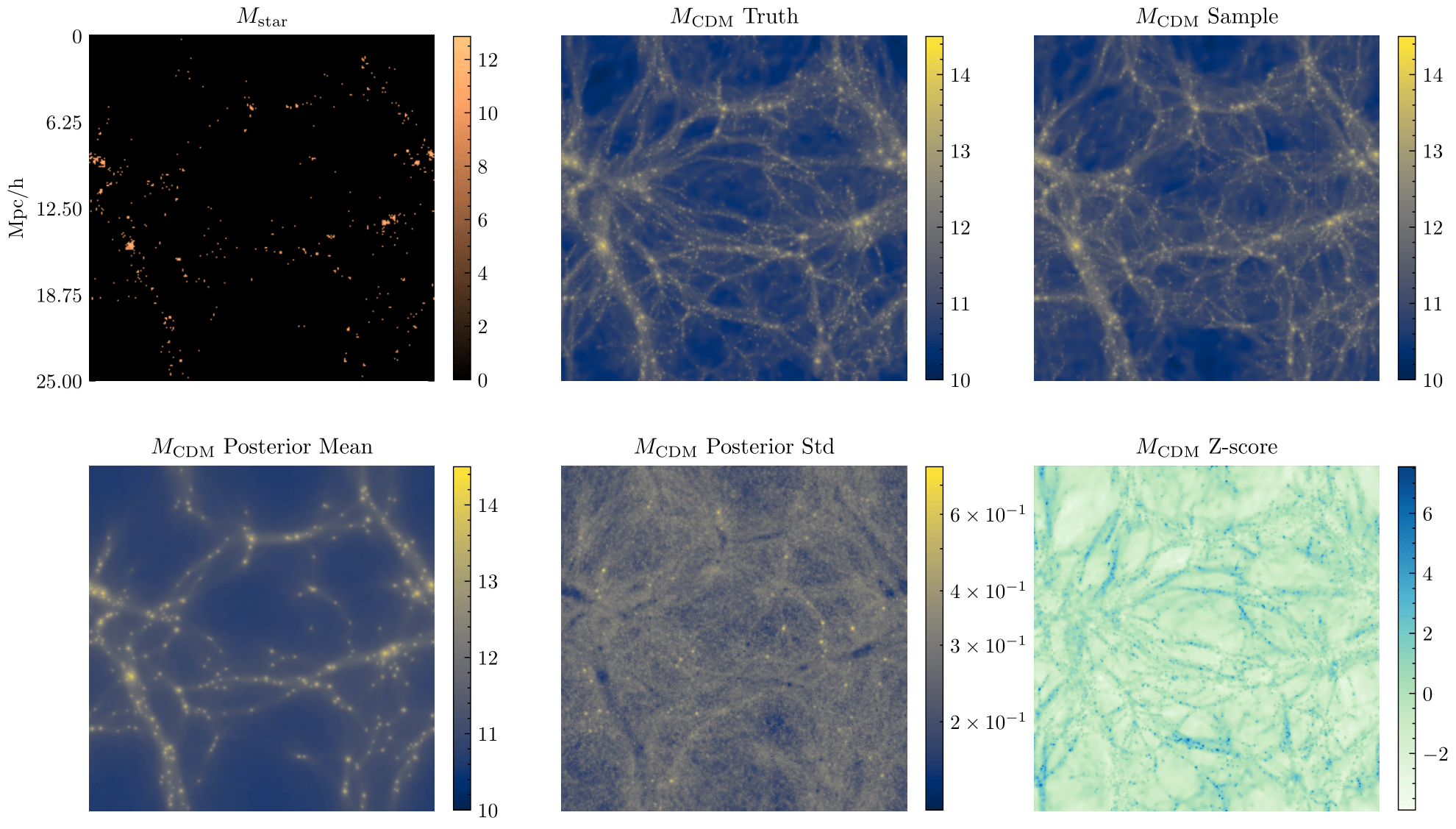

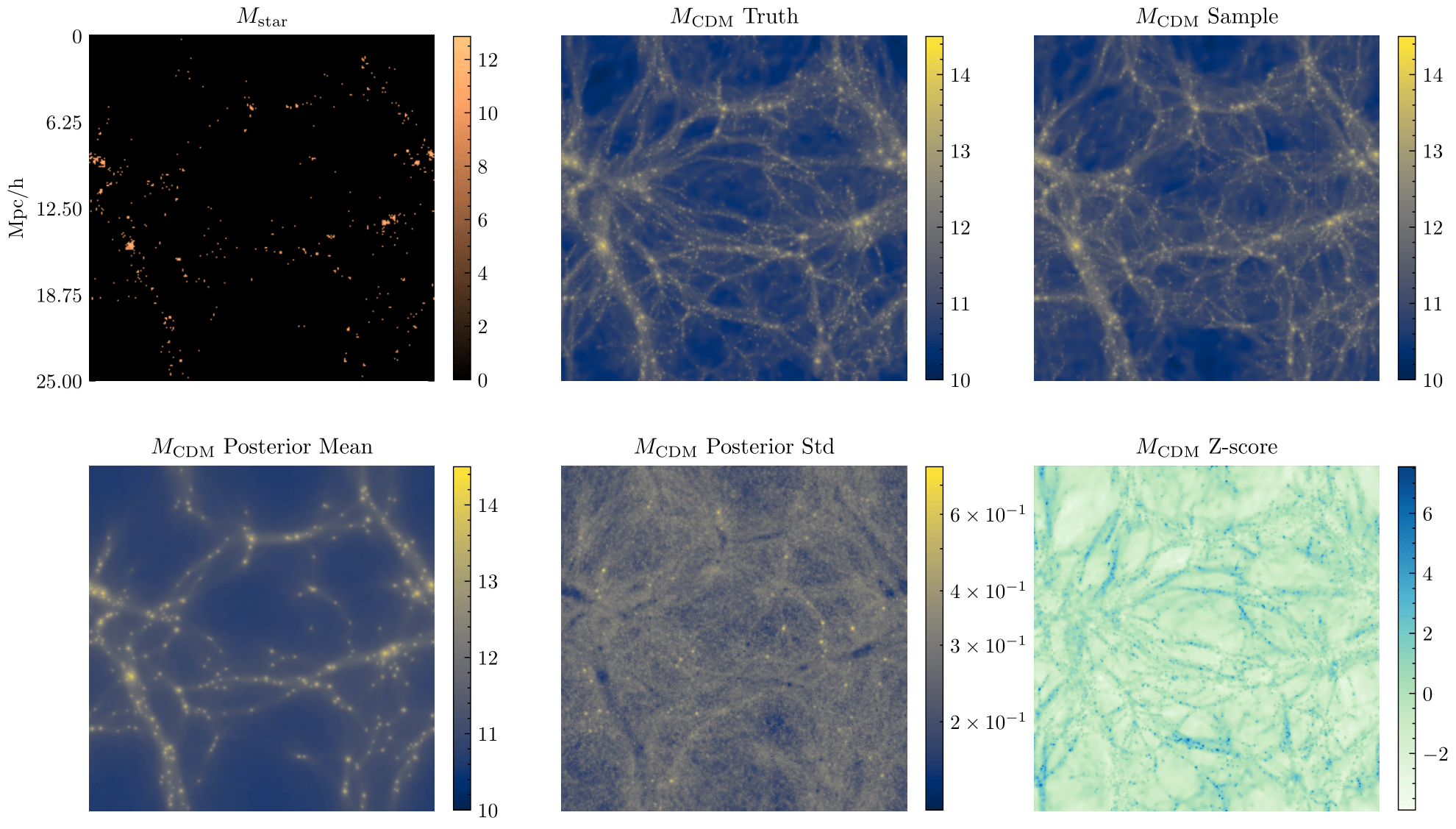

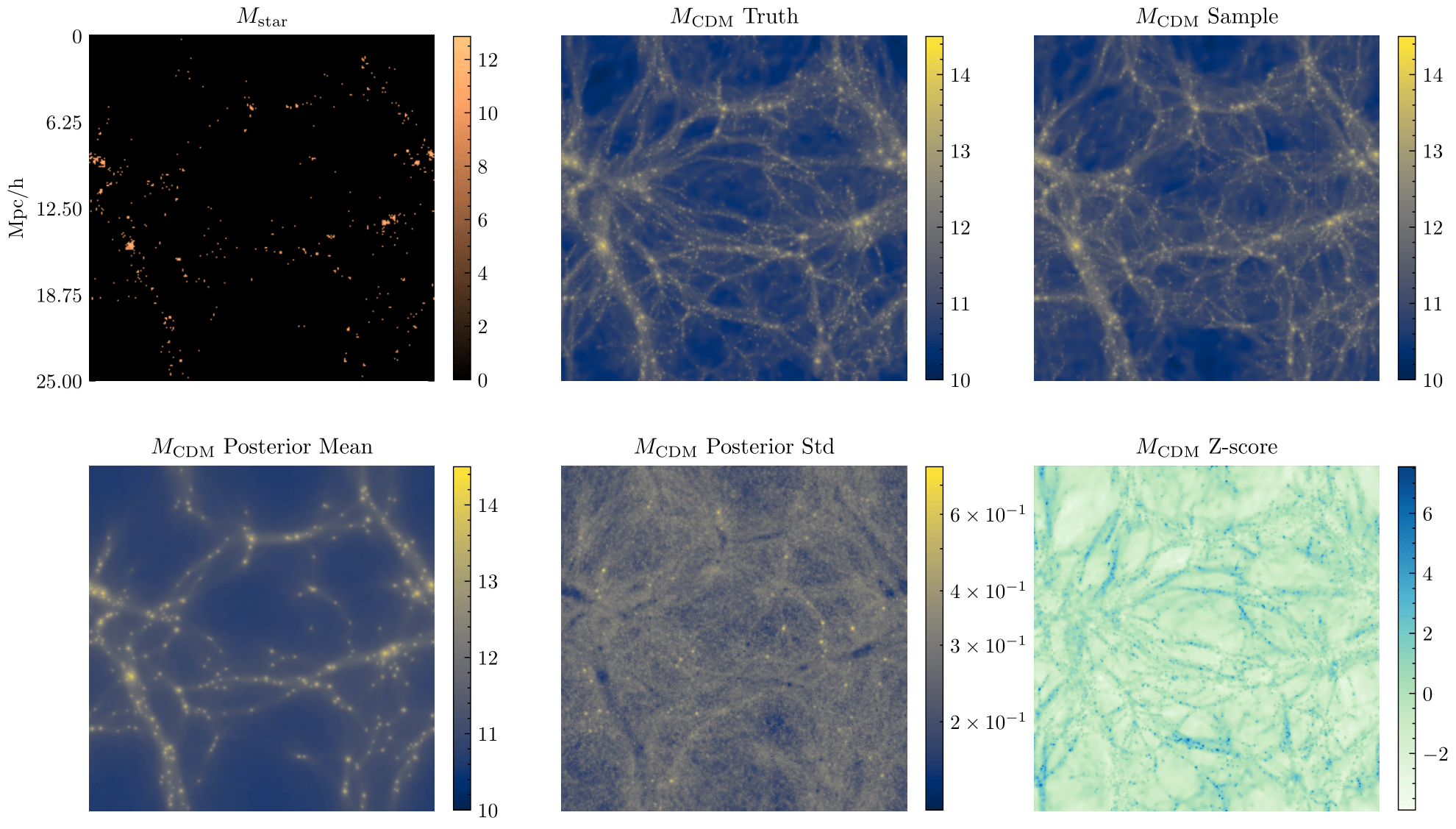

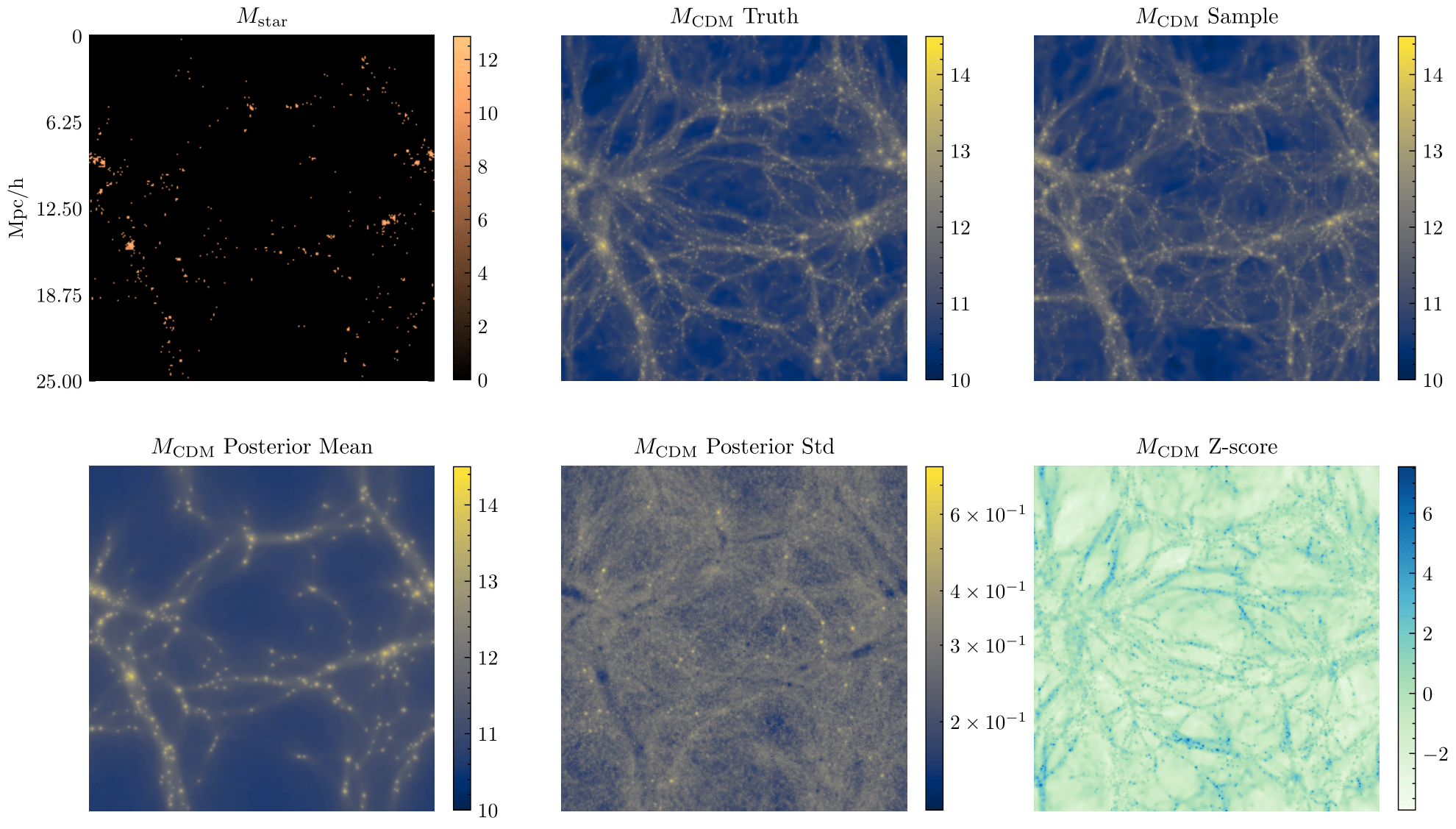

1 to Many:

Galaxies

Dark Matter

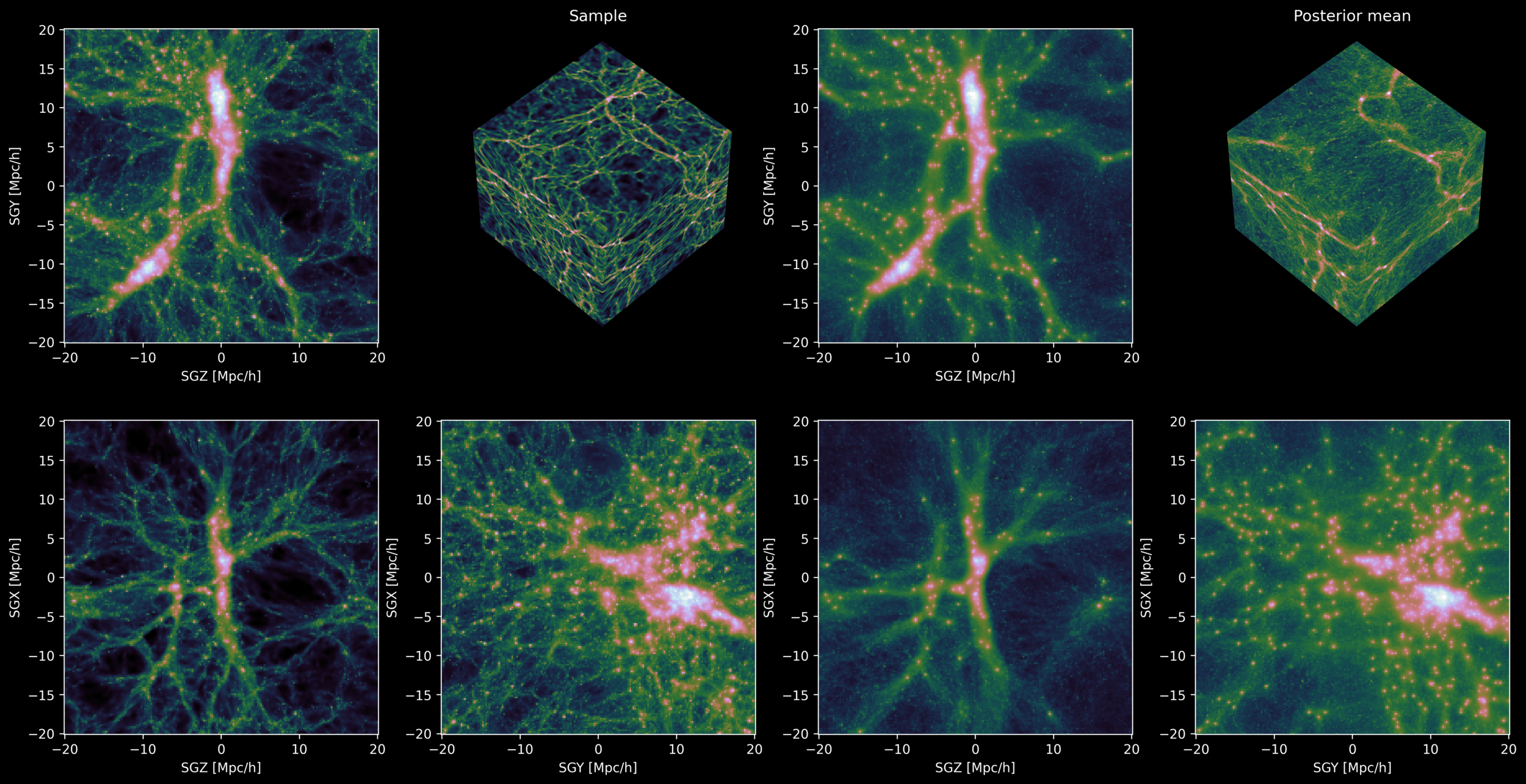

["Debiasing with Diffusion: Probabilistic reconstruction of Dark Matter fields from galaxies"

Ono et al (including Cuesta-Lazaro)

NeurIPS 2024 ML for the Physical Sciences arXiv:2403.10648]

Victoria Ono

Core F. Park

Generative Models for Inverse Problems
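The "1 to many" nature of the problem already shows up in a linear-Gaussian toy where the posterior is available in closed form (a generative model is needed precisely because the real galaxy-to-dark-matter problem is neither linear nor Gaussian). Here a hypothetical forward model only observes pairwise sums of a four-component "dark matter" vector: posterior samples keep the full prior scatter along the unobserved directions, while the posterior mean sets them to zero.

```python
import numpy as np

rng = np.random.default_rng(0)
A = np.array([[1.0, 1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 1.0]])  # hypothetical forward model: observe pairwise sums
noise = 0.1                           # observational noise std
x_true = rng.standard_normal(4)       # "dark matter" drawn from the prior N(0, I)
y = A @ x_true + noise * rng.standard_normal(2)  # "galaxy" observation

# Gaussian prior + linear forward model => Gaussian posterior in closed form.
cov_post = np.linalg.inv(np.eye(4) + A.T @ A / noise**2)
mean_post = cov_post @ A.T @ y / noise**2

# Many different fields are consistent with the same observation.
samples = rng.multivariate_normal(mean_post, cov_post, size=10_000)
```

This is also why a posterior sample and the posterior mean look different: the mean is a smoothed, lower-variance summary, while each sample is a plausible field in its own right.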


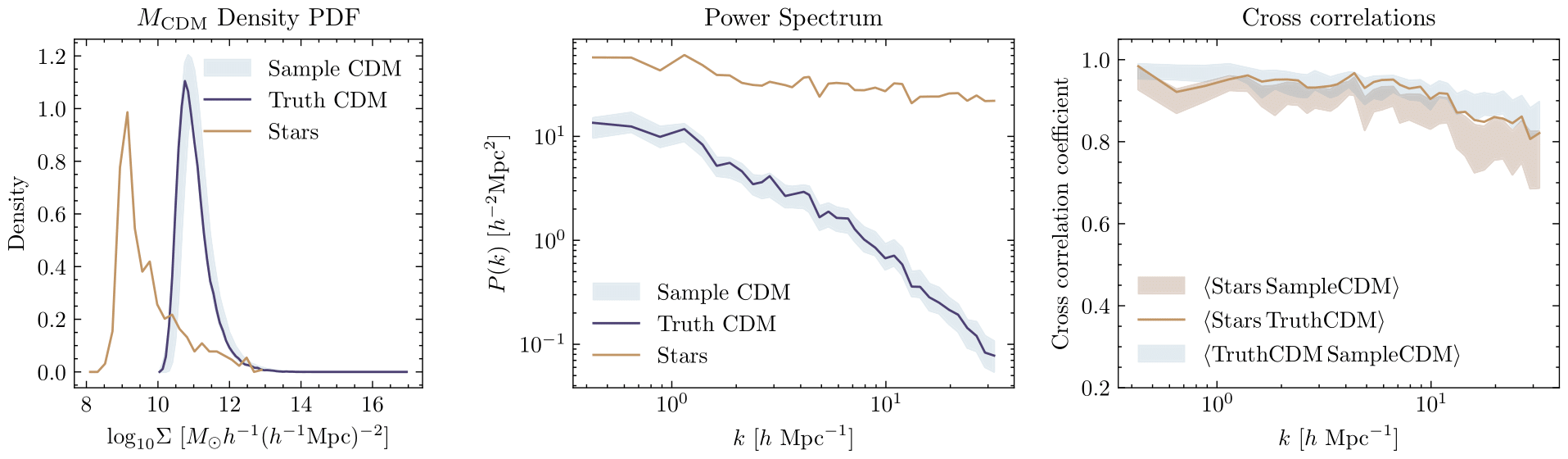

[Figure: truth, observed and sampled fields; power spectrum and cross correlation vs. scale (k), from small to large]
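The two diagnostics in this figure can be computed with FFTs: the (cross) power spectrum averaged in shells of |k|, and the cross-correlation coefficient r(k) = P_ab(k) / sqrt(P_aa(k) P_bb(k)), which shows at which scales a sampled field still tracks the truth. A minimal 2D sketch follows, with toy white-noise fields standing in for the dark matter maps and an unnormalised power spectrum (the binning and field sizes are arbitrary choices).

```python
import numpy as np

def binned_cross_power(a, b, n_bins=16):
    """Isotropically binned cross power spectrum of two periodic 2D fields (arbitrary units)."""
    n = a.shape[0]
    cross = (np.fft.fftn(a) * np.conj(np.fft.fftn(b))).real
    k1d = np.fft.fftfreq(n) * n                  # integer wavenumbers
    kx, ky = np.meshgrid(k1d, k1d, indexing="ij")
    kmag = np.hypot(kx, ky).ravel()
    edges = np.linspace(0.5, n / 2, n_bins + 1)  # skip k = 0, stop at Nyquist
    idx = np.digitize(kmag, edges) - 1
    ok = (idx >= 0) & (idx < n_bins)
    sums = np.bincount(idx[ok], weights=cross.ravel()[ok], minlength=n_bins)
    counts = np.bincount(idx[ok], minlength=n_bins)
    return 0.5 * (edges[1:] + edges[:-1]), sums / counts

def cross_correlation(a, b, n_bins=16):
    """r(k) = P_ab / sqrt(P_aa P_bb); 1 means perfectly correlated at that scale."""
    k, p_ab = binned_cross_power(a, b, n_bins)
    _, p_aa = binned_cross_power(a, a, n_bins)
    _, p_bb = binned_cross_power(b, b, n_bins)
    return k, p_ab / np.sqrt(p_aa * p_bb)

rng = np.random.default_rng(0)
truth = rng.standard_normal((64, 64))                 # toy "true" field
sample = truth + 0.1 * rng.standard_normal((64, 64))  # toy reconstruction
k, r = cross_correlation(truth, sample)
```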


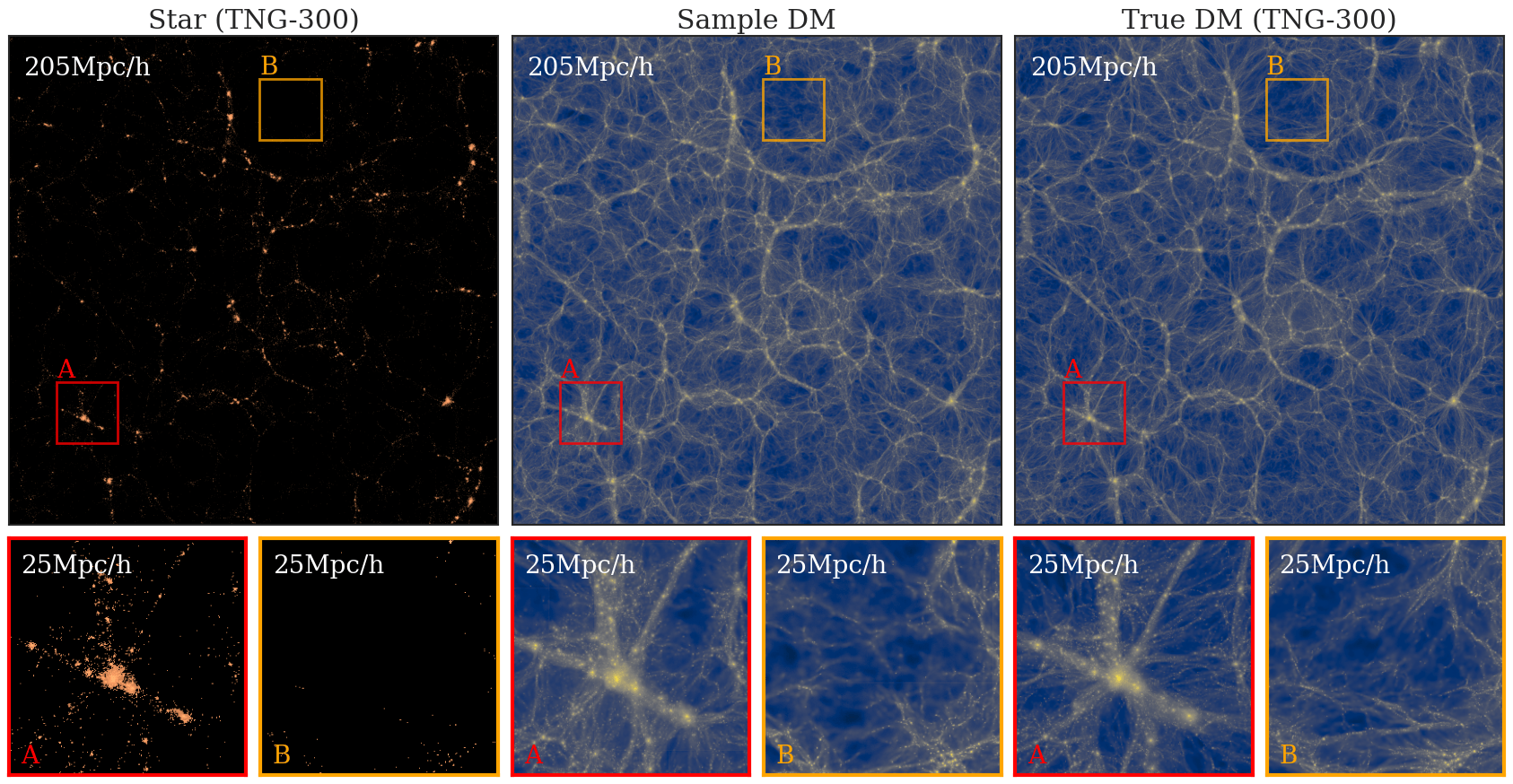

TNG-300

True DM

Sample DM

Size of training simulation

1) Generalising to larger volumes

Model trained on Astrid subgrid model

2) Generalising to subgrid models


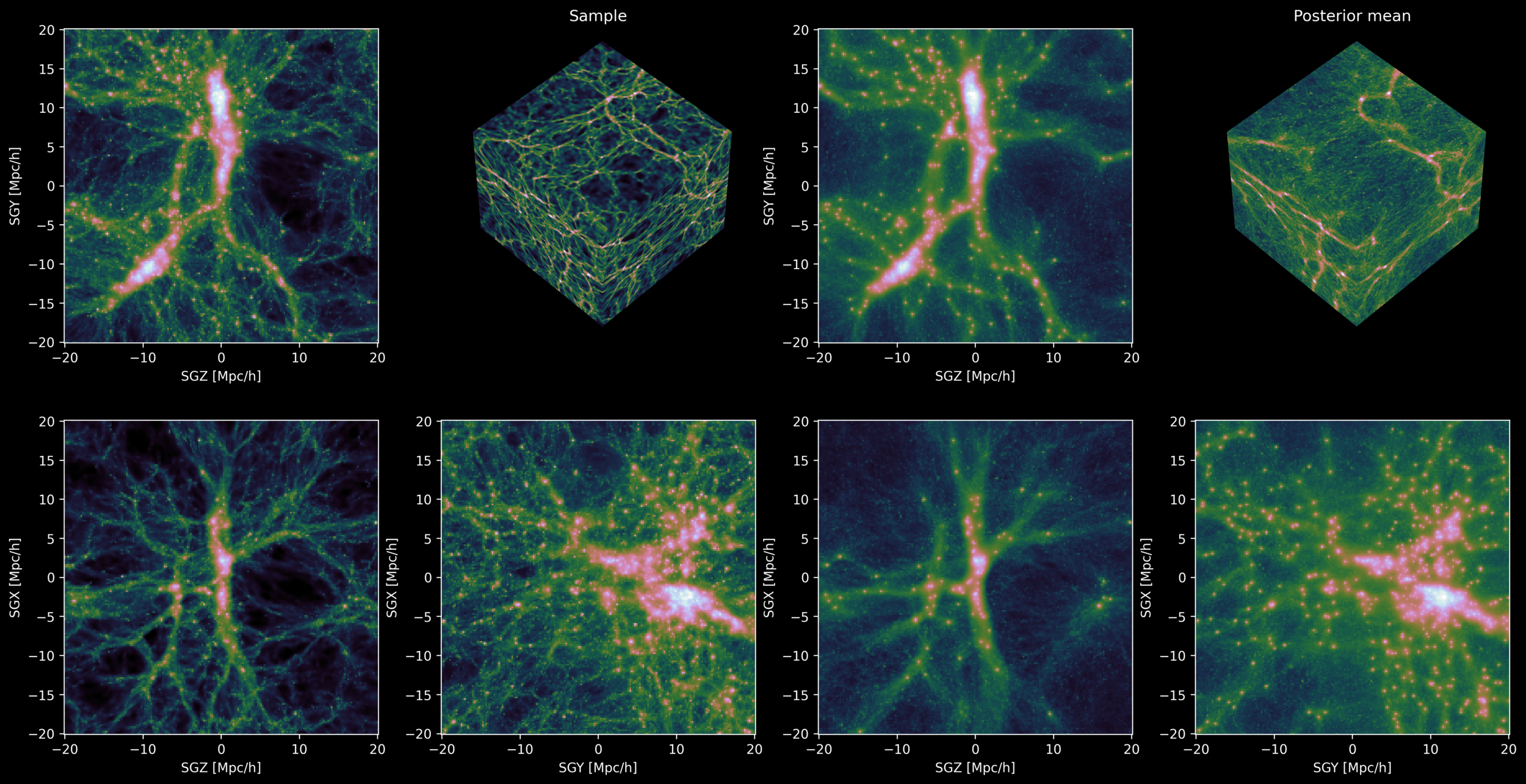

["3D Reconstruction of Dark Matter Fields with Diffusion Models: Towards Application to Galaxy Surveys" Park, Mudur, Cuesta-Lazaro et al ICML 2024 AI for Science]

Posterior Sample

Posterior Mean

Debiasing Cosmic Flows


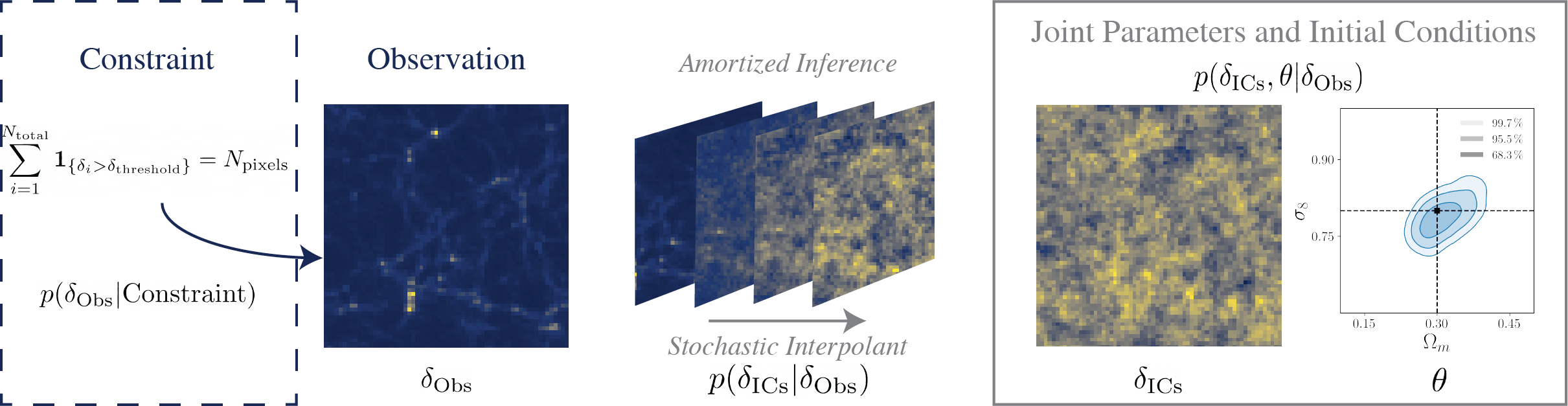

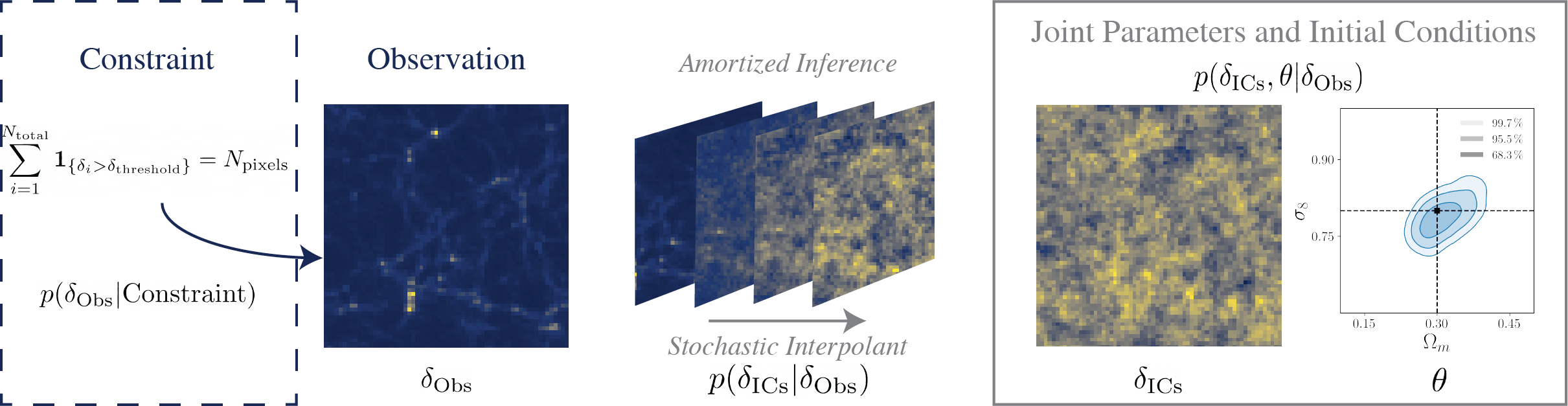

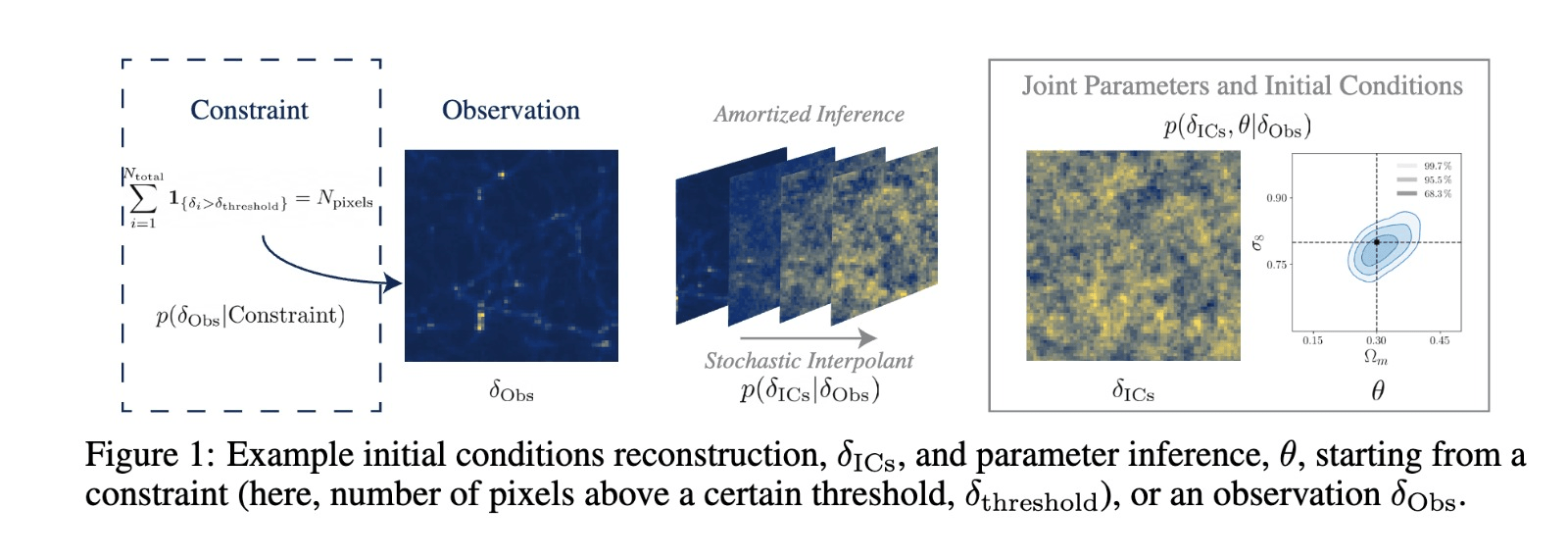

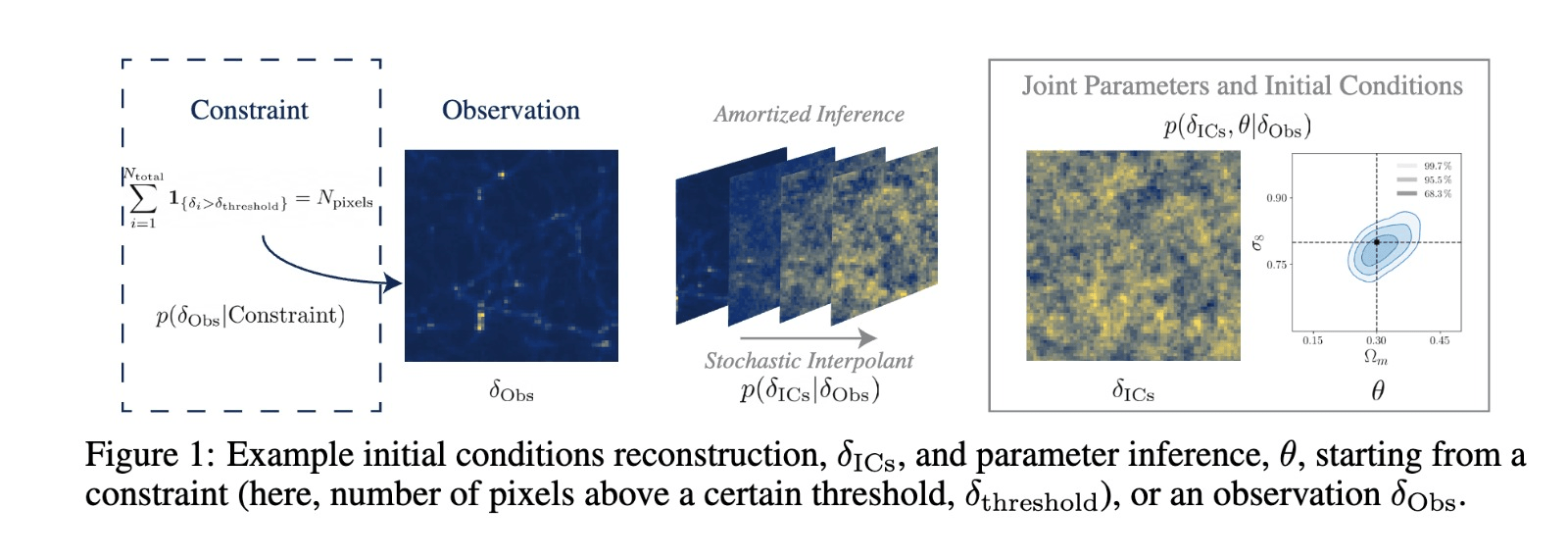

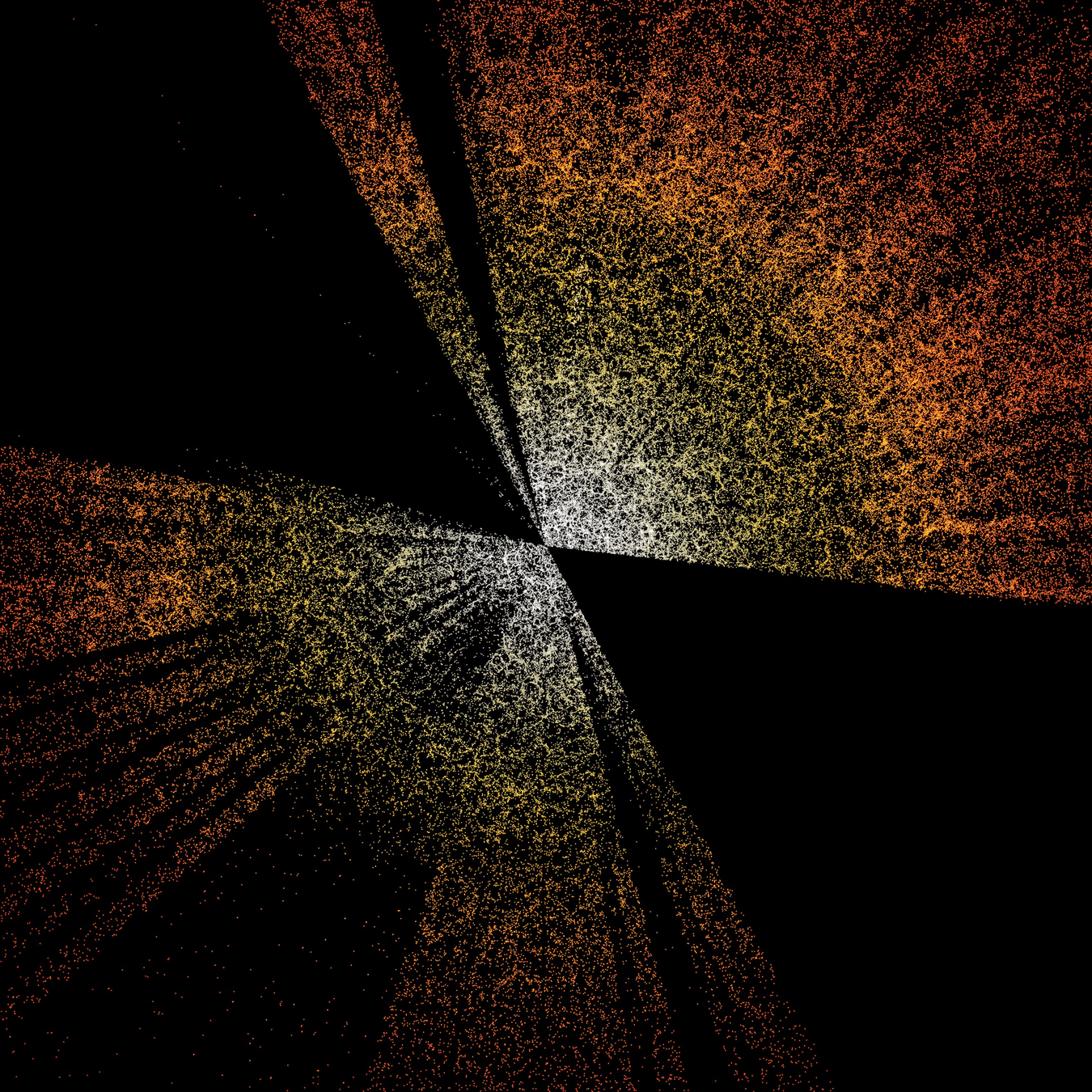

Reconstructing dark matter back in time

Stochastic Interpolants

NF

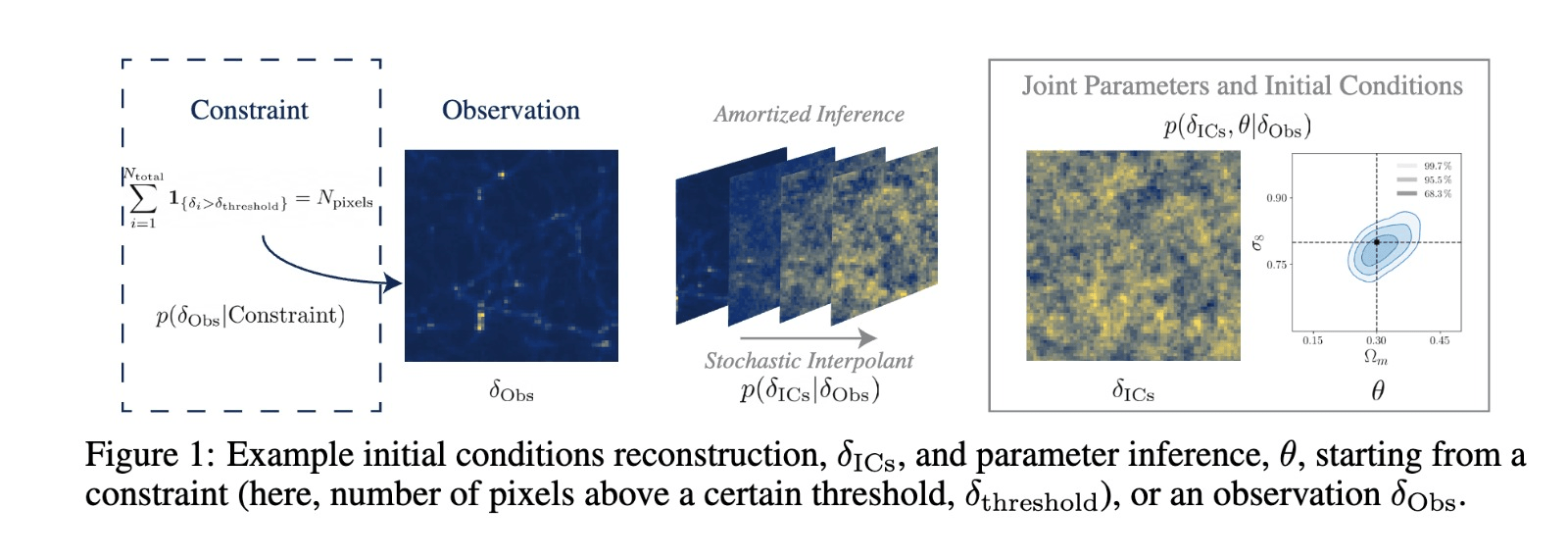

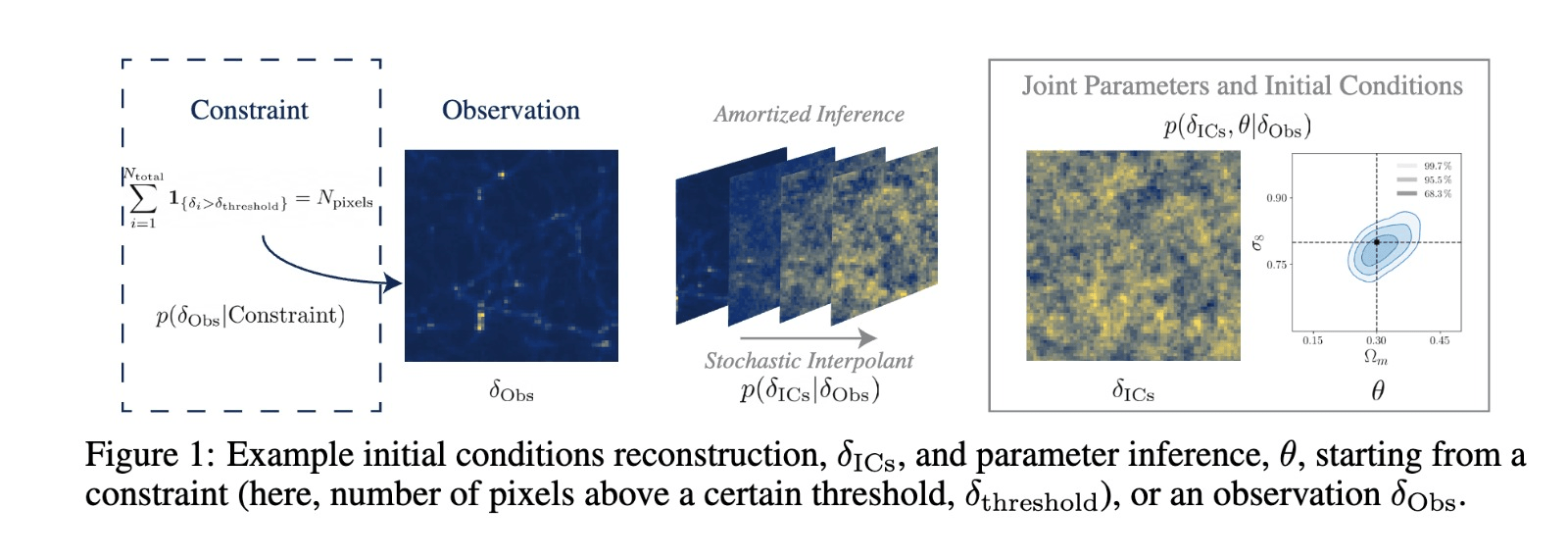

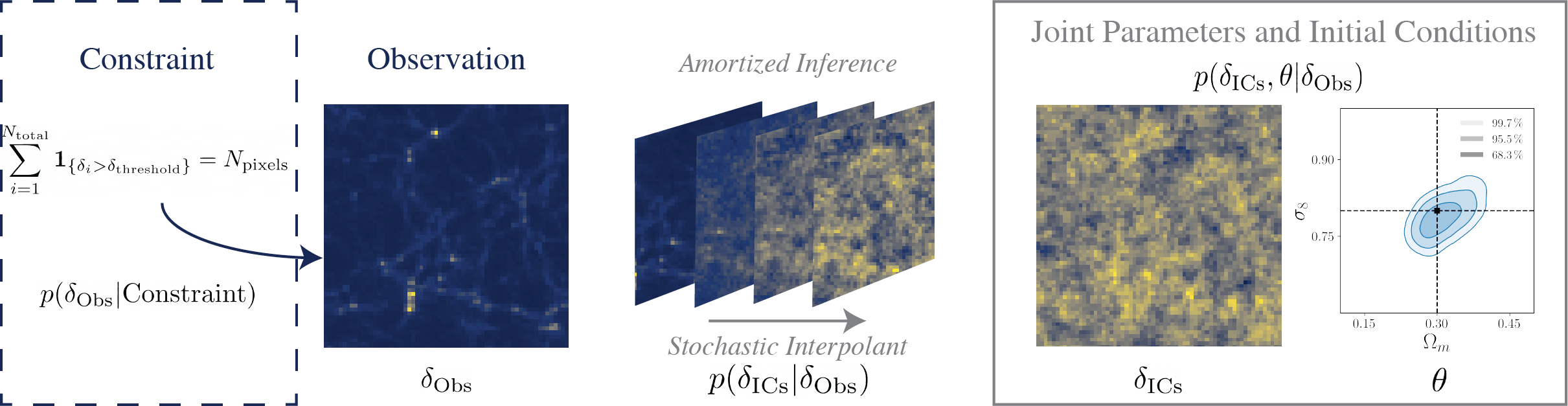

["Joint cosmological parameter inference and initial condition reconstruction with Stochastic Interpolants" Cuesta-Lazaro, Bayer, Albergo et al NeurIPS 2024 ML for the Physical Sciences]


?

["Probabilistic Forecasting with Stochastic Interpolants and Föllmer Processes" Chen et al arXiv:2403.10648 (Figure adapted from arXiv:2407.21097)]

Generative SDE


Guided simulations with fuzzy constraints

Simulate what you need

(and sometimes what you want)


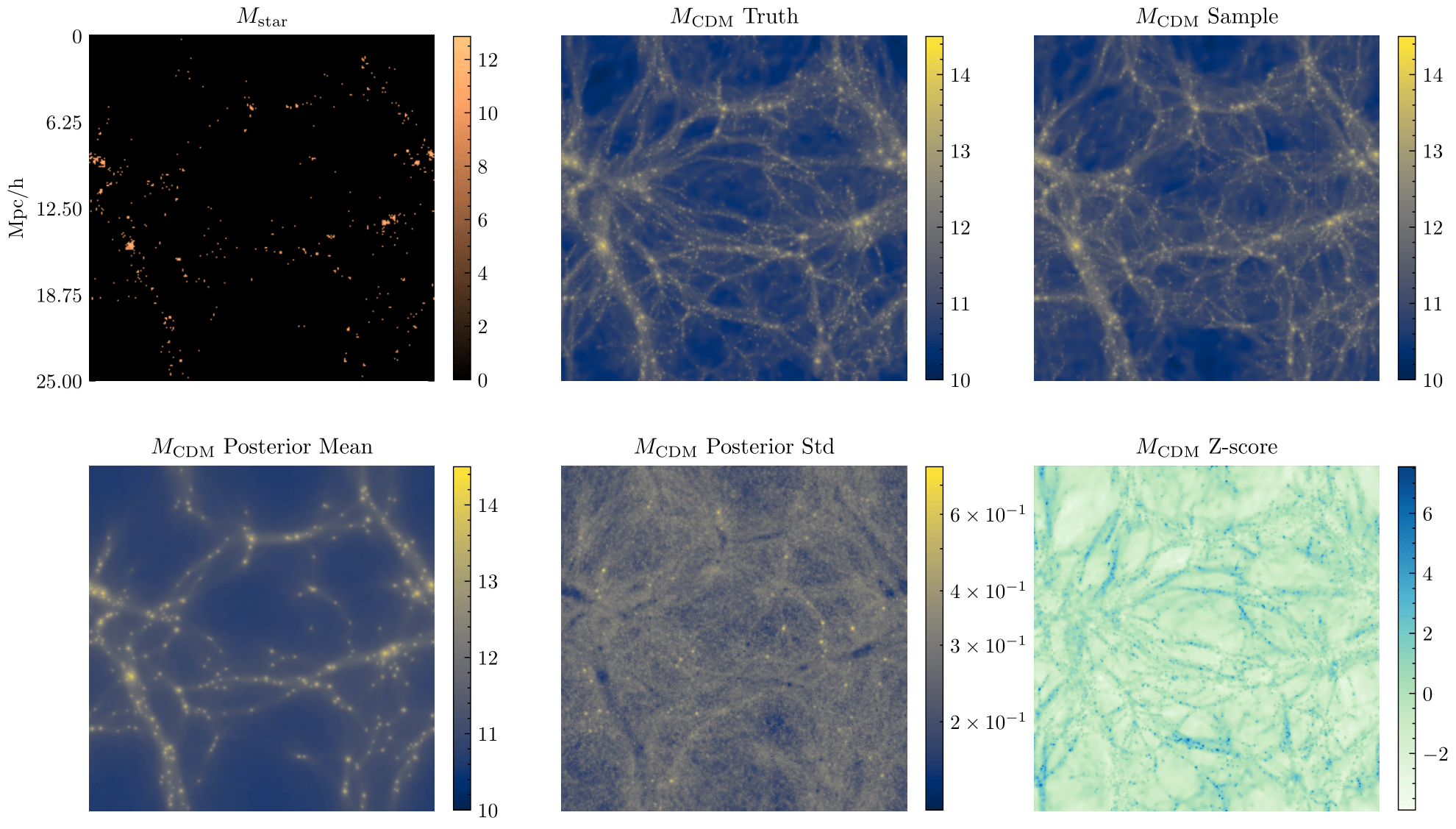

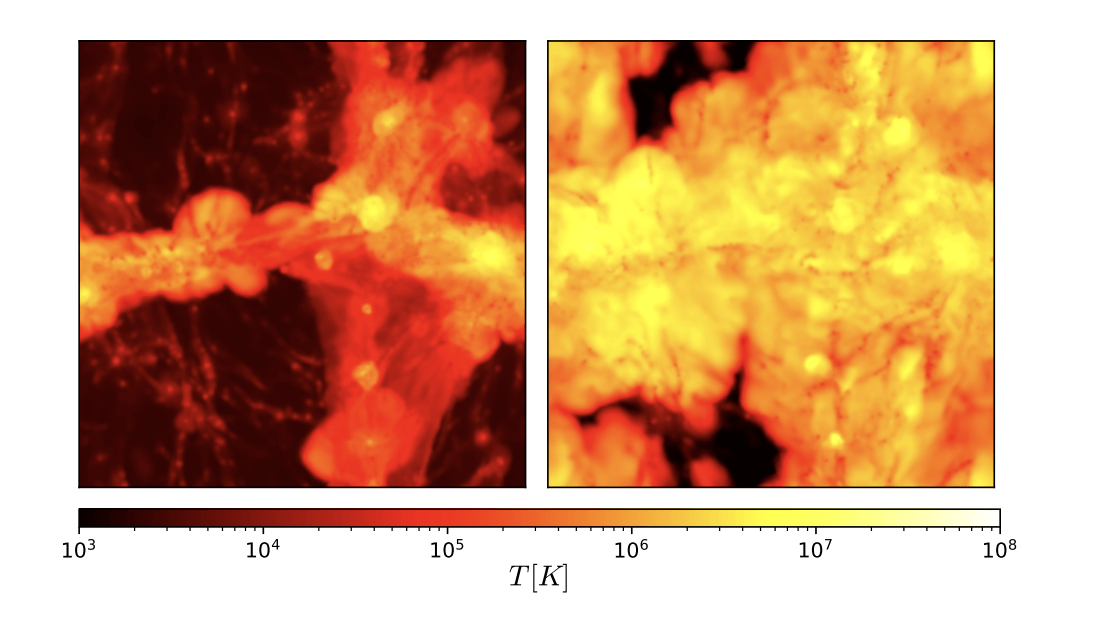

How do we learn what is the robust information?

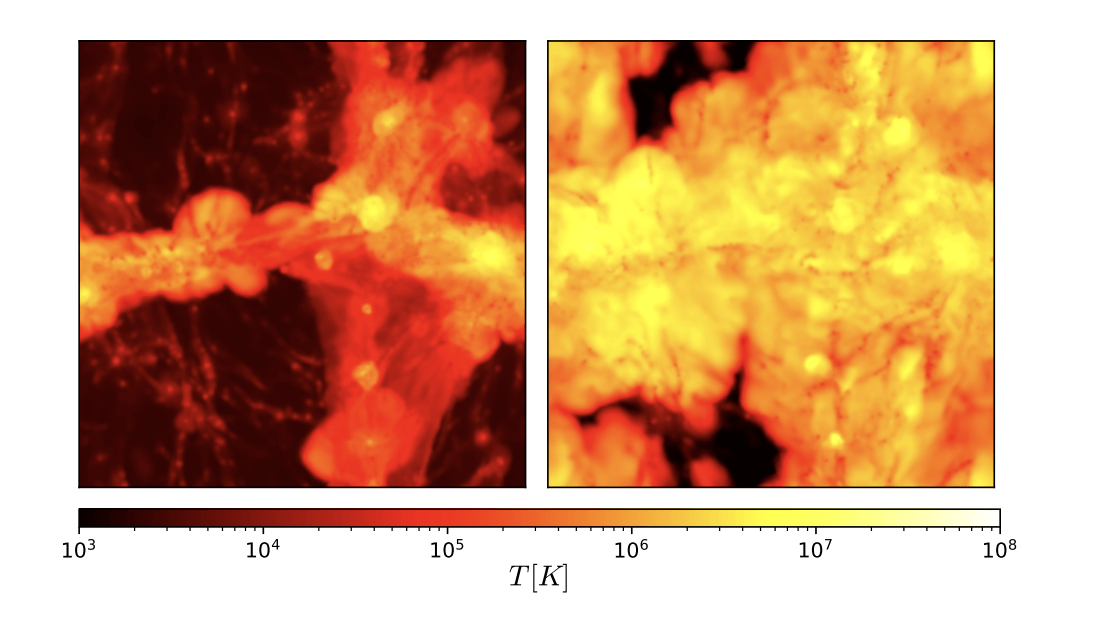

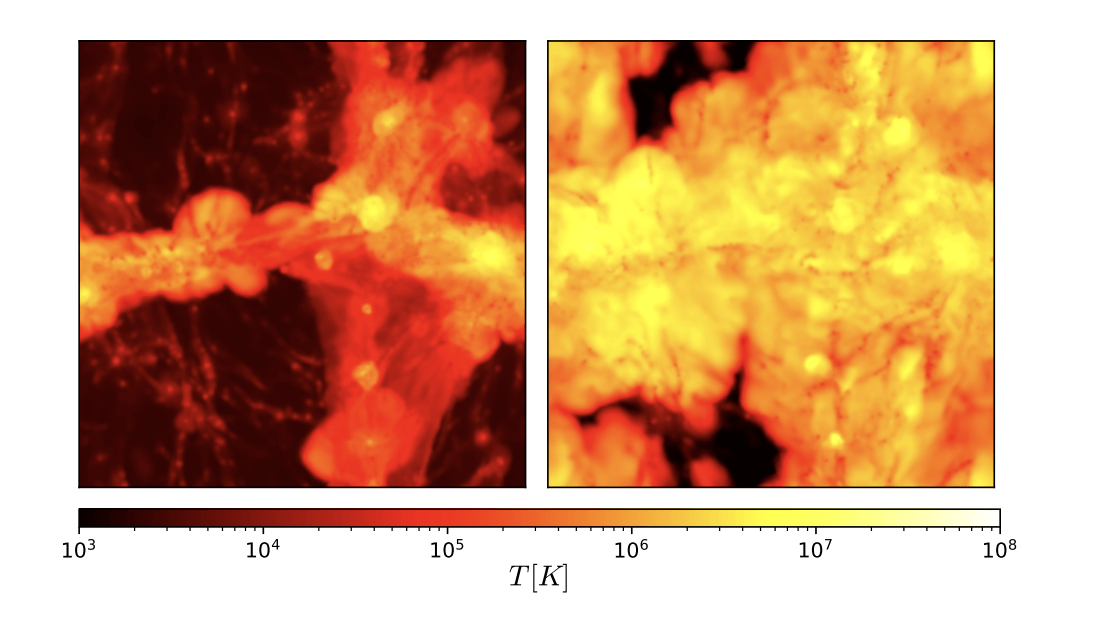

How well can we simulate the Universe?

Simulating dark matter is easy! (if cold!)

"Atoms" are hard :(

N-body Simulations

Hydrodynamics

Can we improve our simulators in a data-driven way?


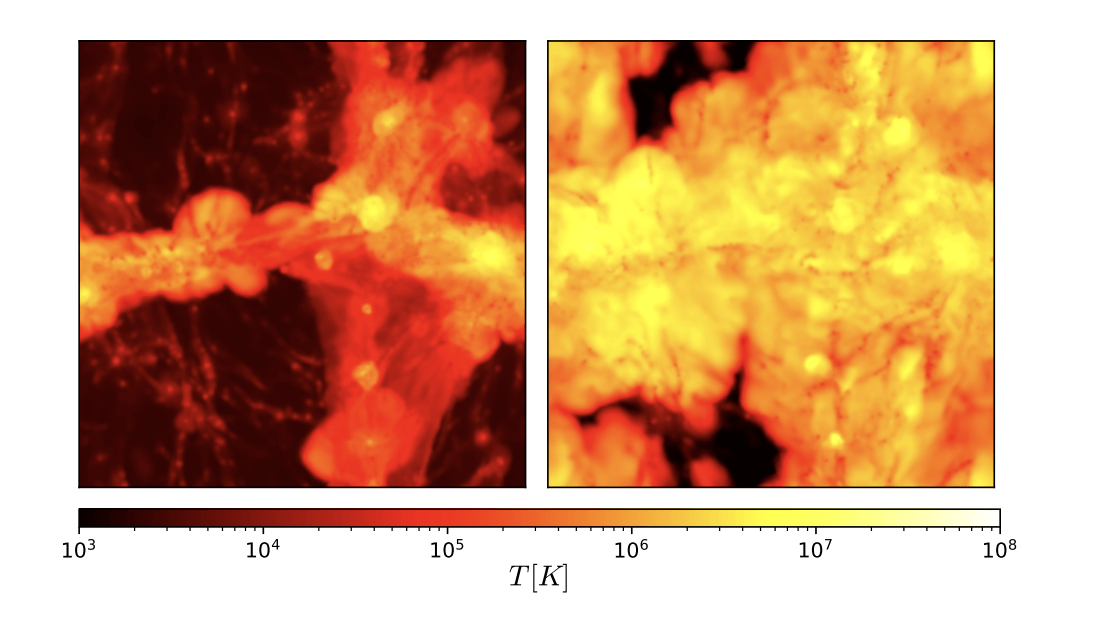

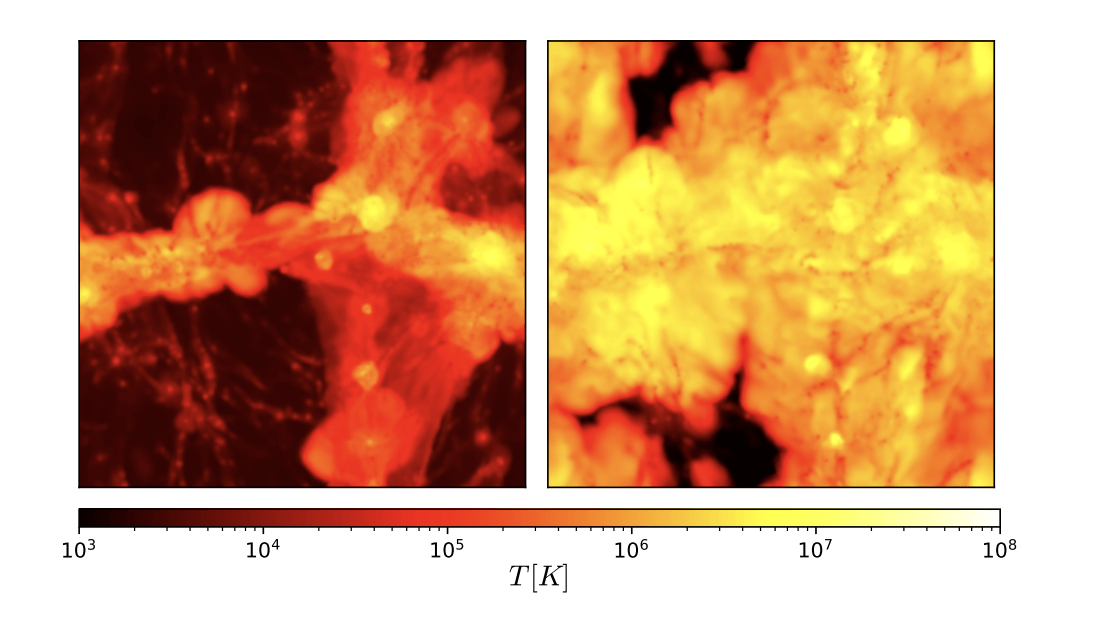

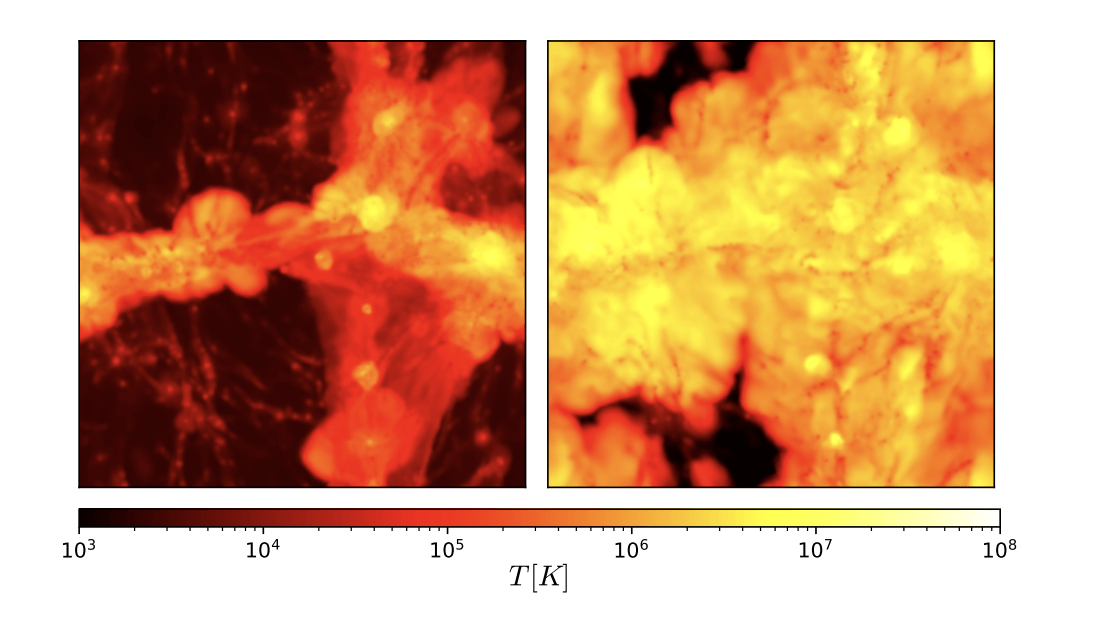

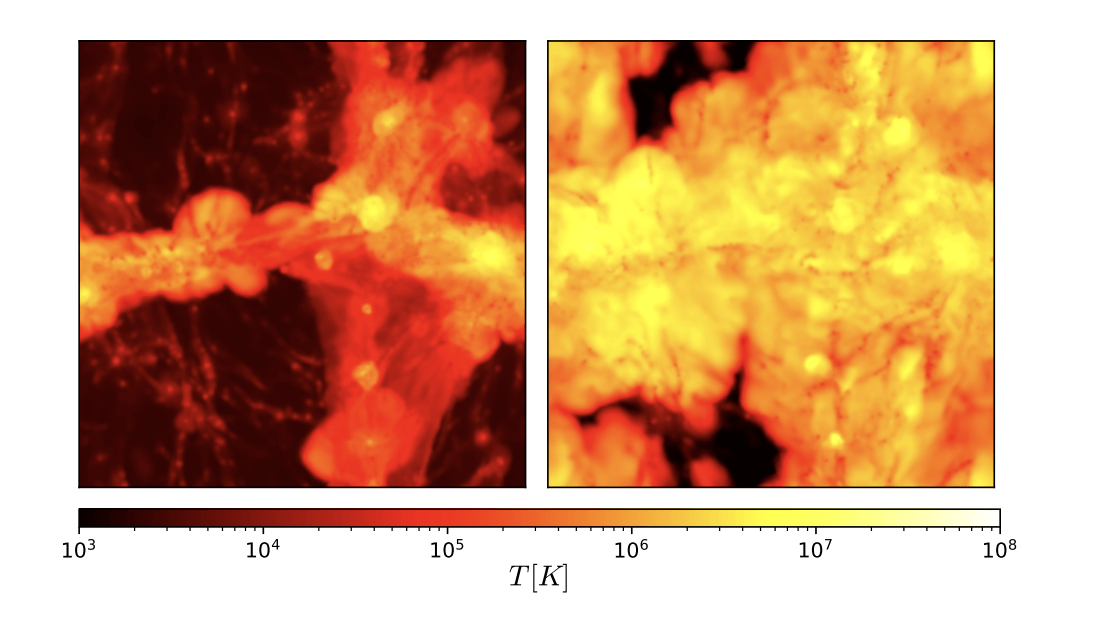

Scales shown: pc, kpc, Mpc, ~Gpc

[Video credit: Francisco Villaescusa-Navarro]

Gas density

Gas temperature
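The zoom in the video spans from ~Gpc down to pc scales. A quick count shows why no simulation resolves all of it uniformly and why sub-grid models are unavoidable. The 1 pc resolution in a 1 Gpc box is an illustrative choice that ignores factors of h and adaptive refinement.

```python
import math

pc_per_gpc = 1e9                # 1 Gpc = 10^9 pc
dynamic_range = pc_per_gpc      # linear range from pc to Gpc scales
cells = dynamic_range**3        # uniform 3D grid resolving 1 pc in a 1 Gpc box
bytes_one_field = cells * 4     # a single float32 field (e.g. gas density)

print(f"{math.log10(dynamic_range):.0f} orders of magnitude in length")
print(f"{cells:.0e} cells, {bytes_one_field:.0e} bytes for one float32 field")
```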


Representation Learning

Informative abstractions of the data

Transfer learning beyond LCDM

Cosmic web Anomaly Detection

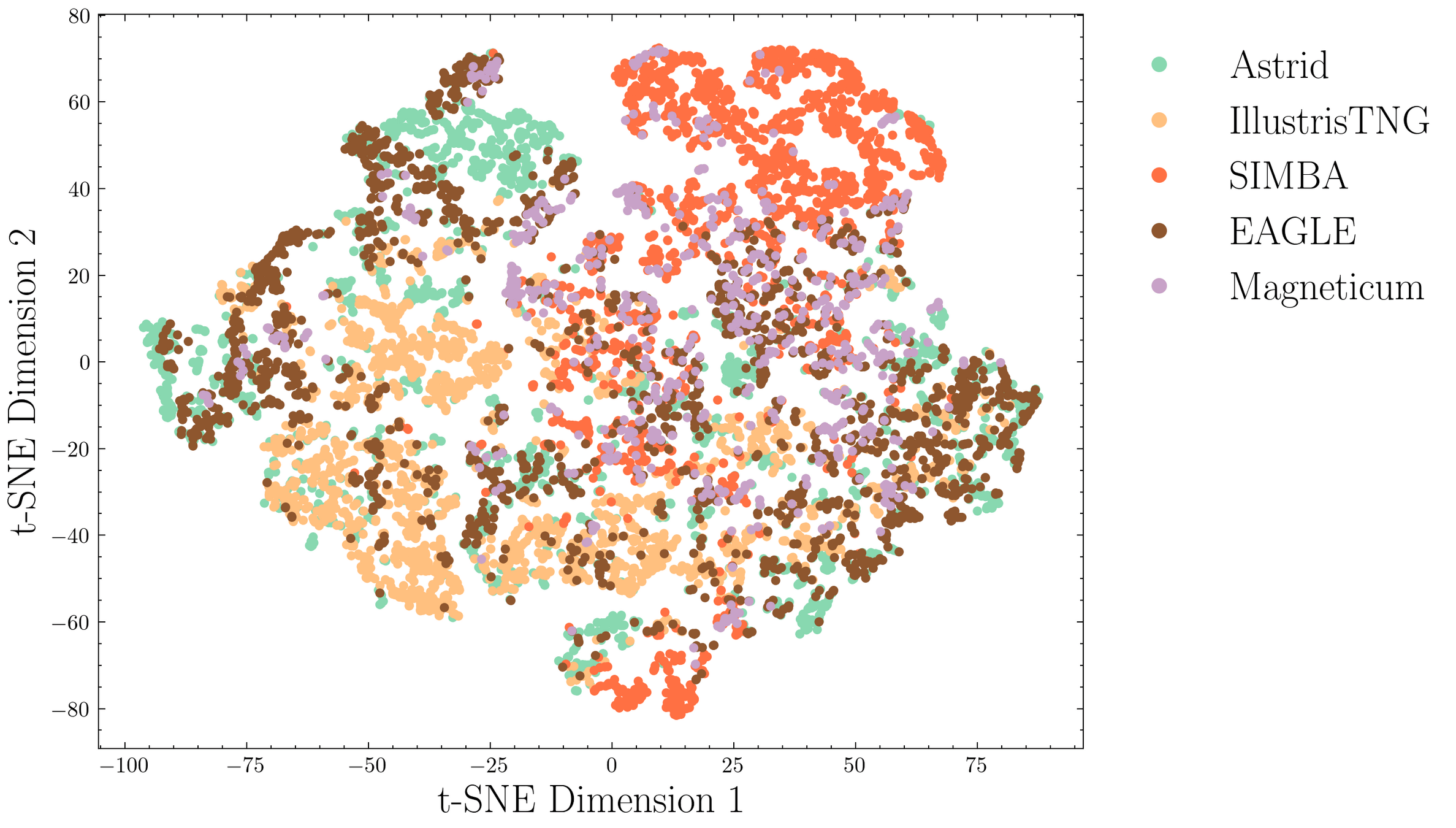

Representing baryonic feedback


What makes a good representation?

General

Predictive

Low dimensional?

Should generalize across scales, systems...

Transfer to unseen conditions

p(x|z)

Simple: Occam's razor

Causal?

Copernican

Ptolemaic


Representation Learning a la gradient descent

Contrastive

Generative

inductive biases

from scratch or from partial observations

Students at MIT are

OVER-CAFFEINATED

NERDS

SMART

ATHLETIC


Simulator 1

Simulator 2

Dark Matter

Feedback

i) Contrastive

Learning the feedback manifold

Baryonic fields

ii) Generative
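For the contrastive route, a common objective is the InfoNCE loss: embeddings of a positive pair should be more similar to each other than to every other element in the batch. The sketch below treats row i from "simulator 1" and row i from "simulator 2" as a positive pair, which is a hypothetical pairing scheme (the talk does not spell out how positives are defined); the embeddings are random vectors standing in for encoder outputs.

```python
import numpy as np

def info_nce(z1, z2, temperature=0.1):
    """InfoNCE loss: row i of z1 and row i of z2 are a positive pair;
    every other row of z2 acts as a negative for row i of z1."""
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature                 # scaled cosine similarities
    logits -= logits.max(axis=1, keepdims=True)      # numerical stability
    log_softmax = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_softmax))            # cross-entropy with diagonal labels

rng = np.random.default_rng(0)
emb_sim1 = rng.standard_normal((8, 32))                     # hypothetical encoder outputs, simulator 1
emb_sim2 = emb_sim1 + 0.05 * rng.standard_normal((8, 32))   # matching inputs, simulator 2
aligned = info_nce(emb_sim1, emb_sim2)
mismatched = info_nce(emb_sim1, np.roll(emb_sim2, 1, axis=0))  # positives shuffled away
```

Minimizing this loss pulls matched pairs together in embedding space, so what survives in the representation is the information the two views share.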


Baryonic fields

Dark Matter

Generative model

Total matter, gas temperature, gas metallicity

Encoder


Parity violation cannot originate from gravity

["Measurements of parity-odd modes in the large-scale 4-point function of SDSS..." Hou, Slepian, Chan arXiv:2206.03625]

["Could sample variance be responsible for the parity-violating signal seen in the BOSS galaxy survey?" Philcox, Ereza arXiv:2401.09523]


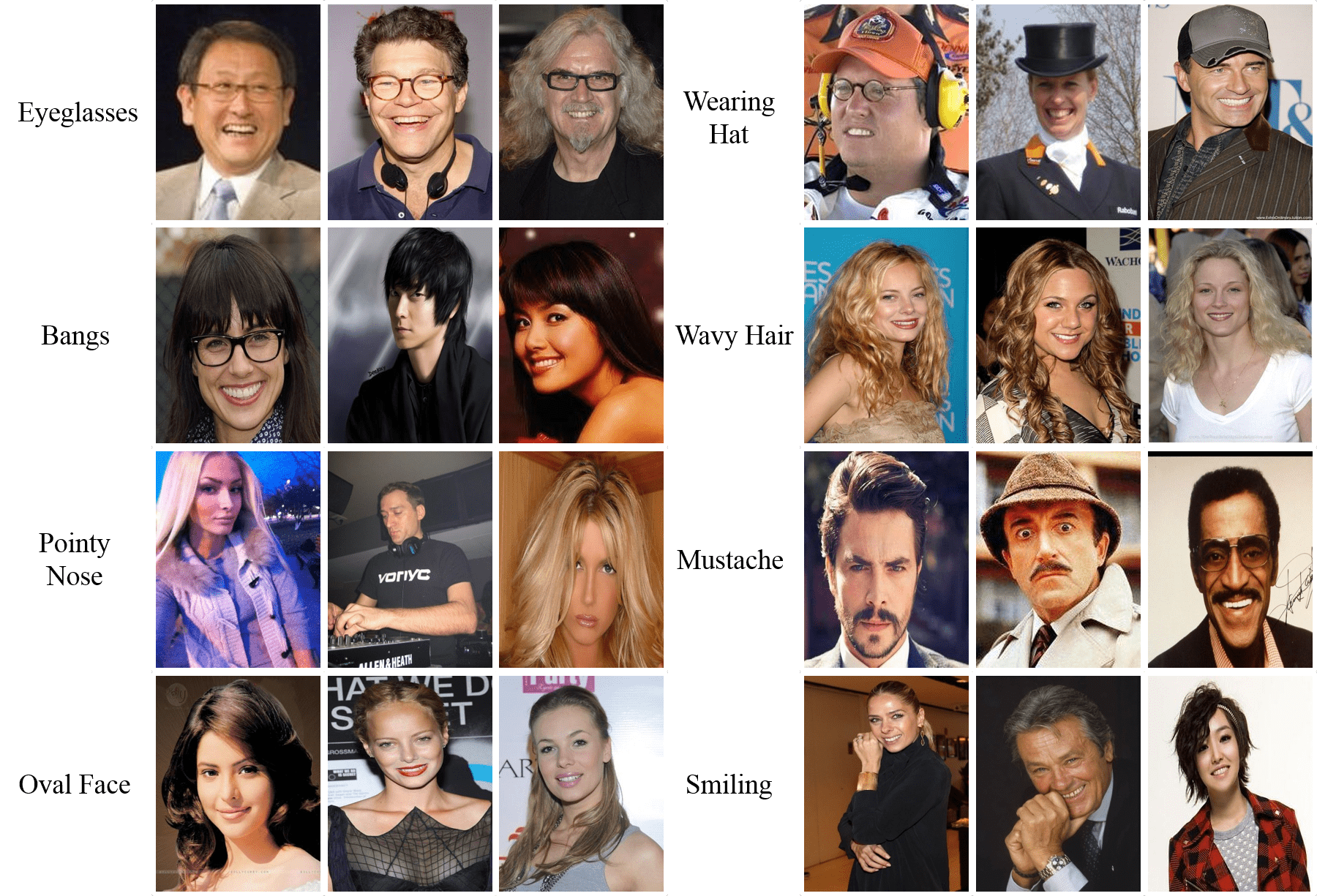

Real or Fake?

x or Mirror x?
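The "x or mirror x?" question is about statistics that are odd under parity. A minimal example is the mean signed volume (triple product) of random tetrahedra drawn from a point set, a simplified, hypothetical stand-in for the parity-odd 4-point function measured in the cited work. It flips sign exactly under a mirror reflection, so for a parity-symmetric process its expectation is zero.

```python
import numpy as np

def mean_signed_volume(points, n_tetra=20_000, seed=0):
    """Average signed volume (triple product / 6) of random tetrahedra of points.

    The triple product is parity-odd: it flips sign under a mirror reflection,
    so a nonzero average signals a preferred handedness.
    """
    rng = np.random.default_rng(seed)
    idx = rng.integers(0, len(points), size=(n_tetra, 4))
    p0, p1, p2, p3 = (points[idx[:, j]] for j in range(4))
    vol = np.einsum("ij,ij->i", p1 - p0, np.cross(p2 - p0, p3 - p0)) / 6.0
    return vol.mean()

rng = np.random.default_rng(1)
x = rng.standard_normal((1_000, 3))        # toy 3D point set
mirror_x = x * np.array([-1.0, 1.0, 1.0])  # reflect through the y-z plane
```

A classifier trained to tell x from its mirror image faces the same difficulty: it can only succeed through parity-odd features, and for a subtle signal those are hard to learn from limited data.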


Train

Test

Me: I can't wait to work with observations

Me working with observations:

Very subtle effect -> hard to find data-efficient architectures


Conclusions

1. There is a lot of information in galaxy surveys that ML methods can access

2. We can tackle high-dimensional inference problems that were previously unattainable

3. Our ability to simulate limits the amount of information we can robustly extract

Hybrid simulators, forward models, robustness

Unsupervised problems: mapping dark matter, constrained simulations... Let's get creative!

Generative Models = Fast emulators + Field-level likelihoods

Symmetries can help reduce the number of training simulations


[Figure: in-distribution vs. out-of-distribution cases, from small to large]


APEC-Seminar2024

By carol cuesta
