Reinforcement learning in Scala

Chris Birchall

Reinforcement learning

Unsupervised learning

Fools say they learn from experience;

I prefer to learn from the experience of others.

Delayed rewards

Environment

Markov Decision Process

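- State machine satisfying the Markov property (what happens next depends only on the current state and action, not on the earlier history)
- Defines two functions:
  - Given the current state and an action, what is the next state?
  - Given the current state, action and next state, what is the reward?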

Environment.scala

type Reward = Double

trait Environment[State, Action] {
  // Apply an action in the current state; return the next state and the reward received
  def step(
    currentState: State,
    actionTaken: Action
  ): (State, Reward)

  // Does the episode end when this state is reached?
  def isTerminal(state: State): Boolean
}

Agent

At every time step t, the agent:

- knows what state it is currently in

- chooses an action to take

- is told the new state, and what reward it received

- learns something!

AgentBehaviour.scala

type Reward = Double

// What the agent is told after taking an action
case class ActionResult[State](reward: Reward,
                               nextState: State)

trait AgentBehaviour[AgentData, State, Action] {
  // Choose an action, and return a function that produces the agent's
  // updated data once the result of that action is known
  def chooseAction(
    agentData: AgentData,
    state: State,
    validActions: List[Action]
  ): (Action, ActionResult[State] => AgentData)
}

Runner

- Start with initial agent data and state
- At every time step:
  - Ask the agent to choose an action
  - Tell the environment, which will return the new state and a reward
  - Tell these to the agent, which will return an improved version of itself
  - (Update the UI)

Runner

var agentData = initialAgentData
var currentState = initialState

def step(): Unit = {
  // Ask the agent to choose an action
  val (nextAction, updateAgent) =
    agentBehaviour.chooseAction(agentData, currentState, ...)
  // Tell the environment, which returns the new state and a reward
  val (nextState, reward) =
    env.step(currentState, nextAction)
  // Tell these to the agent, which returns an improved version of itself
  agentData = updateAgent(ActionResult(reward, nextState))
  currentState = nextState
  updateUI(agentData, currentState)
}
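To see the runner loop end to end, here is a self-contained sketch that drives a trivial agent until the environment reaches a terminal state. The Corridor environment, the RandomAgent and the runEpisode helper are hypothetical stand-ins for the talk's demo code (the UI update is left out), and the traits are restated so the block compiles on its own.

```scala
import scala.util.Random

object RunnerSketch {
  type Reward = Double

  case class ActionResult[State](reward: Reward, nextState: State)

  trait Environment[State, Action] {
    def step(currentState: State, actionTaken: Action): (State, Reward)
    def isTerminal(state: State): Boolean
  }

  trait AgentBehaviour[AgentData, State, Action] {
    def chooseAction(
      agentData: AgentData,
      state: State,
      validActions: List[Action]
    ): (Action, ActionResult[State] => AgentData)
  }

  // Hypothetical environment: positions 0..5 on a line, 5 is terminal.
  // Actions are -1 or +1 and every step costs -1.
  object Corridor extends Environment[Int, Int] {
    def step(currentState: Int, actionTaken: Int): (Int, Reward) =
      (math.max(0, math.min(5, currentState + actionTaken)), -1.0)
    def isTerminal(state: Int): Boolean = state == 5
  }

  // Hypothetical agent that acts at random; its "data" is just a step counter
  final class RandomAgent(rng: Random) extends AgentBehaviour[Int, Int, Int] {
    def chooseAction(agentData: Int, state: Int, validActions: List[Int]): (Int, ActionResult[Int] => Int) = {
      val action = validActions(rng.nextInt(validActions.size))
      val countStep: ActionResult[Int] => Int = _ => agentData + 1
      (action, countStep)
    }
  }

  // One episode: choose an action, step the environment, update the agent, repeat
  def runEpisode[D, S, A](
    env: Environment[S, A],
    agent: AgentBehaviour[D, S, A],
    initialAgentData: D,
    initialState: S,
    validActions: List[A]
  ): (D, S, Reward) = {
    var agentData = initialAgentData
    var currentState = initialState
    var totalReward = 0.0
    while (!env.isTerminal(currentState)) {
      val (nextAction, updateAgent) = agent.chooseAction(agentData, currentState, validActions)
      val (nextState, reward) = env.step(currentState, nextAction)
      agentData = updateAgent(ActionResult(reward, nextState))
      currentState = nextState
      totalReward += reward
    }
    (agentData, currentState, totalReward)
  }
}
```

Because the agent returns its own update function, the loop never needs to know what kind of data the agent keeps; swapping RandomAgent for a learning agent leaves runEpisode unchanged.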

DEMO

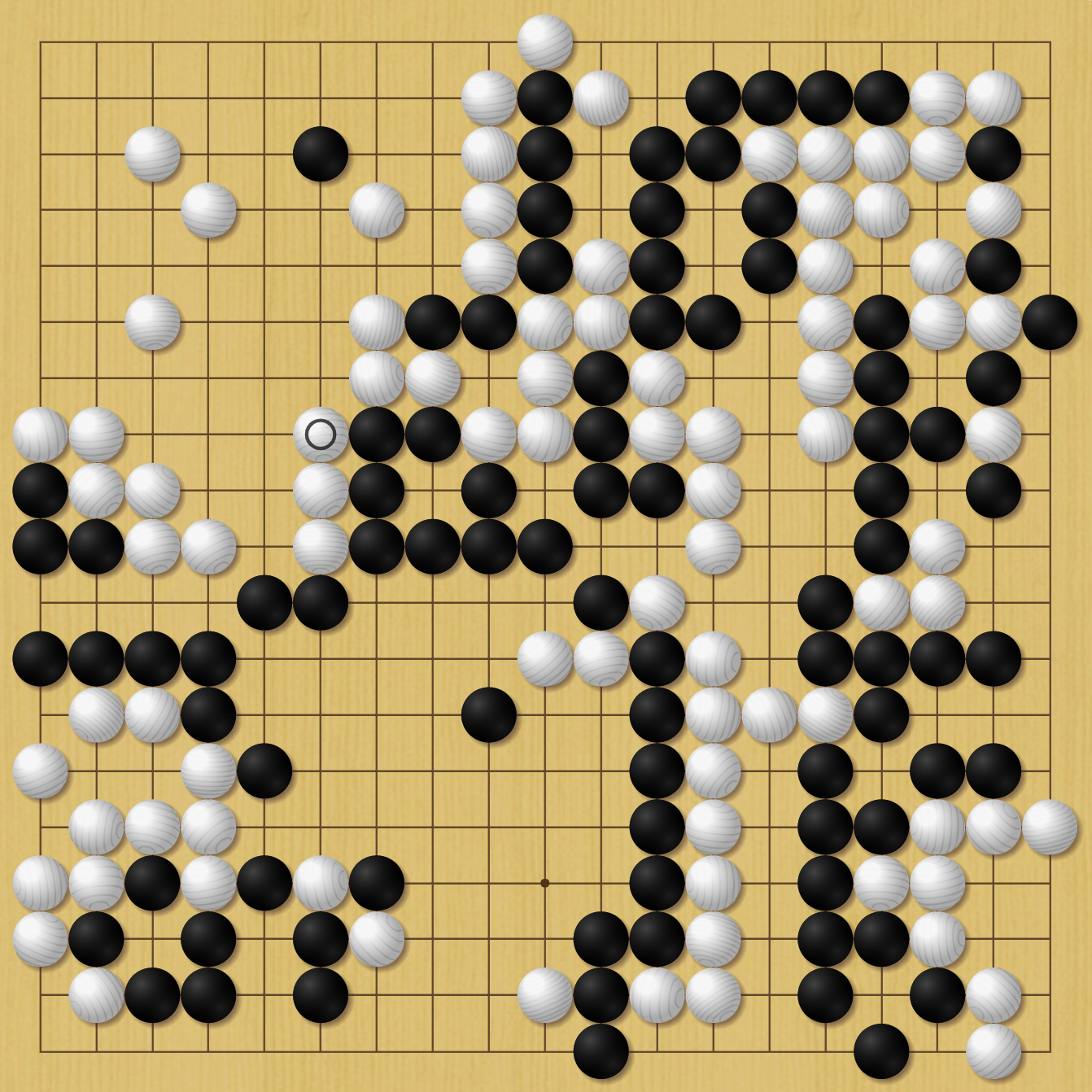

Gridworld
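The demo's gridworld rules are not given in these slides. As a rough illustration of how a gridworld can implement the Environment trait, here is a minimal sketch; the Cell and Move types, the -1 reward per move, the wall behaviour and the single goal cell are all assumptions.

```scala
object GridWorldSketch {
  type Reward = Double

  trait Environment[State, Action] {
    def step(currentState: State, actionTaken: Action): (State, Reward)
    def isTerminal(state: State): Boolean
  }

  // Hypothetical action type for a grid agent
  sealed trait Move
  case object MoveLeft extends Move
  case object MoveRight extends Move
  case object MoveUp extends Move
  case object MoveDown extends Move

  // A cell on the grid: (column, row)
  final case class Cell(x: Int, y: Int)

  // Every move costs -1; moves off the grid leave the agent where it is;
  // reaching the goal cell ends the episode
  final class GridWorld(width: Int, height: Int, goal: Cell) extends Environment[Cell, Move] {
    def step(currentState: Cell, actionTaken: Move): (Cell, Reward) = {
      val Cell(x, y) = currentState
      val candidate = actionTaken match {
        case MoveLeft  => Cell(x - 1, y)
        case MoveRight => Cell(x + 1, y)
        case MoveUp    => Cell(x, y - 1)
        case MoveDown  => Cell(x, y + 1)
      }
      val inBounds =
        candidate.x >= 0 && candidate.x < width && candidate.y >= 0 && candidate.y < height
      (if (inBounds) candidate else currentState, -1.0)
    }

    def isTerminal(state: Cell): Boolean = state == goal
  }
}
```

A constant negative reward per move means the agent maximises its return by reaching the goal in as few moves as possible.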

How does it learn?

- State-action values

- Policies

- Prediction and control

- Model-free vs model-driven

- Exploitation vs exploration

- Bootstrapping

State-action values

For each state s (e.g. the agent is in cell (1, 2) on the grid) and each action a (e.g. "move left"),

Q(s, a) = estimate of the value of being in state s and taking action a

(Q*(s, a) = the optimal value: the value of taking action a in state s and acting optimally from then on)

Value?

The expected total return: the sum of all rewards received from that point onward (usually discounted, so that later rewards count for less)
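A common way to make "total return" precise is to discount each later reward by a factor γ per time step, so G = r1 + γ·r2 + γ²·r3 + ... A minimal sketch, with discountedReturn as a hypothetical helper name:

```scala
object ReturnSketch {
  // Discounted return of a finite reward sequence, computed right to left
  // using G_t = r_(t+1) + γ·G_(t+1)
  def discountedReturn(rewards: List[Double], gamma: Double): Double =
    rewards.foldRight(0.0)((reward, restOfReturn) => reward + gamma * restOfReturn)
}
```

For example, three rewards of 1.0 with γ = 0.5 give 1 + 0.5 + 0.25 = 1.75.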

Policy

Agent needs a policy:

"If I'm in some state s, what action should I take?"

If we have a state-action value function Q(s, a), then making a policy is trivial:

If I'm in state s, choose the action a with the highest Q(s, a)

My Super Awesome Policy

| (state, action) | Q(s, a) |
|---|---|
| ((1, 1), Move Left) | 1.4 |
| ((1, 1), Move Right) | 9.3 |
| ((1, 1), Move Up) | 2.2 |
| ((1, 1), Move Down) | 3.7 |

"If I'm in state (1, 1), I should move right"
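The greedy policy read off a Q table like the one above fits in a single function. A minimal sketch, assuming Q is stored as nested maps (the same shape the QLearning agent data uses later); greedyAction is a hypothetical name:

```scala
object GreedyPolicySketch {
  // Greedy policy: in state s, pick the action with the highest Q(s, a).
  // Returns None if there are no estimates for this state yet.
  def greedyAction[State, Action](q: Map[State, Map[Action, Double]], state: State): Option[Action] =
    q.get(state).filter(_.nonEmpty).map(_.maxBy(_._2)._1)
}
```

With the table above, greedyAction returns Some("Move Right") for state (1, 1).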

Reduce a hard problem (learning an optimal policy) into two easier problems:

- "evaluation"/"prediction"
  - measure the performance of some policy π
- "improvement"/"control"
  - find a policy slightly better than that one

Prediction and control

- Start with an arbitrary policy
- Repeat:
  - Evaluate the current policy
  - Improve it slightly

General strategy
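One classic instance of this strategy is policy iteration. It needs a model of the environment (the step function below), unlike the model-free Q-learning covered later. The sketch runs it on a hypothetical five-cell corridor (cell 4 is the goal, every move costs -1, γ = 0.9); the corridor, the fixed number of evaluation sweeps and all names are illustrative assumptions, and the improvement step here is fully greedy rather than "slight".

```scala
object PolicyIterationSketch {
  sealed trait Move
  case object MoveLeft extends Move
  case object MoveRight extends Move
  val moves: List[Move] = List(MoveLeft, MoveRight)

  // Hypothetical corridor: cells 0..4, cell 4 is the terminal goal
  val states: List[Int] = (0 to 4).toList
  val goal = 4
  val gamma = 0.9

  type Policy = Map[Int, Move]
  type Values = Map[Int, Double]

  // Model of the environment: deterministic moves, walls at both ends, -1 per move
  def step(s: Int, a: Move): (Int, Double) = {
    val next = a match {
      case MoveLeft  => math.max(0, s - 1)
      case MoveRight => math.min(goal, s + 1)
    }
    (next, -1.0)
  }

  // One-step lookahead: reward plus discounted value of the next state
  def lookahead(v: Values, s: Int, a: Move): Double = {
    val (next, reward) = step(s, a)
    reward + gamma * v(next)
  }

  // Evaluate: estimate V for a fixed policy with repeated sweeps over the states
  def evaluate(policy: Policy, sweeps: Int = 100): Values =
    (1 to sweeps).foldLeft(states.map(s => s -> 0.0).toMap) { (v, _) =>
      states.map(s => s -> (if (s == goal) 0.0 else lookahead(v, s, policy(s)))).toMap
    }

  // Improve: act greedily with respect to the evaluated values
  def improve(v: Values): Policy =
    states.filter(_ != goal).map(s => s -> moves.maxBy(a => lookahead(v, s, a))).toMap

  // Alternate evaluation and improvement until the policy stops changing
  @annotation.tailrec
  def policyIteration(policy: Policy): Policy = {
    val improved = improve(evaluate(policy))
    if (improved == policy) policy else policyIteration(improved)
  }

  // An arbitrary (and bad) starting policy: always move left
  val arbitraryPolicy: Policy = states.filter(_ != goal).map(s => s -> (MoveLeft: Move)).toMap
}
```

Starting from "always move left", each round fixes at least one more cell, until the policy is "always move right" and stops changing.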

Model-free/model-driven

Exploitation/exploration

ε-greedy

Follow the policy most of the time, but with probability ε pick a random action instead
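A minimal ε-greedy sketch. It returns the chosen action together with its current value, which is how the QLearning code later uses its own epsilonGreedy helper; that helper's real definition isn't shown, so this signature, including the explicit Random parameter, is an assumption.

```scala
import scala.util.Random

object EpsilonGreedySketch {
  // With probability ε pick a uniformly random action (explore);
  // otherwise pick the action with the highest estimated value (exploit)
  def epsilonGreedy[Action](actionValues: Map[Action, Double], epsilon: Double, rng: Random): (Action, Double) = {
    require(actionValues.nonEmpty, "need at least one action")
    if (rng.nextDouble() < epsilon) {
      val candidates = actionValues.toVector
      candidates(rng.nextInt(candidates.size))
    } else actionValues.maxBy(_._2)
  }
}
```

Passing the Random in explicitly keeps the function deterministic under a fixed seed, which makes it easy to test.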

Bootstrapping

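Basing estimates on other estimates (e.g. updating the value of a state using the current estimate for the next state)

Eventually converges to the right answer (under suitable conditions)!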
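To make "estimates based on other estimates" concrete, here is a TD(0)-style update, one standard bootstrapping method. The single self-looping state with reward 1 is a hypothetical toy whose true value is 1 / (1 - γ); the names are illustrative.

```scala
object BootstrappingSketch {
  // Move the estimate V(s) a step of size α towards the target r + γ·V(s'),
  // where V(s') is itself only an estimate
  def tdUpdate(v: Double, reward: Double, nextV: Double, alpha: Double, gamma: Double): Double =
    v + alpha * (reward + gamma * nextV - v)

  // Hypothetical single state that loops back to itself with reward 1
  def estimateSelfLoop(alpha: Double, gamma: Double, iterations: Int): Double =
    (1 to iterations).foldLeft(0.0)((v, _) => tdUpdate(v, 1.0, v, alpha, gamma))
}
```

With α = 0.1 and γ = 0.5, the estimate starts at 0 and approaches the true value 2 even though every target uses the current, wrong, estimate.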

Recap

- State-action values

- Policies

- Prediction and control

- Model-free vs model-driven

- Exploitation vs exploration

- Bootstrapping

Q-learning

- Model-free

- Exploration

- Bootstrapping
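For reference, the update performed by the updateStateActionValue code in QLearning.scala is the standard one-step Q-learning rule, with α the step size, γ the discount rate, r the reward and s′ the next state:

```latex
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
```

The max over next actions is the bootstrapping step: the target uses the agent's current estimates for s′.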

Q-learning

QLearning.scala

case class QLearning[State, Action](
  α: Double, // step size, 0.0 ≦ α ≦ 1.0
  γ: Double, // discount rate, 0.0 ≦ γ ≦ 1.0
  ε: Double, // exploration rate for ε-greedy, 0.0 ≦ ε ≦ 1.0
  Q: Map[State, Map[Action, Double]] // estimates of Q(s, a)
)

Agent data

QLearning.scala

object QLearning {
  implicit def agentBehaviour[State, Action]: AgentBehaviour[QLearning[State, Action], State, Action] =
    new AgentBehaviour[QLearning[State, Action], State, Action] {

      type UpdateFn =
        ActionResult[State] => QLearning[State, Action]

      def chooseAction(
        agentData: QLearning[State, Action],
        state: State,
        validActions: List[Action]): (Action, UpdateFn) = {
        ...
      }

    }
}

Agent behaviour

QLearning.scala

def chooseAction(
  agentData: QLearning[State, Action],
  state: State,
  validActions: List[Action]): (Action, UpdateFn) = {

  val actionValues =
    agentData.Q.getOrElse(state, zeroForAllActions)

  // choose the next action
  val (chosenAction, currentActionValue) =
    epsilonGreedy(actionValues, agentData.ε)

  ...
}

Agent behaviour

QLearning.scala

val updateStateActionValue: UpdateFn = { actionResult =>
  val maxNextStateActionValue = ...

  val updatedActionValue =
    currentActionValue + agentData.α * (
      actionResult.reward
      + agentData.γ * maxNextStateActionValue
      - currentActionValue
    )

  val updatedQ = ...

  agentData.copy(Q = updatedQ)
}

Agent behaviour
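The slides elide a few pieces (the `...` parts). One way to fill them in is sketched below as a self-contained agent; this is not the talk's actual code. Assumptions: unseen Q entries count as 0.0, the same actions are valid in every state, ε-greedy is inlined with an explicit Random, and the traits are restated locally. QLearningSketch and agentBehaviour(rng) are hypothetical names.

```scala
import scala.util.Random

object QLearningSketch {
  type Reward = Double
  final case class ActionResult[State](reward: Reward, nextState: State)

  trait AgentBehaviour[AgentData, State, Action] {
    def chooseAction(agentData: AgentData, state: State, validActions: List[Action]): (Action, ActionResult[State] => AgentData)
  }

  final case class QLearning[State, Action](
    α: Double, // step size
    γ: Double, // discount rate
    ε: Double, // exploration rate
    Q: Map[State, Map[Action, Double]]
  )

  def agentBehaviour[State, Action](rng: Random): AgentBehaviour[QLearning[State, Action], State, Action] =
    new AgentBehaviour[QLearning[State, Action], State, Action] {
      type UpdateFn = ActionResult[State] => QLearning[State, Action]

      def chooseAction(agentData: QLearning[State, Action], state: State, validActions: List[Action]): (Action, UpdateFn) = {
        require(validActions.nonEmpty, "need at least one valid action")

        // Q(s, ·) for the valid actions, treating unseen entries as 0.0
        def actionValuesFor(s: State): Map[Action, Double] = {
          val known = agentData.Q.getOrElse(s, Map.empty[Action, Double])
          validActions.map(a => a -> known.getOrElse(a, 0.0)).toMap
        }
        val actionValues = actionValuesFor(state)

        // ε-greedy: explore with probability ε, otherwise take the best-valued action
        val (chosenAction, currentActionValue) =
          if (rng.nextDouble() < agentData.ε) {
            val a = validActions(rng.nextInt(validActions.size))
            (a, actionValues(a))
          } else actionValues.maxBy(_._2)

        val updateStateActionValue: UpdateFn = { actionResult =>
          // Bootstrapping: the target uses current estimates for the next state
          val maxNextStateActionValue = actionValuesFor(actionResult.nextState).values.max
          val updatedActionValue =
            currentActionValue + agentData.α * (
              actionResult.reward
              + agentData.γ * maxNextStateActionValue
              - currentActionValue
            )
          val updatedQ = agentData.Q.updated(
            state,
            agentData.Q.getOrElse(state, Map.empty[Action, Double]).updated(chosenAction, updatedActionValue)
          )
          agentData.copy(Q = updatedQ)
        }

        (chosenAction, updateStateActionValue)
      }
    }
}
```

Terminal states never get an entry in Q here (the agent never acts from them), so their value stays at 0.0, which is what the update rule needs.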

Scaling up to more interesting problems

StateConversion.scala

trait StateConversion[EnvState, AgentState] {
  def convertState(envState: EnvState): AgentState
}
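For a continuous problem such as pole balancing, a state conversion can discretise the environment's state into buckets so that a table-based agent like Q-learning can use it. The PoleState fields, the bucket boundaries and all names below are hypothetical, not the demo's actual representation.

```scala
object StateConversionSketch {
  trait StateConversion[EnvState, AgentState] {
    def convertState(envState: EnvState): AgentState
  }

  // Hypothetical continuous state of a cart-pole system
  final case class PoleState(cartPosition: Double, cartVelocity: Double, poleAngle: Double, poleAngularVelocity: Double)

  // Discretised state: one bucket index per dimension
  final case class PoleBuckets(position: Int, velocity: Int, angle: Int, angularVelocity: Int)

  // Index of the first boundary the value is below, or boundaries.size if above all of them
  def bucket(value: Double, boundaries: Vector[Double]): Int = {
    val i = boundaries.indexWhere(value < _)
    if (i == -1) boundaries.size else i
  }

  implicit val poleConversion: StateConversion[PoleState, PoleBuckets] =
    new StateConversion[PoleState, PoleBuckets] {
      def convertState(envState: PoleState): PoleBuckets = PoleBuckets(
        position = bucket(envState.cartPosition, Vector(-0.8, 0.8)),
        velocity = bucket(envState.cartVelocity, Vector(-0.5, 0.5)),
        angle = bucket(envState.poleAngle, Vector(-0.1, 0.0, 0.1)),
        angularVelocity = bucket(envState.poleAngularVelocity, Vector(-0.5, 0.5))
      )
    }
}
```

Coarser buckets mean fewer states to learn about but less precise control; choosing them is a trade-off.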

DEMO

Pole-balancing

HOMEWORK

Pacman!

Next steps

- Smarter policies
  - Softmax, decay ε over time, ...
- More efficient learning
  - TD(λ), Q(λ), eligibility traces, ...
- Large/∞ state space
  - Function approximation
  - Deep RL
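As an example of a smarter policy, softmax (Boltzmann) action selection picks each action with probability proportional to exp(Q(a) / τ), so better actions are chosen more often but no action is ruled out. A minimal sketch; the temperature parameter τ and the function name are assumptions.

```scala
import scala.util.Random

object SoftmaxSketch {
  // P(a) ∝ exp(Q(a) / τ): high τ is close to uniform, low τ is close to greedy
  def softmaxAction[Action](actionValues: Map[Action, Double], temperature: Double, rng: Random): Action = {
    require(actionValues.nonEmpty && temperature > 0)
    val entries = actionValues.toVector
    // Subtract the max before exponentiating, for numerical stability
    val maxQ = entries.map(_._2).max
    val weights = entries.map { case (_, q) => math.exp((q - maxQ) / temperature) }
    val threshold = rng.nextDouble() * weights.sum
    // Walk the cumulative weights until the threshold is passed
    val cumulative = weights.scanLeft(0.0)(_ + _).tail
    val index = cumulative.indexWhere(threshold < _)
    entries(if (index == -1) entries.size - 1 else index)._1
  }
}
```

Unlike ε-greedy, which explores uniformly, softmax explores mostly among actions that already look reasonable.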

Learn more

- Reinforcement Learning: An Introduction (Sutton & Barto)
- RL Course by David Silver (DeepMind)

Thank you!

Slides, demo and code:

https://cb372.github.io/rl-in-scala/
