How I made a powerful cache system using Go

Sylvain Combraque

Go/ReactJS Freelance architect

Creator of Souin HTTP cache

Cache-handler maintainer

OSS contributor

@darkweak

@darkweak_dev

Shanti

Head of happiness in my house

Some context

Traefik

Emile Vauge

Written in Go

Easy to use

French Quality

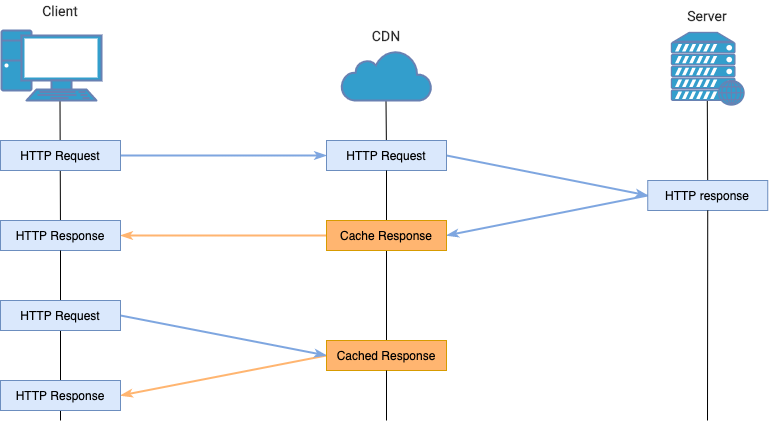

Where is the cache?

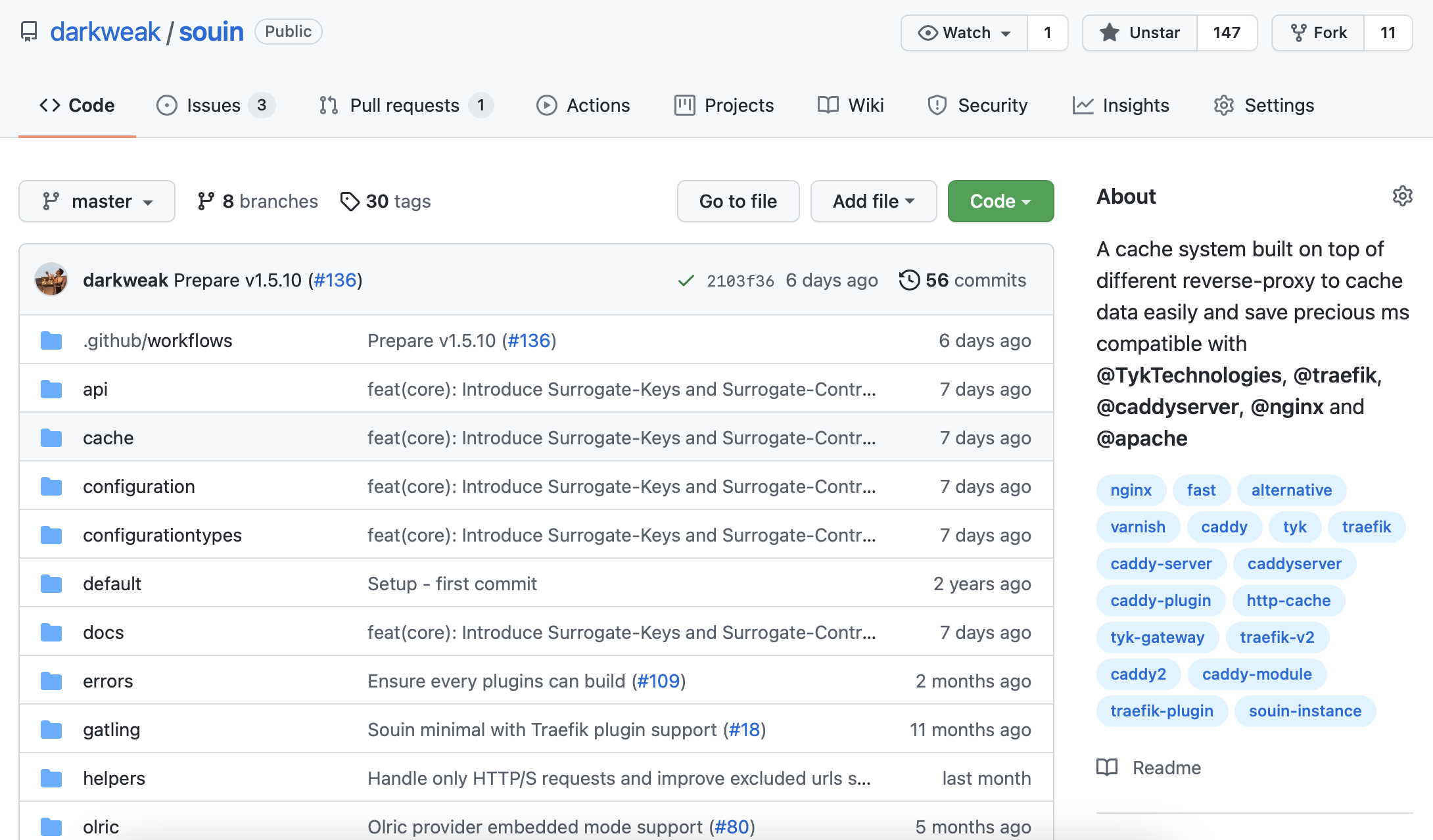

github.com/darkweak/Souin

Leave a ⭐️ on GitHub

Varnish vs Souin

[disclaimer trolling]

Demo

How can Go help us achieve performance?

The power of

go func() {

_, _ = new(http.Client).Do(req)

}()

With channels

go func(rs http.ResponseWriter, rq *http.Request) {

if rc != nil && <-coalesceable {

rc.Temporize(req, rs, nextMiddleware)

} else {

errorBackendCh <- nextMiddleware(rs, rq)

return

}

errorBackendCh <- nil

}(res, req)

select {

case <-req.Context().Done():

switch req.Context().Err() {

case ctx.DeadlineExceeded:

cw := res.(*CustomWriter)

rfc.MissCache(cw.Header().Set, req, "DEADLINE-EXCEEDED")

cw.WriteHeader(http.StatusGatewayTimeout)

_, _ = cw.Rw.Write(serverTimeoutMessage)

return ctx.DeadlineExceeded

case ctx.Canceled:

return nil

default:

return nil

}

case v := <-errorBackendCh:

if v == nil {

_, _ = res.(souinWriterInterface).Send()

}

return v

}

Interfaces to the rescue

type Badger struct {}

func (provider *Badger) ListKeys() []string {}

func (provider *Badger) Prefix(key string, req *http.Request) *http.Response {}

func (provider *Badger) Get(key string) []byte {}

func (provider *Badger) Set(key string, value []byte) error {}

func (provider *Badger) Delete(key string) {}

func (provider *Badger) DeleteMany(key string) {}

func (provider *Badger) Init() error {}

func (provider *Badger) Name() string {}

func (provider *Badger) Reset() error {}

type Olric struct {}

func (provider *Olric) ListKeys() []string {}

func (provider *Olric) Prefix(key string, req *http.Request) *http.Response {}

func (provider *Olric) Get(key string) []byte {}

func (provider *Olric) Set(key string, value []byte) error {}

func (provider *Olric) Delete(key string) {}

func (provider *Olric) DeleteMany(key string) {}

func (provider *Olric) Init() error {}

func (provider *Olric) Name() string {}

func (provider *Olric) Reset() error {}

type Storer interface {

ListKeys() []string

Prefix(key string, req *http.Request) *http.Response

Get(key string) []byte

Set(key string, value []byte) error

Delete(key string)

DeleteMany(key string)

Init() error

Name() string

Reset() error

}

func NewStorages(c AbstractConfigurationInterface) map[string]Storer {

providers := make(map[string]Storer)

if olric, err := OlricConnectionFactory(c); err == nil {

providers["olric"] = olric

}

if badger, err := BadgerConnectionFactory(c); err == nil {

providers["badger"] = badger

}

for _, p := range providers {

_ = p.Init()

}

return providers

}

Context

ctx := context.Background()

valCtx := ctx.Value("MY_CONTEXT_KEY")

if valCtx == nil {

return "not found"

}

val, ok := valCtx.(string)

// not found

ctx := context.Background()

ctx = context.WithValue(ctx, "MY_CONTEXT_KEY", "a string")

valCtx := ctx.Value("MY_CONTEXT_KEY")

if valCtx == nil {

return "not found"

}

val, ok := valCtx.(string)

// val: "a string"

// ok: true

type ctxKey string

const (

CacheName ctxKey = "souin_ctx.CACHE_NAME"

RequestCacheControl ctxKey = "souin_ctx.REQUEST_CACHE_CONTROL"

)

func (cc *cacheContext) SetContext(req *http.Request) *http.Request {

co, _ := cacheobject.ParseRequestCacheControl(req.Header.Get("Cache-Control"))

return req.WithContext(

context.WithValue(

context.WithValue(

req.Context(),

CacheName,

cc.cacheName,

),

RequestCacheControl,

co,

),

)

}

Cool features in Souin

default_cache:

  ttl: 10s

reverse_proxy_url: 'http://reverse-proxy'

YAML configuration

func GetConfiguration() *Configuration {

data := readFile("./configuration/configuration.yml")

var config Configuration

if err := yaml.Unmarshal(data, &config); err != nil {

log.Fatal(err)

}

return &config

}

# souin/docker-compose.yml

version: '3.4'

x-networks: &networks

  networks:

    - your_network

services:

  souin:

    image: darkweak/souin:latest

    ports:

      - 80:80

      - 443:443

    environment:

      GOPATH: /app

    volumes:

      - ./configuration.yml:/configuration/configuration.yml

    <<: *networks

networks:

  your_network:

    external: true

# anywhere/docker-compose.yml

version: '3.4'

x-network: &network

  networks:

    - your_network

services:

  traefik:

    image: traefik:latest

    command: --providers.docker

    ports:

      - 81:80

      - 444:443

      - 8080:8080

    volumes:

      - /var/run/docker.sock:/var/run/docker.sock

    <<: *network

networks:

  your_network:

    external: true

Container first

Configuration as plugin

No configuration required

Use it as plugin or module extension

Caddy

Træfik

xcaddy build --with github.com/darkweak/souin/plugins/caddy

# Caddyfile

{

    cache

}

localhost {

    cache

    respond "Hello World!"

}

./caddy run

# your/traefik.yml

# ...

experimental:

plugins:

souin:

moduleName: github.com/darkweak/souin

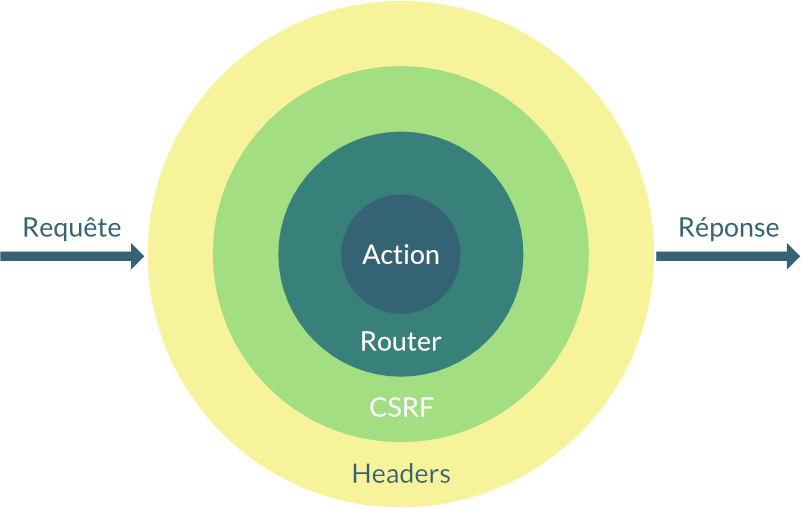

version: v1.7.8docker compose up traefikMiddlewares

Middlewares

APP

Headers middleware

Middlewares

func (s *DummyCaddyPlugin) ServeHTTP(rw http.ResponseWriter, r *http.Request, next caddyhttp.Handler) error {

req := s.Retriever.GetContext().SetBaseContext(r)

// do some stuff...

customWriter := newInternalCustomWriter(rw, bufPool)

getterCtx := getterContext{customWriter, req, next}

ctx := context.WithValue(req.Context(), getterContextCtxKey, getterCtx)

req = req.WithContext(ctx)

req.Header.Set("Date", time.Now().UTC().Format(http.TimeFormat))

// Pass req (not the original r) so the values stored in its context reach the next handler.

return next.ServeHTTP(customWriter, req)

}

Request coalescing system support

var result singleflight.Result

select {

case <-timeout:

http.Error(rw, "Gateway Timeout", http.StatusGatewayTimeout)

return

case result = <-ch:

}

if result.Err != nil {

http.Error(rw, result.Err.Error(), http.StatusInternalServerError)

return

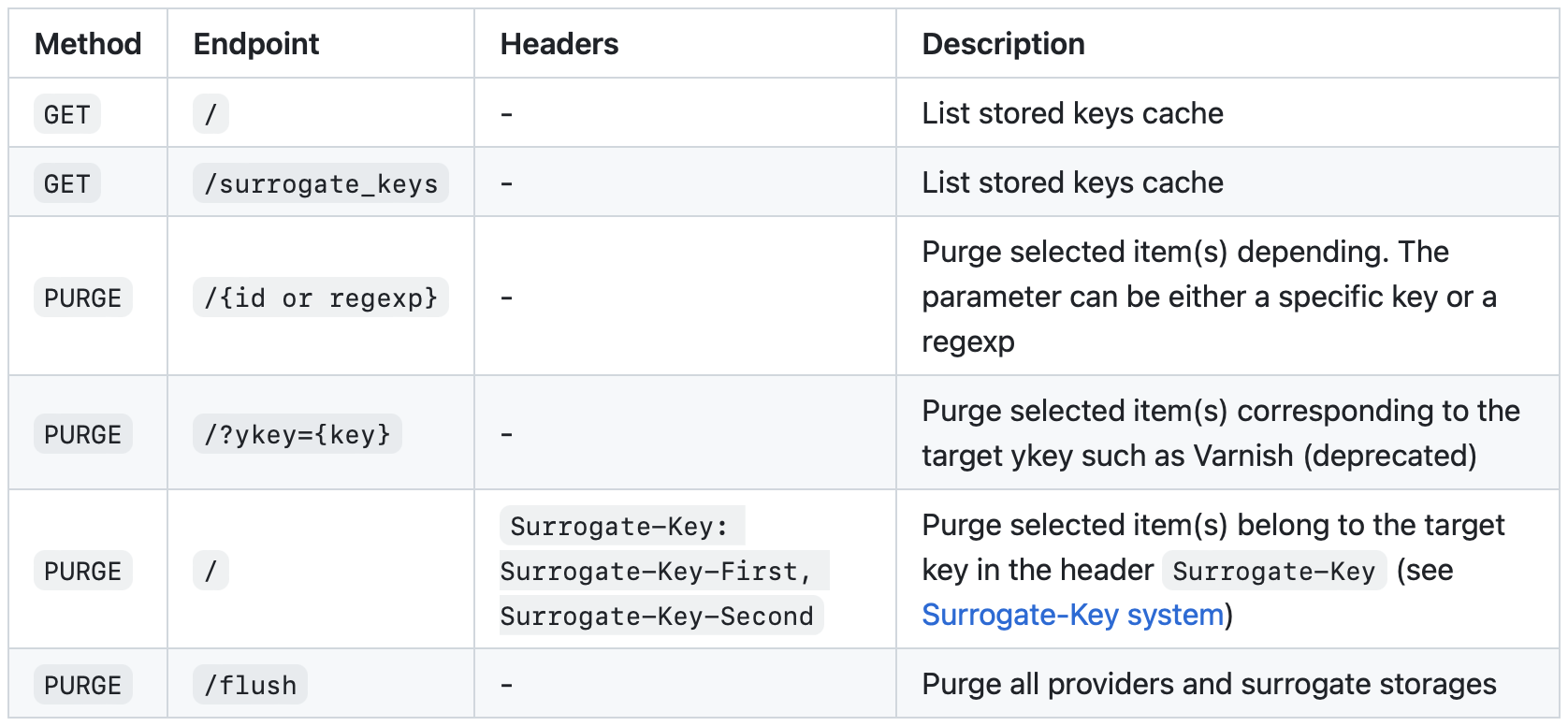

}API management

Configuration

api:

  basepath: /souin-api

  prometheus:

    basepath: /anything-for-prometheus-metrics

  souin:

    basepath: /anything-for-souin

Cache management API

Default base path: /souin

basePathAPIS := c.GetAPI().BasePath

if basePathAPIS == "" {

basePathAPIS = "/souin-api"

}

for _, endpoint := range api.Initialize(provider, c) {

if endpoint.IsEnabled() {

http.HandleFunc(

fmt.Sprintf(

"%s%s",

basePathAPIS,

endpoint.GetBasePath(),

),

endpoint.HandleRequest,

)

http.HandleFunc(

fmt.Sprintf(

"%s%s/",

basePathAPIS,

endpoint.GetBasePath(),

),

endpoint.HandleRequest,

)

}

}

How to code this?

Choose your storage

nutsdb

badger

redis

olric

etcd
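Whichever backend is chosen, it sits behind the `Storer` interface shown earlier, so adding one means implementing those methods. Below is a minimal in-memory sketch of a trimmed-down, hypothetical `miniStorer` interface. It leaves out `Prefix` and `DeleteMany`, whose exact semantics are not shown here, and like `NewStorages` it expects `Init` to be called before use.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// miniStorer is a hypothetical subset of the Storer interface from the slides.
type miniStorer interface {
	Name() string
	Init() error
	Get(key string) []byte
	Set(key string, value []byte) error
	Delete(key string)
	ListKeys() []string
	Reset() error
}

// memoryStorer keeps every entry in a map guarded by a RWMutex.
type memoryStorer struct {
	mu   sync.RWMutex
	data map[string][]byte
}

func (m *memoryStorer) Name() string { return "MEMORY" }

func (m *memoryStorer) Init() error { return m.Reset() }

func (m *memoryStorer) Get(key string) []byte {
	m.mu.RLock()
	defer m.mu.RUnlock()
	return m.data[key] // nil on a miss
}

func (m *memoryStorer) Set(key string, value []byte) error {
	m.mu.Lock()
	defer m.mu.Unlock()
	m.data[key] = value
	return nil
}

func (m *memoryStorer) Delete(key string) {
	m.mu.Lock()
	defer m.mu.Unlock()
	delete(m.data, key)
}

func (m *memoryStorer) ListKeys() []string {
	m.mu.RLock()
	defer m.mu.RUnlock()
	keys := make([]string, 0, len(m.data))
	for k := range m.data {
		keys = append(keys, k)
	}
	sort.Strings(keys) // deterministic order
	return keys
}

func (m *memoryStorer) Reset() error {
	m.mu.Lock()
	defer m.mu.Unlock()
	m.data = make(map[string][]byte)
	return nil
}

// Compile-time check that memoryStorer satisfies the interface.
var _ miniStorer = (*memoryStorer)(nil)

func main() {
	storages := map[string]miniStorer{"memory": &memoryStorer{}}
	for name, s := range storages {
		if err := s.Init(); err != nil {
			fmt.Println(name, "init failed:", err)
			continue
		}
		_ = s.Set("GET-domain.com-/uri", []byte("cached body"))
		fmt.Println(s.Name(), s.ListKeys())
	}
}
```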

Surrogate-Keys

First group

Second group

Third group

key 1

key 3

key 564

key 90

key ABC

key 3

key 789

key 1

key 789
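Surrogate keys group cache entries so one purge can invalidate many of them, and the same cache key can belong to several groups (as `key 1`, `key 3` and `key 789` do above). Below is a minimal sketch of the index behind that. It assumes group names arrive as a space-separated list, as Fastly's `Surrogate-Key` header uses. The `surrogateIndex` type is hypothetical, and it does not remove a purged key from the other groups that still reference it.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// surrogateIndex maps a group (surrogate key) to the set of cache keys in it.
type surrogateIndex map[string]map[string]struct{}

// tag records that cacheKey belongs to each of the given groups.
func (s surrogateIndex) tag(cacheKey string, groups ...string) {
	for _, g := range groups {
		if s[g] == nil {
			s[g] = map[string]struct{}{}
		}
		s[g][cacheKey] = struct{}{}
	}
}

// purge drops a group and returns, sorted, the cache keys to evict from storage.
func (s surrogateIndex) purge(group string) []string {
	keys := make([]string, 0, len(s[group]))
	for k := range s[group] {
		keys = append(keys, k)
	}
	delete(s, group)
	sort.Strings(keys)
	return keys
}

func main() {
	idx := surrogateIndex{}
	idx.tag("key 1", strings.Fields("first-group third-group")...)
	idx.tag("key 3", strings.Fields("first-group second-group")...)
	idx.tag("key 789", strings.Fields("second-group third-group")...)
	fmt.Println(idx.purge("second-group")) // [key 3 key 789]
}
```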

Cache-Groups

CDN tag invalidation


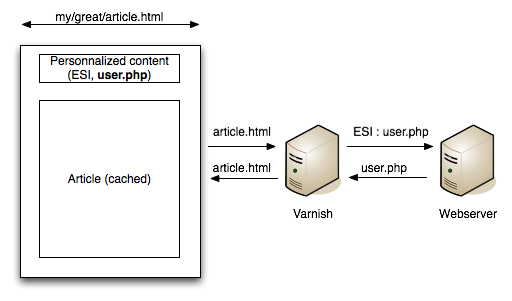

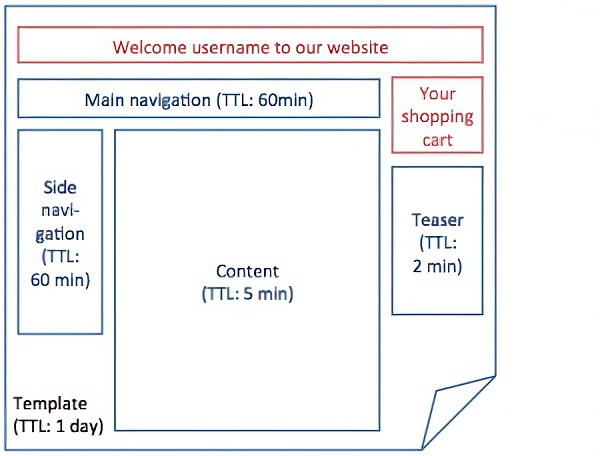

ESI tags


<esi:include

src="http://example.com/1.html"

alt="http://bak.example.com/2.html"

onerror="continue"

/>

ESI tags

<esi:try>

<esi:attempt>

<esi:comment text="Include an ad"/>

<esi:include src="http://www.example.com/ad1.html"/>

</esi:attempt>

<esi:except>

<esi:comment text="Just write some HTML instead"/>

<a href=www.akamai.com>www.example.com</a>

</esi:except>

</esi:try>

ESI tags

<esi:vars>

<img

src="http://www.example.com/$(HTTP_COOKIE{type})/hello.gif"

alt="$(HTTP_COOKIE{logo_name})"

/>

</esi:vars>

ESI tags

<img

src="http://www.example.com/human/hello.gif"

alt="My human GIF"

/>

ESI tags

Compatible with many software projects

Used in production by many OSS projects

The fun parts to develop

Respect the RFC

such a pain

When you think it's simple, the RFC tells you it is not

1. Introduction

HTTP is typically used for distributed information systems, where performance can be improved by the use of response caches. This document defines aspects of HTTP/1.1 related to caching and reusing response messages. An HTTP cache is a local store of response messages and the subsystem that controls storage, retrieval, and deletion of messages in it. A cache stores cacheable responses in order to reduce the response time and network bandwidth consumption on future, equivalent requests. Any client or server MAY employ a cache, though a cache cannot be used by a server that is acting as a tunnel. A shared cache is a cache that stores responses to be reused by more than one user; shared caches are usually (but not always) deployed as a part of an intermediary. A private cache, in contrast, is dedicated to a single user; often, they are deployed as a component of a user agent. The goal of caching in HTTP/1.1 is to significantly improve performance by reusing a prior response message to satisfy a current request. A stored response is considered "fresh", as defined in Section 4.2, if the response can be reused without "validation" (checking with the origin server to see if the cached response remains valid for this request). A fresh response can therefore reduce both latency and network overhead each time it is reused. When a cached response is not fresh, it might still be reusable if it can be freshened by validation (Section 4.3) or if the origin is unavailable (Section 4.2.4).

1.1. Conformance and Error Handling

The key words "MUST", "MUST NOT", "REQUIRED", "SHALL", "SHALL NOT", "SHOULD", "SHOULD NOT", "RECOMMENDED", "MAY", and "OPTIONAL" in this document are to be interpreted as described in [RFC2119]. Conformance criteria and considerations regarding error handling are defined in Section 2.5 of [RFC7230].

1.2. Syntax Notation

This specification uses the Augmented Backus-Naur Form (ABNF) notation of [RFC5234] with a list extension, defined in Section 7 of [RFC7230], that allows for compact definition of comma-separated lists using a '#' operator (similar to how the '*' operator indicates repetition).

RFC-7234

if isResponseTransparent {

return transparent

}

if isResponseStale {

return stale

}

if isResponseFresh {

return fresh

}

expiresHeader := respHeaders.Get("Expires")

if expiresHeader != "" {

expires, err := http.ParseTime(expiresHeader)

if err != nil {

lifetime = zeroDuration

} else {

lifetime = expires.Sub(date)

}

}

RFC-9211

aka. Cache-Status RFC
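RFC 9211 defines `Cache-Status` as a list with one member per cache, in the order the caches handled the response (closest to the origin first). Each member is the cache's name followed by `;`-separated parameters such as `hit`, `fwd`, `stored`, `ttl` and `key`. Below is a minimal sketch that renders such members. The `cacheStatus` struct is hypothetical and covers only a few parameters. It assumes `Key` is already a valid structured-field token (otherwise it would need quoting), and it uses `TTL == 0` to mean "omit", which a real implementation should not do, since RFC 9211 allows zero and negative values.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// cacheStatus describes what one cache did with a request.
type cacheStatus struct {
	Name   string // cache identifier, e.g. "Souin"
	Hit    bool
	Fwd    string // forward reason, e.g. "uri-miss"; empty on a hit
	Stored bool
	TTL    int    // remaining freshness in seconds; 0 = omitted here
	Key    string // assumed to be a valid token
}

// String renders one list member: the name, then "; "-separated parameters.
func (c cacheStatus) String() string {
	parts := []string{c.Name}
	if c.Hit {
		parts = append(parts, "hit")
	}
	if c.Fwd != "" {
		parts = append(parts, "fwd="+c.Fwd)
	}
	if c.Stored {
		parts = append(parts, "stored")
	}
	if c.TTL != 0 {
		parts = append(parts, "ttl="+strconv.Itoa(c.TTL))
	}
	if c.Key != "" {
		parts = append(parts, "key="+c.Key)
	}
	return strings.Join(parts, "; ")
}

// cacheStatusHeader joins members, closest-to-origin cache first.
func cacheStatusHeader(members ...cacheStatus) string {
	s := make([]string, len(members))
	for i, m := range members {
		s[i] = m.String()
	}
	return strings.Join(s, ", ")
}

func main() {
	fmt.Println("Cache-Status: " + cacheStatusHeader(
		cacheStatus{Name: "Souin", Fwd: "uri-miss", Key: "GET-domain.com-/uri-another"},
		cacheStatus{Name: "Caddy", Hit: true, TTL: 432, Key: "abcdef123"},
	))
}
```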

Cache-Status

Souin; stored; fwd=uri-miss

Souin; hit; ttl=1234; key=GET-domain.com-/uri-another

Souin; fwd=uri-miss; key=GET-domain.com-/uri-another,

Caddy; hit; ttl=432; key=abcdef123

Cache-Status

res

.header

.Set(

"Cache-Status",

fmt.Sprintf(

"%s; fwd=uri-miss; key=%s; detail=UNCACHEABLE-STATUS-CODE",

rq.Context().Value(context.CacheName),

rfc.GetCacheKeyFromCtx(rq.Context()),

)

)

RFC-9213

aka. Targeted Cache-Control

CDN-Cache-Control

{name}-Cache-Control

Age: 1800

Cache-Control: max-age=600

Age: 1800

Cache-Control: max-age=600

CDN-Cache-Control: max-age=3600

Age: 1800

Cache-Control: max-age=600

CDN-Cache-Control: no-cache

Souin-Cache-Control: public, max-age=3600

LowLevelApp-Cache-Control: public, max-age=7200

New version deployment

The workflow

Test

Build

Release

Testing

golangci-lint

go unit test

build plugins

E2E plugin tests

go unit test with services

jobs:

lint-validation:

name: Validate Go code linting

runs-on: ubuntu-latest

steps:

- name: Checkout code

uses: actions/checkout@v4

- name: Install Go

uses: actions/setup-go@v3

with:

go-version: ${{ env.GO_VERSION }}

- name: golangci-lint

uses: golangci/golangci-lint-action@v8

with:

args: --timeout=240s

golangci-lint

jobs:

unit-test-golang:

needs: lint-validation

name: Unit tests

runs-on: ubuntu-latest

steps:

- name: Checkout code

uses: actions/checkout@v4

- name: Install Go

uses: actions/setup-go@v3

with:

go-version: ${{ env.GO_VERSION }}

- name: Run unit static tests

run: go test -v -race

unit tests

jobs:

unit-test-golang-with-services:

needs: lint-validation

name: Unit tests with external services

runs-on: ubuntu-latest

steps:

- name: Checkout code

uses: actions/checkout@v4

- name: Install Go

uses: actions/setup-go@v3

with:

go-version: ${{ env.GO_VERSION }}

- name: Build and run the docker stack

run: |

docker network create your_network || true

docker-compose -f docker-compose.yml.test up \

-d --build --force-recreate --remove-orphans

- name: Run pkg storage tests

run: docker-compose -f docker-compose.yml.test exec -T souin \

go test -v -race ./pkg/storage

unit tests with services

jobs:

build-roadrunner-validator:

name: Check that Souin build as middleware

uses: ./.github/workflows/plugin_template.yml

secrets: inherit

with:

CAPITALIZED_NAME: Roadrunner

LOWER_NAME: roadrunner

GO_VERSION: '1.21'

build plugins

jobs:

plugin-test:

name: Check that Souin build as ${{ inputs.CAPITALIZED_NAME }} middleware

runs-on: ubuntu-latest

env:

GO_VERSION: ${{ inputs.GO_VERSION }}

# ...

steps:

- name: Check if the configuration is loaded to define if Souin is loaded too

uses: nick-invision/assert-action@v1

with:

expected: 'Souin configuration is now loaded.'

actual: ${{ env.MIDDLEWARE_RESULT }}

comparison: contains

- name: Run ${{ inputs.CAPITALIZED_NAME }} E2E tests

uses: anthonyvscode/newman-action@v1

with:

collection: "docs/e2e/Souin E2E.postman_collection.json"

folder: ${{ inputs.CAPITALIZED_NAME }}

reporters: cli

delayRequest: 5000

E2E plugin tests

jobs:

generate-souin-docker:

name: Generate souin docker

runs-on: ubuntu-latest

steps:

- name: Build & push Docker image containing only binary

id: docker_build

uses: docker/build-push-action@v4

with:

push: true

file: ./Dockerfile-prod

platforms: linux/arm64, # others...

build-args: |

"GO_VERSION=${{ env.GO_VERSION }}"

tags: |

darkweak/souin:latest

darkweak/souin:${{ env.RELEASE_VERSION }}

Build and Release

jobs:

generate-artifacts:

name: Deploy to goreleaser

runs-on: ubuntu-latest

steps:

- name: Run GoReleaser

uses: goreleaser/goreleaser-action@v3

with:

version: latest

args: release --clean

workdir: ./plugins/souin

env:

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

GO_VERSION: ${{ env.GO_VERSION }}

Release

jobs:

generate-tyk-versions:

name: Generate Tyk plugin binaries

runs-on: ubuntu-latest

env:

LATEST_VERSION: v5.0

PREVIOUS_VERSION: v4.3

SECOND_TO_LAST_VERSION: v4.2

steps:

- name: Generate Tyk amd64 artifacts

run: cd plugins/tyk && make vendor && docker compose up

- name: Upload Tyk amd64 artifacts

uses: actions/upload-artifact@v3

with:

path: plugins/tyk/*.so

Release

My reaction when all jobs are green and the new version is available

The deployment is done

RESULTS

================================================================================

---- Global Information --------------------------------------------------------

> request count 101000 (OK=101000 KO=0 )

> min response time 0 (OK=0 KO=- )

> max response time 56 (OK=56 KO=- )

> mean response time 8 (OK=8 KO=- )

> std deviation 4 (OK=4 KO=- )

> response time 50th percentile 8 (OK=8 KO=- )

> response time 75th percentile 10 (OK=10 KO=- )

> response time 95th percentile 15 (OK=15 KO=- )

> response time 99th percentile 21 (OK=21 KO=- )

> mean requests/sec 3884.615 (OK=3884.615 KO=- )

---- Response Time Distribution ------------------------------------------------

> t < 800 ms 101000 (100%)

> 800 ms < t < 1200 ms 0 ( 0%)

> t > 1200 ms 0 ( 0%)

> failed 0 ( 0%)

================================================================================

Roadmap

- 👷‍♂️ Stream every response

- 🚀 Better performance

- 🛠️ Support 103 Early Hints

Any ideas?

Open an issue at https://github.com/darkweak/souin/issues/new

Special thanks!

silverbackdan

developer_west

mholt6

mholt

MohammedSahaf

mohammed90

hussam_almarzoq

hussam-almarzoq

Thank you for your attention


By darkweak
