exploring artificial intelligence

neural networks for life sciences

Felipe Delestro

contact@delestro.com

1. Introduction

2. Basics of Artificial Neural Networks

3. ANNs for embryo classification

4. How to get started

Layout

Introduction

- Artificial Neural Networks (ANNs) are computational models inspired by biological neurons in the brain

- ANNs recognize patterns in data and make predictions based on those patterns

- ANNs are typically organized into layers of neurons, with each layer performing a different type of processing

Overview

In 1943, McCulloch and Pitts published the first mathematical model of a neuron, known as the McCulloch-Pitts neuron

McCulloch WS, Pitts W. A logical calculus of the ideas immanent in nervous activity. The Bulletin of Mathematical Biophysics. 1943.

During the 1950s, Frank Rosenblatt developed the first neural network model capable of learning, known as the Perceptron

Mark I Perceptron

National Museum of American History

Washington, D.C.

Basics of Artificial Neural Networks

- The perceptron is a mathematical model that mimics the behavior of a single neuron

- It takes inputs, multiplies each by a weight, sums the results, and passes the sum through an activation function to produce an output

- A perceptron is therefore a linear classifier: its decision boundary is a straight line (or hyperplane) in the input space
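
As a rough illustration of the weighted sum and activation described above, here is a minimal sketch in plain Python. The step activation, the bias term, and the hand-picked weights are assumptions made for this example; the slides do not specify them.

```python
def step(z):
    """Heaviside step activation: 1 if z is non-negative, else 0."""
    return 1 if z >= 0 else 0


def perceptron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the step activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return step(z)


# Hand-picked (not learned) weights that make the perceptron act like a logical AND.
and_weights = [1.0, 1.0]
and_bias = -1.5

and_outputs = [perceptron([a, b], and_weights, and_bias) for a in (0, 1) for b in (0, 1)]
print(and_outputs)  # [0, 0, 0, 1]
```

Only the input pair (1, 1) pushes the weighted sum above zero, so only that pair is classified as 1.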

Perceptrons

https://towardsdatascience.com/perceptron-the-artificial-neuron-4d8c70d5cc8d

https://medium.com/@shrutijadon/survey-on-activation-functions-for-deep-learning-9689331ba092

Weights are learned through training: the perceptron is shown a set of labeled examples and adjusts its weights whenever it misclassifies one
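
One common way to implement this training is the classic perceptron learning rule, sketched below. The learning rate, the number of epochs, and the toy OR dataset are illustrative assumptions, not values from the slides.

```python
def predict(inputs, weights, bias):
    """Step-activated weighted sum, as in a single perceptron."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if z >= 0 else 0


def train_perceptron(examples, learning_rate=0.1, epochs=20):
    """Perceptron rule: nudge the weights only when a prediction is wrong."""
    n_inputs = len(examples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            error = label - predict(inputs, weights, bias)  # -1, 0 or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Toy labeled examples: logical OR, which is linearly separable.
or_examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train_perceptron(or_examples)
learned = [predict(x, weights, bias) for x, _ in or_examples]
print(learned)  # [0, 1, 1, 1]
```

Because OR is linearly separable, the rule settles on weights that classify all four examples correctly after a few passes.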

Perceptron cooking as a chef

Stable diffusion, OpenJourney model

https://scikit-learn.org/stable/modules/clustering.html

On its own, the perceptron is limited to linear decision boundaries.

Adding layers

- Multi-layer perceptrons (MLPs) or feedforward neural networks were developed to overcome the linearity limitation

- MLPs have multiple layers, including input, hidden, and output layers

- MLPs can learn more complex features and relationships between inputs
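
To see why a hidden layer helps, consider XOR, a textbook problem that no single perceptron can solve because it is not linearly separable. The sketch below wires three step-activated neurons into a tiny MLP; the weights are set by hand for illustration, not learned.

```python
def step(z):
    return 1 if z >= 0 else 0


def neuron(inputs, weights, bias):
    """A single perceptron: weighted sum plus bias, then step activation."""
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)


def xor_mlp(a, b):
    """Two-layer network: two hidden neurons feeding one output neuron."""
    h_or = neuron([a, b], [1.0, 1.0], -0.5)    # fires if at least one input is 1
    h_and = neuron([a, b], [1.0, 1.0], -1.5)   # fires only if both inputs are 1
    return neuron([h_or, h_and], [1.0, -2.0], -0.5)  # "OR but not AND"


xor_outputs = [xor_mlp(a, b) for a in (0, 1) for b in (0, 1)]
print(xor_outputs)  # [0, 1, 1, 0]
```

Each hidden neuron draws its own linear boundary, and the output neuron combines them into a boundary that is no longer linear in the original inputs.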

Visualization of a fully connected neural network, version 1

Marijn van Vliet

MLP as a detective solving a crime

Stable diffusion, OpenJourney model

Backpropagation

- Backpropagation is an algorithm for training ANNs to learn from their mistakes and improve their accuracy over time

- Backpropagation adjusts the weights of the connections in the network to minimize the difference between the actual output and the desired output

- It propagates the error backwards through the network, from output layer to input layer, and adjusts the weights based on the contribution of each connection to the error

- Backpropagation aims to find the set of weights that produces the smallest error for a given set of input-output pairs
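
The steps above can be sketched for a very small network in plain Python. This is a minimal illustration under several assumptions that are not in the slides: sigmoid activations, squared error (with its constant factor folded into the learning rate), per-example updates, three hidden neurons, and a fixed random seed.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Return the hidden activations and the network output for one input vector."""
    hidden = [sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)
              for w, b in zip(w_hidden, b_hidden)]
    output = sigmoid(sum(h * w for h, w in zip(hidden, w_out)) + b_out)
    return hidden, output


def mean_squared_error(data, params):
    return sum((forward(x, *params)[1] - y) ** 2 for x, y in data) / len(data)


def train(data, n_hidden=3, learning_rate=0.5, epochs=5000, seed=0):
    rng = random.Random(seed)
    n_inputs = len(data[0][0])
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
    b_hidden = [0.0] * n_hidden
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b_out = 0.0
    initial_loss = mean_squared_error(data, (w_hidden, b_hidden, w_out, b_out))

    for _ in range(epochs):
        for x, y in data:
            hidden, output = forward(x, w_hidden, b_hidden, w_out, b_out)
            # Error signal at the output: prediction error times the sigmoid slope.
            delta_out = (output - y) * output * (1 - output)
            # Propagate backwards: each hidden neuron's share of the output error.
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                            for j in range(n_hidden)]
            # Gradient descent step on every weight and bias.
            for j in range(n_hidden):
                w_out[j] -= learning_rate * delta_out * hidden[j]
                for i in range(n_inputs):
                    w_hidden[j][i] -= learning_rate * delta_hidden[j] * x[i]
                b_hidden[j] -= learning_rate * delta_hidden[j]
            b_out -= learning_rate * delta_out

    params = (w_hidden, b_hidden, w_out, b_out)
    return params, initial_loss, mean_squared_error(data, params)


# Toy input-output pairs (XOR); any small labeled dataset would do.
xor_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
params, initial_loss, final_loss = train(xor_data)
print(f"loss before training: {initial_loss:.3f}, after: {final_loss:.3f}")
```

In practice, deep learning frameworks compute these gradients automatically; writing them out once by hand mainly helps to see where each weight update comes from.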

Backpropagation as a choreographer teaching a dance

Stable diffusion, OpenJourney model

Deep learning

- Shallow MLPs struggle to learn complex patterns; deep learning addresses this by stacking many layers of neurons

- Deep neural networks can have from a few dozen to several hundred layers or more, making it possible to learn intricate features and relationships within the data

- Layers can be specialized, and two types are especially important: convolution and pooling layers

Convolution

- Convolution is a weighted sum operation used in signal and image processing to extract features from data.

- A small filter is slid over the input signal or image, and the results of element-wise multiplication are summed up to obtain a single output value.
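
The sliding-filter operation can be sketched in a few lines of plain Python. The toy image and the edge-detecting filter are made up for illustration; no padding and a stride of 1 are assumed. As in most deep learning libraries, the filter is not flipped, so strictly speaking this is a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the element-wise products."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = sum(image[r + i][c + j] * kernel[i][j]
                        for i in range(k_rows) for j in range(k_cols))
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 image with a vertical edge between the second and third columns.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written 2x2 filter that responds to a dark-to-bright step from left to right.
edge_kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = convolve2d(image, edge_kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is largest exactly where the filter sits on the edge, which is what "extracting a feature" means here. In a CNN the filter values are learned rather than written by hand.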

https://commons.wikimedia.org/wiki/File:2D_Convolution_Animation.gif

https://www.youtube.com/watch?v=KuXjwB4LzSA

But what is a convolution?

Pooling

- Pooling is a technique used in neural networks to reduce the spatial dimensionality of feature maps obtained from convolutional layers.

- The pooling function (such as max pooling or average pooling) reduces the information in each region to a single value, resulting in a smaller feature map with a reduced spatial dimensionality.

- Pooling makes the subsequent layers of the network more robust to slight variations in the input and provides a degree of translation invariance.
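
A minimal sketch of max pooling in plain Python, assuming non-overlapping 2x2 windows (a common default, though not one stated in the slides) and a made-up feature map:

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [max(feature_map[r * size + i][c * size + j]
             for i in range(size) for j in range(size))
         for c in range(cols)]
        for r in range(rows)
    ]


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]
pooled = max_pool2d(feature_map)
print(pooled)  # [[4, 2], [2, 8]]
```

The 4x4 map shrinks to 2x2, and moving a strong response by one pixel inside its window leaves the pooled value unchanged.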

https://www.geeksforgeeks.org/cnn-introduction-to-pooling-layer/

Pooling as understanding a book by summarizing the chapters

Stable diffusion, OpenJourney model

Combining convolution and pooling made convolutional neural networks (CNNs) possible

CNNs account for the vast majority of deep learning models currently in use.

ANNs for embryo classification

José Celso

Applied mathematics

Marcelo Nogueira

Embryology

Cumulative Google Scholar results for "deep learning" and "image"

Started to work on embryo classification

Human embryo development during 5 days, data from time-lapse system

The user manually performs measurements in the image

Graphical interface allows inference using the ANN

Compromises

- MLPs cannot read directly from the image, so a feature extraction step was needed

- The system predicts the quality of the embryo, a label that could itself be biased

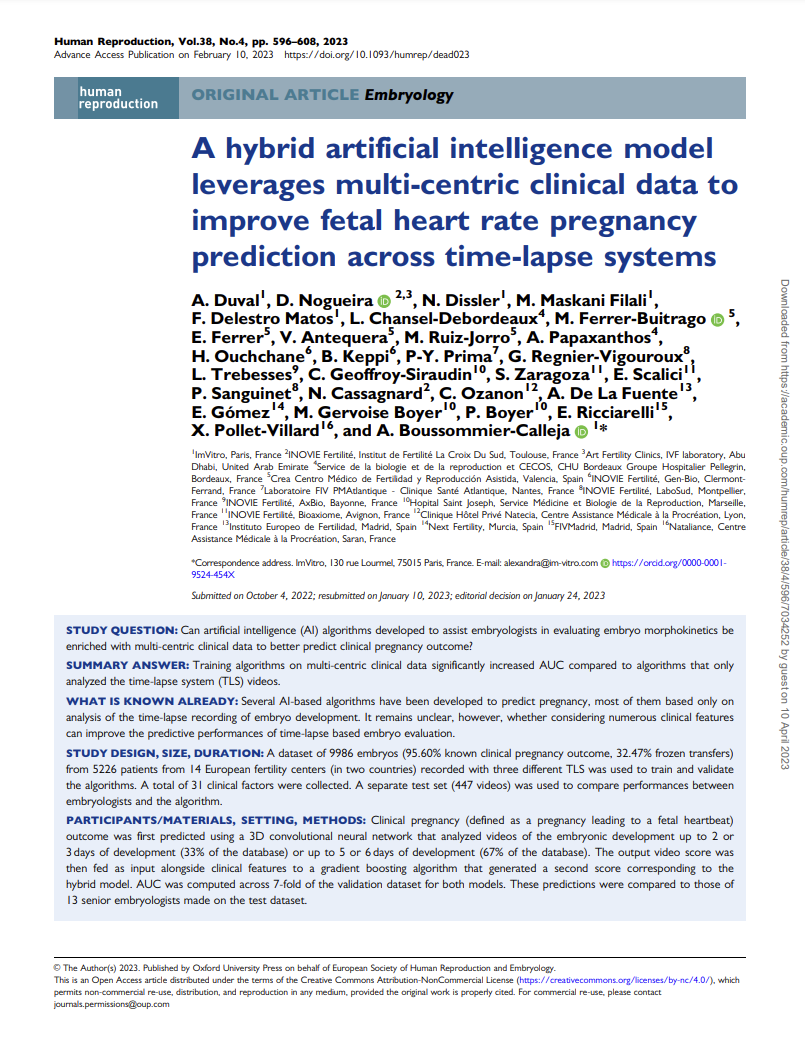

Alexandra Boussommier

CEO ImVitro

https://doi.org/10.1093/humrep/dead023

Embryoscope

(Vitrolife)

MIRI

(ESCO)

Geri

(Genea Biomedx)

Automatic embryo cropping using YOLOv5. The original model was retrained using a few hundred annotated images

https://github.com/ultralytics/yolov5

https://www.youtube.com/watch?v=cHDLvp_NPOk

YOLOv5 model in action for object detection

[Figure: two panels comparing pregnancy and no pregnancy outcomes. First panel: AUC 0.68. Second panel (video score, clinical features): AUC 0.73.]
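
For readers unfamiliar with the metric, AUC (area under the ROC curve) can be read as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one: 0.5 is chance level and 1.0 is perfect separation. The sketch below computes it that way in plain Python. The labels and scores are made up for illustration and are not data from the study.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count half)."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


# Hypothetical example: 1 = pregnancy, 0 = no pregnancy.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.6, 0.4, 0.7, 0.3, 0.2]
auc = roc_auc(labels, scores)
print(round(auc, 3))  # 0.778
```

Seven of the nine positive/negative pairs are ranked correctly here, giving 7/9.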

How to get started

Knowledge background

- Python: a popular programming language for deep learning, used extensively in the field for tasks such as data manipulation, preprocessing, and training models.

- Machine learning: understanding the principles and concepts of machine learning is important for developing deep learning models, as it helps to identify appropriate models, evaluate their performance, and make modifications to improve them.

- "Classical" approaches: knowledge of classical approaches such as linear regression, logistic regression, and decision trees helps in understanding the fundamentals of deep learning and in developing more complex models. It also helps in selecting appropriate preprocessing techniques and handling missing data.

Recommended framework

High level approaches

BioImage Model Zoo

thanks!

https://slides.com/delestro/ann-unesp
