End-to-End Differentiable Simulations of Particle Accelerators

D. Vilsmeier

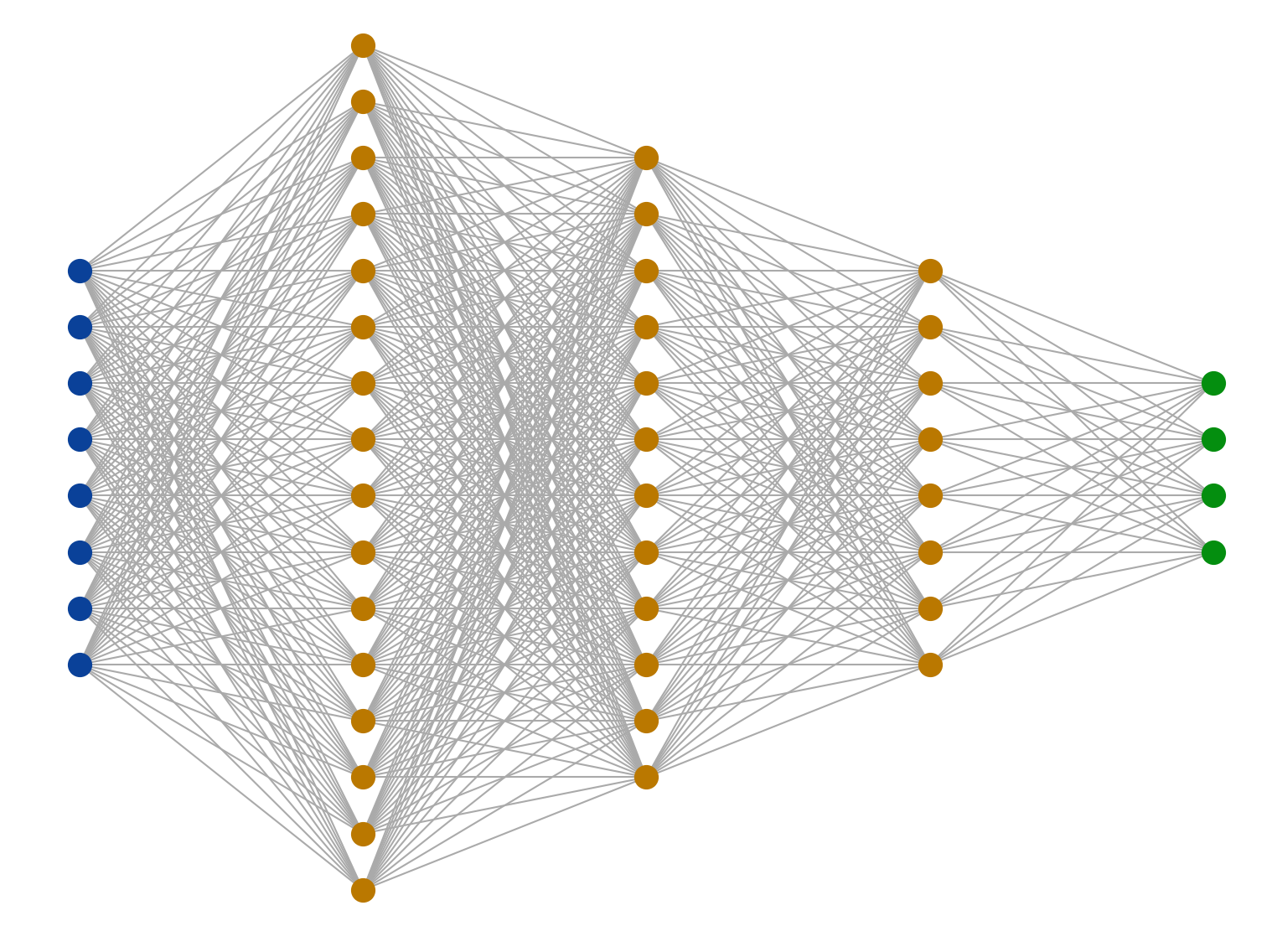

Artificial Neural Networks

Example: "Fully connected, feed-forward network"

Input Layer

"Hidden" Layers

Output Layer

Transformation per layer

Bias term

Weight matrix

(Non-)linear function ("activation")

Input data

Prediction
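The labels above describe the per-layer transformation, which is commonly written as output = σ(W · input + b). The following is a minimal sketch of that form in plain Python; the network shape, the parameter values and the choice of `tanh` as activation are assumptions made purely for illustration.

```python
import math

def dense_layer(x, weights, bias, activation=math.tanh):
    """Apply one layer: activation(W @ x + b), written with plain lists."""
    return [
        activation(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i)
        for row, b_i in zip(weights, bias)
    ]

def feed_forward(x, layers):
    """Chain the layers: the output of one layer is the input of the next."""
    for weights, bias in layers:
        x = dense_layer(x, weights, bias)
    return x

# Hypothetical 2 -> 3 -> 1 network with hand-picked (untrained) parameters.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2]),                                 # output layer
]
prediction = feed_forward([1.0, 2.0], layers)
print(prediction)
```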

- Used for mapping complex, non-explicit relationships

- E.g. image classification, translation, text / image / video generation, ...

- Data-driven algorithms

Optimization of network parameters

"Supervised Learning" using samples of input and expected output

Sample → Forward pass → Prediction

Prediction quality is measured by comparing with the expected sample output, e.g. via the mean squared error (MSE)

Minimization of the loss by tuning the network parameters → Optimization problem

Gradient-based parameter updates using (analytically) exact gradients via the backpropagation algorithm
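The training loop described above can be sketched for the smallest possible "network", a single linear unit. The model, the synthetic samples and the learning rate are assumptions for illustration; the gradient is written out by hand here, which is the step that backpropagation automates for deeper networks.

```python
def predict(w, b, x):
    """Forward pass of a one-parameter-pair model."""
    return w * x + b

def mse_and_gradients(w, b, samples):
    """Mean squared error and its exact derivatives w.r.t. w and b."""
    n = len(samples)
    loss = grad_w = grad_b = 0.0
    for x, y in samples:
        residual = predict(w, b, x) - y
        loss += residual**2 / n
        grad_w += 2.0 * residual * x / n
        grad_b += 2.0 * residual / n
    return loss, grad_w, grad_b

# Synthetic samples of (input, expected output) generated from y = 3x - 1.
samples = [(x, 3.0 * x - 1.0) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

w, b = 0.0, 0.0
learning_rate = 0.1
for step in range(500):
    loss, grad_w, grad_b = mse_and_gradients(w, b, samples)
    w -= learning_rate * grad_w  # gradient descent update
    b -= learning_rate * grad_b

print(w, b, loss)
```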

Dataflow & Differentiable Programming

Example: Particle Tracking

Example: Linear optics

Transfer Matrix

- Tracking through one element is analogous to a neural network layer (b = 0, σ = id)

- Weights are given and constrained by the transfer matrix

- Whole beamline is analogous to a feed-forward network

@ Entrance

@ Exit
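The analogy between a beamline and a feed-forward network can be made concrete with 2x2 transfer matrices acting on (x, x'). This is a sketch under assumptions: the three-element beamline, the thin-lens quadrupole model and all numbers are chosen for illustration and are not taken from the slides.

```python
def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]

def track(matrix, state):
    """Map (x, x') at the entrance of an element to (x, x') at its exit."""
    x, xp = state
    return (matrix[0][0] * x + matrix[0][1] * xp,
            matrix[1][0] * x + matrix[1][1] * xp)

def drift(length):
    return [[1.0, length], [0.0, 1.0]]

def thin_quadrupole(focal_length):
    return [[1.0, 0.0], [-1.0 / focal_length, 1.0]]

# Hypothetical beamline: each element plays the role of one "layer".
beamline = [drift(1.0), thin_quadrupole(2.0), drift(1.0)]

state = (1e-3, 0.0)  # x = 1 mm, x' = 0 at the entrance
for element in beamline:
    state = track(element, state)

# Equivalent single matrix: later elements multiply from the left.
total = [[1.0, 0.0], [0.0, 1.0]]
for element in beamline:
    total = matmul(element, total)

print(state, track(total, (1e-3, 0.0)))
```

Element-by-element tracking and the combined matrix give the same result, just as composing the layers of a network gives one overall map.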

Example: Particle Tracking

Example: Quadrupole

Tracking as computation graph

Express as combination / chain of elementary operations

Output / prediction

Input

(Elementary) operation

Parameter of element

Layout as computation graph (keeping track of operations and values at each stage)
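As a sketch of this decomposition, the exit position of a focusing quadrupole can be written as x_out = cos(√k·L)·x + sin(√k·L)/√k·x' and split into one elementary operation per line. The formula is the standard thick-quadrupole result; that it is the one used on the slide, and the input values k = 0.1, L = 1.0, x = 0.05, x' = 0.03, are assumptions inferred from the figure annotations.

```python
import math

def quadrupole_position(x, xp, k, length):
    """Exit position of a focusing quadrupole, one elementary operation per line.

    Returns the output and a record of every intermediate value (the graph nodes).
    """
    sqrt_k = math.sqrt(k)             # sqrt of the element parameter
    phase = sqrt_k * length           # multiply
    cos_phase = math.cos(phase)       # cos
    sin_phase = math.sin(phase)       # sin
    inv_sqrt_k = 1.0 / sqrt_k         # reciprocal
    s_term = sin_phase * inv_sqrt_k   # multiply
    from_x = cos_phase * x            # multiply with input x
    from_xp = s_term * xp             # multiply with input x'
    x_out = from_x + from_xp          # add
    nodes = dict(sqrt_k=sqrt_k, phase=phase, cos_phase=cos_phase,
                 sin_phase=sin_phase, inv_sqrt_k=inv_sqrt_k, s_term=s_term,
                 from_x=from_x, from_xp=from_xp, x_out=x_out)
    return x_out, nodes

x_out, nodes = quadrupole_position(x=0.05, xp=0.03, k=0.1, length=1.0)
for name, value in nodes.items():
    print(f"{name:>10s} = {value:.4f}")
```

Storing every intermediate value is what later allows derivatives to be propagated backward through the same graph.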

Figure: computation graph annotated with the intermediate values of the forward pass (output value 0.077)

Figure: computation graph annotated with the forward-pass values and with the derivative values propagated backward from the output (starting from 1.0 at the output node)
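The backward sweep through such a graph can be sketched by hand: each node's derivative is obtained from the derivatives of the nodes that consume it, multiplied by the local derivative of the operation. The quadrupole formula and the input values are assumptions (inferred from the figure annotations), and the result is checked against a central finite difference rather than against the rounded numbers on the slide.

```python
import math

def forward_backward(x, xp, k, length):
    """Return x_out and d(x_out)/dk for a focusing quadrupole via reverse mode."""
    # Forward pass: keep all intermediate values.
    sqrt_k = math.sqrt(k)
    phase = sqrt_k * length
    cos_phase = math.cos(phase)
    sin_phase = math.sin(phase)
    inv_sqrt_k = 1.0 / sqrt_k
    s_term = sin_phase * inv_sqrt_k
    x_out = cos_phase * x + s_term * xp

    # Backward pass: g_<node> = d(x_out)/d(<node>), from output to input.
    g_x_out = 1.0
    g_cos = g_x_out * x                  # x_out = cos_phase * x + ...
    g_s_term = g_x_out * xp              # x_out = ... + s_term * xp
    g_sin = g_s_term * inv_sqrt_k        # s_term = sin_phase * inv_sqrt_k
    g_inv = g_s_term * sin_phase
    g_phase = g_sin * cos_phase - g_cos * sin_phase   # sin and cos branches
    g_sqrt_k = g_phase * length - g_inv / sqrt_k**2   # phase and 1/sqrt_k branches
    g_k = g_sqrt_k * 0.5 / sqrt_k        # sqrt_k = sqrt(k)
    return x_out, g_k

x_out, g_k = forward_backward(x=0.05, xp=0.03, k=0.1, length=1.0)

# Independent check with a central finite difference.
h = 1e-6
fd = (forward_backward(0.05, 0.03, 0.1 + h, 1.0)[0]
      - forward_backward(0.05, 0.03, 0.1 - h, 1.0)[0]) / (2 * h)
print(x_out, g_k, fd)
```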

Why not derive manually?

For long lattices, deriving such derivatives by hand quickly becomes very tedious

Beamline consists of many elements

Tracking in a circular accelerator requires multiple turns

Dependence on lattice parameters typically non-linear

Symbolic differentiation can help to "shortcut"
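The alternative to manual derivation is to let the program record the computation graph and differentiate it automatically. Below is a deliberately tiny reverse-mode sketch; the `Var` class, the thin-lens lattice and all numbers are hypothetical and only illustrate the idea, whereas real work would rely on an established framework. The final position depends non-linearly on the shared strength `k` because the same parameter enters in every cell.

```python
class Var:
    """Scalar node of a computation graph with reverse-mode differentiation."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # tuples of (parent node, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        other = _as_var(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        other = _as_var(other)
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    __radd__ = __add__
    __rmul__ = __mul__

    def backward(self):
        """Accumulate d(self)/d(node) into node.grad for all upstream nodes."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):  # consumers before their inputs
            for parent, local in node.parents:
                parent.grad += node.grad * local

def _as_var(obj):
    return obj if isinstance(obj, Var) else Var(obj)

def track(k, x0, xp0, n_cells, drift_length):
    """Track through n_cells of (thin quadrupole, drift) sharing one strength k."""
    x, xp = Var(x0), Var(xp0)
    for _ in range(n_cells):
        xp = xp + (-1.0) * k * x    # thin-lens kick
        x = x + drift_length * xp   # drift
    return x

k = Var(0.3)
x_final = track(k, 1e-3, 0.0, n_cells=10, drift_length=1.0)
x_final.backward()  # fills k.grad with d(x_final)/dk

def plain(k_value):
    return track(Var(k_value), 1e-3, 0.0, 10, 1.0).value

h = 1e-6
fd = (plain(0.3 + h) - plain(0.3 - h)) / (2 * h)
print(k.grad, fd)
```

No derivative was written by hand for the lattice itself; adding more cells or turns only lengthens the recorded graph.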

Example: Quadrupole tuning

- A 160 m beamline with 21 quadrupoles whose gradients are to be adjusted

- Fulfill multiple goals:

  - Minimize losses along beamline

  - Minimize beam spot size at target

  - Keep beam spot size below threshold at beam dump location

21 Quadrupoles

Target

Beam dump

Envelope

Optimize gradients
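A heavily reduced toy version of such a tuning task is sketched below: one thin quadrupole followed by a drift to the target, with the mean squared beam position at the target as the only loss. The single-quadrupole layout, the four test particles, the units and the learning rate are all assumptions; the real task with 21 quadrupoles, beam losses and the beam dump constraint is not modelled here.

```python
def beam_size_loss(k, particles, drift_length):
    """Mean squared position at the target and its exact derivative w.r.t. k.

    k is the integrated quadrupole strength (1/f); thin-lens kick, then drift.
    """
    n = len(particles)
    loss = grad = 0.0
    for x, xp in particles:
        x_target = (1.0 - drift_length * k) * x + drift_length * xp
        loss += x_target**2 / n
        grad += 2.0 * x_target * (-drift_length * x) / n
    return loss, grad

# Hypothetical uncorrelated test beam, (x in mm, x' in mrad).
particles = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0)]
drift_length = 2.0  # m

k = 0.0  # start with the quadrupole switched off
for _ in range(50):
    loss, grad = beam_size_loss(k, particles, drift_length)
    k -= 0.1 * grad  # plain gradient descent step

print(k, loss)
```

For this toy setup the optimum is k = 1/L, i.e. the focal point sits on the target; further goals would enter as additional terms in the loss.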

Example: Inference of model errors

- Same beamline as before; infer quadrupole gradient errors w.r.t. the model

- Quality metric: mean squared error of simulated vs. measured Trajectory Response Matrix (TRM)

- TRM = orbit response @ BPMs as a function of kicker strengths

beam position @ BPM

kicker strength
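The inference idea can be sketched with a toy response "matrix" of one kicker and two BPMs: kicker, drift, thin quadrupole, drift, BPM. The layout, the distances, the strengths and the synthetic "measurement" (generated from an assumed true strength) are hypothetical stand-ins; a real TRM has many kickers and BPMs and comes from beam measurements.

```python
def response(k, l_kicker, l_bpm):
    """Orbit response d(x at BPM)/d(kick) for kicker, drift, thin quad, drift, BPM."""
    return l_kicker + l_bpm - k * l_kicker * l_bpm

def trm_loss(k, measured, l_kicker, bpm_distances):
    """MSE between simulated and measured responses, with exact derivative w.r.t. k."""
    n = len(measured)
    loss = grad = 0.0
    for r_measured, l_bpm in zip(measured, bpm_distances):
        residual = response(k, l_kicker, l_bpm) - r_measured
        loss += residual**2 / n
        grad += 2.0 * residual * (-l_kicker * l_bpm) / n
    return loss, grad

l_kicker = 1.0              # m, kicker to quadrupole
bpm_distances = [1.0, 2.0]  # m, quadrupole to each BPM
k_model = 0.50              # strength assumed by the model (1/m)
k_true = 0.55               # "real" strength, unknown to the fit

# Synthetic stand-in for the measured response values.
measured = [response(k_true, l_kicker, d) for d in bpm_distances]

k = k_model
for _ in range(100):
    loss, grad = trm_loss(k, measured, l_kicker, bpm_distances)
    k -= 0.1 * grad

inferred_error = k - k_model
print(inferred_error, loss)
```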

What about other optimizers?

- Gradient-Free: e.g. Nelder-Mead, evolutionary algorithms, particle swarm, etc.

- Gradient-Approx.: any derivative-based method, e.g. via finite-difference approximation

- Gradient-Exact: symbolic differentiation or differentiable programming

Balance exploration vs. exploitation of the loss landscape (parameter space)

- Exploration: try out yet unknown locations

- Exploitation: use information about the landscape to perform an optimal parameter update

Gradient-based methods have no "built-in" exploration, but it can be included via varying starting points (global) and learning rate schedules (local)

Additional advantages

- Good convergence properties, also for (very) large parameter spaces

- No need to rely on plain vanilla gradient descent; all sorts of advanced optimizers are available, e.g. RMSprop or Adam

- About two function evaluations per gradient computation (one forward and one backward pass), whereas e.g. a finite-difference approximation needs on the order of N function evaluations for N parameters

- Running on GPU / TPU? Yes!

Deep learning packages provide straightforward ways to switch devices

→ "Batteries included"
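The evaluation-count argument from the list above can be illustrated by counting calls. In this sketch the quadratic loss and its hand-written gradient are placeholders: the hand-written gradient stands in for what a backward pass would deliver, and the choice of 21 parameters merely echoes the 21 quadrupoles of the example beamline.

```python
def finite_difference_gradient(f, params, h=1e-6):
    """Forward-difference gradient; returns (gradient, number of calls to f)."""
    f0 = f(params)
    calls = 1
    grad = []
    for i in range(len(params)):
        shifted = list(params)
        shifted[i] += h          # perturb one parameter at a time
        grad.append((f(shifted) - f0) / h)
        calls += 1
    return grad, calls

def loss(params):
    """Placeholder loss function."""
    return sum(p * p for p in params)

def loss_with_exact_gradient(params):
    """Value plus exact gradient; stands in for one forward and one backward pass."""
    return loss(params), [2.0 * p for p in params]

params = [0.1 * i for i in range(21)]
fd_grad, fd_calls = finite_difference_gradient(loss, params)
value, exact_grad = loss_with_exact_gradient(params)

print("finite-difference evaluations:", fd_calls)
print("largest deviation:", max(abs(a - b) for a, b in zip(fd_grad, exact_grad)))
```

The finite-difference cost grows with the number of parameters, while the cost of the exact gradient stays at roughly a fixed multiple of one function evaluation.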

https://commons.wikimedia.org/wiki/File:Geforce_fx5200gpu.jpg

Not limited to particle tracking or linear optics

High versatility w.r.t. data and metrics

By Dominik Vilsmeier
