Systems Administrator Interview Q's

Describe your career trajectory: how did you begin, and why did you move from position to position?

I started off at USD as a Helpdesk Coordinator, where I managed the helpdesk and coordinated technicians, delegating as needed. This position developed my organizational, technical, and people skills. After 3 months I was promoted to full-time IT administrator for the School of Nursing + Manchester Conference Center, where I improved business operations and streamlined communications by implementing an AD domain, simplified the imaging of lab PCs using multicast, trained end users on PC security, introduced a Sony video conferencing system, and secured lab PCs using Faronics Deep Freeze to snapshot each computer's desired configuration state.

Next I moved to SDSU, where they needed a Systems Analyst (with Windows Server administration + programming experience). There I was responsible for a portion of the SDSU Student Services network, including security, aidlink web applications and databases, servers, backups, user support, and equipment procurement.

I achieved compliance with the SDSU Information Security Plan while overseeing strategic IT planning and department-wide IT infrastructure and operations, aligning IT with CSU priorities, and implementing secure system configurations in accordance with the Campus Info Sec Plan.

I upgraded the Windows infrastructure + servers to x64 Win7 + Server 2008, implemented secure server builds, streamlined vulnerability management processes (with McAfee ePO + CounterSpy spyware scanning), introduced the Solidus VoIP phone system, and improved DR / BC by following a 3-2-1 rule based backup + recovery strategy.

I provided leadership and mentoring to my work-study techs + end users and handled all security incidents (99% of which were false positives).

I optimized and refined departmental business applications: automating and optimizing EDI processes and reporting, scripting Oracle PL/SQL jobs, reducing processing time, troubleshooting software issues, and improving quality control of CBRS (Campus Billable and Receivable Systems), Fee Deferment processing, Waiver Subsidy processing, and the NSLDS Quality Control and Transaction Monitoring systems. I also worked with DBAs on query optimization.

Meanwhile, I continued my self-education in web development.

I went back to Ireland to help out with a family emergency, and while there I worked as an independent IT consultant, primarily for Sherlock Brothers Furniture. I put together a hub-and-spoke (H+S) VPN network (5 locations) using Untangle firewalls, HP switches, and Dell servers, implemented an AD domain, and improved security by upgrading their antiquated CCTV cameras to IP-based Axis security cameras recording to a DVR.

Upon returning to the USA I worked at Align General Insurance underwriters, where I was part of a 2-man IT admin team that took care of everything for the company: Win/Linux/Unix servers, the network (a multi-site WAN/LAN), an RDS server with remote users, FreedomVoice cloud-based VoIP, and P2V (physical-to-virtual) server migrations (VMware + Hyper-V).

I helped design and implement BCP and DRP strategies to insulate the company against events, incidents, and unexpected disasters.

I cultivated an environment of collaboration and efficiency by streamlining office communication with the migration to O365 (from Exchange 2010) and the introduction of FreedomVoice cloud-based VoIP.

I enhanced the company's digital presence with a refactored, modern website built on HTML5, CSS3, PHP, and an Apache web server, with port forwarding, PSAD (Port Scan Attack Detector) IDS using Snort signatures, a Sendmail mail server, Linux BIND DNS name servers, and MySQL database servers, using Git for version control.

In my spare time I kept programming: learning PHP, joining the SDPHP study group, and learning more Linux / DB / web server administration + web app security.

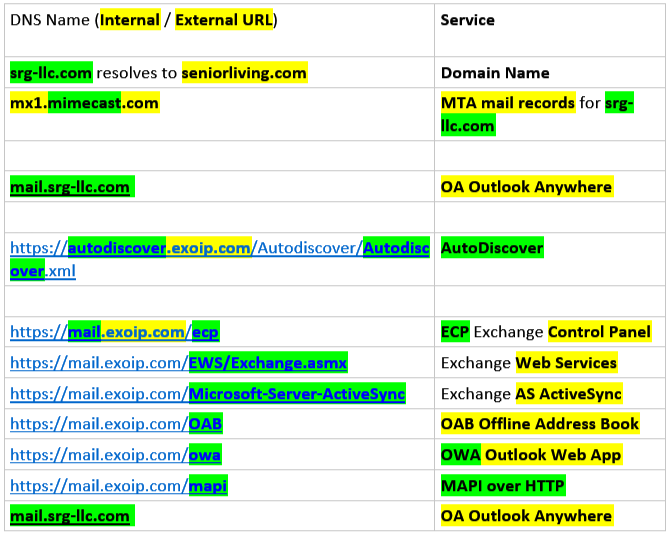

Finally, I progressed to SRG, where I'm part of a 4-person team (2 Directors, and me as Systems Manager over 2 helpdesk analysts) managing the network of a 33-site senior living community (36 firewalls, 600 switches, 800 Wi-Fi APs, 15,000+ data ports).

I deal with the day to day running of systems + engineering of network equipment, supervising vendor mgmt,

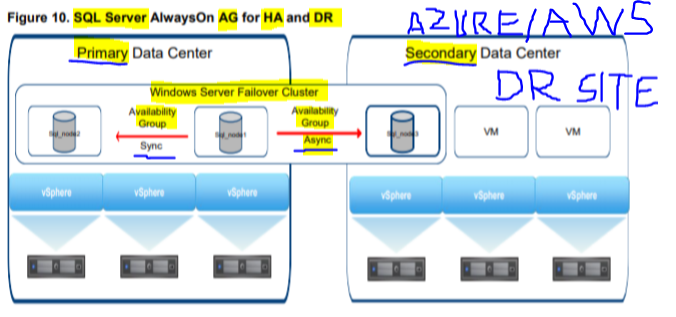

Monitoring our DR + BC systems (DFS, FC SAN, AWS Backup, VMWare virtualized Exchange DAG and SQL Server FCI and AG), maintaining Network Security, handling Incident Response, providing senior management with status, issue, and resourcing reports.

I provide technical leadership + mentoring to our support analysts (Jake + Paul) while supervising the helpdesk, consult on IT architecture solutions, and procure equipment + implement solutions.

I am responsible for, and thoroughly enjoy, company-wide security: ensuring the adoption and implementation of information security best practices with a view to securing assets, educating users, and reducing incidents.

It's been an enjoyable career, and it has brought me to the point where I feel ready to take on a position as an IT Manager.

1 Why are you applying for this position / what interests you about this job? What is your IT Dept Structure?

Working as a Senior IT Admin or IT Manager would be a step up for me. I currently work under 2 IT directors: one supervises contracts (an accountant), while the other controls budgets + tech strategy.

I deal with the day to day running of systems, supervising the Helpdesk, vendor mgmt, net security, and incident response.

I also make equipment purchase recommendations + implement them, and monitor our DR + BC systems.

Plus I will have less of a commute by working locally.

Working as an IT Manager / TAM would represent professional growth, allowing me to tailor technical strategy (BCP + DRP) + controls to manage risk (countering threats + vulnerabilities), control budgets, and manage vendor contracts, while having the final say on all technical decisions.

Working at ScaleMatrix would allow me to grow professionally in a fast-paced DC environment on the latest public + private cloud technologies, HA solutions + load-balanced clusters, and the opportunity to make architectural recommendations + performance upgrades excites me!

1 Why do you want to leave your current position?

I want to leave my current job as I believe I've outgrown the role, having gained more experience + earned my CISSP, VMware, AWS, Server 2016 + Fortinet certs. I'm testing the waters to see what else is out there and am looking for professional growth. I was planning to switch before COVID hit.

1 Tell us about your long-term career goals.

My long-term career goals are to become more specialized in public/private cloud, working on app architecture + security, before growing into a DevSecOps / SRE / IT Manager role.

In the meantime I have plenty of certs to take (CCNA, O365, Azure, Python) and would like to work in a company with a bigger public/private cloud presence and more challenging work.

I got interested in security following a Cryptol attack, and a friend in InfoSec recommended Security+ and the CISSP.

1 Describe your typical day? current workload?

I get in just before 8am and check in with our helpdesk analysts (they work a staggered schedule: one works 7am-4pm and the other 9am-6pm) to see what's going on on the network (any events, incidents, outages, bottlenecks, contention, or latency).

There'll usually be some device flagged as down (often a false positive), so I'll jump on that straight away. It could be an issue with the physical network (a firewall, switch, ISP internet service, or damaged cabling) or with our virtual network topology.

I have WhatsUp Gold network monitoring set up to monitor our physical + virtual network, and our team finds its virtual overlay feature useful for pinpointing issues in our network, SAN, storage, Exchange, SQL Server, and SharePoint apps.

We monitor our Ubiquiti wireless network through Ubiquiti's own self-hosted UniFi Controller SDN software, which monitors all the switches and APs on our wireless network.

I'll first solve any urgent tier 3/4 issues that are impacting people's ability to conduct business or that require admin-level changes (permissions/rules) or reconfigurations.

Less urgent issues that require vendor input I deal with throughout the day, often re-creating the problem for root cause analysis.

With all emergencies out of the way, I check the logs from our nightly backups + PowerShell scripting jobs to confirm everything ran as it should and to spot any anomalies worth looking into.

Next I do a daily analysis of service tickets: whether we have met our SLA goal (every ticket looked at within a day) and whether there is anything our analysts may have missed that needs attention.
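The daily SLA check reduces to a small calculation. A minimal Python sketch, assuming tickets can be exported as (opened, first_response) datetime pairs; the export format, the function names, and counting an unanswered ticket as a breach only once a full day has passed are assumptions, not details of our ticketing system.

```python
from datetime import datetime, timedelta

# SLA goal from the text: every ticket looked at within a day.
SLA_WINDOW = timedelta(days=1)

def met_sla(opened, first_response, now):
    """True if the ticket was (or can still be) looked at within SLA_WINDOW."""
    if first_response is None:
        # Nobody has looked at it yet: only a breach once the window has elapsed.
        return now - opened <= SLA_WINDOW
    return first_response - opened <= SLA_WINDOW

def sla_compliance(tickets, now):
    """Percentage of (opened, first_response) pairs that meet the SLA."""
    if not tickets:
        return 100.0
    met = sum(1 for opened, first in tickets if met_sla(opened, first, now))
    return 100.0 * met / len(tickets)

def breaches(tickets, now):
    """Tickets that missed the SLA, for follow-up with the analysts."""
    return [t for t in tickets if not met_sla(t[0], t[1], now)]

if __name__ == "__main__":
    t0 = datetime(2020, 6, 1, 9, 0)
    now = t0 + timedelta(hours=36)
    sample = [
        (t0, t0 + timedelta(hours=2)),   # met
        (t0, t0 + timedelta(hours=30)),  # missed
        (t0, None),                      # still unanswered after 36 hours: missed
        (t0, t0 + timedelta(hours=24)),  # met, exactly on the boundary
    ]
    print(f"{sla_compliance(sample, now):.1f}% within SLA")  # 50.0% within SLA
    print(len(breaches(sample, now)), "tickets to follow up")  # 2 tickets to follow up
```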

As systems manager, I manage + train our analysts, manage staff schedules, vacation time, and on call shifts.

Then I switch over to projects I'm working on such as:

- Researching any new HW or SW we are going to implement.

- Coordinating with our Vendors + Reviewing Vendor support issues (have we got support within SLA).

- Planning the HW replacement cycle (5 years on servers/4 years on PCs) and ordering new equipment.

- Planning the takeover/handoff of new/old communities.

- Collaborating with our IT Dirs on technical decisions and our technical direction. Establishing and improving dept policies, procedures, and guidelines.

After lunch:

I schedule any appointments or meetings as there is less chance I will be pulled onto something else.

Coaching our Analysts + working with remote techs (remote hands) we engage to swap out net equipment.

Our Analysts deal with:

Desktop, laptop, phone connectivity issues.

Replacing parts on malfunctioning PCs under warranty.

Account lockouts + password resets,

Drive mappings

Lobby stations not working

Anything preventing someone from working takes priority!

Describe your monthly workload?

I review and test all system updates monthly before scheduling them through WSUS to install overnight.

I handle all imaging through WDS.

I do a quarterly analysis of analyst perf (tickets closed).

Twice a month I visit geographically dispersed communities for onboarding and training of new employees (security awareness), installation of equipment, site surveys, and maintenance of existing systems (network, telecoms, POS, medical alerting, PBX/phones, AV equipment, security cameras, ADP timeclocks, printers, PCs, Macs).

1 What regional vendors do you use and how do you manage your vendor relationships?

Vendors / services: Fortinet, HP, Dell, Ubiquiti, VMware, MS, Bitdefender, Mimecast, WhatsUp Gold, Cisco, CDW, ADP, DameWare, TeamViewer, GoToMeeting.

ISPs/Telcos (VoIP & data services): ATT, TelePacific, Cox

Cable TV services: ATT, Cox

Phone System: Shoretel, Nortel, NEC, Mitel

The Community Manager / Corp Manager takes care of:

In-House TV: ZeeVee, Thor, AVerKey, Crestron

Where do you get regional techs from? What perms?

We hire regional techs from Field Nation.

1 What are your strong points / What are you good at?

I have great people skills and can articulate problems + solutions to non-technical people in an easy-to-understand manner while building good rapport. I believe good communication delivered in a timely fashion is key to great customer service.

I have great problem-solving, multitasking + analytical ability, and can diagnose problems quickly while getting the user working again.

1 What do you like to work on / find most interesting?

At the minute: public + private cloud services and the innovation happening in that space (SmartNICs), along with web app security, attack surface reduction, network defense, SASE + SD-WAN, identity + ZTNA, MP-BGP EVPN VXLAN, and latency in network connections to the cloud.

1 What's the biggest mistake you've made? How did you recover?

The biggest mistake I made was at SDSU, when I demoted an old file server assuming my boss knew the local admin password, but they didn't! Luckily we had backed up everything on it beforehand, so there wasn't a problem. Now I always make sure to set a known local admin password before removing a server from the domain.

1 How have you handled situations where you have had an excessive work load?

I regularly have situations with an excessive workload. In a situation like that it's essential to prioritize what needs to be solved first, and then good communication between team members and end users is needed to keep everybody in the loop. If a lot of people have the same problem at the same time, a group email comes in handy.

1 Could you give 2 examples of stressful situations you have encountered at work, how you handled them?

A stressful situation for me was back at SDSU, where one of the programs I was responsible for had a logic flaw that corrupted production data during a production run. It couldn't be rolled back, as subsequent programs had already run on that data, so we had to fix the production data manually. Unfortunately, at the same time McAfee threw up a false positive on someone's computer, which always needed to be handled immediately, so it was a matter of juggling competing priorities.

And then of course there was the WorldIsYours ransomware attack, where 1,400 Windows PCs + servers were encrypted because our AD admin account had been compromised, which put our entire team in a stressful situation. We went into lockdown, pulling 16-hour days until we had a functioning network again. It made me fully focus on security + attack surface reduction.

Give an example of where you had a conflict with a colleague and how you handled it?

During my time at Align General, Jose and I had conflicting views on how to fix a VoIP call clarity problem we were having. I was the point of contact with our vendor, FreedomVoice, who had been recommending that we use their tested Dell SonicWall TZ 215 UTM firewall; however, Jose wanted to keep the Untangle NG UTM firewall appliance, as it provided the OpenVPN site-to-site VPN connection to the Untangle firewall in our datacenter.

I had already segmented our VoIP and data traffic onto 2 separate VLANs, so the next logical step for me was to use the vendor-recommended Dell SonicWall appliance and switch to an IPSec site-to-site VPN, as only the open-source firmware provided OpenVPN connectivity. At the end of the day Jose was the senior member and decided to run both firewalls in tandem.

Everyone in the office says Internet does not work. What steps for investigation will you perform?

Ping 8.8.8.8 to see if you can reach Google's public DNS server. If it responds, the WAN path is up, so check DNS resolution next.

If no response, ping the on premise router of your ISP.

If no response, ping the public ip of your router.

If no response, ping the private ip of your router.

If no response, ping your switch.

If no response, ping your DC.

These steps help isolate where the malfunction is so you can perform remediation.
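The checks above amount to walking inward from the Internet until something answers: the fault lies between the first hop that replies and the failed hop just outside it. A minimal Python sketch of that logic; `is_reachable` is a hypothetical callback (in practice it could wrap a single ping), injected so the logic can be exercised without a network, and the hop labels are placeholders.

```python
from typing import Callable, Optional, Sequence, Tuple

# Hops in the order the steps above test them: outermost first.
# The labels are placeholders; substitute real addresses for your site.
HOPS = [
    "8.8.8.8",
    "ISP on-premise router",
    "router public IP",
    "router private IP",
    "switch",
    "domain controller",
]

def isolate_fault(
    hops: Sequence[str], is_reachable: Callable[[str], bool]
) -> Optional[Tuple[Optional[str], Optional[str]]]:
    """Step inward until a hop answers and report where the break is.

    Returns None if the outermost hop answers (the path is fine; check DNS).
    Otherwise returns (nearest_good, nearest_bad): the fault lies between the
    first hop that answered and the failed hop just outside it.
    nearest_good is None if nothing answered at all.
    """
    nearest_bad = None
    for hop in hops:
        if is_reachable(hop):
            return None if nearest_bad is None else (hop, nearest_bad)
        nearest_bad = hop  # this hop failed, so move one step closer
    return (None, nearest_bad)

if __name__ == "__main__":
    up = {"router private IP", "switch", "domain controller"}
    print(isolate_fault(HOPS, lambda hop: hop in up))
    # ('router private IP', 'router public IP'): the break is on the router's WAN side
```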

What are the benefits of using a UPS versus a PDU?

A UPS has a battery and provides near-instantaneous protection from input power interruptions, by supplying energy stored in batteries. It provides emergency power to your devices for a short period of time when an outage occurs and allows you to gracefully power down your devices.

A PDU is an industrial-grade power strip used to remotely monitor, manage, and protect the flow of power to your devices. It can have built-in switching capability, which allows you to toggle power on and off to specific outlets.

PDUs are used to multiply the number of power outlets available and to protect devices that don't have redundant power supplies (single-power-cord devices).

Generally you use both together, chained mains -> UPS -> PDU. Variations include:

- Bypassing the UPS altogether, with a secondary power line running directly from the mains supply to the PDU.

- Dual UPSs with separate mains lines.

- One UPS fed by dual mains lines.
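How long a UPS's "short period" of emergency power lasts depends on battery capacity and load. A back-of-the-envelope Python sketch; the 90% inverter efficiency default and the example figures are assumptions, and real runtimes should come from the manufacturer's runtime charts because battery discharge is not linear.

```python
def ups_runtime_minutes(battery_wh: float, load_w: float, inverter_efficiency: float = 0.9) -> float:
    """Rough runtime estimate: usable battery energy divided by the load.

    Ignores battery age, temperature and non-linear discharge, so treat
    the result as an optimistic upper bound.
    """
    if load_w <= 0:
        raise ValueError("load must be positive")
    if not 0 < inverter_efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return battery_wh * inverter_efficiency / load_w * 60

def enough_for_shutdown(battery_wh: float, load_w: float, shutdown_minutes: float) -> bool:
    """True if the estimated runtime covers a graceful shutdown of the attached kit."""
    return ups_runtime_minutes(battery_wh, load_w) >= shutdown_minutes

if __name__ == "__main__":
    # Hypothetical figures: 1,000 Wh of battery feeding a 900 W rack.
    print(f"{ups_runtime_minutes(1000, 900):.0f} minutes")      # 60 minutes
    print(enough_for_shutdown(1000, 900, shutdown_minutes=15))  # True
```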

2 Draw out your network diagram? What software do you use for creating network diagrams?

How do you manage your network documentation?

How do you handle late night equipment upgrades / patching.

2.1 Describe how you setup your Firewalls to give internet access to different subnets/VLANs?

2.1 Describe the use of VLANs in your environment? Describe how you configure VLANs?

2.1 What type of Routing have you configured for your network?

1 Describe your current network environment?

We have a Hub-and-Spoke (H+S) VPN topology (in a 2-tier architecture) providing redundant connectivity from each of our 33 communities back to our Head Office. It runs over IPSec VPNs on Fortinet FTGW 60E NGFW devices (FortiOS 6.0), configured with fully redundant route-based IPSec VPNs with Link Health Monitors + floating static routes over dual WAN interfaces from different ISPs at both the hub and spoke FTGWs, providing redundant HA connectivity between our Head Office DC hub and our remote spoke senior living communities.

The Link Health Monitors configured on the IPSec VPN static routes rapidly detect when a link goes down and trigger a fast failover to the backup IPSec VPN running on our secondary WAN circuit.

With our FortiGuard security subscription services + UTM advanced threat protection, we get automated protection against today's sophisticated threats, including 24/7 firewall support, application control, advanced threat protection, IPS, VPN, web filtering, and anti-spam, all from one device that's easy to deploy and manage.

For regular internet traffic:

I provide load-balanced internet access from each community using ECMP (Equal Cost Multipath) routing over dual WAN interfaces for redundancy. The interfaces use a weighted load balancing algorithm that distributes regular internet traffic in a 70/30 split, because our primary ATT WAN interface has more bandwidth and is less expensive than our secondary TelePacific WAN interface, which is primarily used for VoIP phone service.
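A session-based 70/30 split can be illustrated by hashing each session's 5-tuple onto weighted buckets, which keeps every packet of a session on one WAN link while spreading sessions roughly by weight. This is a simplified Python model, not FortiOS's internal algorithm; the interface names and addresses are hypothetical.

```python
import hashlib
from collections import Counter

# Hypothetical interface names with the 70/30 weights described above.
WAN_WEIGHTS = {"wan1_att": 70, "wan2_telepacific": 30}

def pick_wan(src_ip, dst_ip, src_port, dst_port, proto="tcp", weights=WAN_WEIGHTS):
    """Map a session's 5-tuple to a WAN link in proportion to the link weights."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{proto}".encode()
    # First 8 bytes of SHA-256 as an integer, reduced to a bucket in [0, total weight).
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % sum(weights.values())
    for wan, weight in weights.items():  # dicts keep insertion order
        if bucket < weight:
            return wan
        bucket -= weight
    raise RuntimeError("unreachable: bucket is always below the total weight")

if __name__ == "__main__":
    sessions = [("10.5.0.20", f"198.51.100.{i % 250}", 40000 + i, 443) for i in range(10_000)]
    print(Counter(pick_wan(*s) for s in sessions))  # roughly 7,000 vs 3,000
```

Hashing rather than round-robin matters for NAT'd internet traffic: a session that switched links mid-stream would appear from a different public IP and break.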

Back on the H+S VPN Topology, the fully redundant Route based IPSec VPN connections concentrate together at our Hub VPN Concentrator (a FTGW 600E unit) from the spoke remote VPN Gateways (a mix of FTGW 60Es, 90Ds, previously 110Cs) in each community.

The VPN Concentrator is a single, central FortiGate unit that acts as a focal point, coordinating communication between the IPSec VPN interfaces coming in from the remote spoke networks; all VPN tunnels terminate at the hub's VPN Concentrator.

For IPSec connectivity:

I configure the primary IPSec VPN with a static route exiting our HQ FTGW over WAN1 on our ATT Business Fiber link and entering our branch FTGWs on WAN1. I configure the secondary IPSec VPN with a static route exiting our HQ FTGW over WAN2 on our TelePacific link and entering our branch FTGWs on WAN2.

I set both IPSec VPN static routes with the same administrative distance (AD), but give the Primary IPSec VPN's static route a higher priority (a lower priority value). This way the Primary IPSec VPN handles all of our VPN traffic while the Secondary IPSec VPN's static route stays active in the routing table. Keeping that route in the routing table keeps the secondary IPSec VPN interface up and available to respond to Link Health Monitor pings, yet no traffic from the LAN or DMZ traverses this VPN unless the primary route fails out of the routing table. This configuration facilitates fast failover.

When there are multiple routes to the same destination with the same distance then the priority is checked, and the route with the most priority (lowest number) is given preference.

I put both IPSec VPN Interfaces in a VPN Zone in order to avoid duplication of Security Policy and to ensure seamless failover between IPSec VPNs.

I configure Link Health Monitors on the static routes of the Primary IPSec VPN that radiate out to each remote VPN gateway at the different communities. The Link Health Monitors control failover from the active static route of the Primary IPSec VPN to the passive static route configured for the Secondary IPSec VPN.

I have added blackhole routes for the subnets reachable over our IPSec VPNs to ensure that if a VPN tunnel goes down, unencrypted traffic is not mistakenly routed to our ISPs or the Internet. The blackhole routes are given the highest AD + lowest priority, making them the last routes hit when all other routes fail!

These blackhole routes are not factored into our ECMP load balancing for regular (non-VPN) internet traffic; they are configured purely as a failsafe should the static routes (WAN1 + WAN2) used by our IPSec VPNs to the private networks behind the remote VPN gateways fail.

This configuration avoids a SPOF (of having only one WAN link) and provides availability through rapid failover from one route to another if the primary WAN circuit goes down, thanks to the Link Health Monitors. It maintains a high IPSec security standard in transit using AES-256, the strongest AES key size, and if both WAN circuits fail simultaneously, our routes over the IPSec VPNs fail safe to the blackhole routes, preventing our traffic from leaving our network unencrypted!
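The route selection described above (lowest distance first, then lowest priority value, with the blackhole route as the last resort) can be modelled in a few lines. A simplified Python sketch, not FortiOS code; the route names, the distance of 10 and the priority values are hypothetical, and 254 is an assumed value standing in for the "highest AD" given to the blackhole route.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class StaticRoute:
    name: str
    distance: int    # administrative distance: lower is preferred
    priority: int    # tie-breaker for equal distances: lower value is preferred
    up: bool = True  # set False when the link health monitor withdraws the route

def active_route(routes: List[StaticRoute]) -> Optional[StaticRoute]:
    """Return the route carrying traffic: lowest (distance, priority) among routes still up."""
    candidates = [r for r in routes if r.up]
    return min(candidates, key=lambda r: (r.distance, r.priority)) if candidates else None

if __name__ == "__main__":
    routes = [
        StaticRoute("vpn-primary (WAN1)", distance=10, priority=0),
        StaticRoute("vpn-secondary (WAN2)", distance=10, priority=10),
        StaticRoute("blackhole", distance=254, priority=100),
    ]
    print(active_route(routes).name)  # vpn-primary (WAN1)
    routes[0].up = False              # link health monitor fails the primary tunnel
    print(active_route(routes).name)  # vpn-secondary (WAN2)
    routes[1].up = False              # both WAN circuits down
    print(active_route(routes).name)  # blackhole: traffic is dropped, never sent unencrypted
```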

For additional security I have segmented our network out into logically separate VLANs and use Policy based Routing to control the flow of information between the VLANs and what can get out to the internet.

With all of these in place Traffic can pass from our private networks (LAN/VLAN/DMZ) in the Corporate Head Office / Datacenter and remote Senior Living Communities to the other private networks in either the Corporate Head Office / Datacenter or remote Senior Living Communities.

Communication between remote private networks is facilitated by hairpinning: traffic from one remote private network goes back through the hub before being directed out to the corresponding remote spoke VPN gateway en route to the private network of a different community.

A spoke isn't necessarily a FTGW; it could be VPN client software, a Cisco device with a GRE VPN, or a Windows device with an L2TP VPN.

Our switching infrastructure consists of a mixture of HP 1820 48-port, 24-port (half PoE ports for phones), and 8-port switches, along with some legacy ProCurve switches. We make extensive use of VLANs to segment our corporate business, VoIP, and protected server networks, while segregating our Web Application Proxy / ADFS Proxy onto our authenticated DMZ and isolating our insecure Guest Wifi network onto our unauthenticated DMZ.

For our Wifi we use Ubiquiti EdgeSwitch XP switches with passive PoE (formerly ToughSwitch) and Ubiquiti UAP-AC-PRO and UAP-AC-LR access points for both our Corp and Guest Wifi networks, each running on a separate VLAN.

In each community:

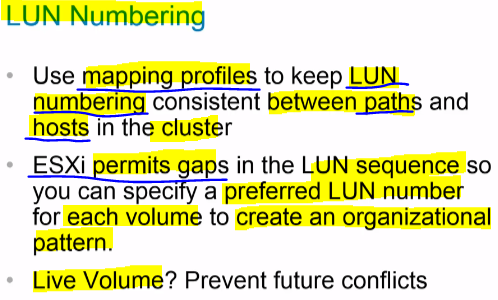

we have dual Dell PowerEdge R720 servers (two 500GB SATA drives in a RAID1 config for the C drive and five 1TB SAS disks in a RAID10 config for the data drive), which are VMware ESXi 6 hosts for our Windows Server 2016 DCs.

On these we use AD Group Policy + DA (Desktop Authority) for configuration management. Both servers are powered through Eaton UPSs, so they have backup power should there be an outage.
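The usable capacity of the arrays in each community's servers follows from the RAID level: a two-disk RAID1 mirror yields one disk's capacity, and RAID10 yields half its disks. A small Python sketch that ignores formatting and controller overhead. A conventional RAID10 array stripes across mirrored pairs and so needs an even number of disks; with five disks, the fifth would typically be a hot spare, but that is an assumption, not something stated above.

```python
def usable_capacity_gb(level: str, disks: int, disk_gb: float) -> float:
    """Usable capacity for the two RAID levels used above, ignoring overhead."""
    if level == "RAID1":
        if disks != 2:
            raise ValueError("this sketch models RAID1 as a two-disk mirror")
        return disk_gb
    if level == "RAID10":
        if disks < 4 or disks % 2:
            raise ValueError("RAID10 needs an even number of disks (minimum 4)")
        return disks // 2 * disk_gb
    raise ValueError(f"unsupported RAID level: {level}")

if __name__ == "__main__":
    print(usable_capacity_gb("RAID1", 2, 500))    # 500 (C drive)
    print(usable_capacity_gb("RAID10", 4, 1000))  # 2000 (data drive, assuming the fifth disk is a spare)
```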

All communities have an internet lounge with 2 to 3 workstations and a printer.

All communities have a segmented corporate network running on its own Vlan.

All communities have Kiosk training computers where they can complete compliance based training courses for health care workers.

Back at Headquarters:

We house the majority of our VMware (vCenter) virtualized corporate server infrastructure, SAN, FC fabric, and Dell SC8000 storage array in our purpose-built DC, which has dual mains lines + an FM-200 fire suppression system.

We use Windows DFS (Distributed File System) to replicate community files back to our head office, where they are backed up using block-level replication.

We use WDS for building / capturing images (with driver injection) and deploying images (WIM) via PXE, and WSUS for scheduling patching (once a month) + reporting.

For Asset Management and tracking we used Cisco’s Meraki Systems Manager.

Tell us about your experience with network switches?

Our switching infrastructure consists of a mixture of HP 1820 48-port, 24-port (half PoE ports for phones), and 8-port switches, along with some legacy ProCurve switches. We make extensive use of VLANs to segment our corporate business, VoIP, and protected server networks, while segregating our Web Application Proxy / ADFS Proxy onto our authenticated DMZ and isolating our insecure Guest Wifi network onto our unauthenticated DMZ.

In the past I have had to combine the Voice VLAN feature + PoE to achieve VoIP passthrough in certain communities, using one ethernet cable to supply voice + data + power to workstations, either because ethernet cabling is limited or because running new cabling is prohibitively expensive.

We use Yealink T42G VoIP phones, which have an integrated dual-port Gigabit ethernet switch with Voice VLAN functionality and are powered via 802.3af PoE (or a local PoE injector), to deliver voice, data, and power over a single cable at troublesome collocated workstations.

We use PoE injectors to power the VoIP phones where our main switch doesn't supply PoE or doesn't supply the correct voltage (this saves the cost of buying a new 48-port switch that supplies the correct PoE).

The Voice VLAN feature lets me send Voice VLAN tagged traffic to the VoIP phone's dual-port Gigabit ethernet switch, while the access port passes untagged data traffic on the default VLAN through to the client PC. This avoids using a trunk port and the native VLAN, with their drawbacks, to accomplish the same task.
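On the wire, "Voice VLAN tagged" means each voice frame carries a 4-byte IEEE 802.1Q tag after the source MAC address, while the PC's data frames on the default VLAN carry none. A minimal Python sketch that builds and parses that tag; the priority value of 5 for voice is a common convention used here as an assumption, not a setting taken from our switches.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame

def dot1q_tag(vlan_id: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag inserted after the source MAC address.

    TCI layout: 3-bit Priority Code Point | 1-bit Drop Eligible Indicator | 12-bit VLAN ID.
    """
    if not 1 <= vlan_id <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("pcp must be 0-7 and dei 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)

def parse_dot1q(tag: bytes):
    """Return (vlan_id, pcp) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci & 0x0FFF, tci >> 13

if __name__ == "__main__":
    # Voice frames on the VoIP VLAN (VLAN6) with an assumed voice priority of 5.
    print(dot1q_tag(6, pcp=5).hex())  # 8100a006
```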

VLAN1 - LAN / Default VLAN - untagged by default

VLAN51 - Native VLAN for Legacy Equip, blocked from other VLANs - tagged

VLAN52 - MGMT VLAN - Net Equip Mgmt VLAN - tagged

VLAN2 - Faux VLAN - for unused ports - tagged - FW Policy blocks this from going anywhere

VLAN5 - SRG Business Network - tagged

VLAN6 - VoIP Network - tagged

VLAN10 - Office Guest Internet Network (wired) - tagged

VLAN11 - Training Network - tagged

VLAN20 - VMW Management traffic

VLAN21 - VMW vMotion Network

VLAN22 - WSFC Heartbeat Network + AG Cluster Replication traffic (ethernet VLAN)

VLAN23 - VMW FT logging for vSphere fault tolerance

VLAN40 - Ubiquiti Mgmt Net (DMZ port) - tagged

VLAN41 - Guest Wifi + Internet Lounge Computers (DMZ port) - tagged

VLAN42 - IoT / Camera Network - tagged

2.2 Describe the controls you have put in place to secure Guest Wifi access + IoT devices? How do you manage your Unifi Controller / Network?

I have set up + administer a Ubiquiti UniFi SDN Wifi network for 33 senior living communities, consisting of 1 cloud-based UniFi Controller, 200 Ubiquiti ToughSwitches, and 600 Ubiquiti WAPs (UniFi AP AC PRO / LR).

I segment our Guest Wifi (VLAN41) and IoT device (VLAN42) networks into their own DMZ, with dedicated Ubiquiti ToughSwitch switching equipment, to completely separate insecure traffic onto its own infrastructure + VLANs. This allows better bandwidth management with QoS, ensures Ubiquiti PoE devices receive the correct PoE voltage, and protects us from VLAN hopping attacks.

VLAN40 Ubiquiti Mgmt Net / VLAN41 G_Wifi / VLAN42 IOT

VLAN40 is used to centrally manage all Ubiquiti devices from our AWS-hosted UniFi SDN Controller, which is itself hosted in an isolated AWS account and is only contactable from the public IP addresses of our corporate firewalls. This creates maximum separation from our corporate systems + network, ensuring their security.

For the Ubiquiti switches + WAPs in the different communities to receive their configurations from, and be managed by, the UniFi Controller in AWS, I have configured firewall security policies to allow communication from VLAN40 through the WAN interfaces to the UniFi Controller in AWS.

I created a custom UniFi profile on the UniFi Controller to deliver both VLAN40 + VLAN41 + PoE to Ubiquiti switches + WAPs in hard-to-reach places, and I modified the flat UniFi profile for the IoT/Camera network to deliver only VLAN42 + PoE to the endpoint devices connected to it.

Custom Unifi profile (VLAN40 + VLAN41)

The custom profile was necessary here because I was implementing a non-standard configuration where I needed to pass 2 VLANs out a trunk port while also configuring the port to send PoE to other Ubiquiti switches and Ubiquiti WAPs.

VLAN1 Default VLAN / Native VLAN:

For security reasons I move the Native VLAN to our Faux VLAN2 to ensure no untagged traffic travels on our net.

VLAN40 for the Ubiquiti Mgmt net (Switches + WAPs)

VLAN41 for the Guest Wifi + Internet Lounge network

Our WAPs (UAP HD Access Points) are configured to dispense IP Addresses in separate DHCP ranges according to the VLAN that SSID is configured to run on.

PoE:

I also use the custom UniFi profile to supply PoE to WAPs + IoT/camera devices, so separate power lines don't need to be run to hard-to-reach places and the devices can easily be rebooted by bouncing their switch port.

To power our Yealink T42G VoIP phones, I enable Active PoE (802.3af/A) / PoE+ (802.3at/A, 25.5 watts), which lets the two devices auto-negotiate the proper power and pin usage for the transfer of electrical power, provided Active PoE is supported on both ends.

PoE passthrough switches can operate as both a Powered Device (PD) and Power Sourcing Equipment (PSE).

This means the switch can be powered by PoE while simultaneously providing PoE to other devices such as IP phones or wireless access points (WAPs).
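Whether a switch (or a PoE passthrough switch) can power everything plugged into it is a simple budget sum. A Python sketch; the per-port maximums of 15.4 W (802.3af) and 30 W (802.3at) are the PSE-side figures from the IEEE standards (the 25.5 W quoted above is what an 802.3at device can draw after cable loss), while the switch budget and device draws in the example are hypothetical.

```python
# Maximum power per port at the power sourcing equipment (PSE).
PSE_MAX_W = {"802.3af": 15.4, "802.3at": 30.0}

def poe_budget(switch_budget_w, devices):
    """Compare a switch's PoE budget with the attached devices.

    `devices` is a list of (name, standard, expected_draw_w) tuples.
    The worst case reserves each port's PSE maximum; the expected figure
    uses measured or datasheet draws.
    """
    worst_case = sum(PSE_MAX_W[std] for _, std, _ in devices)
    expected = sum(draw for _, _, draw in devices)
    return {
        "worst_case_w": round(worst_case, 1),
        "expected_w": round(expected, 1),
        "fits_worst_case": worst_case <= switch_budget_w,
        "fits_expected": expected <= switch_budget_w,
    }

if __name__ == "__main__":
    # Hypothetical: 8 phones drawing about 5 W each and 2 WAPs drawing about 9 W each.
    devices = [(f"phone{i}", "802.3af", 5.0) for i in range(8)]
    devices += [(f"wap{i}", "802.3at", 9.0) for i in range(2)]
    print(poe_budget(120, devices))
    # {'worst_case_w': 183.2, 'expected_w': 58.0, 'fits_worst_case': False, 'fits_expected': True}
```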

I have configured security policies to:

- Allow inbound/outbound traffic on TCP port 8080 for the inform protocol, only from the communities' public WAN IP addresses, after I have adopted new UniFi devices via the Ubiquiti Cloud Access portal.

- Allow inbound + outbound traffic on UDP port 3478 for the STUN protocol, only from the communities' public WAN IP addresses. STUN allows the UniFi controller to initiate contact with UniFi devices, so it doesn't have to wait for a WAP to send an inform packet before communication can begin, which speeds the entire process up.
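The intent of these two policies is a default-deny allowlist keyed on source address and service. A Python sketch of that logic using the standard `ipaddress` module; the community WAN addresses are hypothetical documentation-range values, and where the rule is actually enforced (firewall policy or cloud security group) is not modelled.

```python
import ipaddress

# Hypothetical community WAN addresses (documentation ranges), not real ones.
COMMUNITY_WAN_NETS = [ipaddress.ip_network(n) for n in ("203.0.113.10/32", "198.51.100.0/29")]

# Services from the policies above: TCP 8080 (inform) and UDP 3478 (STUN).
ALLOWED_SERVICES = {("tcp", 8080), ("udp", 3478)}

def controller_allows(src_ip: str, proto: str, dst_port: int) -> bool:
    """Default deny: permit only the listed services from community WAN addresses."""
    src = ipaddress.ip_address(src_ip)
    from_community = any(src in net for net in COMMUNITY_WAN_NETS)
    return from_community and (proto.lower(), dst_port) in ALLOWED_SERVICES

if __name__ == "__main__":
    print(controller_allows("203.0.113.10", "tcp", 8080))  # True: inform from a community
    print(controller_allows("198.51.100.5", "udp", 3478))  # True: STUN from a community
    print(controller_allows("192.0.2.50", "tcp", 8080))    # False: unknown source
    print(controller_allows("203.0.113.10", "tcp", 22))    # False: service not allowed
```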

FW Policy blocking IOT use of its own insecure DNS:

The order of the rules is important here: the more specific rule must sit higher in the list so it is matched before the broader rule below it.

DNS traffic from any source is allowed in to VLAN42, so the default FortiGate DNS server is able to communicate with VLAN42. Directly after this rule, DNS traffic from any source is blocked to all interfaces, preventing external DNS sources from being used.

You always want to use your own DNS servers.
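Because policies are evaluated top-down and the first match wins, swapping these two DNS rules would block the FortiGate's DNS as well. A simplified Python model of first-match evaluation, assuming the intent is that IoT devices may only query the FortiGate's own DNS; the VLAN42 subnet (10.42.0.0/24), the gateway address and the rule names are hypothetical, and the final implicit deny mirrors a FortiGate's default behaviour.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

@dataclass
class Rule:
    name: str
    src: str             # source network (CIDR)
    dst: Optional[str]   # destination network (CIDR); None means any
    dst_port: int
    action: str          # "accept" or "deny"

# Hypothetical addressing: VLAN42 is 10.42.0.0/24 and the FortiGate's
# VLAN42 interface, which answers DNS, is 10.42.0.1.
RULES = [
    Rule("IoT to FortiGate DNS", "10.42.0.0/24", "10.42.0.1/32", 53, "accept"),
    Rule("Block all other DNS", "0.0.0.0/0", None, 53, "deny"),
]

def evaluate(rules, src_ip, dst_ip, dst_port, default="deny"):
    """Return the action of the first matching rule, else the implicit default."""
    src, dst = ipaddress.ip_address(src_ip), ipaddress.ip_address(dst_ip)
    for rule in rules:
        if (src in ipaddress.ip_network(rule.src)
                and (rule.dst is None or dst in ipaddress.ip_network(rule.dst))
                and dst_port == rule.dst_port):
            return rule.action
    return default

if __name__ == "__main__":
    print(evaluate(RULES, "10.42.0.50", "10.42.0.1", 53))        # accept
    print(evaluate(RULES, "10.42.0.50", "8.8.8.8", 53))          # deny
    print(evaluate(RULES[::-1], "10.42.0.50", "10.42.0.1", 53))  # deny: wrong order
```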

Securely Adopting Remote Unifi devices over the public internet

Finally, with our UniFi Controller set up + configured for management via the Ubiquiti Cloud Access portal at unifi.ubnt.com, we can securely adopt our remote UniFi devices over the internet using:

- The UniFi Cloud Access Portal at https://unifi.ui.com/

- The Cloud Access Mgmt feature (which we temporarily enabled when configuring our UniFi Controller).

- A computer on the same VLAN as the UniFi devices, accessing the UniFi Cloud Access Portal via a Chrome browser with the Ubiquiti Discovery tool plugin installed.

The Ubiquiti Discovery tool plugin allows the UniFi Cloud Access Portal to discover any locally available, unmanaged UniFi devices and adopt them once we log in with our Ubiquiti SSO account, with 2FA ensuring secure authentication.

Using the Ubiquiti Chrome Plugin to adopt WAPs:

Mitigates the inherent risks of remote L3 adoption of UniFi devices, and is a far more secure process than allowing direct web access to the UniFi controller using the unencrypted, insecure inform protocol, because

TLS is used to encrypt the communication between the laptop (same VLAN) and the UniFi Cloud Access Portal,

meaning the unencrypted inform protocol is only exposed locally and never travels over the internet until secure encryption keys have been established and the adoption process is complete.

What type of perf / net monitoring do you have? What features do you like about it?

I introduced WhatsUp Gold network monitoring to provide real-time alerting of network issues. It is also a log management tool with advanced visualization features that enable our team to pinpoint + remedy network anomalies, minimizing downtime for optimal availability while lowering MTTR.

It provides application monitoring, network traffic analysis, discovery, and network + virtual environment monitoring.

Nagios network monitoring is cheaper but more complicated to install; it monitors switches, routers, servers, and apps using SNMP.
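Nagios checks are small plugins that report their result through the exit code (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN) plus a one-line status message, optionally followed by performance data after a `|`. A minimal sketch of the threshold logic such a plugin might use; the metric name and thresholds are hypothetical.

```python
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def check_threshold(value, warn, crit, metric="value"):
    """Map a measurement to a Nagios state (higher values are worse).

    Returns (exit_code, status_line) with perfdata in label=value;warn;crit form.
    """
    if value is None:
        return UNKNOWN, f"UNKNOWN - {metric} could not be read"
    if value >= crit:
        state = CRITICAL
    elif value >= warn:
        state = WARNING
    else:
        state = OK
    return state, f"{LABELS[state]} - {metric} is {value} | {metric}={value};{warn};{crit}"
```

A real plugin would print the status line and call `sys.exit(state)` so Nagios can read the exit code; for example an interface at 87% utilization with `warn=80, crit=95` would report WARNING.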

2.1 Describe the VPN Topology of your Network and what type of Redundancy / Availability + Security you perform between Branch + HQ Locations?

2.4 Describe how you configure a Hub-and-Spoke (H+S) VPN Topology?

Configuration takes place at the Hub / VPN Concentrator + at the Spokes, with essentially the same 3-part configuration necessary in both places, where I:

- Define route-based IPSec VPN Phase 1 + 2 configs to establish secure IPSec VPN connectivity.

- Define routing through the secure IPSec tunnels, with failover (FO) via Link Monitors + load balancing (LB) via PBR.

- Define security policies to allow traffic to flow between the connected networks.

2.4.1 Describe the Phase 1+2 configuration of your HUB FTGW 600E unit + how it interacts with your Spoke 60Es/90Ds (avoid like the plague - FortGu)?

2.4.2 Describe how you have configured Routing (with LB+FO) in your H+S Topology?

At the Hub I configure static routes from the local interfaces (LAN/VLAN/DMZ) to the VPN interface, which sends traffic via VPN to each community's remote VPN GW.

I configure Link Health Monitors to trigger fast FO.
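The failover logic behind Link Health Monitors can be modelled as a probe counter: a link is declared dead after a number of consecutive failed probes and restored after a number of consecutive successes, and traffic follows the highest-priority live link. The `failtime`/`recoverytime` names echo the FortiOS link-monitor settings, but this is a hypothetical model of the behaviour, not FortiOS code; link names and thresholds are made up.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Link:
    name: str
    priority: int           # lower value = preferred route
    failtime: int = 5       # consecutive failed probes before the link is marked dead
    recoverytime: int = 5   # consecutive good probes before it is restored
    alive: bool = True
    fails: int = 0
    successes: int = 0

    def record_probe(self, ok: bool) -> None:
        """Update the link state from one health-check probe result."""
        if ok:
            self.fails = 0
            self.successes += 1
            if not self.alive and self.successes >= self.recoverytime:
                self.alive = True
        else:
            self.successes = 0
            self.fails += 1
            if self.alive and self.fails >= self.failtime:
                self.alive = False


def active_link(links: List[Link]) -> Optional[Link]:
    """Route via the highest-priority link that is still alive (None if all are down)."""
    candidates = [link for link in links if link.alive]
    return min(candidates, key=lambda link: link.priority) if candidates else None
```

A low `failtime` gives faster failover at the cost of flapping on a briefly lossy link; requiring several good probes before recovery keeps traffic from bouncing back too early.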

3 Describe the Sec Pol / PBR on the Hub / VPN Concentrator to permit traffic to flow between the Corporate Private Networks and the Community Private Networks?


2.4.2 At the Spoke Describe the Phase 1+2 configuration of your Spoke 60Es/90Ds + How it interacts with your HUB FTGW 600E unit?

2.4.2 Describe how you have configured Routing (with LB+FO) at the Spokes in your H+S Topology?

I configure Link Health Monitors to trigger FO.

2.4.2 Describe the Sec Pol / PBR at the Spoke Remote VPN GWs to permit traffic to flow between the Spoke Private Networks and the Hub Private Networks?

2.3 Describe how you use FW Rules / Security Policies (Proxy Options) / PBR - by Service (NAT mode) on your Firewalls to secure + optimize traffic flow in + out of each network segment (VLAN/subnet) on your network?

2.3.2 Describe your use of DPI / full SSL/SSH Inspection in your Security Policies?

2.3.1 What are your 9 major Egress Policies?

2.3.2 What are your 7 major Ingress Policies?

2.3.2 Describe your use of VIPs + WAP/ADFS Proxy / F5 LB in securing inbound server traffic?

2.3.3 What are your 4 major Internal Traffic Policies?

2.2 Describe how you have segmented your network using VLANs + Subnetting?

2.2 Describe the Vlan tagging / Switching setup of your network?

2.2 What's the difference between a VLAN and a subnet?

2.2 Describe your use of VLANs and DMZ's in securing your business Servers + Data?

What type of dynamic routing protocols have you used?

I use OSPF dynamic routing at one of our locations where the community is in a forested area, with private chalets distributed about 1000 feet from the main community building, making running cables too expensive. As a workaround I set up a wireless network bridge configured with authenticated, single-area private OSPF routing to share routing info between all routers, so the chalets have highly available access to the internet.

I use a Ubiquiti LiteBeam AC (LBE-5AC-23), with 23 dBi of antenna gain (giving us a range of 50 miles or more), to create the wireless network bridge between several buildings at our Narrow Glen community.

We don't use a great deal of dynamic routing, as we have been able to get away with IPSec VPN static routing, Policy Based Routing, and ECMP (Equal Cost Multipath) routing with Link Health Monitors and a weighted Load Balancing algorithm, for high availability + failover of our active + passive routes, and to control the flow of traffic between our locations + VLANs.
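A weighted load balancing algorithm spreads new sessions across links in proportion to their weights. As an illustration, here is the "smooth weighted round-robin" scheme (the one nginx uses); it is a stand-in chosen for clarity, not a claim about how FortiOS distributes ECMP sessions internally, and the link names and weights are hypothetical.

```python
from typing import Dict, List


def smooth_weighted_round_robin(weights: Dict[str, int], n: int) -> List[str]:
    """Pick a link for each of n new sessions in proportion to its weight,
    interleaving picks instead of sending bursts to one link."""
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        for name, weight in weights.items():
            current[name] += weight                  # every link earns its weight
        chosen = max(current, key=current.get)       # highest running score wins
        current[chosen] -= total                     # winner pays back the total
        picks.append(chosen)
    return picks
```

With weights `{"isp1": 3, "isp2": 1}`, four sessions are assigned `isp1, isp1, isp2, isp1`, a 3:1 split; removing a failed link from `weights` is how a health monitor would take it out of rotation.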

2.5 How would you maintain 24/7 network uptime / HA / IT service availability? five 9's (99.999% uptime)?

Active redundancy: redundant items of the same kind, a method to detect failure, + automatic FO to bypass failed items, in a highly available, fault-tolerant (HA/FT) design to maintain uptime.

- Hot swapping of components, no need to power down.

- Temp sensors to throttle operating frequency on servers.

- Redundant Power Supplies on servers + UPS

- RAID arrays on all servers to provide data redundancy,

- Data Backups locally and to remote warm sites for DR.

- HSRP (Hot Standby Router Protocol) to fail over to a hot standby router. HSRP lets hosts and servers in the DC use multiple routers that present themselves as a single virtual router (shared virtual IP and MAC), maintaining connectivity even if the first-hop router fails.

We have designed our systems using a fault-tolerant design following Active redundancy principles using Load Balanced Clustered Virtual servers to ensure maximum uptime.

Active redundancy is used in complex systems to achieve high availability with no performance decline. Multiple items of the same kind are incorporated into a design that includes a method to detect failure and automatically reconfigure the system to bypass failed items using a voting scheme.

A fault-tolerant design enables a system to continue its intended operation, possibly at a reduced level, rather than failing completely, when some part of the system fails. FT systems are typically based on the concept of redundancy.
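The arithmetic behind "five 9's" and active redundancy is short enough to sketch. It assumes a 365-day year, independent component failures, and instant, perfect failover, which real systems only approximate; the availability figures are illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 (ignoring leap years)


def downtime_minutes_per_year(availability: float) -> float:
    """Maximum downtime per year allowed by an availability target."""
    return (1 - availability) * MINUTES_PER_YEAR


def parallel_availability(component: float, copies: int) -> float:
    """Availability of N redundant components in active redundancy:
    the system is down only if every copy is down at the same time."""
    return 1 - (1 - component) ** copies
```

99.999% allows only about 5.26 minutes of downtime a year (versus about 525.6 minutes at 99.9%), while two independent 99.9% components in active redundancy give roughly 99.9999% on paper, which is why redundancy plus automatic failover is the usual route to five 9's.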

HSRP (Hot Standby Router Protocol) to fail over internet connections should the main connection go down. HSRP is a first-hop redundancy protocol that allows host computers on a LAN to use multiple routers that act as a single virtual router, maintaining connectivity even if the first-hop router fails, because the other routers are on "hot standby", ready to go.

Hot-swappable components, so we don't have to power down.

Temperature sensors to throttle op frequency on servers.

RAID arrays on all our servers provide data redundancy, with data backups locally and to remote locations following the 3-2-1-1-0 rule.

Power:

- Redundant Power Supplies on servers.

- UPSs (Uninterruptible Power Supplies).

DRP: a redundant remote network configured to take over in the event of a natural disaster.

How did you handle the impact of COVID?

What Remote working solutions did you implement?

What type of secure remote access / VPN / RDP have you configured?

It didn't impact our IT team that much, as we handle everything remotely anyway and already had corporate users working remotely via our pre-authenticated, load-balanced Server 2016 RDS Farm. Users connect through a web browser to our WAP (Web Application Proxy) / ADFS Proxy before being redirected to our RDS Farm via our F5-LTM Load Balancer, where they can access their workspace and work remotely.

Out in the communities they scaled down to a skeleton crew and had strict cocooning regulations in place, while providing a meal delivery service and taking temperature readings of everyone attempting to enter the buildings.

2 Give an example of a technical problem which tested your problem-solving / technical skills and that you were happy to solve.

2.5 What difficult networking problems have you encountered and how did you resolve them?

2.5 Describe some networking / systems issues you have had to resolve?

Give an example of an unexpected IT support issue you discovered and how you handled it?


SRG: Getting PCs with no wired connectivity onto our corporate network, which meant getting connectivity through the legacy Wi-Fi network in the building. Initially I tried piggybacking our corporate IP range on the legacy Wi-Fi network by setting static IP and DNS settings on the computers in question (while setting up static IPs on the DHCP server). This gave connectivity for a period of time, but then the computers would lose their ability to communicate with the DC. This can happen fairly regularly when a network is set up with a DC that is not on the same VLAN as its clients: eventually a computer local to the VLAN's subnet takes over as the master browser for that subnet.

To overcome this, I set up a separate DHCP scope with DHCP reservations, and used reverse blocking rules on the legacy DHCP scope to prevent the corporate computers from pulling an IP from it. After that I had consistent connectivity.

SRG: Getting ADP timeclocks to connect back to headquarters as part of a takeover, before we had a chance to put our own network equipment in place. We placed one of our firewalls on the Vintage network next to the ADP timeclocks, set up an IPSec VPN back to our HUB Concentrator, and had their network admin put rules in place to allow the traffic.

2.5 SRG: Troubleshooting DHCP scope issues where PCs were intermittently losing connectivity. It appeared to be an IP conflict, as both devices showed the same IP in Dameware and DNS.

Both MAC addresses were showing on our switches, so the devices were online. I then checked the firewall and could see the host name and IP there, so it looked like traffic was getting out to the internet.

I set a static IP on one of the computers and it updated in DNS. I then plugged the other into the user's ShoreTel phone, which had pass-through enabled, and it pulled an IP from the VoIP DHCP scope.

It was at this point that I checked the DHCP scope for the corporate Wi-Fi and could see it had been used up by devices that shouldn't have been on there (namely people's phones): corporate users had used the corporate Wi-Fi password to get them on there.
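Scope exhaustion like this is easy to catch before users notice if utilization is tracked against the scope's size. A minimal sketch using Python's standard `ipaddress` module; the subnet, lease counts, exclusions, and the 90% warning level are hypothetical, and in practice the lease count would come from the DHCP server.

```python
import ipaddress


def scope_utilization(cidr: str, leased: int, excluded: int = 0) -> float:
    """Percentage of a DHCP scope's assignable addresses currently leased."""
    network = ipaddress.ip_network(cidr)
    usable = network.num_addresses - 2   # drop the network and broadcast addresses
    assignable = usable - excluded       # drop exclusions (gateway, printers, etc.)
    return 100.0 * leased / assignable


def check_scope(cidr: str, leased: int, excluded: int = 0, warn_at: float = 90.0) -> str:
    """Return a one-line status, flagging scopes close to running out."""
    used = scope_utilization(cidr, leased, excluded)
    status = f"{cidr}: {used:.1f}% used"
    return status + (" - NEAR EXHAUSTION" if used >= warn_at else "")
```

For example, 240 leases in a /24 with no exclusions reports `10.20.30.0/24: 94.5% used - NEAR EXHAUSTION`, a cue to look for unexpected devices such as personal phones.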

2.5 SRG Packet Shaping

I had a problem in one of the recently acquired communities where we had limited bandwidth on our WAN links while we were waiting for new broadband links to be installed. This meant that the corporate VLAN we put in was saturating all our bandwidth and leaving nothing for our guest Wi-Fi network, which led to complaints.

To overcome this, I enabled packet shaping on the internet connection to guarantee a dedicated amount of bandwidth to the Wi-Fi network, so guests could access their email.
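Shapers and policers are commonly built on a token bucket: tokens accumulate at the guaranteed rate up to a burst size, and traffic may only pass while tokens are available. This is a generic sketch of that mechanism with hypothetical rates, not the FortiGate shaper implementation; the clock is passed in explicitly to keep it deterministic.

```python
class TokenBucket:
    """Allow traffic at up to `rate` bytes/second, with bursts up to `capacity` bytes."""

    def __init__(self, rate: float, capacity: float):
        self.rate = rate            # refill rate in bytes per second
        self.capacity = capacity    # maximum burst size in bytes
        self.tokens = capacity      # start with a full bucket
        self.last = 0.0             # time of the previous check, in seconds

    def allow(self, size: int, now: float) -> bool:
        """Return True if a packet of `size` bytes may be sent at time `now`."""
        # Refill for the elapsed time, capped at the bucket size
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= size:
            self.tokens -= size
            return True
        return False  # over the limit: a shaper queues/delays, a policer drops
```

Giving the guest network its own bucket (a guaranteed rate) and capping the corporate VLAN with another is the idea behind reserving bandwidth so one class cannot starve the other.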

Describe a network crisis and how you handled it?

I have encountered 2 ransomware attacks in my time, where corporate files were encrypted and ransom payments demanded. The second attack, by TheWorldIsYours ransomware, was by far the worst and resulted in significant downtime: attackers managed to brute-force our publicly exposed Citrix server, gain administrative access to our network, and wipe out all our AD domain servers and computers! Luckily we follow the 3-2-1 backup rule and had snapshots of our data and server configurations replicating at the block level between our dual storage controllers, so no data was lost. We got all our servers back up and running using Thinware vBackup within 24 hours, but had to engage external contractors to help re-install all 1600 client PCs, which took about a week.

1 What recent network projects / improvements have you architected / managed the implementation of that you are proud of?

What Systems Integration / optimizations have you made + how did they improve company performance?

Give an example of where you have leveraged technology for process improvement?

2 How did you have to adapt your network for:

Vintage Community Takeovers / Net Integration:

Community Wi-Fi / Camera Systems:

- Axis / Vitek / Hik / Geovision / ExacqVision IP-based security cameras.

Facilities Access Control:

- DKS Doorking, DXS, Viking, Vingcard, Maxxess.

In-House TV:

- ZeeVee, Thor, AVerKey, Crestron

2 What Network Hardware have you configured and what features did you like / dislike about each?

2 What do you like / dislike about FortiGate's FortiOS?

2 Describe how you harden / upgrade a Firewall?

2 What sort of power control / backup power equipment have you used?

Are you using SDWAN yet?

How do you envision integrating SDWAN into your network?

And what benefits do you believe SDWAN will bring?

The move to SDWAN and a fully Software Defined Network (with increased opportunities for network automation) is on my plate for next year.

Describe the Layered Defense strategy / network segmentation strategy you used.

What is the difference between a threat, vuln, + a risk?

What's the difference between IDS v IPS? Give examples.

What are the steps to securing a Linux server?

Describe how a SIEM platform works.

How would you triage whether something is high/med/low severity?

At what point do you determine that a widespread malware outbreak is taking place versus a singular incident?

What sorts of anomalies would you look for to identify a compromised system?

What security measures have you implemented on your FWs?

What type of security cert administration (PKI) have you done?

What sort of Vuln Mgmt / scanning do you do? Name at least 3 diff Vuln scanners and patterns to identify them

How do you increase Security Awareness across the company?

How have you improved the sec of your organization?

I drove a company-wide security initiative to ensure the adoption + implementation of info sec BPs, with a view to securing assets, educating users, + reducing incidents in the short, medium, and long term, including MFA, SSO, SIEM, and EDR.

Exchange with Mimecast filtering for spam control.

Carbon Black / Bit9 whitelisting; Bitdefender antivirus + GravityZone.

I disabled use of SMB1, TLS 1.0 + 1.1 through GPO.

Syslog Servers to ingest logs from our servers.

I implemented a new secure password policy with password complexity rules + set up a password self-service website (ManageEngine's ADSelfService Plus product).

I implemented new account creation, account modification, and account termination procedures to bring us in line with SOX compliance.
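A complexity rule of this kind can be expressed as a small check. This is an approximation modelled loosely on AD's "Password must meet complexity requirements" setting (characters from at least 3 of 4 categories, no account name); the 12-character minimum is a hypothetical policy value, and the real enforcement happens in AD, not in a script like this.

```python
import string

MIN_LENGTH = 12  # hypothetical policy value


def meets_complexity(password: str, username: str) -> bool:
    """Approximate AD-style complexity: minimum length, no account name,
    and characters from at least 3 of the 4 categories."""
    if len(password) < MIN_LENGTH:
        return False
    if username and username.lower() in password.lower():
        return False
    categories = [
        any(c.isupper() for c in password),                # uppercase letters
        any(c.islower() for c in password),                # lowercase letters
        any(c.isdigit() for c in password),                # digits
        any(c in string.punctuation for c in password),    # symbols
    ]
    return sum(categories) >= 3
```

For example, `meets_complexity("Summer-Breeze-42", "jdoe")` passes, while a password containing the account name or using only uppercase letters and digits fails.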

7 What Security Products / Equip have you used + configured?

Fortinet NGFW v6.0 / Untangle NG UTM Firewall v12.1 / Dell Sonicwall TZ 215 UTM Firewall / pfSense SG-4860 Sec GW.

Win Server FW, Linux Iptables/ufw firewall, Kali Linux.

WhatsUp Gold / Nagios Core with MRTG integration for network monitoring.

7 What Security experience do you have?

I recently drove a company-wide sec initiative to ensure the adoption + implementation of info sec BPs with a view to

- securing assets,

- educating users, and

- reducing incidents in the short, med, + long term.

- MFA, SSO, SIEM, + EDR,

- 3-2-1-1-0 Backup Rule (3 copies, 2 different media, 1 offsite, 1 offline/air-gapped or immutable, 0 errors on restore verification)
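The 3-2-1-1-0 rule can be turned into a simple audit check over an inventory of copies. A minimal sketch; the `DataCopy` fields are a hypothetical inventory format, and the "0 errors" part is modelled as every backup copy having passed its last restore test.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataCopy:
    media: str                 # e.g. "disk", "tape", "cloud"
    offsite: bool = False
    offline: bool = False      # air-gapped or immutable
    verified: bool = False     # last restore test finished with zero errors
    production: bool = False   # the live data itself counts as one of the 3 copies


def meets_3_2_1_1_0(copies: List[DataCopy]) -> bool:
    """Check the copies of one dataset against the 3-2-1-1-0 rule."""
    backups = [c for c in copies if not c.production]
    return (
        len(copies) >= 3                            # 3 copies of the data
        and len({c.media for c in copies}) >= 2     # on 2 different media
        and any(c.offsite for c in copies)          # 1 copy offsite
        and any(c.offline for c in copies)          # 1 copy offline/immutable
        and all(c.verified for c in backups)        # 0 errors on restore tests
    )
```

A production disk copy, a verified local disk backup, and a verified offsite, immutable cloud copy pass; dropping the immutable flag or skipping a restore test makes the check fail.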

3.2 How is encryption used to secure your data at rest?

I use BitLocker Drive Encryption at the VM level, so every volume is encrypted (a key is required when mounting the volume) and stays encrypted, protecting the whole volume from offline attacks: even if a virtual disk is stolen, its data is still protected.

It protects at the file volume level so when the DB server is online, the volume is unlocked, though not decrypted. If a malicious party stole the whole drive, they still would not be able to attach + use it.

Detaching or Backing up a database to a different volume that is not protected by BitLocker causes any protection the file currently has to be lost.

For Servers in remote communities + Corporate laptops I enable Bitlocker FDE Full Disk Encryption so if a physical hard drive gets stolen the data stored on it is protected.

For our SQL Server instances I enable TDE (Transparent Data Encryption), where the DB files themselves are encrypted (as are backup files of the TDE-encrypted DBs).

Therefore, if either file is copied or stolen via a network share, the attacker still cannot read it.

With TDE you can encrypt the sensitive data in a DB and protect the encryption keys with a certificate.

TDE performs real-time I/O encryption and decryption of the data and log files to protect data at rest.

Backup files of DBs that have TDE enabled are also encrypted by using the DB encryption key. As a result, when you restore these backups, the cert protecting the DB encryption key must be available.

I use GP Restricted Groups to explicitly set and control the membership of local groups, replacing existing memberships with the ones defined in the GPO and thus limiting who has administrative access. I also implemented LAPS (Local Administrator Password Solution) to manage the local administrator password of domain-joined computers: it sets a unique password for every local administrator account and stores it in AD, limiting lateral movement if there's a breach.
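The core idea behind LAPS, a different random password for every machine, can be illustrated with Python's `secrets` module. This is only a sketch of the concept under stated assumptions (the computer names, alphabet, and 20-character length are hypothetical); LAPS itself handles generation, storage in AD, ACLs, and scheduled rotation, and this is not its code.

```python
import secrets
import string
from typing import Dict, Iterable

ALPHABET = string.ascii_letters + string.digits + "!#%+-=?@"


def new_local_admin_password(length: int = 20) -> str:
    """Generate a random password with a cryptographically secure RNG."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def rotate(computers: Iterable[str]) -> Dict[str, str]:
    """Give every computer its own password, so one leaked password
    cannot be reused to move laterally to other machines."""
    return {name: new_local_admin_password() for name in computers}
```

Because each machine's password is independent, a credential dumped from one PC is useless on the next, which is exactly the lateral-movement risk a shared local admin password creates.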

I have Advanced Audit Policy Configuration configured to log Success and Failure events.

3 How would you manage the configuration + security settings of computers on your domain?

3 How do you control all the Office 2013 / 2016 / 2019 settings on a network?

I use AD GPO in conjunction with Dell DA (Config Mgmt) + Bit9 process whitelisting to lock down PCs,

while I monitor + defend our workstations using

Bitdefender (Win + Mac) + GravityZone Control Center +

Carbon Black endpoint file-behavior monitoring + real-time threat detection.

I also do a significant amount of PowerShell scanning to monitor + detect suspicious activity across our AD, servers, and PCs.

Secure Win Laptops using TPM+BitLocker Encryption

I use VirusTotal for suspicious file + URL analysis during root cause analysis.

I also integrated the ADSelfService Plus password self-service portal, which integrates with our AD domain + Exchange email accounts so users can register + set security questions before being able to reset their password and / or unlock their accounts (network + email are linked together).

Introduced Config Mgmt + Sec Baselines + Golden Image to document, track, improve + approve network changes.

Introduced Meraki Systems Manager for Asset Management, which was very convenient to use through its browser based dashboard giving a detailed view of all pc and laptop assets.

Microsoft volume license activation through a KMS server.

AD Group Policy restrictions.

Disabled legacy protocols (SSLv2).

Password complexity requirements.

7 Give me an example of where you evaluated a risk and what control did you put in place to mitigate it?

I implemented new security controls + processes as part of our company-wide digital transformation + security awareness program, to simultaneously refine our line-of-business tasks, improve security, and reduce OpEx. For example:

Zero Trust (ZT) remote access to line-of-business apps using a TLS-based ADFS federated identity SSO solution.

I worked with AD as an LDAP repository, and wrote a number of PowerShell scripts to administer data within AD, such as automatically disabling user accounts after 30 days of inactivity.
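The selection logic of such an inactive-account script can be sketched in Python. AD's `lastLogonTimestamp` is a Windows FILETIME (100-nanosecond intervals since 1601-01-01 UTC), and it is only replicated periodically, so it can lag the real last logon by up to about 14 days by default. The account names are hypothetical, and treating never-used accounts as stale is an assumption (a real script should also check `whenCreated` so brand-new accounts are not disabled).

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List

# Windows FILETIME epoch: 100-nanosecond intervals since 1601-01-01 00:00 UTC
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_datetime(filetime: int) -> datetime:
    return FILETIME_EPOCH + timedelta(microseconds=filetime // 10)


def stale_accounts(accounts: Dict[str, int], now: datetime, max_age_days: int = 30) -> List[str]:
    """Return account names with no logon in the last max_age_days.

    `accounts` maps a (hypothetical) sAMAccountName to its lastLogonTimestamp;
    0 means the account has never logged on and is treated as stale here.
    """
    cutoff = now - timedelta(days=max_age_days)
    return sorted(
        name for name, ts in accounts.items()
        if ts == 0 or filetime_to_datetime(ts) < cutoff
    )
```

The actual disable step would then be done in PowerShell, for example with the ActiveDirectory module's `Disable-ADAccount` cmdlet.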

I've always approached IT with an eye on Security but it was in my role as a Systems Analyst at SDSU that I developed a deeper understanding of how a secure environment handling PII data should function.

I was part of the newly developed Campus Security Framework initiative, where I designed and implemented several new security controls such as:

McAfee ePO antivirus + Sunbelt CounterSpy anti-spyware for centralized scanning, management, + reporting of possible threats, compromises, + breaches.

I worked with the campus Info Sec Dept to develop + implement secure server + PC builds.

Maintained our Software Licensing Inventory.

Implemented WSUS auto Patch Mgmt for our AD domain

MBSA (Microsoft Baseline Security Analyzer).

SAST code analysis + pen testing of the Aidlink web portal.

Any suspected security breach had to be reported to the Info Sec Office, and we had to send them copies of the suspected machine's logs. We never had a security breach, but whenever McAfee raised a detection to be investigated (usually a false positive), we took the machine off the network + shut it down.

We physically had to keep track of licenses for SW.

We had to educate all our users about the importance of not emailing or storing SSNs on their computers.

Any documents with social sec info were collected daily and sent away to be shredded.

We always used secure FTP.

We twice passed compliance audits by outside auditors.

How do you handle AntiVirus alerts?

Check the AV policy, then the alert. If the alert is for a legitimate file, it can be whitelisted; if it is a malicious file, it can be quarantined/deleted.

The file's hash can be checked for reputation on various websites like virustotal.com, malwares.com, etc.

AV needs to be fine-tuned to reduce the number of false-positive alerts.
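Reputation lookups are done by hash rather than by uploading the file, so the first step is computing the file's SHA-256. A minimal sketch using the standard `hashlib` module; reading in chunks keeps memory use flat even for large samples.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 16) -> str:
    """Return the hex SHA-256 of a file, reading it in 64 KiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

The resulting hex string is what gets pasted into the search box of a reputation site; searching by hash also avoids sharing a potentially confidential file with a third party.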

What sort of attacks / Security incidents have you experienced? And how did you counter them?

What is your incident response plan / what incidence response methodology do you follow?

What is an incident and how do you manage it?

Any event which leads to compromise of the security of an organisation is an incident. The incident process goes like this:

- Identification of the Incident

- Logging it (Details)

- Investigation and root cause analysis (RCA)

- Escalation or keeping the senior management/parties informed

- Remediation steps

- Closure report.

Explain phishing and how it can be prevented.

List the steps to data loss prevention.

Describe what SQL Injection is?

This is a server-side attack that takes advantage of vulnerabilities in web applications (typically user input pasted directly into SQL statements) to send unauthorized DB queries.
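The difference between a vulnerable and a safe query is easiest to see side by side. A minimal sketch using Python's built-in `sqlite3` with a throwaway in-memory table; the table, names, and payload are hypothetical.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "admin"), ("bob", "staff")])
    return conn


def find_user_unsafe(conn, name):
    # VULNERABLE: user input is pasted into the SQL text itself
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn, name):
    # Parameterized query: the driver sends `name` as data, never as SQL
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()
```

With the input `' OR '1'='1`, the unsafe version runs `WHERE name = '' OR '1'='1'` and returns every row, while the parameterized version simply looks for a user with that literal name and returns nothing.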

What's XSS and why is it bad? How would you rank its severity?

What's the difference between XSS v XSRF?

XSS (Cross-Site Scripting) is a JavaScript injection vulnerability in web applications. The easiest way to explain it is a case where a user enters a script into client-side input fields and that input gets processed without being validated. This leads to untrusted data being saved and then executed on the client side.

Countermeasures for XSS include input validation, encoding output before it is rendered, and implementing a CSP (Content Security Policy).
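Output encoding is what stops stored input from running as script: special characters are converted to HTML entities so the browser displays them as text. A minimal sketch using Python's standard `html.escape`; the `render_comment` helper and the CSP value are hypothetical examples, not a specific application's code.

```python
import html

# Example CSP response header: only allow scripts served from our own origin
CSP_HEADER = ("Content-Security-Policy", "default-src 'self'; script-src 'self'")


def render_comment(comment: str) -> str:
    """Build an HTML fragment, encoding user input so it is shown as text."""
    return f'<p class="comment">{html.escape(comment, quote=True)}</p>'
```

`render_comment("<script>alert(1)</script>")` produces `<p class="comment">&lt;script&gt;alert(1)&lt;/script&gt;</p>`, which the browser shows literally instead of executing; the CSP adds a second layer in case some output is missed.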

CSRF/XSRF (Cross-Site Request Forgery) is a web application vulnerability in which the server does not check whether a request came from a trusted client (i.e. was intentionally sent by the logged-in user) or not; the request is just processed directly. This can be followed up with ways to detect it, examples, and countermeasures.

DDoS stands for distributed denial of service: a network/server/application is flooded with a large number of requests that it is not designed to handle, making it unavailable to legitimate requests. The requests come from many different, unrelated sources, hence it is a distributed denial of service attack. It can be mitigated by analysing and filtering the traffic in scrubbing centres: centralized data-cleansing stations where the traffic to a website is analysed and the malicious traffic is removed.
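The standard countermeasure is an anti-CSRF token: the server embeds a secret value in its own forms and rejects state-changing requests that don't echo it back. A forged cross-site request carries the victim's cookie but not the token, because the attacker's page cannot read it. A minimal sketch using the standard `hmac` and `secrets` modules; deriving the token from the session ID with a server-side key is one common design, and the key and session IDs here are hypothetical.

```python
import hashlib
import hmac
import secrets

SECRET_KEY = secrets.token_bytes(32)  # hypothetical server-side secret


def issue_token(session_id: str) -> str:
    """Derive a CSRF token bound to the user's session."""
    return hmac.new(SECRET_KEY, session_id.encode(), hashlib.sha256).hexdigest()


def is_valid_request(session_id: str, submitted_token: str) -> bool:
    """Accept a state-changing request only if its token matches the session."""
    expected = issue_token(session_id)
    return hmac.compare_digest(expected, submitted_token)  # constant-time compare
```

`hmac.compare_digest` is used instead of `==` so the comparison time does not leak how many leading characters of a guessed token were correct.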

Countermeasures:

- Implementing detection mechanisms, especially signal analysis techniques

- Deploying HA solutions and redundancy resources

- Disabling non-required services

- Traffic pattern analysis
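Traffic pattern analysis can start as simply as counting requests per source over a sliding time window. A minimal sketch with a hypothetical limit and documentation-range IPs; a real DDoS spreads across many sources, which is why per-source limits alone are not enough and upstream scrubbing is still needed.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flag sources that exceed `limit` requests within a sliding `window` (seconds)."""

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        self.hits = defaultdict(deque)  # source IP -> timestamps of recent requests

    def record(self, source_ip: str, now: float) -> bool:
        """Record one request; return True if the source is now over the limit."""
        timestamps = self.hits[source_ip]
        timestamps.append(now)
        # Forget requests that have slid out of the window
        while timestamps and timestamps[0] <= now - self.window:
            timestamps.popleft()
        return len(timestamps) > self.limit
```

With `limit=3, window=1.0`, a fourth request from the same IP within one second is flagged, while other sources are tracked independently.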

Explain how the TCP handshake works. How is it different from UDP?

TCP is connection-oriented, while UDP is connectionless: UDP simply sends datagrams, with no handshake, acknowledgements, or retransmissions.

TCP data exchange begins with a three-way handshake. First, the client sends a SYN packet to the server. The server then responds with a SYN-ACK packet if the target port is open. Finally, the client sends an ACK packet back to the server and the connection is established.

SYN: Starts the connection

ACK: Acknowledges the reception

RST: Resets a connection

FIN: Finishes the connection (the sender has no more data to send)

URG: Indicates urgent processing

PSH: Pushes buffered data to the receiving application immediately
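These flags are single bits in byte 13 of the TCP header (counting from 0), which is what packet analysers decode. A minimal sketch of that decoding; ECE and CWR (0x40 and 0x80) share the same byte but are left out here to match the list above.

```python
from typing import List

TCP_FLAGS = {
    0x01: "FIN",
    0x02: "SYN",
    0x04: "RST",
    0x08: "PSH",
    0x10: "ACK",
    0x20: "URG",
}


def decode_flags(flag_byte: int) -> List[str]:
    """Return the names of the TCP flags set in the header's flags byte."""
    return [name for bit, name in TCP_FLAGS.items() if flag_byte & bit]


# Flags bytes of the three-way handshake:
# client -> server, server -> client, client -> server
HANDSHAKE = [0x02, 0x12, 0x10]
```

Decoding `HANDSHAKE` gives `["SYN"]`, `["SYN", "ACK"]`, `["ACK"]`; a Wireshark display filter such as `tcp.flags == 0x012` matches the SYN-ACK step.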

Chain of custody?

For legal cases, the data/device (evidence) must keep its integrity, so any access needs to be documented: who, what, when, and why. A compromise in this process can cause legal issues for the parties involved.

CIA triad?

Confidentiality refers to protecting data by granting access to data (for example Personal identifiable information PII of clients) only to authorized persons and groups.

Integrity means data should be protected from unauthorized modification during every process. Simply put, it is ensuring the trustworthiness, consistency, and accuracy of data.

Availability refers to ensuring that the data is available to authorized users when it is needed.

7 What's more important, Security or Operations?

It's a balance! Sometimes ops takes priority + security might need to take a back seat, but only for an accepted risk; other times security is going to lead.

You have to make sure you right size + put the investment in the right place to make sure your users are protected.

7 What Compliance Standards do you have experience of?

At SRG we had to deal with Sarbanes-Oxley, as one of our partner organizations was a publicly traded company.

Short-Sighted Compliance:

Many organizations feel they can get away with doing the bare minimum by meeting compliance standards. What compliance really does is give a false sense of security without ensuring protection. It is checking a box rather than solving a problem.

3 What sort of Config Mgmt / Asset Inventory do you do?

For Asset Management and tracking we used Cisco’s Meraki Systems Manager.

7 Introduced Meraki Systems Manager for Asset Management, which was very convenient to use through its browser based dashboard giving a detailed view of all pc and laptop assets giving its connectivity status, SSID, Name, Serial Number, Model, Warranty, BIOS, installed software and performance.

What are the various ways by which the employees are made aware about AUP / info sec policies and procedures?

Employees should undergo mandatory information security training after joining the organisation. This should also be repeated yearly, either as a classroom session followed by a quiz or as online training.

Send out notifications on a regular basis, in the form of slides, one-pagers, etc., to ensure that employees are kept aware.

What are your views on usage of social media in office?

Social media is acceptable, as long as content filtering is enabled and uploading features are restricted. Read-only mode is acceptable as long as it does not interfere with work.

Data on social platforms may not be secure, but users can take steps on their end to stay safe:

- Connect with trusted people

- Do not post/upload confidential information

- Never use the same username password for all accounts

What are your thoughts on BYOD?

If it's going to be used for work purposes, then it needs to be enrolled as a managed device.

2.5/7 What Net Mon experience do you have?

2.5 We don't use Security Fabric, as we use VDOMs for FW virtualization and Security Fabric doesn't work with VDOMs!

7 WhatsUp Gold network infrastructure monitoring:

Monitoring + alerting services for SAN, servers, switches, and apps.

Customizable dashboard so you can see whatsup!

Network maps / (live net topology shows outages location).

virtual overlay shows virtual network connectivity, as it differs from the physical connectivity of your physical network.

Heat maps identify choke points or interfaces with restricted throughput by turning amber or red.

Sends realtime alerts via email, text before users report.

Captures the NetFlow data (traffic patterns) from our routers.

It also monitors Exchange, SQL Server, + SharePoint apps.

7 What sort of log Management do you do?

7 What sort of Security, Logging, Log Analysis Solution have you used?

2.5 Describe how you do auditing and Logging in your current network?

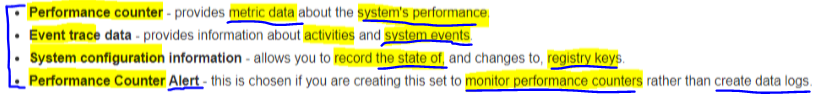

Data Collector sets:

Performance counters can be used to monitor the amount of replication that has taken place.

Describe how you do auditing and Logging?

We configure auditing and logging to send our encrypted logs to FortiAnalyzer as our SIEM for logging and event analysis, as logging on the FortiGates themselves is somewhat limited.

FortiAnalyzer gives us a single pane of glass to view reports and system event log messages in one place, while expediting our daily auditing schedule (inspecting logs for signs of intrusion and probing).

Why is DNS monitoring important?

Name 4 types of DNS records and what they signify.

What information would you include in a SOC report?

Do you have any experience with SIEM / SOAR Solutions?

How do you secure your publicly available Web Services? (Yardi, Exchange OWA / ECP?)

How do you secure your AWS Cloud Presence (CASB/SASE)?

What sort of Identity Mgmt (ZTNA) do you do?

2.5 What sort of packet and protocol analysis have you done and why?

2.5 What Wireshark experience / Packet sniffing do you have?

2.5 Describe the difference between a Network SPAN and a Network TAP.

2.5 What network test equipment have you used to test / put load on switches, routers, and wireless bridges?

2.5 How have you configured your home network / lab?

7 What sort of Server Monitoring do you do?

What are the steps to securing a Linux server?

Use Logwatch to monitor logs on our file servers.

Get daily emails from all our backup jobs.

Get daily security reports from our remote servers.

Get weekly emails from our untangle router.

Get security alerts / log extracts from our Sonicwall VPN.

Use DenyHosts on our web server to block suspicious IPs.

Symantec Cloud Alerts when there are PC issues.

Use VMs to decrease the severity of OS software faults.
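DenyHosts works by scanning the SSH authentication log for repeated failed logins and adding offending hosts to `/etc/hosts.deny`. The detection half of that idea can be sketched as below; the log lines follow the usual OpenSSH "Failed password" format, while the threshold and IPs are hypothetical, and this sketch only reports offenders rather than blocking them.

```python
import re
from collections import Counter
from typing import Iterable, List

# Matches OpenSSH log lines such as:
# "Failed password for invalid user admin from 203.0.113.9 port 52144 ssh2"
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?\S+ from (\S+) port \d+")


def hosts_to_block(log_lines: Iterable[str], threshold: int = 5) -> List[str]:
    """Return source IPs with at least `threshold` failed SSH logins."""
    counts = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(1)] += 1
    return sorted(ip for ip, n in counts.items() if n >= threshold)
```

Successful logins ("Accepted password ...") are ignored, so only repeated failures from the same source push it over the threshold.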

DMZ Monitoring / dedicated IDS

To securely monitor activity in our DMZ I use a separate ZoneRanger monitoring appliance from Tavve inside the DMZ to monitor all servers, devices and applications with access from the internet,

it creates a secure conduit through the firewall to proxy encrypted SNMP data back to our centralized network management / monitoring station, for logging and has the ability to send a notification via e-mail, pager or other immediate alerting method to administrators and incident response teams.

I do not open firewall ports to let our existing network management and monitoring software communicate directly with the DMZ devices, as that opens up a new attack vector from the DMZ: the ICMP + SNMP protocols used to poll devices and keep track of availability are not secure and can be exploited.

Honeypot

I also place a honeypot (Thinkst Canary / DShield Honeypot) in the DMZ, configured to look like a production server that holds information attractive to attackers.

The idea is to divert attention from your "real" servers, to track intrusion patterns, and even trace intrusion attempts back to the source and learn the identity of the attackers.

Binary Canary

Website monitoring, Server monitoring, Email monitoring, FTP Monitoring, DNS Monitoring, SMTP Monitor, PING monitor, Uptime Monitoring, router monitoring

3 How much Windows Server / Active Directory / Group Policy Experience do you have?

I've run AD domain controllers and Windows file servers since 2006.

3.3 Explain your use of ADMT (Active Directory Migration Tool)?

3.1 Do you employ any kind of SSO Single Sign-on solution on your domain?

3.1 What is Active Directory + How is your AD Infrastructure backed up?

It is Microsoft's directory service + authentication service for the centralized management of domain user accounts, groups, and computers (objects) and their access rights.

To backup AD I use scheduled tasks running on our dedicated backup server to run Powershell scripts using WBAdmin cli of Windows Server Backup to reach out to our virtualized DFS Hub File servers + primary and secondary DCs in our HQ Datacenter and pull full data volume backups + system state backups on a nightly basis.

In addition to our centralized backups in the datacenter, I also schedule distributed backups of all field domain controllers to local, secure disk storage in each community office, where WBAdmin backs up to a dedicated virtual disk that it takes over and hides from view elsewhere on the system.

This dedicated invisible virtual disk contains only the most recently backed up copy of the system state of the DC, so should the DC operating system fail, this copy can be used to quickly restore the machine.

The only way to dedicate a disk to backups is through a scheduled backup; you cannot do it with a manual one-time backup.

The dedicated disk is completely overwritten the first time it is written to, and Windows Server Backup prevents the disk from being visible in Windows Explorer, as it is entirely dedicated to backups.
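The scheduled, dedicated-disk backup described above could be registered with `wbadmin enable backup`. This is a minimal sketch that only builds and validates the command line. The disk identifier comes from `wbadmin get disks`; the identifier, schedule times and helper name used here are hypothetical.

```python
import re

# Disk identifiers look like {xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx} in `wbadmin get disks` output.
DISK_ID = re.compile(r"\{[0-9A-Fa-f]{8}(?:-[0-9A-Fa-f]{4}){3}-[0-9A-Fa-f]{12}\}")
HHMM = re.compile(r"(?:[01]\d|2[0-3]):[0-5]\d")


def build_dedicated_disk_schedule(disk_id: str, times: list) -> list:
    """Return the argv that registers a scheduled system-state backup to a dedicated disk."""
    if not DISK_ID.fullmatch(disk_id):
        raise ValueError(f"not a wbadmin disk identifier: {disk_id!r}")
    bad = [t for t in times if not HHMM.fullmatch(t)]
    if not times or bad:
        raise ValueError(f"schedule times must be HH:MM, got {times!r}")
    return [
        "wbadmin", "enable", "backup",
        f"-addtarget:{disk_id}",         # the whole disk is reformatted and dedicated to backups
        f"-schedule:{','.join(times)}",  # one or more daily run times
        "-systemState",
        "-quiet",
    ]
```

Passing a disk identifier rather than a drive letter is what makes this a dedicated-disk target, which is why the helper rejects anything else.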

To keep this method secure, configure the backup share permissions so that only Domain Admins can access this shared drive.

Because AD backups are very disk-intensive operations, they may need to wait until weekends or other less busy periods.

For DC backups I use dedicated backup-agent service accounts (with service admin credentials) that are used only to back up domain controllers, keeping them separate from the accounts used to back up application servers. This separation matters because the service account that backs up domain controllers must be a highly privileged service administrator that is part of the Backup Operators group.

Members of this group are considered service admins, because the group’s members have the privilege to restore files, including the system files, on domain controllers.

Individuals who are responsible for backing up applications on a member server should be made members of the local Backup Operators group on that server, as opposed to the Backup Operators group in AD. If the same backup-agent service account is used for backups on both DCs and other application servers, then compromising one of those application servers could give an attacker access to this highly privileged account.
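The separation rule above is easy to audit from an inventory of which account each backup job runs as. A minimal sketch, assuming a hypothetical inventory (for example exported from the backup software) that maps each host to whether it is a DC and its backup account; host and account names are made up.

```python
from collections import defaultdict
from typing import Dict, Set, Tuple


def shared_dc_backup_accounts(hosts: Dict[str, Tuple[bool, str]]) -> Set[str]:
    """Return backup accounts used on both DCs and non-DC servers.

    hosts maps hostname -> (is_domain_controller, backup_account).
    Account names are compared case-insensitively, as Windows does.
    """
    kinds_by_account = defaultdict(set)
    for is_dc, account in hosts.values():
        kinds_by_account[account.lower()].add(is_dc)
    # An account seen on both kinds of host violates the separation rule.
    return {acct for acct, kinds in kinds_by_account.items() if kinds == {True, False}}
```

Any account this returns should be split into a DC-only account and a separate account for the application servers.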

System state backups are fast because our writable DCs, which hold the GC, DNS, and FSMO (flexible single master operation) roles (not WDS, per Trimarc), are virtualized in our HQ datacenter, and the backups run between VLANs over our 10 Gbit/s Ethernet. Running them between VLANs logically air-gaps the backups, which matters because they contain sensitive information such as the KRBTGT account password.

That password is set when the account is created and practically never changes.

The krbtgt account is the Key Distribution Center (KDC) service account for the domain. The account is disabled, but its password hash is used to encrypt and sign Kerberos ticket-granting tickets (TGTs).

If an attacker compromised one of our AD backups and extracted the krbtgt account's password hash, they could create golden tickets: forged Kerberos tickets that carry authentication material for any account in AD, at any privilege level. Using these forged tickets (in a pass-the-ticket attack), they could subvert Kerberos authentication, gain unauthorized access, and ultimately take over the domain.

I archive our backups for a maximum of 180 days, because a backup becomes useless for restoration once it is older than the AD tombstone lifetime, which defaults to 180 days (or longer, if it has been raised).

If AD Recycle Bin is enabled, the backup lifetime is equal to the deletedObjectLifetime value or the tombstoneLifetime value, whichever is less.
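The retention rule above can be written down as a small helper that a pruning script could call. This is a minimal sketch, assuming the script already knows the forest's `tombstoneLifetime` and, if the Recycle Bin is on, its `msDS-deletedObjectLifetime` value (both in days); the function names are hypothetical. It conservatively treats a backup that has exactly reached the limit as expired.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple


def backup_useful_lifetime(tombstone_days: int,
                           deleted_object_days: Optional[int] = None,
                           recycle_bin_enabled: bool = False) -> int:
    """Days a DC backup stays restorable under the rule described above."""
    if recycle_bin_enabled and deleted_object_days is not None:
        return min(tombstone_days, deleted_object_days)
    # Recycle Bin off, or deletedObjectLifetime unset: the tombstone lifetime governs.
    return tombstone_days


def split_backups(backups: List[date], today: date,
                  lifetime_days: int) -> Tuple[List[date], List[date]]:
    """Split backup dates into (still usable, expired)."""
    cutoff = today - timedelta(days=lifetime_days)
    keep = sorted(b for b in backups if b > cutoff)
    expired = sorted(b for b in backups if b <= cutoff)
    return keep, expired
```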

I run System State Backup for our AD Infra in order to

- back up the registry,

- back up our boot files,

- back up our system files, and

- back up application-specific data such as AD

AD is stored entirely in the system state, so when you back up the system state you are also backing up AD.

If you have AD Domain Services, it backs up both ntds.dit (the AD database) and the SYSVOL directory.

If you have AD Certificate Services installed on your Domain Controller / Certificate Authority, it backs up the CA database.

The thing you really need to remember when you restore AD is that you must first reboot the DC into DSRM (Directory Services Restore Mode). From there you can restore the system state and, if needed, perform an authoritative restore of the database.

If you have the Cluster Database on a cluster node, it backs up the cluster database. To perform a non-authoritative restore of that node, simply run a system state recovery on it; once the node restarts, it receives its database from another node in the cluster.

If you have IIS on a web server, it backs up the IIS metabase (C:\Windows\System32\inetsrv) plus any websites you have (C:\inetpub\wwwroot).

If you have Hyper-V on a host machine, it backs up the host component: all host-level settings plus all VM configuration settings.

In addition to the backups themselves I also:

archive the Admin account and DSRM password history in a safe place for as long as the backups are valid, that is, within the tombstone lifetime period or within the deleted object lifetime period if AD Recycle Bin is enabled.

I also synchronize the DSRM (Directory Services Restore Mode) password with a domain user account (in the Administrators, Domain Admins, or Enterprise Admins groups) to make it easier to remember. This synchronization must be done in advance, as preparation for a forest recovery.
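On Windows Server 2008 (with the relevant update) and later, the sync is done with `ntdsutil "set dsrm password" "sync from domain account <name>" q q`. A minimal sketch that builds that argument list, assuming a hypothetical account name `DSRMSync`. The sync copies the password once, so it has to be re-run (for example from a scheduled task) whenever the domain account's password changes.

```python
from typing import List


def dsrm_sync_command(domain_account: str) -> List[str]:
    """Build the ntdsutil argv that copies a domain account's password to DSRM."""
    # Reject names that would break the quoted ntdsutil sub-command.
    if not domain_account or any(ch in domain_account for ch in '"\r\n'):
        raise ValueError(f"unexpected account name: {domain_account!r}")
    return [
        "ntdsutil",
        "set dsrm password",
        f"sync from domain account {domain_account}",
        "q",  # leave the "reset DSRM administrator password" menu
        "q",  # leave ntdsutil
    ]
```

Passed as a list (for example to `subprocess.run` on the DC), each multi-word sub-command is quoted for ntdsutil automatically.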

The Administrator account is a member of the built-in Administrators group by default, as are the Domain Admins and Enterprise Admins groups. This group has full control of all DCs in the domain.

I also generate and keep detailed daily reports on the health of our AD DS infrastructure, using a scheduled task and my AD Health Check PowerShell script to email me a report on the status of our AD DS components, such as NTDS, Netlogon, and DNS.

That way, if there is a forest-wide failure, the approximate time of failure can be identified, and from it the backup that holds the last safe state of the forest.
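The reasoning above (use the daily reports to bound when the failure happened, then pick a backup taken before that window) can be sketched as follows. This is a minimal illustration, assuming each daily report reduces to a timestamp plus an "all checks passed" flag; a passing health check does not prove a backup is free of corruption, so the result is a starting point for recovery, not a guarantee.

```python
from datetime import datetime
from typing import List, Optional, Tuple


def last_safe_backup(health: List[Tuple[datetime, bool]],
                     backups: List[datetime]) -> Optional[datetime]:
    """Pick the newest backup taken no later than the last healthy report before the first failure."""
    failures = [t for t, ok in health if not ok]
    if not failures:
        return max(backups, default=None)
    first_failure = min(failures)
    # The failure happened between the last good report and the first bad one.
    last_good = max((t for t, ok in health if ok and t < first_failure), default=None)
    if last_good is None:
        return None  # no healthy report before the failure: cannot vouch for any backup
    return max((b for b in backups if b <= last_good), default=None)
```

Backups taken inside the window between the last good report and the first failed one are skipped, because they may already contain the fault.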

The script checks the following AD DS components:

- Pings all the DCs in the forest

- Verifies that the Netlogon service is running

- Verifies that the NTDS service is running

- Verifies that the DNS service is running

- Runs the DCdiag Netlogons test to ensure the appropriate logon privileges allow replication to proceed

- Runs the DCdiag Replications test to check for timely replication between directory servers

- Runs the DCdiag Services test to see if appropriate supporting services are running

- Runs the DCdiag Advertising test to check whether each DSA is advertising itself, and whether it is advertising itself as having the capabilities of a DSA

- Runs the DCdiag FSMOCheck test on the DCs that hold the FSMO roles and the enterprise tests on the domain itself
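The DCdiag part of the checks listed above can be sketched in Python: build one `dcdiag /s:<DC> /test:<name>` call per test and parse the summary lines. This is a minimal sketch, not my actual PowerShell script. It covers only a subset of the checks (no ping or service-state checks), assumes English-language dcdiag output, and the sample output in the test is illustrative.

```python
import re
from typing import Dict, List, Sequence, Tuple

# A subset of the checks listed above; the names are dcdiag's own test names.
DC_TESTS = ("NetLogons", "Replications", "Services", "Advertising", "FsmoCheck")

# English summary lines look like: "......................... DC01 passed test Advertising"
RESULT_LINE = re.compile(r"\.{3,}\s+(\S+)\s+(passed|failed)\s+test\s+(\S+)")


def dcdiag_commands(dc: str, tests: Sequence[str] = DC_TESTS) -> List[List[str]]:
    """One dcdiag invocation per test, so each result can be reported separately."""
    return [["dcdiag", f"/s:{dc}", f"/test:{test}"] for test in tests]


def parse_dcdiag(output: str) -> Dict[Tuple[str, str], bool]:
    """Map (target, test) -> True if passed, False if failed."""
    results = {}
    for line in output.splitlines():
        match = RESULT_LINE.search(line)
        if match:
            target, verdict, test = match.groups()
            results[(target, test)] = verdict == "passed"
    return results


def failed_checks(results: Dict[Tuple[str, str], bool]) -> List[str]:
    """Human-readable list of failures for the emailed report."""
    return sorted(f"{target}: {test}" for (target, test), ok in results.items() if not ok)
```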

3.1 What measures do you take to secure AD?

To counter a possibly compromised krbtgt password I:

Change krbtgt password 2x every year (DoD STIG requirement)

+ after any AD admin leaves or

To contain the impact of a compromise of a previously generated golden ticket, reset the built-in KRBTGT account password twice, which will invalidate any existing golden tickets that have been created with the KRBTGT hash and other Kerberos tickets derived from it.
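Why twice? A DC keeps both the current and the previous krbtgt key and will still accept tickets protected by the previous one, so a single reset leaves a stolen key usable. The toy model below illustrates only that key-history behavior. It uses HMAC in place of real Kerberos encryption, and all names are hypothetical. In practice the usual guidance is to let the first reset replicate and wait at least the maximum ticket lifetime (10 hours by default) before the second reset, so legitimate tickets are not broken.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

Ticket = Tuple[str, bytes]


def forge_ticket(principal: str, key: bytes) -> Ticket:
    """Anyone holding a krbtgt key can mint a 'ticket' for any principal (a golden ticket)."""
    return principal, hmac.new(key, principal.encode(), hashlib.sha256).digest()


class ToyKdc:
    """Toy KDC that, like a real DC, keeps the current and the previous krbtgt key."""

    def __init__(self) -> None:
        self.current: bytes = secrets.token_bytes(32)
        self.previous: Optional[bytes] = None

    def reset_krbtgt(self) -> None:
        # The old current key becomes the previous key; anything older is forgotten.
        self.previous, self.current = self.current, secrets.token_bytes(32)

    def accepts(self, ticket: Ticket) -> bool:
        principal, tag = ticket
        for key in (self.current, self.previous):
            if key is not None and hmac.compare_digest(tag, forge_ticket(principal, key)[1]):
                return True
        return False
```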

Enable AES Kerberos encryption (or another stronger encryption algorithm), rather than RC4, where possible.
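Which encryption types an account allows is recorded in its `msDS-SupportedEncryptionTypes` attribute as bit flags. This is a minimal decoding sketch so RC4 can be spotted when auditing service accounts. It masks to the five cipher bits only. An unset or zero value falls back to domain defaults, which this sketch does not model, and the helper names are hypothetical.

```python
from enum import IntFlag
from typing import List


class EncType(IntFlag):
    """Cipher bits of msDS-SupportedEncryptionTypes."""
    DES_CBC_CRC = 0x1
    DES_CBC_MD5 = 0x2
    RC4_HMAC = 0x4
    AES128_CTS_HMAC_SHA1_96 = 0x8
    AES256_CTS_HMAC_SHA1_96 = 0x10


AES_ONLY = EncType.AES128_CTS_HMAC_SHA1_96 | EncType.AES256_CTS_HMAC_SHA1_96  # 0x18


def describe(value: int) -> List[str]:
    """Names of the ciphers enabled in an attribute value (higher, non-cipher bits ignored)."""
    flags = EncType(value & 0x1F)
    return [flag.name for flag in EncType if flag in flags]


def allows_rc4(value: int) -> bool:
    return bool(value & EncType.RC4_HMAC)
```

An account set to 0x18 allows only the two AES types; 0x1C would still permit RC4.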

Ensure strong password length (ideally 25+ characters) and complexity for service accounts and that these passwords periodically expire.

Also consider using Group Managed Service Accounts (gMSAs) or a third-party product such as a password vault.

Limit domain admin account perms to domain controllers and limited servers. Delegate other admin functions to separate accounts.

Limit service accounts to the minimal required privileges, including keeping them out of privileged groups such as Domain Admins wherever possible.

3.3 Describe the 5 types of Win Serv backup using WBAdmin?

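- Full server recovery (wbadmin start sysrecovery): BMR + system state + all volumes + all applications.

- BMR (bare-metal recovery): system components plus the system drive and volumes. Restored from the Windows Recovery Environment, so no OS is needed.

- System state (wbadmin start systemstaterecovery, 2012): registry, boot files, and system files, plus, depending on the server's roles:

  - AD on a DC (SYSVOL, ntds.dit), restored from DSRM (Directory Services Restore Mode), e.g. for an authoritative restore

  - IIS metabase + websites

  - Hyper-V host + VMs

  - Cluster database on a cluster host; Certificate Services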


- Azure Backup: supports domain controllers + BMR, NTFS volumes only, and cannot copy VHDXs to the cloud (2012).

- MARS (Microsoft Azure Recovery Services) agent (2012)

3.2 What type of File Server setup do you have? And how do you have its storage configured?

3.2 What MS DFS experience do you have?

3.2 How are Files / data replicated and backed up?

3.2 How do you monitor your DFS replication + your backup infrastructure?

3.1 What type of AD Security Model (Shares + NTFS Perms see below) do you use and why?

3.1 How do you control Share Permissions v NTFS / Folder permissions (AD groups / Inheritance (sub-folder perms) / Effective Permissions)?

3.2 What sort of Archiving / Backup / Recovery of User Data / Data Recovery do you perform?

3.2 How can files be recovered from the ConflictAndDeleted or PreExisting folders?

3.2 What’s the difference between standalone and fault-tolerant DFS installations?

3.2 Where exactly do fault-tolerant DFS shares store information in Active Directory?

3.2 What problems have you encountered with DFS?

3.3 How do you handle Win Updates / Patch Mgmt?

SCCM's integration with WSUS for scheduled patching (once a month) and reporting.

2.5 What type of print solutions have you administered?

3.2 What type of File Server setup do you have? And how do you have its storage configured?

In each of our communities we run dual virtualized Windows Server 2016 file servers to provide locally redundant file services, and we use fault-tolerant domain-based DFS Namespaces and DFS Replication for high availability (HA) and business continuity (BC).

Domain-based DFS stores its namespace information in AD, and AD replicates that information to all the DFS-subscribing DCs, which makes the namespace highly available without having to use WSFC (Windows Server Failover Clustering).

To make a domain-based namespace fault tolerant, you need at least 2 namespace servers + 2 DCs.

We have dual DCs in every site, with DFSN, DFSR, and AD Sites and Services (with local subnet mapping to localize resource access) all configured appropriately and running on our DCs. This is beneficial because the DCs already run other services such as DHCP and DNS, and the AD site configuration improves our namespace site awareness and the performance of domain queries.

Having dual DCs / DFS namespace servers in every site provides DFS client referral resolution within each community, as the AD site configuration is used to determine the nearest namespace server from which clients get their DFS referrals.

DFS allows us to organize many geographically distributed SMB file shares into logical namespace folders (DFS Roots) in a distributed file system, so users can access files using a single path to files located on multiple servers without needing to know their physical location.

DFS provides location transparency by automatically redirecting users to the nearest copy of the data using Windows AD Sites + Services least cost calculations.
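The "least cost" ordering above can be sketched as: compute the cheapest site-link path from the client's site to every other site (Dijkstra), then list targets in the client's own site first and the rest by ascending cost. This is a simplified model, not the DFS implementation. Real DFS randomizes the order of equal-cost targets, whereas this sketch breaks ties by server name so the output is deterministic. Site names, server names, and link costs are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple

Links = Dict[Tuple[str, str], int]  # (site_a, site_b) -> site-link cost


def site_costs(client_site: str, links: Links) -> Dict[str, int]:
    """Least total site-link cost from the client's site to every reachable site."""
    graph: Dict[str, List[Tuple[str, int]]] = {}
    for (a, b), cost in links.items():
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))
    dist = {client_site: 0}
    heap = [(0, client_site)]
    while heap:
        d, site = heapq.heappop(heap)
        if d > dist.get(site, float("inf")):
            continue  # stale heap entry
        for nxt, cost in graph.get(site, []):
            if d + cost < dist.get(nxt, float("inf")):
                dist[nxt] = d + cost
                heapq.heappush(heap, (d + cost, nxt))
    return dist


def order_referrals(client_site: str, targets: List[Tuple[str, str]], links: Links) -> List[str]:
    """targets: (server, site) pairs. Same-site servers come first (cost 0)."""
    dist = site_costs(client_site, links)
    ranked = sorted(targets, key=lambda t: (dist.get(t[1], float("inf")), t[0]))
    return [server for server, _ in ranked]
```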

I use FSRM (File Server Resource Manager) to manage user file quotas, file permissions, and screening policies, and also to monitor volumes that are involved in DFS Replication.

Right now we give corp staff a 10GB quota.

Much of what is stored is Office files (Word, Excel, PowerPoint), along with larger files from Adobe Creative Suite and Autodesk products. To use NTFS disk quotas (as opposed to FSRM folder quotas), you have to enable quotas on the volume in Windows.
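To make the 10 GB quota concrete, the sketch below models the two FSRM quota behaviors: a hard quota refuses writes past the limit, while a soft quota only reports. The notification thresholds (85/95/100 %) are hypothetical example values, not our configured ones, and binary gigabytes are assumed.

```python
from typing import List, Tuple

GB = 1024 ** 3  # assumption: binary gigabytes
CORP_QUOTA = 10 * GB


def crossed_thresholds(used_bytes: int, limit_bytes: int = CORP_QUOTA,
                       thresholds: Tuple[int, ...] = (85, 95, 100)) -> List[int]:
    """Notification thresholds (percent of the limit) that current usage has reached."""
    percent = used_bytes * 100 / limit_bytes
    return [t for t in thresholds if percent >= t]


def write_allowed(used_bytes: int, write_bytes: int,
                  limit_bytes: int = CORP_QUOTA, hard: bool = True) -> bool:
    """A hard quota refuses a write that would exceed the limit; a soft quota never blocks."""
    return not hard or used_bytes + write_bytes <= limit_bytes
```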

DFS replication

DFS Replication provides data redundancy by replicating community files back to our head-office datacenter in a hub-and-spoke (H+S) topology, with dual hub servers doing data collection. From there we take block-level backups using our SC8000 SAN.

All spokes (branch servers) replicate with our dual hub servers and vice versa, but spokes cannot replicate with each other, and active file writes happen only at the communities.

If one Hub Server goes down, the other takes over and replication continues until the Hub Server comes back online, minimizing the impact of any outages.

We also use our DFS Replication hub-and-spoke topology to publish data out to the communities for software installs and client PC patching.
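DFS Replication connections are one-way (sending member to receiving member), so the topology above amounts to two connections between every spoke and each hub and none between spokes. A minimal sketch that generates that connection set and shows a spoke keeping a partner when one hub is down. Member names are hypothetical, and whether the two hubs also replicate with each other is deliberately left out.

```python
from typing import Iterable, Set, Tuple

Connection = Tuple[str, str]  # (sending member, receiving member)


def hub_spoke_connections(hubs: Iterable[str], spokes: Iterable[str]) -> Set[Connection]:
    """Spoke <-> hub connections in both directions; never spoke <-> spoke."""
    hubs = list(hubs)
    conns: Set[Connection] = set()
    for spoke in spokes:
        for hub in hubs:
            conns.add((spoke, hub))  # community changes flow up to the datacenter
            conns.add((hub, spoke))  # published data (installers, patches) flows back out
    return conns


def live_partners(member: str, conns: Set[Connection], down: Set[str]) -> Set[str]:
    """Members this one can still send to while the members in `down` are offline."""
    return {recv for send, recv in conns if send == member and recv not in down}
```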

To prevent branch clients from browsing to other branch servers, I have created one namespace for each branch server / hub server pair.

I have created one replication group (set of members / servers) + one replicated folder per community, and put all other folders into the replicated community folder because we have a fast network.

Creating a single replicated folder with many subfolders is a way to throttle the amount of data replicated concurrently, which minimizes disk I/O. Creating multiple replicated folders instead would increase the number of concurrent downloads and the throughput, but can cause heavy disk I/O for staging file creation, staging folder cleanup, and so forth. Keeping everything in one replication group also simplifies deployment, because the group's topology, schedule, and bandwidth throttling are applied to each replicated folder in it.

The DFS replication group schedule is set to replicate every 15 minutes, Monday to Friday, 7 days a week.

In the datacenter

we have 2 virtualized Windows Server 2016 servers running DFS Namespaces in a VMware HA cluster, one on each of 2 VMware ESXi 6.0 U1a hosts, connected to our Dell SC8000 array for all of our storage needs.

The advantage of this is that when we need to upgrade a server or apply a patch or compensate for a crash, we can do them all without requiring downtime as we have redundancy at the application level.