Methods for dealing with sparse and incomplete environmental datasets

By douglowe

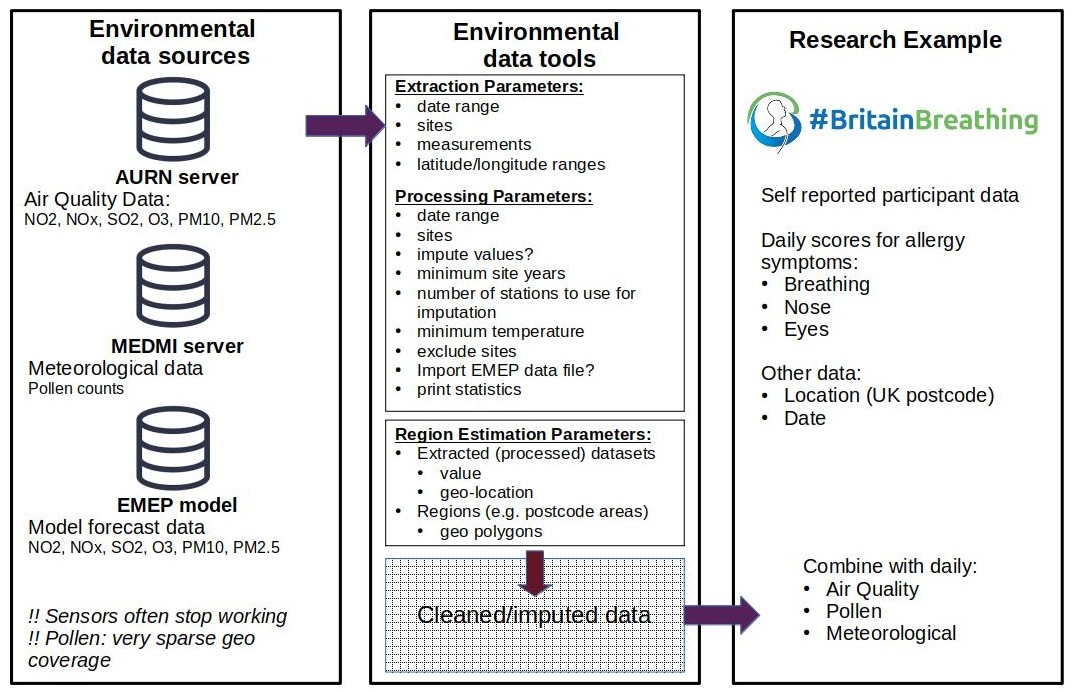

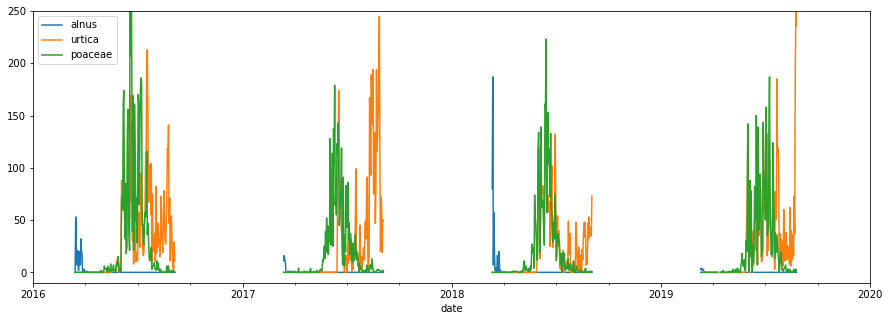

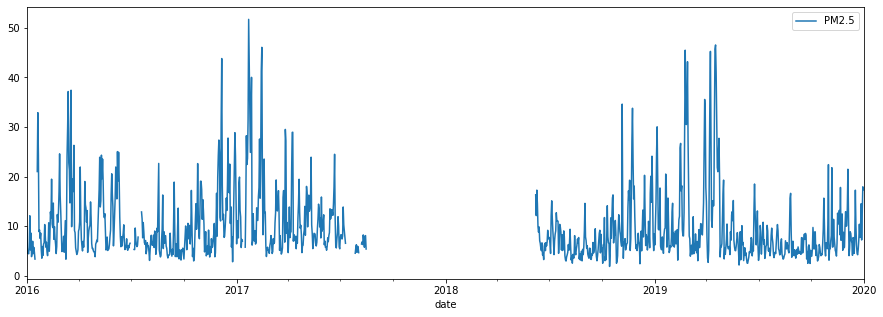

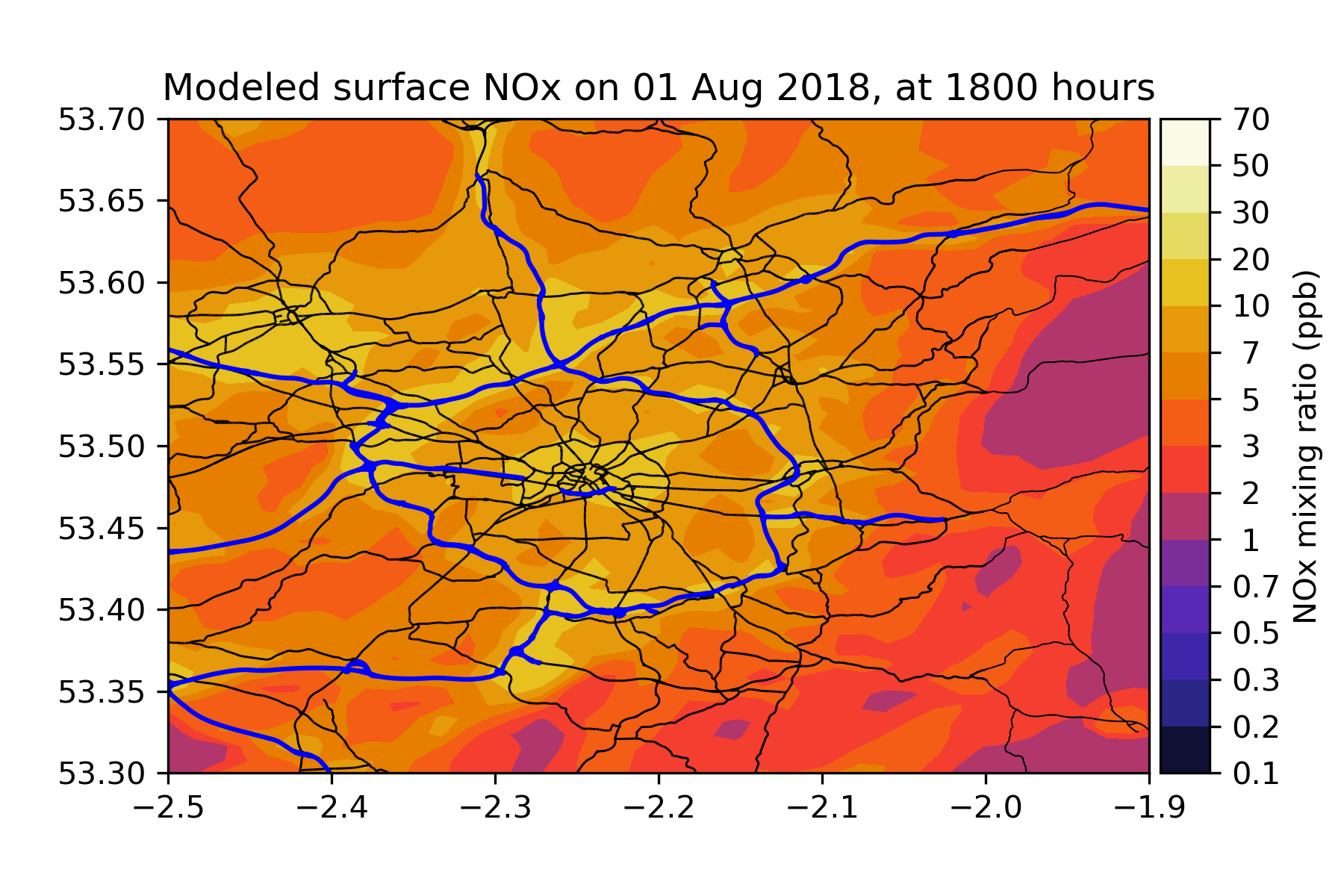

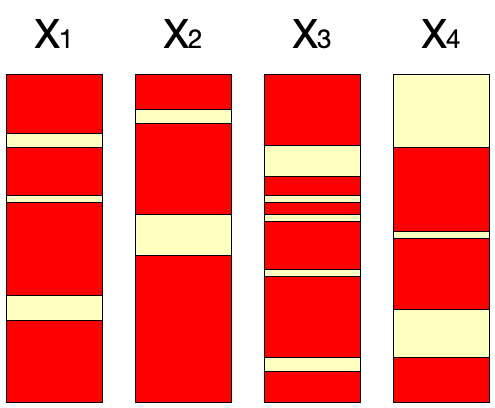

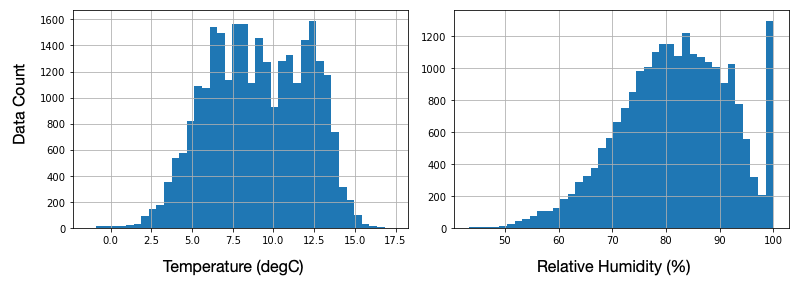

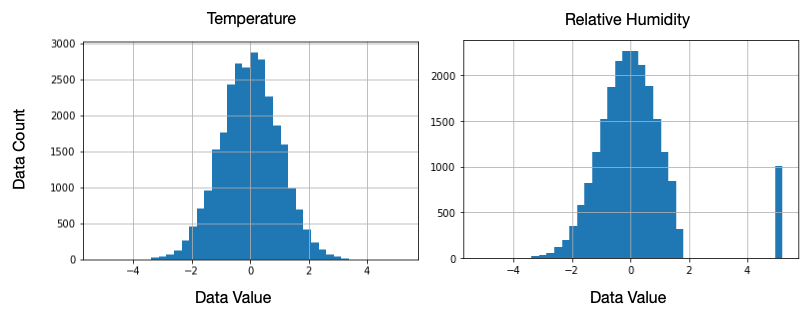

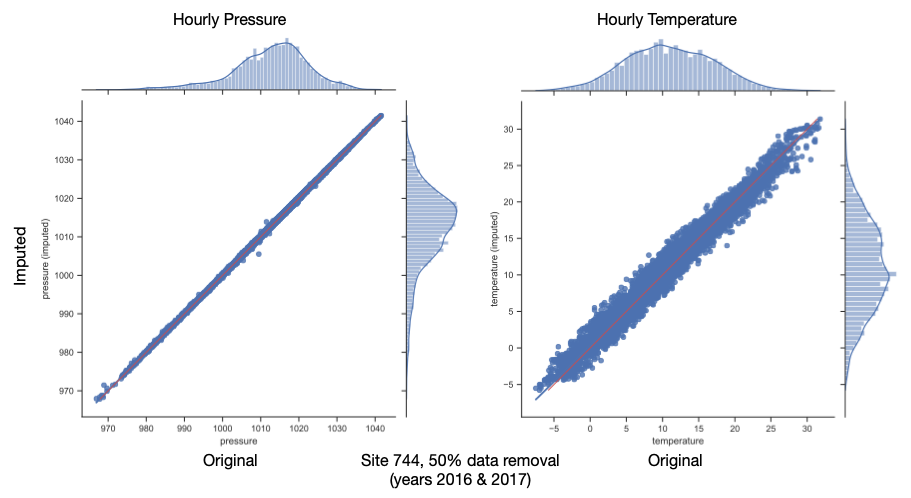

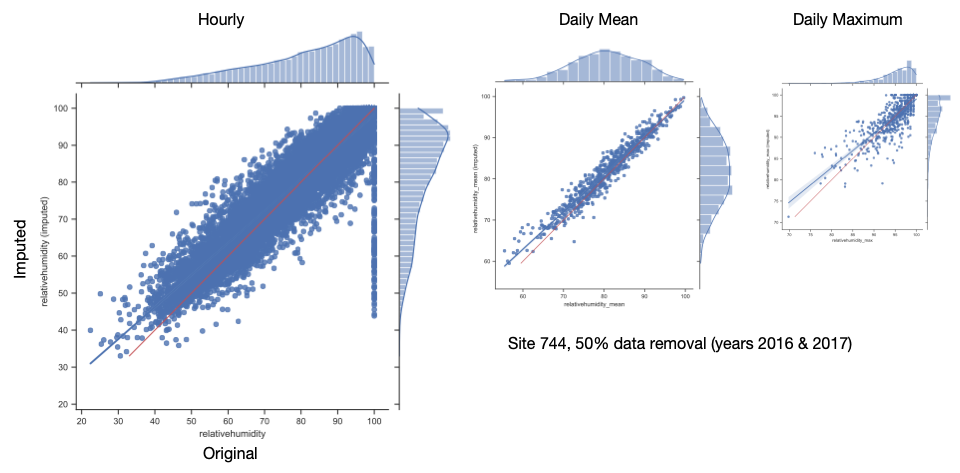

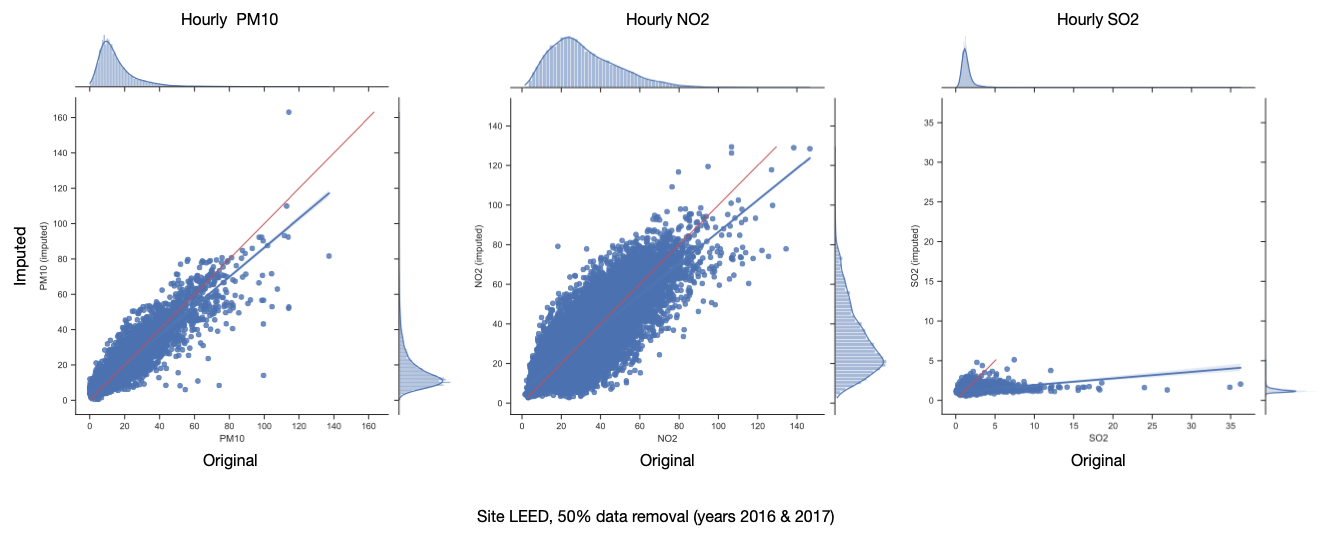

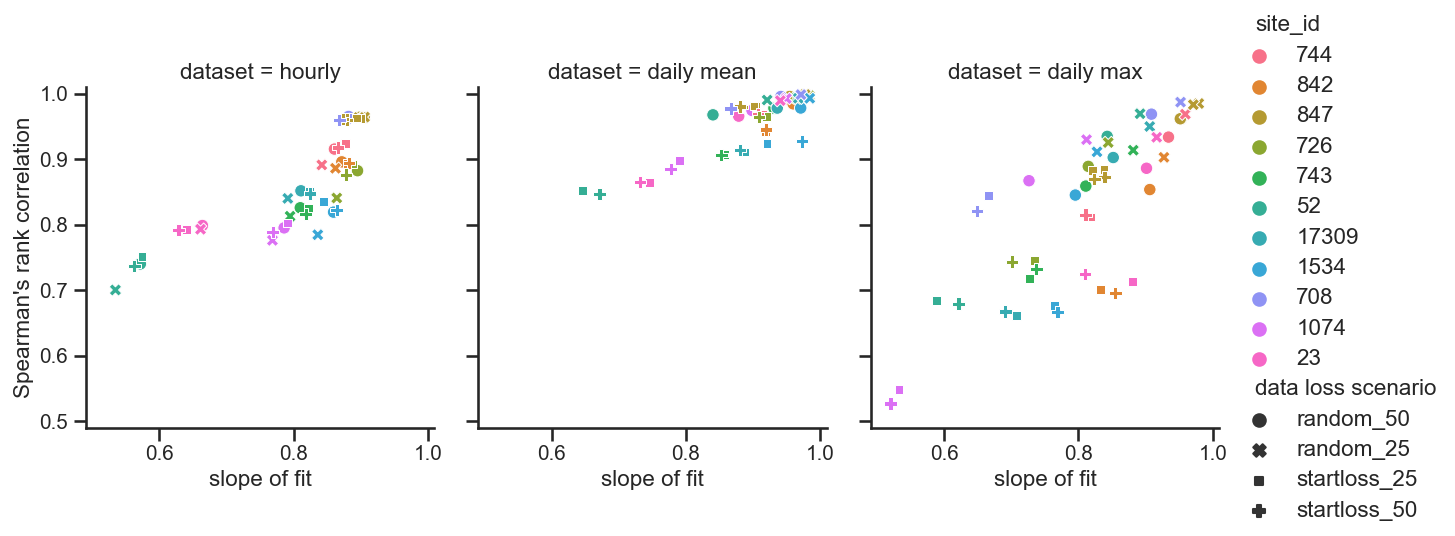

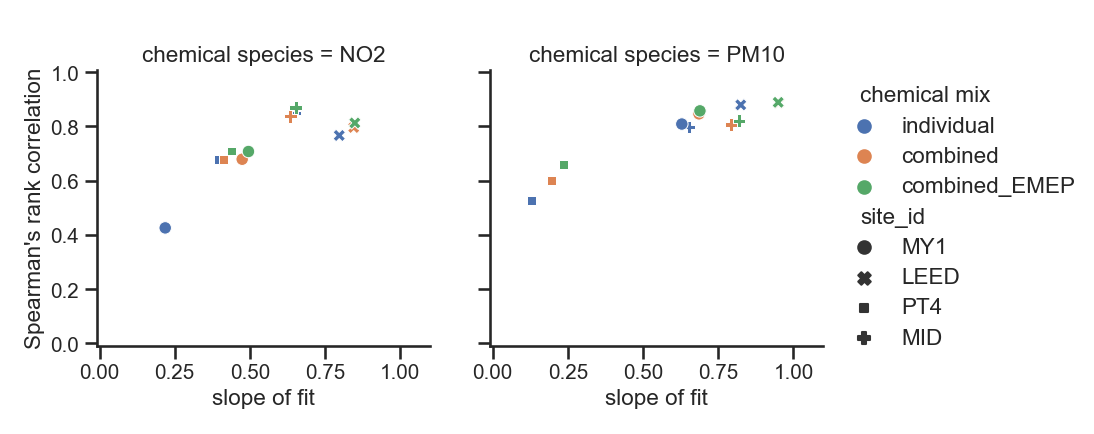

Whilst quantifying the impacts of poor air quality remains a global priority for both researchers and policy makers, transparent methodologies that support the collection and manipulation of such data are currently lacking. In support of the Britain Breathing citizen science project, which aims to investigate possible interactions between meteorological or air quality events and seasonal allergy symptoms, we have built a comprehensive dataset and a web application, ‘Mine the Gaps’, which presents daily air quality, pollen and weather readings from the Automatic Urban and Rural Network (AURN) and Met Office monitoring stations across the United Kingdom for the years 2016 to 2019 inclusive.

Measurement time series are rarely complete, so we have used machine learning techniques to fill the gaps in these records and give the best possible coverage. To address sparse regional coverage, we propose a simple baseline method called concentric regions. ‘Mine the Gaps’ can be used to explore and compare the imputed dataset and the regional estimates graphically. The application code is designed to be reusable and flexible, so that it can be used to interrogate other geographical datasets.

In this talk we will cover the development of these datasets, discussing some of the choices that we made and how reliable the imputation and estimation processes are for the different meteorological and air quality measurements. We will also demonstrate the ‘Mine the Gaps’ application.

The datasets that we discuss in this talk are available to download at https://zenodo.org/record/4416028 and https://zenodo.org/record/4475652. The data processing toolkit is written in Python (using scikit-learn for the machine learning processes) and is available at https://zenodo.org/record/4545257. The regional estimation code is also written in Python and is available at https://zenodo.org/record/4518866. The ‘Mine the Gaps’ application is written in Python using the Django web framework, and can be accessed at http://minethegaps.manchester.ac.uk. The code repository will be linked from the website when it is released.
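
To give a flavour of what gap filling with scikit-learn can look like, here is a minimal sketch. It is not the toolkit's actual pipeline: the choice of `IterativeImputer` with a `BayesianRidge` estimator, the three variables, the 15% gap rate and the synthetic readings are all assumptions made for illustration. It hides some values in a complete synthetic table, imputes them from the remaining measurements, and compares the imputed values with the hidden ones, which is one simple way to judge how reliable an imputation is.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (enables IterativeImputer)
from sklearn.impute import IterativeImputer
from sklearn.linear_model import BayesianRidge

# Synthetic daily readings for 60 days (illustrative only, not AURN or Met Office data).
rng = np.random.default_rng(0)
days = np.arange(60)
temperature = 10 + 8 * np.sin(2 * np.pi * days / 60) + rng.normal(0, 0.5, 60)
ozone = 40 + 1.5 * temperature + rng.normal(0, 2, 60)
pm25 = 12 - 0.3 * temperature + rng.normal(0, 1, 60)
readings = np.column_stack([temperature, ozone, pm25])

# Hide roughly 15% of the values to mimic gaps in the station records.
mask = rng.random(readings.shape) < 0.15
with_gaps = readings.copy()
with_gaps[mask] = np.nan

# Model each variable from the others and iterate until the estimates settle
# (assumed settings, chosen for the example rather than taken from the toolkit).
imputer = IterativeImputer(estimator=BayesianRidge(), max_iter=10, random_state=0)
filled = imputer.fit_transform(with_gaps)

# Score the imputation against the values that were deliberately hidden.
mae = np.abs(filled[mask] - readings[mask]).mean()
print(f"Filled {mask.sum()} gaps; mean absolute error = {mae:.2f}")
```

Observed values pass through unchanged and only the gaps are estimated, so the error measured on the hidden values gives a rough per-variable indication of how far the imputed readings can be trusted.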