Data-driven galaxy morphology models for image simulations

Francois Lanusse

with Rachel Mandelbaum

Blending Task Force Meeting

7 Jan. 2019

A few words about Generative Models

Variational Auto-Encoder

- Trained to reproduce the input image while trying to match the latent representation to a given prior

\log p(x) \geq \mathbb{E}_{z \sim q_{\varphi}(\cdot | x)}[\log p_{\theta}(x | z)] - \mathbb{D}_{KL}[q_{\varphi}(z | x) \,\|\, p(z)]
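As a rough illustration of the two terms in this bound, here is a minimal sketch assuming a diagonal-Gaussian encoder, a standard normal prior, and independent Gaussian pixel noise. The `toy_encode` and `toy_decode` functions are hypothetical stand-ins for the trained networks, not the model used in this talk.

```python
import math
import random


def kl_diag_gaussian_to_standard_normal(mu, log_var):
    """Closed-form KL[ N(mu, diag(exp(log_var))) || N(0, I) ]."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))


def gaussian_log_likelihood(x, x_rec, sigma):
    """log N(x | x_rec, sigma^2 I), assuming independent pixel noise."""
    chi2 = sum((a - b) ** 2 for a, b in zip(x, x_rec)) / sigma ** 2
    return -0.5 * (chi2 + len(x) * math.log(2.0 * math.pi * sigma ** 2))


def elbo_estimate(x, encode, decode, sigma, rng, n_samples=16):
    """Monte Carlo estimate of the lower bound on log p(x)."""
    mu, log_var = encode(x)
    recon = 0.0
    for _ in range(n_samples):
        # Reparameterised draw z ~ q(z | x)
        z = [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]
        recon += gaussian_log_likelihood(x, decode(z), sigma)
    # Reconstruction term minus the code regularisation term
    return recon / n_samples - kl_diag_gaussian_to_standard_normal(mu, log_var)


def toy_encode(x):
    """Hypothetical encoder: 1-d code centred on the mean pixel value."""
    return [sum(x) / len(x)], [math.log(0.1)]


def toy_decode(z):
    """Hypothetical decoder: constant 4-pixel image at the code value."""
    return [z[0]] * 4


print(elbo_estimate([1.0, 1.0, 1.0, 1.0], toy_encode, toy_decode, 0.5, random.Random(0)))
```

Narrowing the encoder variance improves the reconstruction term but increases the KL term, which is the trade-off between image quality and code regularization.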

Balance between code regularization and image quality

\log p(x) \geq \mathbb{E}_{z \sim q_{\varphi}(\cdot | x)}[\log p_{\theta}(x | z)] - \mathbb{D}_{KL}[q_{\varphi}(z | x) \,\|\, p(z)]

[Figure: Input and Reconstruction panels, shown for low KL divergence and for high KL divergence. Illustration from Engel et al. 2018]

Conditional Masked Autoregressive Flows for sampling from VAE

- MAF is a state-of-the-art ML technique for density estimation using neural networks

- We train a VAE with high KL divergence (i.e. low reconstruction loss) and use a MAF to model the effective distribution of the latent space

- Using a conditional MAF, we can adjust the distribution in the latent space to correspond to populations with desired properties
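To make the conditional flow idea concrete, here is a minimal sketch of a single affine autoregressive transform on a 2-d latent code, conditioned on a scalar `y` (for instance a normalised size). The function `shift_and_log_scale` is a hypothetical, hand-set stand-in for the masked neural network that a real MAF would learn. The sketch only shows how sampling and density evaluation fit together.

```python
import math
import random


def shift_and_log_scale(i, z_prev, y):
    """Hypothetical stand-in for the masked network.

    Returns (mu_i, alpha_i), which may depend only on the earlier
    dimensions z_<i and on the conditioning variable y.
    """
    if i == 0:
        return 0.5 * y, 0.1 * y
    return 0.8 * z_prev[0] - 0.3 * y, -0.2 + 0.05 * z_prev[0]


def sample(y, rng, dim=2):
    """Draw a latent code z ~ p(z | y), one dimension at a time."""
    z = []
    for i in range(dim):
        mu, alpha = shift_and_log_scale(i, z, y)
        z.append(mu + math.exp(alpha) * rng.gauss(0.0, 1.0))
    return z


def to_noise(z, y):
    """Map a code z back to base noise u and accumulate log|det du/dz|."""
    u, log_det = [], 0.0
    for i in range(len(z)):
        mu, alpha = shift_and_log_scale(i, z[:i], y)
        u.append((z[i] - mu) * math.exp(-alpha))
        log_det -= alpha
    return u, log_det


def log_prob(z, y):
    """log p(z | y) under a standard normal base density."""
    u, log_det = to_noise(z, y)
    log_base = sum(-0.5 * (ui * ui + math.log(2.0 * math.pi)) for ui in u)
    return log_base + log_det


rng = random.Random(1)
code = sample(y=1.0, rng=rng)
print(code, log_prob(code, y=1.0))
```

Changing `y` shifts and rescales the distribution of sampled codes, which is how a conditional sampler can target a population with desired properties.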

[Figure panels: code distribution for real images; code distribution learned by MAF. Axis: flux_radius [normalised]]

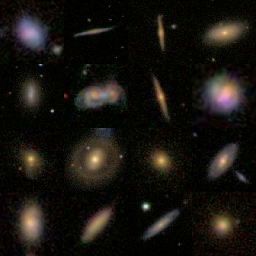

Training a Generative Model on COSMOS galaxies

- We use the GalSim COSMOS 25.2 sample as our training set

- We include the PSF as part of the generative model

- Last layer of the model is a convolution by the known PSF

- Generative model will output essentially unconvolved images

- We use a proper log likelihood taking into account the noise correlations

- We first train an unconditional VAE (time consuming) and then train on top of it a conditional MAF sampler (relatively fast)

- Our fiducial model is conditioned on size and magnitude
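The PSF and noise treatment above can be sketched in one dimension. This is a toy under stated assumptions, not the training code: the noise is taken to be stationary and periodic, so its covariance is circulant and diagonal in Fourier space, and `noise_corr` (the first row of that covariance) is assumed symmetric. All function names are illustrative.

```python
import cmath
import math


def dft(values):
    """Plain O(n^2) discrete Fourier transform (unnormalised)."""
    n = len(values)
    return [sum(v * cmath.exp(-2j * math.pi * k * m / n) for m, v in enumerate(values))
            for k in range(n)]


def circular_convolve(signal, kernel):
    """Periodic convolution, standing in for the final PSF layer."""
    n = len(signal)
    return [sum(signal[m] * kernel[(i - m) % n] for m in range(n)) for i in range(n)]


def correlated_gaussian_log_likelihood(data, unconvolved_model, psf, noise_corr):
    """Gaussian log-likelihood of data given a PSF-convolved model.

    noise_corr is the first row of a circulant noise covariance, so its
    DFT gives the noise power spectrum (the covariance eigenvalues).
    """
    n = len(data)
    model = circular_convolve(unconvolved_model, psf)
    residual = [d - m for d, m in zip(data, model)]
    power = [p.real for p in dft(noise_corr)]
    if min(power) <= 0.0:
        raise ValueError("noise power spectrum must be strictly positive")
    # r^T C^{-1} r evaluated mode by mode in Fourier space
    chi2 = sum(abs(r) ** 2 / p for r, p in zip(dft(residual), power)) / n
    log_det = sum(math.log(p) for p in power)
    return -0.5 * (chi2 + log_det + n * math.log(2.0 * math.pi))


psf = [0.5, 0.25, 0.0, 0.25]            # toy normalised PSF
noise_corr = [1.0, 0.3, 0.0, 0.3]       # toy correlated noise
print(correlated_gaussian_log_likelihood([0.4, 0.3, 0.1, 0.2], [1.0, 0.0, 0.0, 0.0],
                                         psf, noise_corr))
```

Because the convolution sits inside the likelihood, the quantity being fitted (`unconvolved_model`) is the image before the PSF is applied.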

Some examples

[Figure panels: Parametric, MAF-VAE, COSMOS]

AutoEncoder Reconstruction

Residuals

Second order moments
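For reference, a minimal sketch of second-order moments and the ellipticity derived from them. The slides do not say how the moments were measured. This sketch assumes plain unweighted moments on a noise-free stamp, whereas real measurements typically use weighted or adaptive moments.

```python
def second_moments(image):
    """Unweighted second-order moments of a stamp given as a list of rows."""
    flux = sum(sum(row) for row in image)
    # Flux-weighted centroid
    xc = sum(x * v for row in image for x, v in enumerate(row)) / flux
    yc = sum(y * v for y, row in enumerate(image) for v in row) / flux
    qxx = qyy = qxy = 0.0
    for y, row in enumerate(image):
        for x, v in enumerate(row):
            qxx += v * (x - xc) ** 2
            qyy += v * (y - yc) ** 2
            qxy += v * (x - xc) * (y - yc)
    return qxx / flux, qyy / flux, qxy / flux


def ellipticity(qxx, qyy, qxy):
    """Ellipticity components (e1, e2) from the second moments."""
    size = qxx + qyy
    return (qxx - qyy) / size, 2.0 * qxy / size


stamp = [[0.0, 1.0, 0.0],
         [1.0, 4.0, 1.0],
         [0.0, 1.0, 0.0]]
print(second_moments(stamp), ellipticity(*second_moments(stamp)))
```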

Morphological statistics

M and D statistics from Freeman et al. 2014

The GalSim Interface

Packaging and serving models

- We want a generic interface that can be used to execute any user-provided trained generative model

- TensorFlow Hub library:

- Computational graph and trained weights packaged in a single .tar.gz archive

- Takes a set of named inputs, returns a tensor

- Package additional meta-information (e.g. pixel size, stamp size)

- Created galsim-hub, a repository of trained deep generative models which can directly be used by installing the galsim-hub GalSim extension:

    $ pip install --user galsim-hub

Python interface

    import galsim
    import galsim_hub
    from astropy.table import Table

    # Load a trained generative model from the galsim-hub repository
    model = galsim_hub.GenerativeGalaxyModel('hub:cosmos_size_mag')

    # Defines the input conditions
    cat = Table([[5., 10., 20.],
                 [24., 24., 24.]],
                names=['flux_radius', 'mag_auto'])

    # Sample light profiles for these parameters
    ims = model.sample(cat)

    # psf was not defined on the slide; here we assume the same
    # Gaussian PSF as in the YAML example below
    psf = galsim.Gaussian(sigma=0.06)  # arcsec

    # Convolve by PSF
    ims = [galsim.Convolve(im, psf) for im in ims]

YAML driver

    modules:
        - galsim_hub

    psf :
        type : Gaussian
        sigma : 0.06  # arcsec

    gal :
        type : GenerativeModelGalaxy
        flux_radius : { type : Random , min : 5, max : 10 }
        mag_auto : { type : Random , min : 24., max : 25. }

    image :
        type : Tiled
        nx_tiles : 10
        ny_tiles : 10
        stamp_size : 64  # pixels
        pixel_scale : 0.03  # arcsec / pixel
        noise :
            type : COSMOS

    output :
        dir : output_yaml
        file_name : demo14.fits

    input :
        generative_model :
            file_name : 'hub:cosmos_size_mag'

Run the YAML driver with:

    $ galsim demo.yaml

Interfacing with BlendingToolKit

- BTK uses the WeakLensingDeblending package to draw images

- WeakLensingDeblending represents galaxies as sums of Sersic profiles (bulge + disk) and potentially an AGN

- Bulge + disk parameters and the flux in each band are provided by an extragalactic catalog

- How can we interface with the generative model in this setting?

Blending ToolKit

COSMOS parametric fits

- The COSMOS sample provided with GalSim includes bulge+disk parametric fits for a subset of galaxies

- We can use these parameters to condition the generative model:

- zphot, bulge_hlr, disk_hlr, bulge_q, disk_q, bulge_flux, disk_flux

- The image is drawn for the i-band, and flux is rescaled to match the input catalog in each band

A similar scheme would allow us to draw galaxy images for DC2/DC3
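The per-band rescaling step can be sketched as follows. This is a minimal illustration assuming the same morphology in every band, as the slide implies. The band names and flux values are hypothetical placeholders, not catalog values.

```python
def rescale_to_band_fluxes(stamp, band_fluxes):
    """Rescale one stamp (list of rows) so its total flux matches each band.

    stamp: image drawn once (e.g. for the i-band).
    band_fluxes: mapping from band name to the target total flux.
    """
    total = sum(sum(row) for row in stamp)
    if total <= 0.0:
        raise ValueError("stamp must have positive total flux")
    return {band: [[v * flux / total for v in row] for row in stamp]
            for band, flux in band_fluxes.items()}


# Hypothetical stamp and per-band catalog fluxes, for illustration only
i_band_stamp = [[0.0, 1.0, 0.0],
                [1.0, 6.0, 1.0],
                [0.0, 1.0, 0.0]]
images = rescale_to_band_fluxes(i_band_stamp, {"r": 80.0, "i": 100.0, "z": 120.0})
for band, image in images.items():
    print(band, sum(sum(row) for row in image))
```

This keeps the generative model single-band and pushes all color information into the catalog fluxes, at the cost of ignoring any wavelength dependence of the morphology.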

Example of running scarlet on parametric vs generative model

- Blends drawn and processed through scarlet using Sowmya's tools.

- In most cases the results are very similar, but there are some visible residuals for more extended objects.

- Working on more quantitative results.

Parametric:

Generative model:

Conclusion

- Framework for integrating generative models with GalSim

- Will release models based on COSMOS along with code to train your own models (GAN and VAE)

- These generative models will allow us to include more realistic morphologies for galaxies in future image simulations

- Update of Ravanbakhsh et al. 2017

Data-driven galaxy morphology models

By eiffl

Presentation for Blending Task Force meeting, Jan. 7 2019
