Commentary on

Deep Learning to identify structures in 3D data

by Stella Offner at SCMA VII

Francois Lanusse (CNRS/CEA Saclay)

Back in my day...

- Find out more about Morphological Component Analysis in Starck, Murtagh & Fadili (2016)
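Morphological Component Analysis separates a signal into components that are each sparse in a different dictionary. The following is a minimal 1D sketch of one standard MCA variant, not code from the book. It assumes a spikes-plus-cosines signal, uses the identity and an orthonormal DCT as the two dictionaries, and applies hard thresholding with a threshold lowered linearly to zero. The function names are hypothetical.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix: row k is the k-th cosine atom, C @ C.T == I."""
    k = np.arange(n)[:, None]
    t = np.arange(n)[None, :]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * t + 1) * k / (2 * n))
    C[0] /= np.sqrt(2.0)
    return C

def hard_threshold(a, lam):
    """Keep coefficients whose magnitude exceeds lam, zero the rest."""
    return np.where(np.abs(a) > lam, a, 0.0)

def mca(y, C, n_iter=200):
    """Split y into a spiky part (sparse in the identity basis) and a smooth
    part (sparse in the DCT basis C), lowering the threshold linearly to 0."""
    spikes = np.zeros_like(y)
    smooth = np.zeros_like(y)
    lam_max = max(np.abs(y).max(), np.abs(C @ y).max())
    for lam in np.linspace(lam_max, 0.0, n_iter):
        spikes = hard_threshold(y - smooth, lam)                # identity-domain update
        smooth = C.T @ hard_threshold(C @ (y - spikes), lam)    # DCT-domain update
    return spikes, smooth

# Toy signal: a few spikes plus two cosine atoms (illustrative values)
n = 256
C = dct_matrix(n)
true_spikes = np.zeros(n)
true_spikes[[20, 70, 130, 180, 240]] = [5.0, -4.0, 6.0, -5.0, 4.0]
true_smooth = 10.0 * C[3] + 8.0 * C[10]
spikes, smooth = mca(true_spikes + true_smooth, C)
```

The separation works because no spike is well represented by a few cosines, and no cosine is well represented by a few spikes. This low mutual coherence between the two dictionaries is the key assumption behind MCA.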

My own "Deep Learning moment"

My main takeaways

- U-net architecture

- The challenges of 3D data

U-Nets Everywhere

What is so special about this architecture?
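A common answer lies in the architecture's shape. A contracting path captures context at progressively coarser scales, and an expanding path restores full resolution. Skip connections then carry full-resolution features across, so fine detail is not lost. The toy NumPy forward pass below only traces this topology. Its "convolutions" are random 1×1 channel mixings, standing in for the learned 3×3 convolutions of a real U-Net, and all function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1x1(x, c_out):
    """Toy layer: random 1x1 channel mixing + ReLU (stand-in for learned convs)."""
    w = rng.normal(size=(x.shape[-1], c_out)) / np.sqrt(x.shape[-1])
    return np.maximum(x @ w, 0.0)

def down(x):
    """2x2 average pooling: halves both spatial dimensions."""
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))

def up(x):
    """Nearest-neighbour upsampling: doubles both spatial dimensions."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def unet(x, depth=3, base=8):
    skips = []
    c = base
    for _ in range(depth):                      # contracting path
        x = conv1x1(x, c)
        skips.append(x)                         # keep features at this resolution
        x = down(x)
        c *= 2
    x = conv1x1(x, c)                           # bottleneck: coarsest scale
    for skip in reversed(skips):                # expanding path
        c //= 2
        x = up(x)
        x = np.concatenate([x, skip], axis=-1)  # skip connection
        x = conv1x1(x, c)
    return conv1x1(x, 1)                        # per-pixel output map

out = unet(np.ones((64, 64, 1)))
```

The output has the same spatial shape as the input. This is what makes the same architecture usable for segmentation maps, denoised images, or score estimates.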

Selected applications in Astrophysics

- Semantic segmentation

- Solvers for inverse problems (denoising, deconvolution)

- Generative models

Semantic Segmentation: Morpheus

R. Hausen & B. Robertson (2020)
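Semantic segmentation assigns a class label to every pixel rather than to the whole image. Networks of this kind are commonly trained with a per-pixel softmax cross-entropy. The NumPy sketch below illustrates that loss. It is not Morpheus code, and the class count K = 5, the random label map, and the function name are illustrative assumptions.

```python
import numpy as np

def pixel_cross_entropy(logits, labels):
    """Mean per-pixel softmax cross-entropy.
    logits: (H, W, K) network outputs; labels: (H, W) integer class map."""
    z = logits - logits.max(axis=-1, keepdims=True)          # numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=-1, keepdims=True))
    h, w = labels.shape
    picked = log_probs[np.arange(h)[:, None], np.arange(w)[None, :], labels]
    return -picked.mean()

rng = np.random.default_rng(0)
K = 5                                     # number of classes (illustrative)
labels = rng.integers(0, K, size=(64, 64))
logits = np.zeros((64, 64, K))            # untrained network: uniform predictions
loss = pixel_cross_entropy(logits, labels)
segmentation = logits.argmax(axis=-1)     # predicted class for every pixel
```

With uniform predictions the loss equals log K. It approaches zero as the network puts its probability mass on the correct class at every pixel.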

Solving Inverse Problems: DeepMass

N. Jeffrey, F. Lanusse, O. Lahav, J.-L. Starck (2020)

Trained under an l2 loss:
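A network $f_\theta$ trained to minimize the mean squared error $\mathbb{E}\,\|x - f_\theta(y)\|^2$ approximates the posterior mean $\mathbb{E}[x \mid y]$, given enough capacity and training data. This is a standard result. The toy check below is not DeepMass code. It assumes a 1D Gaussian signal $x$, additive Gaussian noise, and a linear model $f(y) = a\,y$ in place of the network. In that setting the exact posterior mean is also linear, so the least-squares fit should recover it.

```python
import numpy as np

rng = np.random.default_rng(42)
s2, sigma2 = 4.0, 1.0          # prior variance of x, noise variance (toy values)
n = 200_000

x = rng.normal(0.0, np.sqrt(s2), n)            # "true" signal
y = x + rng.normal(0.0, np.sqrt(sigma2), n)    # noisy observation

# Minimize mean((x - a*y)^2) over the linear model f(y) = a*y (closed form)
a_hat = np.dot(y, x) / np.dot(y, y)

# Analytic posterior mean for this Gaussian model: E[x|y] = s2 / (s2 + sigma2) * y
a_posterior = s2 / (s2 + sigma2)
print(a_hat, a_posterior)  # a_hat should be close to 0.8
```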

Generative Models: Denoising Score Matching

Remy, Lanusse, Ramzi, Liu, Jeffrey, Starck (2020)

See Benjamin's poster for more details
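Denoising score matching relies on a link between denoising and the score. Consider a denoiser $r(y)$ trained under an l2 loss on data corrupted by Gaussian noise of variance $\sigma^2$. By Tweedie's formula, it gives the score of the noise-convolved density: $\nabla_y \log p_\sigma(y) = (r(y) - y)/\sigma^2$. The sketch below checks this in a 1D Gaussian toy model where everything is analytic. It is an illustration of the principle, not the code of Remy et al., and the model and names are assumptions.

```python
import numpy as np

s2, sigma2 = 4.0, 1.0               # prior variance and noise variance (toy values)
y = np.linspace(-5.0, 5.0, 101)

def log_p_sigma(y):
    """Log-density of y = x + n with x ~ N(0, s2) and n ~ N(0, sigma2)."""
    v = s2 + sigma2
    return -0.5 * y**2 / v - 0.5 * np.log(2 * np.pi * v)

def denoiser(y):
    """Optimal l2 denoiser E[x|y] for this Gaussian model."""
    return s2 / (s2 + sigma2) * y

# Score obtained from the denoiser via Tweedie's formula
score_tweedie = (denoiser(y) - y) / sigma2

# Score obtained by central finite differences of the log-density
eps = 1e-4
score_fd = (log_p_sigma(y + eps) - log_p_sigma(y - eps)) / (2 * eps)
```

In practice the denoiser is a U-Net trained on many noise levels, and the resulting score is what drives the sampling of a generative model.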

Dealing with large-scale 3D data

Excerpts from papers

Given the extra dimension in our model, we cannot maintain the same spatial resolution in our 3D model due to the limited memory on GPU. We reform the input data to an array shape of 64×64×32 in position position-velocity (PPV).

Application of Convolutional Neural Networks to Identify Stellar Feedback Bubbles in CO Emission, Xu et al. 2020

Limited by the size of GPU memory and the fact that 3D data consume more memory than lower dimensional tasks, we cannot feed the whole simulations into the GPU during training and testing.

AI-assisted super-resolution cosmological simulations, Li et al. 2020

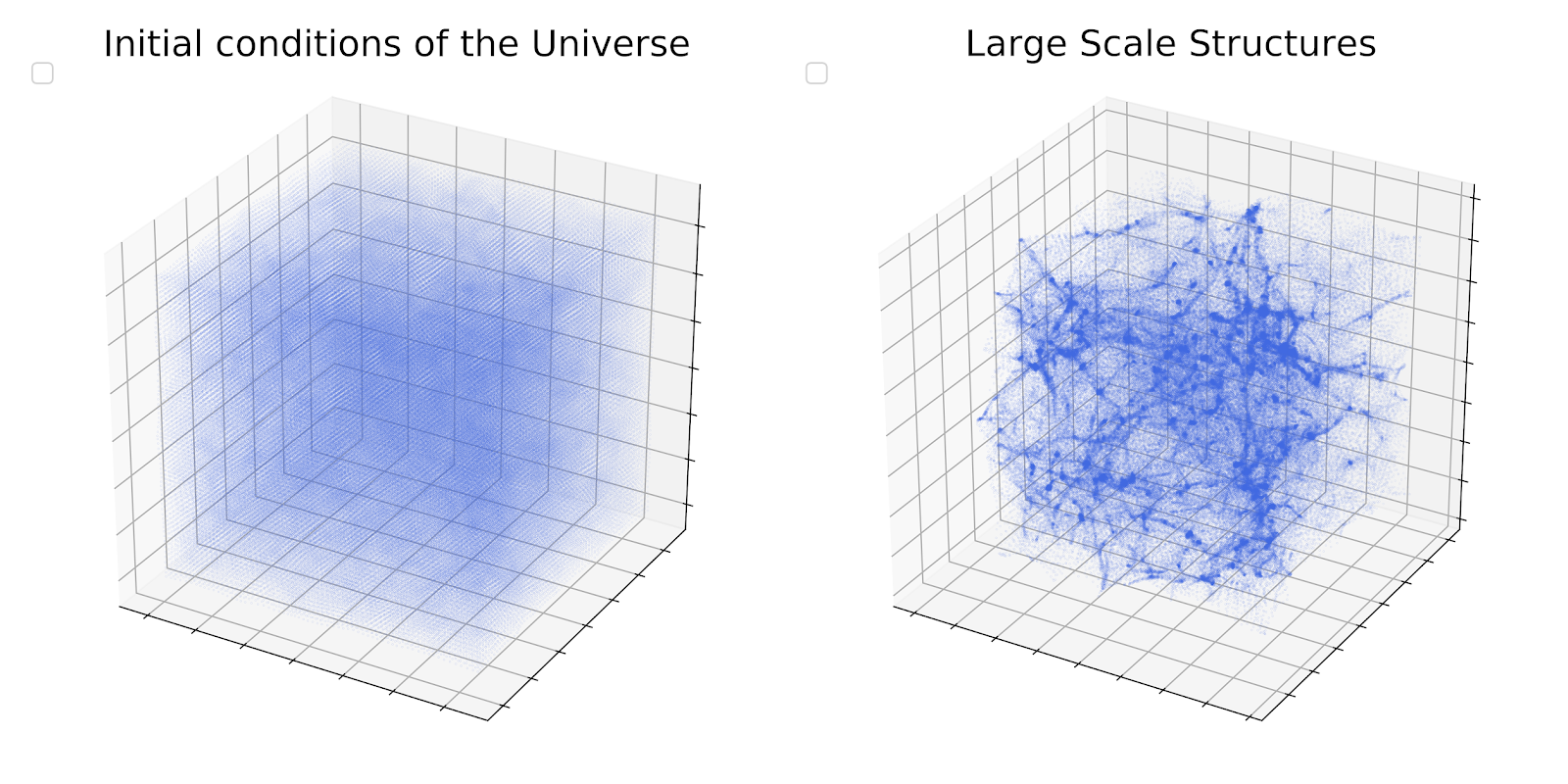
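A back-of-the-envelope count shows why both papers hit the GPU memory limit. The sketch below assumes float32 values and 64 channels. These are illustrative numbers, not taken from either paper, and the helper name is hypothetical. It compares a single feature map for one 2D image with the same map for one 3D volume. A U-Net keeps many such maps in memory for backpropagation.

```python
def activation_gib(spatial, channels, bytes_per_value=4):
    """Memory (GiB) of one feature map with the given spatial shape (float32 default)."""
    n = channels * bytes_per_value
    for s in spatial:
        n *= s
    return n / 2**30

print(activation_gib((512, 512), 64))        # 2D image:  0.0625 GiB
print(activation_gib((512, 512, 512), 64))   # 3D volume: 32.0 GiB
```

Adding the third dimension multiplies the cost by the side length (512× here), so one layer of one sample can already saturate a single GPU.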

My own struggle with 3D data

FlowPM, a particle-mesh N-body solver in TensorFlow
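A particle-mesh (PM) solver works on a full 3D mesh at every step, which is why such codes run into the same memory wall. The NumPy sketch below shows the two core PM steps on a periodic grid in grid units: cloud-in-cell (CIC) painting of particles onto the mesh, and an FFT solve of the Poisson equation. It is not FlowPM code. The function names are hypothetical, and physical constants (such as $4\pi G$ and the mean density) are omitted.

```python
import numpy as np

def cic_paint(pos, n):
    """Cloud-in-cell assignment of unit-mass particles to a periodic n^3 mesh.
    pos: (N, 3) positions in grid units, in [0, n)."""
    mesh = np.zeros((n, n, n))
    i0 = np.floor(pos).astype(int)
    d = pos - i0                                  # offset of each particle in its cell
    for ox in (0, 1):
        for oy in (0, 1):
            for oz in (0, 1):
                w = ((d[:, 0] if ox else 1 - d[:, 0])
                     * (d[:, 1] if oy else 1 - d[:, 1])
                     * (d[:, 2] if oz else 1 - d[:, 2]))
                idx = (i0 + [ox, oy, oz]) % n     # periodic wrap
                np.add.at(mesh, (idx[:, 0], idx[:, 1], idx[:, 2]), w)
    return mesh

def solve_poisson(delta):
    """Solve laplacian(phi) = delta on a periodic mesh via FFT (grid spacing 1)."""
    n = delta.shape[0]
    k = 2 * np.pi * np.fft.fftfreq(n)
    kx, ky, kz = np.meshgrid(k, k, k, indexing="ij")
    k2 = kx**2 + ky**2 + kz**2
    k2[0, 0, 0] = 1.0                             # avoid 0/0; mean mode zeroed below
    phi_k = -np.fft.fftn(delta) / k2
    phi_k[0, 0, 0] = 0.0
    return np.real(np.fft.ifftn(phi_k))

rng = np.random.default_rng(0)
n = 32
pos = rng.uniform(0, n, size=(10_000, 3))
rho = cic_paint(pos, n)
delta = rho / rho.mean() - 1.0                    # density contrast
phi = solve_poisson(delta)                        # gravitational potential (up to constants)
```

A full PM step would then take the gradient of `phi`, interpolate forces back to the particles, and update velocities and positions. Every one of these steps touches an $n^3$ array.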

CosmicRIM: Recurrent Inference Machine for initial condition reconstruction

(Modi, Lanusse, Seljak, Spergel, Perreault-Levasseur 2021)
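For reference, a Recurrent Inference Machine (Putzky & Welling, 2017) refines an estimate $x_t$ with a recurrent network $g_\phi$. The network is fed the gradient of the data log-likelihood and carries a hidden state $h_t$. Below is the generic form, where $y$ is the observed data and $x_t$ the current estimate of the initial conditions. CosmicRIM's exact parametrization may differ.

$$
\begin{aligned}
h_{t+1} &= g_\phi^{h}\left(\nabla_{x}\log p(y \mid x_t),\; x_t,\; h_t\right),\\
x_{t+1} &= x_t + g_\phi^{x}\left(\nabla_{x}\log p(y \mid x_t),\; x_t,\; h_{t+1}\right).
\end{aligned}
$$

Evaluating $\nabla_x \log p(y \mid x_t)$ at every step requires differentiating through the forward model, here a 3D N-body simulation. That is where the memory pressure of 3D data enters.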

Why is this still a problem today?

Pipeline parallelism
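As a reminder of the general idea, pipeline parallelism splits a network's layers into stages placed on different GPUs and streams micro-batches through them. Each stage still holds the complete activations of a whole sample for its layers. It therefore does not help when the feature maps of a single 3D volume exceed the memory of one GPU. With few micro-batches, most devices also sit idle. The toy schedule below is a GPipe-style forward pass with hypothetical function names, and it counts that idle "bubble".

```python
def gpipe_schedule(n_stages, n_microbatches):
    """Return {time_step: [(stage, microbatch), ...]} for a GPipe-style forward pass.
    Stage s can process micro-batch m once stage s-1 has finished it."""
    schedule = {}
    for s in range(n_stages):
        for m in range(n_microbatches):
            schedule.setdefault(s + m, []).append((s, m))
    return schedule

def bubble_fraction(n_stages, n_microbatches):
    """Fraction of device-time slots left idle during the forward pass."""
    steps = len(gpipe_schedule(n_stages, n_microbatches))
    busy = n_stages * n_microbatches
    return 1 - busy / (n_stages * steps)

print(bubble_fraction(4, 8))   # 3/11, about 0.27
print(bubble_fraction(4, 1))   # 0.75: one large sample leaves 3 of 4 GPUs idle
```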

Towards a solution

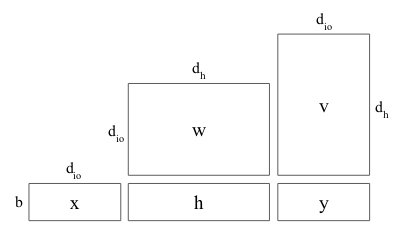

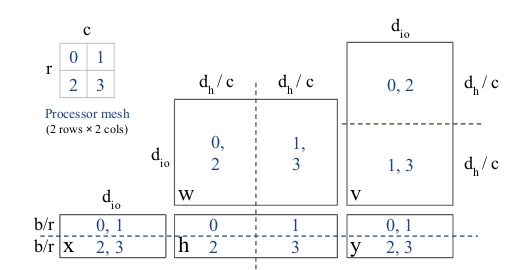

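```python
import mesh_tensorflow as mtf
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()  # Mesh TensorFlow builds TF1-style graphs

# A simple 2-layer fully connected network: y = ReLU(x w + bias) v
# Illustrative sizes (not from the original slide)
b, d_io, d_h = 64, 512, 2048

graph = mtf.Graph()
mesh = mtf.Mesh(graph, "my_mesh")

# Define named dimensions
batch = mtf.Dimension("batch", b)
io = mtf.Dimension("io", d_io)
hidden = mtf.Dimension("hidden", d_h)

# Import a regular TF tensor; x.shape == [batch, io]
x = mtf.import_tf_tensor(mesh, tf.random.normal([b, d_io]), shape=[batch, io])

# Get variables (every mtf variable lives on a mesh)
w = mtf.get_variable(mesh, "w", shape=[io, hidden])
bias = mtf.get_variable(mesh, "bias", shape=[hidden])
v = mtf.get_variable(mesh, "v", shape=[hidden, io])

# Define operations: einsum sums over dimensions absent from output_shape
h = mtf.relu(mtf.einsum([x, w], output_shape=[batch, hidden]) + bias)
y = mtf.einsum([h, v], output_shape=[batch, io])

# Lay out the "hidden" dimension across 4 GPUs (illustrative device list)
devices = ["gpu:0", "gpu:1", "gpu:2", "gpu:3"]
mesh_impl = mtf.placement_mesh_impl.PlacementMeshImpl(
    [("processors", 4)], [("hidden", "processors")], devices)
lowering = mtf.Lowering(graph, {mesh: mesh_impl})
tf_y = lowering.export_to_tf_tensor(y)
```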

- Mesh TensorFlow, a TensorFlow-based framework for model parallelism

- We are developing a GPU backend for large-scale model distribution on GPU clusters; join us if you are interested!

https://github.com/DifferentiableUniverseInitiative/mesh
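Distributing a 3D network over many GPUs ultimately means splitting the volume itself, not just the layers. One common approach is spatial domain decomposition. Each device owns a slab of the volume, and neighbours exchange thin "halo" slices so that a convolution near a slab boundary sees the correct inputs. The NumPy sketch below simulates this on one machine with a 3×1×1 box filter and periodic boundaries. It is not Mesh TensorFlow code, and the function names are hypothetical.

```python
import numpy as np

def smooth3d(v):
    """Reference: periodic 3x1x1 box filter applied to the whole volume at once."""
    return (np.roll(v, 1, axis=0) + v + np.roll(v, -1, axis=0)) / 3.0

def distributed_smooth(v, n_devices):
    """Split v into slabs along axis 0, pad each slab with one-cell halos
    copied from its neighbours, filter locally, and drop the halos."""
    slabs = np.split(v, n_devices, axis=0)
    out = []
    for i, slab in enumerate(slabs):
        left = slabs[(i - 1) % n_devices][-1:]    # halo from the left neighbour
        right = slabs[(i + 1) % n_devices][:1]    # halo from the right neighbour
        padded = np.concatenate([left, slab, right], axis=0)
        # Filter without wrap-around on the padded slab; output has the slab's size
        out.append((padded[:-2] + padded[1:-1] + padded[2:]) / 3.0)
    return np.concatenate(out, axis=0)

rng = np.random.default_rng(1)
volume = rng.normal(size=(64, 32, 32))
result = distributed_smooth(volume, 4)
```

Each device only ever holds its own slab plus two thin halos, so memory per device shrinks with the number of devices. The price is a small amount of neighbour-to-neighbour communication per convolution. A full 3D kernel split along several axes would need halos along each of them.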

Fresh from last week

- 2048^3 simulation of the Universe, distributed on 256 GPUs
  - Runs in 3 min
  - Uses multiple TB of RAM
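For scale, here is a rough count under stated assumptions: one particle per cell of a 2048³ grid, with float32 positions and velocities. The particle state alone is 192 GiB, or about 0.75 GiB per GPU when spread over 256 devices. The rest of the multiple TB would presumably go to density and force meshes, FFT workspaces and other intermediate tensors. That breakdown is an assumption, not a reported figure.

```python
n_particles = 2048**3
bytes_per_particle = 6 * 4        # 3 positions + 3 velocities, float32 (assumed)
n_gpus = 256

state_gib = n_particles * bytes_per_particle / 2**30
mesh_gib = 2048**3 * 4 / 2**30    # one float32 2048^3 mesh

print(state_gib)            # 192.0
print(state_gib / n_gpus)   # 0.75 per GPU
print(mesh_gib)             # 32.0
```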

Main Takeaways

- Deep learning has revolutionized structure identification; Stella's talk is an excellent illustration of that.

- U-nets as an architecture are extremely versatile and successful.

- Deep learning with 3D data is still a challenge in 2021 because our needs in (astro)physics are unique: our volumes are often too large to fit in the memory of a single GPU.

Commentary on Stella Offner's talk

By eiffl
