JSON & Proto encoders revised

Streaming Encoding for LIST Responses

Problem

- API server's memory usage spikes

- Out-of-memory (OOM) attacks on the control-plane Node

- Losing other processes running on the same Node

Goal

- Implement JSON and Protocol Buffer streaming encoders for collections

- Reduce and stabilize API server memory usage for large LIST responses

Proposal

Implement custom streaming encoders for JSON and Protocol Buffers (Proto) for responses to LIST requests. A LIST request (collection) returns a list of instances of a specific type.

    {
      "kind": "ServiceList",
      "apiVersion": "v1",
      "metadata": {
        "resourceVersion": "2947301"
      },
      "items": [
        {
          "metadata": {
            "name": "kubernetes",
            "namespace": "default",
            ...
          "metadata": {
            "name": "kube-dns",
            "namespace": "kube-system",
            ...

Custom encoders

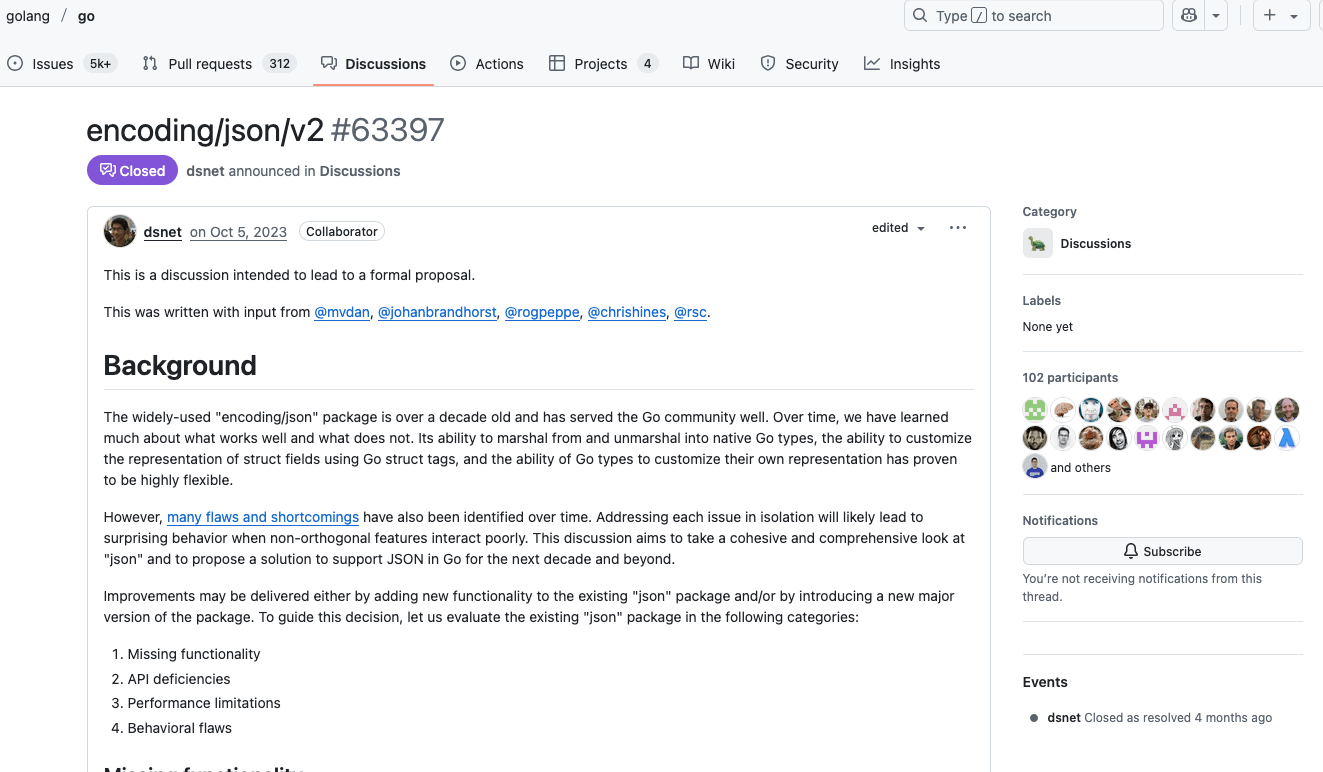

Streaming JSON list encoder

- Temporary until json/v2 is stabilized; the v1 library doesn't support streaming

Streaming Proto list encoder

- same as json/v2

    apiServer:
      extraArgs:
        feature-gates: |
          StreamingCollectionEncodingToJSON=true,
          StreamingCollectionEncodingToProtobuf=true

Introduced: 1.33

Stage: Beta (immediately)

Default: True

Caching

Snapshottable API server cache

Problem

- Unpredictable Memory Pressure

- Ineffective API Priority and Fairness (APF) Throttling

Proposal

Improve kube-apiserver's watch cache by generating B-tree snapshots, allowing it to serve LIST requests for previous states directly from the cache.

Goal

- Reduce the memory allocation when serving historical LIST requests

- Improve API server performance and stability

- Prevent inconsistent responses returned by the cache
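Serving historical LIST requests from snapshots can be sketched as below. This is a simplified illustration with hypothetical names (`snapshot`, `snapshotCache`, `apply`, `list`): it copies a map for every update, whereas the proposal uses B-tree snapshots, which would avoid the full copy. The point shown is the lookup: a LIST at a given resourceVersion is answered from the latest snapshot at or before that version, and anything outside the retained range is not served from the cache.

```go
package main

import (
	"fmt"
	"sort"
)

// snapshot is an immutable view of the cache at one resourceVersion.
type snapshot struct {
	rv    uint64
	items map[string]string // key -> object, simplified to a string
}

// snapshotCache keeps snapshots ordered by ascending resourceVersion.
type snapshotCache struct {
	snaps []snapshot
}

// apply records an update at rv by copying the previous state.
// Assumption: a real implementation would clone a B-tree instead of a map.
func (c *snapshotCache) apply(rv uint64, key, value string) {
	next := map[string]string{}
	if n := len(c.snaps); n > 0 {
		for k, v := range c.snaps[n-1].items {
			next[k] = v
		}
	}
	next[key] = value
	c.snaps = append(c.snaps, snapshot{rv: rv, items: next})
}

// list returns the state as of rv. ok is false when rv is older than the
// oldest snapshot or newer than the latest one; the caller would then have
// to use another path (for example, reading from etcd).
func (c *snapshotCache) list(rv uint64) (items map[string]string, ok bool) {
	n := len(c.snaps)
	if n == 0 || rv < c.snaps[0].rv || rv > c.snaps[n-1].rv {
		return nil, false
	}
	// First snapshot with a version greater than rv; the one before it applies.
	i := sort.Search(n, func(i int) bool { return c.snaps[i].rv > rv })
	return c.snaps[i-1].items, true
}

func main() {
	c := &snapshotCache{}
	c.apply(90, "default/kubernetes", "v1")
	c.apply(100, "kube-system/kube-dns", "v1")

	for _, rv := range []uint64{80, 90, 95, 100} {
		items, ok := c.list(rv)
		fmt.Printf("resourceVersion=%d served=%v items=%d\n", rv, ok, len(items))
	}
}
```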

Caching

- Read directly from etcd

[Diagram: a LIST request for historical state reaches the API server, which issues a READ to etcd.]

- Read from cache (recent)

[Diagram: etcd, watchcache, API server. The watchcache tracks resourceVersion 1, 2, ..., 100; a request for resourceVersion=100 is served from the cache.]

- Consistent reads from cache

[Diagram: etcd, watchcache, API server. The watchcache tracks resourceVersion 1, 2, ..., 100; requests for resourceVersion=90 and resourceVersion=100 each receive a response.]

Introduced: 1.33

Stage: Alpha

Default: False

    apiServer:
      extraArgs:
        feature-gates: |
          ListFromCacheSnapshot=true,
          DetectCacheInconsistency=true

DetectCacheInconsistency: not implemented yet

By fmuyassarov