Old school auditing

with the new JSONB stuff

{ "name": "Felix Kunde" , "email": "felix-kunde@gmx.de"}

Slides: http://slides.com/fxku/pgmemento_foss4gna16

Hi!

I'm Felix.

I'm from Berlin.

Working at a university.

Mining on traffic sensor data.

Fell in love with PostGIS 5yrs ago.

Used it for 3d city models.

Missed versioning.

Did it myself.

Voilà.

Who got me here?

Motivation

Related projects

Implementation

Initial Idea:

Version control for 3D city models

Version control inside the DBMS

Linear

Hierarchical

(Lohse 2007)

Linear versioning usecases

A link to the past

http://interaktiv.morgenpost.de/berlins-neue-skyline/

Hierarchical versioning

City planning scenarios

http://www.hamburg.de/sehenswuerdigkeiten/4520674/neue-koehlbrandbruecke/

3D City Database

- Open source project to store CityGML-based city models in PostGIS (github.com/3dcitydb)

- Want to know more? Visit the talk by Claus Nagel tomorrow:

Existent solution for 3DCityDB

Version control of a proprietary DBMS

Linear versioning with timestamps

Working in different "workspaces" (hierachical versioning)

Merging of workspaces is possible

Great in theory, but working not so well in the field ...

Conclusion

Clear separation between production and archive state

Logs should be easy to access and editable

Performance of queries shall not decrease

How to do it?

-

Use triggers to catch change events

-

Do something with the OLD version

-

Provide queryable versions for each row

-

Going back to a previous DB state would be nice

Everything in one place

-

Create a validity range for each row

-

OLD and NEW together in one table

-

Versioning is easy, but tables get messy

-

Include time condition for each query

-

How to delete logs?

QGIS DB Manager Versioning works like this

Everything in another place

-

Putting OLD into another table or schema

-

Validity range for each row in history table

-

Include time condition only for versioning queries

-

Dynamic time travel through views

-

How to delete logs?

Some examples for history tables

tablelog by Andreas Scherbaum

temporal_tables extension by Vlad Arkhipov

Tardis approach by Magnus Hagander

table_version extension by Jeremy Palmer

Generic auditing

Why have a history table for each audited table,

when you can just have one?

OLD can be converted into into a semi-structured data type:

RECORD, hstore, JSON, JSONB?

Don't need to care for DDL changes!

Craig Ringer does this with audit trigger 91plus (hstore)

And pgMemento does it as well.

Oh nice, but ...

-

Versioning gets more complicated then

-

Querying these logs is different (API changes)

-

Querying relational history tables is probably faster

-

DDL changes could be managed by event triggers

Disk space

(C) virualcitySYSTEMS 2016

Geometry, rasters and BLOBs

-

Logging complex objects even when they have not changed?

-

Calculate delta between OLD and NEW

-

Log only changed columns (need comparison)

-

Creating deltas produces unstructured logs

-

These could be handled nicely with hstore, JONSB etc.

-

Trigger overhead grows further

Pivoting changes

-

For each changed column generate a new row in a log table

-

column_name | pk | txid | tz | op | old_value | new_value

-

Cyan Audit by Moshe Jacobsen does it this way

- Enabling / disabling auditing on column-level

PostgreSQL Logical Decoding

-

New API since PG 9.4 to access replication streams

-

Decoding the WAL logs

-

Write changes to replication slots

-

Auditing and versioning are both possible

-

Output plugins: WAL-Format >> wished format

-

More Infos: Docu, FOSS4G NA, Postgres Open, FOSDEM, PgConfRu

Auditing using Logical Decoding

Auditing transaction meta data (no data log):

- pgaudit by Abhijit Menon-Sen & Ian Barwick (2nd Quadrant)

-

pgaudit fork by David Steele (Crunchy Data)

Audit log to JSONB, to restore in a (PostGIS) database:

- logicaldecoding by Sebastian R. Schmidt

(there might a talk about it @foss4g in Bonn)

Hierarchical version control

of spatial data

Use case: Multiple users working in their own branches

- QGIS Versioning Plugin by OSLandia

-

pgVersion by Horst Düster

- GeoGig by Boundless

- Other tools ...

How it all started with pgMemento

Table row >> row_to_json(OLD) >> JSON

JSON >> json_populate_record(null::test_table, JSON) >> Table row

Generic tracking of changes

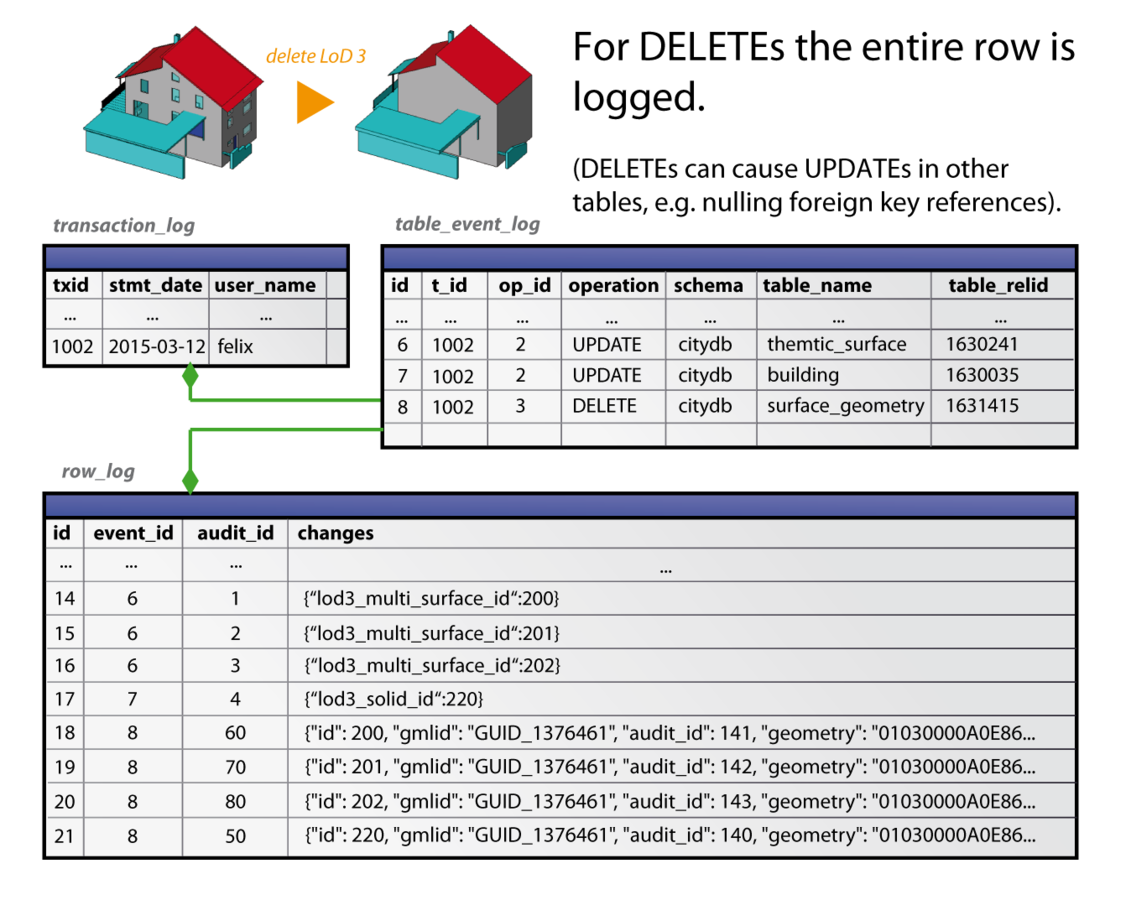

Log tables

DML log tables

-

Transaction_log: Meta data about transactions

-

Table_event_log: Meta data about table events related to txids

-

Row_log: Table for data log referenced to events

DDL log tables

-

Audit_table_log: Shows which tables exist for txid range

-

Audit_column_log: Shows which columns exist for txid range

Statement-level triggers

-

BEFORE DML changes log infos about current txid (UNIQUE)

-

BEFORE DML changes log infos about table event

-

BEFORE TRUNCATE statement log all the removed data

Row-level triggers

-

AFTER insert log inserted rows without the data (use first event ID for current txid)

-

AFTER update log deltas of updated row (use first event ID for current txid)

-

AFTER delete log data of deleted rows (use last event ID for current txid)

Event triggers

- Tag 'CREATE TABLE': Enable auditing for new table and fill DDL logs

-

Tag 'ALTER TABLE': Update the audit_column_log

-

Tag 'DROP TABLE': Update the DDL logs

-

Drop-Events can be narrowed down to single tables (PG 9.4) pg_event_trigger_dropped_objects()

-

Postgres 9.5 got us pg_event_trigger_ddl_commands()

Additional audit id for each table

-

A unique ID is necessary to trace a row in the logs

-

Primary key could be used but it can consist of multiple columns

-

pgMemento adds an additional audit_id column to a table

-

There is only one global sequence for all audit_ids

INSERT case

... and how it affects the log tables

UPDATE case

UPDATE building SET measured_height = 12.8 WHERE id = 1;

Creating the deltas

SELECT COALESCE(

(SELECT ('{'

|| string_agg(to_json(key) || ':' || value, ',')

|| '}')

FROM jsonb_each(row_to_json(OLD)::jsonb)

WHERE

NOT ('{'

|| to_json(key) || ':' || value

|| '}')::jsonb

<@ row_to_json(NEW)::jsonb

),

'{}')::jsonb AS delta

http://8kb.co.uk/blog/2015/01/27/wanting-hstore-style-operators-in-jsonb-revisited/

DELETE case

Fast queries thanks to GIN index

CREATE INDEX row_log_changes_idx ON pgmemento.row_log USING GIN (changes); JSON query: Which transactions has changed a certain column?

SELECT t.txid

FROM pgmemento.transaction_log t

JOIN pgmemento.table_event_log e ON t.txid = e.transaction_id

JOIN pgmemento.row_log r ON r.event_id = e.id

WHERE

r.audit_id = 4

AND

(r.changes ? 'measured_height');

-> Index Scan using row_log_audit_idx on row_log r

Index Cond: (audit_id = 4)

Filter: (changes ? 'measured_height'::text) JSON query 2: Searching for a specific key-value combination

SELECT DISTINCT audit_id FROM pgmemento.row_log

WHERE

changes @> '{"measured_height": 0.0}'::jsonb;

-> Bitmap Index Scan on row_log_changes_idx

Index Cond: (changes @> '{"measured_height": 0.0}'::jsonb)

Restore a former table

or database state

SELECT pgmemento.restore_schema_state(

1, -- Start of time slice

1002, -- End of time slice

'citydb', -- Source schema

'state_before_delete', -- Target schema

'TABLE', -- Relation type

0 -- Update restored state (views only)

);

First check: Which tables existed for the upper txid boundary

(audit_table_log table is queried)

What happens internally?

- Get valid rows for the given time slice

- Fetch Audit_IDs before Tx (using DISTINCT)

- Exclude Audit_IDs of already deleted entries

Get columns of a table for given txid

-

Therefore, query the audit_column_log table

SELECT column_name

FROM pgmemento.audit_column_log

WHERE table_relid = 123456

AND txid_range @> 1002::numeric

Create temporary table

-

For the last step we need a table as a template

-

Template should be historically correct

-

Create a temporary table from the audit_column_log

-

If restoring as tables these templates will be dropped on commit

-

If restoring as views these templates need to be preserved

Find old values

-

For each audit id loop over found columns

-

For each column search for the first appearance inside the data log

-

If nothing was found, query the recent state of the table

SELECT 'my_column' AS key, COALESCE(

(SELECT (r.changes -> 'my_column')

FROM pgmemento.row_log r

JOIN pgmemento.table_event_log e ON r.event_id = e.id

JOIN pgmemento.transaction_log t ON t.txid = e.transaction_id

WHERE t.txid >= 1002

AND r.audit_id = 40

AND (r.changes ? 'my_column')

ORDER BY r.id LIMIT 1

),

(SELECT COALESCE(to_json(my_column), NULL)::jsonb

FROM my_schema.my_table

WHERE audit_id = 40

)

) AS valueFind old values

Connect JSONB fragments

) -- end of WITH block, that has collected valid audit_ids

SELECT v.log_entry FROM valid_ids f

JOIN LATERAL ( -- has to be applied for each audit_id

SELECT json_build_object(

q1.key, q1.value,

q2.key, q2.value,

...)::jsonb AS log_entry

FROM ( -- search for old values (see previous slide)

SELECT q.key, q.value FROM (

SELECT 'id' AS key, r.changes -> 'id'

FROM pgmemento.row_log r ...

)q ) q1,

SELECT q.key, q.value FROM (

SELECT 'name' AS key, r.changes -> 'name'

FROM pgmemento.row_log r ...

)q ) q2, ...

Restoring the table

) -- previous steps are encapsulated in a WITH block

SELECT p.* FROM restore rq

JOIN LATERAL (

SELECT * FROM jsonb_populate_record(

null::my_temp_table, -- template

rq.log_entry -- concatenated JSONB

)

) p ON (true)

Revert transactions

Case 1: If deleted >> INSERT

Case 2: If updated >> UPDATE

Case 3: If inserted >> DELETE

Query to prepare the revert

SELECT r.audit_order, r.audit_id, r.changes,

a.schema_name, a.table_name, e.op_id

FROM pgmemento.table_event_log e

JOIN pgmemento.audit_table_log a ON a.relid = e.table_relid

JOIN pgmemento.transaction_log t ON t.txid = e.transaction_id

JOIN LATERAL (

SELECT

CASE WHEN e.op_id > 2 THEN

rank() OVER (ORDER BY audit_id ASC)

ELSE

rank() OVER (ORDER BY audit_id DESC)

END AS audit_order,

audit_id, changes

FROM pgmemento.row_log

WHERE event_id = e.id

) r ON (true)

WHERE upper(a.txid_range) IS NULL

AND t.txid = tid

ORDER BY e.id DESC, audit_order ASC;

Revert transactions

-

Reverts are processed in a loop to guarantee a correct order

-

Foreign key violations are avoided (unless no cascade stuff is done)

-

Can also be processed for a range of transactions

-

If there are no foreign keys only the oldest event per row should be reverted, because it's faster

Caveats

-

Of course, it slows down DDL and DML operations

-

No auditing for constraints, indexes and other DB objects

-

Restored views disappear when session ends

-

No merging of schemas

-

No partition strategy for data log table

-

More thoughts on restrictions and protection for log tables

-

No GUI, just SQL coding at the moment

-

Only supports Postgres 9.4 or higher

Additional notes

Probably not the right option for write-extensive databases (even though only deltas are stored)

Manipulation of log tables is very easy at the moment

pgMemento is no substitution for backup and recovery tools

Repository

https://github.com/pgMemento/pgMemento

Any questions ?

Contact:

Felix Kunde

felix-kunde@gmx.de

+4930 4504 3824

See you in Bonn!

pgMemento_FOSS4G-NA16

By fxku

pgMemento_FOSS4G-NA16

- 4,608