Neuromorphic Systems for Mobile Computing

Gregor Lenz

My background

- Master's degree in Biomedical Engineering in Vienna, Austria

- 2.5 years working in a tech start-up, IT consultancy, Imperial College London, Prophesee

- About to finish a PhD in neuromorphic engineering in Paris, France

Neuromorphic System

1. Sensor

2. Algorithm

3. Hardware

Goal: low-power system for mobile devices

Advantage: temporal and spatial sparsity

1. Connecting a Neuromorphic Camera to a Mobile Device

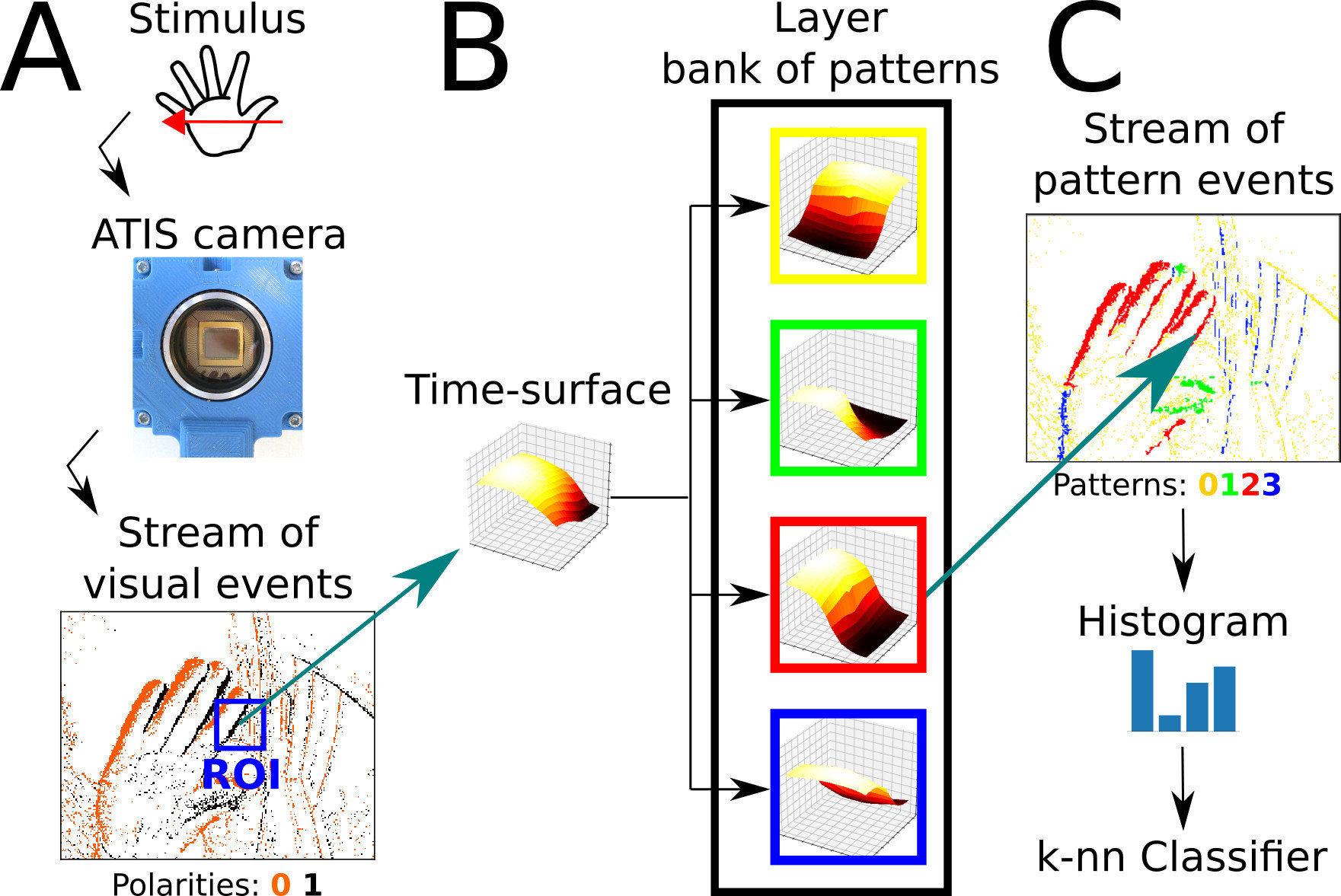

Event-based Visual Recognition on Mobile Phone

- Motivation: assist elderly and visually impaired people with hand gestures and voice commands

- Prototype that connects a small event camera to a smartphone

- Event-based, always-on processing

- Integrates different modalities (gestures / speech)

- Displays event camera output in real time

- Detects 6 different gestures: 4 directions, home, select

Maro, Lenz, Reeves and Benosman, Event-based Visual Gesture Recognition with Background Suppression running on a smart-phone, 14th ICAG 2019. Best demo award.

Extended Android Framework for Mobile Phones

- Extended framework for other visual tasks: event-based optical flow, image reconstruction

- Variable frame rates save power when there is no new input

- Grey-level images allow downstream classical computer vision pipelines
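One simple way to obtain a variable frame rate is to emit a grey-level frame only after a fixed number of events has arrived, so a static scene produces no frames and no downstream work. The sketch below assumes that scheme; the `Event` type, `variable_rate_frames`, the event budget and the additive brightness update are hypothetical illustrations, not the framework's actual image reconstruction method.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Tuple


@dataclass
class Event:
    t_us: int      # timestamp in microseconds
    x: int         # column
    y: int         # row
    polarity: int  # +1 for ON (brighter), -1 for OFF (darker)


Frame = List[List[float]]


def variable_rate_frames(events: Iterable[Event], width: int, height: int,
                         events_per_frame: int = 1000,
                         step: float = 0.1) -> Iterator[Tuple[int, Frame]]:
    """Yield (timestamp, grey-level frame) once every `events_per_frame` events.

    Hypothetical scheme: no events means no frames, so downstream code stays idle.
    """
    frame = [[0.5] * width for _ in range(height)]  # start from mid-grey
    count = 0
    for ev in events:
        # Crude brightness estimate: ON brightens, OFF darkens, clipped to [0, 1]
        value = frame[ev.y][ev.x] + step * ev.polarity
        frame[ev.y][ev.x] = min(1.0, max(0.0, value))
        count += 1
        if count == events_per_frame:
            # Copy the frame so later events do not modify what was emitted
            yield ev.t_us, [row[:] for row in frame]
            count = 0
```

Because frames are triggered by an event budget rather than a clock, a busy scene yields many frames and an empty one yields none; each emitted frame is a plain 2-D grey-level array that conventional image-processing code can consume.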

2. Efficient Neuromorphic Algorithm for Camera Data

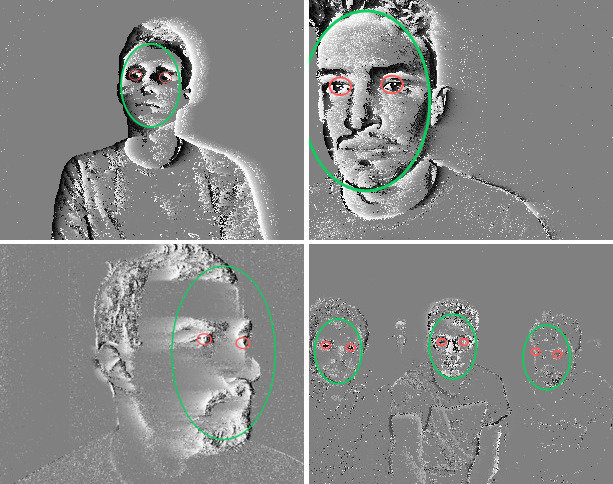

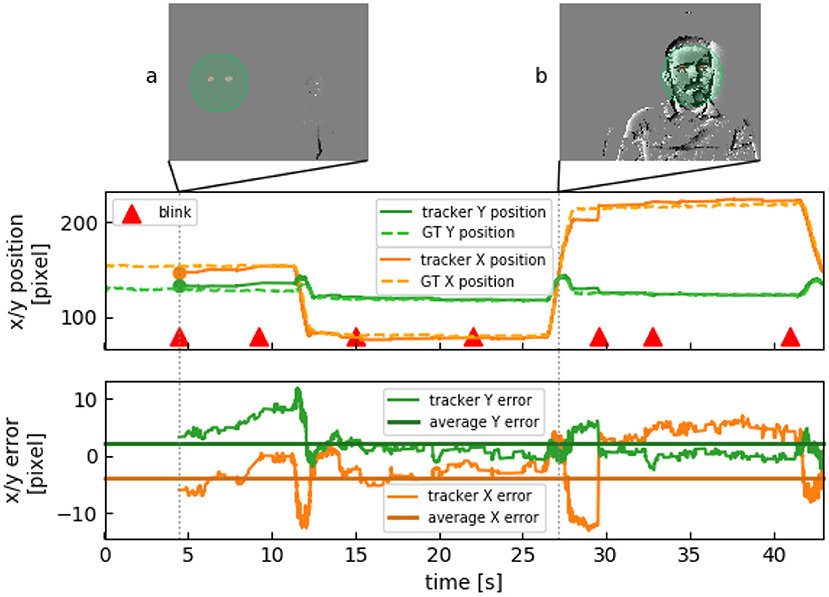

Event-based Face Detection Using the Dynamics of Eye Blinks

Lenz, Ieng and Benosman, Event-based Face Detection and Tracking Using the Dynamics of Eye Blinks, Frontiers in Neuroscience, 2020.

- tracking with μs precision, even in difficult lighting conditions

- lower power consumption than gold-standard methods

- robust to multiple faces and partial occlusions
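A blink produces a short, dense burst of events in a small image region. A minimal way to flag such bursts is an exponentially decaying activity counter per spatial tile, sketched below. Tile size, time constant and threshold are hypothetical, and this is a strongly simplified stand-in: it omits any blink template and any pairing of the two eyes that a full face detector would need.

```python
import math
from typing import Dict, Tuple


class TileActivity:
    """Exponentially decaying event activity per spatial tile (hypothetical parameters)."""

    def __init__(self, tile_size: int = 16, tau_us: float = 50_000.0,
                 threshold: float = 20.0) -> None:
        self.tile_size = tile_size
        self.tau_us = tau_us
        self.threshold = threshold
        # tile index -> (activity, timestamp of last update in microseconds)
        self.state: Dict[Tuple[int, int], Tuple[float, int]] = {}

    def update(self, t_us: int, x: int, y: int) -> bool:
        """Add one event; return True when the tile's activity crosses the threshold."""
        tile = (x // self.tile_size, y // self.tile_size)
        activity, last_t = self.state.get(tile, (0.0, t_us))
        # Decay the stored activity by the elapsed time, then count this event
        decayed = activity * math.exp(-(t_us - last_t) / self.tau_us)
        new_activity = decayed + 1.0
        self.state[tile] = (new_activity, t_us)
        # Report only the upward crossing, i.e. the onset of a burst
        return decayed < self.threshold <= new_activity
```

Since each event only touches one tile's state, the cost is proportional to the number of events, not to the sensor resolution.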

3. Neural Computation on Neuromorphic Hardware

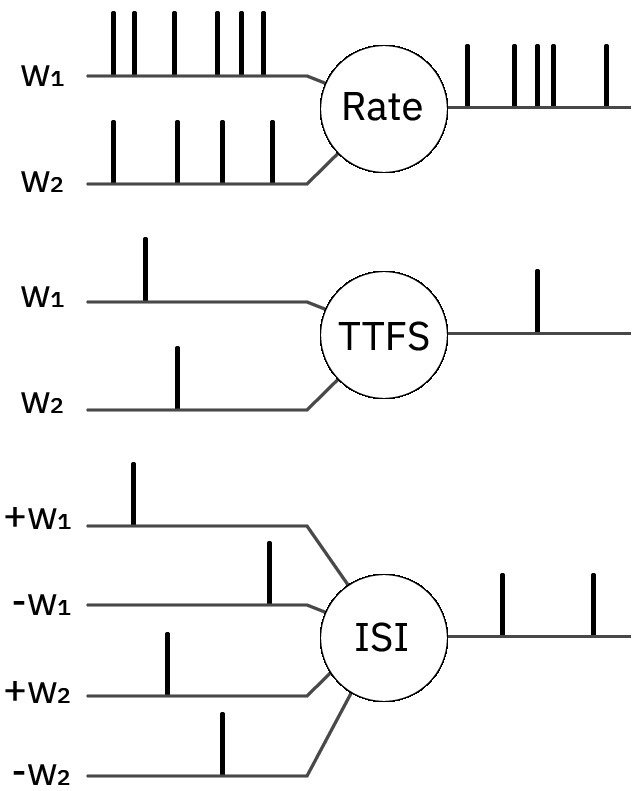

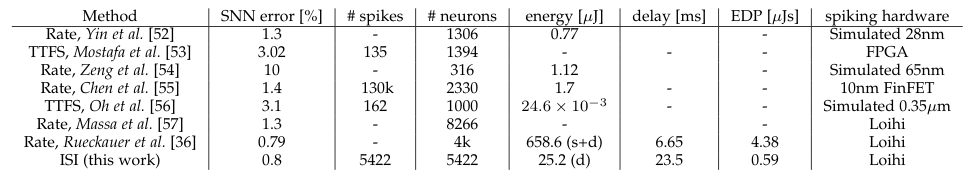

Neural Encoding Schemes

- Many spiking neural networks use rate coding

- Temporal encoding exists: Time To First Spike (TTFS), but it is fairly inaccurate

- We use an alternative encoding scheme based on inter-spike intervals (ISI)
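To make the difference between the three schemes concrete, the sketch below encodes a value x in [0, 1] in each way. The linear interval mapping Δt = T_min + x·T_cod is in the style of STICK; all constants and function names are illustrative assumptions.

```python
from typing import List

T_WINDOW_MS = 100.0  # hypothetical coding window for rate and TTFS codes
T_MIN_MS = 10.0      # hypothetical minimum interval for the ISI code
T_COD_MS = 100.0     # hypothetical coding range for the ISI code


def rate_code(x: float, max_rate_hz: float = 200.0) -> List[float]:
    """Rate coding: regularly spaced spikes, spike count proportional to x."""
    n_spikes = round(x * max_rate_hz * T_WINDOW_MS / 1000.0)
    if n_spikes == 0:
        return []
    period = T_WINDOW_MS / n_spikes
    return [i * period for i in range(n_spikes)]


def ttfs_code(x: float) -> List[float]:
    """Time to first spike: one spike, earlier for larger x (needs a global t = 0)."""
    return [(1.0 - x) * T_WINDOW_MS]


def isi_code(x: float, t0: float = 0.0) -> List[float]:
    """Inter-spike interval: two spikes whose distance encodes x."""
    return [t0, t0 + T_MIN_MS + x * T_COD_MS]


def isi_decode(spikes: List[float]) -> float:
    """Recover x from the interval between the two spikes."""
    return (spikes[1] - spikes[0] - T_MIN_MS) / T_COD_MS
```

With these constants, x = 0.5 costs 10 spikes under rate coding but only 2 under the ISI code; and because the ISI value lives in the distance between spikes, decoding does not depend on the start time `t0`.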

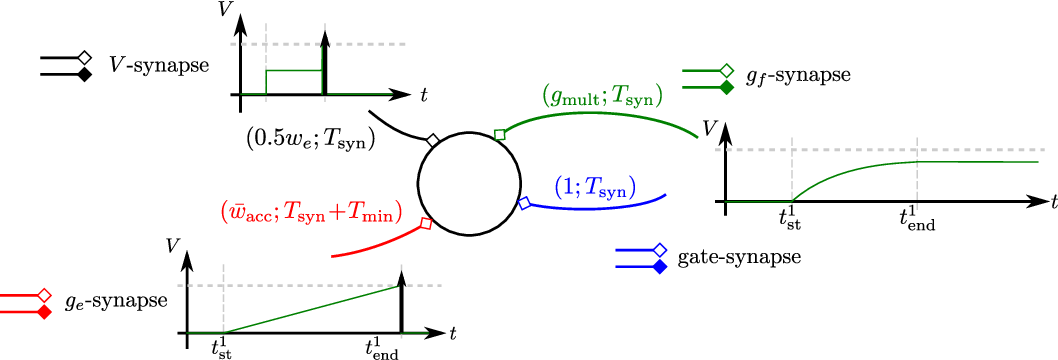

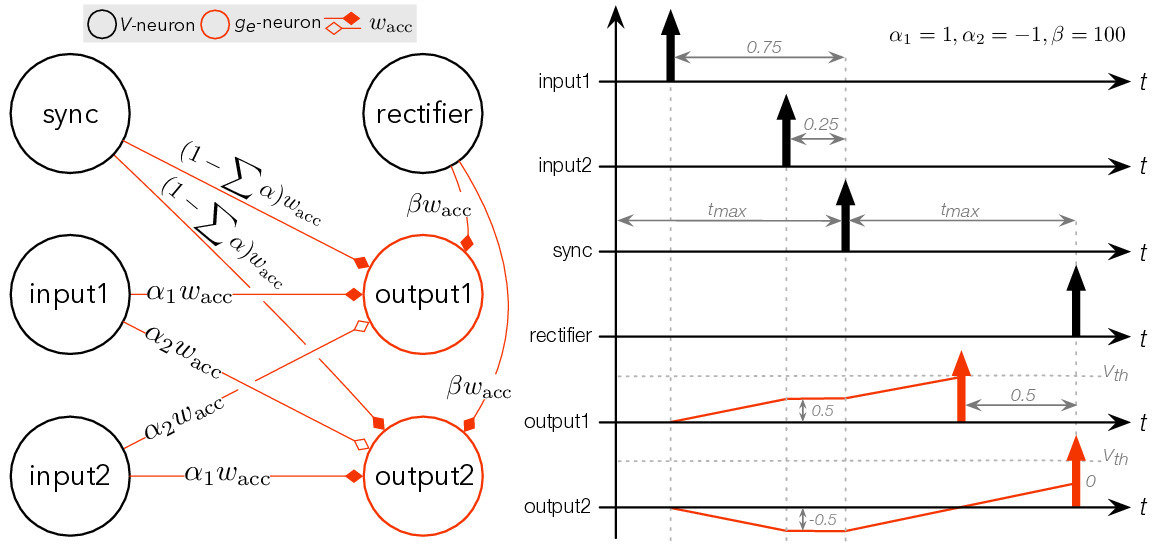

Spike Time Computation Kernel (STICK)

- Values are encoded in inter-spike intervals

- 4 different synapse types provide 3 different current accumulation methods

Lagorce & Benosman, 2015

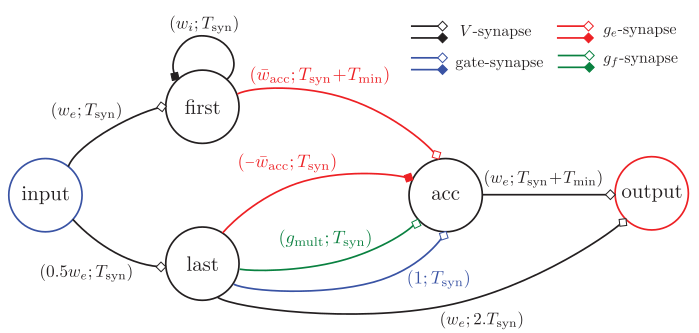

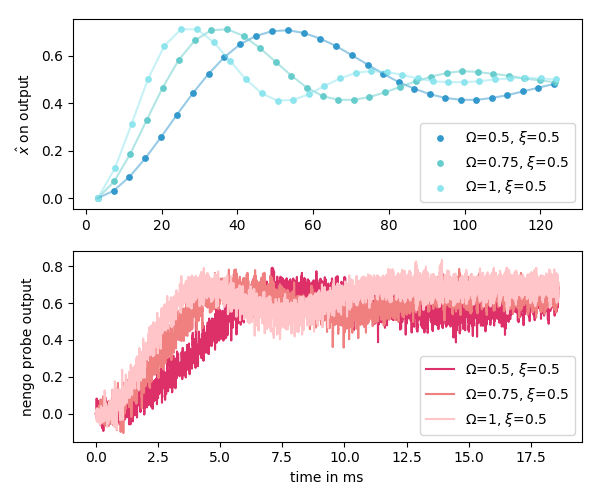

- Mathematical operations are cast into handcrafted spiking neural networks

- Networks for value storage, linear, nonlinear and differential computation

Lagorce & Benosman, 2015
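The value-storage idea can be illustrated with a single non-leaky neuron driven by a constant-current synapse: an input interval Δt charges the membrane to a potential proportional to Δt, which is then held until a recall spike restarts the current. This is a simplified model of our own, not the full STICK network; the constants, `Integrator` and `store_then_recall` are hypothetical, and the exponential and gating synapses are omitted. In this reduced form the read-out interval is the complement T_max - Δt, so a non-inverting memory would need a second stage.

```python
from dataclasses import dataclass

T_MIN = 10.0              # ms, hypothetical
T_COD = 100.0             # ms, hypothetical
T_MAX = T_MIN + T_COD     # longest representable interval
V_T = 10.0                # firing threshold (hypothetical units)
TAU_M = 100_000.0         # ms, very long membrane constant: effectively no leak


@dataclass
class Integrator:
    """Non-leaky neuron driven only by a constant-current (g_e) synapse."""
    v: float = 0.0    # membrane potential
    g_e: float = 0.0  # constant input current
    t: float = 0.0    # time of last update (ms)

    def advance(self, t: float) -> None:
        # tau_m dV/dt = g_e, so V grows linearly between input spikes
        self.v += self.g_e * (t - self.t) / TAU_M
        self.t = t

    def time_to_threshold(self) -> float:
        return (V_T - self.v) * TAU_M / self.g_e


# Weight that drives the membrane from 0 to V_T in exactly T_MAX
W_ACC = V_T * TAU_M / T_MAX


def store_then_recall(dt_in: float, t_recall: float) -> float:
    """Store dt_in (expected in [0, T_MAX]) as a potential; return the recall interval."""
    n = Integrator()
    n.g_e += W_ACC                # first input spike at t = 0 switches the current on
    n.advance(dt_in)
    n.g_e -= W_ACC                # second input spike switches it off: V now holds the value
    n.advance(t_recall)           # potential is held while g_e = 0
    n.g_e += W_ACC                # recall spike restarts integration
    return n.time_to_threshold()  # equals T_MAX - dt_in in this simplified model
```

For example, storing a 40 ms interval and recalling it at 500 ms gives an output interval of about 70 ms (110 ms - 40 ms), independent of how long the value was held.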

Logarithm network

Neural Computation on Loihi

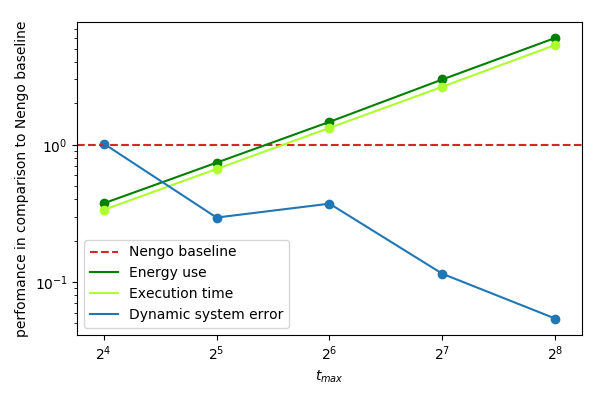

- Composable networks for general-purpose computing using artificial neurons

- More efficient at computing dynamical systems than a population-coded framework on the same hardware

- Conversion of networks trained on GPUs for efficient inference on Loihi

- Our method uses one spike per neuron at similar classification accuracy, significantly fewer spikes than rate-coded methods
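A toy comparison of spike counts: integrate-and-fire neurons under rate coding emit roughly a·T spikes each over T time steps, whereas a single-spike temporal code emits at most one per neuron. The mapping t = (1 - a)·T below is a generic time-to-first-spike stand-in with activations assumed normalised to [0, 1]; it is not necessarily the encoding used in the presented conversion method, and the function names are hypothetical.

```python
from typing import List, Sequence


def rate_coded_spikes(activations: Sequence[float], timesteps: int) -> int:
    """Integrate-and-fire with reset-by-subtraction; total spikes over all neurons."""
    total = 0
    for a in activations:
        v = 0.0
        for _ in range(timesteps):
            v += a           # constant input equal to the ANN activation
            if v >= 1.0:     # threshold normalised to 1
                v -= 1.0
                total += 1
    return total


def single_spike_times(activations: Sequence[float], timesteps: int) -> List[float]:
    """Temporal code: each active neuron fires once, earlier for larger activation."""
    return [(1.0 - a) * timesteps for a in activations if a > 0.0]
```

For the activations [0, 0.25, 0.5, 1] over 100 steps, the rate code emits 175 spikes while the single-spike code emits 3, at spike times 75, 50 and 0.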

Conclusions

- Event cameras are suitable for sporadic, sparse signals, but need tight integration

- Mobile devices can already benefit from low-power event-by-event approaches or even variable frame rates, but could do so even more using spiking neural networks

- Temporal coding on neuromorphic hardware looks promising and opens up the possibility of a spiking computer

- ANN/SNN conversion using temporal coding achieves a low energy-delay product (EDP) at small batch sizes
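For reference, the energy-delay product multiplies the energy spent per inference by the latency of that inference, so a method only scores well if it is both frugal and fast (lower is better). With E_inf and t_inf as our own notation:

```latex
\mathrm{EDP} = E_{\mathrm{inf}} \cdot t_{\mathrm{inf}} \qquad [\mathrm{J \cdot s}]
```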

Why am I the right candidate?

- about to complete a PhD in neuromorphic engineering

- familiar with taking orthogonal approaches, given the nature of event-based data

- worked on Loihi for the past 1.5 years

- authored several software packages

- like writing software that is easy to use

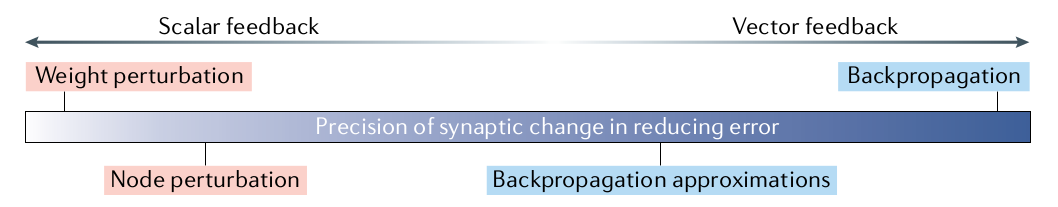

Topics to explore

- Temporal augmentation: can we make algorithms more robust by perturbing event timings, and perhaps even learn representations in an unsupervised manner?

- Online learning: continual model updates

- Biologically plausible learning rules

Picture adapted from Lillicrap et al., 2020.
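The temporal augmentation idea could start from two simple perturbations of an event stream: a global rescaling of time and independent Gaussian jitter on each timestamp. The sketch below assumes events as `(t_us, x, y, polarity)` tuples; `temporal_augment` and its default values are hypothetical.

```python
import random
from typing import List, Tuple

Event = Tuple[int, int, int, int]  # (t_us, x, y, polarity)


def temporal_augment(events: List[Event], jitter_us: float = 100.0,
                     time_scale: float = 1.0, seed: int = 0) -> List[Event]:
    """Return a copy of `events` with rescaled, jittered timestamps.

    time_scale > 1 slows the recording down, < 1 speeds it up; Gaussian jitter
    with standard deviation `jitter_us` perturbs each timestamp independently.
    """
    rng = random.Random(seed)
    augmented = []
    for t, x, y, p in events:
        new_t = t * time_scale + rng.gauss(0.0, jitter_us)
        augmented.append((max(0, round(new_t)), x, y, p))  # clamp to non-negative
    augmented.sort(key=lambda e: e[0])  # jitter may reorder events
    return augmented
```

Spatial coordinates and polarities are left untouched, so any change in a model's output under this transform can be attributed to timing alone.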

Conclusion

- Event cameras are suitable for sporadic, sparse signals, but need tight integration

- Mobile devices can benefit from low-power event-by-event approaches or even variable frame rates, ideally making use of spiking hardware

20 W for 6 years: ~1 MWh
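The ~1 MWh figure for a brain running at 20 W follows directly, using an average year of 365.25 days (8766 h):

```latex
E = 20\,\mathrm{W} \times 6\,\mathrm{yr} \times 8766\,\mathrm{h/yr}
  = 1\,051\,920\,\mathrm{Wh} \approx 1\,\mathrm{MWh}
```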

Can We Learn From the Brain?

1 GWh

Mobile Computing

- limited power capacity

- growing demands of functionality

- need for efficient computing

How does it scale?

- limited power capacity: ~5% battery improvement per year

- need for efficient computing: more transistors per area

- growing demands of functionality: cloud computing

==> scales badly!
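Taking the ~5% per year figure at face value, battery capacity compounds as follows, i.e. only about 63% more energy per charge after a decade:

```latex
C(n) = C_0 \cdot 1.05^{\,n}, \qquad C(10) = 1.05^{10}\, C_0 \approx 1.63\, C_0
```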

Can we learn from the brain?

- computes extremely efficiently (20 W)

- completely different mechanisms of computation

- copy it by recreating the basic components

Neuromorphic Engineering

- Artificial neurons

- Computing with spikes

- Asynchronous communication
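As an example of an artificial spiking neuron, the leaky integrate-and-fire (LIF) model integrates its input, leaks towards rest, and emits a spike (an event) when it crosses a threshold. The sketch below uses forward-Euler steps with hypothetical parameters; it illustrates the general model and is not claimed to be the exact neuron implemented on any particular chip.

```python
from typing import List, Sequence


def lif_spike_times(input_current: Sequence[float], dt_ms: float = 1.0,
                    tau_ms: float = 20.0, v_th: float = 1.0) -> List[float]:
    """Simulate a leaky integrate-and-fire neuron; return spike times in ms."""
    v = 0.0
    spikes = []
    for step, i_in in enumerate(input_current):
        # Leak towards 0 while being driven by the input (forward Euler)
        v += dt_ms / tau_ms * (-v + i_in)
        if v >= v_th:
            spikes.append(step * dt_ms)  # the spike is the only thing communicated
            v = 0.0                      # reset after the spike
    return spikes
```

With a constant input of 2.0 for 100 ms the neuron fires every 14 ms, first at 13 ms; a constant input of 0.9 never reaches the threshold, so it produces no output events at all.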

Summary of contributions

- 1 journal article published

- 1 paper under submission

- 1 paper in preparation

- 110-page thesis manuscript draft

Other contributions:

Maro, Lenz, Reeves and Benosman, Event-based Visual Gesture Recognition with Background Suppression running on a smart-phone, 14th ICAG 2019.

Haessig, Lesta, Lenz, Benosman and Dudek, A Mixed-Signal Spatio-Temporal Signal Classifier for On-Sensor Spike Sorting, ISCAS 2020.

Oubari, Exarchakis, Lenz, Benosman and Ieng, Computationally efficient learning on very large event-based datasets, to be submitted, 2020.
