Spooky Introduction to Word Embeddings

NLP "pipeline"

"The quick brown fox..."

"the quick brown fox..."

["the", "quick", "brown", ...]

[3, 6732, 1199, ...]

???

✨

negative

raw input

cleaning

tokenization

word representation

model

output

"naive" approach

- split sentence up into words

- use a one-hot-encoding as something for our model to learn against

"the quick brown fox..." → ["the", "quick", "brown", "fox",...]

"the" → [1, 0, 0, 0, 0, ...]

"quick" → [0, 1, 0, 0, 0, ...]

this is the approach used in Naive Bayes

let's take a step back

what are we actually asking our model to learn?

- meanings of words

- how those words fit together to form meaning

- how these fit together to do the task at hand

this feels like we're asking our model to do quite a lot

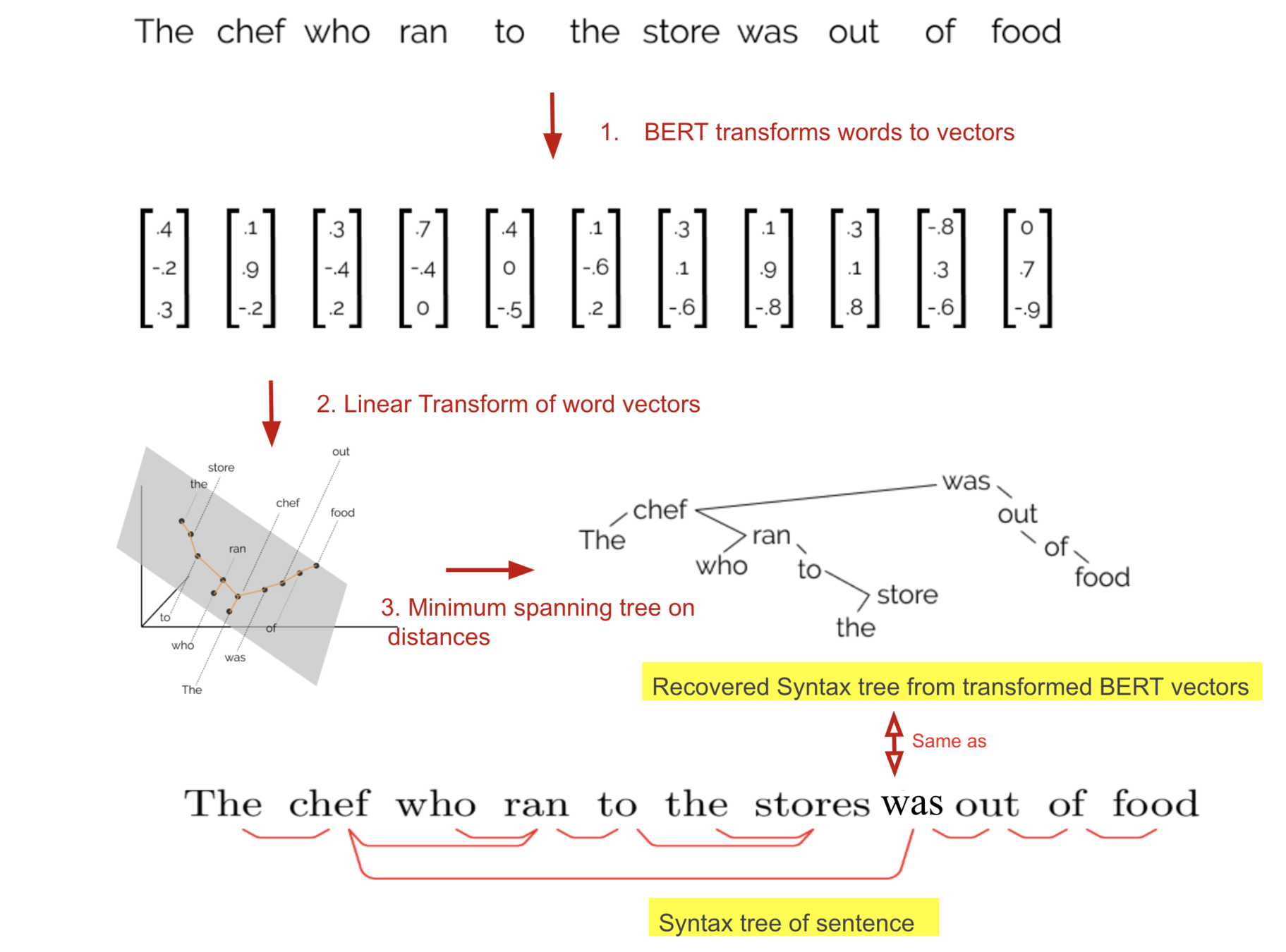

a better approach

- still split sentence up into words

- this time use a vector to represent our word

"the quick brown fox..." → ["the", "quick", "brown", "fox",...]

"the" → [-0.13, 1.67, 3.96, -2.22, -0.01, ...]

"quick" → [3.23, 1.89, -2.66, 0.12, -3.01, ...]

intuition: close vectors represent similar words

is this actually better? 🤔

- haven't we just created yet another thing we have to learn?

- in most contexts words have the same meaning

- can independently build a very accurate model against a huge dataset once and reuse it lots of tasks

- eg GloVe 840B is trained against 840 billion words (wikipedia)

word embeddings

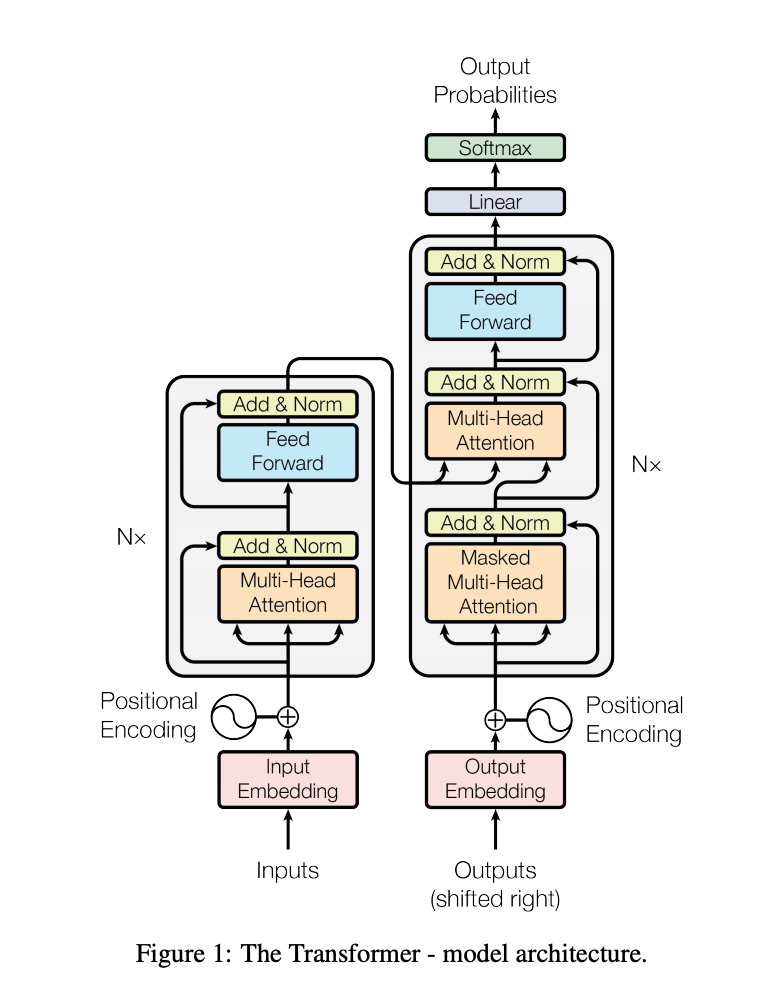

two common approaches to training

- GloVe

- word2vec

GloVe: co-occurance matrix, good fast results at both small and huge scales

w2v: NN with masking, very fast to train, nice python support

both result in something close to a Hilbert space

here have code

(The only scary slide)

tips and tricks

- training: word used a lot but not in embedding - use a random vector. you're asking your model to learn something weird, but if you don't do it too much it works

- inference: UNK token - typically one token chosen at random. here you're telling your model "there was something here but I don't know how to represent it" rather than it not being sure if there was a word or not (and potentially learning a non-pattern)

- you can combine embeddings

- FastText for unknown words

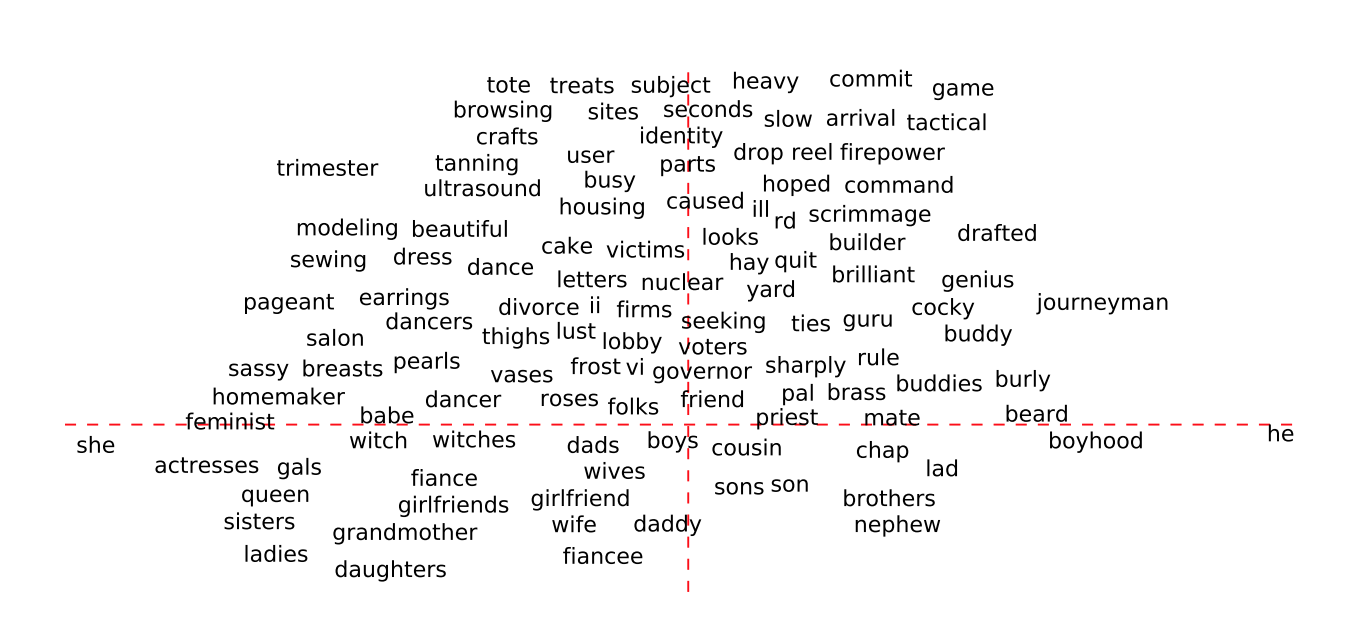

serious problems

- learn embeddings off large (read: huge) corpus

- biases

- can introduce biases to your model even if your training data isn't biased

OVO embeddings

- "smart" means something very specific in energy

- the whole point is to make things easy for our model

- Natalie and I have been training some word embeddings

Buffalo buffalo Buffalo buffalo buffalo buffalo Buffalo buffalo

end-to-end learning

Introduction to Word Embeddings

By Hamish dickson

Introduction to Word Embeddings

- 343