Meta-Transfer Learning through Hard Tasks

Outline

- Meta Learning

- Pipeline

- Pre-training Stage

- Meta-training Stage

- Hard Task (HT) Meta-batch

- Reference

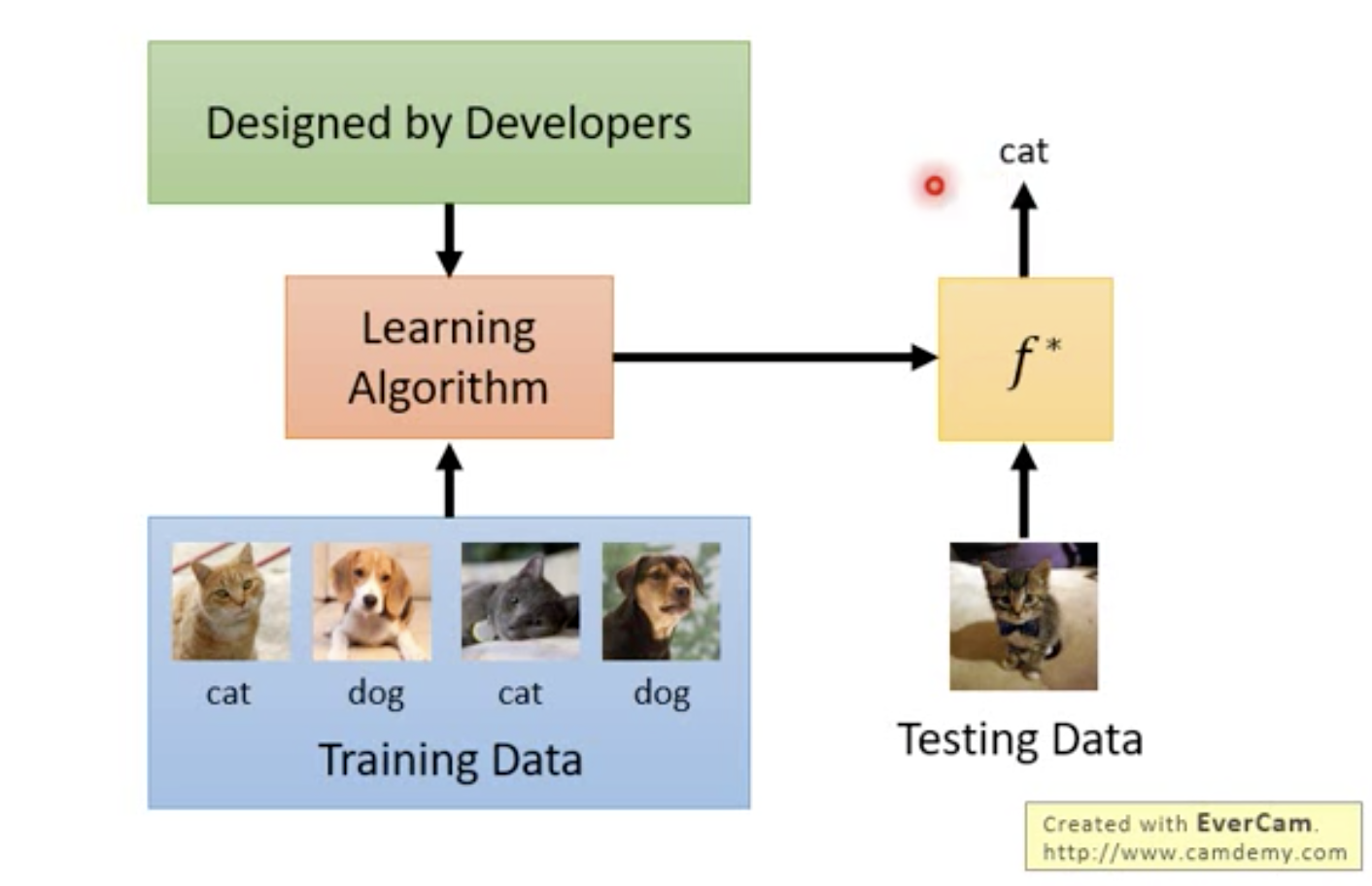

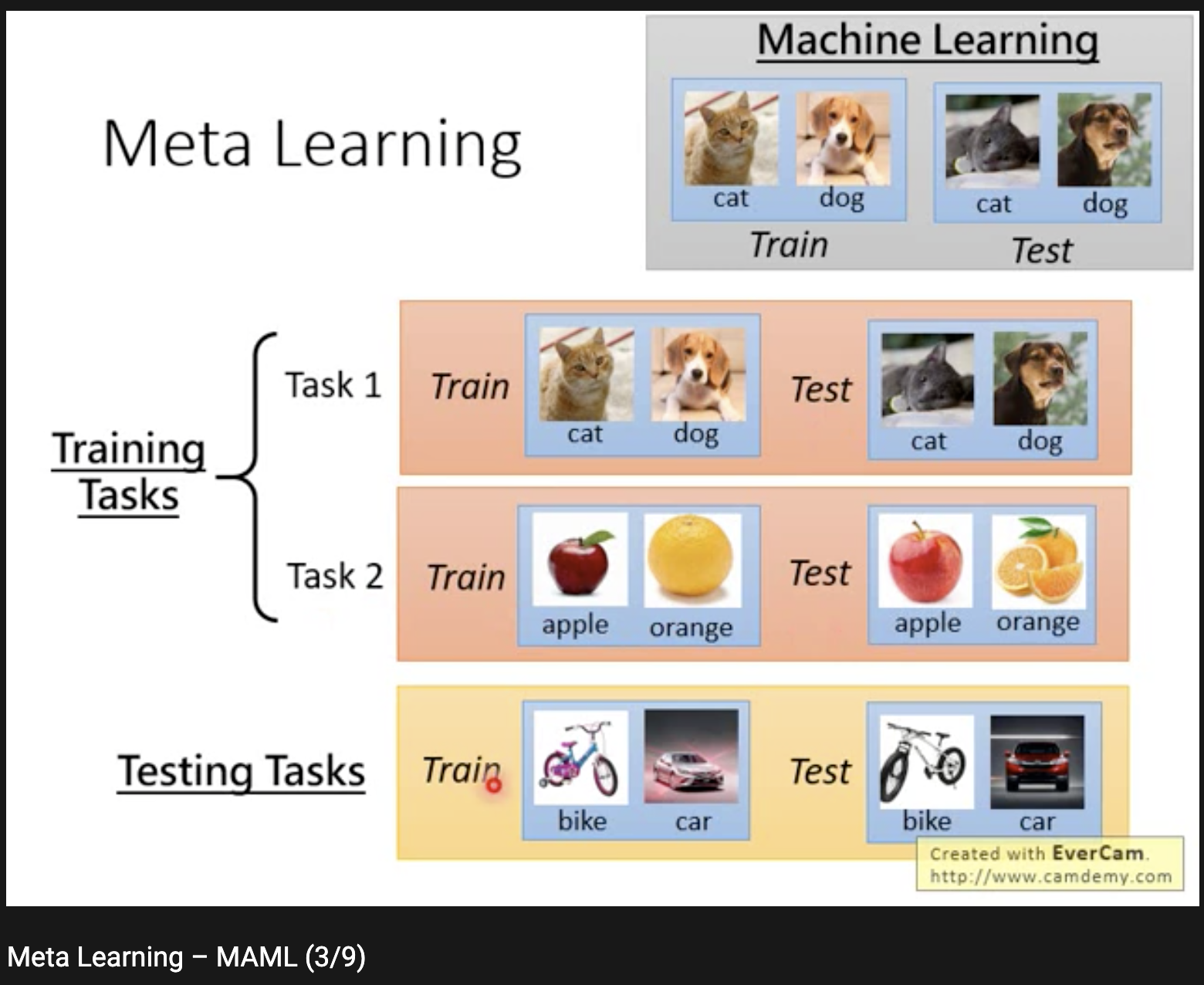

Meta-Learning

Learn to Learn

Why do we need it?

- lack of large-scale training data

- e.g., the medical domain

- ...

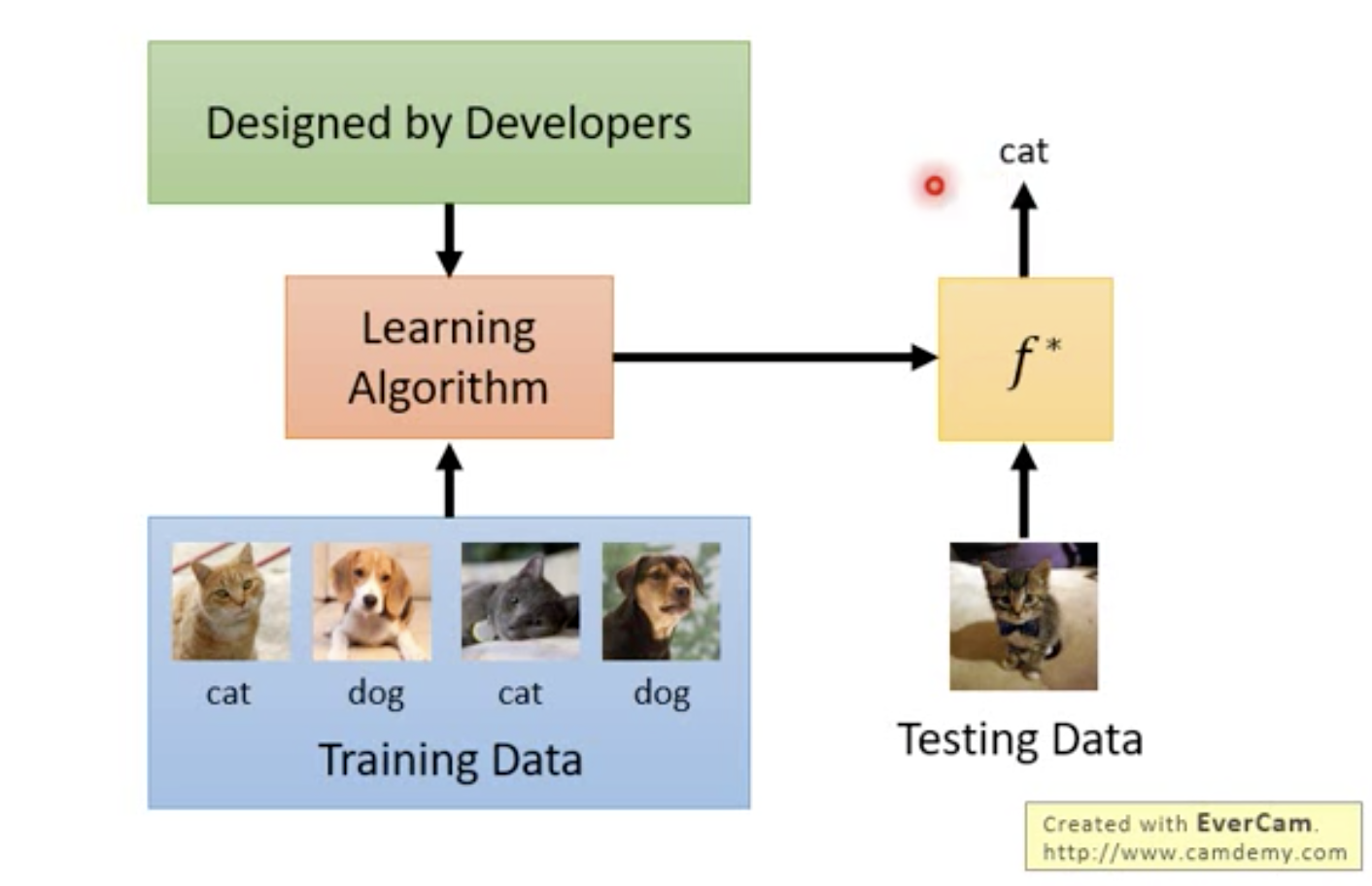

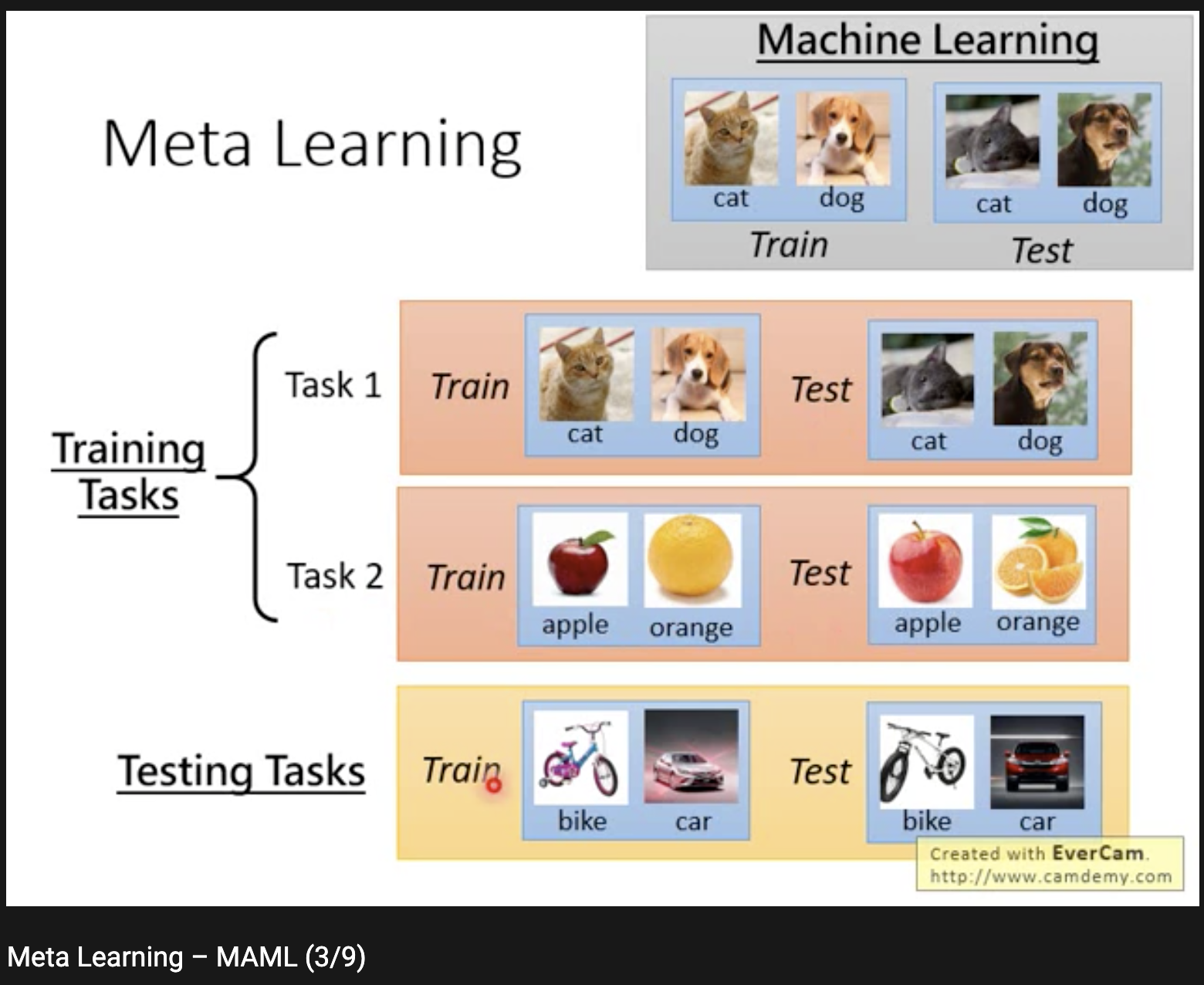

Machine Learning

- training set

- validation set

- testing set

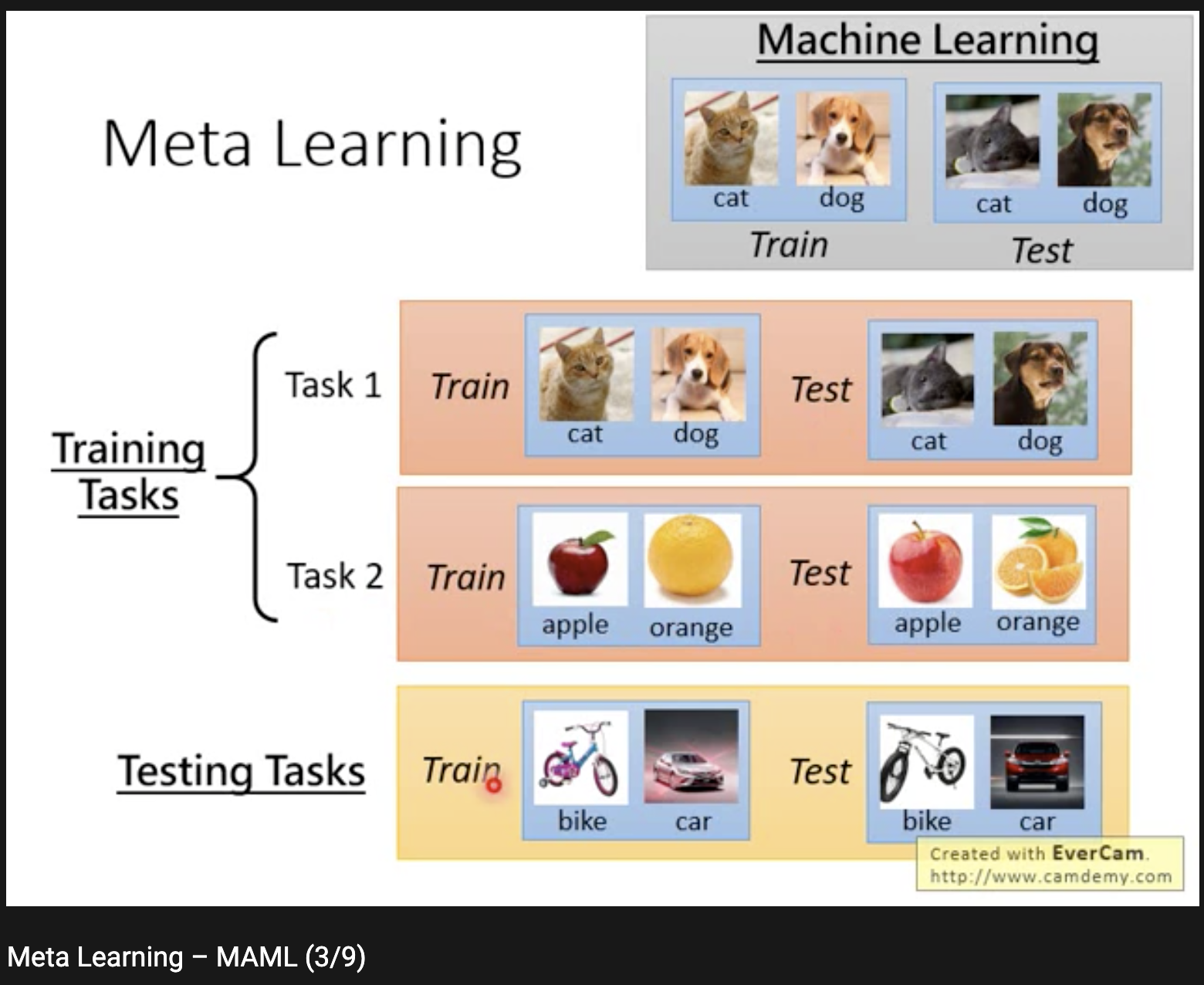

Meta Learning

- training tasks

- validation tasks

- testing tasks

https://www.youtube.com/watch?v=PznN0w7dYc0&ab_channel=Hung-yiLee
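In meta-learning, each training "sample" is a whole task. The sketch below shows one way tasks might be drawn. It assumes the common N-way K-shot episode format. The names `sample_task`, `n_way`, `k_shot` and `n_query`, and the toy class split, are hypothetical and do not come from the slides.

```python
import random
from typing import Dict, List, Tuple

Example = Tuple[str, int]  # (sample id, episode-local label)

def sample_task(data: Dict[str, List[str]], n_way: int, k_shot: int, n_query: int,
                rng: random.Random) -> Tuple[List[Example], List[Example]]:
    """Sample one N-way K-shot task: a small support (training) set and a query (test) set."""
    classes = rng.sample(sorted(data), n_way)        # pick N classes for this task
    support: List[Example] = []
    query: List[Example] = []
    for label, cls in enumerate(classes):            # labels are re-indexed 0..N-1 per task
        items = rng.sample(data[cls], k_shot + n_query)
        support += [(x, label) for x in items[:k_shot]]
        query += [(x, label) for x in items[k_shot:]]
    return support, query

# Hypothetical dataset: 10 classes with 20 samples each, split into disjoint class sets
# for training, validation and testing tasks.
rng = random.Random(0)
all_classes = {f"class{c}": [f"class{c}_img{i}" for i in range(20)] for c in range(10)}
names = sorted(all_classes)
splits = {"train": names[:6], "val": names[6:8], "test": names[8:]}
train_data = {c: all_classes[c] for c in splits["train"]}
support, query = sample_task(train_data, n_way=5, k_shot=1, n_query=3, rng=rng)
```

The class splits are disjoint, so testing tasks contain classes that were never seen during meta-training. This mirrors the role of the testing set in ordinary machine learning.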

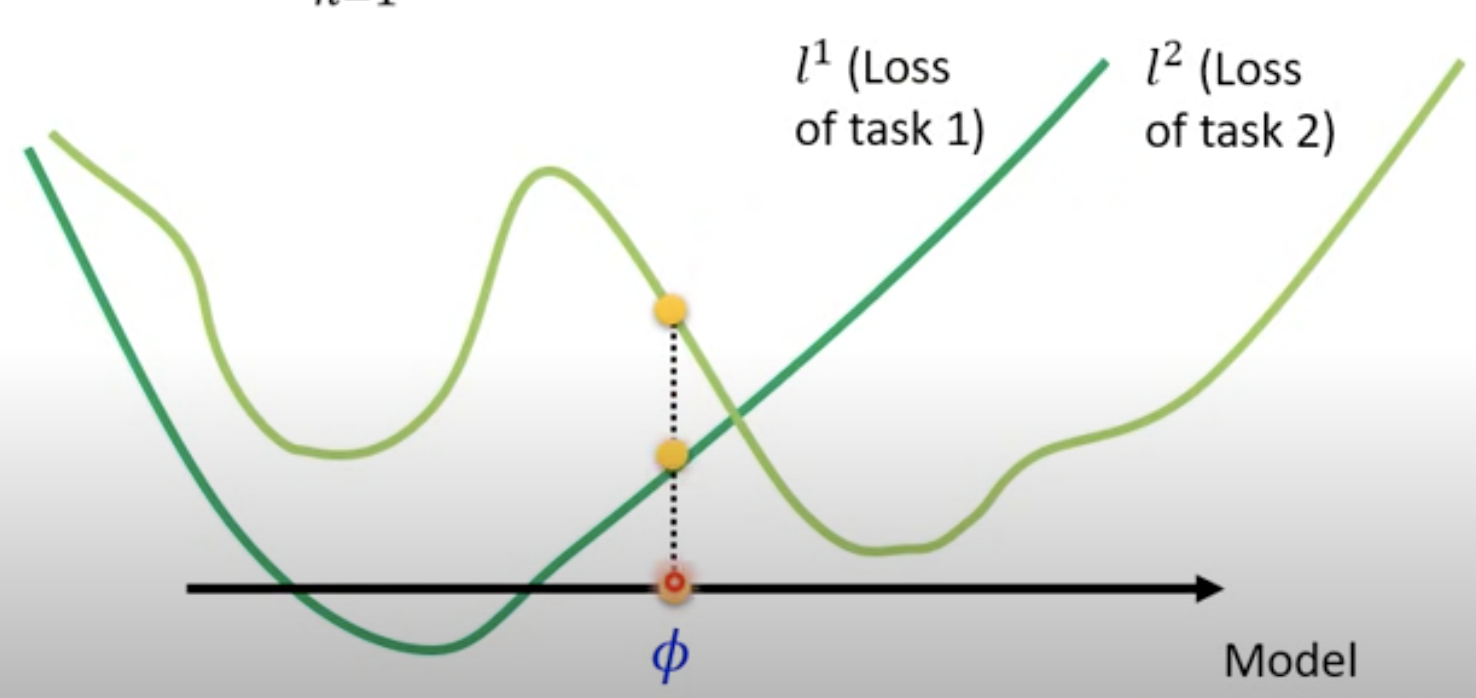

Example - MAML

- search for the optimal initialization of the model parameters

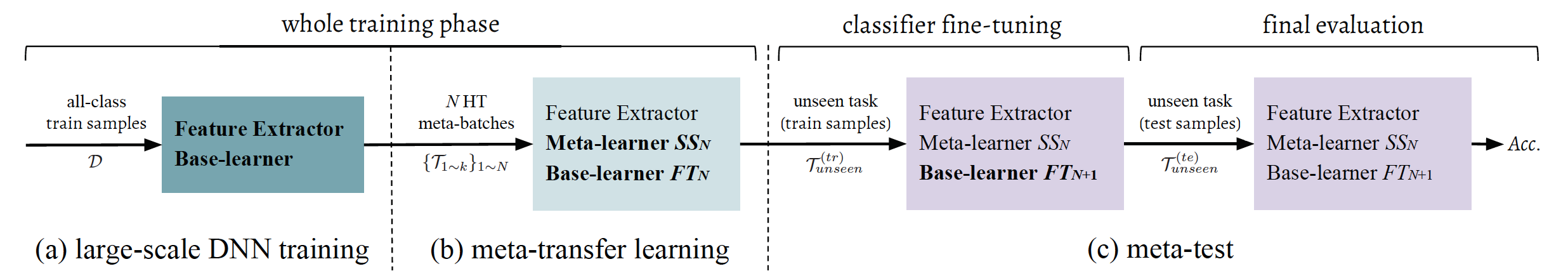

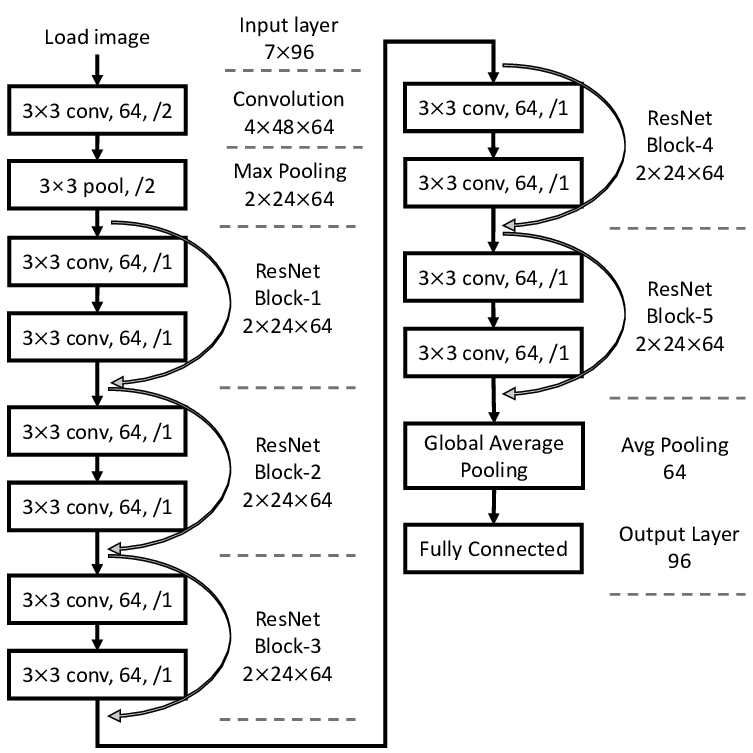
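MAML is not the method of these slides, but a toy sketch makes "searching for an initialization" concrete. It assumes a hypothetical family of 1-D regression tasks y = a·x, with one task per slope a. It also assumes a single inner gradient step and the same inputs for the support and query sets. Because the model is a single scalar w, the second-order meta-gradient can be written out by hand.

```python
from typing import List, Tuple

def mse_and_grad(w: float, slope: float, xs: List[float]) -> Tuple[float, float]:
    """MSE of the model y_hat = w * x on the task y = slope * x, and its gradient w.r.t. w."""
    c = sum(x * x for x in xs) / len(xs)               # mean of x^2
    return c * (w - slope) ** 2, 2.0 * c * (w - slope)

def maml(slopes: List[float], xs: List[float], alpha: float = 0.05,
         beta: float = 0.1, steps: int = 200) -> float:
    """Meta-learn an initialization w0 so that ONE inner step adapts well to every task."""
    c = sum(x * x for x in xs) / len(xs)
    w0 = 0.0
    for _ in range(steps):
        meta_grad = 0.0
        for a in slopes:                                # one task per slope
            _, g = mse_and_grad(w0, a, xs)              # support-set gradient
            w_task = w0 - alpha * g                     # inner-loop adaptation
            _, g_query = mse_and_grad(w_task, a, xs)    # query-set gradient
            # chain rule through the inner step: d w_task / d w0 = 1 - 2 * alpha * c
            meta_grad += g_query * (1.0 - 2.0 * alpha * c)
        w0 -= beta * meta_grad / len(slopes)            # outer-loop (meta) update
    return w0

w0 = maml(slopes=[1.0, 2.0, 3.0], xs=[1.0, 2.0, 3.0])
```

In this toy problem, the meta-objective is proportional to the average of (w0 - a)². The learned initialization therefore settles at the mean slope, 2.0. From there, one inner step moves toward any of the three tasks.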

Pipeline

- Pre-training Stage ➜ feature extractor

- Meta-training Stage ➜ shifting & scaling parameters

- Hard Task (HT) Meta-batch ➜ improves the overall learning

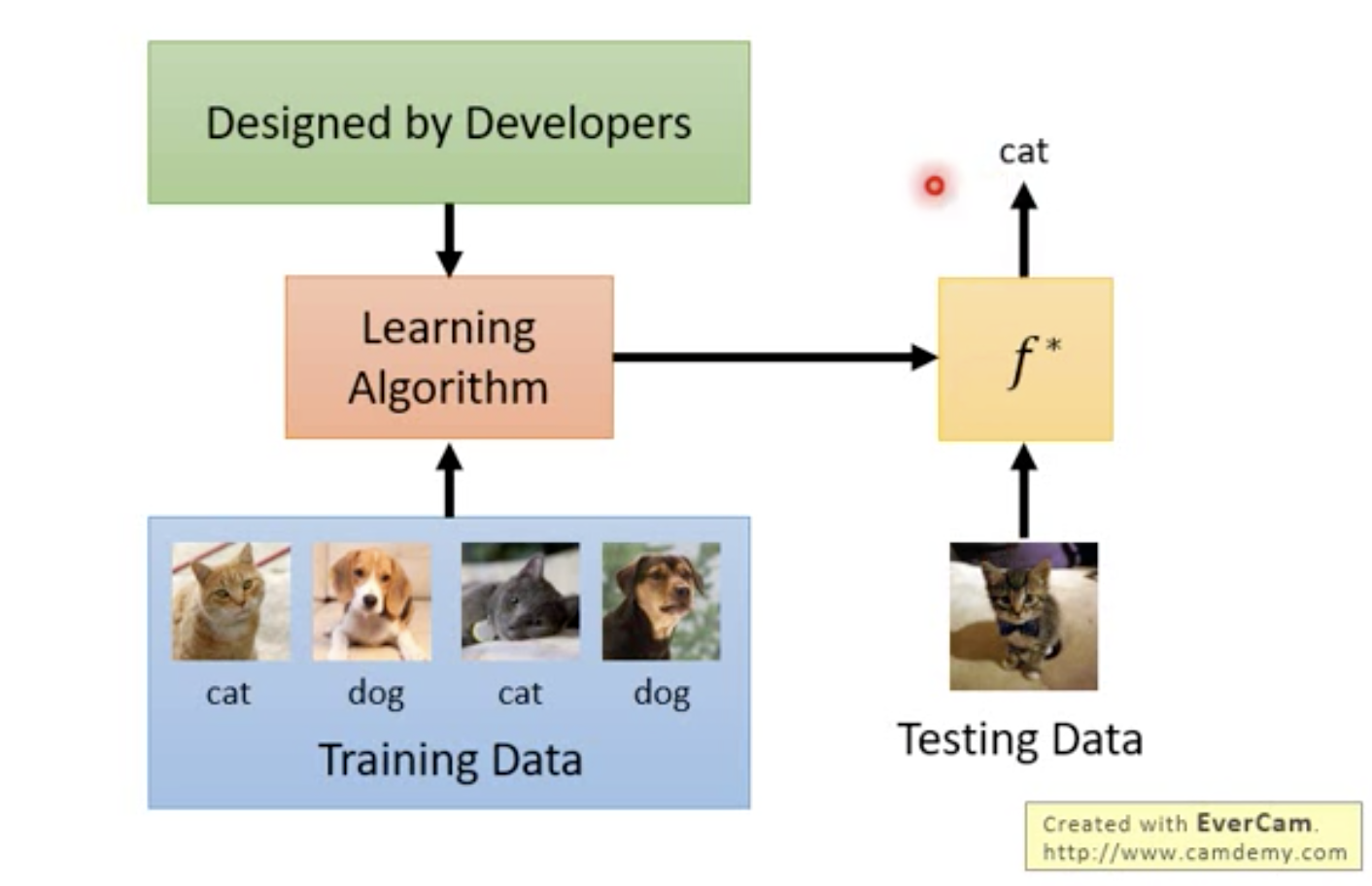

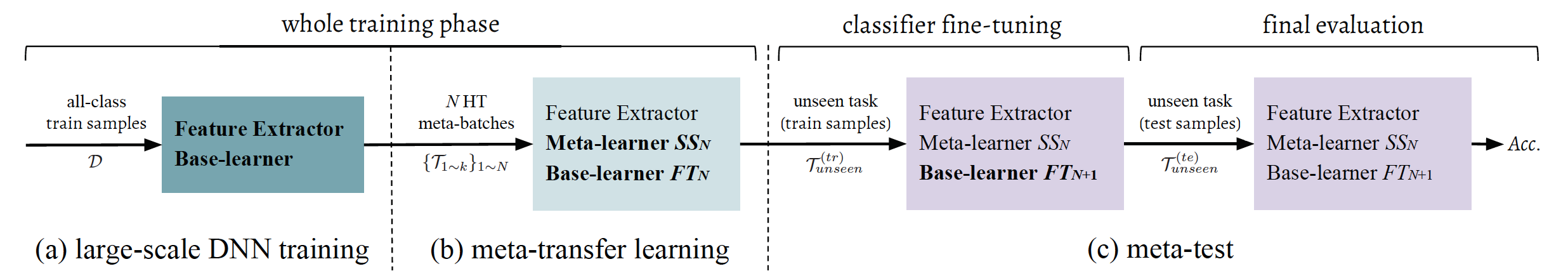

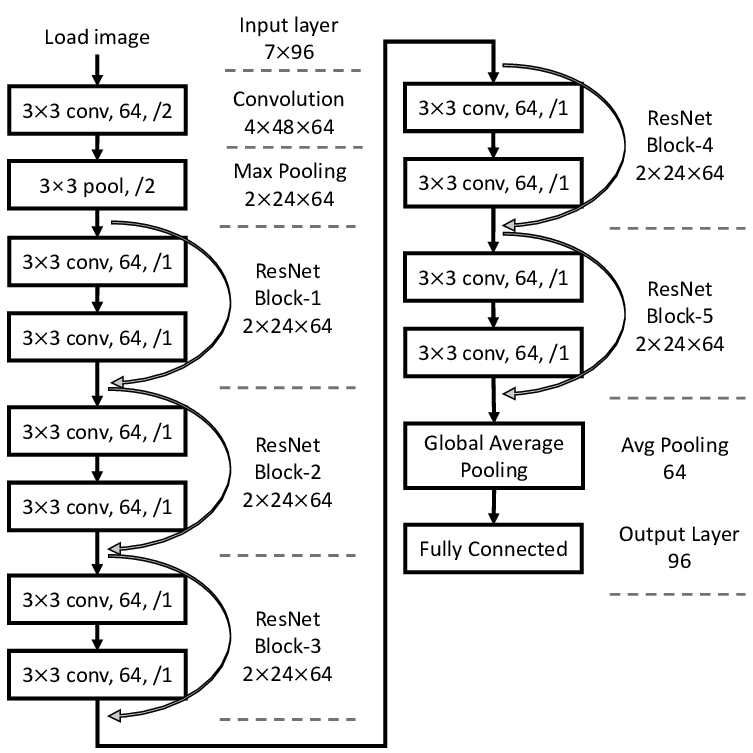

Pre-training Stage

Pre-train a 64-class classifier

- 64 classes, 600 samples per class

- feature extractor ➜ frozen

- classifier ➜ discarded

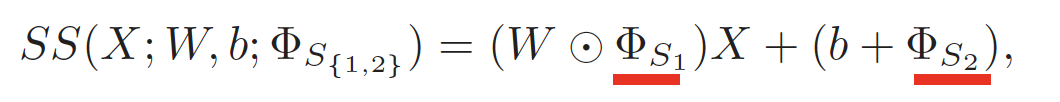

Meta-training Stage

Result

shift & scale parameters
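As we understand the MTL paper, the learned shift & scale parameters act on each frozen pre-trained layer as shown below. The equation is written for a fully connected layer with weights $W$, bias $b$ and input $X$; the paper applies the same idea to convolution kernels. $\Phi_{S_1}$ scales each neuron's weights and $\Phi_{S_2}$ shifts its bias. Initializing them to ones and zeros reproduces the pre-trained layer exactly, which is where the "strong initialization" comes from.

```latex
\mathrm{SS}(X;\, W, b;\, \Phi_{S_1}, \Phi_{S_2}) = (W \odot \Phi_{S_1})\, X + (b + \Phi_{S_2}),
\qquad
\Phi_{S_1} = \mathbf{1},\ \Phi_{S_2} = \mathbf{0}
\;\Rightarrow\;
\mathrm{SS}(X;\, W, b;\, \mathbf{1}, \mathbf{0}) = W X + b
```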

Benefits

- starts from a strong initialization ➜ fast convergence for MTL

- without changing DNN weights ➜ avoiding “catastrophic forgetting”

- lightweight ➜ reducing the chance of overfitting
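The following stdlib-only Python sketch illustrates the three benefits. It assumes a plain fully connected layer with one scale and one shift per output neuron. All function names are hypothetical. The sketch checks three things: the identity initialization reproduces the frozen layer, the frozen weights are never modified, and SS adds far fewer parameters than fine-tuning the whole layer.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def linear(x: List[float], W: Matrix, b: List[float]) -> List[float]:
    """Frozen pre-trained layer: y[j] = sum_i W[j][i] * x[i] + b[j]."""
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]

def ss_linear(x: List[float], W: Matrix, b: List[float],
              scale: List[float], shift: List[float]) -> List[float]:
    """Scaling & shifting: row j of W is scaled by scale[j] and b[j] is shifted by shift[j].
    New lists are built, so the frozen W and b themselves are never modified."""
    W_scaled = [[w * s for w in row] for row, s in zip(W, scale)]
    b_shifted = [bj + t for bj, t in zip(b, shift)]
    return linear(x, W_scaled, b_shifted)

def param_counts(d_in: int, d_out: int) -> Tuple[int, int]:
    """Trainable parameters: fine-tuning W and b vs. learning only SS."""
    full = d_in * d_out + d_out   # every weight and bias
    ss = 2 * d_out                # one scale + one shift per output neuron
    return full, ss

# Hypothetical frozen layer with 2 inputs and 3 output neurons.
W = [[1.0, 2.0], [3.0, 4.0], [0.5, -1.0]]
b = [0.1, 0.2, 0.3]
x = [1.0, 1.0]
y_init = ss_linear(x, W, b, scale=[1.0] * 3, shift=[0.0] * 3)    # identity initialization
y_task = ss_linear(x, W, b, scale=[2.0, 0.5, 1.0], shift=[1.0, 0.0, -1.0])
```

For a hypothetical 512→64 layer, `param_counts(512, 64)` returns `(32832, 128)`. Fine-tuning would update 32,832 weights and biases, while SS learns only 128 numbers.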

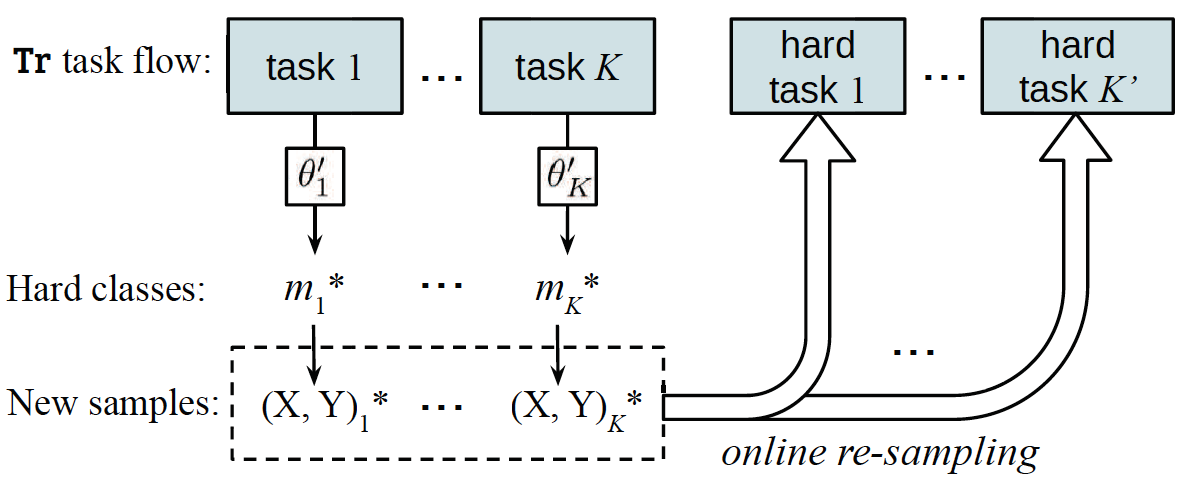

Hard Task (HT) Meta-batch

Choose Hard Classes

- by ranking the class-level accuracies within each task

- e.g., distinguishing dogs is harder

- e.g., distinguishing apples is harder
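The slides say only that hard classes are chosen by ranking class-level accuracies. As we read the MTL paper, the lowest-accuracy ("failure") class of each task is collected, and extra hard tasks are then re-sampled from those classes. The sketch below works on class labels only. The names `class_accuracies`, `failure_class` and `resample_hard_tasks` are hypothetical. A real implementation would also re-draw the images for each re-sampled task.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence

def class_accuracies(labels: Sequence[str], preds: Sequence[str]) -> Dict[str, float]:
    """Accuracy of each class on one task's query set."""
    total: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for y, p in zip(labels, preds):
        total[y] += 1
        correct[y] += int(y == p)
    return {c: correct[c] / total[c] for c in total}

def failure_class(accs: Dict[str, float]) -> str:
    """Rank the classes by accuracy and return the worst one (ties: first seen)."""
    return min(accs, key=accs.get)

def resample_hard_tasks(hard_pool: List[str], n_way: int, n_tasks: int,
                        seed: int = 0) -> List[List[str]]:
    """Build new N-way tasks whose classes all come from the hard-class pool."""
    rng = random.Random(seed)
    classes = sorted(set(hard_pool))
    if len(classes) < n_way:
        raise ValueError("need at least n_way distinct hard classes")
    return [rng.sample(classes, n_way) for _ in range(n_tasks)]

# Hypothetical query-set results (true labels, predictions) of three tasks in one meta-batch.
tasks = [
    (["dog", "dog", "cat", "cat"], ["cat", "dog", "cat", "cat"]),
    (["apple", "apple", "pear", "pear"], ["pear", "pear", "pear", "pear"]),
    (["ship", "car"], ["car", "car"]),
]
accs1 = class_accuracies(*tasks[0])
hard_pool = [failure_class(class_accuracies(y, p)) for y, p in tasks]
hard_tasks = resample_hard_tasks(hard_pool, n_way=2, n_tasks=3)
```

Ranking within each task avoids choosing a fixed accuracy threshold: every task contributes exactly one hard class, however easy or hard the task was overall.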

Reference

Meta-Transfer Learning through Hard Tasks

By hsutzu
