Privacy-Preserving Representation Learning

Taxonomy of approaches:

- Empirical Obfuscation Methods
  - Adversarial Training Schemes
  - Adversarial-robustness training, as it relates to privacy
- Methods That Attempt Formal Guarantees
  - Information Theoretic
  - Differential Privacy Based
  - Least Privilege Learning
- Cryptographic Encodings
  - Techniques related to fully homomorphic encryption

Empirical Obfuscation Methods

Adversarial Training Schemes

[Diagram: an obfuscation module trained against an adversary. Labels: obfuscation loss; loss for predicting s.]

- Mostly no theoretical proofs or guarantees

- Assume a threat/attack model and validate the results empirically against it
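One common way to write such a scheme down is as a min-max objective. The form below is a generic sketch, not the exact formulation of any single cited paper. The symbols are assumptions introduced here: an encoder f producing z = f(x), a task head g with task label y, an adversary h that tries to predict s from z, and a trade-off weight λ ≥ 0.

```latex
\min_{f,\,g}\;\max_{h}\;
\mathbb{E}_{(x,\,y,\,s)}\Big[\,
  \mathcal{L}_{\text{task}}\big(g(f(x)),\,y\big)
  \;-\;\lambda\,\mathcal{L}_{\text{adv}}\big(h(f(x)),\,s\big)
\Big]
```

The inner maximization means the adversary minimizes its own loss for predicting s, while the encoder is rewarded when that loss stays high. In practice the two sides are usually updated in alternation.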

Some related works in this direction:

Madras, David, Elliot Creager, Toniann Pitassi, and Richard Zemel. 2018. “Learning Adversarially Fair and Transferable Representations.” arXiv [Cs.LG]. arXiv. http://arxiv.org/abs/1802.06309.

Xiao, Taihong, Yi-Hsuan Tsai, Kihyuk Sohn, Manmohan Chandraker, and Ming-Hsuan Yang. 2020. “Adversarial Learning of Privacy-Preserving and Task-Oriented Representations.” Proceedings of the ... AAAI Conference on Artificial Intelligence. AAAI Conference on Artificial Intelligence 34 (07): 12434–41.

Zhang, Binghui, Sayedeh Leila Noorbakhsh, Yun Dong, Yuan Hong, and Binghui Wang. 2023. “Adversarially Robust and Privacy-Preserving Representation Learning via Information Theory,” October. https://openreview.net/forum?id=9NKRfhKgzI.

Zhao, Han, Jianfeng Chi, Yuan Tian, and Geoffrey J. Gordon. 2020. “Trade-Offs and Guarantees of Adversarial Representation Learning for Information Obfuscation.” Advances in Neural Information Processing Systems 33: 9485–96.

Methods That Attempt Formal Guarantees

Information Theoretic

Obfuscation, where x is the input, s the sensitive attribute, and z the learned representation:

1. Minimize the information between s and z

2. In general, maximize the information between x and z

Closely related to adversarial training schemes, but here it is possible to derive theoretical bounds for analyzing the trade-off between utility and privacy.
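The two goals can be illustrated on a toy discrete example. The sketch below is an illustration only, not a method from the cited works. It assumes binary variables, a plug-in (count-based) mutual information estimate, and two hypothetical encodings, `z_leaky` (keeps everything) and `z_obf` (drops s); the helper name `mutual_information` is also made up for this example.

```python
import numpy as np

def mutual_information(a, b):
    """Plug-in estimate of I(a; b) in nats for two discrete 1-D arrays."""
    a = np.asarray(a).ravel()
    b = np.asarray(b).ravel()
    # Map arbitrary labels to 0..K-1 so they can index a count table.
    _, a_idx = np.unique(a, return_inverse=True)
    _, b_idx = np.unique(b, return_inverse=True)
    joint = np.zeros((a_idx.max() + 1, b_idx.max() + 1))
    np.add.at(joint, (a_idx, b_idx), 1.0)   # joint counts
    joint /= joint.sum()                    # joint probabilities
    pa = joint.sum(axis=1, keepdims=True)   # marginal of a, shape (Ka, 1)
    pb = joint.sum(axis=0, keepdims=True)   # marginal of b, shape (1, Kb)
    mask = joint > 0
    return float(np.sum(joint[mask] * np.log(joint[mask] / (pa @ pb)[mask])))

# Toy data (hypothetical): s is the sensitive bit, u a task-relevant bit,
# constructed so that s and u are exactly independent in the sample.
s = np.tile([0, 0, 1, 1], 250)
u = np.tile([0, 1, 0, 1], 250)
x = 2 * s + u            # the input carries both bits

z_leaky = x.copy()       # representation that keeps everything
z_obf = u.copy()         # representation that drops s

for name, z in (("z_leaky", z_leaky), ("z_obf", z_obf)):
    print(name,
          "I(s;z) =", round(mutual_information(s, z), 4),
          "I(x;z) =", round(mutual_information(x, z), 4))
```

In this toy setting, dropping s drives I(s; z) to zero but also reduces I(x; z) from ln 4 to ln 2, which is the kind of utility-privacy tension the theoretical bounds aim to quantify.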

Information Theoretic

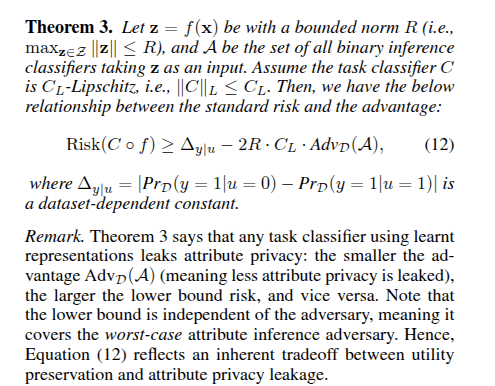

Closely Related to Adversarial Training Schemes, But It is possible to derive Theoritical Bounds, to analyze the trade-off between Utility and Privacy

Example Bound

Zhang, Binghui, Sayedeh Leila Noorbakhsh, Yun Dong, Yuan Hong, and Binghui Wang. 2025. “Learning Robust and Privacy-Preserving Representations via Information Theory.” In Proceedings of the AAAI Conference on Artificial Intelligence 39 (21): 22363–71.

Some related works in this direction:

Zhang, Binghui, Sayedeh Leila Noorbakhsh, Yun Dong, Yuan Hong, and Binghui Wang. 2025. “Learning Robust and Privacy-Preserving Representations via Information Theory.” In Proceedings of the AAAI Conference on Artificial Intelligence 39 (21): 22363–71.

Asoodeh, Shahab, and Flavio P. Calmon. 2020. “Bottleneck Problems: An Information and Estimation-Theoretic View.” Entropy (Basel, Switzerland) 22 (11): 1325.

Guo, Chuan, Brian Karrer, Kamalika Chaudhuri, and Laurens van der Maaten. 2022. “Bounding Training Data Reconstruction in Private (Deep) Learning.” In International Conference on Machine Learning, 8056–71. PMLR.

Hannun, Awni, Chuan Guo, and Laurens van der Maaten. 2021. “Measuring Data Leakage in Machine-Learning Models with Fisher Information.” In Uncertainty in Artificial Intelligence, 760–70. PMLR.

Maeng, Kiwan, Chuan Guo, Sanjay Kariyappa, and G. Edward Suh. 2023. “Bounding the Invertibility of Privacy-Preserving Instance Encoding Using Fisher Information.” arXiv [Cs.LG]. arXiv. http://arxiv.org/abs/2305.04146.

Mireshghallah, Fatemehsadat, Mohammadkazem Taram, Ali Jalali, Ahmed Taha Taha Elthakeb, Dean Tullsen, and Hadi Esmaeilzadeh. 2021. “Not All Features Are Equal: Discovering Essential Features for Preserving Prediction Privacy.” In Proceedings of the Web Conference 2021. New York, NY, USA: ACM. https://doi.org/10.1145/3442381.3449965.

One notable direction: “Bounding the Invertibility of Privacy-Preserving Instance Encoding Using Fisher Information”

- Derives a lower bound on the reconstruction error in terms of the Fisher information matrix

- A higher lower bound serves as a certificate of the hardness of reconstruction under MSE

- Can guide design choices that yield a higher lower bound

Maeng, Kiwan, Chuan Guo, Sanjay Kariyappa, and G. Edward Suh. 2023. “Bounding the Invertibility of Privacy-Preserving Instance Encoding Using Fisher Information.” Advances in Neural Information Processing Systems 36: 51904–25.

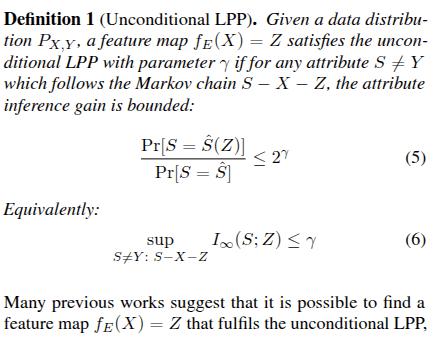
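To make the flavor of such a bound concrete, here is a minimal sketch under a strong simplifying assumption that is not taken from the paper: a linear encoder z = Wx + noise with Gaussian noise N(0, σ²I). For this model the Fisher information matrix of z about x is WᵀW/σ², and the Cramér-Rao bound implies that any unbiased reconstruction has per-coordinate MSE of at least d / Tr(FIM). The names `W`, `sigma`, and both helper functions are illustrative; the paper itself treats general encoders.

```python
import numpy as np

def fisher_information_linear_gaussian(W, sigma):
    """FIM of z = W x + N(0, sigma^2 I) with respect to x: W^T W / sigma^2."""
    return W.T @ W / sigma**2

def mse_lower_bound(fim):
    """Lower bound on the per-coordinate MSE of any unbiased reconstruction.

    Cramer-Rao gives E||x_hat - x||^2 >= Tr(FIM^{-1}), and
    Tr(FIM^{-1}) >= d^2 / Tr(FIM), hence MSE / d >= d / Tr(FIM).
    """
    d = fim.shape[0]
    return d / np.trace(fim)

rng = np.random.default_rng(0)
d, k = 8, 16                      # input and encoding dimensions (hypothetical)
W = rng.normal(size=(k, d))       # stand-in for an encoder's weights

bounds = {}
for sigma in (0.1, 1.0, 10.0):
    fim = fisher_information_linear_gaussian(W, sigma)
    bounds[sigma] = mse_lower_bound(fim)
    print(f"sigma={sigma:>5}: per-coordinate MSE >= {bounds[sigma]:.4g}")
```

In this linear-Gaussian case the bound grows with σ², so adding more noise raises the certified reconstruction error. That is one example of a design choice that can be tuned to obtain a higher lower bound.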

Least Privilege Learning

Learn just what is necessary to get the task done; do not leak any additional features.

Stadler et al. [1] analyze the trade-off a feature map incurs between satisfying the least-privilege principle and retaining utility for the given task.

[1] Stadler T, Kulynych B, Gastpar MC, Papernot N, Troncoso C. The fundamental limits of least-privilege learning. arXiv preprint arXiv:2402.12235. 2024 Feb 19.

Differential Privacy Based Methods

- While a plethora of work has attempted to provide DP guarantees for learned representations, I feel the recent thesis work by Hanshen Xiao provides a consolidating and unifying framework [1]

- Hence, I think it is worth looking closely at this work: "Formal Privacy Proof of Data Encoding: The Possibility and Impossibility of Learnable Obfuscation"

[1] Xiao, Hanshen. 2024. “Automated and Provable Privatization for Black-Box Processing.” Massachusetts Institute of Technology. https://hdl.handle.net/1721.1/159202.

“Formal Privacy Proof of Data Encoding: The Possibility and Impossibility of Learnable Obfuscation”

Heuristic obfuscation methods the paper looks at:

1. Matrix masking: distance-preserving random matrix projection (JL lemma)

2. Data mixing: linear combinations of (x, y)

3. Permutation: permute the order of X

The paper first gives privacy bounds using (1) alone, but deems the result not usable in practice. It then combines (1), (2), and (3) and obtains reasonable privacy bounds that are useful in practice.
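As a rough picture of what the three heuristics could look like when chained, here is a NumPy sketch. Everything specific in it is an assumption made for illustration: the shapes, the Gaussian projection, the Dirichlet mixing weights, the number of mixed rows `m`, and reading "permute the order of X" as shuffling rows. The paper's exact constructions and noise calibration are not reproduced.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, k = 64, 512, 256            # samples, input dim, projected dim (hypothetical)
X = rng.normal(size=(n, d))
Y = rng.integers(0, 2, size=(n, 1)).astype(float)

def matrix_mask(X, k, rng):
    """(1) Random Gaussian projection, scaled so squared norms are
    preserved in expectation (the JL-style setting)."""
    R = rng.normal(size=(X.shape[1], k)) / np.sqrt(k)
    return X @ R

def mix(X, Y, m, rng):
    """(2) Replace each row by a random convex combination of m rows of (X, Y)."""
    n = X.shape[0]
    idx = rng.integers(0, n, size=(n, m))       # rows that get mixed together
    w = rng.dirichlet(np.ones(m), size=n)       # weights sum to 1 for each row
    X_mix = np.einsum("nm,nmd->nd", w, X[idx])
    Y_mix = np.einsum("nm,nmd->nd", w, Y[idx])
    return X_mix, Y_mix

def permute(X, Y, rng):
    """(3) Shuffle the row order, keeping each (x, y) pair aligned."""
    perm = rng.permutation(X.shape[0])
    return X[perm], Y[perm]

X_masked = matrix_mask(X, k, rng)
X_mixed, Y_mixed = mix(X_masked, Y, m=4, rng=rng)
X_out, Y_out = permute(X_mixed, Y_mixed, rng)
print(X_out.shape, Y_out.shape)
```

The sketch only shows the data flow; any privacy claim for such a pipeline depends on the formal analysis in the paper, not on the code.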

Using the theorems from the paper, one can choose sigma to obtain optimal privacy-utility trade-offs in the PAC-Privacy setting.

Also, given a new obfuscation mechanism, one can attempt to derive a formal bound, in the PAC-Privacy sense, using the tools presented in this paper.
