From Linear Systems to NNs

Aadharsh Aadhithya A

What does it mean?

Set of all points (x,y) that satisfy the relation 3x+4y=12.

How many solutions are possible?

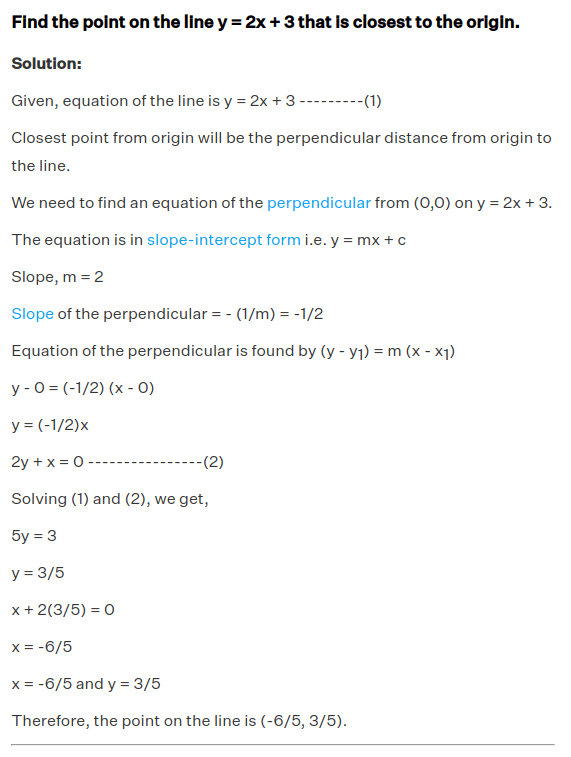

Now, from these infinitely many solutions, find the one that is closest to the origin!

The solution lies on the line through the origin that is perpendicular to the given line.

But what is the direction perpendicular to that line?

A vector perpendicular to the line's direction [4 -3] is the coefficient vector [3 4].

Then this solution is of the form (x, y) = c [3 4].

Exercise: Find c.
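A minimal numerical check for the exercise, assuming NumPy. It treats the line as a . p = b with a = (3, 4) and b = 12, substitutes p = c a, and then confirms that the resulting point is on the line and closer to the origin than a few other points on it. The variable names are chosen here for illustration.

```python
import numpy as np

# Coefficients of the line 3x + 4y = 12, written as a . p = b.
a = np.array([3.0, 4.0])
b = 12.0

# The closest point to the origin is a multiple of the normal: p = c * a.
# Substituting into a . p = b gives c * (a . a) = b.
c = b / (a @ a)
p = c * a

# Check 1: p lies on the line.
on_line = np.isclose(a @ p, b)

# Check 2: other points on the line are farther from the origin.
# Points on the line are p + t * d, where d = (4, -3) is the line's direction.
d = np.array([4.0, -3.0])
others = [p + t * d for t in (-2.0, -0.5, 0.5, 2.0)]
is_closest = all(np.linalg.norm(q) > np.linalg.norm(p) for q in others)

print("c =", c, "p =", p, "on line:", on_line, "closest:", is_closest)
```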

Least Norm Solution

This is a plane in 3D

Infinitely many solutions are possible. The "least-norm" solution again lies along the direction perpendicular to this plane.

The direction perpendicular to the plane is its normal vector, i.e. the vector of the coefficients of x, y and z in the plane's equation.

The least-norm solution is of the form c times that normal vector, for some scalar c.

What does this mean geometrically?

The intersection of two planes is a line.

Infinitely many solutions!

Infinitely many points. Find the point closest to the origin!

The point lies on a line through the origin that is orthogonal to the line of intersection of the two planes.

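But wait, there are skew lines in 3D! A line can be orthogonal to the line of intersection without ever touching it.

So the point should be on the plane through the origin that is perpendicular to the line of intersection. That plane is spanned by the normals of the two planes.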

LESSON

The least-norm solution always lies in the row space of A.

Representer Theorem

The least-norm solution lies in the row space of A. In other words, it is a linear combination of the rows of A.

It is of the form x = A^T c. Substituting into Ax = b gives (AA^T) c = b, so x = A^T (AA^T)^-1 b.
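A small sketch of the row-space form, assuming NumPy and two made-up planes in 3D (the numbers in `A` and `b` are hypothetical). It solves (AA^T) c = b, builds x = A^T c, and checks that x has no component along the line of intersection.

```python
import numpy as np

# Two hypothetical planes in 3D: each row of A is one plane's normal.
A = np.array([[1.0, 2.0, 3.0],
              [2.0, -1.0, 1.0]])
b = np.array([6.0, 2.0])

# x = A^T c with (A A^T) c = b; solve for c instead of forming the inverse.
c = np.linalg.solve(A @ A.T, b)
x_ln = A.T @ c

# The line of intersection runs along the cross product of the two normals,
# which spans the null space of A.
n = np.cross(A[0], A[1])

print("residual:", A @ x_ln - b)   # close to 0: x_ln solves the system
print("x_ln . n:", x_ln @ n)       # close to 0: no null-space component

# Another solution on the same line, x_ln + t * n, is longer.
x_other = x_ln + 0.7 * n
print(np.linalg.norm(x_ln) < np.linalg.norm(x_other))
```

Solving the 2x2 system for c is a design choice here; it avoids computing an explicit inverse and gives the same x whenever AA^T is invertible.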

What if AA^T is not invertible?

Resort to the pseudoinverse (pinv).

Pseudoinverse

[Figure: row space, right null space and column space of A, with the least-norm solution (LNS) marked, for two cases: b in the column space and b not in the column space. In the example, A is 8x8 with rank 4.]

Your solution is a linear combination of the rows of A, i.e. it lies in the row space.

Pinv gives the least-norm solution.

If b is not in the column space, it gives the solution that is both least-squares and least-norm: among all x that minimize ||Ax - b||, the one with the smallest norm.
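Both cases can be checked numerically. The sketch below assumes NumPy and builds a random 8x8 matrix of rank 4; the explicit `rcond` cutoff and the random seed are choices made here, not part of the slides.

```python
import numpy as np

rng = np.random.default_rng(0)

# An 8x8 matrix of rank 4, built as a product of 8x4 and 4x8 factors.
A = rng.standard_normal((8, 4)) @ rng.standard_normal((4, 8))
# Explicit cutoff so the four near-zero singular values are treated as zero.
A_pinv = np.linalg.pinv(A, rcond=1e-10)

# Orthonormal basis of the right null space: the last 4 right singular vectors.
_, _, Vt = np.linalg.svd(A)
null_basis = Vt[4:].T                        # shape (8, 4)

# Case 1: b in the column space, so Ax = b has infinitely many solutions.
b_in = A @ rng.standard_normal(8)
x_in = A_pinv @ b_in
solves_exactly = np.allclose(A @ x_in, b_in)
in_row_space_1 = np.allclose(null_basis.T @ x_in, 0.0)

# Case 2: a generic b, which almost surely is not in the column space.
b_out = rng.standard_normal(8)
x_out = A_pinv @ b_out
# Least squares: the residual is orthogonal to the column space.
is_least_squares = np.allclose(A.T @ (A @ x_out - b_out), 0.0)
# Least norm: still no null-space component.
in_row_space_2 = np.allclose(null_basis.T @ x_out, 0.0)

print(solves_exactly, in_row_space_1, is_least_squares, in_row_space_2)
```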

Linear Regression

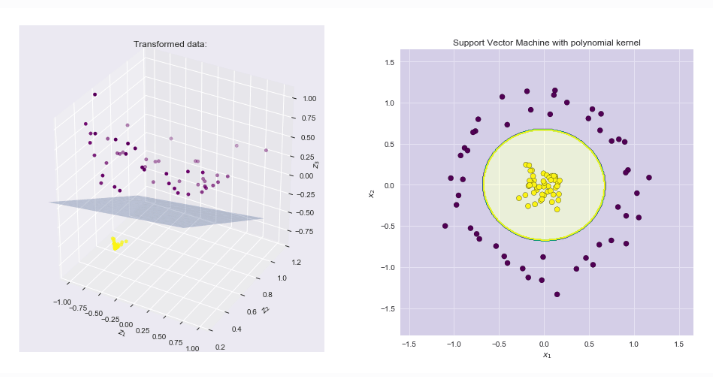

Kernel Regression

Perform linear regression after mapping the data with a feature map.

https://xavierbourretsicotte.github.io/Kernel_feature_map.html

Typically we map to higher dimensions, i.e. from R^d to R^k with k > d.

We have k unknowns: one weight per mapped feature.
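A minimal sketch of "map, then do linear regression", assuming NumPy. The data and the quadratic feature map x -> (1, x, x^2) are made up for illustration, so d = 1 and k = 3.

```python
import numpy as np

# Hypothetical 1-D data (d = 1) with a quadratic trend.
x = np.linspace(-1.0, 1.0, 20)
y = 1.0 - 2.0 * x + 3.0 * x**2

def feature_map(x):
    """Hypothetical map from R^1 to R^3: x -> (1, x, x^2), so k = 3 > d = 1."""
    return np.stack([np.ones_like(x), x, x**2], axis=1)

# Linear regression on the raw input: a single unknown.
X_raw = x[:, None]
w_raw = np.linalg.pinv(X_raw) @ y
err_raw = np.linalg.norm(X_raw @ w_raw - y)

# Linear regression after mapping: k = 3 unknowns.
Phi = feature_map(x)
w = np.linalg.pinv(Phi) @ y
err_mapped = np.linalg.norm(Phi @ w - y)

print("weights after mapping:", w)
print("error raw:", err_raw, "error mapped:", err_mapped)
```

The model stays linear in the unknown weights; only the inputs are transformed, which is why the same pinv solve works in both cases.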

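Take the least-norm solution: by the representer theorem, the weight vector is a linear combination of the N mapped training points, with coefficients alpha.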

N unknowns, one per training point.

Even if we map to "infinite dimensions", we still only have to determine N unknowns.

This is exactly linear regression over alpha, with the kernel matrix K playing the role of the data matrix.
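The two views can be compared directly. The sketch below assumes NumPy and a made-up feature map (random cosine features, picked only so that k > N); it solves for the k weights with pinv and for the N coefficients alpha through K, then checks that both give the same model.

```python
import numpy as np

rng = np.random.default_rng(0)

N, d, k = 5, 2, 50                       # few samples, many features (k > N)
X = rng.standard_normal((N, d))
y = rng.standard_normal(N)

# Hypothetical feature map: random cosine features, for illustration only.
W = rng.standard_normal((d, k))
phase = rng.uniform(0.0, 2.0 * np.pi, size=k)

def feature_map(X):
    return np.cos(X @ W + phase)

Phi = feature_map(X)                     # shape (N, k): one mapped point per row

# Primal view: k unknowns, least-norm solution of Phi w = y.
w = np.linalg.pinv(Phi) @ y

# Dual view: w = Phi^T alpha, so (Phi Phi^T) alpha = K alpha = y with N unknowns.
K = Phi @ Phi.T                          # kernel matrix, shape (N, N)
alpha = np.linalg.solve(K, y)
same_weights = np.allclose(w, Phi.T @ alpha)

# Prediction at a new point needs only kernel values against the training set.
x_new = rng.standard_normal((1, d))
k_new = feature_map(x_new) @ Phi.T       # shape (1, N)
same_prediction = np.allclose(feature_map(x_new) @ w, k_new @ alpha)

print(same_weights, same_prediction)
```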

NNGP

Neural Network Gaussian Process

The hidden layer maps x to R^k.

B is initialized randomly.

Fix B and update only A.

Map, then do linear regression, i.e. kernel regression.
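A rough sketch of this setup, assuming NumPy, a ReLU activation, a 1/sqrt(k) scaling and a toy 1-D target; none of these specifics come from the slides. The random layer B is never updated, and A is obtained by linear regression on the hidden features.

```python
import numpy as np

rng = np.random.default_rng(0)
N, k = 10, 200

x = np.linspace(-1.0, 1.0, N)
X = np.stack([x, np.ones(N)], axis=1)      # constant 1 appended to act as a bias
y = np.sin(3.0 * x)

B = rng.standard_normal((k, X.shape[1]))   # random first layer, never updated

def hidden(X):
    """phi(Bx) / sqrt(k) for every row x of X, with phi = ReLU (an assumption)."""
    return np.maximum(X @ B.T, 0.0) / np.sqrt(k)

H = hidden(X)                              # shape (N, k)
A = np.linalg.pinv(H) @ y                  # only A is fitted: linear regression on H

# The same weights follow from the kernel matrix K = H H^T.
K = H @ H.T
alpha = np.linalg.solve(K, y)

max_train_error = np.max(np.abs(H @ A - y))
print("max training error:", max_train_error)
print("same weights from K:", np.allclose(A, H.T @ alpha))
```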

How to calculate the kernel matrix K?

DETOUR: SAMPLING FROM DISTRIBUTIONS AND ESTIMATION OF MEAN

These are samples from normal distributions.

We are summing samples of "a function of a random variable" and taking their average.

As k grows, this average becomes a closer and closer estimate of the mean (expectation).
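A small illustration of this detour, assuming NumPy. It averages relu(z) over k samples of z ~ N(0, 1) and compares the result with the exact mean 1/sqrt(2 pi). The choice of ReLU as the function is an assumption made for the example. The error typically shrinks roughly like 1/sqrt(k), though not necessarily at every step.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(z, 0.0)

# Exact mean of relu(z) for z ~ N(0, 1): the integral of z * pdf(z) over z > 0.
exact = 1.0 / np.sqrt(2.0 * np.pi)

errors = {}
for k in (10, 1_000, 100_000, 1_000_000):
    z = rng.standard_normal(k)             # k samples of the random variable
    estimate = relu(z).mean()              # average of a function of the samples
    errors[k] = abs(estimate - exact)
    print(f"k = {k:>8d}  estimate = {estimate:.5f}  error = {errors[k]:.5f}")
```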

u and v are from different distributions

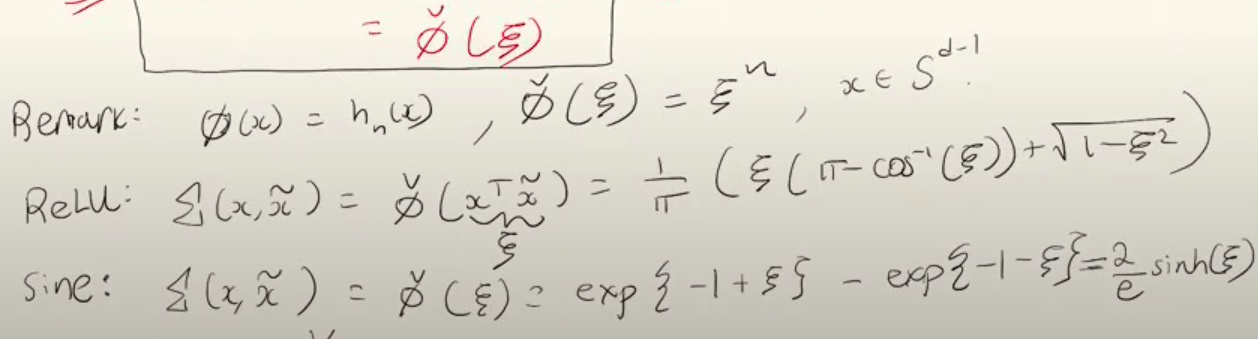

Dual Activations

If the data is normalized (every input has unit norm), the kernel depends only on the inner product of the two inputs.
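As a sketch for one specific activation, assume ReLU and NumPy. For unit-variance Gaussians u and v with correlation xi, the expectation E[relu(u) relu(v)] has a known closed form (the degree-1 arc-cosine kernel, up to a factor of 2). The code compares that closed form, applied to the Gram matrix of normalized data, with the average over k random rows of B. The data, sizes and function name are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu_dual(xi):
    """E[relu(u) relu(v)] for unit-variance Gaussians u, v with correlation xi."""
    xi = np.clip(xi, -1.0, 1.0)
    return (xi * (np.pi - np.arccos(xi)) + np.sqrt(1.0 - xi**2)) / (2.0 * np.pi)

# Hypothetical normalized data: N points on the unit sphere in R^d.
N, d, k = 4, 3, 200_000
X = rng.standard_normal((N, d))
X /= np.linalg.norm(X, axis=1, keepdims=True)

# Finite-width kernel: average of relu(b_i . x) relu(b_i . x') over k random rows b_i.
B = rng.standard_normal((k, d))
H = np.maximum(X @ B.T, 0.0)               # shape (N, k)
K_sampled = H @ H.T / k

# Infinite-width kernel: apply the dual activation to the Gram matrix X X^T.
K_exact = relu_dual(X @ X.T)

max_gap = np.max(np.abs(K_sampled - K_exact))
print("largest entrywise gap:", max_gap)
```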

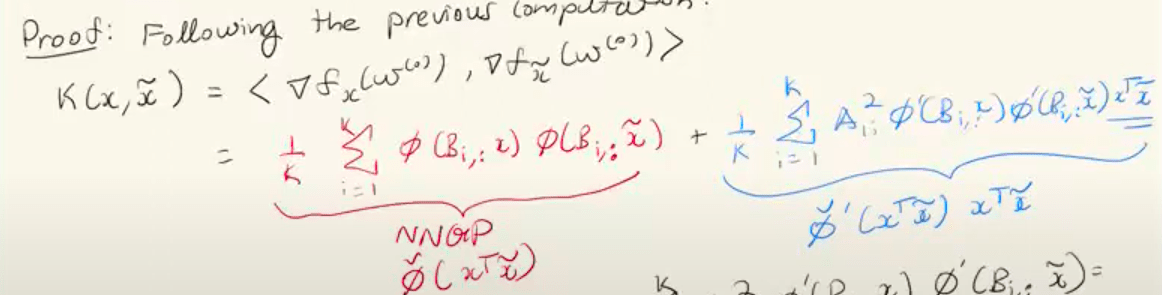

NTK

Neural Tangent Kernel

"Linearize f around some initialization w"

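In the linearization f(x; w) ≈ f(x; w0) + grad_w f(x; w0)^T (w - w0), w holds the unknown weights, and the term f(x; w0) can be 0.

Then this is a linear model in w.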

This can be interpreted as mapping the data, x -> grad_w f(x; w0), and solving a linear model around w0.
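A minimal sketch of the linearization, assuming NumPy and a hypothetical two-layer ReLU network f(x; w) = a . relu(Bx) / sqrt(k) with all parameters packed into one vector w. The gradient is written out by hand. The code checks that the first-order expansion tracks f for a small change in w, then forms one kernel entry as an inner product of gradients.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k = 3, 64

def unpack(w):
    B = w[: k * d].reshape(k, d)
    a = w[k * d:]
    return B, a

def f(x, w):
    """Hypothetical two-layer ReLU network a . relu(Bx) / sqrt(k)."""
    B, a = unpack(w)
    return a @ np.maximum(B @ x, 0.0) / np.sqrt(k)

def grad_f(x, w):
    """Gradient of f with respect to w, written out by hand."""
    B, a = unpack(w)
    pre = B @ x
    dB = np.outer(a * (pre > 0), x) / np.sqrt(k)   # d f / d B
    da = np.maximum(pre, 0.0) / np.sqrt(k)         # d f / d a
    return np.concatenate([dB.ravel(), da])

w0 = rng.standard_normal(k * d + k)                # some initialization
x1, x2 = rng.standard_normal(d), rng.standard_normal(d)

# Linearization: f(x; w) ~ f(x; w0) + grad_f(x; w0) . (w - w0), linear in w.
w = w0 + 1e-4 * rng.standard_normal(w0.size)
f_lin = f(x1, w0) + grad_f(x1, w0) @ (w - w0)
print("network:", f(x1, w), "linearized:", f_lin)

# The feature map is x -> grad_f(x; w0); its inner products give the kernel.
ntk_12 = grad_f(x1, w0) @ grad_f(x2, w0)
ntk_11 = grad_f(x1, w0) @ grad_f(x1, w0)
print("K(x1, x2) =", ntk_12, " K(x1, x1) =", ntk_11)
```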

By Incredeble us
