Distributed Node #7

Kubernetes

Agenda:

- Intro
- Scaling & High availability
- Kubernetes
- Wrapping our current configuration into a K8s YAML
- Monitoring tools

Intro

What if a server can't handle all user connections?

(Diagram: lots of people connected to one server over WS)

Can we increase server resources?

How to handle high load?

(Diagram: HTTP traffic, labeled "This is OK")

Dinesh: "I will add 128 GB of RAM and replace the CPU with an AMD Ryzen 9 3950X."

Gilfoyle: "Replacing hardware will require downtime. Let's add some separate cheap servers."

ANTON

Horizontal Scaling VS Vertical Scaling

| Horizontal Scaling | Vertical Scaling |
| --- | --- |
| No limit on the number of horizontally scaled instances | Hardware limitation |
| It is difficult to implement | It is easy to implement |
| It is costlier, as new server racks comprise a lot of resources | It is cheaper, as we just need to add new resources |
| It takes more time | It takes less time |
| No downtime | Downtime |

Which one would you choose?

Examples

Horizontal scaling is almost always more desirable than vertical scaling because you don’t get caught in a resource deficit.
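A back-of-the-envelope sketch of the horizontal option: if one instance can hold a fixed number of WebSocket connections, the number of instances grows linearly with the load, with no single-machine ceiling. The capacity of 10,000 connections per instance is a hypothetical figure, not a measurement of our chat app.

```python
import math

# Hypothetical capacity of one chat instance; an assumption, not a measurement.
CONNECTIONS_PER_INSTANCE = 10_000


def instances_needed(total_connections: int,
                     per_instance: int = CONNECTIONS_PER_INSTANCE) -> int:
    """Smallest number of identical instances that can hold all connections."""
    if total_connections < 0 or per_instance <= 0:
        raise ValueError("need total_connections >= 0 and per_instance > 0")
    # Keep at least one instance running even with no traffic.
    return max(1, math.ceil(total_connections / per_instance))


for load in (500, 25_000, 1_000_000):
    print(f"{load} connections -> {instances_needed(load)} instance(s)")
```

Vertical scaling would instead have to raise `per_instance` on a single machine, which eventually runs into a hardware limit.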

Scaling the Chat container

(Diagram: one Chat Docker image running as Container 1, Container 2, and Container 3)

Using Docker

Easy! Just create several instances of our chat containers on different ports:

docker run -p 3030:8080 -d localhost:32000/node-web-app:latest

docker run -p 3031:8080 -d localhost:32000/node-web-app:latest

docker run -p 3032:8080 -d localhost:32000/node-web-app:latest

Right?

What if I want to run them on different hosts? How do we organize the communication between them?

How do we monitor and restart failed containers without manual actions?

How do we update the application automatically without downtime?

Auto-scaling?

Load balancing?

Configuration stores?

SLI/SLO?

Secrets?
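To make the "Load balancing?" question concrete, a hand-rolled round-robin balancer over the three containers started with `docker run` might look like this minimal sketch. The `RoundRobinBalancer` class is hypothetical; it only picks a target port per new connection and does not proxy traffic, health-check, or restart anything.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical balancer: hands out backend ports in a fixed rotation."""

    def __init__(self, ports):
        if not ports:
            raise ValueError("at least one backend port is required")
        self._ports = cycle(ports)

    def next_backend(self) -> str:
        # Each call returns the next container's address, wrapping around.
        return f"localhost:{next(self._ports)}"


balancer = RoundRobinBalancer([3030, 3031, 3032])
for _ in range(4):
    print(balancer.next_backend())
```

Note the gap: if the container on port 3031 dies, this balancer keeps sending every third connection to it. Handling that automatically is one of the jobs listed above.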

There is a tool with all these things!

Local development

Using Docker

Using docker-compose

Orchestration using Kubernetes

Kubernetes Alternatives?

But Kubernetes is still the gold standard

What clouds support Kubernetes?

Cloud services for Kubernetes

Amazon Elastic Kubernetes Service (EKS)

Azure Kubernetes Service (AKS)

Google Kubernetes Engine (GKE)

Alibaba Cloud Container Service for Kubernetes (ACK)

Oracle Kubernetes Engine

Our strategy

1. Build a Docker chat image

2. Push it to a registry

3. Start a Kubernetes cluster

4. Create a Deployment configuration YAML file

5. Set up a replication factor of 2 for the Chat Pod

6. Apply the YAML config using the kubectl CLI

7. Kill a chat Pod and watch how Kubernetes handles it

Kubernetes time

Kubernetes, also known as K8s, is an open-source system for automating deployment, scaling, and management of containerized applications.

It groups containers that make up an application into logical units for easy management and discovery. Kubernetes builds upon 15 years of experience running production workloads at Google, combined with best-of-breed ideas and practices from the community.

Planet Scale

Designed on the same principles that allow Google to run billions of containers a week, Kubernetes can scale without increasing your ops team.

Never Outgrow

Whether testing locally or running a global enterprise, Kubernetes flexibility grows with you to deliver your applications consistently and easily no matter how complex your need is.

Run K8s Anywhere

Kubernetes is open source giving you the freedom to take advantage of on-premises, hybrid, or public cloud infrastructure, letting you effortlessly move workloads to where it matters to you.

Kubernetes Features

IPv4/IPv6 dual-stack

Automated rollouts and rollbacks

Batch execution

Service Topology

Service discovery and load balancing

Horizontal scaling

Secret and configuration management

Storage orchestration

Self-healing

Automatic bin packing
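"Automated rollouts" is how Kubernetes answers the earlier question of updating without downtime: a Deployment's RollingUpdate strategy is tuned with `maxSurge` and `maxUnavailable`. Below is a simplified model, assuming `maxUnavailable = 0` and Pods that become ready instantly, that tracks old and new Pod counts. It illustrates the idea only and is not the Deployment controller's actual algorithm.

```python
from typing import Iterator, Tuple


def rolling_update(desired: int, max_surge: int = 1) -> Iterator[Tuple[int, int]]:
    """Yield (old_pods, new_pods) after each step of a simplified rolling update.

    Assumes maxUnavailable = 0 and instantly ready Pods: new Pods are started
    first, then the same number of old Pods is removed, so the number of
    running Pods never drops below `desired`.
    """
    if desired < 0 or max_surge < 1:
        raise ValueError("need desired >= 0 and max_surge >= 1")
    old, new = desired, 0
    yield old, new
    while old > 0:
        started = min(max_surge, old)  # surge above the desired count
        new += started
        yield old, new
        old -= started                 # retire the replaced old Pods
        yield old, new


for step in rolling_update(desired=3):
    print(step)
```

With `desired=3` the total never drops below 3 and never exceeds 4 (3 + `max_surge`), which is why users see no downtime.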

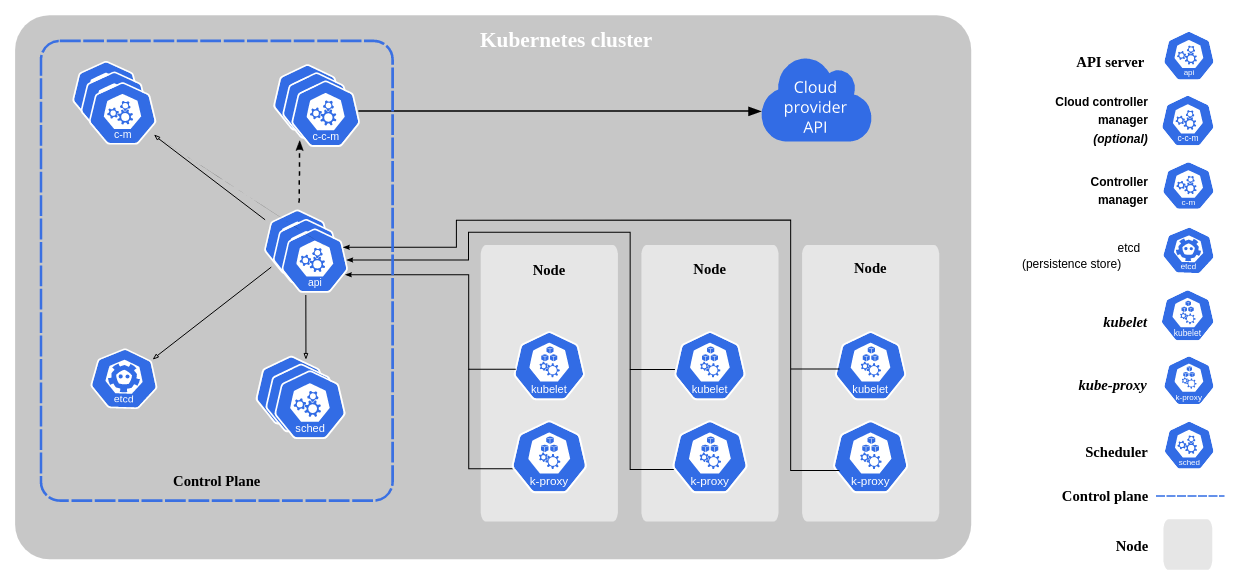

K8s Architecture and main components overview

Official diagram from kubernetes.io

Node: worker machine in K8s cluster

(Diagram: a Node running a "My app" Pod and a "DB" Pod)

- each Node has multiple Pods on it
- 3 processes must be installed on every Node: Container runtime, Kubelet, and Kube Proxy
- Worker Nodes do the actual work

Kubelet:
- interacts with both the container and the Node
- starts the Pod with a container inside

Usually, there are multiple Nodes

(Diagram: Node 1 and Node 2, each a worker machine running a "My app" Pod and a "DB" Pod, plus the container runtime and other processes)

So, how do we interact with a cluster? How do we:
- schedule a Pod
- monitor
- re-schedule/restart a Pod
- join a new Node

And the answer is: all management processes are handled by the Master Node.

Master Node (4 processes)

(Diagram: the Master Node managing Node 1 and Node 2, each running Pods and processes)

Api Server
- cluster gateway: clients (kubectl, K8s API) send their requests to it
- acts as a gatekeeper for authentication
- validates each request before passing it on to the other processes (request → Api Server → other processes)
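The gatekeeper role can be sketched as "authenticate first, then validate, then accept". The token table, status codes, and required fields below are simplifying assumptions for illustration; a real API server supports several authentication methods, authorization, admission control, and full schema validation.

```python
from typing import Dict, Tuple

# Hypothetical token-to-user table; a real API server supports client
# certificates, bearer tokens, OIDC and more.
KNOWN_TOKENS: Dict[str, str] = {"admin-token": "admin"}
REQUIRED_FIELDS = ("apiVersion", "kind", "metadata")


def handle_request(token: str, manifest: dict) -> Tuple[int, str]:
    """Toy gatekeeper: authenticate first, then validate, then accept."""
    user = KNOWN_TOKENS.get(token)
    if user is None:
        return 401, "Unauthorized"
    missing = [field for field in REQUIRED_FIELDS if field not in manifest]
    if not missing and "name" not in manifest["metadata"]:
        missing = ["metadata.name"]
    if missing:
        return 400, "Invalid request, missing: " + ", ".join(missing)
    # A real API server would now persist the object in etcd; the Scheduler
    # and Controller Manager learn about it by watching the API server.
    return 201, f"{manifest['kind']}/{manifest['metadata']['name']} created by {user}"


print(handle_request("wrong-token", {}))
print(handle_request("admin-token", {"kind": "Pod"}))
print(handle_request("admin-token",
                     {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "chat"}}))
```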

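Scheduler

(Diagram: the Master Node, with Api Server and Scheduler, managing Node 1 and Node 2)

Schedule new Pod: the request goes to the Api Server, which hands it to the Scheduler.

Scheduler: where to put the Pod? It checks how much of each Node's resources are already used (60% used vs. 30% used) and picks a Node; the Kubelet on that Node then starts the Pod.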
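The real kube-scheduler first filters out Nodes that cannot run the Pod, then scores the rest with several plugins. This sketch keeps one idea from that, "least utilized Node that fits wins", to mirror the 60%/30% example. The Node names, the millicore numbers, and the single CPU dimension are assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    capacity_mcpu: int  # allocatable CPU in millicores
    used_mcpu: int      # CPU already requested by Pods on this Node

    @property
    def utilization(self) -> float:
        return self.used_mcpu / self.capacity_mcpu


def schedule(pod_request_mcpu: int, nodes: List[Node]) -> Optional[str]:
    """Filter Nodes that can fit the Pod, then score: least utilized wins."""
    fitting = [n for n in nodes
               if n.capacity_mcpu - n.used_mcpu >= pod_request_mcpu]
    if not fitting:
        return None  # no Node fits: the Pod would stay Pending
    best = min(fitting, key=lambda n: n.utilization)
    best.used_mcpu += pod_request_mcpu  # reserve the requested CPU
    return best.name


nodes = [Node("node-1", 1000, 600), Node("node-2", 1000, 300)]
print(schedule(200, nodes))  # node-2 (30% used) beats node-1 (60% used)
```

Because the chosen Node's usage is updated, repeated calls spread Pods across Nodes instead of piling them onto one.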

Controller Manager

(Diagram: the Master Node, with Api Server, Scheduler, and Controller Manager, managing Node 1 (60% used) and Node 2 (30% used))

- detects cluster state changes (e.g. a crashed Pod) and triggers recovery: the Scheduler picks a Node for the replacement Pod and the Kubelet starts it
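Controllers work as control loops: compare the desired state (e.g. `replicas: 2`) with the actual state and act on the difference. This is a simplified, hypothetical sketch of one pass of such a loop; real controllers watch the API server and create Pod objects that the Scheduler then places. The `chat-N` naming scheme is made up.

```python
from typing import List, Tuple


def reconcile(desired: int, running: List[str], next_id: int) -> Tuple[List[str], List[str]]:
    """One pass of a simplified ReplicaSet-style control loop.

    Compares the desired replica count with the Pods actually running and
    returns (pods_to_create, pods_to_delete).
    """
    diff = desired - len(running)
    if diff > 0:
        # Too few Pods (e.g. one crashed): create replacements.
        return [f"chat-{next_id + i}" for i in range(diff)], []
    if diff < 0:
        # Too many Pods (e.g. after scaling down): remove the surplus.
        return [], running[diff:]
    return [], []  # actual state already matches desired state


running = ["chat-1", "chat-2"]
running.remove("chat-1")  # simulate killing a Pod
print(reconcile(desired=2, running=running, next_id=3))
```

This is the behavior step 7 of our strategy demonstrates: kill a Pod, and the loop notices one replica is missing and creates a replacement.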

etcd

(Diagram: the Master Node, with Api Server, Scheduler, Controller Manager, and etcd, managing Node 1 (60% used) and Node 2 (30% used))

Key-value store:
- etcd is the cluster "brain"
- cluster changes get stored in the key-value store
- it answers questions such as "What resources are available?" and "Did the cluster state change?"
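A toy key-value store can show why etcd works as the cluster "brain": every write bumps a global revision, and watchers are notified about changes under a key prefix. This sketch is loosely inspired by etcd and is not its API, consensus protocol, or storage engine. The `/registry/...` key layout imitates the default prefix the API server uses in etcd; real values are serialized objects, not plain strings.

```python
from typing import Callable, Dict, List, Optional, Tuple

Callback = Callable[[str, str, int], None]


class TinyKV:
    """Toy key-value store with a revision counter and prefix watches."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self._watchers: List[Tuple[str, Callback]] = []
        self.revision = 0

    def put(self, key: str, value: str) -> int:
        self.revision += 1
        self._data[key] = value
        # Notify everyone watching a prefix of this key.
        for prefix, callback in self._watchers:
            if key.startswith(prefix):
                callback(key, value, self.revision)
        return self.revision

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def watch(self, prefix: str, callback: Callback) -> None:
        self._watchers.append((prefix, callback))


store = TinyKV()
events = []
store.watch("/registry/pods/", lambda key, value, rev: events.append((rev, key, value)))
store.put("/registry/nodes/node-1", "Ready")
store.put("/registry/pods/default/chat-1", "Running")
print(store.revision, events)
```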

Cluster example

Minikube and kubectl

Production Cluster setup

(Diagram: two Master nodes, each running Api Server, Scheduler, Controller Manager, and etcd, and three worker Nodes, each running Pods and processes)

- Multiple Master and Worker nodes
- Separate virtual or physical machines

Test/Local Cluster setup: Minikube

Master and Node processes run on ONE machine.

(Diagram: one Node running Pods, the Node processes, and the Master processes)

Minikube:
- creates a VirtualBox (or Hyper-V) virtual machine on your laptop
- the Node runs in that virtual machine
- 1-Node K8s cluster
- for testing purposes

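Test/Local Cluster setup: kubectl

kubectl: command-line tool for a K8s cluster

Master processes: the API Server enables interaction with the cluster. Clients reach it via the UI, the API, or the CLI (kubectl).

(Diagram: UI, API, and CLI (kubectl) clients talking to the Master processes (Api Server, Scheduler, Controller Manager, etcd), which manage a Node running Pods, a Service, a Secret, and a ConfigMap)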

Kubectl

kubectl controls the Kubernetes cluster manager

kubectl create deployment nginx --image=nginx # Create an NGINX deployment with 1 Pod (1 container)

kubectl scale --replicas=3 deployment/nginx # Scale the NGINX deployment to 3 replicas

kubectl delete deployment/nginx # Delete the deployment together with its Pods

Installation

Main kubectl commands

kubectl Cheat Sheet

K8s YAML configuration file

YAML

Instead of typing commands from scratch every time, we can save the configuration in a YAML file and commit it to GitHub.

kind: Deployment
apiVersion: apps/v1
metadata:
  namespace: default
  name: client-app
  labels:
    app: client-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: client-app
  template:
    metadata:
      labels:
        app: client-app
    spec:
      containers:
        - name: client-app
          image: iivashchuk/jsprocamp-client-app
          env:
            - name: API_HOST
              value: "chat-service"
            - name: API_PORT
              value: "3001"
          ports:
            - name: web
              containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: client-app
spec:
  ports:
    - protocol: TCP
      name: web
      port: 80
  selector:
    app: client-app
  type: LoadBalancer
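The two objects in this file are tied together by labels: the Deployment stamps `app: client-app` on every Pod it creates from its template, and the Service's `selector` routes traffic to Pods whose labels contain that pair. A minimal sketch of this equality-based matching follows; the Pod names are made up, and set-based selectors (`matchExpressions`) are not modeled.

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """True if every key/value pair of the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Dict[str, str], pods: Dict[str, Dict[str, str]]) -> List[str]:
    """Names of Pods a Service with this selector would route traffic to."""
    return sorted(name for name, labels in pods.items() if matches(selector, labels))


pods = {
    "client-app-7d9f": {"app": "client-app"},
    "client-app-x2k1": {"app": "client-app", "tier": "web"},
    "chat-5b8c": {"app": "chat"},
}
print(select_pods({"app": "client-app"}, pods))
```

Extra labels on a Pod (like `tier: web`) do not prevent a match; a label missing from the Pod does.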

Helm is a tool for managing Charts. Charts are packages of pre-configured Kubernetes resources.

Use Helm to:

- Find and use popular software packaged as Helm Charts to run in Kubernetes

- Share your own applications as Helm Charts

- Create reproducible builds of your Kubernetes applications

- Intelligently manage your Kubernetes manifest files

- Manage releases of Helm packages

Helm usage:

$ helm repo add bitnami https://charts.bitnami.com/bitnami

$ helm install my-release bitnami/mongodb

$ helm install my-redis --set cluster.slaveCount=0 bitnami/redis
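The `--set cluster.slaveCount=0` flag overrides one entry of the chart's default values through a dotted path. This sketch only illustrates merging such an override into nested defaults. It is not Helm's parser, which also handles lists, escaping, and booleans, and the `defaults` dict is hypothetical, not the real bitnami/redis values.

```python
import copy
from typing import Any, Dict


def apply_set(values: Dict[str, Any], expr: str) -> Dict[str, Any]:
    """Apply one `key.path=value` override to a nested values dict (simplified)."""
    path, _, raw = expr.partition("=")
    keys = path.split(".")
    result = copy.deepcopy(values)  # leave the chart defaults untouched
    node = result
    for key in keys[:-1]:
        node = node.setdefault(key, {})  # walk or create intermediate maps
    # Simplified typing: integers become int, everything else stays a string.
    node[keys[-1]] = int(raw) if raw.lstrip("-").isdigit() else raw
    return result


defaults = {"cluster": {"enabled": True, "slaveCount": 2}, "image": {"tag": "latest"}}
print(apply_set(defaults, "cluster.slaveCount=0"))
```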

Demo: starting the Kubernetes cluster

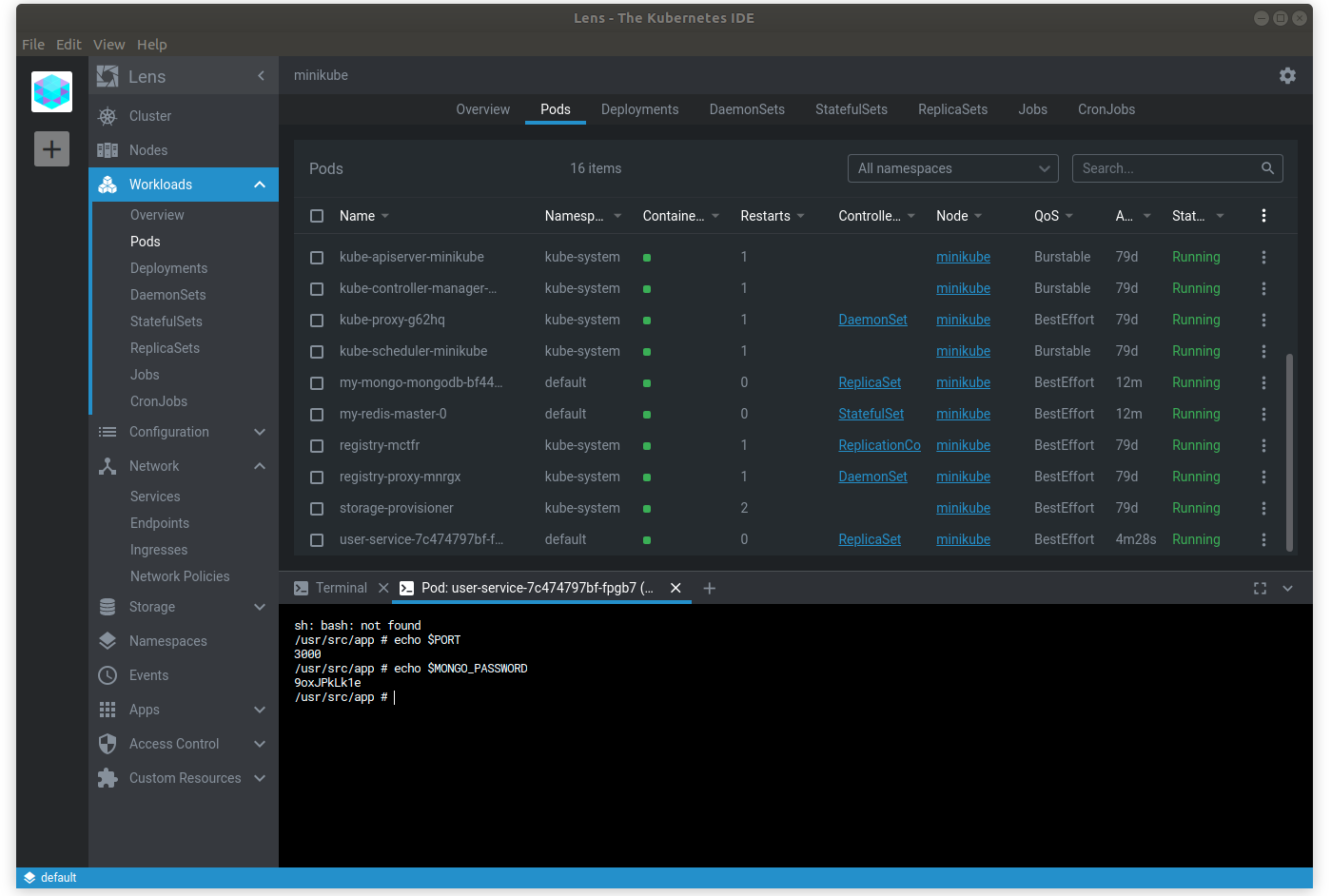

Useful Tool

Q & A

Distributed Node #7

By Inna Ivashchuk
