Do LLMs dream of Type Inference?

Leaner Technologies, Inc.

Shunsuke "Kokuyou" Mori

@kokuyouwind

$ whoami

Name: Shunsuke Mori

Handle: Kokuyou (黒曜)

from Japan 🇯🇵

(First time in the US / at RubyConf)

Work: Leaner Technologies, Inc.

Hobby Project:

Developing an LLM-based type inference tool


Why Type Inference with LLMs?

class Bird; end

class Duck < Bird
  def cry; puts "Quack"; end
end

class Goose < Bird
  def cry; puts "Gabble"; end
end

def make_sound(bird)
  bird.cry
end

make_sound(Duck.new)
make_sound(Goose.new)

What is the argument type of the make_sound method?

Traditional Approach: Algorithmic

make_sound(Duck.new)   # Called with Duck
make_sound(Goose.new)  # Called with Goose

The argument type of make_sound is (Duck | Goose)
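The algorithmic idea can be approximated by recording what each call site actually passes and then taking the union. The sketch below is a runtime approximation that assumes we are allowed to instrument the method. It is not how TypeProf works, since TypeProf analyzes the code statically without running it. `OBSERVED` and `union_type` are hypothetical names, and `cry` returns its string instead of printing it so the output stays quiet.

```ruby
class Bird; end

class Duck < Bird
  def cry; "Quack"; end
end

class Goose < Bird
  def cry; "Gabble"; end
end

# Hypothetical record of argument classes seen by each method.
OBSERVED = Hash.new { |hash, key| hash[key] = [] }

def make_sound(bird)
  OBSERVED[:make_sound] << bird.class # remember what we were called with
  bird.cry
end

# Join the distinct observed classes into an RBS-style union type.
def union_type(classes)
  "(#{classes.uniq.map(&:name).join(' | ')})"
end

make_sound(Duck.new)
make_sound(Goose.new)

puts union_type(OBSERVED[:make_sound]) # prints (Duck | Goose)
```

The union contains only the classes that were actually passed. The common superclass Bird is never considered, and that is the generalization gap a human reader fills in.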

Human Approach: Heuristics

class Bird; end

def make_sound(bird)
  bird.cry
end

The argument name is bird.
There is a Bird class.
So the argument type of make_sound is Bird.
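Part of this heuristic can be written down directly: CamelCase the parameter name and check whether a class with that name exists. The sketch below is a deliberately naive version, and `guess_type_from_name` is a hypothetical helper. Real human (or LLM) reasoning weighs far more context than the name alone.

```ruby
class Bird; end

def make_sound(bird)
  bird.cry
end

# Hypothetical heuristic: CamelCase the parameter name and check
# whether a constant with that name exists (bird -> Bird).
def guess_type_from_name(param_name)
  const_name = param_name.to_s.split("_").map(&:capitalize).join
  return "untyped" if const_name.empty?
  Object.const_defined?(const_name) ? const_name : "untyped"
end

# Method#parameters returns pairs such as [:req, :bird].
param_name = method(:make_sound).parameters.first.last
puts guess_type_from_name(param_name) # prints Bird
```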

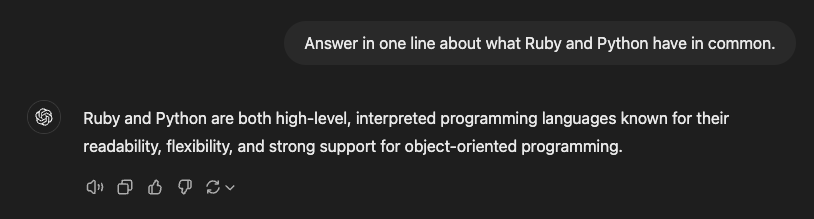

Algorithms are great at logic, but lack heuristic understanding.

LLMs offer the potential for human-like type inference.

I developed RBS Goose as a tool to guess RBS types using LLMs.

RBS Goose

Generate RBS type definitions from Ruby code using LLMs

(Presented at RubyKaigi 2024)

Duck

Duck Typing

quacking like geese

Goose

RBS Goose: Current State

It works for some small Ruby → RBS examples.

How capable is RBS Goose?

We will need some metrics of RBS Goose's performance.

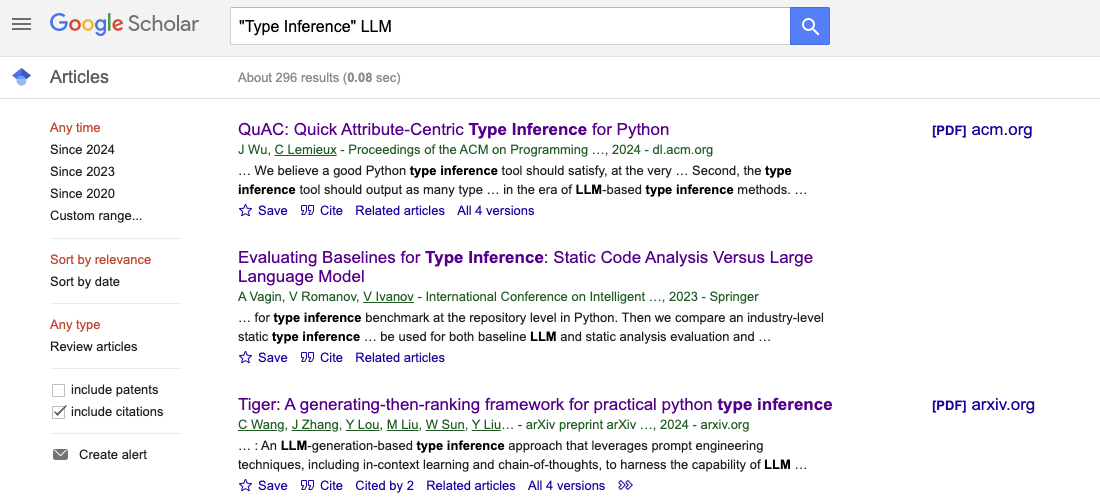

Previous Research

- Ruby SimTyper: research on type inference
  - Covers several libraries and Rails applications
  - Not directly usable due to different type formats
- Python TypeEvalPy: type inference micro-benchmark
  - Covers grammatical elements / typing contexts

Previous Research

A lot of papers exist... on Python 🐍

Today's Focus

- Explain how RBS Goose works with LLMs and evaluate it
  - Better results than traditional methods in several cases
- Share the idea of a type inference benchmark I planned
  - Referring to previous studies

Outline

Basics of Type System and Type Inference

RBS Goose Architecture and Evaluation

Evaluation Method in Previous Studies

The idea of TypeEvalRb

Conclusion


Type System

- A mechanism to classify the components of a program
  - Strings, numbers, etc.
  - To prevent invalid operations
- Ruby is a dynamically typed language
  - 1 + 'a': TypeError is raised at runtime
  - 1 + 'a' if false: TypeError is not raised
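Both bullet points can be checked directly in Ruby. The type error surfaces only when the offending expression is actually evaluated. `raises_type_error?` is a hypothetical helper name.

```ruby
# Returns true if evaluating 1 + 'a' raises TypeError at runtime.
def raises_type_error?
  _ = 1 + 'a'
  false
rescue TypeError
  true
end

puts raises_type_error? # prints true

# The modifier-if is false, so 1 + 'a' is never evaluated and no error is raised.
result = (1 + 'a' if false)
p result # prints nil
```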

Static Type Checking

- A mechanism to detect type errors before execution
  - Need to know the type of each part of the code
- Ruby does not use type annotations in its code
  - Define types with RBS / check them with Steep
  - (Other options include RBI / Sorbet and RDL, but we will not cover them in this session)

Static Type Checking: Examples

- For 1 + 'a', we can detect a type error if we know...
  - 1 is an Integer
  - 'a' is a String
  - Integer#+ cannot accept a String
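The three facts above are enough to report an error without ever running `1 + 'a'`. The toy sketch below shows how. Its signature table mimics the RBS declaration of `Integer#+`, simplified to the single `(Integer) -> Integer` overload (the real core signature has more overloads). Operands are passed as literal values instead of being parsed from source. `SIGNATURES`, `literal_type` and `check_binary` are hypothetical names.

```ruby
# Toy signature table: (receiver type, method) => parameter and return types.
SIGNATURES = {
  ["Integer", :+] => { params: ["Integer"], returns: "Integer" }
}

# Decide a literal's type by its class; no operation is executed on it.
def literal_type(value)
  case value
  when Integer then "Integer"
  when String  then "String"
  else "untyped"
  end
end

# Check `receiver op arg` against the table instead of evaluating it.
def check_binary(receiver, op, arg)
  receiver_type = literal_type(receiver)
  sig = SIGNATURES[[receiver_type, op]]
  return "ok (no signature)" unless sig

  arg_type = literal_type(arg)
  if sig[:params].include?(arg_type)
    "ok: #{sig[:returns]}"
  else
    "type error: #{receiver_type}##{op} cannot accept #{arg_type}"
  end
end

puts check_binary(1, :+, 'a') # prints type error: Integer#+ cannot accept String
puts check_binary(1, :+, 2)   # prints ok: Integer
```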

class Integer
  def +: (Integer) -> Integer
  # ...
end

Type Inference

- Mechanism to infer types of code without explicit annotations
  - For performing static type checks
  - To generate types for Ruby code without type definitions

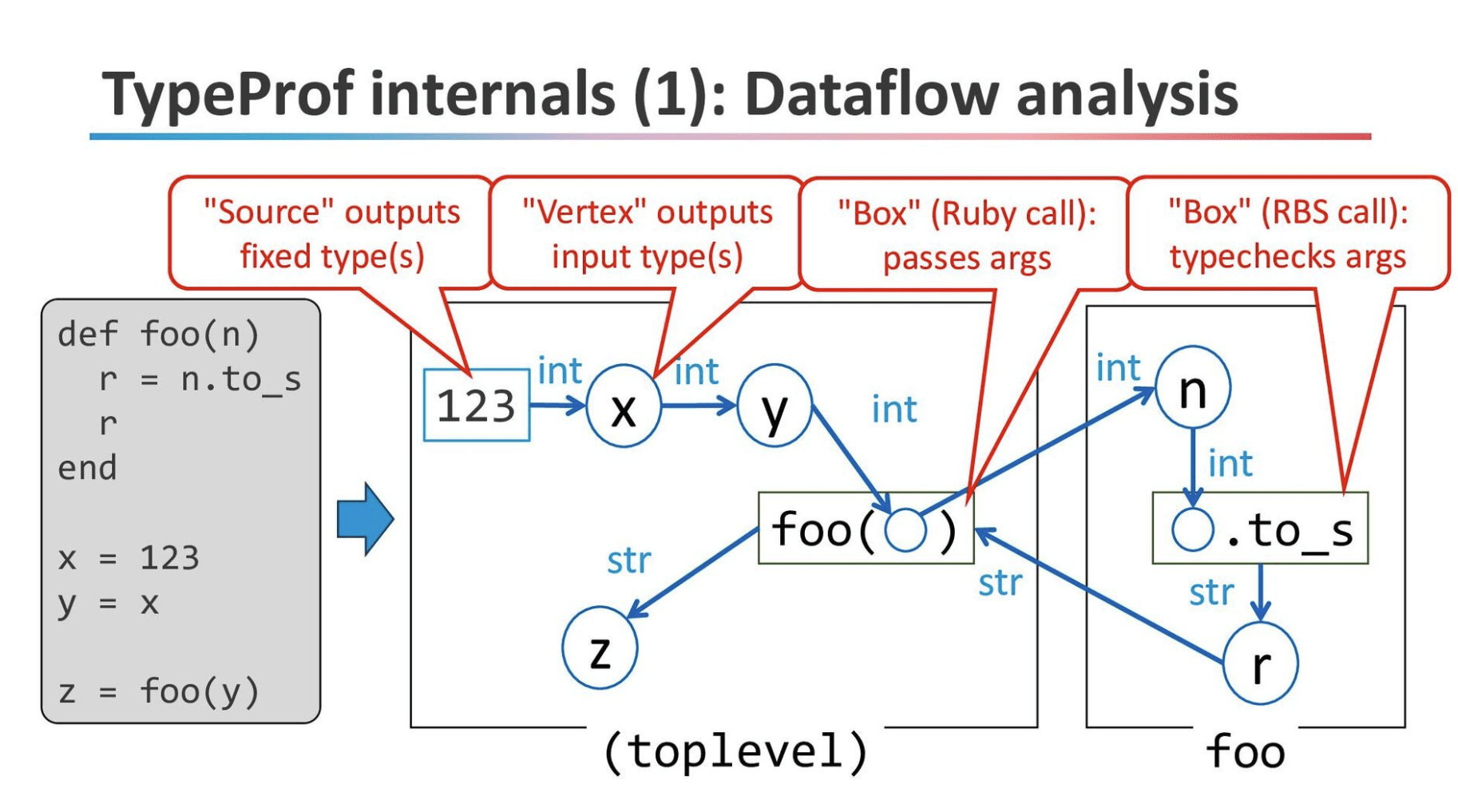

TypeProf: Ruby / RBS type inference tool

- Tracks data flow through variable assignments and method calls (dataflow analysis)

TypeProf: Mechanism

def foo: (Integer n) -> String

Tricky Case - Generalization

lib/bird.rb

class Bird; end

class Duck < Bird
  def cry; puts "Quack"; end
end

class Goose < Bird
  def cry; puts "Gabble"; end
end

def make_sound(bird)
  bird.cry
end

make_sound(Duck.new)
make_sound(Goose.new)

sig/bird.rbs (TypeProf)

class Bird
end

class Duck < Bird
  def cry: -> nil
end

class Goose < Bird
  def cry: -> nil
end

class Object
  def make_sound: (Duck | Goose) -> nil
end

The argument type of make_sound is inferred as a union of subtypes.

Tricky Case - Dynamic definition

lib/dynamic.rb

class Dynamic
  ['foo', 'bar'].each do |x|
    define_method("print_#{x}") do
      puts x
    end
  end
end

d = Dynamic.new
d.print_foo # prints foo
d.print_bar # prints bar

sig/dynamic.rbs (TypeProf)

class Dynamic
end

[error] undefined method: Dynamic#print_foo
[error] undefined method: Dynamic#print_bar
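These methods exist only after the class body has run. One workaround, separate from the LLM approach RBS Goose takes, is to load the code and reflect on it. The rbs gem's `rbs prototype runtime` command takes a similar reflection-based approach. The minimal sketch below assumes every generated method takes no arguments and returns `void`. `rbs_stub` is a hypothetical helper.

```ruby
class Dynamic
  ['foo', 'bar'].each do |x|
    define_method("print_#{x}") do
      puts x
    end
  end
end

# Build an RBS-style stub from the methods that exist at runtime.
# Assumption: every method is `() -> void`; reflection alone cannot tell.
def rbs_stub(klass)
  lines = ["class #{klass.name}"]
  klass.instance_methods(false).sort.each do |name|
    lines << "  def #{name}: () -> void"
  end
  lines << "end"
  lines.join("\n")
end

puts rbs_stub(Dynamic)
```

Reflection only finds methods whose definitions actually ran. It also cannot recover argument or return types, which is where an LLM's reading of the source can help.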

Summary - Basics of Type System and Type Inference

- Type System: prevents invalid operations in a program
  - Ruby has a dynamic type system
- Static Type Checking: detects type errors before execution
  - Type description languages (e.g. RBS) are used
- Type Inference: infers types of code without type annotations
  - Traditional methods usually work well, but are not good at generalization, dynamic definitions, etc.
  - Since LLMs imitate human thinking, they may work well in these cases.

Outline

Basics of Type System and Type Inference

RBS Goose Architecture and Evaluation

Evaluation Method in Previous Studies

The idea of TypeEvalRb

Conclusion

RBS Goose

lib/bird.rb

class Bird; end

class Duck < Bird
  def cry; puts "Quack"; end
end

class Goose < Bird
  def cry; puts "Gabble"; end
end

def make_sound(bird)
  bird.cry
end

make_sound(Duck.new)
make_sound(Goose.new)

sig/bird.rbs

class Bird
end

class Duck < Bird
  def cry: () -> void
end

class Goose < Bird
  def cry: () -> void
end

class Object
  def make_sound: (Bird arg) -> void
end

Generate RBS type definitions from Ruby code using LLMs

LLM execution example (ChatGPT)

Prompt (Input Text)

Output Text

LLM Technique: Few-shot Prompting

Few-shot Prompt (provide some examples)

(Prompt)
Answer color code.
Q: red
A: #FF0000
Q: blue
A:

(Output)
#0000FF

Zero-shot Prompt (provide no examples)

(Prompt)
Answer color code for blue.

(Output)
The color code for blue depends on the system you're using:
HEX: #0000FF
RGB: (0, 0, 255)
CMYK: (100%, 100%, 0%, 0%)
HSL: (240°, 100%, 50%)
Pantone: PMS 2935 C (approximation)
Would you like codes for a specific shade of blue?

RBS Goose Architecture

Ruby → rbs prototype → RBS; then Ruby + RBS + examples → Prompt → LLM (e.g. ChatGPT) → Refined RBS

Step.1 Generate RBS prototype


sig/bird.rbs

class Bird
end

class Duck < Bird
  def cry: () -> untyped
end

class Goose < Bird
  def cry: () -> untyped
end

class Object
  def make_sound: (untyped bird) -> untyped
end

RBS Goose Architecture


lib/example1.rb

class Example1
  attr_reader :quantity

  def initialize(quantity:)
    @quantity = quantity
  end

  def quantity=(quantity)
    @quantity = quantity
  end
end

sig/example1.rbs

class Example1
  @quantity: untyped
  attr_reader quantity: untyped
  def initialize: (quantity: untyped) -> void
  def quantity=: (untyped quantity) -> void
end

refined/sig/example1.rbs

class Example1
  @quantity: Integer
  attr_reader quantity: Integer
  def initialize: (quantity: Integer) -> void
  def quantity=: (Integer quantity) -> void
end


(The sig/*.rbs files are generated with rbs prototype or other tools.)

Step.2 Load Few-shot Examples


When ruby source codes and
RBS type signatures are given,
refine each RBS type signatures.

======== Input ========
```lib/example1.rb
...
```
```sig/example1.rbs
...
```
======== Output ========
```sig/example1.rbs
...
```
======== Input ========
```lib/bird.rb
...
```
```sig/bird.rbs
...
```
======== Output ========

(The first Input/Output pair holds the few-shot examples; the last Input holds the Ruby code and its RBS prototype, and the LLM infers the final Output.)

Step.3 Construct Prompt
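The minimal sketch below assembles a prompt in the layout above. It places the instruction first, then each few-shot example as an Input/Output pair, then the target Ruby code and its RBS prototype with an open Output section. `Example`, `fenced`, `input_section` and `build_prompt` are hypothetical names, not RBS Goose's actual API. The instruction text is copied from the slide, and the file bodies are placeholders.

```ruby
TICKS = "`" * 3 # built at runtime so no line in this file starts with a fence

INSTRUCTION = [
  "When ruby source codes and",
  "RBS type signatures are given,",
  "refine each RBS type signatures."
].join("\n")

# One Ruby file, its RBS prototype, and (for examples only) the refined RBS.
Example = Struct.new(:rb_path, :rb, :rbs_path, :rbs, :refined_rbs)

# Wrap a file body in a fence whose info string is the file path.
def fenced(path, body)
  [TICKS + path, body, TICKS].join("\n")
end

def input_section(file)
  ["======== Input ========",
   fenced(file.rb_path, file.rb),
   fenced(file.rbs_path, file.rbs)]
end

def build_prompt(examples, target)
  parts = [INSTRUCTION, ""]
  examples.each do |ex|
    parts.concat(input_section(ex))
    parts << "======== Output ========"
    parts << fenced(ex.rbs_path, ex.refined_rbs)
    parts << ""
  end
  # The target has no refined RBS yet: the LLM is expected to write it.
  parts.concat(input_section(target))
  parts << "======== Output ========"
  parts.join("\n")
end

example = Example.new("lib/example1.rb", "class Example1\nend",
                      "sig/example1.rbs", "class Example1\nend",
                      "class Example1\nend")
target = Example.new("lib/bird.rb", "class Bird; end",
                     "sig/bird.rbs", "class Bird\nend", nil)

puts build_prompt([example], target)
```

Ending the prompt with an open Output marker invites the model to continue in the same fenced format as the examples. That keeps the response easy to parse.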

Step.4 Parse response and output


```sig/bird.rbs
class Bird
end

class Duck < Bird
  def cry: () -> void
end

class Goose < Bird
  def cry: () -> void
end

class Object
  def make_sound: (Bird arg) -> void
end
```

Key Points

- LLMs are not inherently familiar with RBS grammar
  - Pre-generate RBS prototypes
  - Frame the task as a fill-in-the-blanks problem for untyped
- Use few-shot prompting
  - To format the output for easy parsing
  - To illustrate grammar unique to RBS (such as attr_reader)
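The "easy parsing" point can be made concrete. The examples teach the model to answer with fences labelled by file path, so the parse step can extract each file with one regular expression. The minimal sketch below assumes well-formed output. A real response may need more defensive handling, such as a missing closing fence. `FENCED_FILE` and `parse_response` are hypothetical names.

```ruby
TICKS = "`" * 3 # built at runtime so no line in this file starts with a fence

# A fenced block whose info string is a file path, e.g. sig/bird.rbs.
FENCED_FILE = /^#{TICKS}(\S+)\n(.*?)^#{TICKS}\s*$/m

# Returns { "sig/bird.rbs" => "class Bird\nend\n", ... }
def parse_response(text)
  text.scan(FENCED_FILE).to_h
end

response = [
  "#{TICKS}sig/bird.rbs",
  "class Bird",
  "end",
  TICKS,
  ""
].join("\n")

files = parse_response(response)
puts files.keys.inspect # prints ["sig/bird.rbs"]
```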

RBS Goose Results - Generalization

lib/bird.rb

class Bird; end

class Duck < Bird
  def cry; puts "Quack"; end
end

class Goose < Bird
  def cry; puts "Gabble"; end
end

def make_sound(bird)
  bird.cry
end

# The following is not
# provided to RBS Goose
# make_sound(Duck.new)
# make_sound(Goose.new)

sig/bird.rbs

class Bird
end

class Duck < Bird
  def cry: () -> void
end

class Goose < Bird
  def cry: () -> void
end

class Object
  def make_sound: (Bird arg) -> void
end

The argument of make_sound is inferred to be Bird.

RBS Goose Results - Dynamic definition

lib/dynamic.rb

class Dynamic
  ['foo', 'bar'].each do |x|
    define_method("print_#{x}") do
      puts x
    end
  end
end

# The following is not
# provided to RBS Goose
# d = Dynamic.new
# d.print_foo # prints foo
# d.print_bar # prints bar

sig/dynamic.rbs

class Dynamic
  def print_foo: () -> void
  def print_bar: () -> void
end

Correctly infer dynamic method definitions

RBS Goose Results - Proc Arguments

lib/call.rb

def call(f)
  f.call()
end

f = -> { 'hello' }
p call(f)

# Wrong Syntax
def call: (() -> String f) -> String

# Correct Syntax (TypeProf)
def call: (^-> String f) -> String

Manual evaluation has limitations

ProcType

OptionalType

RecordType

TupleType

AttributeDefinition

Generics

Mixin

Member Visibility

Ruby on Rails

ActiveSupport

ActiveModel

Refinement

Quine

method_missing

delegate

Need better evaluation methods

- Works in small examples, but there are no performance metrics
  - Unclear what RBS Goose can and cannot do
- It's difficult to determine the direction of improvement
  - How do I check whether a change has made it better?
- Survey previous studies
  - How do they evaluate type inference?

We need better methods to evaluate type inference.

Let's look at how previous studies have evaluated this.

Outline

Basics of Type System and Type Inference

RBS Goose Architecture and Evaluation

Evaluation Method in Previous Studies

The idea of TypeEvalRb

Conclusion

Evaluation Method in Previous Studies

- This session focuses on the following two studies
  - Study 1: Evaluation of SimTyper (Ruby type inference tool)
  - Study 2: TypeEvalPy (Python type inference benchmark)

-

Ruby type inference tool

-

Constraint-based inference

-

-

Built on RDL, one of the Ruby type checker

-

incompatible with RBS

-

Kazerounian, SimTyper: sound type inference for Ruby using type equality prediction, 2021, OOPSLA 2024

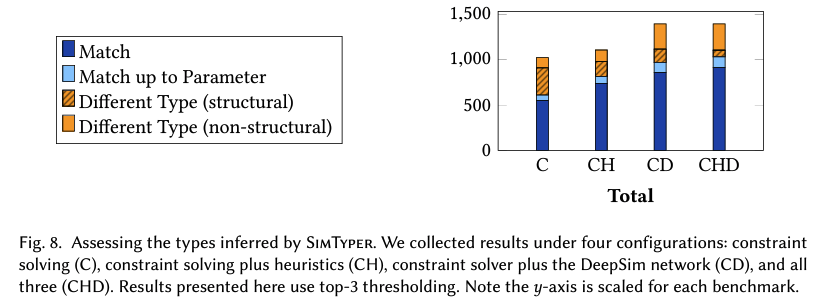

Previous Study 1: SimTyper

Previous Study 1: SimTyper

Kazerounian, SimTyper: sound type inference for Ruby using type equality prediction, 2021, OOPSLA 2024

SimTyper - Evaluation Method

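Compare expected and inferred types for each argument, return value, and variable

expected: def foo: (Array[String], Array[Integer]) -> Array[String]
inferred: def foo: (Array[String], Array[String]) -> void

- 1st argument (Array[String] / Array[String]): Match
- 2nd argument (Array[Integer] / Array[String]): Match up to Parameter
- Return value (Array[String] / void): Different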
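The minimal sketch below implements this three-way comparison. It assumes types are given as RBS strings. It also assumes "Match up to Parameter" means the outer type constructor agrees while the type arguments differ. `base_name` and `classify` are hypothetical helpers, not SimTyper's code. A real comparator would work on parsed types rather than strings.

```ruby
# Outer type name: "Array[String]" -> "Array", "void" -> "void".
def base_name(type)
  type.split("[", 2).first.strip
end

# Three-way classification in the spirit of SimTyper's evaluation.
def classify(expected, inferred)
  e = expected.delete(" ")
  i = inferred.delete(" ")
  return :match if e == i
  return :match_up_to_parameter if base_name(e) == base_name(i)
  :different
end

# Positions: 1st argument, 2nd argument, return value of foo.
expected = ["Array[String]", "Array[Integer]", "Array[String]"]
inferred = ["Array[String]", "Array[String]", "void"]

results = expected.zip(inferred).map { |e, i| classify(e, i) }
p results # prints [:match, :match_up_to_parameter, :different]
p results.tally
```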

SimTyper - Test Data

SimTyper - Evaluation Result

The number of matches can be compared for each method.

SimTyper - Artifacts

The reproduction data is provided

... as a VM image 😢

What we can learn from Study 1

- Compare expected and inferred types and count matches for each argument, return value, and variable
- Ruby libraries and Rails applications are targeted
  - Practice-based results
  - Any repository with type declarations can be used
- Even though it targets Ruby, its evaluation tools are hard to use directly

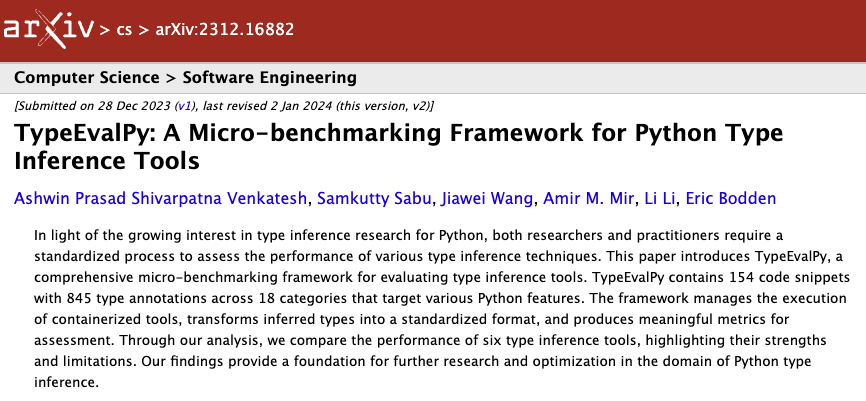

TypeEvalPy - Abstract

-

Micro-benchmarks for type inference in Python

-

Small test cases, categorized by grammatical elements, etc.

-

-

Evaluation method is almost the same as SimTyper

-

compares for each parameter, return, and variable

-

only Exact matches counted

-

Venkatesh, TypeEvalPy: A Micro-benchmarking Framework for Python Type Inference Tools, 2023, ICSE 2024

Previous Study 2: TypeEvalPy

Venkatesh, TypeEvalPy: A Micro-benchmarking Framework for Python Type Inference Tools, 2023, ICSE 2024

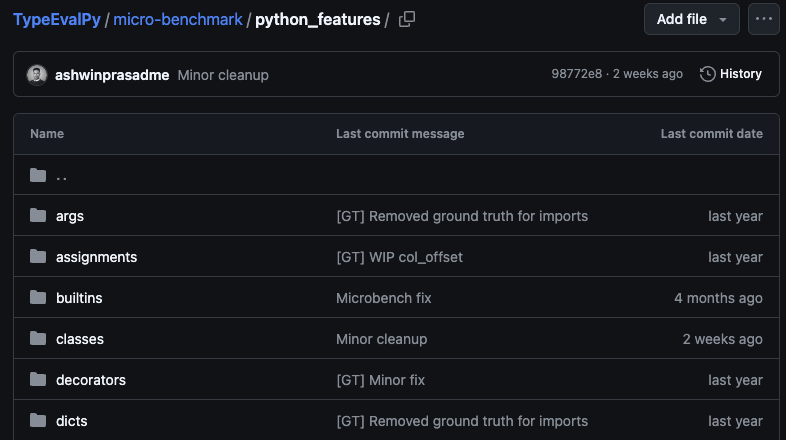

TypeEvalPy: TestCase Categories

TypeEvalPy: TestCase

main.py

def param_func():
    return "Hello from param_func"

def func(a):
    return a()

b = param_func
c = func(b)

main_gt.json

[{"file": "main.py",
  "line_number": 4,
  "col_offset": 5,
  "function": "param_func",
  "type": ["str"]},
 {"file": "main.py",
  "line_number": 8,
  "col_offset": 10,
  "parameter": "a",
  "function": "func",
  "type": ["callable"]},
 // ...
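The sketch below scores a tool's output against a ground-truth file in this format, written in Ruby to match the rest of this talk. It assumes a fact is identified by the keys visible in the excerpt (`file`, `line_number`, `col_offset`, `function`, `parameter`). It also assumes a fact counts only if the type lists agree exactly. `fact_key` and `exact_matches` are hypothetical names, and TypeEvalPy's own scripts may differ. The "inferred" data is made up.

```ruby
require "json"

# Identify one fact (a return type, a parameter, ...) by its location.
def fact_key(entry)
  entry.values_at("file", "line_number", "col_offset", "function", "parameter")
end

# Count ground-truth facts whose inferred type list matches exactly.
def exact_matches(ground_truth, inferred)
  predicted = inferred.to_h { |e| [fact_key(e), e["type"].sort] }
  ground_truth.count { |e| predicted[fact_key(e)] == e["type"].sort }
end

ground_truth = JSON.parse(<<~TEXT)
  [{"file": "main.py", "line_number": 4, "col_offset": 5,
    "function": "param_func", "type": ["str"]},
   {"file": "main.py", "line_number": 8, "col_offset": 10,
    "parameter": "a", "function": "func", "type": ["callable"]}]
TEXT

# Made-up tool output: right about the return type, wrong about `a`.
inferred = JSON.parse(<<~TEXT)
  [{"file": "main.py", "line_number": 4, "col_offset": 5,
    "function": "param_func", "type": ["str"]},
   {"file": "main.py", "line_number": 8, "col_offset": 10,
    "parameter": "a", "function": "func", "type": ["str"]}]
TEXT

puts "#{exact_matches(ground_truth, inferred)} / #{ground_truth.size}" # prints 1 / 2
```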

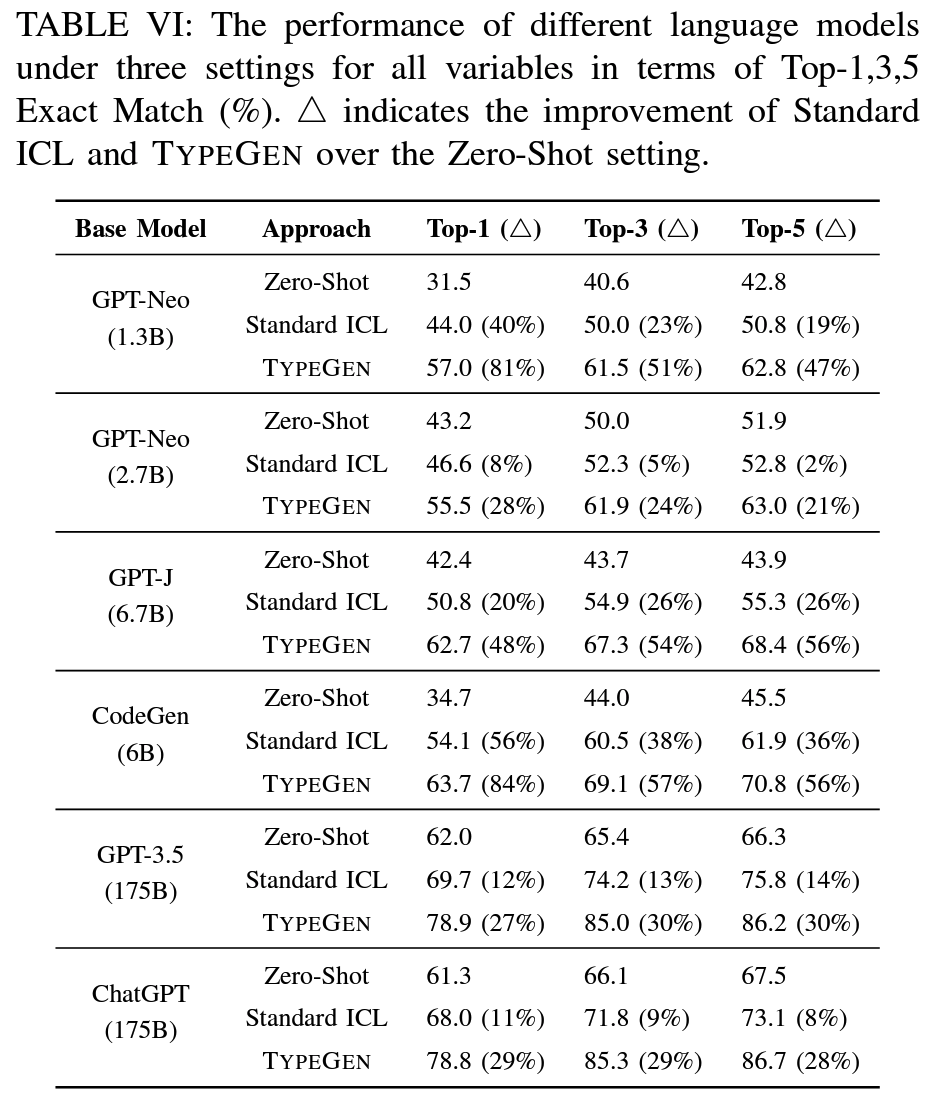

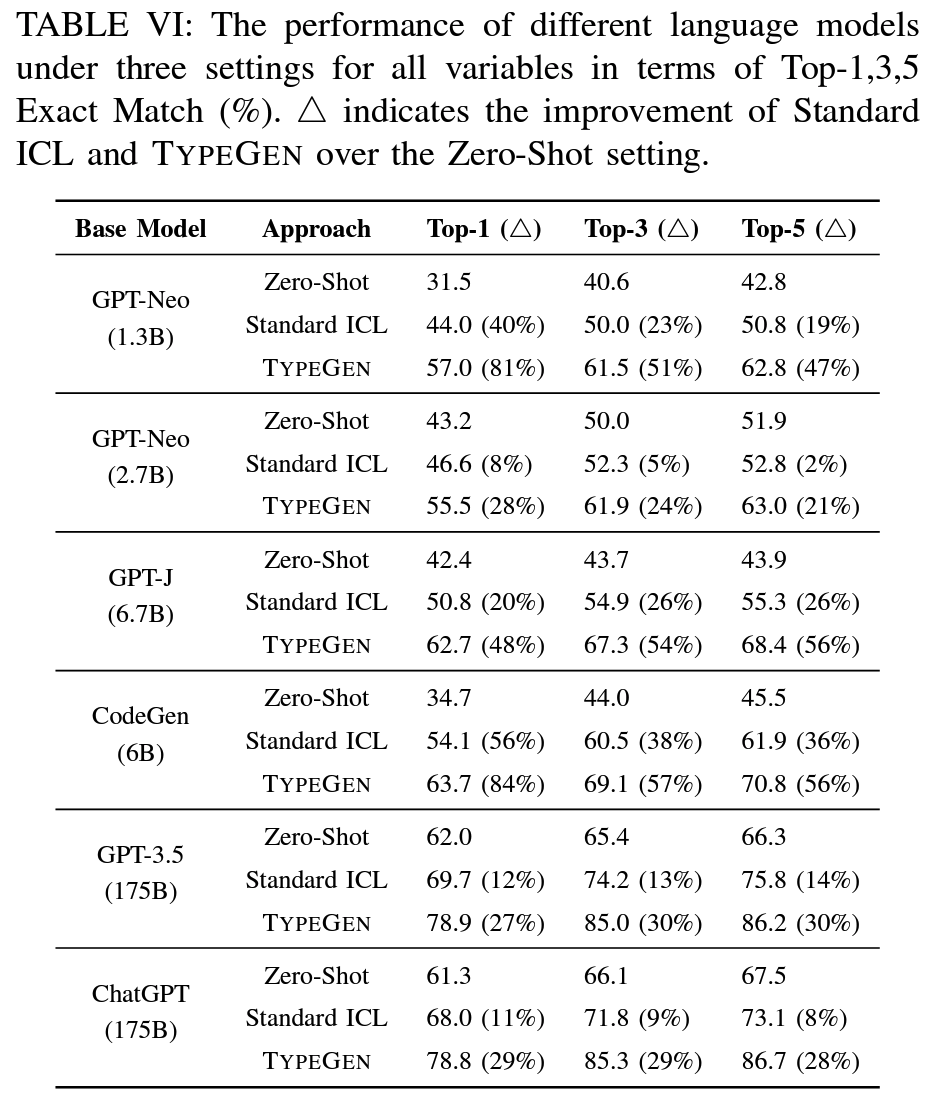

TypeEvalPy: Benchmark Results

What we can learn from Study 2

- Test cases are categorized by grammar element, etc.
  - Results are aggregated by category to reveal strengths and weaknesses
- Test cases are small because it is a micro-benchmark
  - Results may deviate from practical performance

Based on these studies, we will now consider how to evaluate RBS Goose's performance.

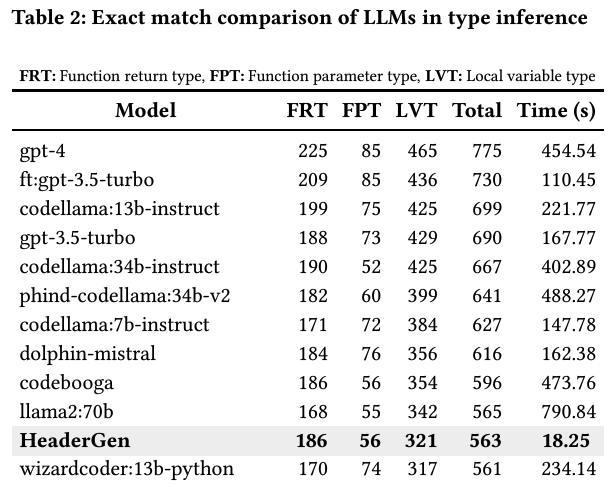

TypeEvalPy: Results

| Category | Total facts | Scalpel |
|---|---|---|
| args | 43 | 15 |
| assignments | 82 | 23 |
| builtins | 68 | 0 |
| classes | 122 | 24 |
| decorators | 58 | 19 |

...

Aggregate by category to measure strengths and weaknesses.
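The sketch below shows per-category aggregation like the table above. It assumes each fact has already been judged matched or not. `Fact` and `aggregate` are hypothetical names. The facts are invented toy data, not results from TypeEvalPy.

```ruby
# One benchmark fact: its category and whether the tool got it right.
Fact = Struct.new(:category, :matched)

# Per-category totals and match counts.
def aggregate(facts)
  facts.group_by(&:category).transform_values do |group|
    { total: group.size, matched: group.count(&:matched) }
  end
end

# Invented toy facts for illustration only.
facts = [
  Fact.new("args", true), Fact.new("args", false),
  Fact.new("builtins", false), Fact.new("classes", true)
]

aggregate(facts).each do |category, c|
  rate = (100.0 * c[:matched] / c[:total]).round(1)
  puts "#{category}: #{c[:matched]}/#{c[:total]} (#{rate}%)"
end
```

A low rate in one category (builtins in this toy data) points at a specific weakness. A single overall score would hide it.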

What we can learn from Previous Studies

- Compare expected and inferred types
  - for each argument, return value, and variable
  - The number of matches can be used as a metric
- Two types of test data
  - Real-world code: measures practical performance
  - Micro-benchmark: clarifies strengths and weaknesses

Outline

Basics of Type System and Type Inference

RBS Goose Architecture and Evaluation

Evaluation Method in Previous Studies

The idea of TypeEvalRb

Conclusion

Future Prospects in Ruby

- Ruby+RBS type data sets (like ManyTypes4Py)
  - Provide training data (embedded as examples)
  - Provide data for evaluation
- Type benchmark (like TypeEvalPy)
  - Evaluate inference performance

Develop data sets and benchmarks to enable performance evaluation

Future Prospects in Ruby (2)

Generate type hints and embed them in prompts

- Collect related type hints from:
  - RubyGems
  - Project files
  - gem_rbs_collection

TypeEvalRb - Architecture

- Test data: Ruby Code + Expected RBS Types
- Ruby Code → type inference tool → Inferred RBS Types
- Comparator: Expected vs. Inferred RBS Types → Match / Unmatch
- Aggregate → Benchmark Result

TypeEvalRb - Comparison

- Construct a Comparison Tree from two RBS::Environment objects
  - Currently working on this
- Traverse the Comparison Tree and calculate the match count
  - Comparison is done per argument, return value, etc.
  - Classify as Match, Match up to parameters, or Different
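The minimal sketch below covers the traversal step. It assumes the tree has already been built from the two `RBS::Environment` objects and that each leaf holds the expected and actual types as strings. The struct names loosely mirror the `compare_bird` printout in this section but are hypothetical. For instance, `ivars` stands in for `instance_variables` to avoid shadowing `Object#instance_variables`. The default classifier only distinguishes exact matches, and a smarter one can be passed in.

```ruby
# Hypothetical node types loosely mirroring the ComparisonTree printout.
TypeNode       = Struct.new(:expected, :actual)
MethodNode     = Struct.new(:name, :parameters, :return_type)
ClassNode      = Struct.new(:typename, :ivars, :method_nodes)
ComparisonTree = Struct.new(:class_nodes)

# Visit every TypeNode, tagged with the kind of position it occupies.
def each_type_node(tree)
  tree.class_nodes.each do |klass|
    klass.ivars.each { |t| yield :instance_variable, t }
    klass.method_nodes.each do |m|
      m.parameters.each { |t| yield :parameter, t }
      yield :return_type, m.return_type
    end
  end
end

EXACT = ->(t) { t.expected == t.actual ? :match : :different }

# Count classification results over all leaves of the tree.
def score(tree, classify = EXACT)
  counts = Hash.new(0)
  each_type_node(tree) { |_kind, t| counts[classify.call(t)] += 1 }
  counts
end

def cry_node(actual)
  MethodNode.new(:cry, [], TypeNode.new("void", actual))
end

bird_tree = ComparisonTree.new([
  ClassNode.new("::Bird", [], []),
  ClassNode.new("::Duck", [], [cry_node("untyped")]),
  ClassNode.new("::Goose", [], [cry_node("untyped")])
])

p score(bird_tree)
```

Keeping traversal and classification separate lets the same walk report exact matches, "match up to parameters", or per-position breakdowns. Only the classifier passed to `score` changes.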

TypeEvalRb - Comparison

Construct a Comparison Tree from two RBS::Environment objects

# load expected/sig/bird.rbs to RBS::Environment
> loader = RBS::EnvironmentLoader.new
> loader.add(path: Pathname('expected/sig/bird.rbs'))
> env = RBS::Environment.from_loader(loader).resolve_type_names
=> #<RBS::Environment @declarations=(409 items)...>

# RBS::Environment contains ALL types, including stdlib, etc.
> env.class_decls.count
=> 330

# Extract Goose class
> goose = env.class_decls[RBS::Namespace.parse('::Goose').to_type_name]
=> #<RBS::Environment::ClassEntry:0x000000011e478d70 @decls=...>

TypeEvalRb - Comparison

Goose's ClassEntry is... so deeply nested 😅

> pp goose

#<RBS::Environment::ClassEntry:0x000000011f239a40

@decls=

[#<struct RBS::Environment::MultiEntry::D

decl=

#<RBS::AST::Declarations::Class:0x0000000128d7dd08

@annotations=[],

@comment=nil,

@location=

#<RBS::Location:371300 buffer=/Users/kokuyou/repos/type_eval_rb/spec/fixtures/examples/bird/refined/sig/bird.rbs, start=8:0, pos=61...105, children=keyword,name,end,?type_params,?lt source="class Goose < Bird">,

@members=

[#<RBS::AST::Members::MethodDefinition:0x0000000128d7dd58

@annotations=[],

@comment=nil,

@kind=:instance,

@location=

#<RBS::Location:371360 buffer=/Users/kokuyou/repos/type_eval_rb/spec/fixtures/examples/bird/refined/sig/bird.rbs, start=9:2, pos=82...101, children=keyword,name,?kind,?overloading,?visibility source="def cry: () -> void">,

@name=:cry,

@overloading=false,

@overloads=

[#<RBS::AST::Members::MethodDefinition::Overload:0x000000011f23a968

@annotations=[],

@method_type=

#<RBS::MethodType:0x0000000128d7dda8

@block=nil,

@location=

#<RBS::Location:371420 buffer=/Users/kokuyou/repos/type_eval_rb/spec/fixtures/examples/bird/refined/sig/bird.rbs, start=9:11, pos=91...101, children=type,?type_params source="() -> void">,

@type=

#<RBS::Types::Function:0x0000000128d7ddf8

@optional_keywords={},

@optional_positionals=[],

@required_keywords={},

@required_positionals=[],

@rest_keywords=nil,

@rest_positionals=nil,

@return_type=

#<RBS::Types::Bases::Void:0x0000000128892af0

@location=

#<RBS::Location:371440 buffer=/Users/kokuyou/repos/type_eval_rb/spec/fixtures/examples/bird/refined/sig/bird.rbs, start=9:17, pos=97...101, children= source="void">>,

@trailing_positionals=[]>,

@type_params=[]>>],

@visibility=nil>],

@name=#<RBS::TypeName:0x000000011f23abc0 @kind=:class, @name=:Goose, @namespace=#<RBS::Namespace:0x000000011f23abe8 @absolute=true, @path=[]>>,

@super_class=

#<RBS::AST::Declarations::Class::Super:0x000000011f23a9e0

@args=[],

@location=

#<RBS::Location:371540 buffer=/Users/kokuyou/repos/type_eval_rb/spec/fixtures/examples/bird/refined/sig/bird.rbs, start=8:14, pos=75...79, children=name,?args source="Bird">,

@name=

#<RBS::TypeName:0x000000011f23b160 @kind=:class, @name=:Bird, @namespace=#<RBS::Namespace:0x0000000100cdf6a8 @absolute=true, @path=[]>>>,

@type_params=[]>,

outer=[]>],

@name=#<RBS::TypeName:0x000000011f239a68 @kind=:class, @name=:Goose, @namespace=#<RBS::Namespace:0x000000011f23abe8 @absolute=true, @path=[]>>,

@primary=nil>

TypeEvalRb - Comparison

Take only defined classes and build a tree structure (many things are still missing)

> compare_bird
=>
ComparisonTree(
  class_nodes=[
    ClassNode(typename=::Bird, instance_variables=[ ], methods=[ ])
    ClassNode(typename=::Duck,
      instance_variables=[ ],
      methods=[
        MethodNode(name=cry, parameters=[ ],
          return_type=TypeNode(expected="void", actual="untyped")
        )])
    ClassNode(typename=::Goose,
      instance_variables=[ ],
      methods=[
        MethodNode(name=cry, parameters=[ ],
          return_type=TypeNode(expected="void", actual="untyped")
        )])
  ])

TypeEvalRb - Test Data

- Micro-benchmark data like TypeEvalPy
  - Small test data classified by grammatical elements, etc.
  - For detailed evaluation of strengths and weaknesses
- Real-world data, similar to that used to evaluate SimTyper
  - Libraries and Rails applications with RBS type definitions
  - For evaluation of practical performance

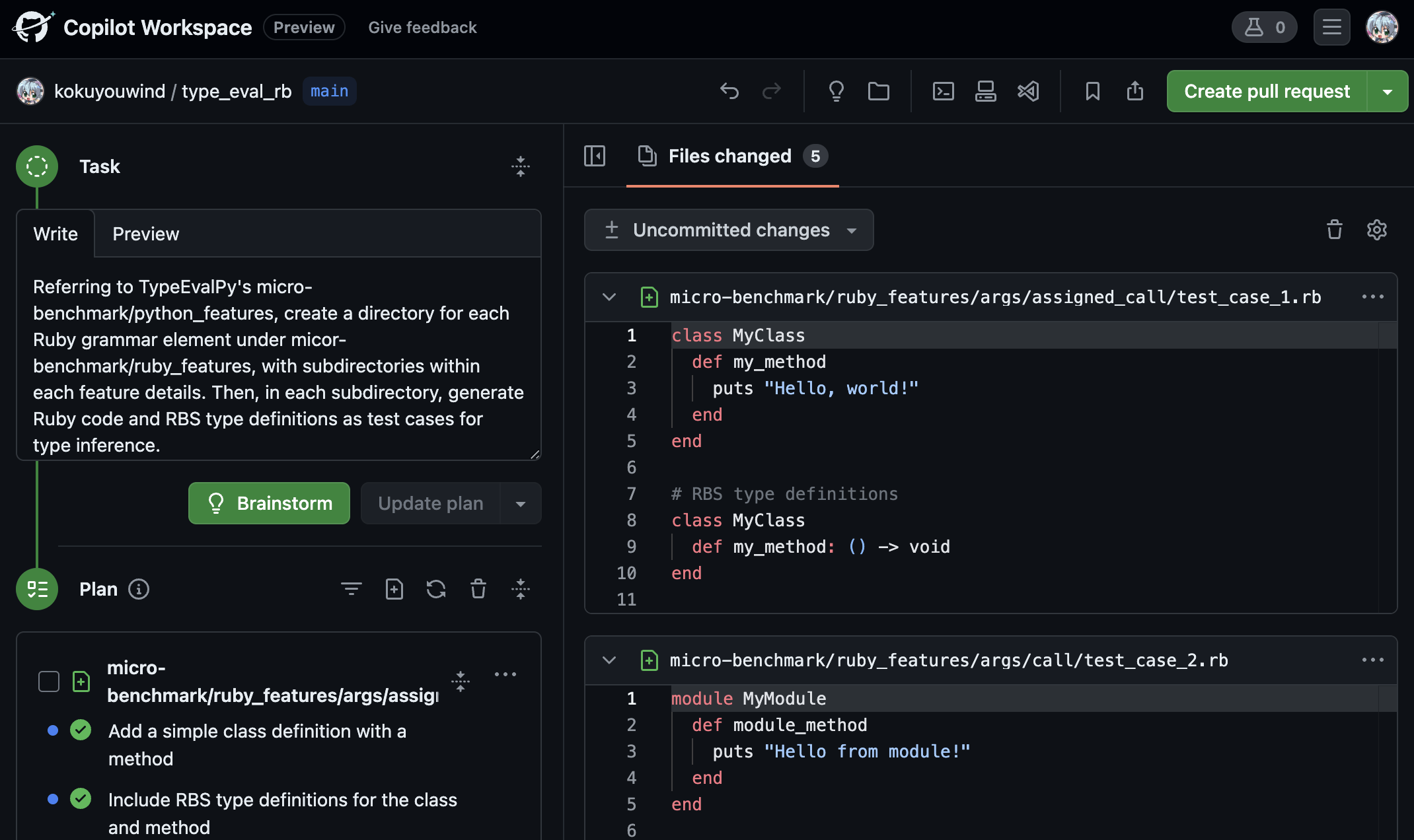

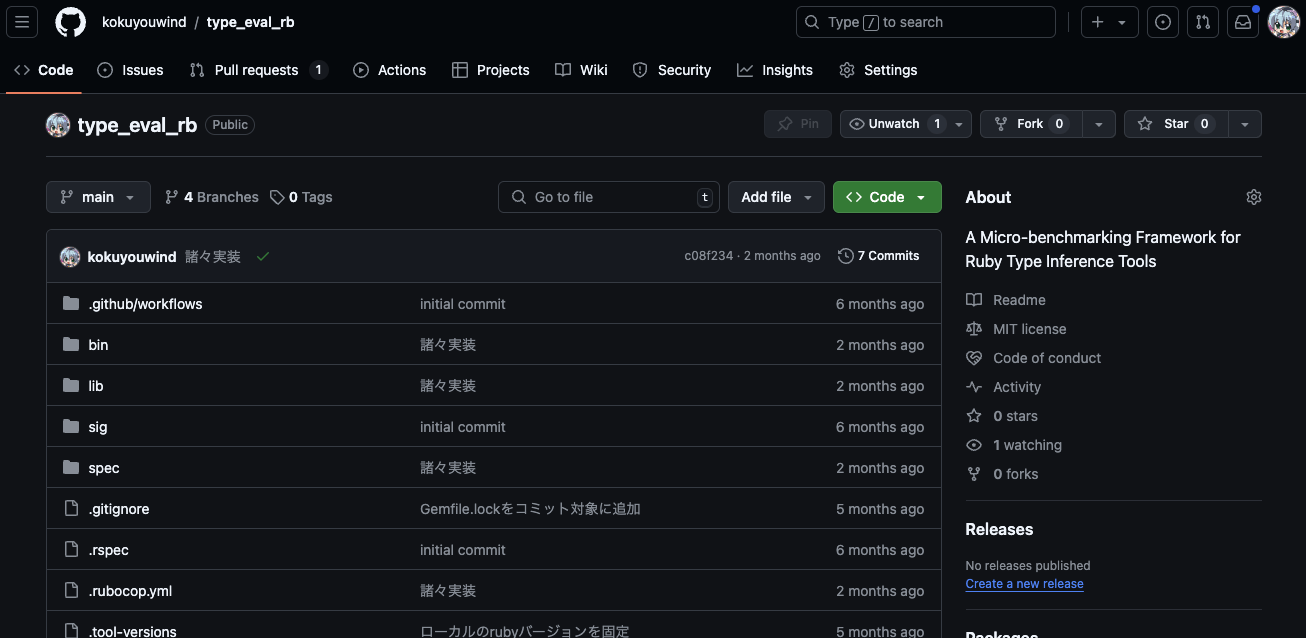

TypeEvalRb - Microbenchmark Test Data

Exploring the possibility of

using the GitHub Copilot Workspace for data preparation.

TypeEvalRb

Work in Progress

Outline

Basics of Type System and Type Inference

RBS Goose Architecture and Evaluation

Evaluation Method in Previous Studies

The idea of TypeEvalRb

Conclusion

Conclusion

- Shared how RBS Goose works and its evaluation results
  - Better results than traditional methods in some cases
- Surveyed evaluation methods in previous studies
  - Count matches between expected and inferred types
  - Both micro-benchmarks and real-world data are useful
- Shared the idea of TypeEvalRb, a type inference benchmark
  - To reveal inference performance and guide future improvement

Preliminary Slides

What is an LLM?

A large language model (LLM) is a "Large" "Language Model".

Language Model (LM)

A model that assigns probabilities to sequences of words.

["The", "weather", "is"] → next word:

(50%) "sunny"
(20%) "rainy"
(0%) "duck"
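The toy illustration below uses the slide's probabilities for the word after "The weather is". The remaining 30% is not listed on the slide, so the sampler renormalizes over the words shown. `NEXT_WORD`, `greedy` and `sample` are hypothetical names. A real LLM computes such a distribution over its whole vocabulary with a neural network.

```ruby
# The slide's example distribution for the word after ["The", "weather", "is"].
NEXT_WORD = { "sunny" => 0.5, "rainy" => 0.2, "duck" => 0.0 }.freeze

# Greedy decoding: always pick the most probable word.
def greedy(dist)
  dist.max_by { |_word, prob| prob }.first
end

# Sampling: pick a word with probability proportional to its weight.
def sample(dist, rng = Random.new)
  r = rng.rand * dist.values.sum
  dist.each do |word, prob|
    return word if r < prob
    r -= prob
  end
  # Floating-point leftovers: fall back to the last word that can occur.
  dist.reject { |_word, prob| prob.zero? }.keys.last
end

puts greedy(NEXT_WORD) # prints sunny
rng = Random.new(42)
puts Array.new(5) { sample(NEXT_WORD, rng) }.inspect
```

Repeated calls to `sample` can return different words. This is the probabilistic generation mentioned under "Considerations for LLMs", and it is why an LLM-based tool may produce different RBS on different runs.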

"Large" Language Model

The pre-training data size and the model size are both "large".

Pre-training: (non-large) LM → Large Language Model

Considerations for LLMs

- Because LLMs are a type of language model, they generate text probabilistically.
- The inference process is opaque
- They sometimes output "plausible nonsense" (hallucinations)

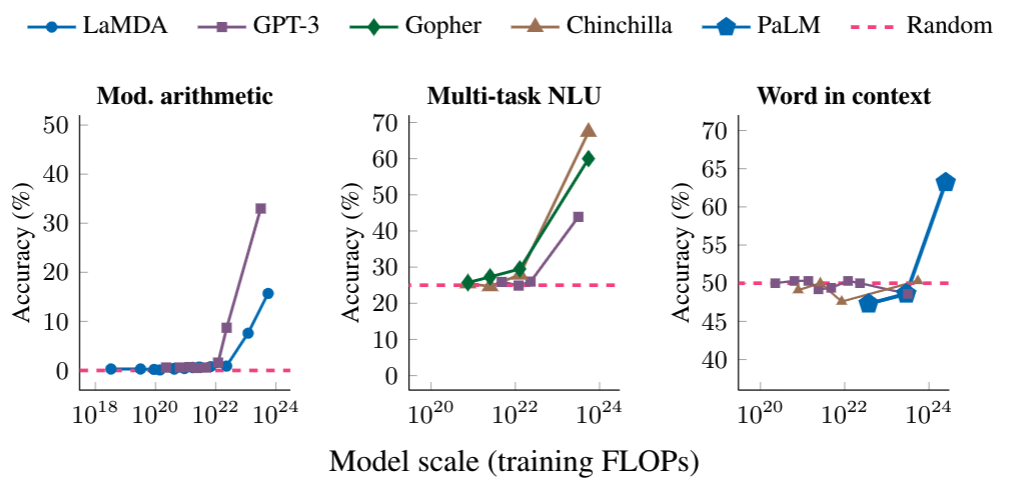

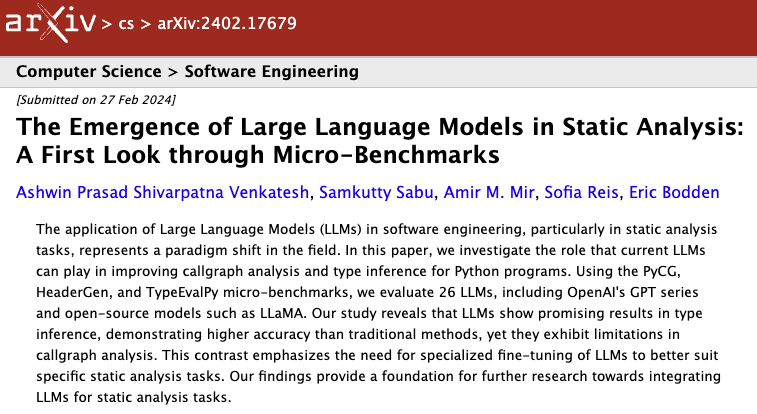

Papers Found

- Found many previous papers
- Today I pick up two papers
  - Measuring the type inference capability of LLMs
  - Improvement with Chain of Thought prompts

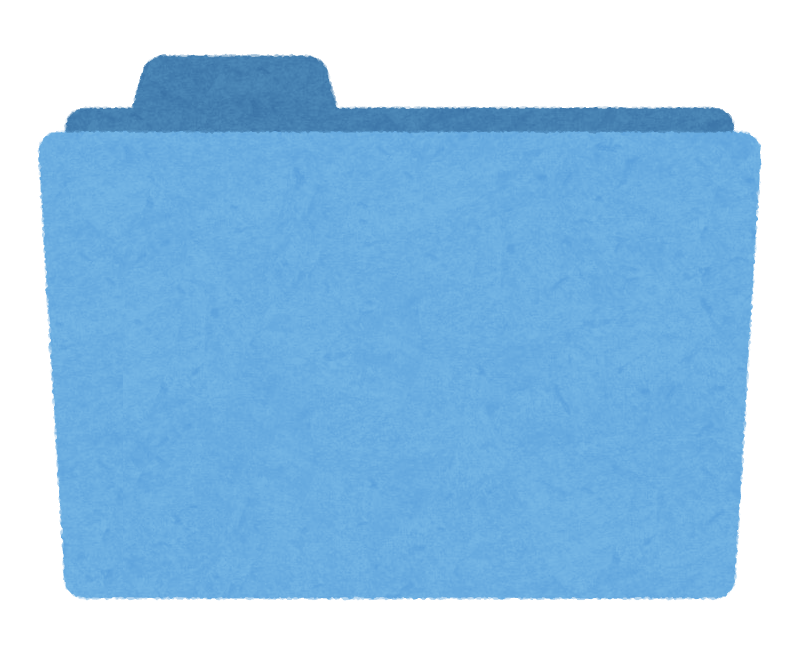

Paper 1. Measured the type inference capability

Ashwin Prasad Shivarpatna Venkatesh,

The Emergence of Large Language Models in Static Analysis: A First Look through Micro-Benchmarks, 2024, ICSE FORGE 2024

Paper 1. Abstract

- Evaluates LLM type inference accuracy in Python
  - LLMs showed higher accuracy than traditional methods
- TypeEvalPy is used as the type inference micro-benchmark
  - Inferred types are compared with the ground-truth data
    (function return types, function parameter types, local variable types)

Paper 1. Prompt

Simple few-shot prompt
Input: Python code
Output: JSON

You will be provided with the following information:
1. Python code. The sample is delimited with triple backticks.
2. Sample JSON containing type inference information for the Python code in
a specific format.
3. Examples of Python code and their inferred types. The examples are delimited
with triple backticks. These examples are to be used as training data.

Perform the following tasks:
1. Infer the types of various Python elements like function parameters, local
variables, and function return types according to the given JSON format with
the highest probability.
2. Provide your response in a valid JSON array of objects according to the
training sample given. Do not provide any additional information except the JSON object.

Python code:
```
def id_func ( arg ):
    x = arg
    return x

result = id_func (" String ")
result = id_func (1)
```
inferred types in JSON:
[
  {
    "file": "simple_code.py",
    "function": "id_func",
    "line_number": 1,
    "type": [
      "int",
      "str"
    ]
  }, ...

(Parts of the prompt: instruction, Python code, and output format specification (JSON))

Paper 1. Results

- GPT-4: score 775, time 454.54
- Traditional method: score 321, time 18.25

GPT-4 scored about 2.4 times higher, but took about 25 times longer.

Paper 1. What We Can Learn

- Benchmarks for type inference exist, such as TypeEvalPy
  - They provide consistent metrics for type inference capability
  - Ruby also needs benchmarking
- Even a simple prompt can achieve higher inference capability
  - Note that micro-benchmarks favor LLMs
  - Both time and computational costs are high

Paper 2. Improvement with Chain of Thought

Yun Peng, Generative Type Inference for Python, 2023, ASE '23

Paper 2. Abstract

- Chain of Thought (CoT) prompts can be used for type inference
  - Improved by 27% to 84% compared to zero-shot prompts
- ManyTypes4Py is used for training and evaluation
  - Dataset for machine learning-based type inference
  - 80% for training, 20% for evaluation
  - Exact Match and Match Parametric are measured in evaluation

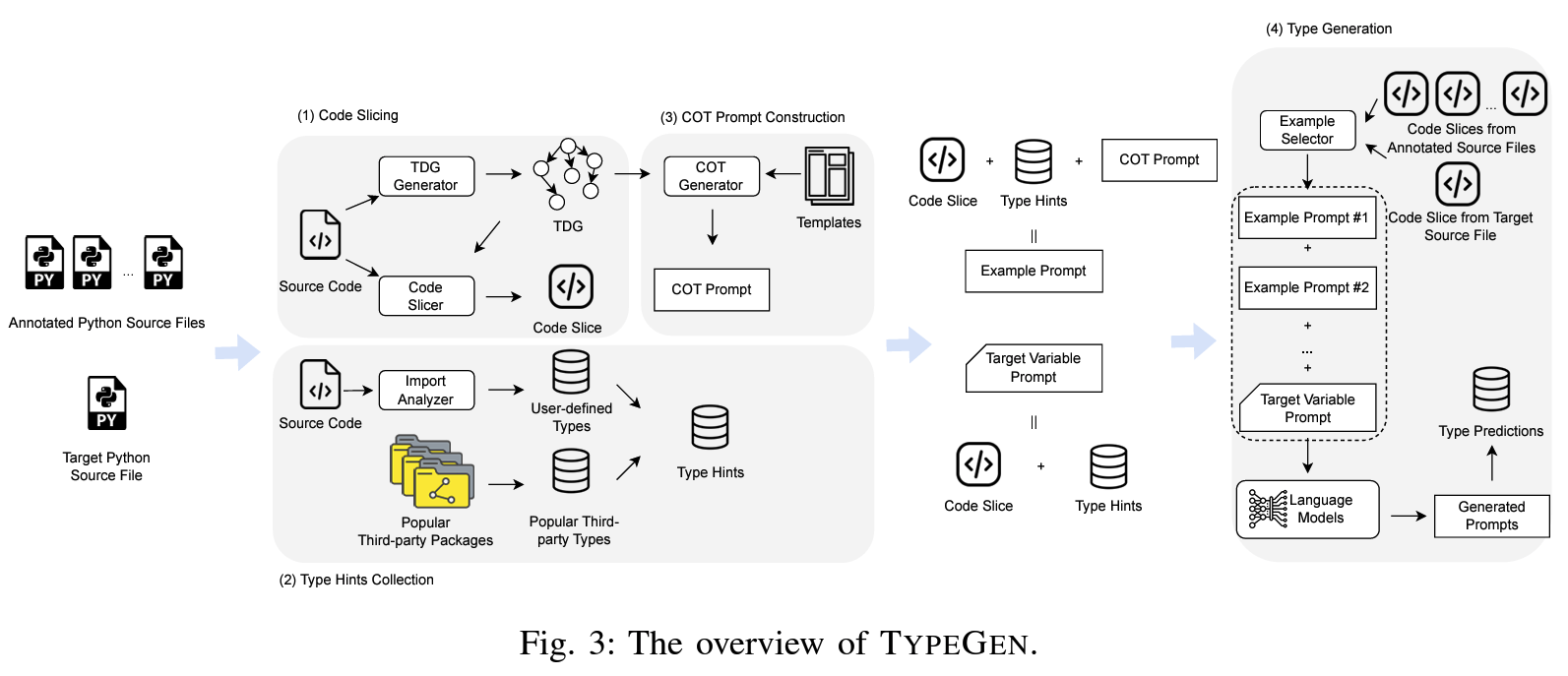

Paper 2. Architecture

- CoT prompt construction
- Training data is used as examples
- Type hints are generated

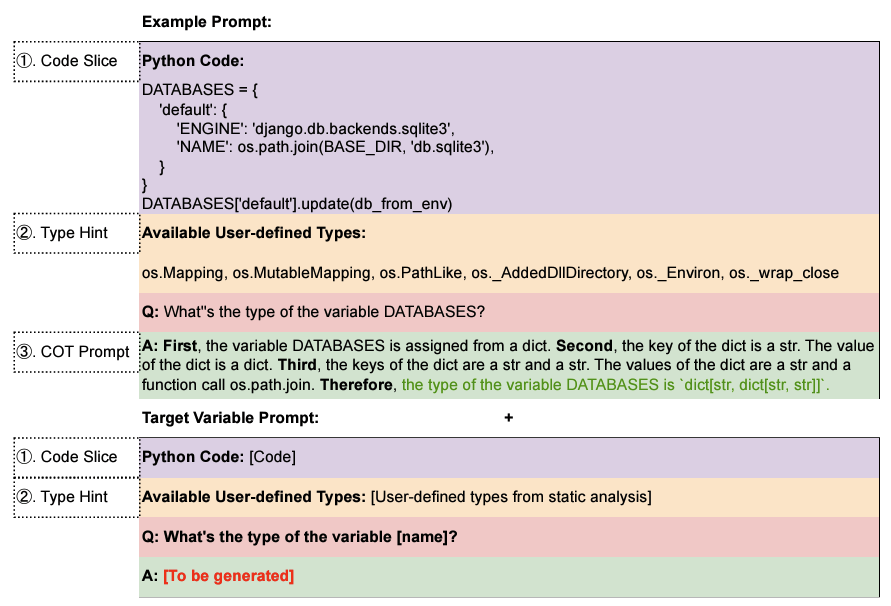

Paper 2. Prompt

Embedded type derivation process with COT

Embedded type hints

Embedded examples

Paper 2. Results

28-29% improvement over zero-shot prompts in ChatGPT

Paper 2. What We Can Learn

- What information is embedded in the prompt is important
  - Add type hints
  - Use chain of thought prompts
- Type data sets like ManyTypes4Py are useful
  - Can be used as prompt examples as well as for evaluation

Prospects for a Type Benchmark - Considerations

- Currently, rbs-inline is under development [*]
  - It allows type descriptions within special inline comments
  - Similar to YARD documentation
- Might need to support it in:
  - RBS Goose input / output
  - Benchmark test data / comparator
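The small example below shows the inline style. It is based on rbs-inline's annotation syntax: the `# rbs_inline: enabled` magic comment, `# @rbs` parameter and return annotations, and `#:` type comments. The project is still evolving, so treat the exact syntax as an assumption to verify against the version in use. The annotations are ordinary comments, so the file runs as plain Ruby. `Order` is a hypothetical class.

```ruby
# rbs_inline: enabled

class Order
  attr_reader :quantity #: Integer

  # @rbs quantity: Integer
  # @rbs return: void
  def initialize(quantity:)
    @quantity = quantity
  end

  #: (Integer) -> Integer
  def add(count)
    @quantity += count
  end
end

order = Order.new(quantity: 2)
puts order.add(3) # prints 5
```

With types living in comments next to the code, a benchmark's expected types and a tool's inferred types could both be stored in the Ruby files themselves. The comparator would then need to read these comments instead of separate sig/*.rbs files.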

Do LLMs dream of Type Inference?

By 黒曜

Session of RubyConf 2024