Text Generation

The past, the present, and the future

Richard Rohla | Laurens ten Cate | Alex Kyalo | Gianluca Sabbatucci | Asier Sarasua | Kamal Nandan | Veronique Wang

WTF is text generation

NLG means generating natural language from a model

Very challenging computational task:

- grammatical complexity

- ambiguity

NLG aims to find an underlying 'language model' from which we can sample to generate text

Early approaches were largely heuristic-based

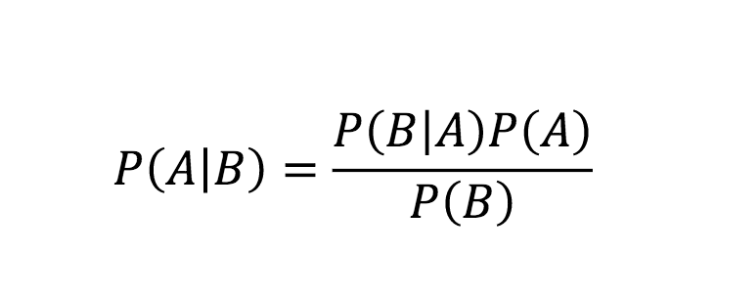

Probabilistic language models

Probabilistic language models assign a probability to each candidate for the next part of the sequence.

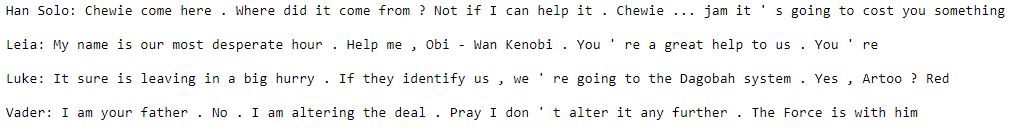

Conditional Frequency Distribution model
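
A conditional frequency distribution can be sketched in a few lines: count which word follows which, normalise the counts into probabilities, and sample. This is a minimal bigram illustration on a toy corpus, not the model used in the talk; `build_cfd`, `next_word_probs`, and `generate` are hypothetical helper names.

```python
import random
from collections import Counter, defaultdict


def build_cfd(tokens):
    """Count, for every word, how often each other word follows it."""
    cfd = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        cfd[prev][nxt] += 1
    return cfd


def next_word_probs(cfd, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    counts = cfd.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def generate(cfd, start, length, seed=0):
    """Sample a sequence by repeatedly drawing the next word from the CFD."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length - 1):
        counts = cfd.get(words[-1])
        if not counts:  # dead end: this word was never followed by anything
            break
        candidates, weights = zip(*counts.items())
        words.append(rng.choices(candidates, weights=weights)[0])
    return words


# Toy corpus (an assumption for illustration only).
corpus = "the cat sat on the mat and the cat ate".split()
cfd = build_cfd(corpus)
print(next_word_probs(cfd, "the"))  # "cat" gets 2/3, "mat" gets 1/3
print(" ".join(generate(cfd, "the", 8)))
```

Because the model only ever reproduces word pairs it has seen, its output tends to repeat exact fragments of the training text.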

Recurrent Neural Networks

Alex Graves (2013) - Generating Sequences with RNNs

- Good for sequence generation

- Processes sequences one step at a time

- Finds internal representation of sequence instead of exact matches

- Less repetitive generation

- Char vs Word
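
The step-by-step processing and the char vs word choice can be sketched with a tiny vanilla RNN in plain Python. This is only an illustration under loud assumptions: the weights are random and untrained (so the output is gibberish), it is a plain tanh RNN rather than the LSTM from Graves (2013), and all function names are hypothetical.

```python
import math
import random


def init_matrix(rows, cols, rng, scale=0.1):
    """Small random weights; a real model would learn these from data."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def rnn_step(x_index, h, W_xh, W_hh, W_hy):
    """One time step: update the hidden state, return next-symbol probabilities."""
    hidden = len(h)
    # The input is one-hot, so W_xh @ x is just the column for the current symbol.
    new_h = [
        math.tanh(W_xh[i][x_index] + sum(W_hh[i][j] * h[j] for j in range(hidden)))
        for i in range(hidden)
    ]
    logits = [sum(row[i] * new_h[i] for i in range(hidden)) for row in W_hy]
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return new_h, [e / total for e in exps]


def sample_sequence(vocab, start, length, hidden=8, seed=0):
    """Generate `length` symbols, feeding each sampled symbol back in as input."""
    rng = random.Random(seed)
    size = len(vocab)
    W_xh = init_matrix(hidden, size, rng)
    W_hh = init_matrix(hidden, hidden, rng)
    W_hy = init_matrix(size, hidden, rng)
    h = [0.0] * hidden
    idx = vocab.index(start)
    out = [start]
    for _ in range(length - 1):
        h, probs = rnn_step(idx, h, W_xh, W_hh, W_hy)
        idx = rng.choices(range(size), weights=probs)[0]
        out.append(vocab[idx])
    return out


text = "hello world hello there"
char_vocab = sorted(set(text))          # small vocabulary, long sequences
word_vocab = sorted(set(text.split()))  # larger vocabulary, short sequences
print("".join(sample_sequence(char_vocab, "h", 20)))
print(" ".join(sample_sequence(word_vocab, "hello", 5)))
```

The same loop serves both variants; only the vocabulary changes. A character model has few symbols but must learn spelling, while a word model never misspells but needs a much larger output layer.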

The future (GANs)

Generative Adversarial Networks

- Discreteness of text data causes problems with back-propagation of error

- SeqGAN: policy gradients + Monte Carlo search

- We switched to VAEs
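
Why discreteness hurts back-propagation can be seen numerically. In this sketch, which is an illustration and not SeqGAN itself, a small nudge to the generator's logits leaves the emitted token unchanged, so the discriminator's score has zero gradient; a soft expectation over the token distribution does respond. The `token_scores` values are a made-up stand-in for a discriminator.

```python
import math


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def hard_token(logits):
    """Discrete choice: index of the most likely token (what a generator emits)."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)


def soft_score(logits, token_scores):
    """Differentiable stand-in: expected score under the softmax distribution."""
    return sum(p * s for p, s in zip(softmax(logits), token_scores))


def finite_difference(f, logits, i, eps=1e-4):
    """Numerical estimate of d f / d logits[i]."""
    bumped = list(logits)
    bumped[i] += eps
    return (f(bumped) - f(logits)) / eps


logits = [2.0, 1.0, 0.5]
token_scores = [0.1, 0.9, 0.4]  # hypothetical discriminator score per token


def hard(values):
    return token_scores[hard_token(values)]


def soft(values):
    return soft_score(values, token_scores)


print(finite_difference(hard, logits, 1))  # 0.0: the chosen token does not change
print(finite_difference(soft, logits, 1))  # non-zero: probabilities shift smoothly
```

Policy-gradient methods sidestep the zero gradient by treating the discriminator's score as a reward for sampled tokens instead of differentiating through the sampling step.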

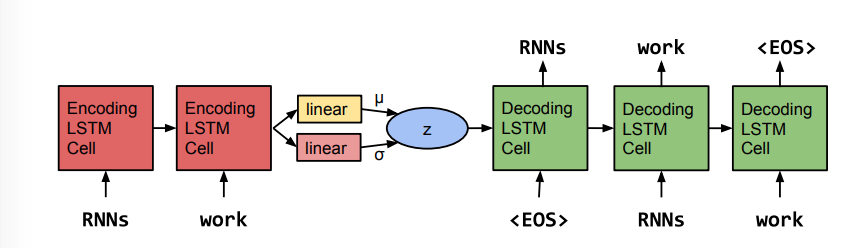

VAE

S. Bowman (2016) - Generating Sentences from a Continuous Space

Variational Auto-Encoder

Auto-encoders encode data into a lower-dimensional (n-dimensional) space from which the original data can be decoded

A VAE encodes data not as discrete points but as soft ellipsoidal regions in this n-dimensional space
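
The "region instead of a point" idea can be sketched as follows, assuming the usual diagonal-Gaussian formulation: the encoder outputs a mean and a log-variance per latent dimension, a latent code is sampled around the mean, and a KL term pulls each region towards a standard normal. The values of `mu` and `log_var` below are made up; in a real model they come from the encoder network.

```python
import math
import random


def sample_latent(mu, log_var, rng):
    """Reparameterisation: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, I) ) for a diagonal Gaussian."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


rng = random.Random(0)
mu = [0.5, -1.0]        # centre of the region for one sentence (hypothetical)
log_var = [-2.0, -1.0]  # log of the per-dimension variance (hypothetical)

# Each call gives a different point inside the same soft region.
for _ in range(3):
    print(sample_latent(mu, log_var, rng))

print(kl_to_standard_normal(mu, log_var))
```

Because every sentence occupies a region, nearby points in latent space tend to decode to similar sentences, which is what makes interpolating between two sentences possible.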

Demo

Three models:

- CHAR LSTM

- WORD LSTM

- VAE

nlp2

By laurenstc
