Games always change

Liad Yosef

Client Architect @ Duda

Pixels and Robots

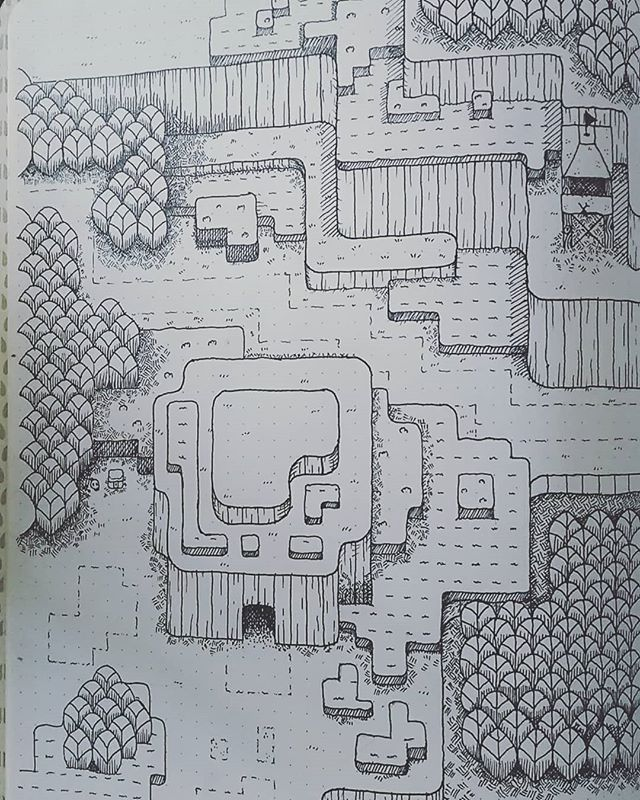

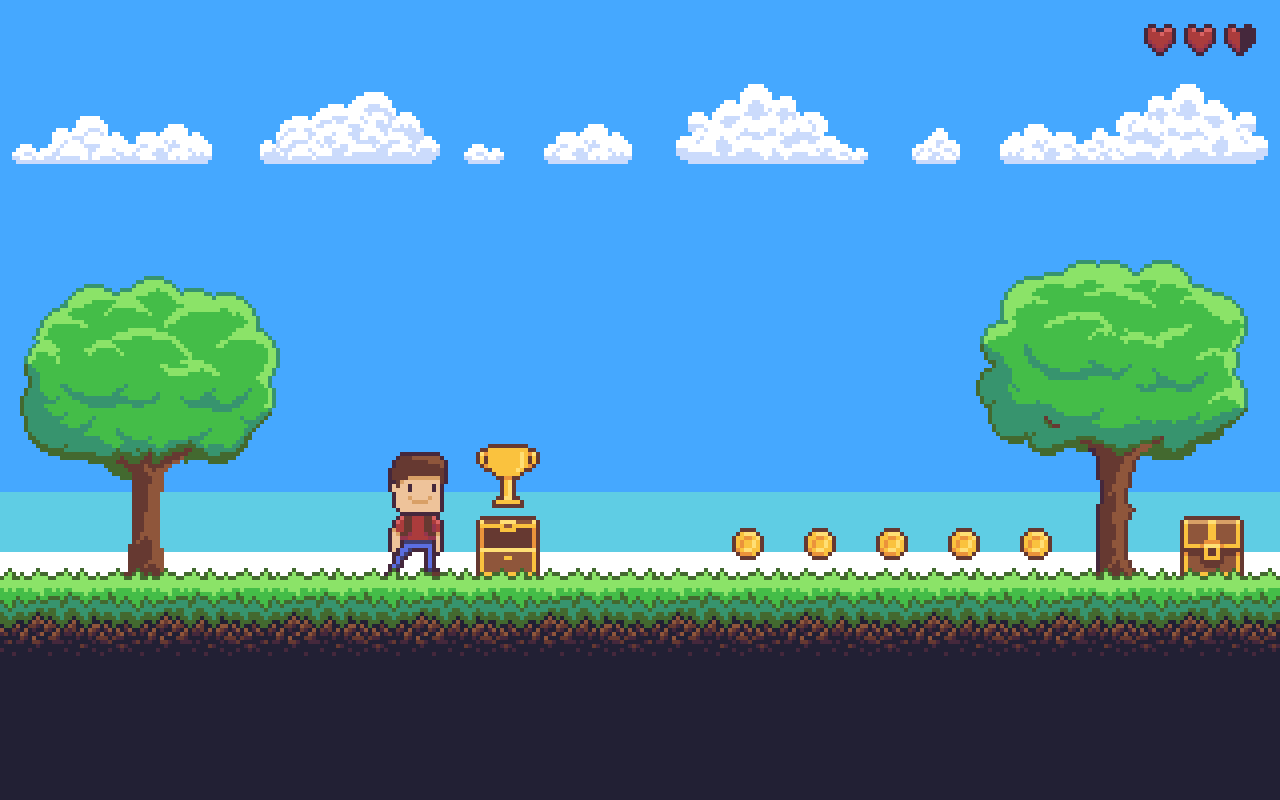

Village of Boredom

Chapter 1

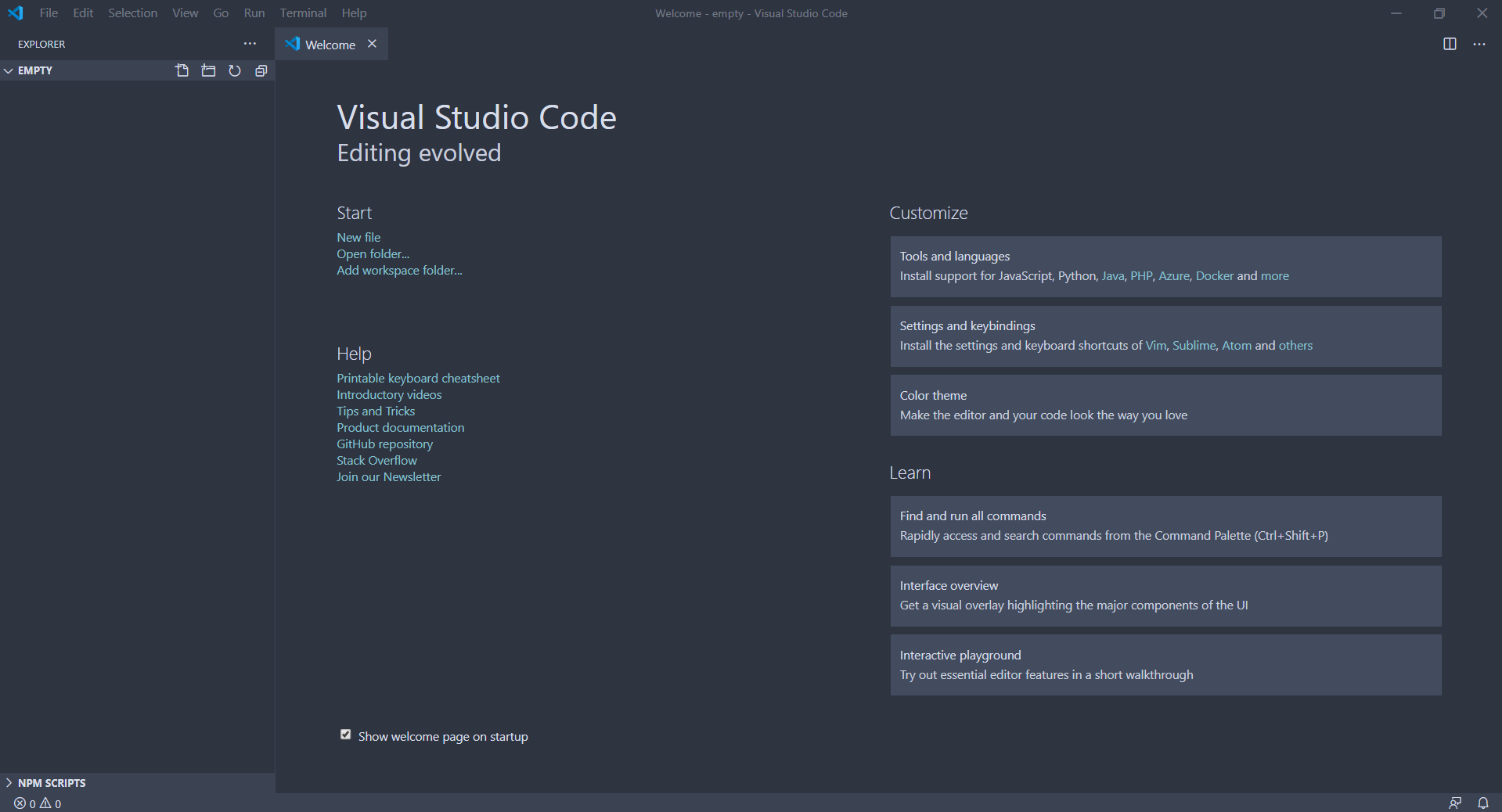

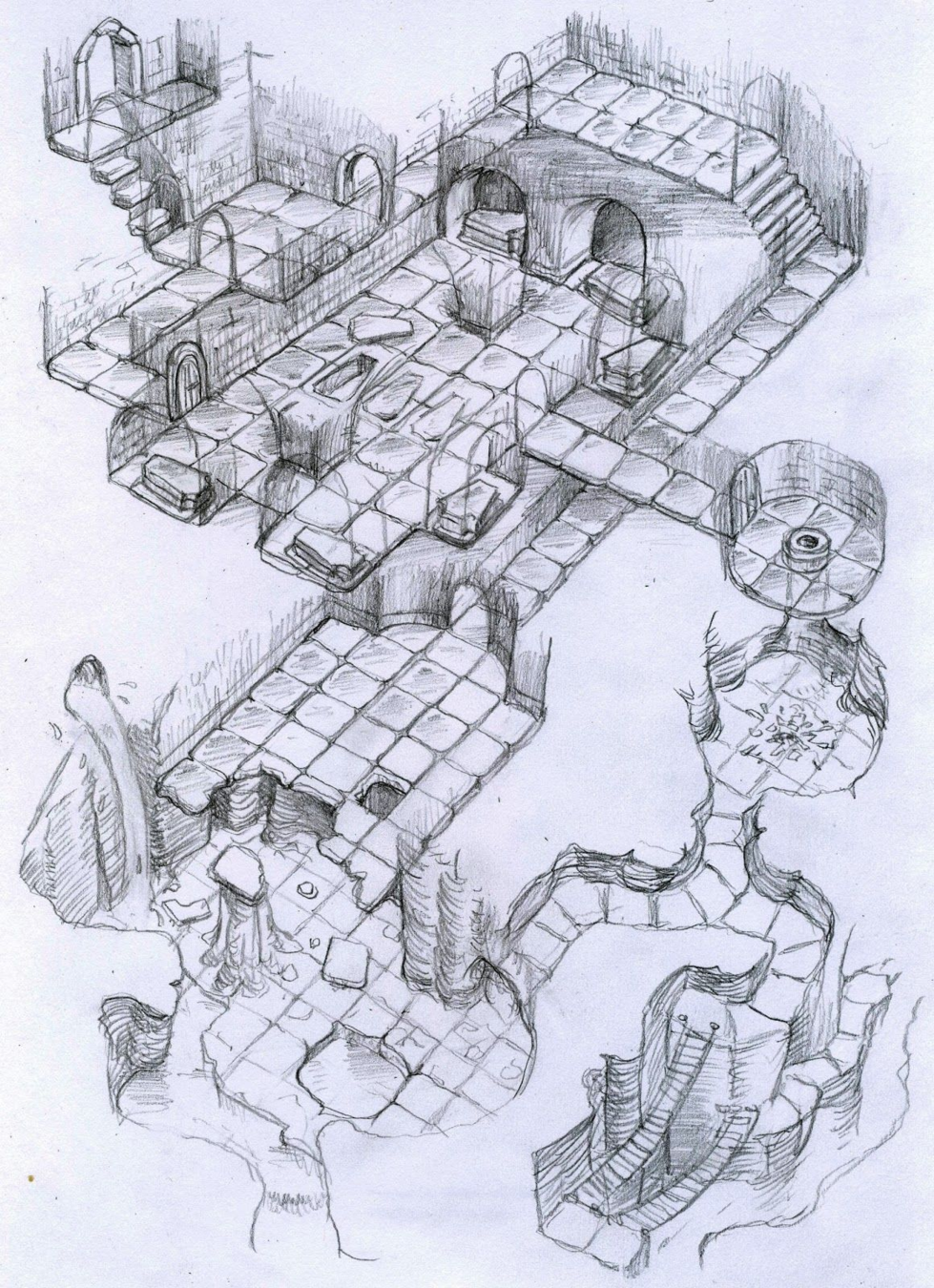

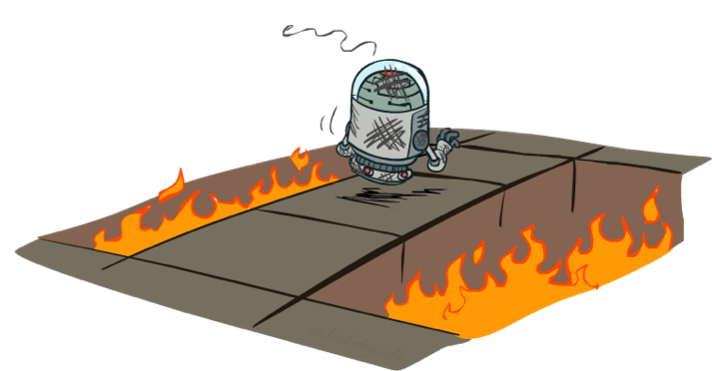

Pit of Despair

Chapter 1a

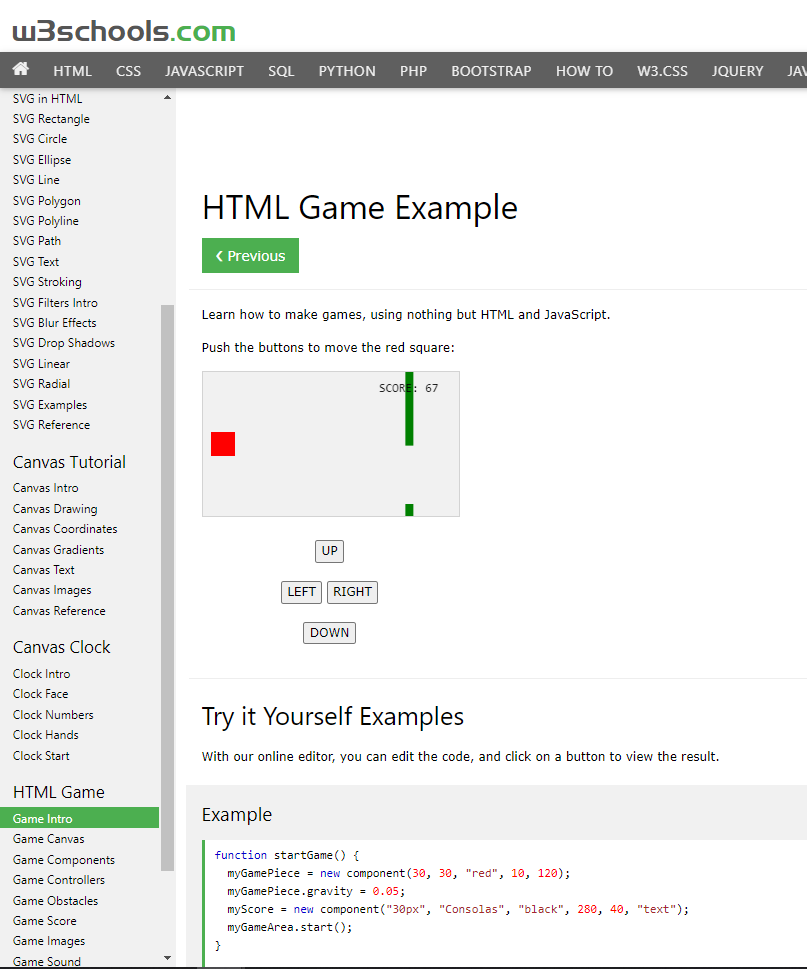

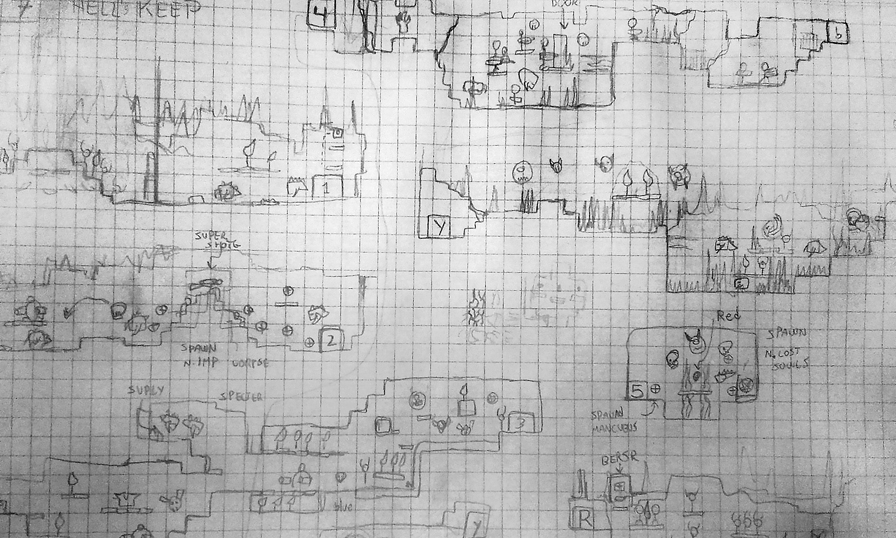

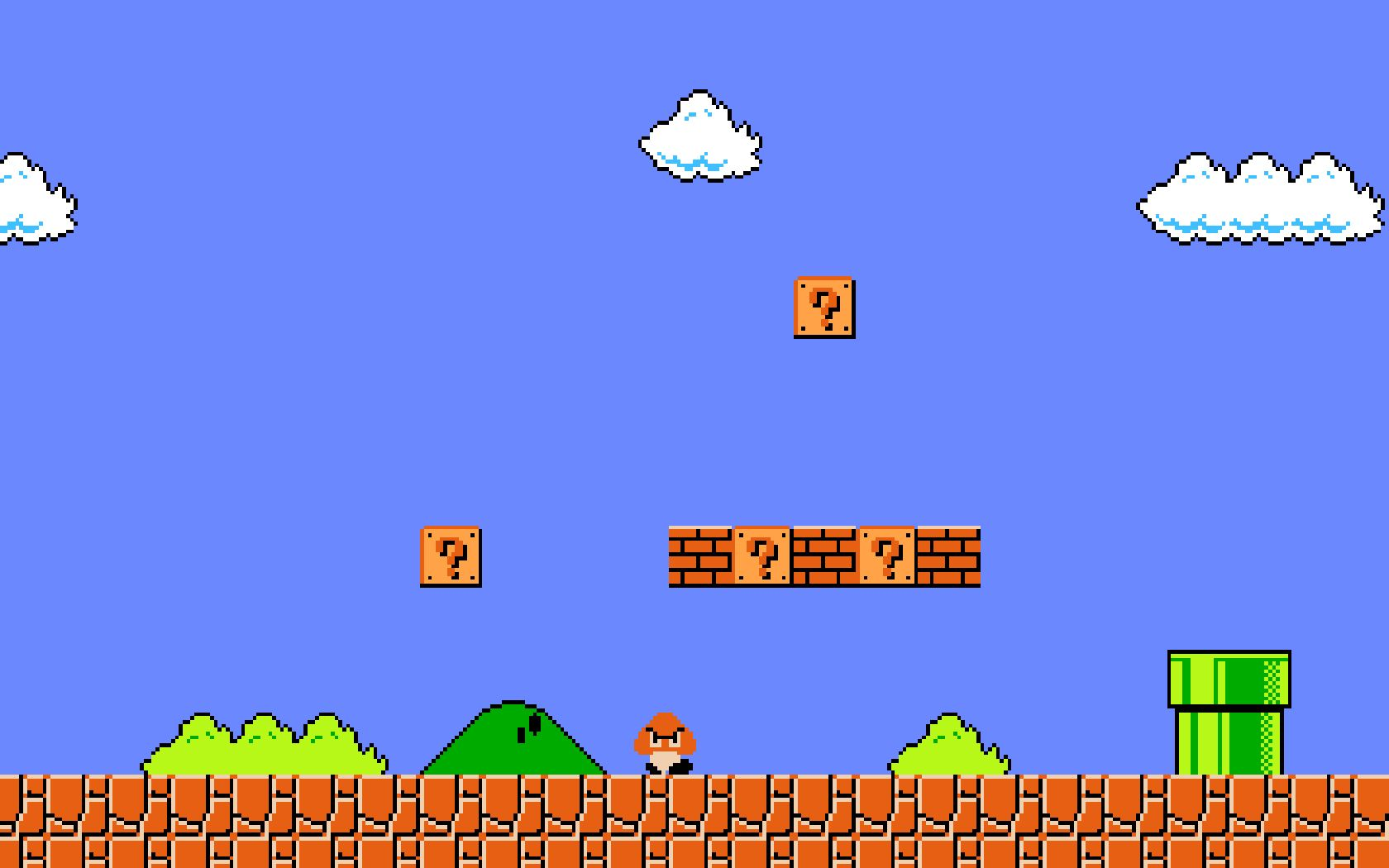

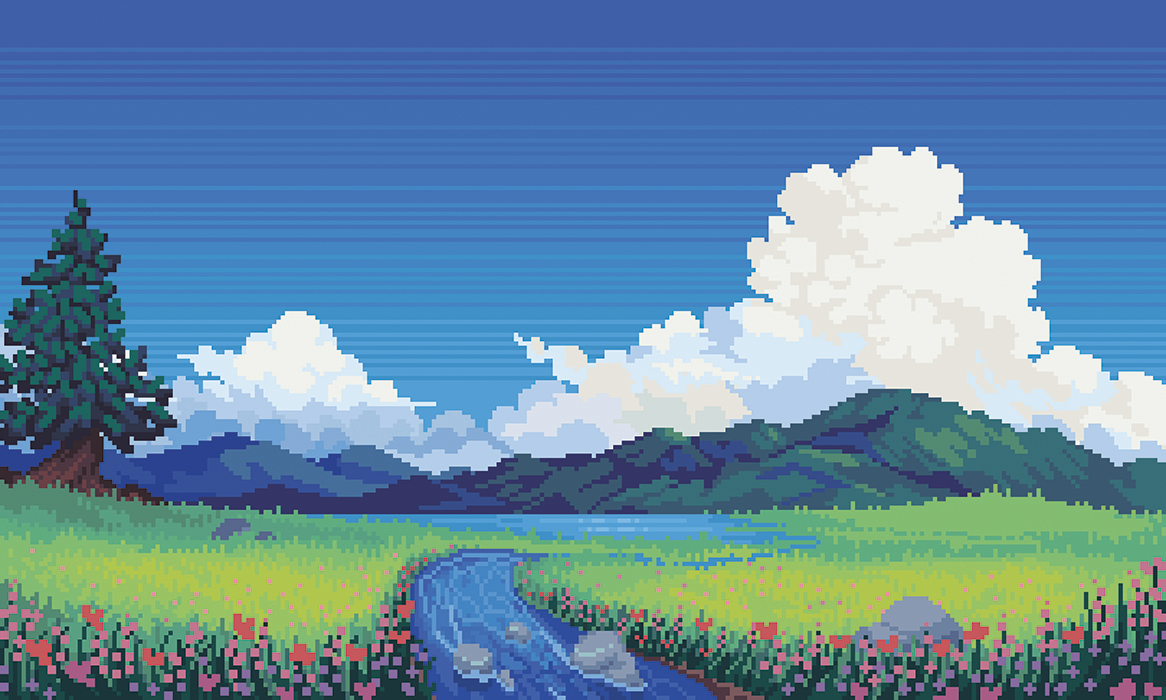

Valley of Pixels

Chapter 2

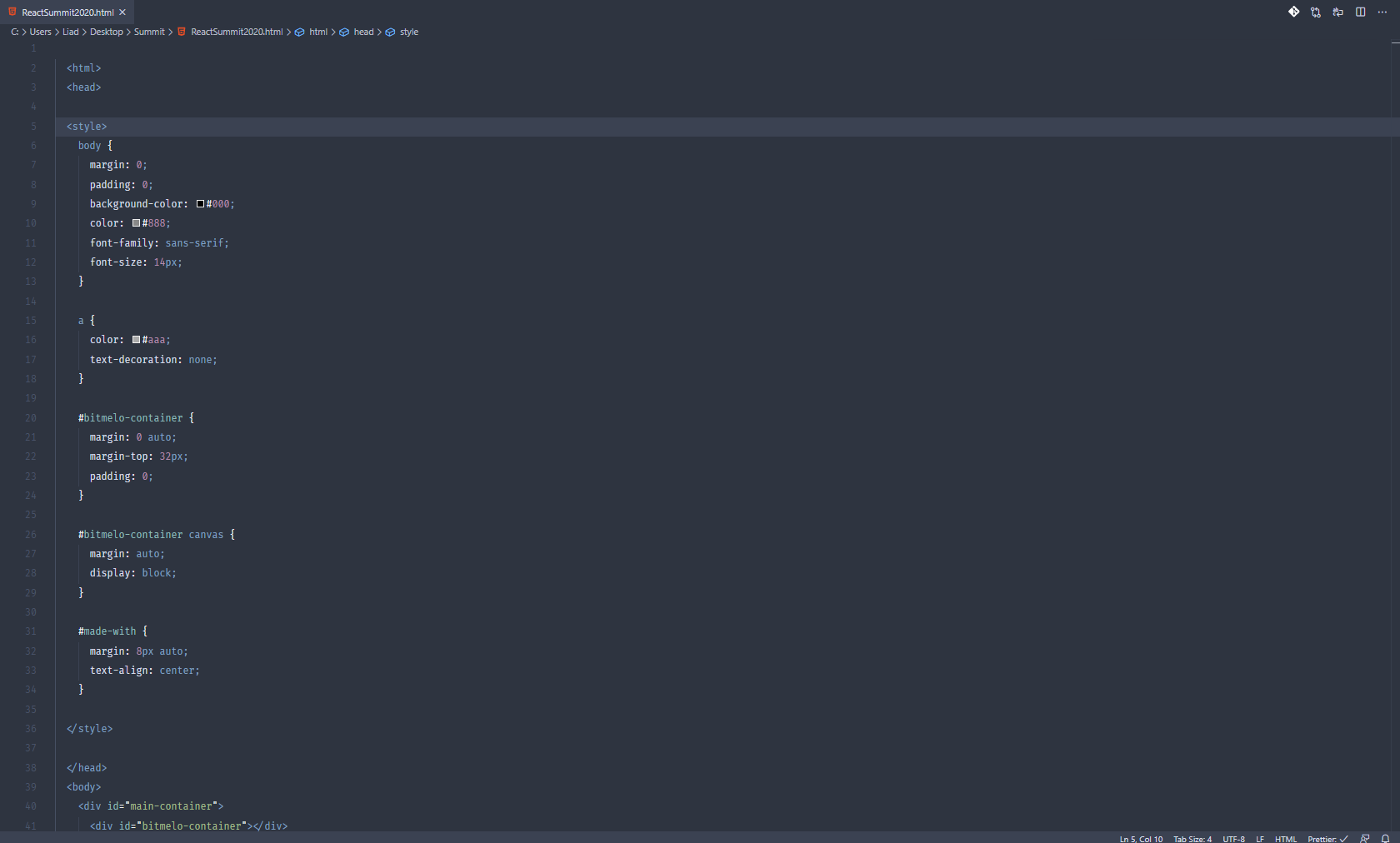

Game Graphics

```html
<body>
  ...
  <canvas></canvas>
  ...
</body>
```

```javascript
const canvas = document.querySelector("canvas");
const context = canvas.getContext("2d");

// later - draw on canvas
// fill the whole 1220x400 area with the background color
context.fillStyle = "#201A23";
context.fillRect(0, 0, 1220, 400);

// draw a game object (`square` has x, y, width and height)
context.fillStyle = "#8DAA9D";
context.beginPath();
context.rect(square.x, square.y, square.width, square.height);
context.fill();
```

Game Elements

```javascript
const player = {
  x: 30,
  y: 90,
  running: false,
  health: 5
};

const enemy = {
  x: 10,
  y: 60,
  velocity: 0.5
};
```

Game Controls

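```javascript
const keys = {};

// Declare the handlers before registering them:
// `const` bindings cannot be used before their declaration.
const onKeyDown = (event) => registerKey(event.keyCode, true);
const onKeyUp = (event) => registerKey(event.keyCode, false);

window.addEventListener("keydown", onKeyDown);
window.addEventListener("keyup", onKeyUp);

// keyCode is deprecated; event.key ("ArrowLeft", ...) is the modern equivalent.
function registerKey(keyCode, isPressed) {
  switch (keyCode) {
    case 37: // left arrow
      keys.left = isPressed;
      break; // without `break`, cases fall through and set every direction
    case 38: // up arrow
      keys.up = isPressed;
      break;
    case 39: // right arrow
      keys.right = isPressed;
      break;
    case 40: // down arrow
      keys.down = isPressed;
      break;
  }
}
```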
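An alternative to the `switch` in `registerKey` is a lookup table from key name to direction. It sidesteps fall-through entirely and reads `event.key`, the non-deprecated property. This is a sketch; the `keyMap` table and the `applyKey` name are illustrative, not from the talk.

```javascript
// Map from KeyboardEvent.key values to the direction flags the game reads.
const keyMap = new Map([
  ["ArrowLeft", "left"],
  ["ArrowUp", "up"],
  ["ArrowRight", "right"],
  ["ArrowDown", "down"],
]);

// Set or clear one direction flag; keys not in the map are ignored.
function applyKey(keys, key, isPressed) {
  const direction = keyMap.get(key);
  if (direction !== undefined) {
    keys[direction] = isPressed;
  }
  return keys;
}

// Usage in the browser (keys is the shared state object):
// window.addEventListener("keydown", (event) => applyKey(keys, event.key, true));
// window.addEventListener("keyup", (event) => applyKey(keys, event.key, false));
```

Using a `Map` rather than a plain object keeps inherited names such as `"toString"` from being mistaken for directions.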

Game Logic

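```javascript
// runs once per frame, inside the game loop

// update velocity from input (negative x moves left)
if (keys.left) {
  batman.velocityX = -0.5;
}
if (keys.down) {
  batman.velocityY = 0.5;
}

// move
batman.x += batman.velocityX;
batman.y += batman.velocityY;

// keep Batman inside the screen
if (batman.y >= screenHeight) {
  batman.y = screenHeight;
}

// collisions
if (batman.x === superman.x) {
  batman.lives -= 1;
}
if (batman.x === heart.x) {
  batman.lives += 1;
}

if (martha) {
  return; // stop the fight: returning before requestAnimationFrame ends the loop
}
```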
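Comparing `x` coordinates with `===` only registers a hit when both objects are at exactly the same x; two objects moving past each other can skip that exact value, and y is ignored. A common alternative is an axis-aligned bounding-box (AABB) test. This sketch assumes each object has `x`, `y` (top-left corner), `width` and `height`, like the `square` drawn on the canvas; the sizes below are made up.

```javascript
// Axis-aligned bounding-box overlap test.
// Each object needs x, y (top-left corner), width and height.
function collides(a, b) {
  return (
    a.x < b.x + b.width &&
    a.x + a.width > b.x &&
    a.y < b.y + b.height &&
    a.y + a.height > b.y
  );
}

const batman = { x: 10, y: 10, width: 16, height: 16 };
const superman = { x: 20.5, y: 12, width: 16, height: 16 };
console.log(collides(batman, superman)); // true: the boxes overlap even though x differs
```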

Game Loop

```javascript
function loop() {
  // magic here
  requestAnimationFrame(loop);
}

requestAnimationFrame(loop);
```

```javascript
function loop() {
  // update according to input
  if (keys.left) {
    batman.velocityX = -0.5;
  }
  batman.x += batman.velocityX;
  // ...

  // run game logic (collisions, etc.)
  if (batman.x === superman.x) {
    batman.lives -= 1;
  }
  if (batman.x === heart.x) {
    batman.lives += 1;
  }
  // ...

  // draw on canvas
  context.fillStyle = "#8DAA9D";
  context.beginPath(); // start a fresh path, or rects accumulate frame after frame
  context.rect(square.x, square.y, square.width, square.height);
  context.fill();
  // ...

  // schedule the next frame
  requestAnimationFrame(loop);
}

requestAnimationFrame(loop);
```
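The loop above moves Batman a fixed amount per frame, and `requestAnimationFrame` usually fires at the display's refresh rate, so the game runs faster on a 120 Hz screen than on a 60 Hz one. A common refinement is to scale movement by the time since the previous frame, using the millisecond timestamp `requestAnimationFrame` passes to its callback. A sketch, with a hypothetical `speedPerSecond` field and the update kept as a pure function so it can be tested:

```javascript
// Pure update: move by speed (pixels per second) scaled by elapsed time.
function step(obj, dtMs) {
  const dt = dtMs / 1000; // milliseconds -> seconds
  return { ...obj, x: obj.x + obj.speedPerSecond * dt };
}

let batman = { x: 0, speedPerSecond: 30 };
let lastTimestamp = null;

// requestAnimationFrame calls loop with a timestamp in milliseconds.
function loop(timestamp) {
  // no movement on the first frame, since there is no previous timestamp yet
  const dtMs = lastTimestamp === null ? 0 : timestamp - lastTimestamp;
  lastTimestamp = timestamp;
  batman = step(batman, dtMs);
  // ... collisions and drawing as before
  requestAnimationFrame(loop);
}

// Only start the loop where requestAnimationFrame exists (i.e. in a browser).
if (typeof requestAnimationFrame === "function") {
  requestAnimationFrame(loop);
}
```

With this, Batman covers the same distance per second regardless of how often frames are drawn.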

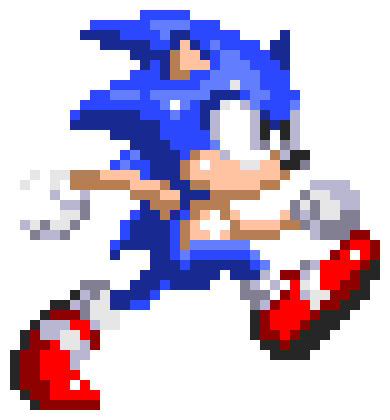

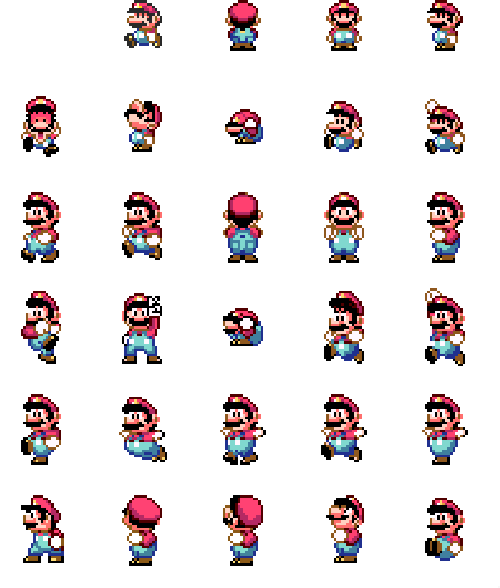

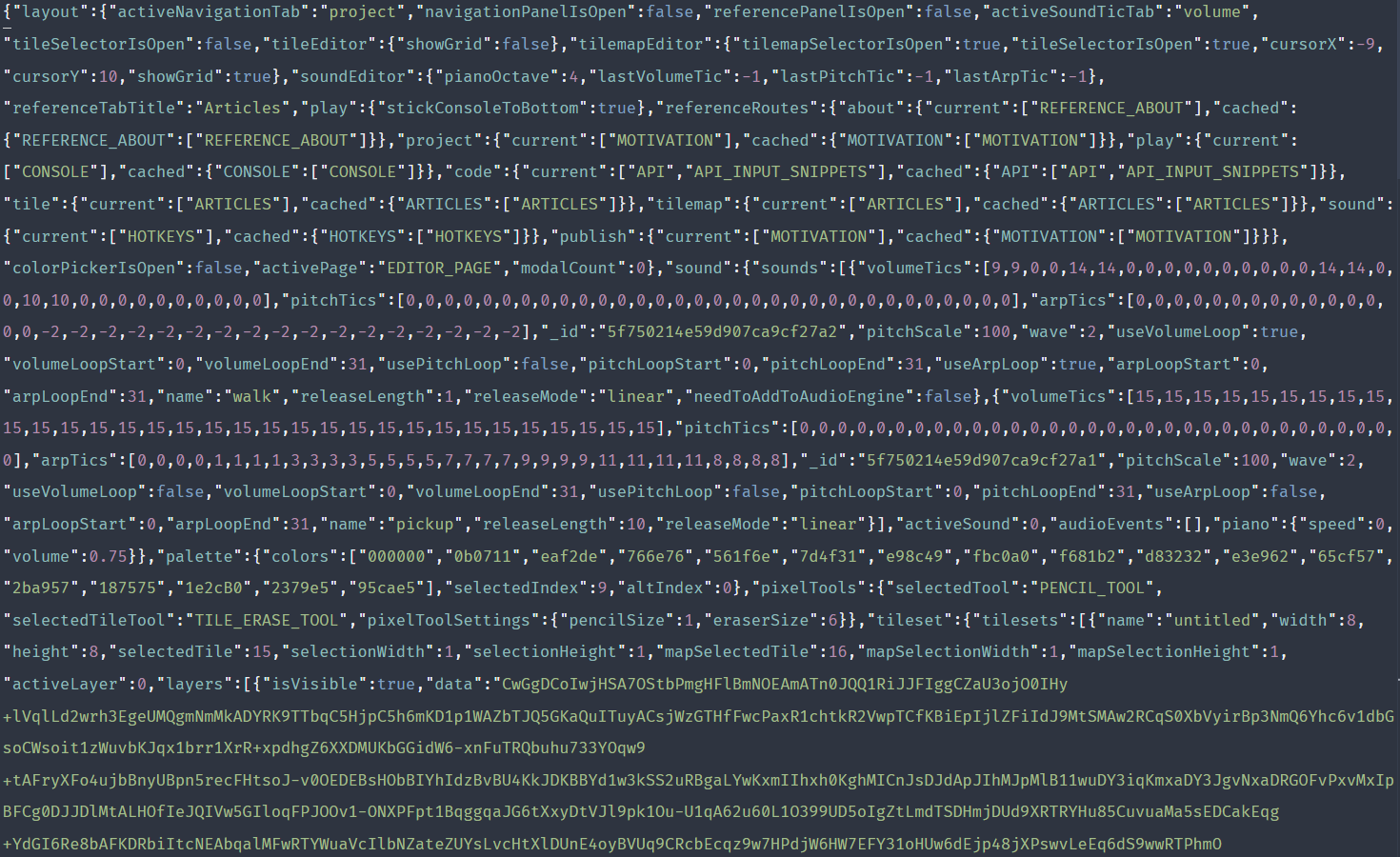

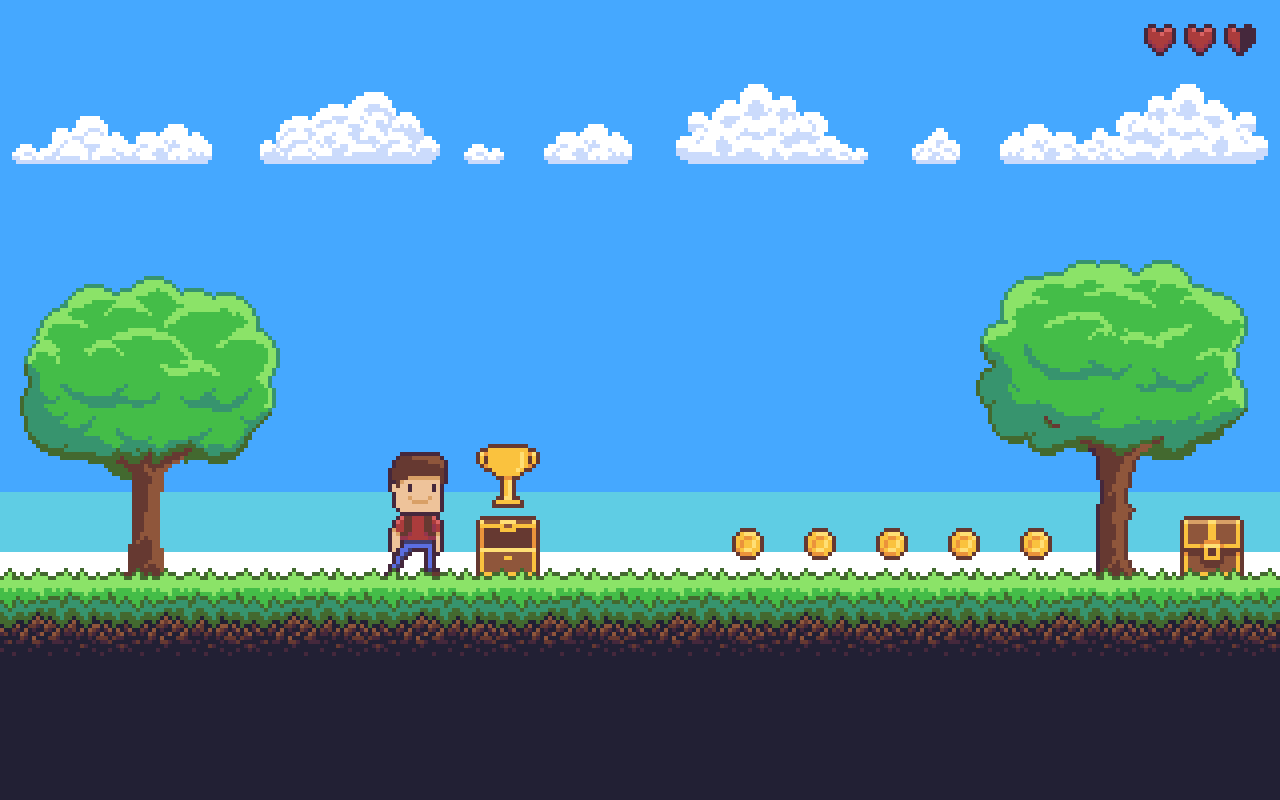

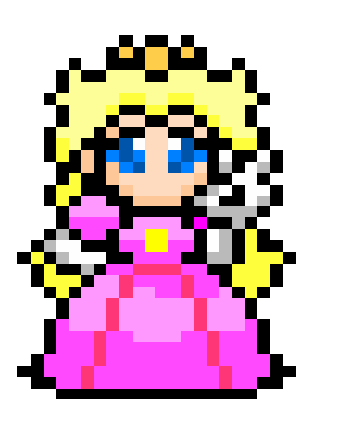

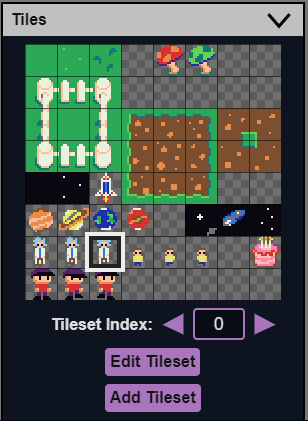

Sprites

Pixel Art

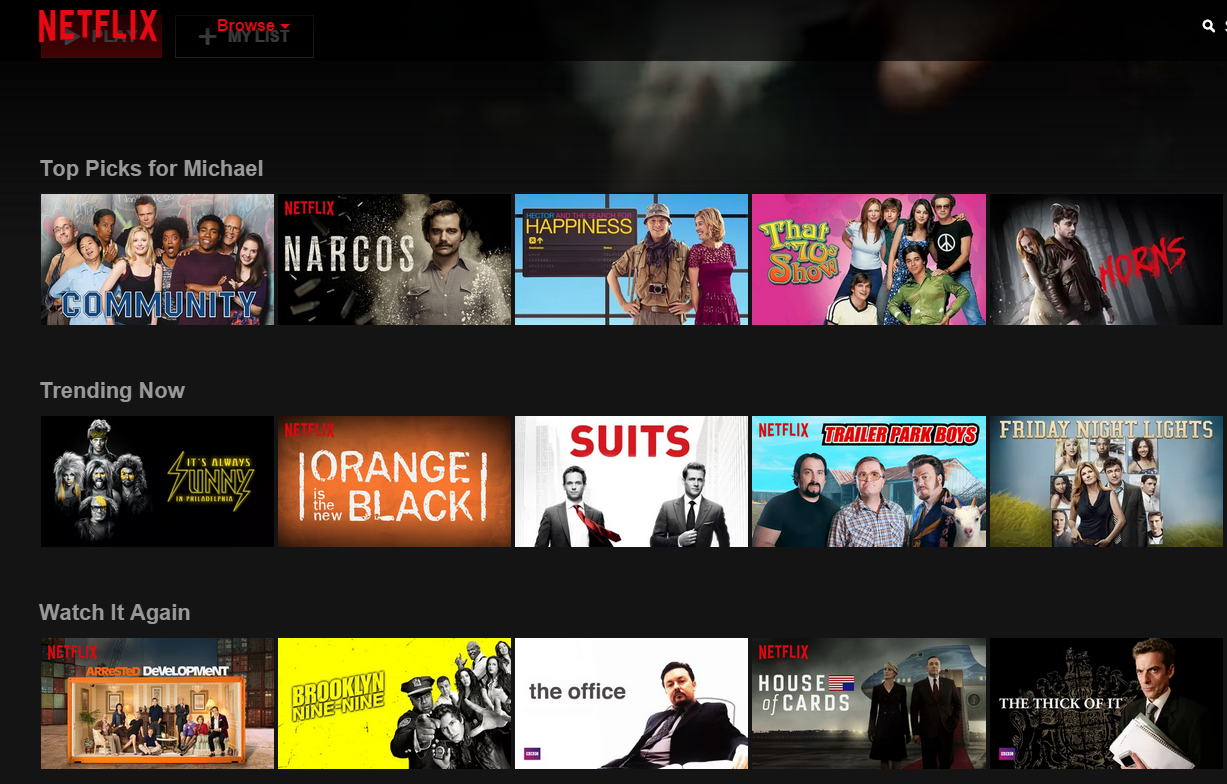

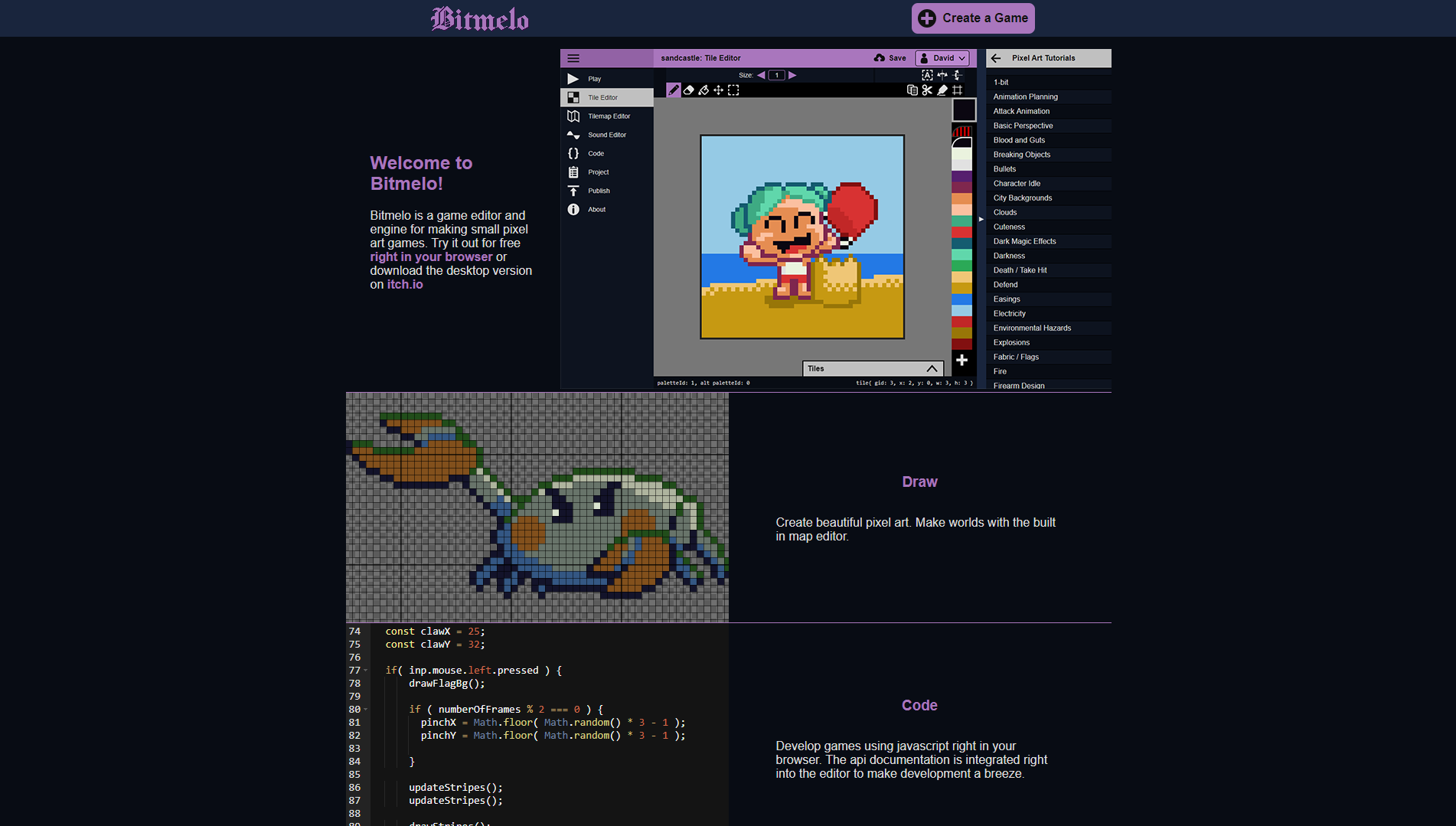

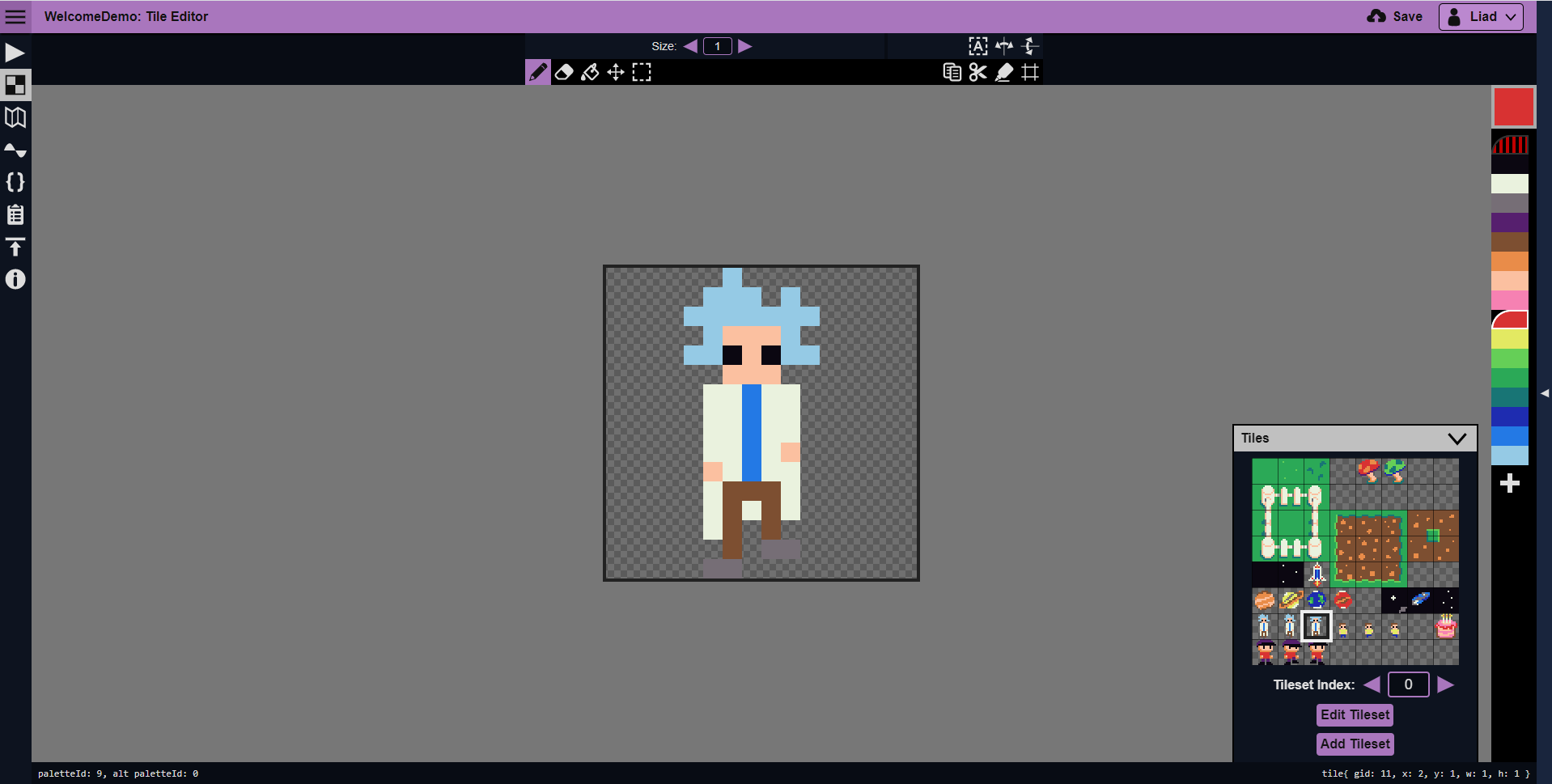

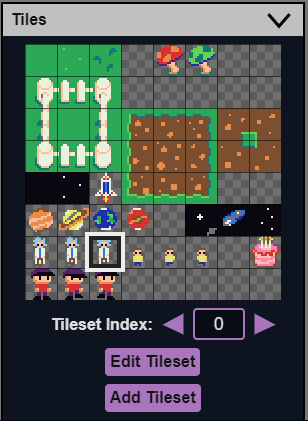

Game Engines to the Rescue!

Meadow of Efficiency

Chapter 3

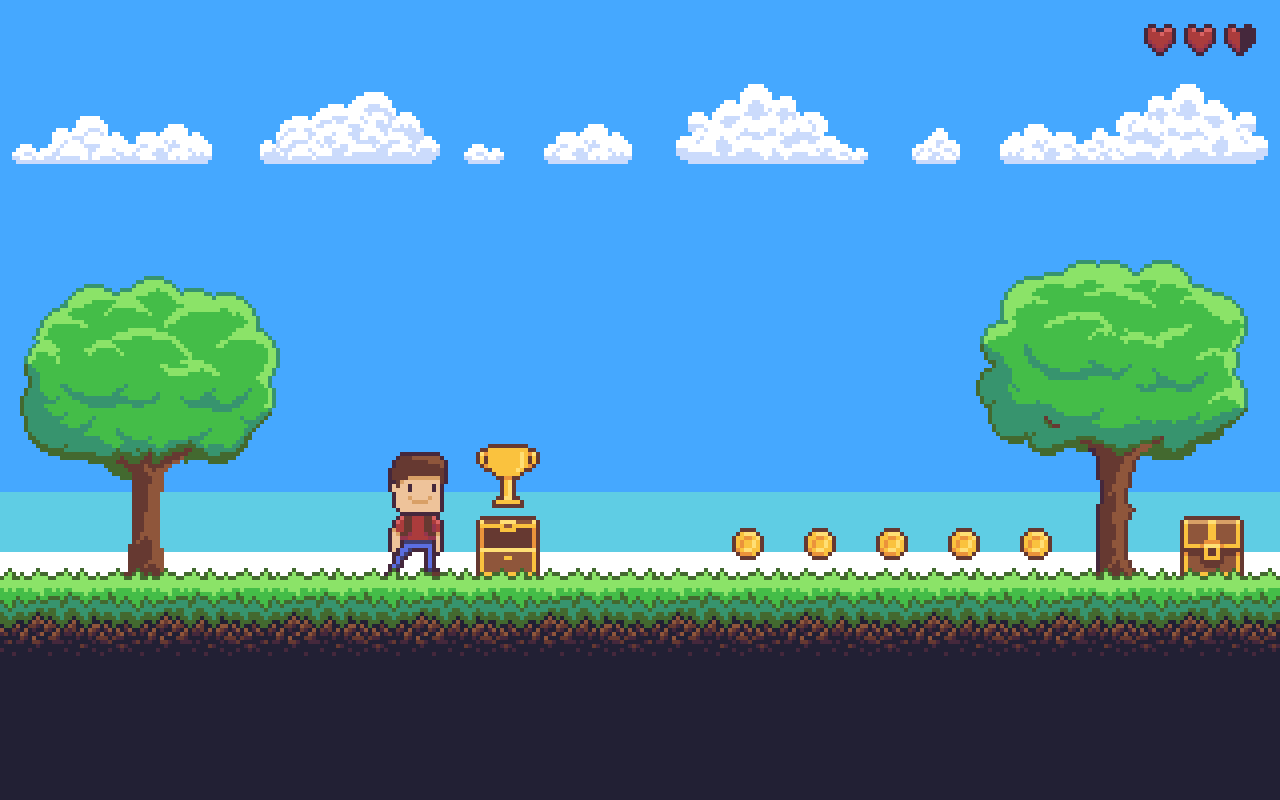

bitmelo.com

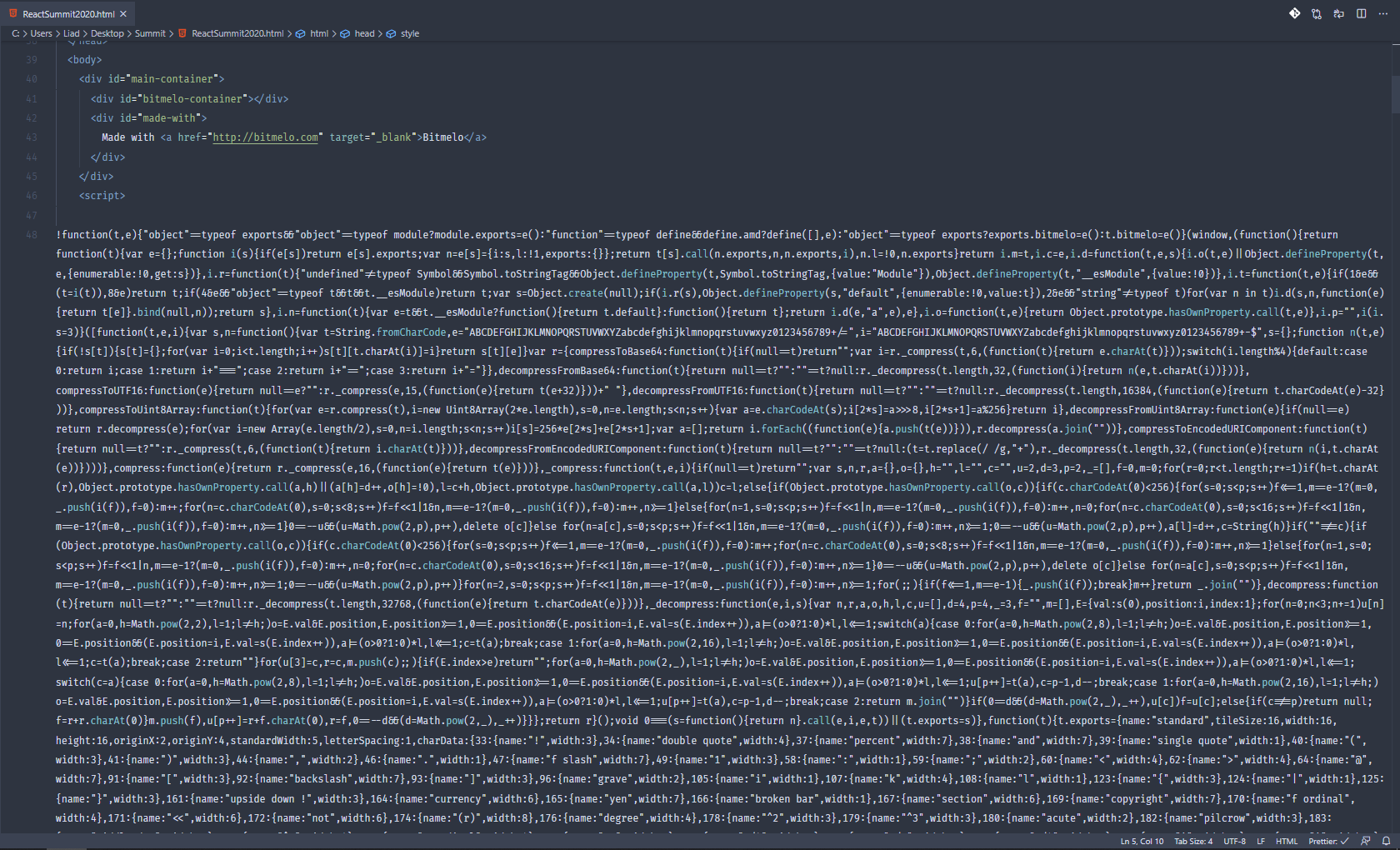

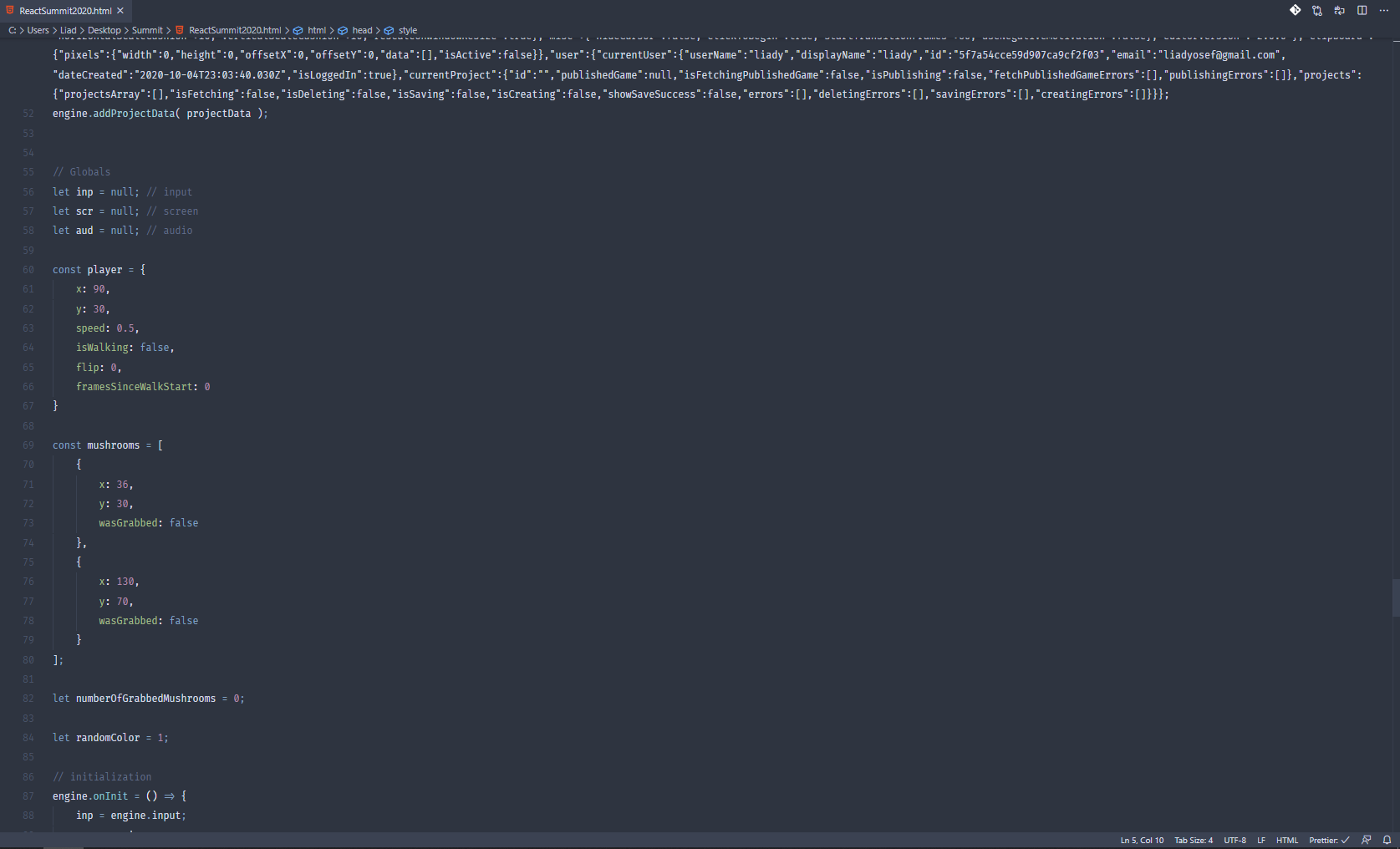

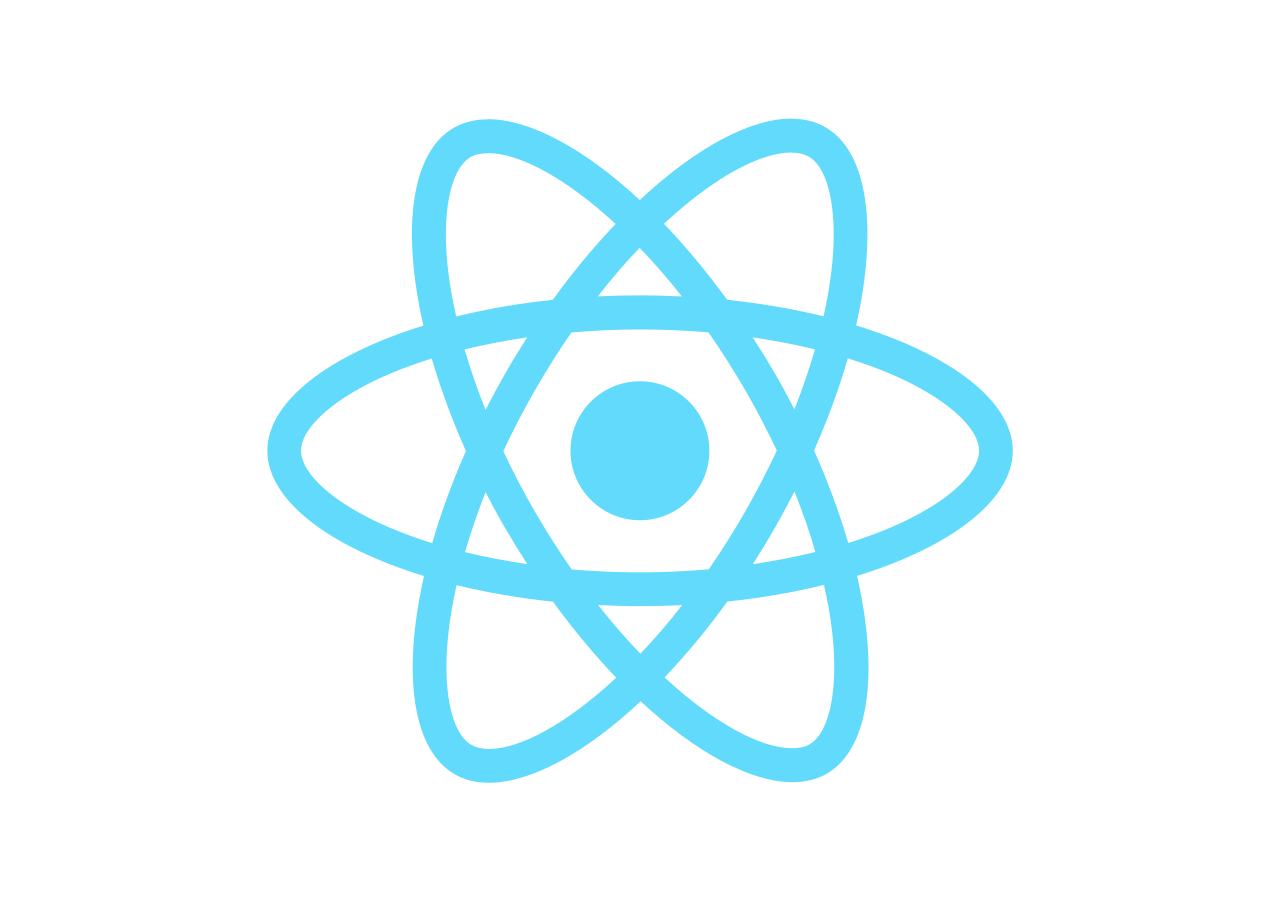

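```shell
npx create-react-app 2d-game
```

index.html

```html
<head>
  <!-- load the bitmelo engine, which exposes window.bitmelo -->
  <script src="%PUBLIC_URL%/bitmelo.js"></script>
  ...
```

game.js

```javascript
// initialize engine
import projectData from "./projectData.json";

const engine = new window.bitmelo.Engine();
engine.addProjectData(projectData);

// game logic

export function init() {
  engine.start();
}
```

GameWrapper.jsx

```jsx
import React, { useEffect } from 'react';
import * as game from './game/game.js';

function GameWrapper() {
  // start the engine once, after the first render
  useEffect(() => {
    game.init();
  }, []);

  return (
    <div className='main'>
      {/* container element for the game */}
      <div id='bitmelo-container'></div>
      {/* ... */}
    </div>
  );
}
```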

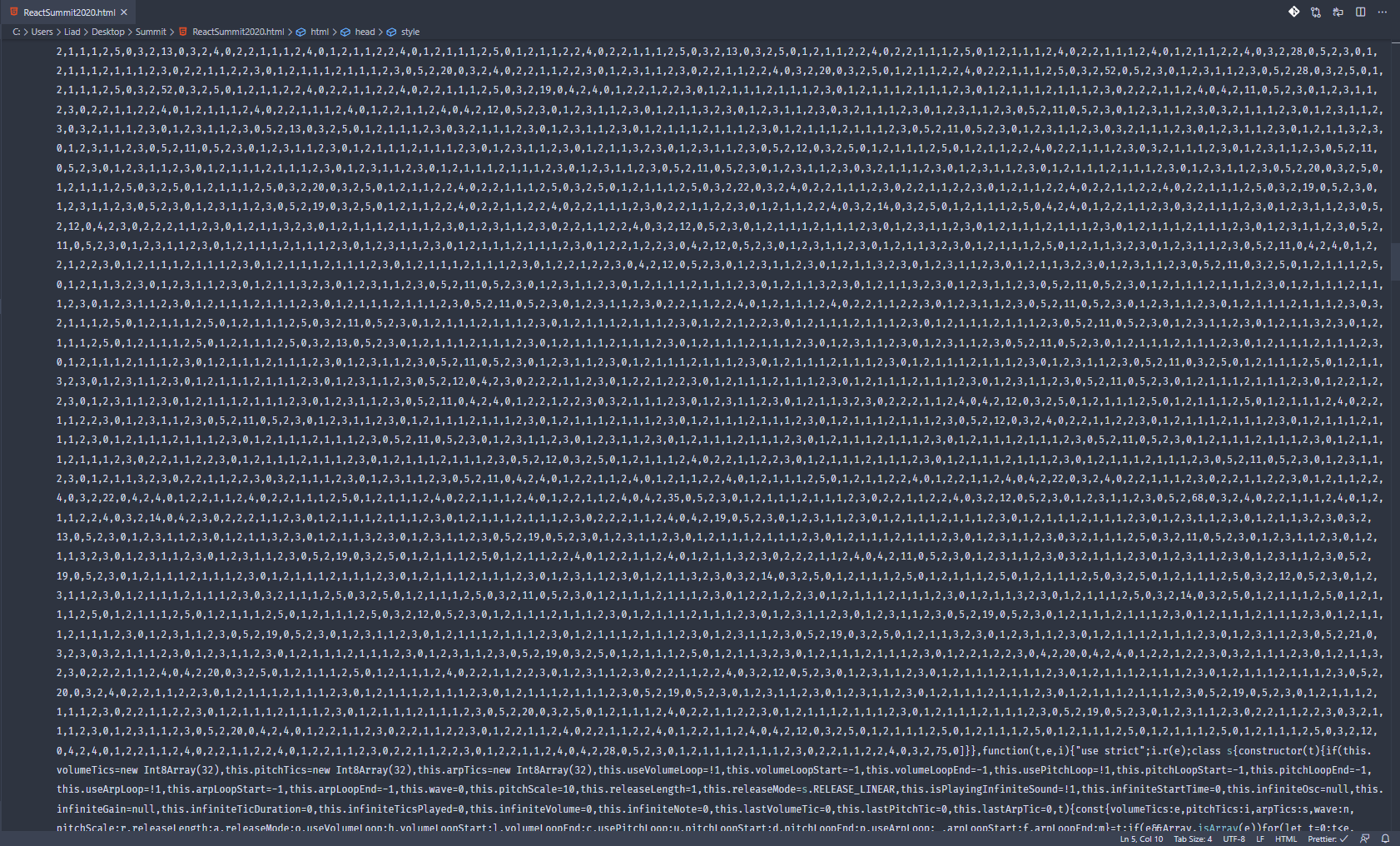

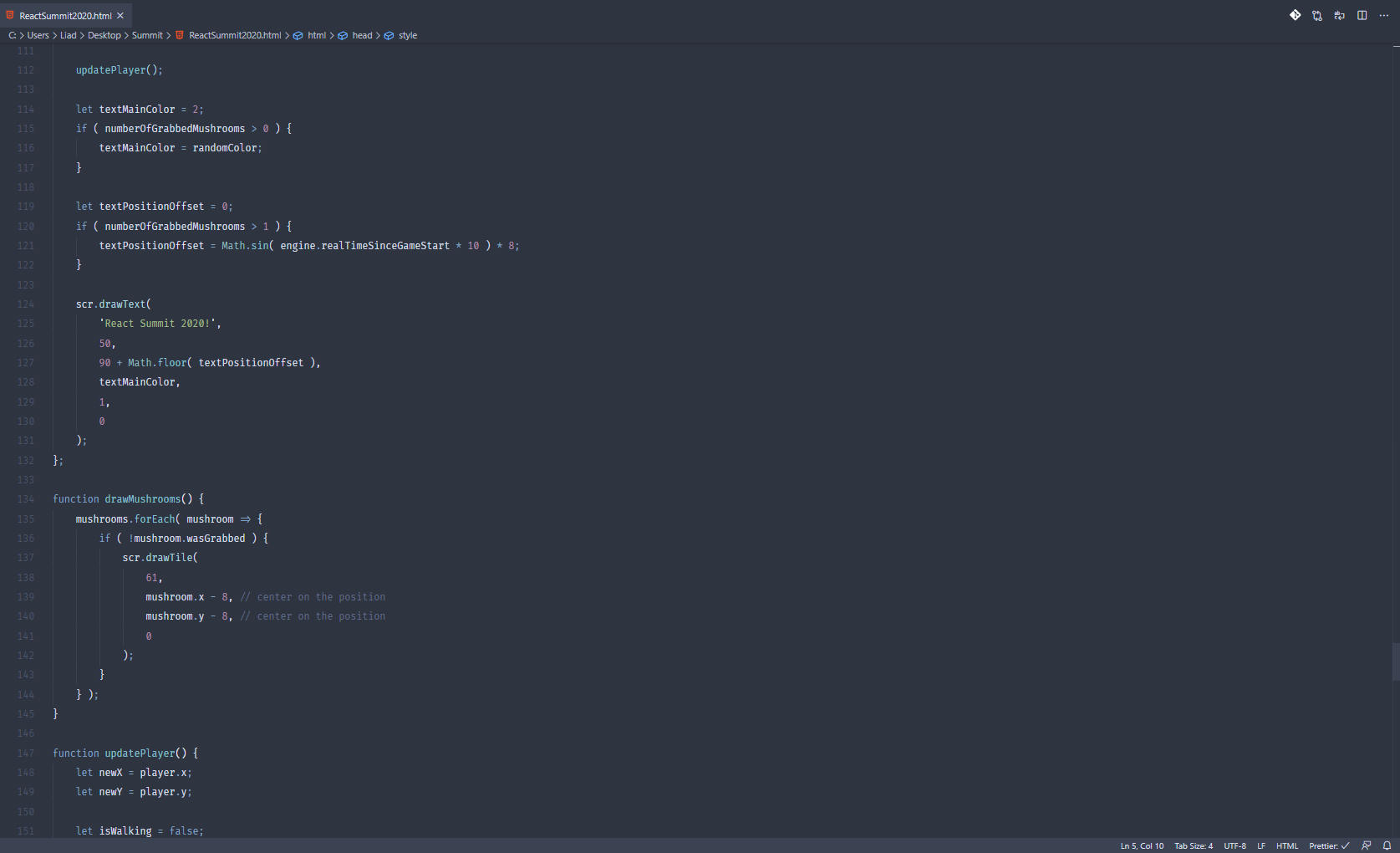

```javascript
// scr and inp: shorthands for engine.screen and engine.input (defined elsewhere)
engine.onUpdate = () => {
  scr.drawMap(0, 0, -1, -1, 0, 0, 0); // draw the tile map
  drawTargets();
  updatePlayer();
  scr.drawText("React Summit 2020!", 50, 90);
};

function drawTargets() {
  targets.forEach((target) => {
    if (!target.wasGrabbed) {
      // draw tile 61, offset by 8 pixels from the target's position
      scr.drawTile(61, target.x - 8, target.y - 8, 0);
    }
  });
}

function updatePlayer() {
  let newX = player.x;
  let isWalking = false;

  if (inp.left.pressed) {
    newX -= player.speed;
    isWalking = true;
    player.flip = 1; // face left
  }
  // ...

  const distance = Math.sqrt(dX * dX + dY * dY);
  // player has grabbed a target
  if (distance <= 12) {
    target.wasGrabbed = true;
  }
  // ...

  // draw the player: alternate between two walking frames every 8 frames
  let frameGID = 1;
  if (player.isWalking) {
    if (player.framesSinceWalkStart % 16 < 8) {
      frameGID = frameGID + 1;
    } else {
      frameGID = frameGID + 2;
    }
  }
}
```
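The frame selection at the end of `updatePlayer` can be pulled out into a pure function to make the cycle visible: a standing player uses frame GID 1, and a walking player alternates between GIDs 2 and 3 every 8 frames. The function name is hypothetical; the numbers come from the snippet above.

```javascript
// Pick the tile GID for the player's current animation frame.
function walkFrameGID(isWalking, framesSinceWalkStart) {
  const base = 1; // standing frame
  if (!isWalking) return base;
  // 16-frame cycle: first half shows base + 1, second half base + 2
  return framesSinceWalkStart % 16 < 8 ? base + 1 : base + 2;
}

console.log([0, 7, 8, 15, 16].map((f) => walkFrameGID(true, f))); // [2, 2, 3, 3, 2]
```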

Mountain of Wisdom

Chapter 4

AI playing Games

Coding the rules

```javascript
if (player.x > enemy.x) {
  if (player.health > 5) {
    movePlayerRight();
  } else {
    movePlayerLeft();
  }
}

if (player.y > enemy.y) {
  if (player.health > 5) {
    movePlayerUp();
  } else {
    movePlayerDown();
  }
}
```

Inferring rules from data

```javascript
// pairs of (input, known result)
const inputs = [
  [input1, result1],
  [input2, result2],
  [input3, result3],
  // ...
];

model.train(inputs);

const newResult = model.predict(newInput);
```
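To make `model.train` / `model.predict` concrete: one of the simplest ways to infer a rule from examples is a 1-nearest-neighbour model, which answers with the result of the most similar training input. This is a swapped-in illustration, not what the talk uses; inputs are assumed to be arrays of numbers such as `[playerX, enemyX]`, and the training data is made up.

```javascript
// 1-nearest-neighbour "model": memorizes [input, result] pairs and
// predicts the result of the closest stored input (Euclidean distance).
class NearestNeighbourModel {
  constructor() {
    this.pairs = [];
  }

  train(pairs) {
    this.pairs = pairs;
  }

  predict(input) {
    let best = null;
    let bestDistance = Infinity;
    for (const [stored, result] of this.pairs) {
      const d = Math.hypot(...stored.map((v, i) => v - input[i]));
      if (d < bestDistance) {
        bestDistance = d;
        best = result;
      }
    }
    return best;
  }
}

// Inputs: [playerX, enemyX]; results: which way the player moved.
const model = new NearestNeighbourModel();
model.train([
  [[30, 10], "right"],
  [[5, 20], "left"],
  [[40, 15], "right"],
]);
console.log(model.predict([35, 12])); // right
```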

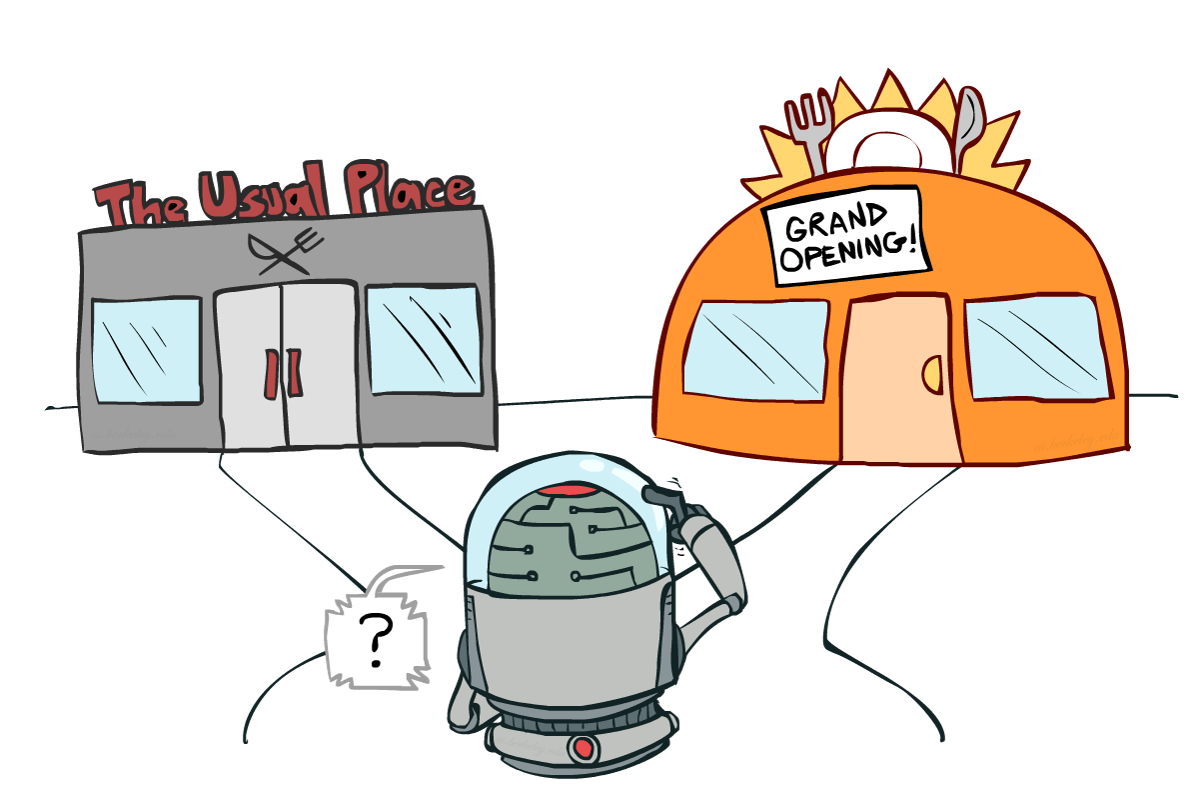

In games, we don't have all the inputs (and their correct results) up front

Reinforcement Learning!

Reinforcement Learning!

Agent

Environment

Action

State

x: 30

y: 60

enemies: 2

lives: 3

coins: 4

Reinforcement Learning!

Agent

State

Environment

x: 30

y: 60

enemies: 2

lives: 3

coins: 4

Reward

Reinforcement Learning!

Agent

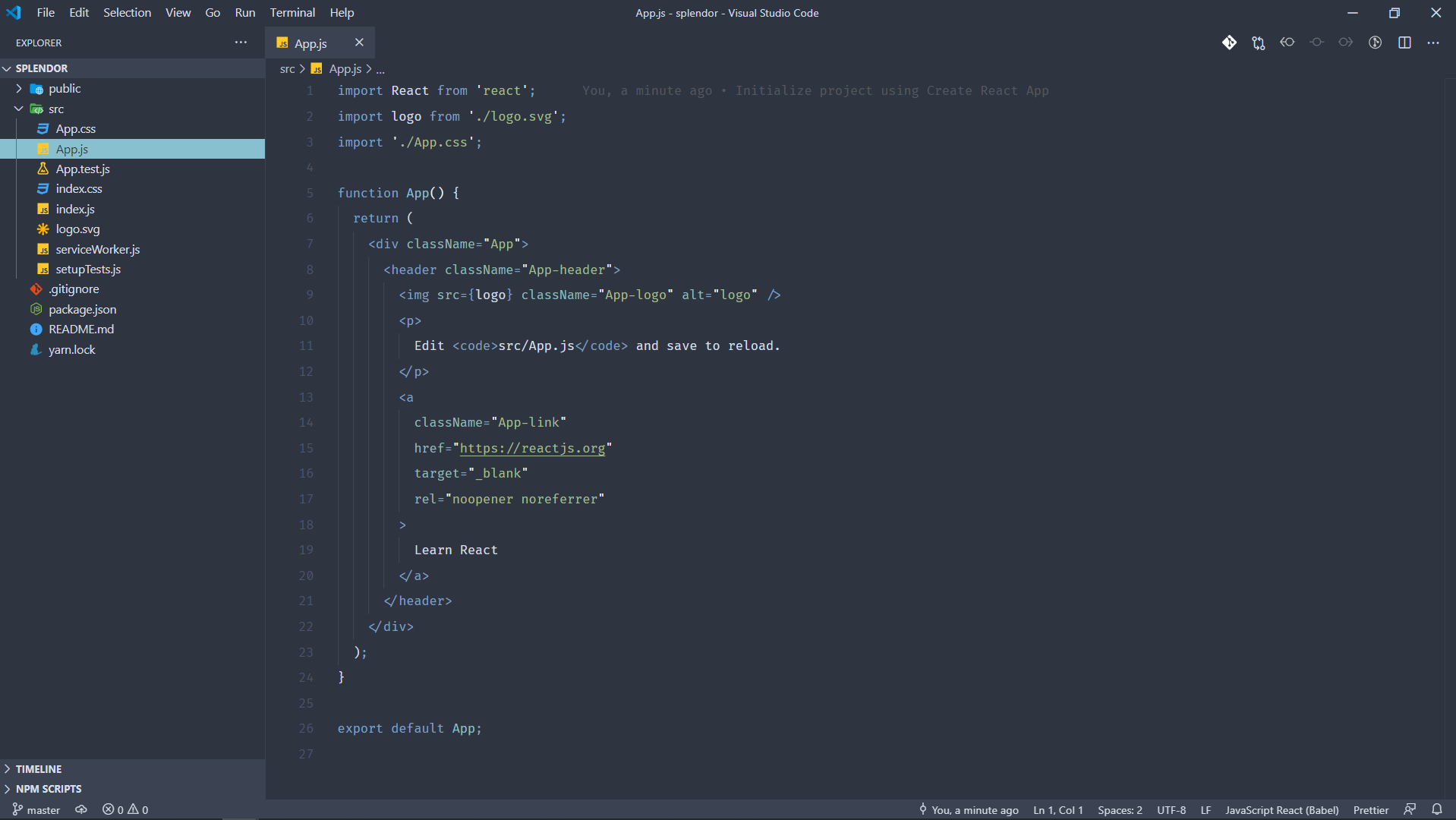

State

Environment

x: 30

y: 60

enemies: 2

lives: 3

coins: 4

Reinforcement Learning!

Agent

New State

Environment

x: 30

y: 60

enemies: 2

lives: 3

coins: 5

How good is this action?

+

Immediate Reward

Expected rewards from new state
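In the usual Q-learning notation (the slides don't use symbols, so these are the conventional ones), taking action $a$ in state $s$ yields an immediate reward $r$ and a new state $s'$; the action's value adds the best value reachable from $s'$, weighted by a discount factor $\gamma$ between 0 and 1:

$$
Q(s, a) = r + \gamma \max_{a'} Q(s', a')
$$

With $\gamma = 1$ future rewards count fully; smaller values favour rewards that arrive sooner.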

Value Table

| State | UP | DOWN | LEFT | RIGHT |
|---|---|---|---|---|
| State 1 (x: 20, y: 30) | 12 | 5 | 15 | 4 |
| State 2 (x: 10, y: 10) | 11 | 8 | 9 | 3 |
| State 3 (x: 10, y: 20) | 8 | 7 | 9 | 10 |
| ... | ... | ... | ... | ... |

Reward: 2

Value Table (updated: State 2, UP goes from 11 to 12)

| State | UP | DOWN | LEFT | RIGHT |
|---|---|---|---|---|
| State 1 (x: 20, y: 30) | 12 | 5 | 15 | 4 |
| State 2 (x: 10, y: 10) | 12 | 8 | 9 | 3 |
| State 3 (x: 10, y: 20) | 8 | 7 | 9 | 10 |
| ... | ... | ... | ... | ... |
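The change in State 2's UP cell (11 to 12) after "Reward: 2" can be reproduced with the tabular Q-learning update. This sketch rests on assumptions the slides don't state: that taking UP in State 2 led to State 3, that the learning rate `alpha` and discount `gamma` are both 1, and that the table is a plain object keyed by state name. Under those assumptions the new value is 2 + max(8, 7, 9, 10) = 12, matching the updated cell.

```javascript
// Tabular Q-learning update (sketch). qTable maps a state name to
// { UP, DOWN, LEFT, RIGHT } action values.
function updateQ(qTable, state, action, reward, nextState, alpha = 1, gamma = 1) {
  const current = qTable[state][action];
  // best value the agent expects to collect from the next state
  const bestNext = Math.max(...Object.values(qTable[nextState]));
  const target = reward + gamma * bestNext;
  // move the current estimate toward the target by a fraction alpha
  qTable[state][action] = current + alpha * (target - current);
  return qTable[state][action];
}

const qTable = {
  state1: { UP: 12, DOWN: 5, LEFT: 15, RIGHT: 4 },
  state2: { UP: 11, DOWN: 8, LEFT: 9, RIGHT: 3 },
  state3: { UP: 8, DOWN: 7, LEFT: 9, RIGHT: 10 },
};

// Assumed transition: UP from State 2 lands in State 3, with reward 2.
console.log(updateQ(qTable, "state2", "UP", 2, "state3")); // 12
```

In practice `alpha` and `gamma` are usually set below 1 so that estimates change gradually and distant rewards count less.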

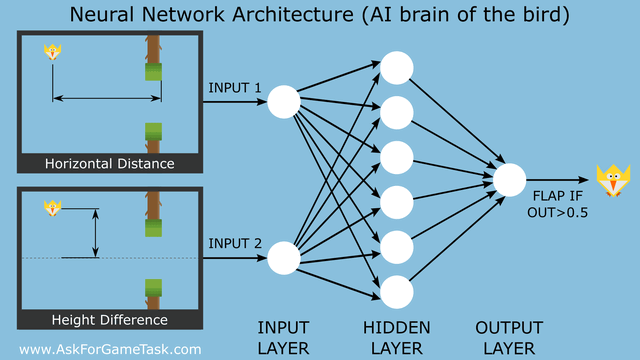

Value Table

| State (distance, height) | FLAP | NOTHING |
|---|---|---|
| Distance1, Height1 | 12 | 5 |
| Distance2, Height2 | 12 | 8 |
| Distance3, Height3 | 8 | 7 |
| ... | ... | ... |

Value Network

Feeding Frames

Exploration
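Exploration means the agent sometimes tries an action its value table does not currently rank highest, so it can discover better ones. The talk doesn't say which strategy it uses; a common choice is epsilon-greedy selection, sketched below with a hypothetical `epsilon` parameter and an injectable random source so it can be tested.

```javascript
// Epsilon-greedy action selection (sketch).
// values: action values for the current state, e.g. [UP, DOWN, LEFT, RIGHT].
// With probability epsilon pick a random action (explore), otherwise the best one (exploit).
function chooseAction(values, epsilon, random = Math.random) {
  if (random() < epsilon) {
    return Math.floor(random() * values.length);
  }
  let best = 0;
  for (let i = 1; i < values.length; i++) {
    if (values[i] > values[best]) best = i;
  }
  return best;
}

// State 1 from the value table: LEFT (index 2) has the highest value, 15.
console.log(chooseAction([12, 5, 15, 4], 0.1)); // usually 2 (LEFT), sometimes a random index
```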

Cool, but.

https://github.com/aaronhma/awesome-tensorflow-js

https://www.metacar-project.com/

Cool, but.

https://openai.com/blog/gym-retro/

```javascript
// create an environment object
var env = {};
env.getNumStates = function() { return 8; }
env.getMaxNumActions = function() { return 4; }

// create the DQN agent
var spec = { alpha: 0.01 } // see full options on DQN page
var agent = new RL.DQNAgent(env, spec);

setInterval(function(){ // start the learning loop
  var action = agent.act(s); // s is an array of length 8
  //... execute action in environment and get the reward
  agent.learn(reward); // the agent improves its Q,policy,model, etc. reward is a float
}, 0);
```

https://github.com/karpathy/reinforcejs

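game.js

```javascript
// simulate a key press: key down now, key up after `wait` ms
export function fireAction(key, wait = 0) {
  fireWindowEvent(key, true);
  setTimeout(() => {
    fireWindowEvent(key, false);
  }, wait);
}

// positions are returned as { x, y } objects, which is how ai.js reads them
export function getActor() {
  return { x: player.x, y: player.y };
}

export function getTarget() {
  return { x: target.x, y: target.y };
}
```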
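`fireWindowEvent` is not shown in the talk. A minimal hypothetical version dispatches a synthetic keyboard event on `window`, so the game's own key listeners see the AI's moves as if a player pressed the keys. Whether a particular engine reacts to synthetic events depends on how it reads input, so treat that as an assumption. The `target` parameter and the plain-`Event` fallback (for environments without `KeyboardEvent`, such as Node) are additions for testing.

```javascript
// Hypothetical helper: dispatch a keydown/keyup event for `key` (e.g. "ArrowRight").
function fireWindowEvent(key, isDown, target = globalThis.window) {
  const type = isDown ? "keydown" : "keyup";
  const event =
    typeof KeyboardEvent === "function"
      ? new KeyboardEvent(type, { key, bubbles: true })
      : Object.assign(new Event(type), { key }); // fallback outside the browser
  target.dispatchEvent(event);
}
```

Synthetic `KeyboardEvent`s typically report `keyCode` as 0, so listeners that check `event.keyCode` (like the `registerKey` example earlier) would need to read `event.key` instead.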

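ai.js

```javascript
// setup (once): env and spec as in the ReinforceJS example; the state is 4 numbers
env.getNumStates = () => 4;
env.getMaxNumActions = () => 4;
const agent = new RL.DQNAgent(env, spec);

// one learning step (repeated)
let [actor, target] = [getActor(), getTarget()];
const distance_before = getDistance(actor, target);

// the agent picks an action index (0-3) from the current state
const action = agent.act([actor.x, actor.y, target.x, target.y]);

const actions = {
  0: () => fireAction("ArrowRight"),
  1: () => fireAction("ArrowLeft"),
  2: () => fireAction("ArrowUp"),
  3: () => fireAction("ArrowDown")
};
actions[action]();

// assumes the game has applied the move by the time positions are read again
[actor, target] = [getActor(), getTarget()];
const distance_after = getDistance(actor, target);

// reward: a penalty when the actor is at the screen edge (actorInEdge),
// otherwise the fraction of the starting distance that this move closed
let reward =
  actorInEdge
    ? -(distance_after / 300)
    : (distance_before - distance_after) / distance_before;

agent.learn(reward);
```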
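`getDistance` is not shown. A minimal sketch, assuming positions are `{ x, y }` objects and Euclidean distance, with the reward packaged as a pure function so it can be checked on its own. The guard for a zero starting distance is an addition: the slide's formula divides by `distance_before`, which is 0 when the player already stands on the target.

```javascript
// Hypothetical helper: Euclidean distance between two { x, y } points.
function getDistance(a, b) {
  const dX = a.x - b.x;
  const dY = a.y - b.y;
  return Math.sqrt(dX * dX + dY * dY);
}

// Reward as in ai.js: a penalty at the screen edge (scaled by 300),
// otherwise the fraction of the starting distance this move closed
// (negative if the actor moved away).
function computeReward(distanceBefore, distanceAfter, actorInEdge) {
  if (actorInEdge) return -(distanceAfter / 300);
  if (distanceBefore === 0) return 0; // already on the target: avoid dividing by zero
  return (distanceBefore - distanceAfter) / distanceBefore;
}

const before = getDistance({ x: 0, y: 0 }, { x: 30, y: 40 }); // 50
const after = getDistance({ x: 6, y: 8 }, { x: 30, y: 40 });  // 40
console.log(computeReward(before, after, false)); // 0.2
```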

```javascript
const saved = agent.toJSON();
// ...
agent.fromJSON(saved);
```
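To keep a trained agent between sessions, the saved object has to be stored somewhere. A sketch assuming browser `localStorage` (any object with `getItem`/`setItem` works, which also makes it testable) and a made-up storage key. It relies only on `toJSON()` returning a plain, JSON-serializable object and `fromJSON()` accepting that object back, as the ReinforceJS agent's pair appears to.

```javascript
// Hypothetical persistence helpers; STORAGE_KEY is an arbitrary name.
const STORAGE_KEY = "rl-games-agent";

function saveAgent(agent, storage = globalThis.localStorage) {
  storage.setItem(STORAGE_KEY, JSON.stringify(agent.toJSON()));
}

// Returns true if a saved agent was found and restored.
function loadAgent(agent, storage = globalThis.localStorage) {
  const raw = storage.getItem(STORAGE_KEY);
  if (raw === null) return false; // nothing saved yet: keep the fresh agent
  agent.fromJSON(JSON.parse(raw));
  return true;
}
```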

We have AI!

River of Opportunities

Chapter 5

Let's explore!

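ai.js

```javascript
// also feed the target's direction (env.getNumStates must now return 5)
let inputs = [actor.x, actor.y, target.x, target.y, target.direction];
```

ai.js

```javascript
// reward getting closer to the target
let reward = (distance_before - distance_after) / distance_before;
```

ai.js

```javascript
// flip the sign: reward getting away from the target instead
let reward = -(distance_before - distance_after) / distance_before;
```

```javascript
// stay away from Morty while approaching the target
let reward = (-1 * gettingCloserToMorty) + gettingCloserToTarget;
```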
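`gettingCloserToMorty` and `gettingCloserToTarget` aren't defined on the slide. One hypothetical reading reuses the normalized-progress formula from ai.js for each of them and combines the two as the slide does:

```javascript
// Hypothetical: normalized progress toward a point, as in the ai.js reward.
// Positive when the distance shrank, negative when it grew.
function progress(distanceBefore, distanceAfter) {
  if (distanceBefore === 0) return 0; // avoid dividing by zero
  return (distanceBefore - distanceAfter) / distanceBefore;
}

// Reward moving toward the target and penalize moving toward Morty.
function combinedReward(targetBefore, targetAfter, mortyBefore, mortyAfter) {
  const gettingCloserToTarget = progress(targetBefore, targetAfter);
  const gettingCloserToMorty = progress(mortyBefore, mortyAfter);
  return (-1 * gettingCloserToMorty) + gettingCloserToTarget;
}

// Closer to the target (50 -> 40), but also much closer to Morty (20 -> 10):
console.log(combinedReward(50, 40, 20, 10)); // -0.3
```

Because both terms are normalized, halving the distance to Morty outweighs closing a fifth of the distance to the target, so this move is penalized overall.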

Chapter 1: Village of Boredom

Chapter 1a: Pit of Despair

Chapter 2: Valley of Pixels

Chapter 3: Meadow of Efficiency

Chapter 4: Mountain of Wisdom

Chapter 5: River of Opportunities

Just do it.

You're awesome!

QUESTIONS?

@liadyosef

https://github.com/liady/rl-games

https://rl-games-ai.netlify.app/

Games & AI

By Liad Yosef

Build games for your AI to play.