Reading Topic:

Deep Reinforcement Learning

KAUST-IVUL-July 20-2017

Guohao Li

lightaime@gmail.com

Deep Reinforcement Learning

- Q-Learning Methods

- Actor-Critic Methods

Deep Reinforcement Learning

- Q-Learning Methods

- Actor-Critic Methods

Q learning Methods

- Deep Q-network (Dec, 2013)

- Double DQN (Dec, 2015)

- Prioritized replay (Feb, 2016)

- Dueling network (Apr, 2016)

- Bootstrapped DQN (Jul, 2016)

Q learning Methods

- Deep Q-network (Dec, 2013)

- Double DQN (Dec, 2015)

- Prioritized replay (Feb, 2016)

- Dueling network (Apr, 2016)

- Bootstrapped DQN (Jul, 2016)

DQN (Dec 2013)

Deepmind proposed the first deep learning model to successfully learn control policies directly from high-dimensional sensory input using reinforcement learning

- raw pixels

- no hand-crafted features

- using CNN and MLP to approximate action-value function (Q function)

DQN (Dec 2013)

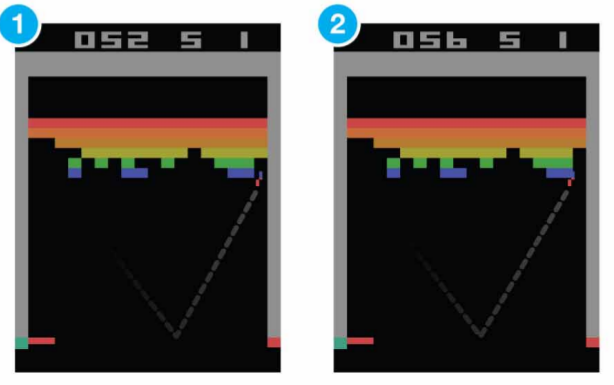

Neural Network Architecture

- input raw pixels

- using 3 CNN layers and 2 MLPs to approximate Q function

- output Q(s, a; θ)

DQN (Dec 2013)

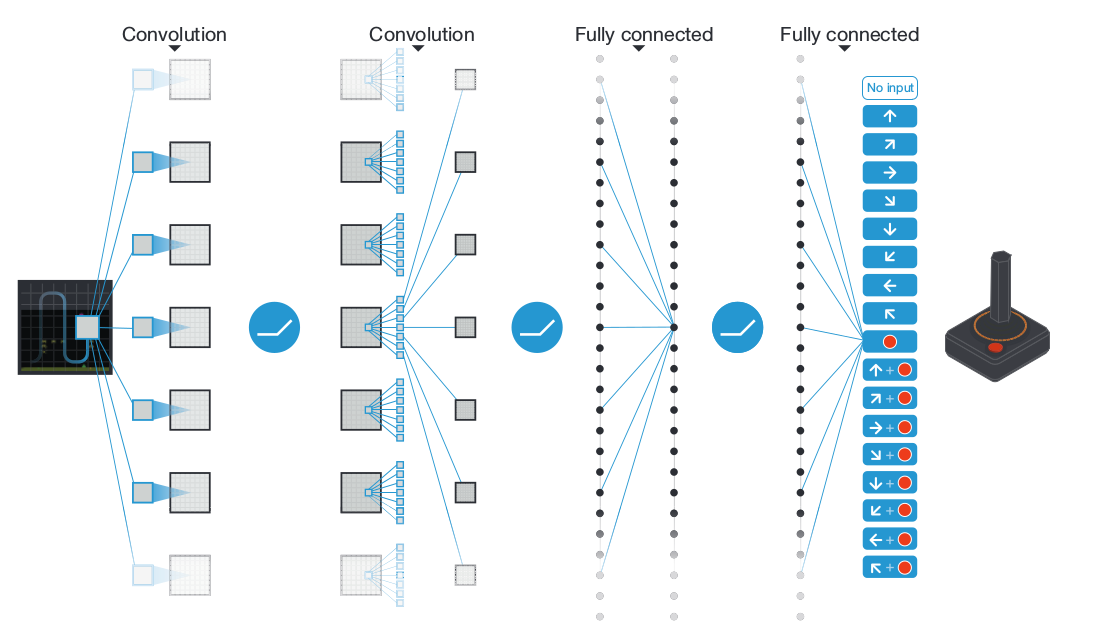

Algorithm Diagram (Nature 2015)

DQN (Dec 2013)

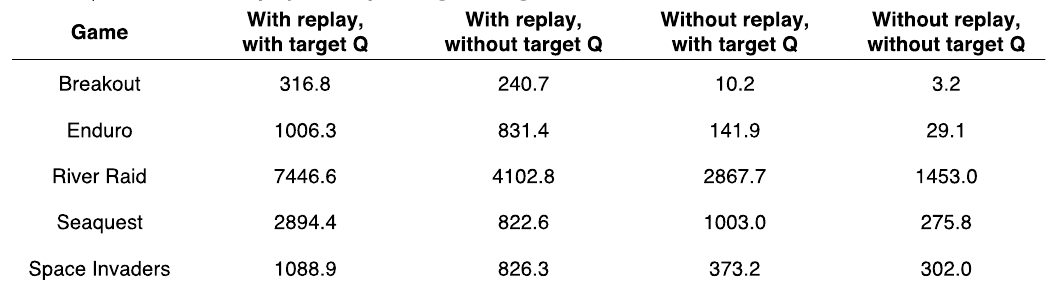

3 Tricks

experience replay

- greater data efficiency

- break correlations in data

target Q-network

- a separate network for generating target term

- more stable, prevent oscillations and divergence

clip the temporal diffierence error term

- adaptively to sensible range

- robust gradients

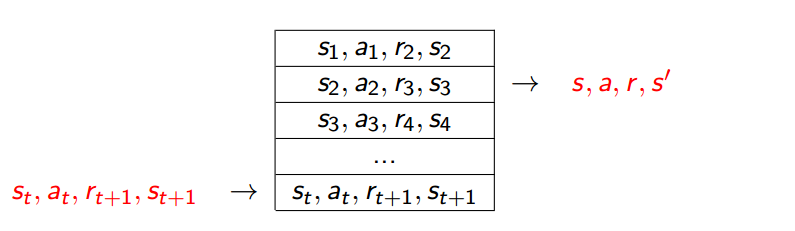

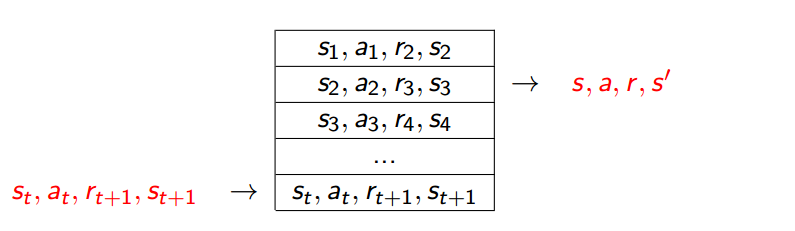

DQN (Dec 2013)

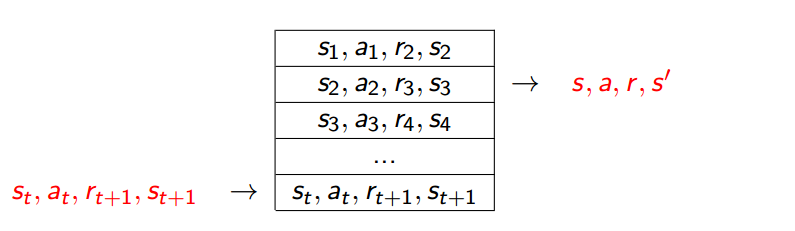

experience replay

- greater data efficiency

- break correlations in data

collecting experiences into replay memory and sample a min-batch to perform gradient descent to update

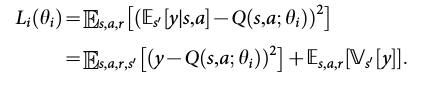

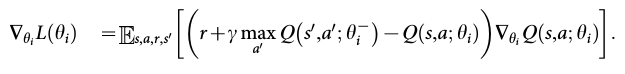

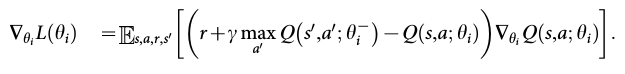

DQN (Dec 2013)

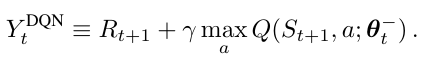

target Q-network

- a separate network for generating target term

- more stable, prevent oscillations and divergence

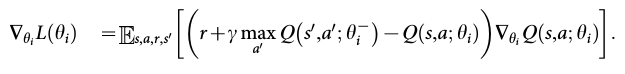

DQN (Dec 2013)

clip the temporal diffierence error term

- adaptively to sensible range

- robust gradients

clipping the error term to be between -1 and 1 responds to using |x| loss function for errors outside of the (-1, 1)

DQN (Dec 2013)

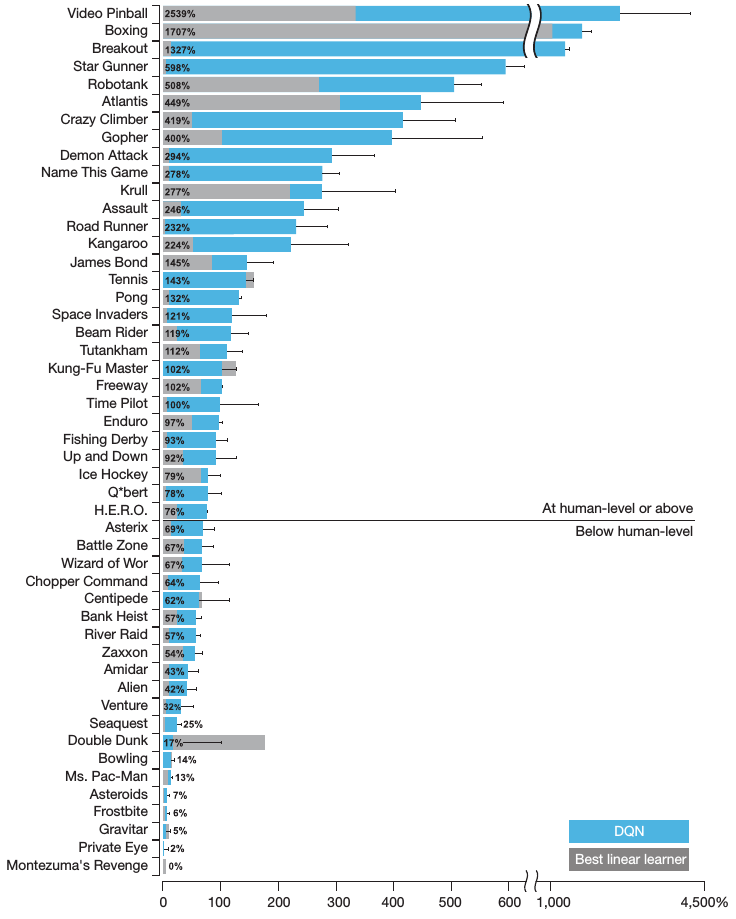

Result

perform at a level that is broadly comparable with or superior to a professional human games tester in marjority of games

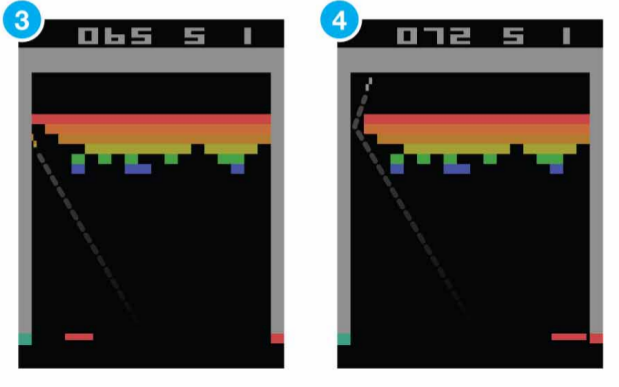

DQN (Dec 2013)

Result

Q learning Methods

Deep Q-network (Dec, 2013)- Double DQN (Dec, 2015)

- Prioritized replay (Feb, 2016)

- Dueling network (Apr, 2016)

- Bootstrapped DQN (Jul, 2016)

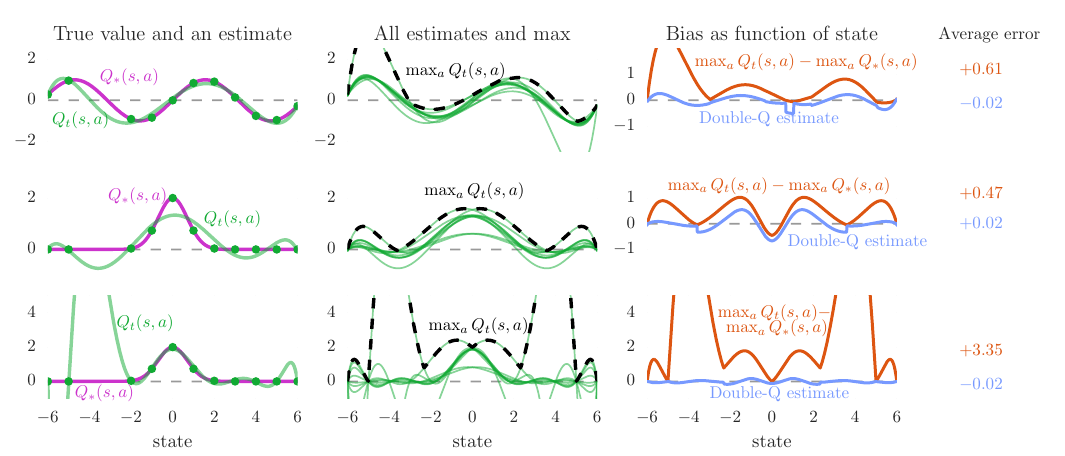

Double DQN (Dec 2015)

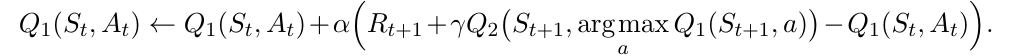

Double-Q reduce overestimations during learning

They generalized the Double Q-learning algorithm from tabular setting to arbitrary function approximation. This method yields more accurate value estimates and higher scores.

Double DQN (Dec 2015)

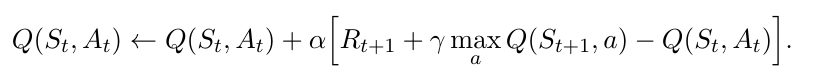

Maximization Bias and Double Q-Learning

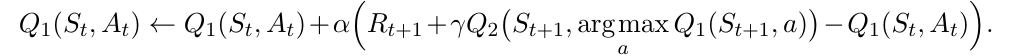

Max operator induces maximization bias. Double Q-learning learns two independent estimates to maximize action and estimate action separately.

Double DQN (Dec 2015)

Double Q-Learning in tabular setting

comparison of Q-learning and Double Q-learning

Double DQN (Dec 2015)

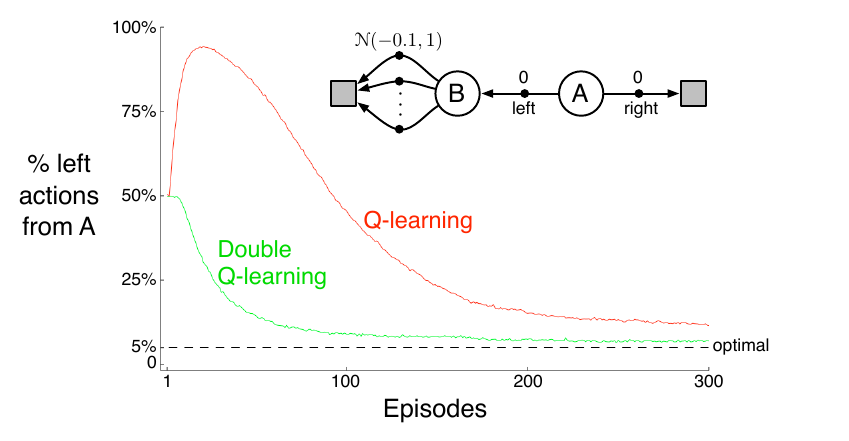

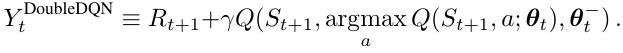

Double Q-Learning Network

generalize the Double Q-learning algorithm from tabular setting to DQN

using

target

Q-Network

to

estimate

Q learning Methods

Deep Q-network (Dec, 2013)Double DQN (Dec, 2015)- Prioritized replay (Feb, 2016)

- Dueling network (Apr, 2016)

- Bootstrapped DQN (Jul, 2016)

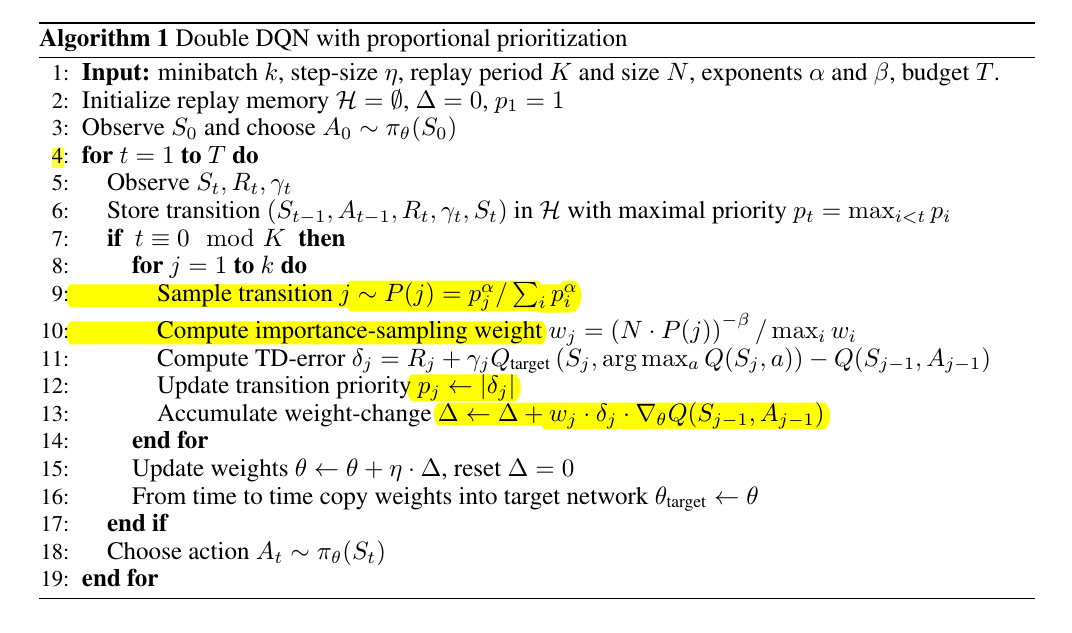

Prioritised replay (Feb 2016)

DQN and Double DQN simply replay transitions at the same frequency regardless of their significance. In this paper they proposed a framework for prioritizing experience by TD error.

mini-batch samples: i ~ uniform(i)

mini-batch samples: i ~ P(i)

Prioritised replay (Feb 2016)

Algorithm

Q learning Methods

Deep Q-network (Dec, 2013)Double DQN (Dec, 2015)Prioritized replay (Feb, 2016)- Dueling network (Apr, 2016)

- Bootstrapped DQN (Jul, 2016)

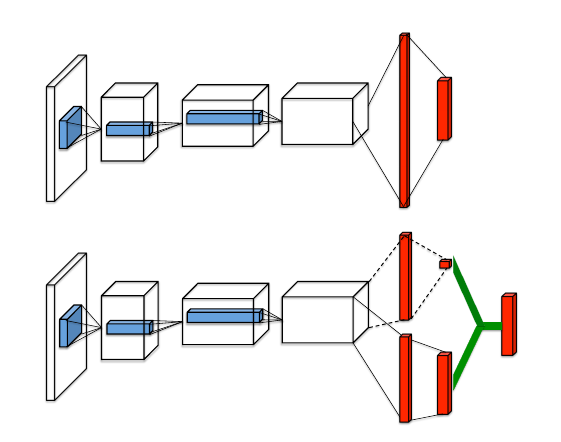

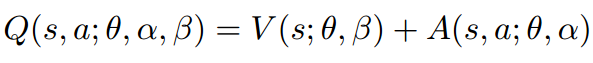

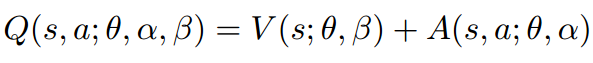

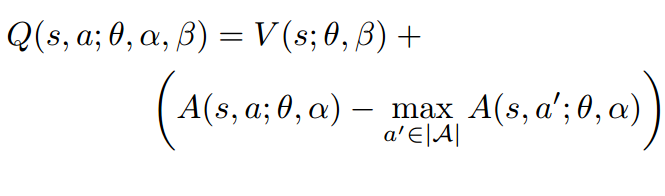

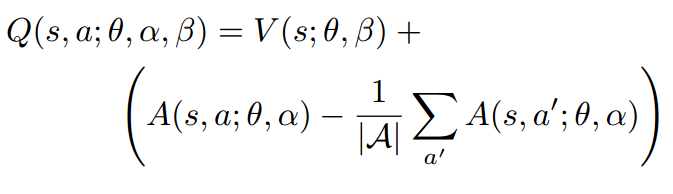

Dueling Network (Apr 2016)

They proposed an architecture that consists of two streams that represent the value(action independent) and advantage functions.

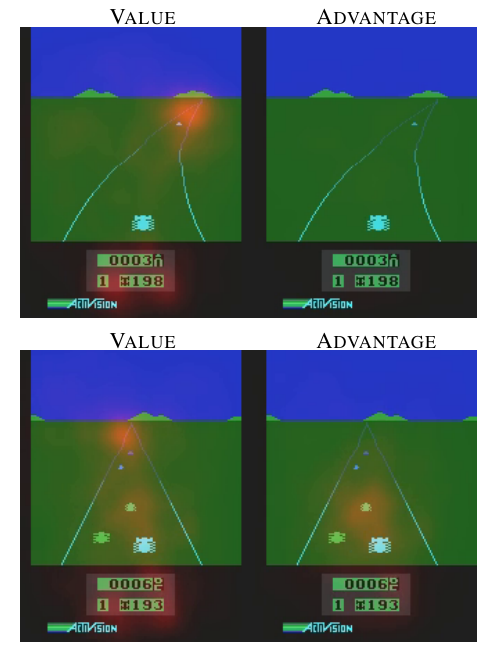

Dueling Network (Apr 2016)

Intuitively, the dueling architecture can learn which states are (or are not) valuable, without having to learn the effect of each action for each state.

value and advantage saliency maps

Dueling Network (Apr 2016)

The equation above is unidentifiable in the sense that the given Q we cannot recover V and A uniquely.

To address this issue of identifiability, they force the advantage function estimator to have zero advantage at the chosen action by following implementation.

or

Q learning Methods

Deep Q-network (Dec, 2013)Double DQN (Dec, 2015)Prioritized replay (Feb, 2016)Dueling network (Apr, 2016)- Bootstrapped DQN (Jul, 2016)

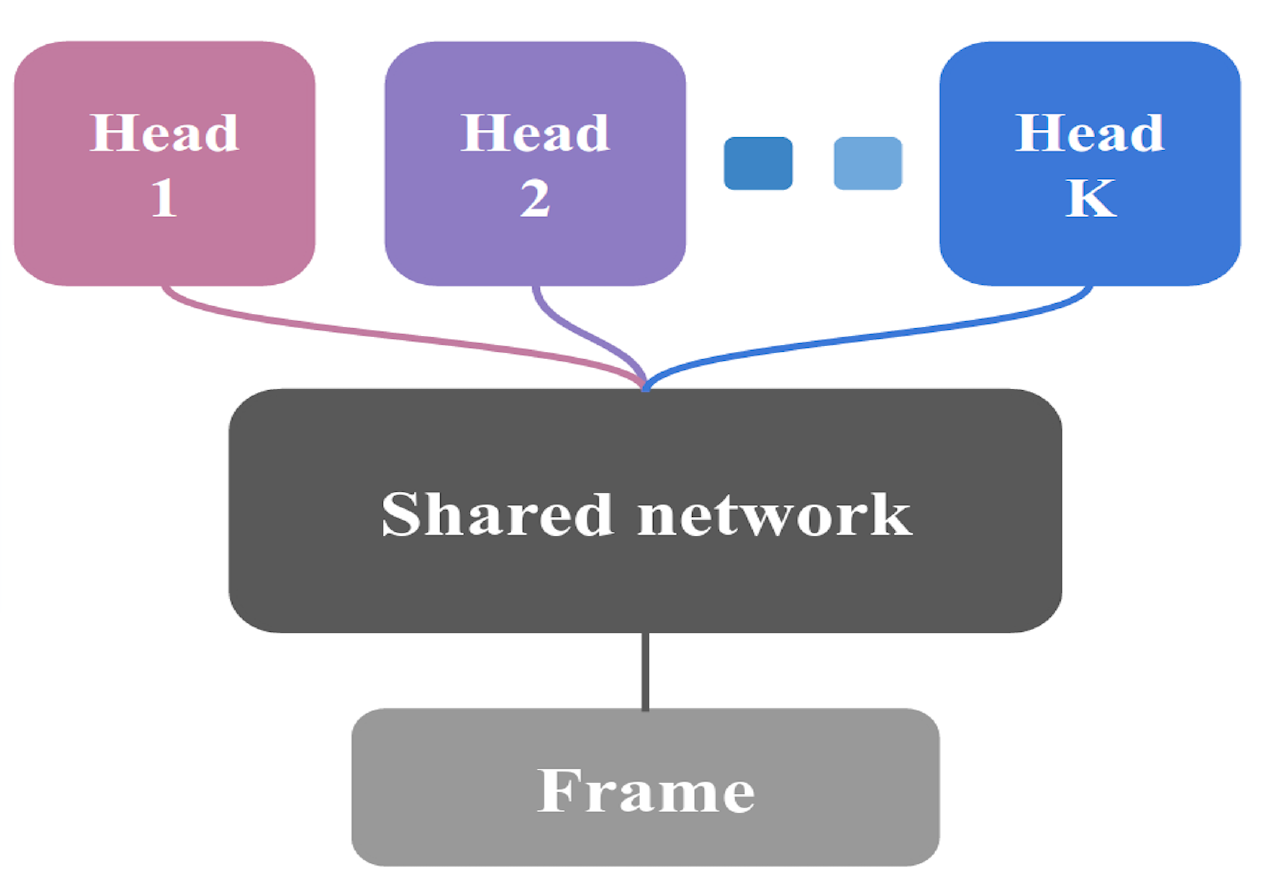

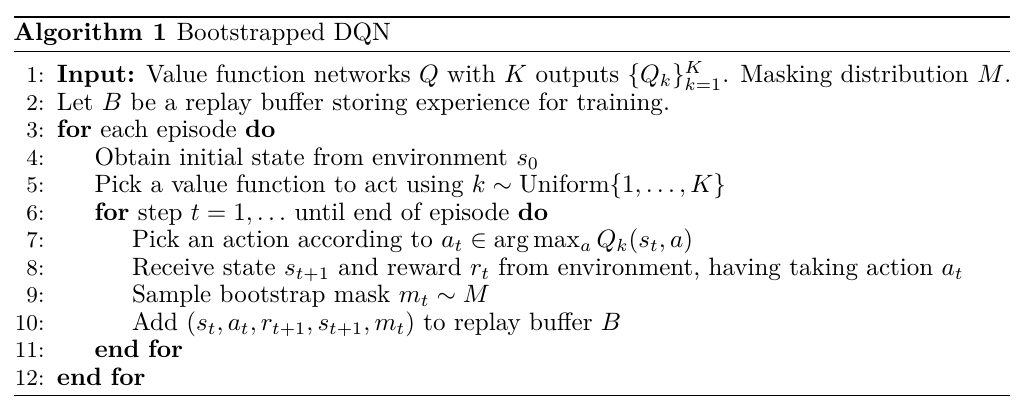

Bootstrapped DQN (Jul 2016)

They proposed an efficient and scalable exploration method for generating bootstrap samples from a large and deep neural network. The network consists of a shared architecture with K bootstrapped "heads" branching off independently.

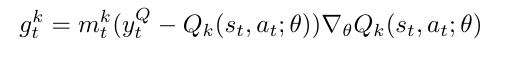

Bootstrapped DQN (Jul 2016)

Gradient of the Kth Q network:

Deep Reinforcement Learning

Q-Learning Methods- Actor-Critic Methods

Actor-Critic Methods

- DDPG (Feb 2016)

- A3C (Jun 2016)

- UNREAL (Nov 2016)

- ACER (Nov 2016)

- The Reactor (Apr 2017)

- NoisyNet (Jun 2017)

Actor-Critic Methods

- DDPG (Feb 2016)

- A3C (Jun 2016)

- UNREAL (Nov 2016)

- ACER (Nov 2016)

- The Reactor (Apr 2017)

- NoisyNet (Jun 2017)

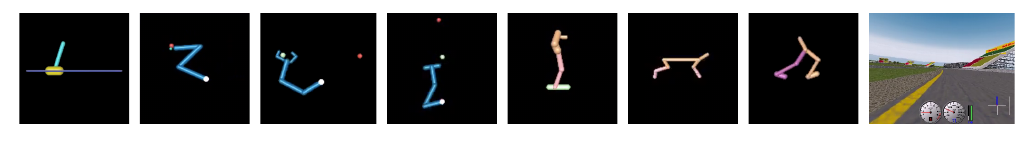

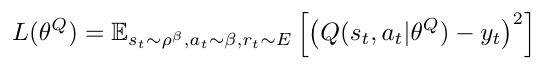

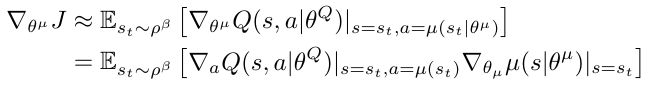

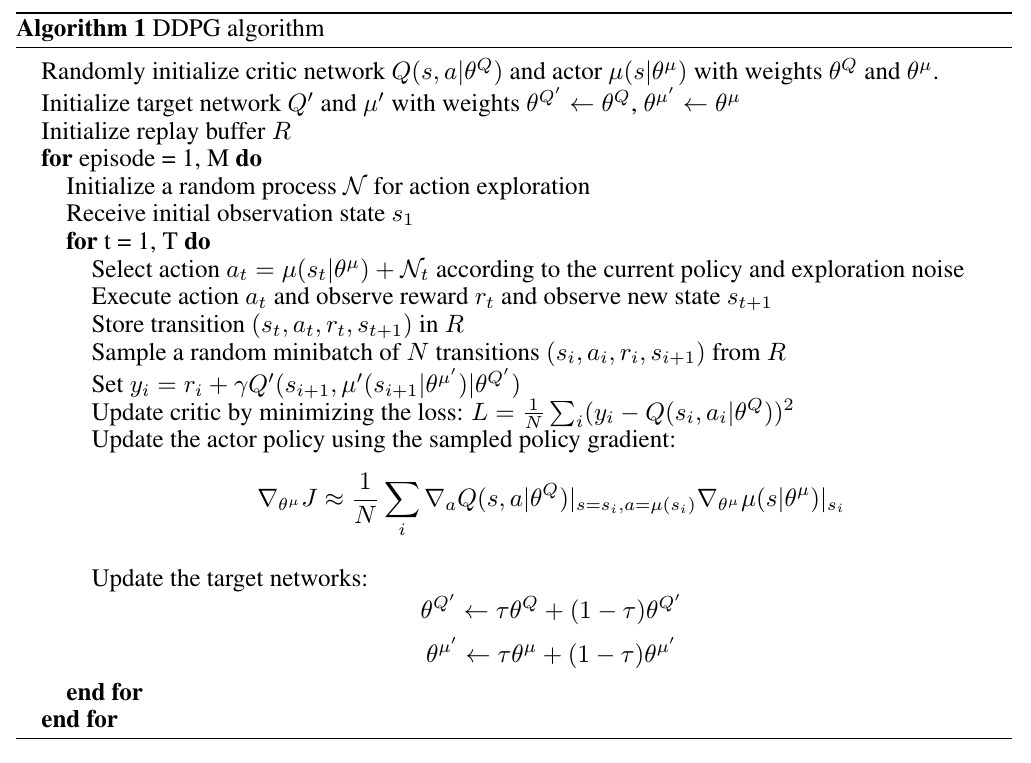

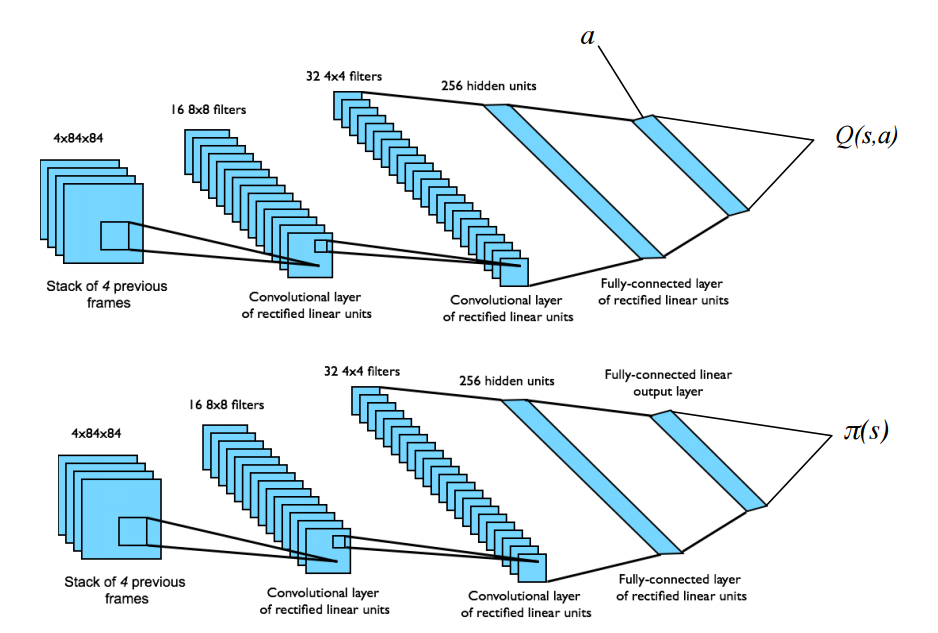

Deep Deterministic Policy Gradient (Feb 2016)

They proposed an actor-critic, model-free algorithm based on the deterministic policy gradient that can operate over continuous action spaces.

Deep Deterministic Policy Gradient (Feb 2016)

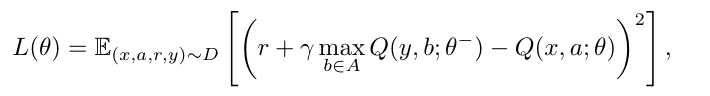

Critic loss:

Actor gradient:

critic estimates value of current policy by Q-learning

actor updates policy in direction that improves Q

Deep Deterministic Policy Gradient (Feb 2016)

This part of gradient will be calculated from critic network by backpropagating into a

Deep Deterministic Policy Gradient (Feb 2016)

Actor-Critic Methods

DDPG (Feb 2016)- A3C (Jun 2016)

- UNREAL (Nov 2016)

- ACER (Nov 2016)

- The Reactor (Apr 2017)

- NoisyNet (Jun 2017)

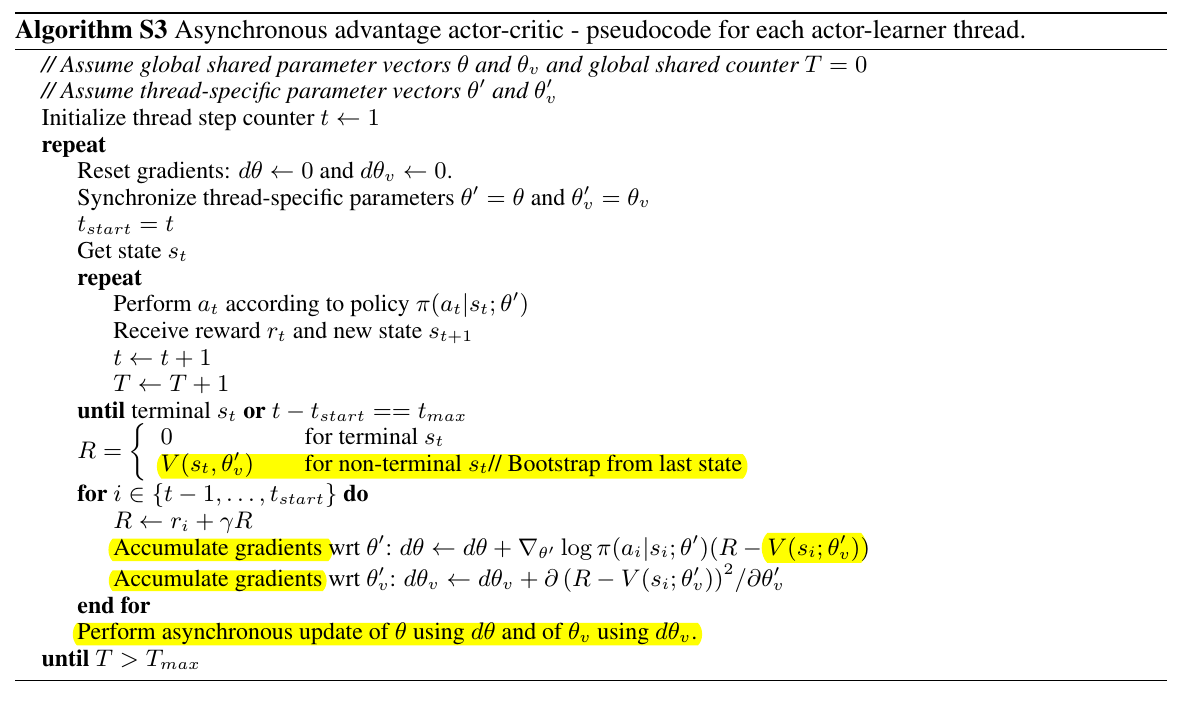

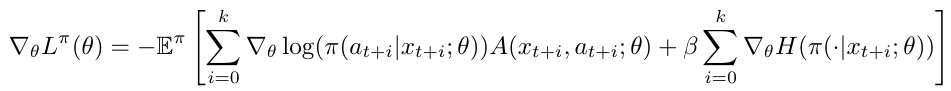

Asynchronous advantage actor-critic (Jun 2016)

They proposed asynchronous variants of four standard reinforcement learning algorithms and showed that an asynchronous variant of actor-critic surpassed the state-of-art.

estimation of advantage

Actor-Critic Methods

DDPG (Feb 2016)-

A3C (Jun 2016) - UNREAL (Nov 2016)

- ACER (Nov 2016)

- The Reactor (Apr 2017)

- NoisyNet (Jun 2017)

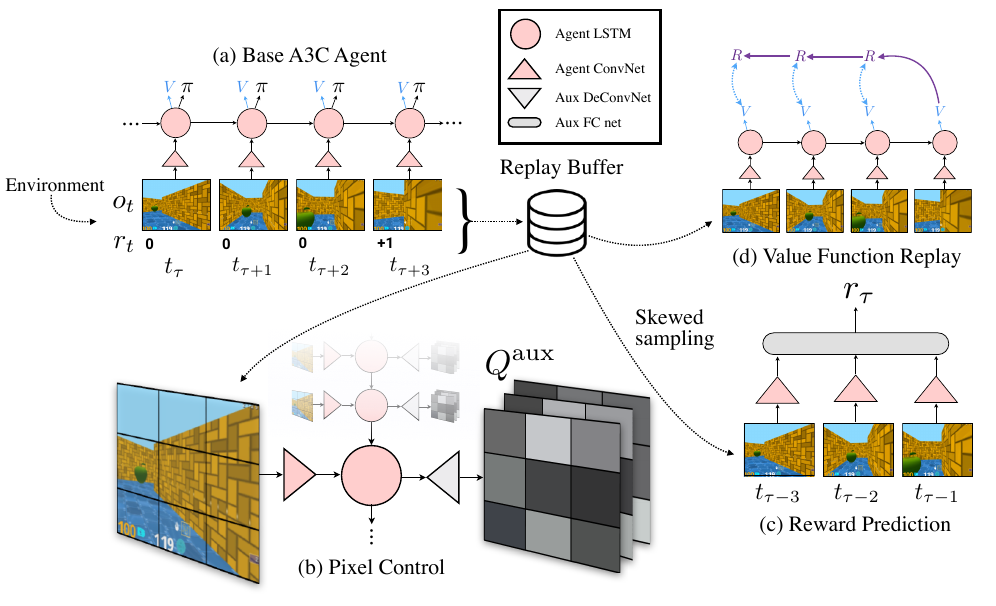

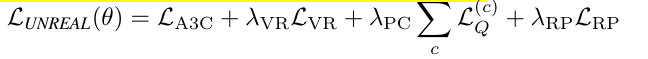

Reinforcement learning with unsupervised auxiliary tasks (Nov 2016)

auxiliary control tasks

- pixel changes

- network features

auxiliary reward tasks

- reward prediction

Actor-Critic Methods

DDPG (Feb 2016)-

A3C (Jun 2016) UNREAL (Nov 2016)- ACER (Nov 2016)

- The Reactor (Apr 2017)

- NoisyNet (Jun 2017)

Sample efficient actor-critic with experience replay (Nov 2016)

This paper presents a stable actor-critic deep reinforcment learning method with experience replay by truncated importance sampling with bias correction, stochastic dueling network architecture and a new trust region policy optimization method.

The Reactor: a sample-efficient actor-critic architecture (Apr 2017)

The reactor is sample-efficient thanks to the use of memory replay, and numerical efficient since it uses multi-step returns.

Actor-Critic Methods

DDPG (Feb 2016)-

A3C (Jun 2016) UNREAL (Nov 2016)ACER (Nov 2016)The Reactor (Apr 2017)- NoisyNet (Jun 2017)

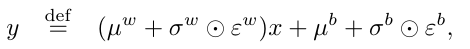

Noisy networks for exploration (Jun 2017)

Exploration

DQN - epsilon greedy

A3C - entropy loss over action space

NoisyNet- induce parametric noise by replacing the final linear layer in value network or policy network with noisy layer

noise random variables

Reference

- Mnih, Volodymyr, et al. "Human-level control through deep reinforcement learning." Nature 518.7540 (2015): 529-533.

- Van Hasselt, Hado, Arthur Guez, and David Silver. "Deep Reinforcement Learning with Double Q-Learning." AAAI. 2016.

- Schaul, Tom, et al. "Prioritized experience replay." arXiv preprint arXiv:1511.05952 (2015).

- Wang, Ziyu, et al. "Dueling network architectures for deep reinforcement learning." arXiv preprint arXiv:1511.06581 (2015).

- Osband, Ian, et al. "Deep exploration via bootstrapped DQN." Advances in Neural Information Processing Systems. 2016.

- Lillicrap, Timothy P., et al. "Continuous control with deep reinforcement learning." arXiv preprint arXiv:1509.02971 (2015).

- Mnih, Volodymyr, et al. "Asynchronous methods for deep reinforcement learning." International Conference on Machine Learning. 2016.

Reference

- Jaderberg, Max, et al. "Reinforcement learning with unsupervised auxiliary tasks." arXiv preprint arXiv:1611.05397 (2016).

- Wang, Ziyu, et al. "Sample efficient actor-critic with experience replay." arXiv preprint arXiv:1611.01224 (2016).

- Gruslys, Audrunas, et al. "The Reactor: A Sample-Efficient Actor-Critic Architecture." arXiv preprint arXiv:1704.04651 (2017).

- Fortunato, Meire, et al. "Noisy Networks for Exploration." arXiv preprint arXiv:1706.10295 (2017).

Thanks to Richard Sutton's book and David Silver's slides.

Thank you

deep reinforcemnt learning

By ligh1994

deep reinforcemnt learning

- 728