Krzysztof Borowski

Akka Paint - requirements

- Simple

- Real time changes

- Multiuser

- Scalable

Simple

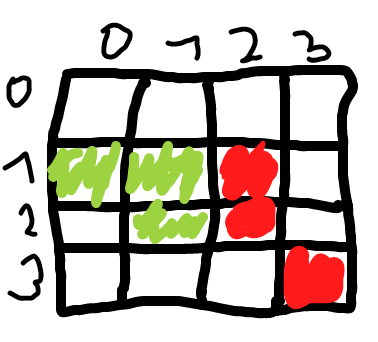

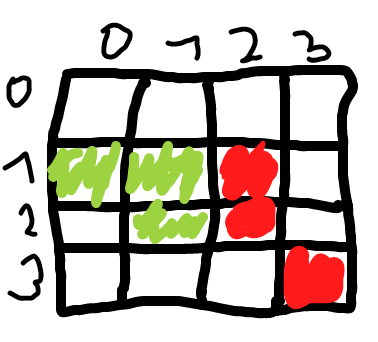

case class Pixel(x: Int, y: Int)

case class DrawEvent(

changes: Seq[Pixel],

color: String

)

//Board state

type Color = String

var drawballBoard = Map.empty[Pixel, Color]

Changes as events

Simple

class DrawballActorSimple() extends PersistentActor {

var drawballBoard = Map.empty[Pixel, String]

override def persistenceId: String = "drawballActor"

override def receiveRecover: Receive = {

case d: DrawEvent => updateState(d)

}

override def receiveCommand: Receive = {

case Draw(changes, color) =>

persistAsync(DrawEvent(changes, color)) { de =>

updateState(de)

}

}

private def updateState(drawEvent: DrawEvent) = {

drawballBoard = drawEvent.changes.foldLeft(drawballBoard) {

case (newBoard, pixel) =>

newBoard.updated(pixel, drawEvent.color)

}

}

}
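The recovery path of the actor above can be read as a plain left fold over the journal. The following is a minimal sketch without any Akka dependency; `ReplaySketch`, `applyEvent` and `replay` are illustrative names, not part of the original code.

```scala
// Plain-Scala sketch of event replay; no Akka involved.
object ReplaySketch {
  final case class Pixel(x: Int, y: Int)
  final case class DrawEvent(changes: Seq[Pixel], color: String)

  type Board = Map[Pixel, String]

  // Same fold as updateState on the slide, written as a pure function.
  def applyEvent(board: Board, event: DrawEvent): Board =
    event.changes.foldLeft(board) { (acc, pixel) => acc.updated(pixel, event.color) }

  // Recovery is a left fold of the journal over an empty board.
  def replay(journal: Seq[DrawEvent]): Board =
    journal.foldLeft(Map.empty[Pixel, String])(applyEvent)

  def main(args: Array[String]): Unit = {
    val journal = Seq(
      DrawEvent(Seq(Pixel(0, 0), Pixel(1, 0)), "red"),
      DrawEvent(Seq(Pixel(1, 0)), "blue") // a later event overwrites the pixel
    )
    println(replay(journal))
  }
}
```

Because the board is derived only from events, replaying the same journal always yields the same board, which is what `receiveRecover` relies on.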

Board as a Persistent Actor

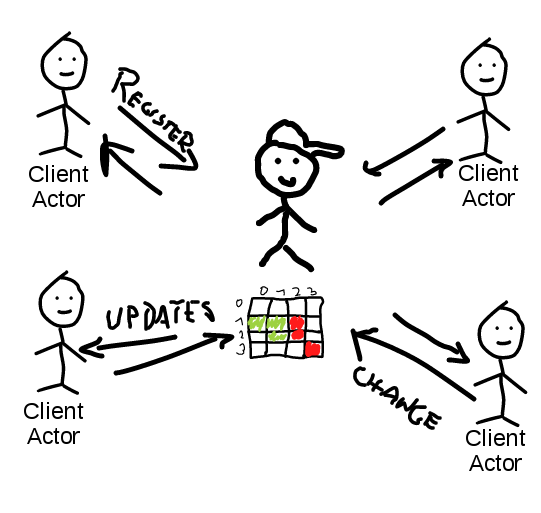

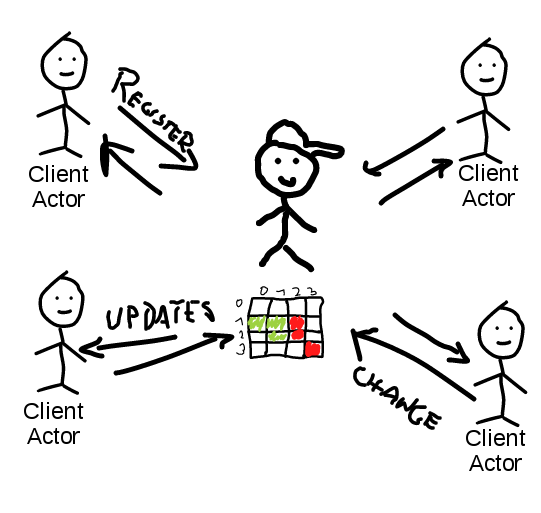

Multiuser

Multiuser and real time

class DrawballActor() extends PersistentActor {

var drawballBoard = Map.empty[Pixel, String]

var registeredClients = Set.empty[ActorRef]

override def persistenceId: String = "drawballActor"

override def receiveRecover: Receive = {

case d: DrawEvent => updateState(d)

}

override def receiveCommand: Receive = {

case Draw(changes, color) =>

persistAsync(DrawEvent(changes, color)) { de =>

updateState(de)

(registeredClients - sender())

.foreach(_ ! Changes(de.changes, de.color))

}

case r: RegisterClient => {

registeredClients = registeredClients + r.client

convertBoardToUpdates(drawballBoard, Changes.apply)

.foreach(r.client ! _)

}

case ur: UnregisterClient => {

registeredClients = registeredClients - ur.client

}

}

private def updateState(drawEvent: DrawEvent) = {

...

}

}
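`convertBoardToUpdates` is used above but not shown on the slides. One plausible shape, offered purely as an assumption, groups the board by color so that a newly registered client receives one message per color instead of one per pixel.

```scala
// Hypothetical reconstruction of convertBoardToUpdates; the real helper is not shown.
object BoardToUpdatesSketch {
  final case class Pixel(x: Int, y: Int)
  final case class Changes(changes: Seq[Pixel], color: String)

  // Builds one update per color present on the board.
  def convertBoardToUpdates[T](
      board: Map[Pixel, String],
      build: (Seq[Pixel], String) => T
  ): Iterable[T] =
    board.groupBy { case (_, color) => color }.map {
      case (color, pixels) => build(pixels.keys.toSeq, color)
    }

  def main(args: Array[String]): Unit = {
    val board = Map(Pixel(0, 0) -> "red", Pixel(1, 0) -> "red", Pixel(2, 2) -> "blue")
    convertBoardToUpdates(board, Changes.apply).foreach(println)
  }
}
```

Passing the constructor as a function lets the same helper produce `Changes` for clients and `DrawEvent` for snapshots, which matches how it is called on the slides.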

Multiuser and real time

class ClientConnectionSimple(

browser: ActorRef,

drawBoardActor: ActorRef

) extends Actor {

drawBoardActor ! RegisterClient(self)

override def receive: Receive = {

case d: Draw =>

drawBoardActor ! d

case c: Changes =>

browser ! c

}

override def postStop(): Unit = {

drawBoardActor ! UnregisterClient(self)

}

}

//Play! controller

def socket = WebSocket.accept[Draw, Changes](requestHeader => {

ActorFlow.actorRef[Draw, Changes](browser =>

ClientConnection.props(

browser,

drawballActor

))

})

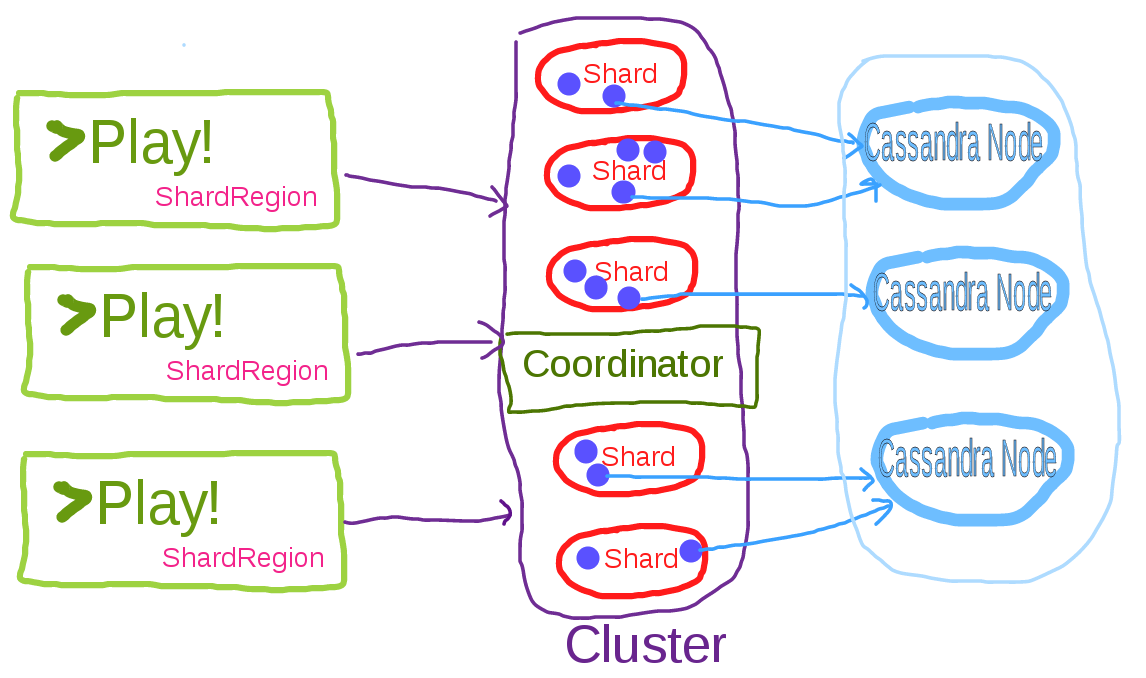

Scalable

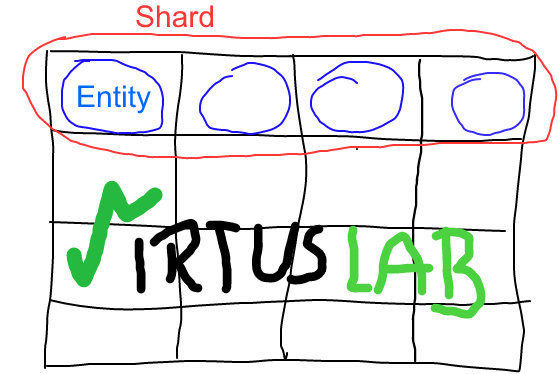

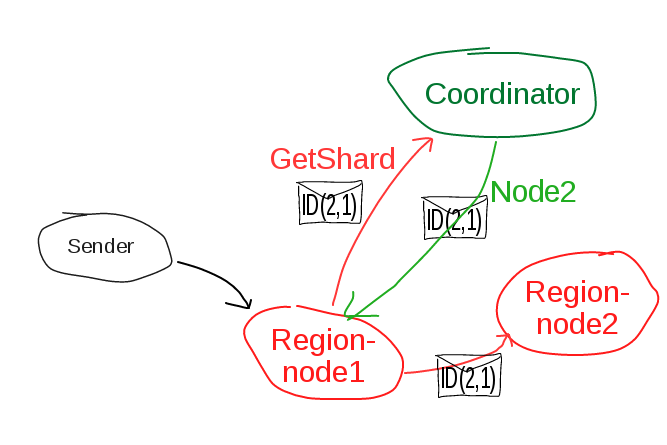

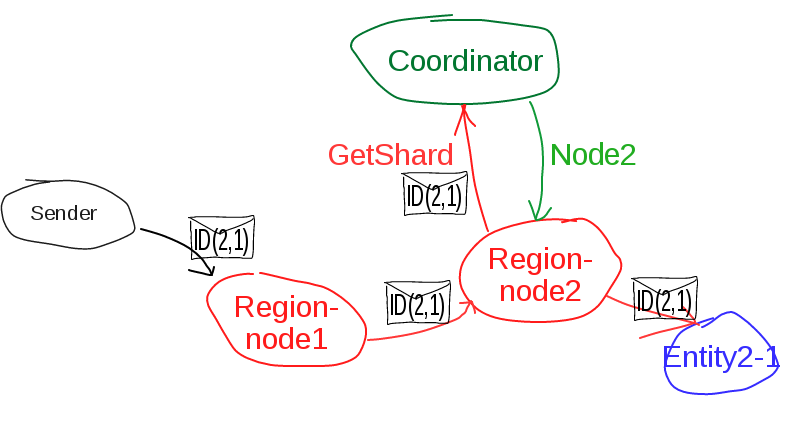

Akka Sharding

Akka Sharding to the rescue!

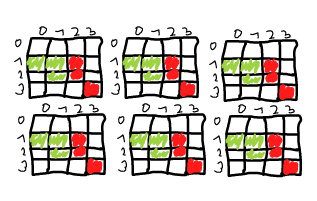

Sharding

def shardingPixels(changes: Iterable[Pixel], color: String): Iterable[DrawEntity] = {

changes.groupBy { pixel =>

(pixel.y / entitySize, pixel.x / entitySize)

}.map {

case ((shardId, entityId), pixels) =>

DrawEntity(shardId, entityId, pixels.toSeq, color)

}

}
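To make the grouping concrete, here is a small worked example of the function above on a stroke that crosses an entity boundary. The value `entitySize = 100` and the `Int` field types of `DrawEntity` are assumptions; the slides do not show them.

```scala
// Worked example of shardingPixels; entitySize is an assumed value.
object ShardingSketch {
  final case class Pixel(x: Int, y: Int)
  final case class DrawEntity(shardId: Int, entityId: Int, pixels: Seq[Pixel], color: String)

  val entitySize = 100

  // Rows of the board map to shards, columns to entities within a shard.
  def shardingPixels(changes: Iterable[Pixel], color: String): Iterable[DrawEntity] =
    changes.groupBy { pixel =>
      (pixel.y / entitySize, pixel.x / entitySize)
    }.map { case ((shardId, entityId), pixels) =>
      DrawEntity(shardId, entityId, pixels.toSeq, color)
    }

  def main(args: Array[String]): Unit = {
    // A horizontal stroke crossing the boundary at x = 100.
    val stroke = Seq(Pixel(95, 10), Pixel(99, 10), Pixel(100, 10), Pixel(105, 10))
    shardingPixels(stroke, "black").foreach(println)
  }
}
```

A single `Draw` from the browser is therefore split into one `DrawEntity` message per board tile it touches, and each message is routed independently.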

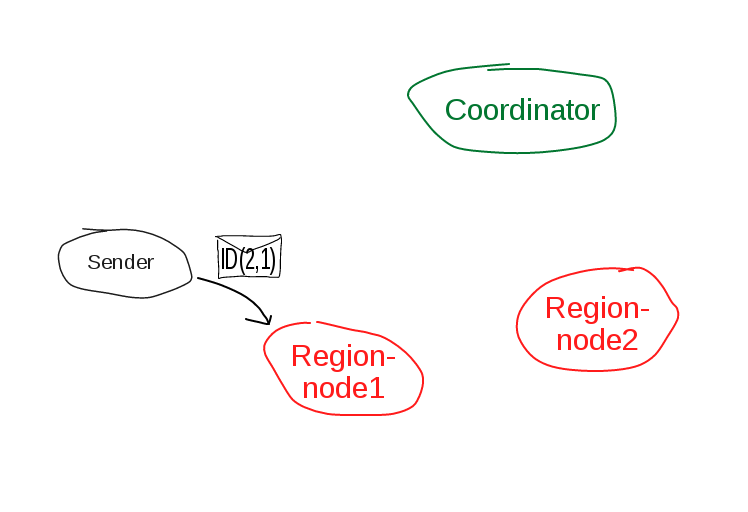

private val extractEntityId: ShardRegion.ExtractEntityId = {

case DrawEntity(_, entityId, pixels, color) ⇒

(entityId.toString, Draw(pixels, color))

case ShardingRegister(_, entityId, client) ⇒

(entityId.toString, RegisterClient(Serialization.serializedActorPath(client)))

case ShardingUnregister(_, entityId, client) ⇒

(entityId.toString, UnregisterClient(Serialization.serializedActorPath(client)))

}

private val extractShardId: ShardRegion.ExtractShardId = {

case DrawEntity(shardId, _, _, _) ⇒

shardId.toString

case ShardingRegister(shardId, _, _) ⇒

shardId.toString

case ShardingUnregister(shardId, _, _) ⇒

shardId.toString

}

Sharding Cluster

def initializeCluster(): ActorSystem = {

// Create an Akka system

val system = ActorSystem("DrawballSystem")

ClusterSharding(system).start(

typeName = entityName,

entityProps = Props[DrawballActor],

settings = ClusterShardingSettings(system),

extractEntityId = extractEntityId,

extractShardId = extractShardId

)

system

}

def shardRegion()(implicit actorSystem: ActorSystem) = {

ClusterSharding(actorSystem).shardRegion(entityName)

}

Scaling - architecture

Akka - snapshotting

override def receiveRecover: Receive = {

...

case SnapshotOffer(_, snapshot: DrawSnapshot) => {

snapshot.changes.foreach(updateState)

snapshot.clients.foreach(c => registerClient(RegisterClient(c)))

}

case RecoveryCompleted => {

registeredClients.foreach(c => c ! ReRegisterClient())

registeredClients = Set.empty

}

}

override def receiveCommand: Receive = {

case Draw(changes, color) =>

persistAsync(DrawEvent(changes, color)) { de =>

updateState(de)

changesNumber += 1

if (changesNumber > 1000) {

changesNumber = 0

self ! "snap"

}

(registeredClients - sender())

.foreach(_ ! Changes(de.changes, de.color))

}

case "snap" => saveSnapshot(DrawSnapshot(

convertBoardToUpdates(drawballBoard, DrawEvent.apply).toSeq,

registeredClients.map(Serialization.serializedActorPath).toSeq

))

...

}
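The idea behind the snapshot is that a compacted board plus the events persisted after it must recover to the same state as a full replay. The sketch below checks this with plain collections; `snapshotOf` is a hypothetical stand-in for `convertBoardToUpdates(drawballBoard, DrawEvent.apply)`, and client registration is left out.

```scala
// Plain-Scala sketch: snapshot + tail events versus full replay.
object SnapshotSketch {
  final case class Pixel(x: Int, y: Int)
  final case class DrawEvent(changes: Seq[Pixel], color: String)
  type Board = Map[Pixel, String]

  def applyEvent(board: Board, e: DrawEvent): Board =
    e.changes.foldLeft(board)((acc, p) => acc.updated(p, e.color))

  def replay(start: Board, events: Seq[DrawEvent]): Board =
    events.foldLeft(start)(applyEvent)

  // Hypothetical compaction: the whole board as one DrawEvent per color.
  def snapshotOf(board: Board): Seq[DrawEvent] =
    board.groupBy { case (_, color) => color }
      .map { case (color, pixels) => DrawEvent(pixels.keys.toSeq, color) }
      .toSeq

  def main(args: Array[String]): Unit = {
    val journal = Seq(
      DrawEvent(Seq(Pixel(0, 0), Pixel(1, 1)), "red"),
      DrawEvent(Seq(Pixel(1, 1)), "blue"),
      DrawEvent(Seq(Pixel(2, 2)), "red")
    )
    val (old, tail) = journal.splitAt(2)
    val empty: Board = Map.empty
    val viaSnapshot = replay(replay(empty, snapshotOf(replay(empty, old))), tail)
    println(viaSnapshot == replay(empty, journal))
  }
}
```

The snapshot events touch disjoint pixels, so the order in which they are applied does not matter; only the tail events need to keep their original order.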

DEMO

AkkaPaint

History feature

Requirements

- Look into history

- Real time

- Accumulated changes from different periods of time

General Idea

- Read every persisted event

- Aggregate events within different time periods

- Generate and save image

- Serve the history independently from AkkaPaint main application

General Idea - flow

akka-streams

Akka Streams provides a way to express and run a chain of asynchronous processing steps acting on a sequence of elements.

akka-streams

implicit val system = ActorSystem()

implicit val materializer = ActorMaterializer()

val source = Source(1 to 10)

source

.map(_ + 1)

.runWith(Sink.foreach(println))

val sink = Sink.fold[Int, Int](0)(_ + _)

val sum: Future[Int] = source.runWith(sink)

akka-streams

val writeAuthors: Sink[Author, NotUsed] = ???

val writeHashtags: Sink[Hashtag, NotUsed] = ???

val g = RunnableGraph.fromGraph(GraphDSL.create() { implicit b =>

import GraphDSL.Implicits._

val bcast = b.add(Broadcast[Tweet](2))

tweets ~> bcast.in

bcast.out(0) ~> Flow[Tweet].map(_.author) ~> writeAuthors

bcast.out(1) ~> Flow[Tweet].mapConcat(_.hashtags.toList) ~> writeHashtags

ClosedShape

})

g.run()

Starting point - source

private val readJournal =

PersistenceQuery(context.system).readJournalFor[CassandraReadJournal](

CassandraReadJournal.Identifier

)

def originalEventStream(firstOffset: UUID):

Source[(DateTime, DrawEvent, TimeBasedUUID), NotUsed] =

readJournal

.eventsByTag("draw_event", TimeBasedUUID(firstOffset))

.map {

case EventEnvelope(offset @ TimeBasedUUID(time), _, _, d: DrawEvent) =>

val timestamp = new DateTime(UUIDToDate.getTimeFromUUID(time))

(timestamp, d, offset)

}

Generate image

def generate(timeSlot: ReadablePeriod) =

Flow[(DateTime, DrawEvent, Offset)].scan(

ImageUpdate(defaultDate, newDefaultImage(), offset = Offset.noOffset)

) {

case (

ImageUpdate(lastEmitDateTime, image, _, _),

(datetime, d: DrawEvent, offset)

) =>

updateImageAndEmit(image, offset, lastEmitDateTime, datetime, d, timeSlot)

}

.collect { case ImageUpdate(_, _, _, Some(emit)) => emit }
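The scan-then-collect pattern above can be tried out on an ordinary `Seq`, since `scanLeft` behaves like `Flow.scan` for a finite input. This is a sketch under assumptions: `step` is a hypothetical stand-in for `updateImageAndEmit`, the image is modeled as a `Map`, offsets are omitted, and `java.time` replaces Joda-Time to keep the example dependency-free.

```scala
import java.time.{Duration, Instant}

// Plain-collections sketch of "accumulate, and emit once per time slot".
object ScanEmitSketch {
  final case class Pixel(x: Int, y: Int)
  final case class DrawEvent(changes: Seq[Pixel], color: String)
  type Image = Map[Pixel, String]

  // Accumulator: time of the last emission, running image, optional emission.
  final case class ImageUpdate(lastEmit: Instant, image: Image, emit: Option[(Instant, Image)])

  // Applies the event, then emits the accumulated image if at least
  // `slot` has passed since the last emission.
  def step(slot: Duration)(acc: ImageUpdate, in: (Instant, DrawEvent)): ImageUpdate = {
    val (time, event) = in
    val image = event.changes.foldLeft(acc.image)((img, p) => img.updated(p, event.color))
    if (Duration.between(acc.lastEmit, time).compareTo(slot) >= 0)
      ImageUpdate(time, image, Some((time, image)))
    else
      ImageUpdate(acc.lastEmit, image, None)
  }

  def generate(slot: Duration, start: Instant, events: Seq[(Instant, DrawEvent)]): Seq[(Instant, Image)] = {
    val zero = ImageUpdate(start, Map.empty[Pixel, String], None)
    events
      .scanLeft(zero)((acc, in) => step(slot)(acc, in))
      .collect { case ImageUpdate(_, _, Some(emit)) => emit }
  }

  def main(args: Array[String]): Unit = {
    val t0 = Instant.parse("2017-01-01T00:00:00Z")
    val events = Seq(10, 30, 61, 70, 125).zipWithIndex.map { case (sec, i) =>
      (t0.plusSeconds(sec.toLong), DrawEvent(Seq(Pixel(i, 0)), "red"))
    }
    generate(Duration.ofMinutes(1), t0, events).foreach(println)
  }
}
```

Keeping the emission inside the accumulator as an `Option` is what allows the final `collect` to drop every intermediate state that did not close a time slot.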

Save image

object CassandraFlow {

def apply[T](

parallelism: Int,

statement: PreparedStatement,

statementBinder: (T, PreparedStatement) => BoundStatement

)(implicit session: Session, ex: ExecutionContext): Flow[T, T, NotUsed] =

Flow[T]

.mapAsyncUnordered(parallelism) { t =>

session.executeAsync(statementBinder(t, statement)).asScala().map(_ => t)

}

}

Image per minute sink

override def apply(commitOffset: (Offset) => Unit)

: Sink[(DateTime, DrawEvent, Offset), NotUsed] = {

ImageAggregationFlowFactory().generate(org.joda.time.Minutes.ONE)

.via(flowForImagePerMinute)

.via(flowForSaveChangesList)

.to(Sink.foreach(t => commitOffset(t.offset)))

}

Projection skeleton

case class AkkaPaintDrawEventProjection(

persistenceId: String,

generateConsumer: (Offset => Unit) =>

Sink[(DateTime, DrawEvent, Offset), NotUsed]

) extends PersistentActor {

var lastOffset = readJournal.firstOffset

override def receiveCommand: Receive = {

case Start => startProjection()

case SaveNewOffset(offset) => persist(NewOffsetSaved(offset)) { e =>

lastOffset = UUID.fromString(offset)

}

}

override def receiveRecover: Receive = {

case NewOffsetSaved(offset) => {

lastOffset = UUID.fromString(offset)

}

case RecoveryCompleted => {

self ! Start

}

}

def startProjection() = {

...

}

}
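The point of persisting the offset is that a restarted projection continues where it stopped instead of reprocessing the whole journal. A minimal sketch of that bookkeeping follows, with `Long` offsets instead of time-based UUIDs and with all names (`Envelope`, `recoverOffset`, `remaining`) being illustrative. Treating the saved offset as exclusive is an assumption of the sketch, not a statement about the Cassandra read journal.

```scala
// Plain-Scala sketch of resuming a projection from the last saved offset.
object OffsetResumeSketch {
  final case class Envelope(offset: Long, payload: String)
  final case class NewOffsetSaved(offset: Long)

  // Recovery: the most recently persisted offset wins; None means "start from scratch".
  def recoverOffset(saved: Seq[NewOffsetSaved]): Option[Long] =
    saved.lastOption.map(_.offset)

  // Events still to be processed, strictly after the recovered offset.
  def remaining(journal: Seq[Envelope], from: Option[Long]): Seq[Envelope] =
    from match {
      case Some(last) => journal.filter(_.offset > last)
      case None       => journal
    }

  def main(args: Array[String]): Unit = {
    val journal = (1L to 5L).map(i => Envelope(i, s"event-$i"))
    val saved = Seq(NewOffsetSaved(1L), NewOffsetSaved(3L))
    remaining(journal, recoverOffset(saved)).foreach(println)
  }
}
```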

Cluster Singleton

akkaPaintHistorySystem.actorOf(

ClusterSingletonManager.props(

singletonProps = Props(AkkaPaintDrawEventProjection(

persistenceId = "PerMinuteHistory",

akkaPaintHistoryConfig = akkaPaintHistoryConfig,

generateConsumer = FlowForImagePerMinute()

)),

terminationMessage = PoisonPill,

settings = ClusterSingletonManagerSettings(akkaPaintHistorySystem)

),

name = "PicturePerMinuteGenerator"

)

Cluster Singleton

// get a reference to the singleton

val historyGenerator = akkaPaintHistoryActorSystem.actorOf(

ClusterSingletonProxy.props(

singletonManagerPath = "/user/PicturePerMinuteGenerator",

settings = ClusterSingletonProxySettings(system)

),

name = "consumerProxy"

)

def regenerateHistory() = Action {

historyGenerator ! ResetProjection()

Ok(Json.toJson("Regeneration started"))

}

Tips and tricks

- Use `ClusterSharding.startProxy` on nodes that should not host any entities.

- Distributed coordinator - akka.extensions += "akka.cluster.ddata.DistributedData" (Experimental).

- Use Protocol Buffers.

- Be careful about message buffering.

- Tag events from the very beginning.

Summary:

- first working version: 275 lines of code,

- multiuser,

- scalable,

- fault tolerant,

- with extensive history insight.

Bibliography

- http://www.slideshare.net/bantonsson/akka-persistencescaladays2014

- http://doc.akka.io/docs/akka/current/scala/cluster-sharding.html

- https://github.com/trueaccord/ScalaPB

AkkaPains - KJUG

By liosedhel
