PV226 ML: Neural Networks

Content of this session

- Introduction to neural networks (NN)

- Keras

- Different types of networks and their usage

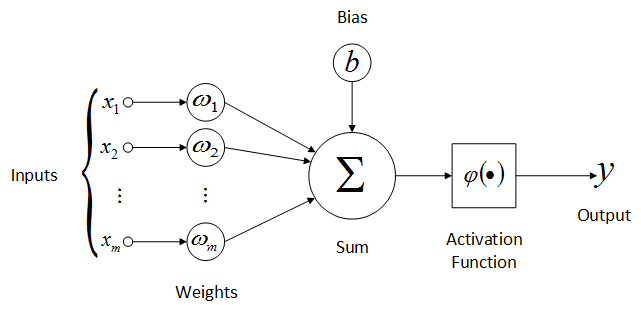

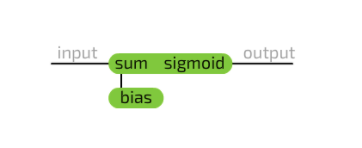

Artificial Neuron
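An artificial neuron computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function: y = f(w · x + b). The NumPy sketch below illustrates this. The helper name `neuron`, the default choice of sigmoid as f, and the input values are illustrative assumptions, not part of the slides.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def neuron(x, w, b, activation=sigmoid):
    """Hypothetical helper: output of a single artificial neuron.

    x: input vector, w: weight vector of the same length, b: scalar bias.
    """
    z = np.dot(w, x) + b      # weighted sum of the inputs plus the bias
    return activation(z)      # non-linear activation of that sum

# Made-up inputs, weights and bias
x = np.array([1.0, 2.0, 3.0])
w = np.array([0.5, -1.0, 0.25])
b = 0.5
print(neuron(x, w, b))        # sigmoid(-0.25), roughly 0.438
```

Passing a different `activation` (for example ReLU or tanh) changes only the last step; the weighted sum stays the same.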

Activation Functions

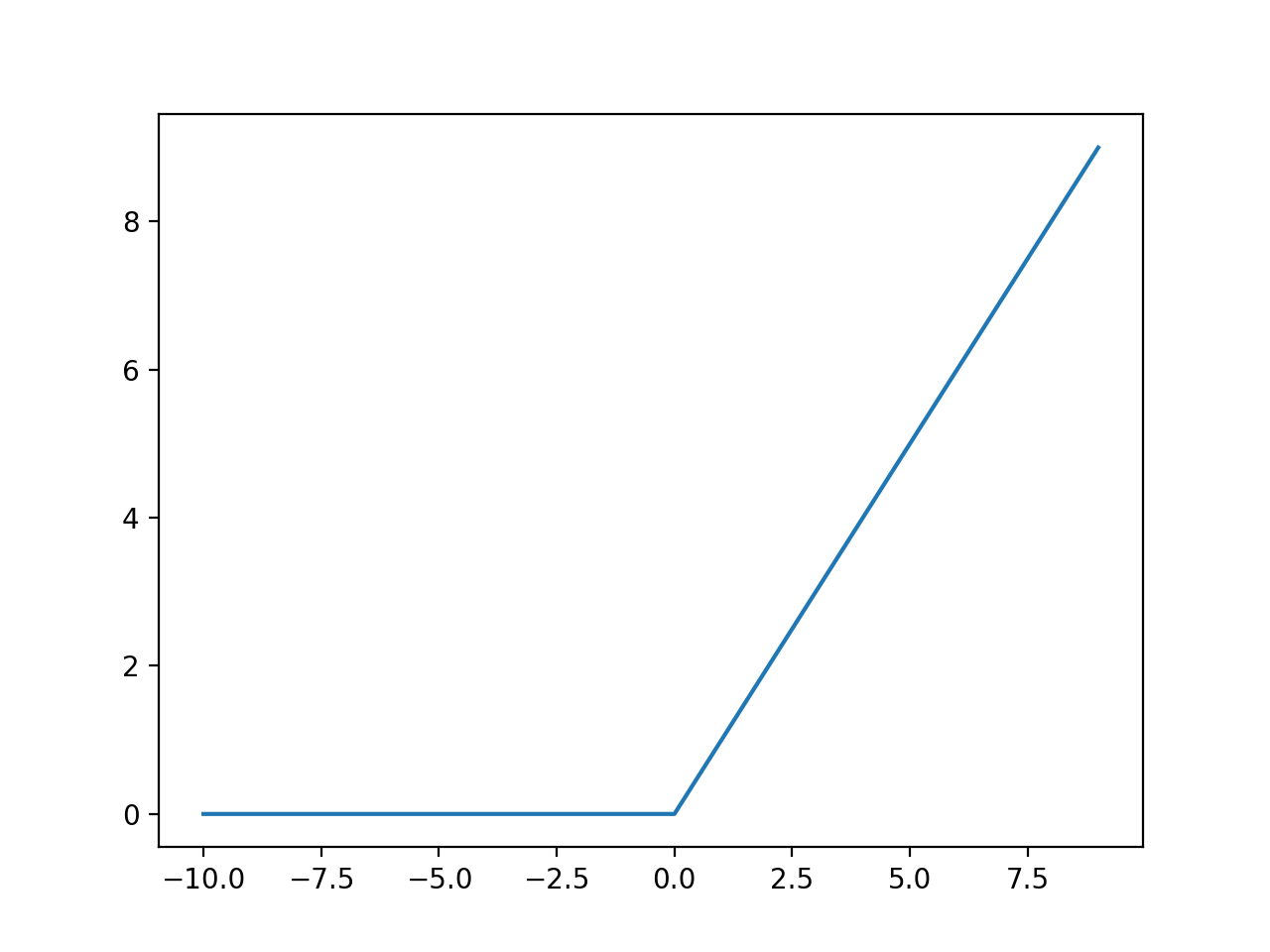

ReLU

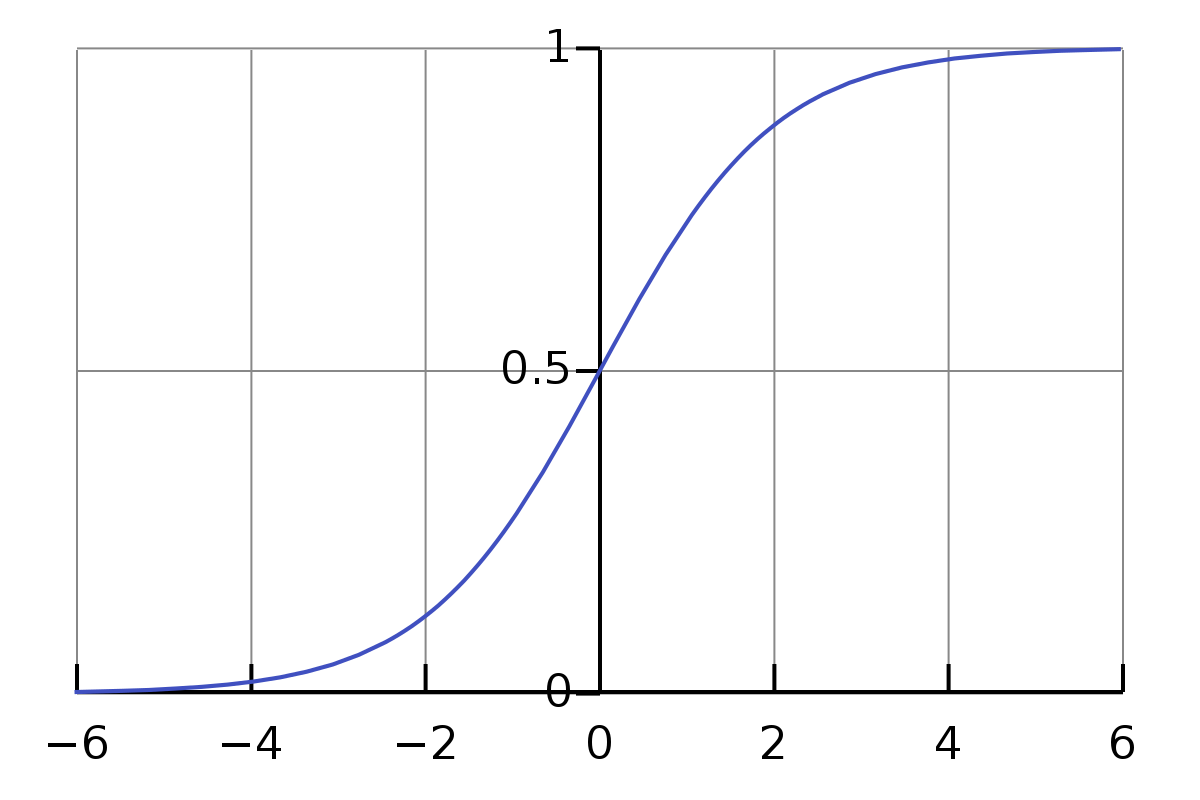
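ReLU (rectified linear unit) is defined as ReLU(z) = max(0, z): positive inputs pass through unchanged and negative inputs become 0. A minimal NumPy sketch of the function and its gradient follows; using 0 as the gradient at exactly z = 0 is a common convention and an assumption here.

```python
import numpy as np

def relu(z):
    """ReLU: keeps positive values, clips negative values to 0."""
    return np.maximum(0.0, z)

def relu_derivative(z):
    """Gradient of ReLU: 1 where z > 0, else 0 (0 chosen at z == 0 by convention)."""
    return (z > 0).astype(float)

z = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
print(relu(z))             # values: 0, 0, 0, 0.5, 2
print(relu_derivative(z))  # values: 0, 0, 0, 1, 1
```

Because the gradient is exactly 1 for every positive input, ReLU does not saturate on that side, which is one reason it is cheap and popular in hidden layers.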

Sigmoid

Sigmoid Activation Function

- Used for binary classification in the logistic regression model

- Each output is squashed into (0, 1) independently, so the probabilities do not need to sum to 1
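The sigmoid σ(z) = 1 / (1 + e^(-z)) is applied to each score on its own, which is why several sigmoid outputs do not have to sum to 1. The sketch below uses made-up logits for three independent yes/no outputs and the usual 0.5 threshold for a binary decision; both are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid applied element-wise: 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

# Made-up raw scores (logits) for three independent yes/no outputs
logits = np.array([2.0, 1.0, -1.0])
probs = sigmoid(logits)

print(probs)        # each value lies in (0, 1)
print(probs.sum())  # about 1.88 here: the outputs are not normalized

# Binary decision: predict class 1 when the probability exceeds 0.5 (i.e. z > 0)
predictions = (probs > 0.5).astype(int)
print(predictions)  # values: 1, 1, 0
```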

Softmax Activation Function

- Used for multi-class classification (multinomial logistic regression)

- The output probabilities always sum to 1
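Softmax turns a vector of scores z into a probability distribution: softmax(z)_i = e^(z_i) / Σ_j e^(z_j). Because every output is divided by the same sum, the results always add up to 1. The sketch below subtracts the maximum score before exponentiating, a standard trick that leaves the result unchanged but avoids overflow; the logits are made up.

```python
import numpy as np

def softmax(z):
    """Softmax over the last axis: scores -> probabilities that sum to 1."""
    # Subtracting the max does not change the result but keeps exp() from overflowing
    shifted = z - np.max(z, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

logits = np.array([2.0, 1.0, -1.0])   # made-up scores for 3 classes
probs = softmax(logits)

print(probs)             # the largest score gets the largest probability
print(probs.sum())       # 1.0 up to floating-point rounding
print(np.argmax(probs))  # predicted class index: 0
```

Compared with the sigmoid on the same logits, the probabilities now compete with each other: raising one score lowers the probability of every other class.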

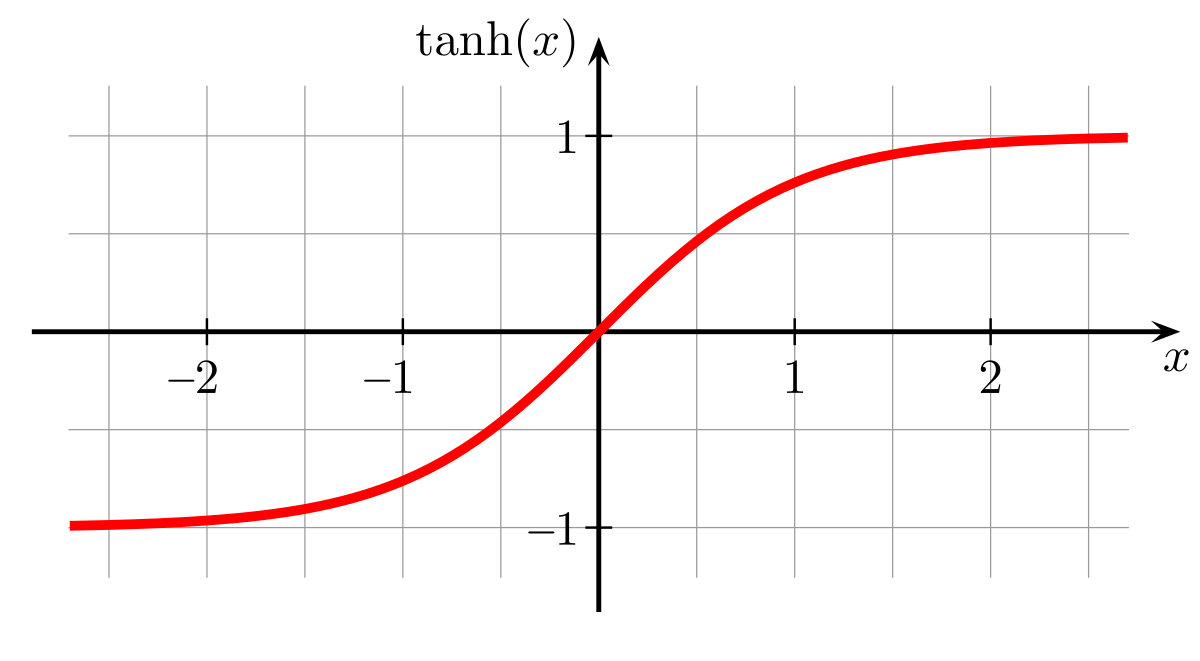

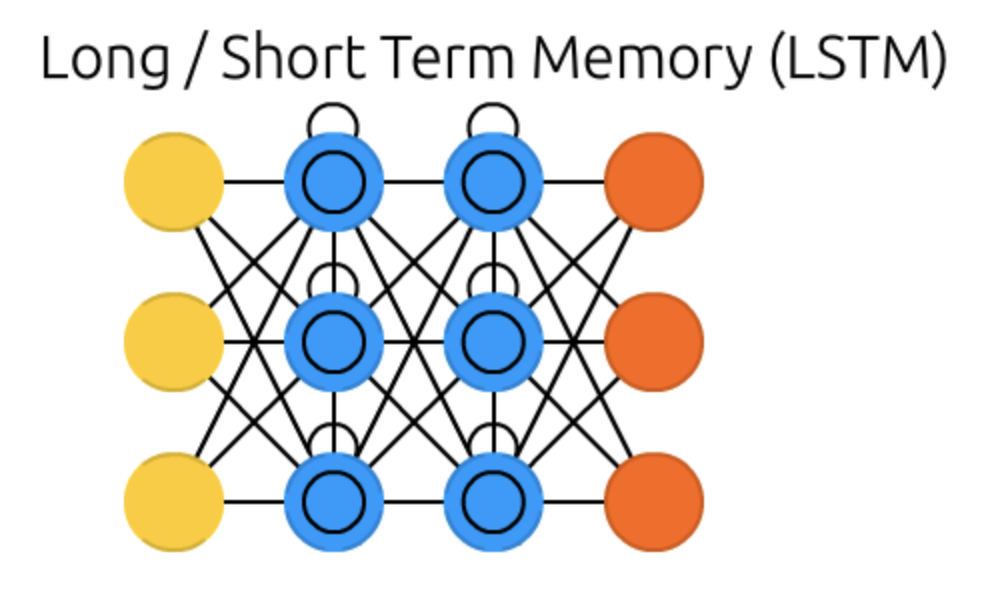

Tanh

Usage of tanh

- in convolutional layers (mostly in older architectures; ReLU is the more common choice today)

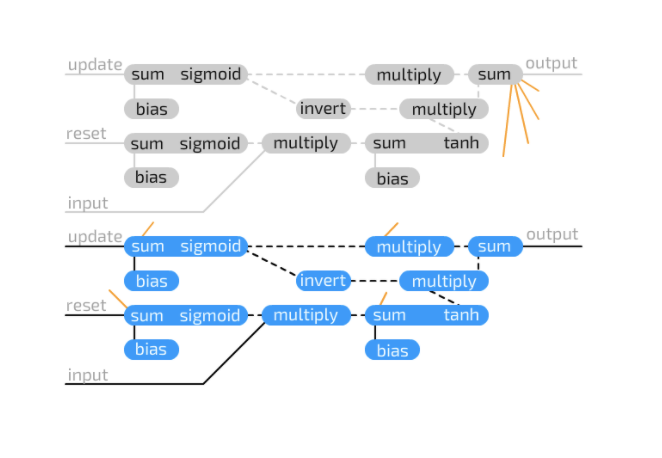

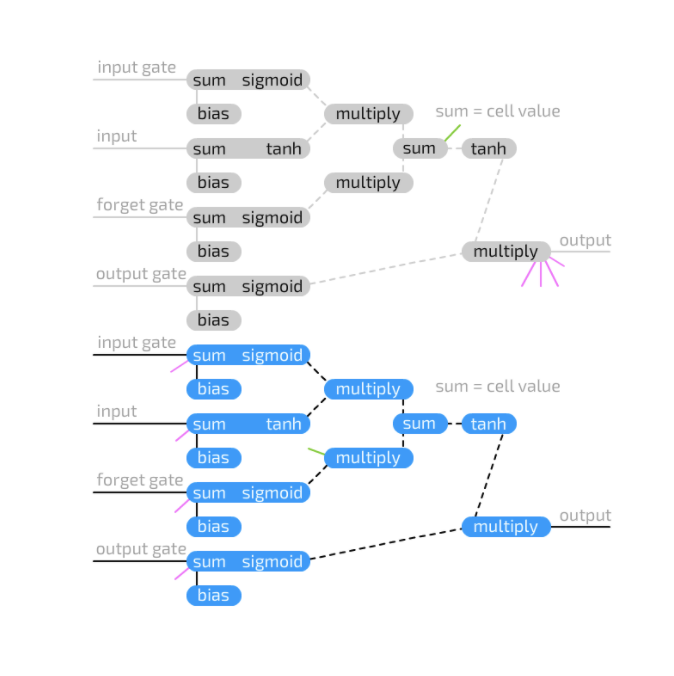

- or in LSTMs (for the candidate cell state and the hidden-state output)

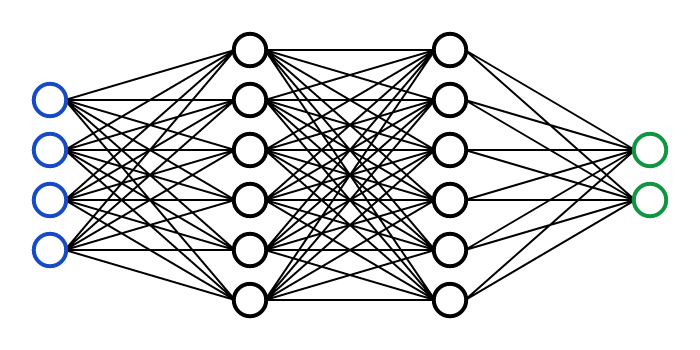
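tanh maps inputs into (-1, 1) and, unlike the sigmoid, is centred at zero. It is in fact a rescaled sigmoid: tanh(z) = 2σ(2z) - 1. The sketch checks both properties numerically and then shows the LSTM-style use h = o * tanh(c), where o is the output gate and c the cell state; the gate and cell values are made up for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

z = np.linspace(-5.0, 5.0, 11)   # symmetric grid: -5, -4, ..., 5
t = np.tanh(z)

# Bounded in (-1, 1) and symmetric around zero
print(t.min(), t.max())
# tanh is a rescaled sigmoid: tanh(z) = 2 * sigmoid(2z) - 1
print(np.allclose(t, 2.0 * sigmoid(2.0 * z) - 1.0))   # True

# LSTM-style use with made-up values: hidden state h = o * tanh(c)
c = np.array([3.0, -0.5, 0.0])   # cell state (can grow beyond [-1, 1])
o = np.array([0.9, 0.5, 1.0])    # output gate activations in (0, 1)
h = o * np.tanh(c)
print(h)                         # every entry stays within (-1, 1)
```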

Deep Neural Network
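A deep neural network stacks several layers of neurons: each layer computes activation(a W + b) on the previous layer's output a and feeds the result forward. The NumPy sketch below runs a forward pass through two ReLU hidden layers and a softmax output layer. The layer sizes, the small random weights, and the helper name `forward` are illustrative assumptions; the network is untrained.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(0.0, z)

def softmax(z):
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

# Illustrative sizes: 4 inputs -> 8 -> 8 hidden units -> 3 output classes
sizes = [4, 8, 8, 3]
# One (weights, bias) pair per layer: small random weights, zero biases
params = [
    (rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out))
    for n_in, n_out in zip(sizes[:-1], sizes[1:])
]

def forward(x, params):
    """Hypothetical helper: hidden layers use ReLU, the last layer uses softmax."""
    a = x
    for i, (W, b) in enumerate(params):
        z = a @ W + b                 # affine transform of the previous layer
        a = softmax(z) if i == len(params) - 1 else relu(z)
    return a

x = rng.normal(size=(5, 4))   # batch of 5 random input vectors
out = forward(x, params)
print(out.shape)              # (5, 3)
print(out.sum(axis=1))        # each row sums to 1
```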

Weight initialization

- random

- ones

- zeroes

- ...
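The choice of initializer matters. With a constant initialization (all zeros or all ones), every unit in a layer computes the same value and receives the same gradient update, so the units never learn different features; random initialization breaks this symmetry. In Keras the initializer is chosen per layer through the `kernel_initializer` argument, for example `"random_normal"`, `"ones"` or `"zeros"` (the `Dense` default is `"glorot_uniform"`). The NumPy sketch below only checks the symmetry effect on one hidden layer; the batch, layer size, and tanh activation are made-up assumptions.

```python
import numpy as np

rng = np.random.default_rng(42)
x = rng.normal(size=(6, 3))   # made-up batch: 6 samples, 3 features
n_hidden = 4

initializers = {
    "zeros": np.zeros((3, n_hidden)),
    "ones": np.ones((3, n_hidden)),
    "random": rng.normal(0.0, 0.1, size=(3, n_hidden)),
}

results = {}
for name, W in initializers.items():
    h = np.tanh(x @ W)                  # hidden activations, shape (6, 4)
    # True if every hidden unit (column) computes exactly the same feature
    results[name] = np.allclose(h, h[:, :1])
    print(f"{name:>6}: all hidden units identical -> {results[name]}")
```

Only the random initialization yields hidden units that differ from each other, which is why random schemes are the default in practice.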

Applications

- classification

- prediction

in domains such as:

- image recognition

- language modeling

- time series

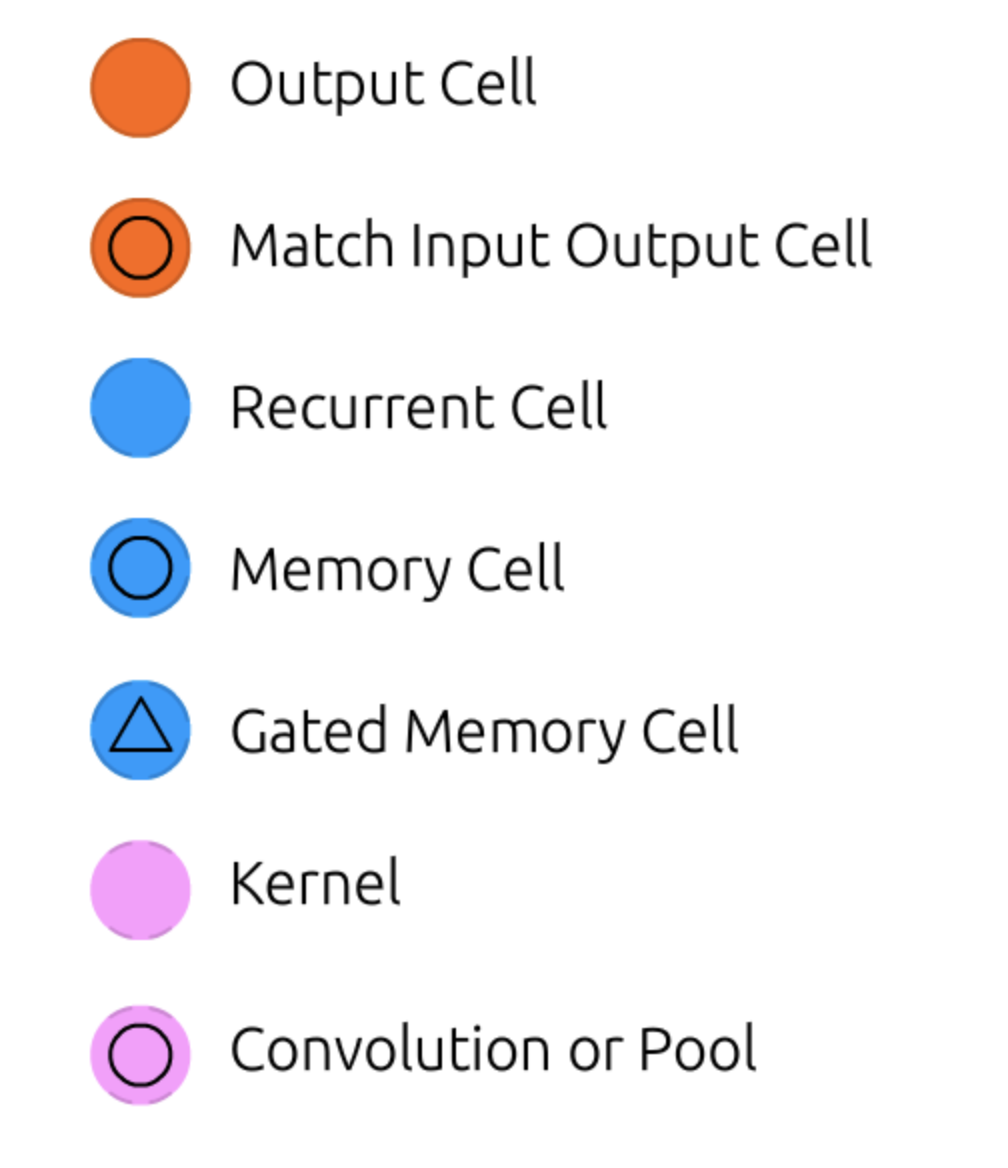

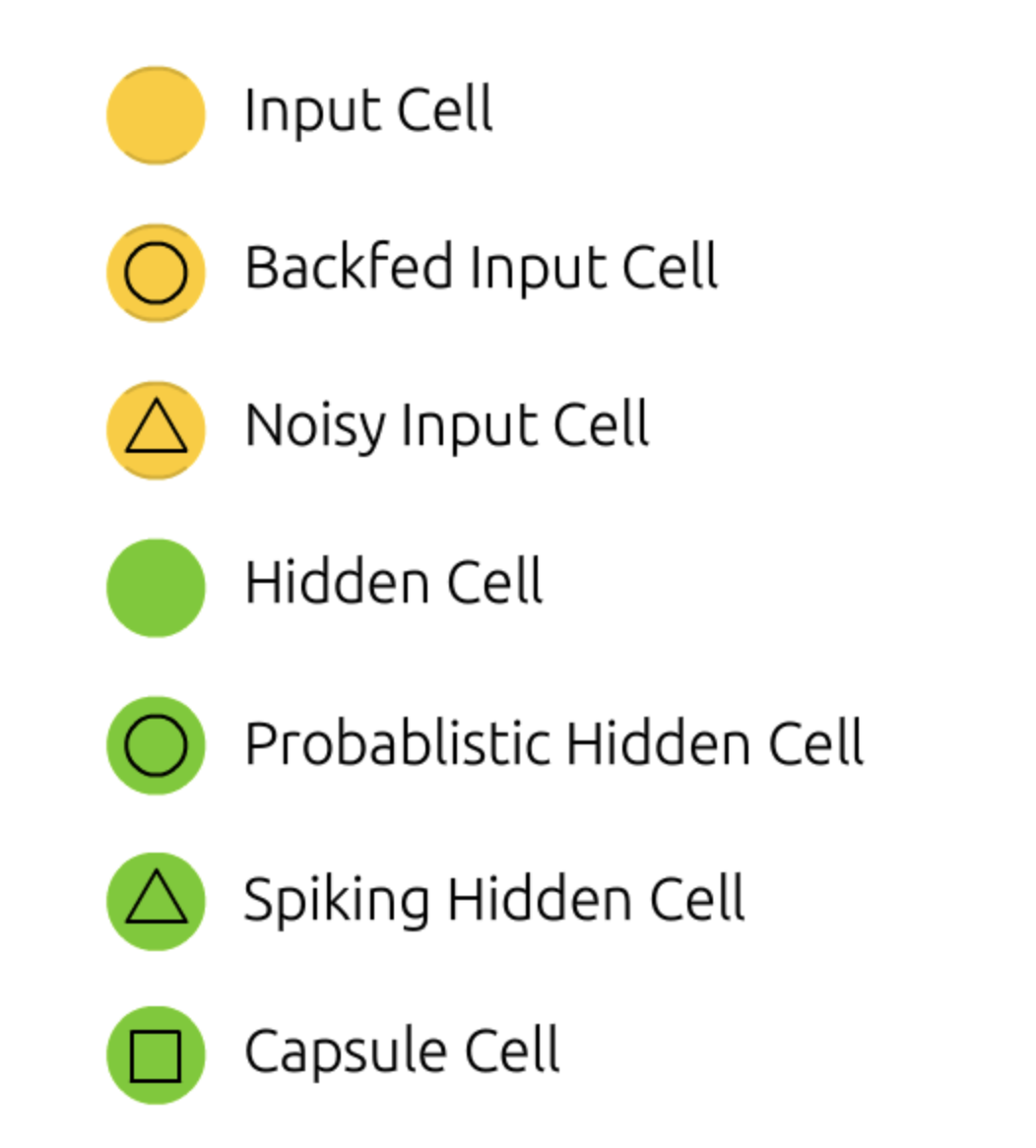

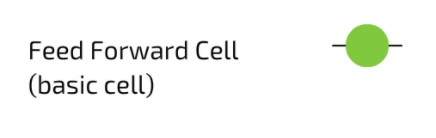

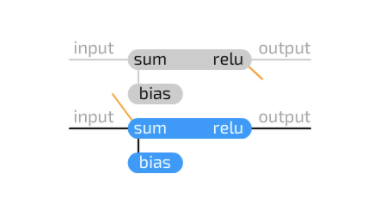

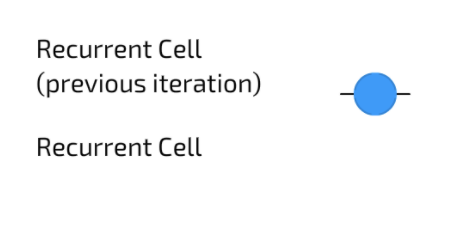

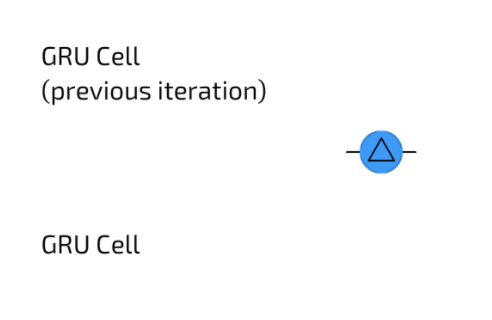

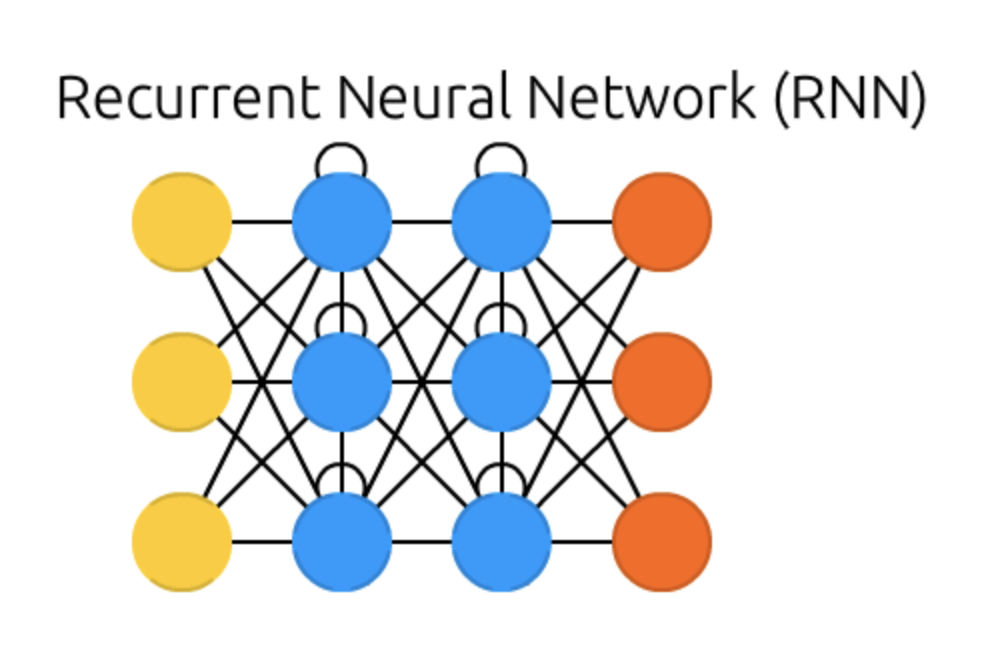

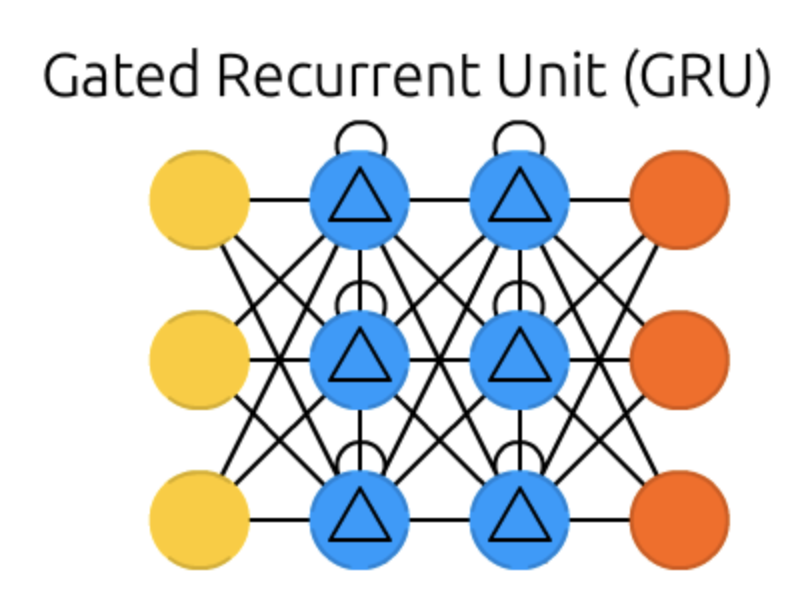

Neuron Types

From the Neural Network Zoo

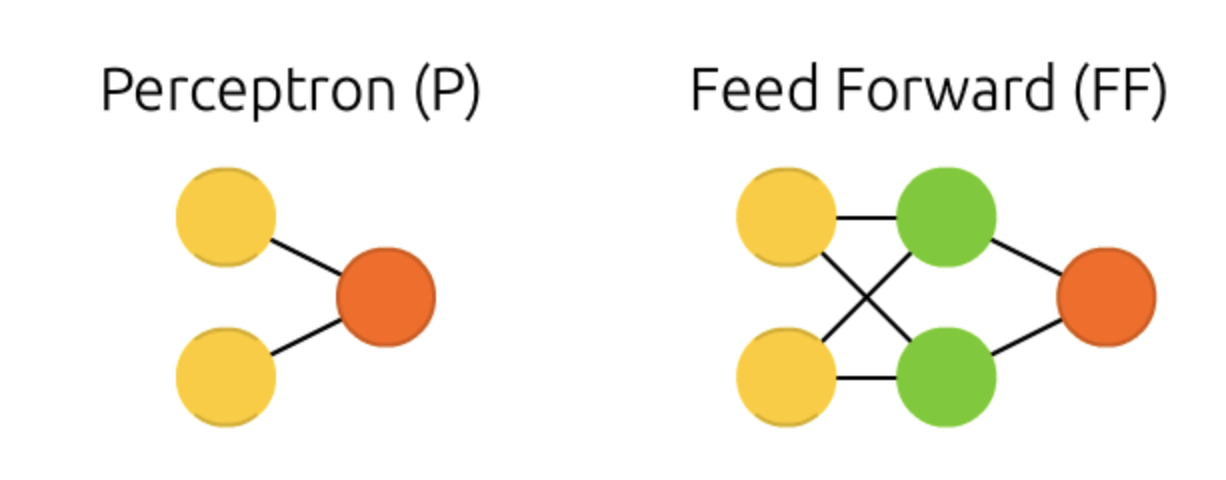

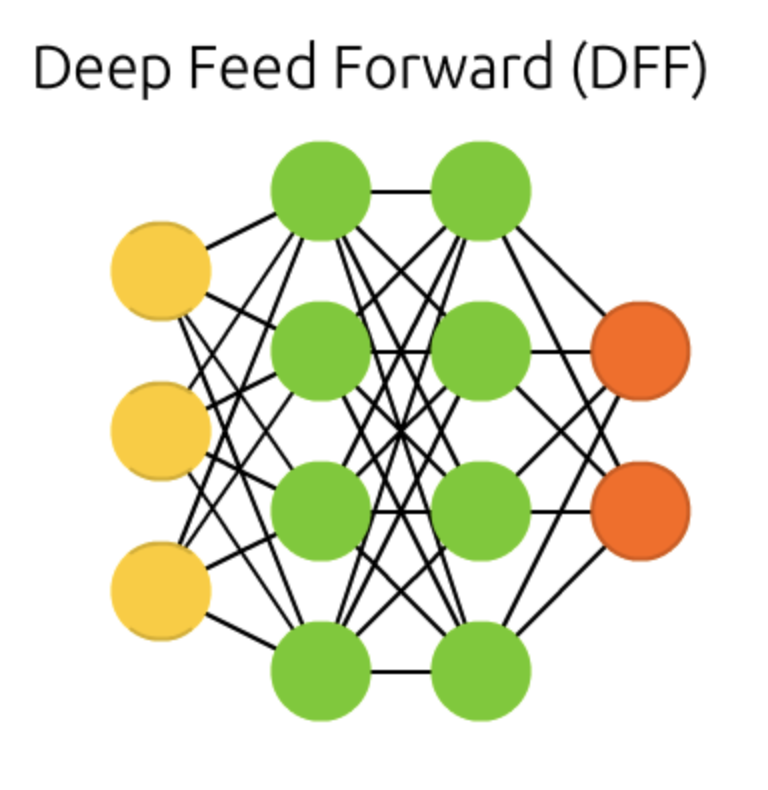

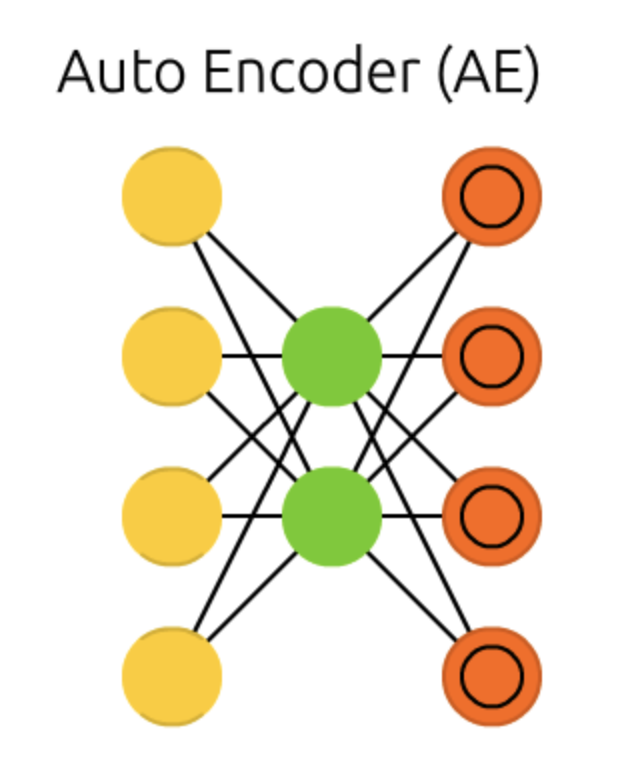

Most Basic Networks

Deep Network

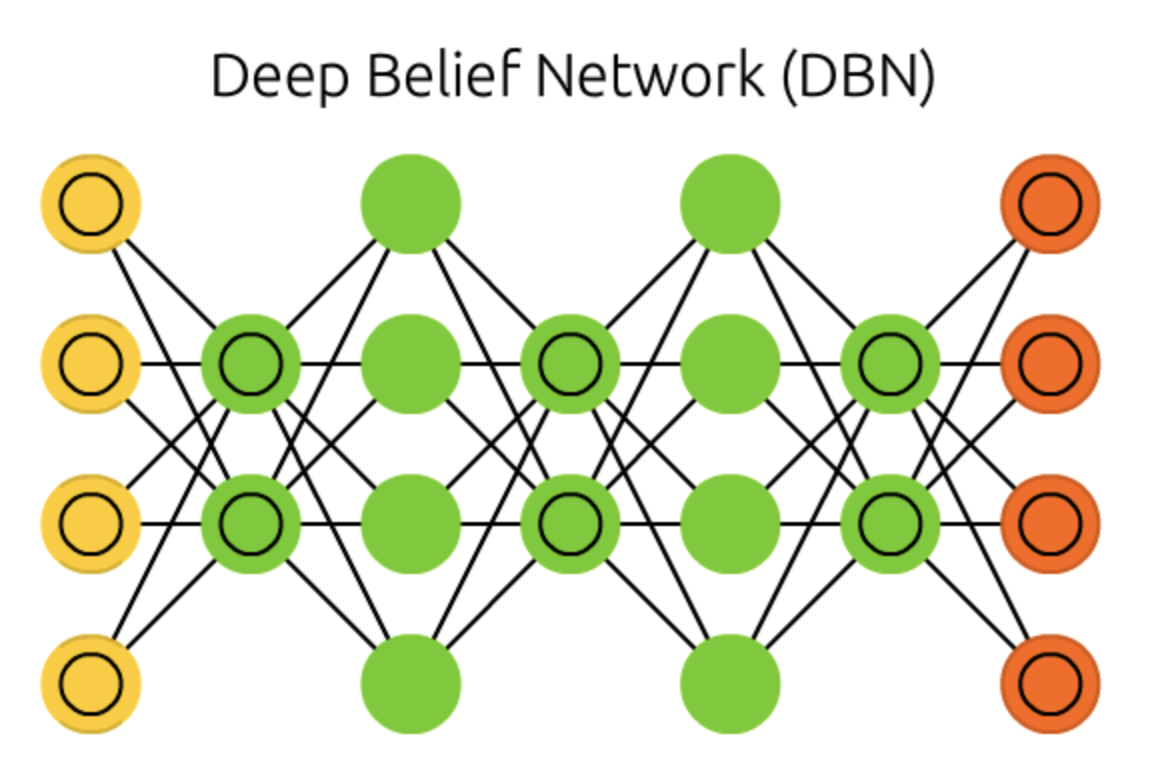

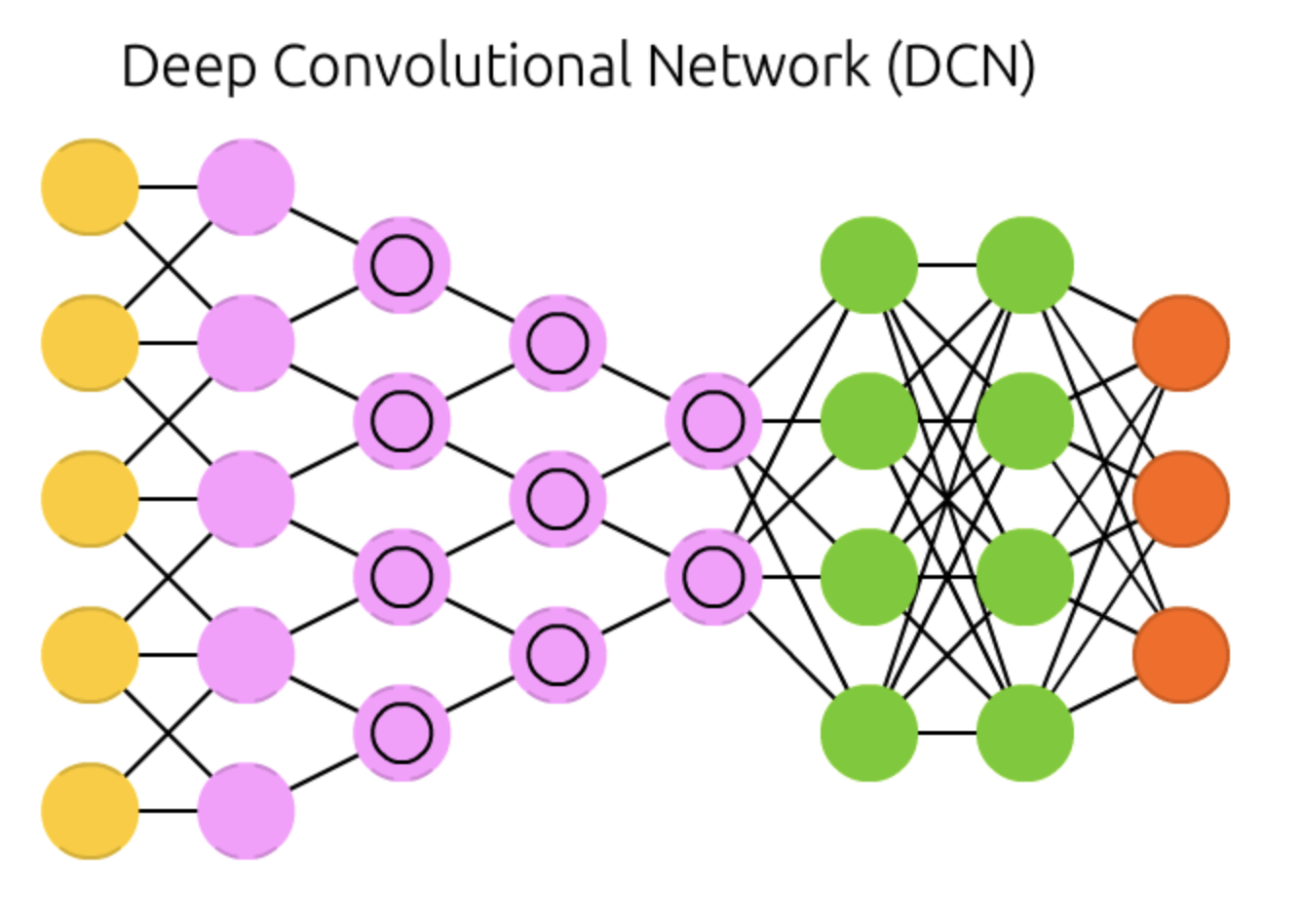

Now to the more useful networks

Keras

The high-level API of TensorFlow 2

Installation

```shell
pip install tensorflow
```

Usage

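```python
from tensorflow import keras
from tensorflow.keras import layers

# Assumption: the slide does not name the dataset; MNIST (28x28 grayscale
# digits, 10 classes) is used here so that the example runs end to end.
num_classes = 10
input_shape = (28, 28, 1)
(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()
# Scale pixels to [0, 1] and add a channel axis: (N, 28, 28) -> (N, 28, 28, 1)
x_train = x_train.astype("float32")[..., None] / 255.0
x_test = x_test.astype("float32")[..., None] / 255.0
# One-hot encode the labels, as required by categorical_crossentropy
y_train = keras.utils.to_categorical(y_train, num_classes)
y_test = keras.utils.to_categorical(y_test, num_classes)

model = keras.Sequential(
    [
        keras.Input(shape=input_shape),
        layers.Conv2D(32, kernel_size=(3, 3), activation="relu"),
        layers.MaxPooling2D(pool_size=(2, 2)),
        layers.Conv2D(64, kernel_size=(3, 3), activation="relu"),
        layers.MaxPooling2D(pool_size=(2, 2)),
        layers.Flatten(),
        layers.Dropout(0.5),  # randomly drops 50% of activations during training
        layers.Dense(num_classes, activation="softmax"),  # class probabilities
    ]
)

model.summary()

batch_size = 128
epochs = 15

model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])

model.fit(
    x_train, y_train, batch_size=batch_size,
    epochs=epochs, validation_split=0.1
)
```

Evaluation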

```python
score = model.evaluate(x_test, y_test, verbose=0)
print("Test loss:", score[0])
print("Test accuracy:", score[1])
```

Any questions?

PV226: Neural Networks

By Lukáš Grolig
