PV226 ML: Boosting

Content of this session

Where to use gradient boosting

CatBoost

Tree algorithms

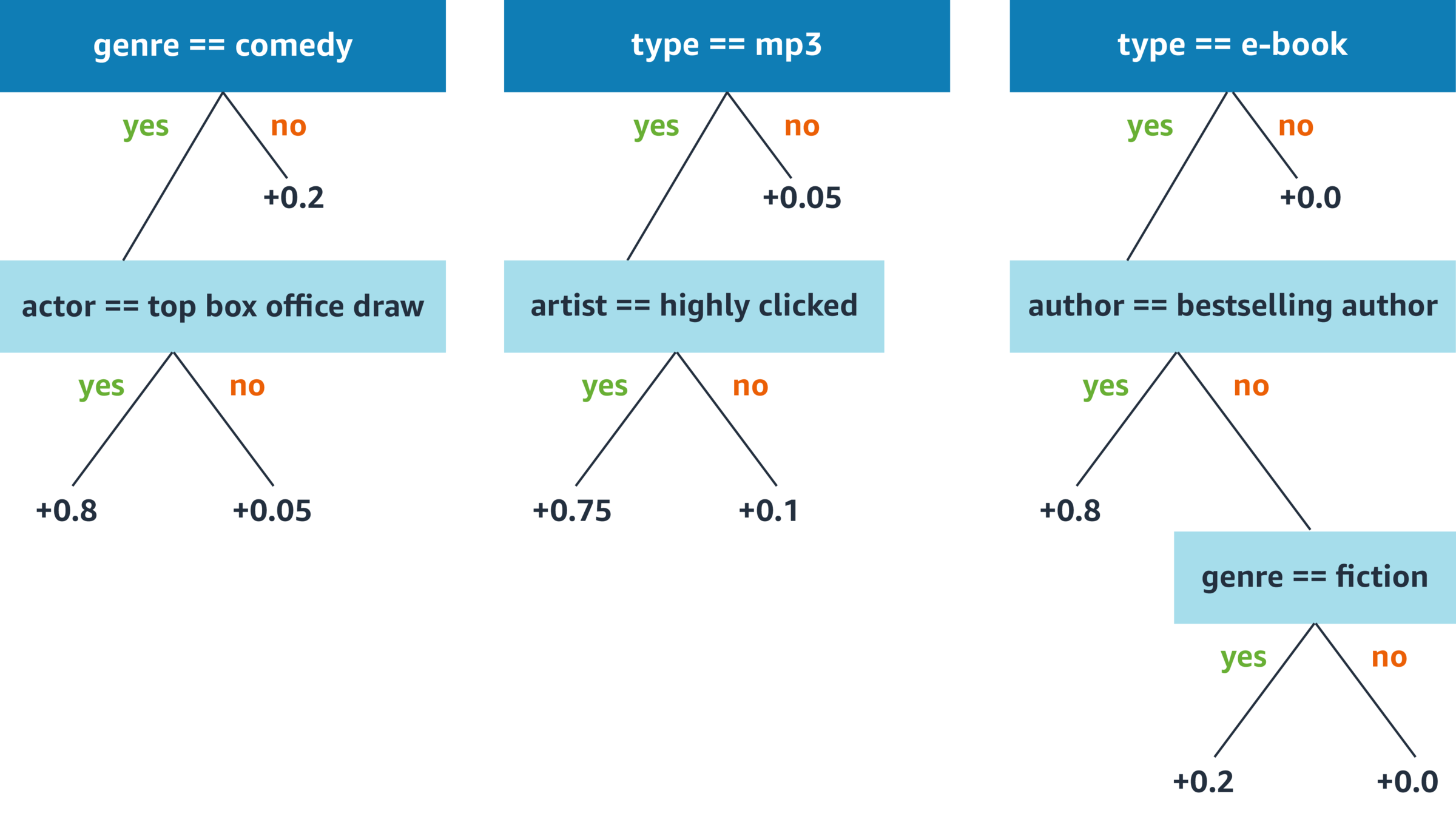

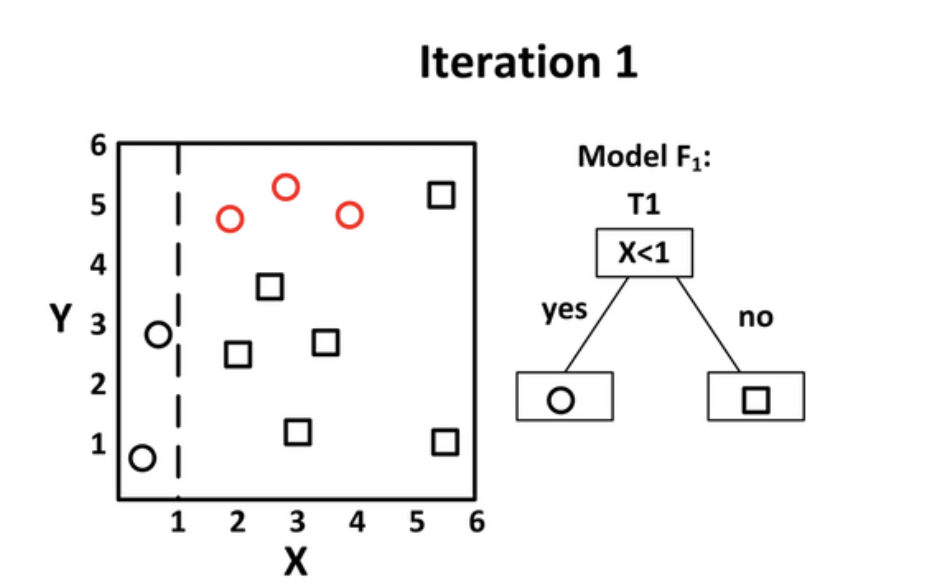

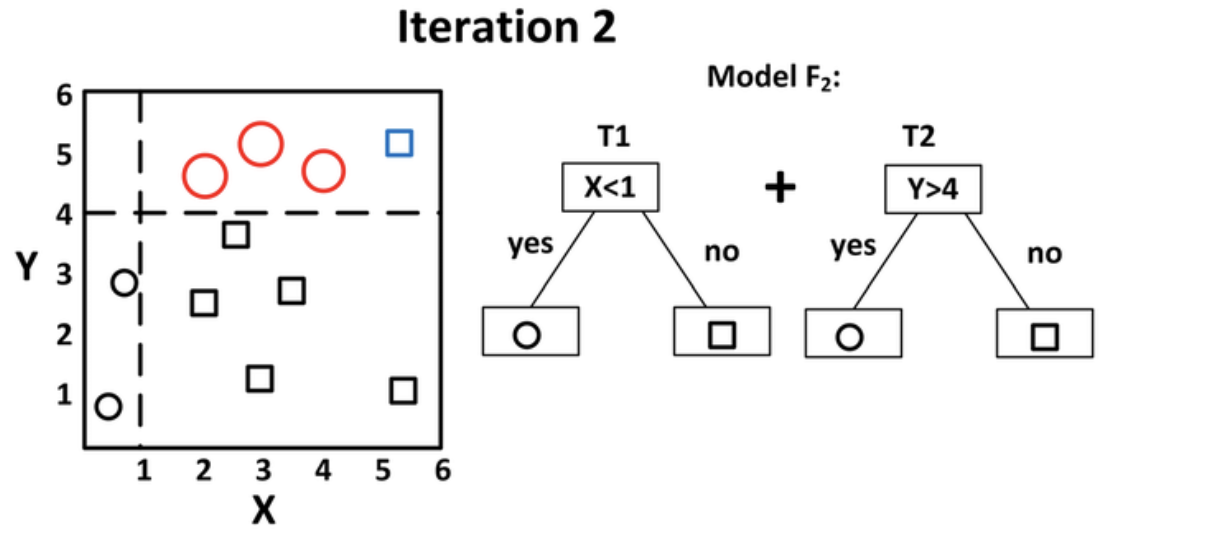

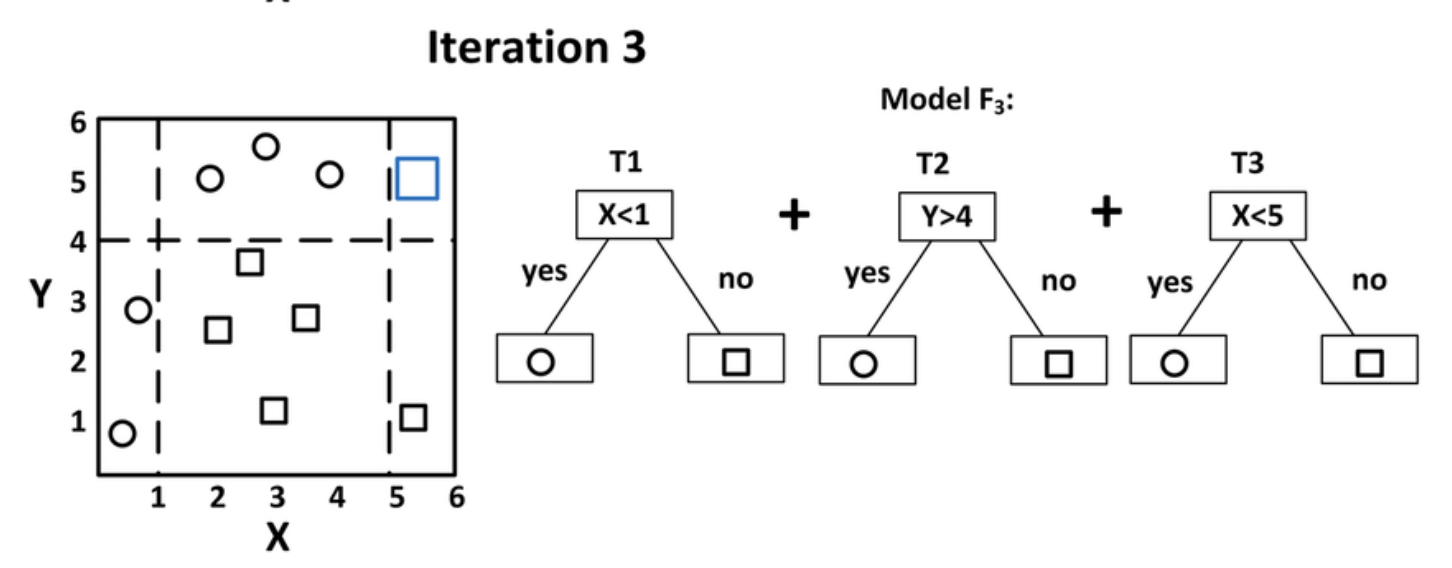

So what is gradient boosting?

Gradient boosting involves three elements:

- A loss function to be optimized.

- A weak learner to make predictions.

- An additive model to add weak learners to minimize the loss function.
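To make the three elements concrete, here is a minimal from-scratch sketch under stated assumptions: squared loss L(y, F) = 0.5 * (y - F)^2 (whose negative gradient is simply the residual y - F), scikit-learn regression stumps as the weak learner, and the additive update F_m(x) = F_{m-1}(x) + ν·h_m(x) with learning rate ν. The function names `fit_gradient_boosting` and `predict_gradient_boosting` are hypothetical illustrations, not how CatBoost or any other library implements boosting internally.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_gradient_boosting(X, y, n_estimators=100, learning_rate=0.1, max_depth=1):
    """Hypothetical sketch: gradient boosting for squared loss 0.5 * (y - F)**2."""
    # 1) Loss function: squared loss. Start from the constant minimizing it (the mean).
    f0 = float(np.mean(y))
    F = np.full(len(y), f0)
    trees = []
    for _ in range(n_estimators):
        residuals = y - F  # negative gradient of the squared loss w.r.t. F
        # 2) Weak learner: a shallow regression tree (a stump when max_depth=1)
        tree = DecisionTreeRegressor(max_depth=max_depth)
        tree.fit(X, residuals)
        # 3) Additive model: add the shrunken weak learner to the ensemble
        F = F + learning_rate * tree.predict(X)
        trees.append(tree)
    return f0, learning_rate, trees


def predict_gradient_boosting(model, X):
    f0, learning_rate, trees = model
    F = np.full(X.shape[0], f0)
    for tree in trees:
        F = F + learning_rate * tree.predict(X)
    return F


# Usage on a noisy 1-D regression problem (synthetic, for illustration only)
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X[:, 0]) + 0.1 * rng.normal(size=200)

model = fit_gradient_boosting(X, y)
pred = predict_gradient_boosting(model, X)
print("constant-model MSE:", np.mean((y - y.mean()) ** 2))
print("boosted MSE:       ", np.mean((y - pred) ** 2))
```

The small learning rate (shrinkage) makes each weak learner correct only part of the remaining error, which is why many trees are added.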

When to use it?

primarily on heterogeneous (tabular) data, e.g. tables mixing numeric and categorical features

for both classification and regression

on such data it is usually your first choice
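As a minimal sketch of "both classification and regression", the same boosting technique is applied to both task types below. It uses scikit-learn's `GradientBoostingClassifier` and `GradientBoostingRegressor` (not CatBoost) on synthetic data from `make_classification` and `make_regression`; the datasets and sizes are arbitrary choices for illustration.

```python
from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import GradientBoostingClassifier, GradientBoostingRegressor
from sklearn.model_selection import train_test_split

# Classification: synthetic binary problem
Xc, yc = make_classification(n_samples=500, n_features=10, random_state=0)
Xc_tr, Xc_te, yc_tr, yc_te = train_test_split(Xc, yc, random_state=0)
clf = GradientBoostingClassifier(random_state=0).fit(Xc_tr, yc_tr)
acc = clf.score(Xc_te, yc_te)  # mean accuracy on the held-out split

# Regression: synthetic linear target with noise
Xr, yr = make_regression(n_samples=500, n_features=5, noise=10.0, random_state=0)
Xr_tr, Xr_te, yr_tr, yr_te = train_test_split(Xr, yr, random_state=0)
reg = GradientBoostingRegressor(random_state=0).fit(Xr_tr, yr_tr)
r2 = reg.score(Xr_te, yr_te)  # coefficient of determination R^2

print(f"accuracy={acc:.3f}, R^2={r2:.3f}")
```

Only the estimator class (and therefore the default loss) changes between the two tasks; the boosting procedure is the same.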

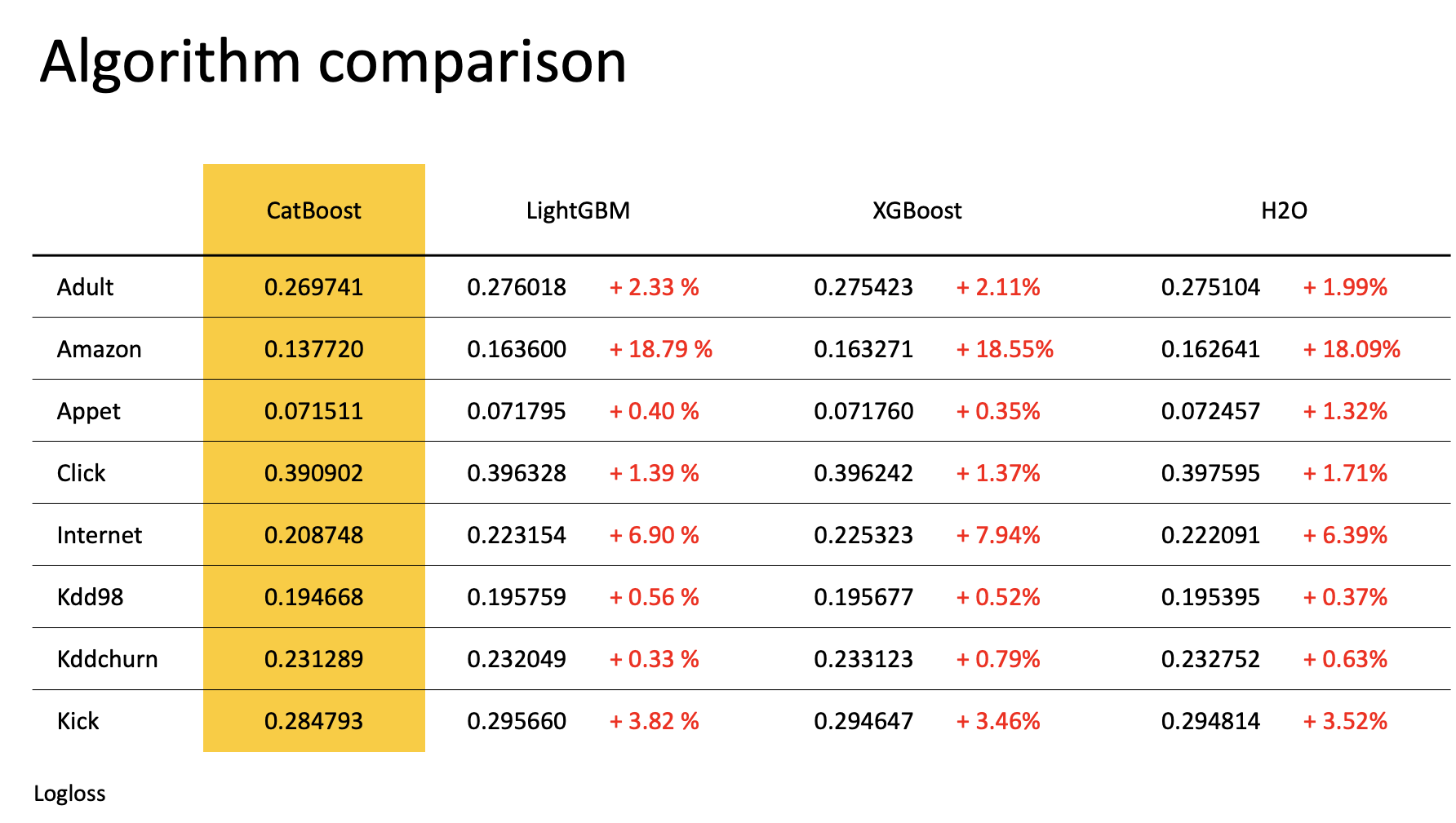

Commonly used algorithms

Random Forest

XGBoost (XGBM)

LightGBM (LGBM)

CatBoost

CatBoost

Why use it?

- easy to use, similar to AutoML

- great default settings

- which means good results fast

- little data preparation needed (e.g. categorical features are handled natively)

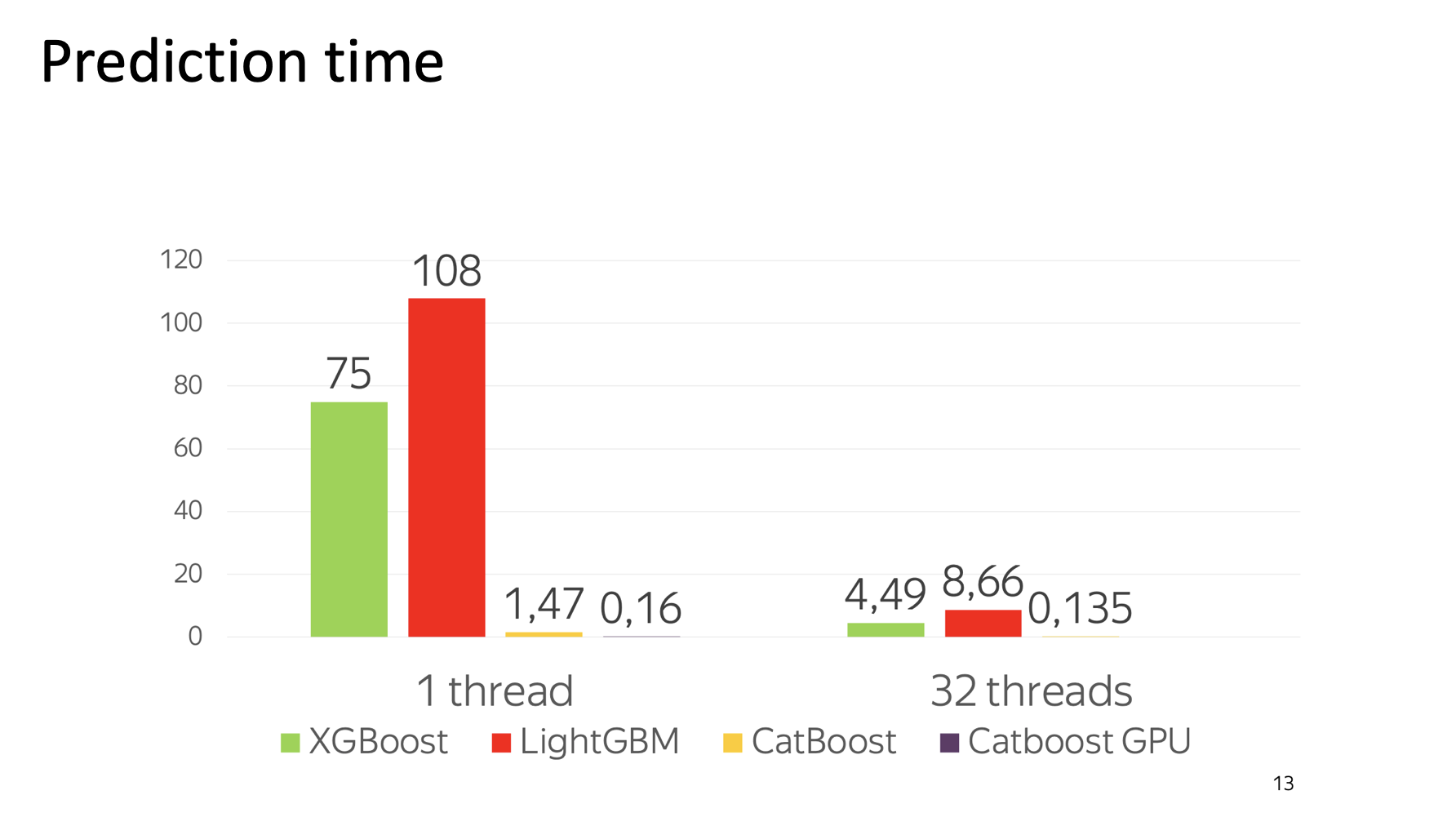

- blazing fast

Options

- Regression

- Multiregression

- Classification

- Multiclassification

- Ranking

Installation

```shell
pip install catboost shap ipywidgets scikit-learn
# enables interactive widgets in the classic Jupyter Notebook
jupyter nbextension enable --py widgetsnbextension
```

Usage

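```python
from catboost import CatBoostClassifier, Pool

# Training data: 3 objects with 4 numeric features each,
# binary labels (1 / -1) and a per-object weight
train_data = Pool(data=[[1, 4, 5, 6],
                        [4, 5, 6, 7],
                        [30, 40, 50, 60]],
                  label=[1, 1, -1],
                  weight=[0.1, 0.2, 0.3])

# 10 boosting iterations, i.e. 10 trees in the additive model
model = CatBoostClassifier(iterations=10)
model.fit(train_data)

# Predicted class labels (here on the training data itself)
preds_class = model.predict(train_data)
```

Any questions?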

PV226: Boosting

By Lukáš Grolig
