SEO in Angular

Angular Universal

Expectations

What you cannot expect

- Deep dive into Googlebot

- Covering all meta-tags for all social-media bots

@MartinaKraus11

What you can expect

- SEO best practices

- Introduction to the crawling mechanism

- Improving SEO in your Angular App

- Introducing Angular Universal

That's Me

Martina Kraus

- GDE in Angular

- Women Techmakers Ambassador

- Trainer and Consultant

martinakraus

@MartinaKraus11

Schedule

- 13:30 - 15:00: Part 1: SEO in Angular

- 15:00 - 15:30: Coffee break

- 15:30 - 17:00: Part 2: Angular Universal

Requirements for Hands On

- Node.js (LTS)

- Angular CLI (Angular >= 10.x)

- Google Chrome

- Code Editor

Material

Example Repo and exercises:

https://github.com/martinakraus/angular-days-seo-2021

Slides:

https://slides.com/martinakraus/seo-workshop

Why SEO? 🤔

How can we benefit from SEO?

- Increases organic discovery

- Provides 24/7 promotion

- Targets the entire marketing funnel

- Improves credibility and trust

- Good return on investment

How does a search engine bot work?

Basic mechanism

- Crawling

- Indexing

Crawling

- process of visiting new and updated pages

- a specific algorithm determines when and how often to crawl

- holds a list of web page URLs to visit

- separate mobile and desktop crawlers

- changes, dead links etc. are noted
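The list of URLs a crawler holds works like a queue (often called the crawl frontier). Below is a toy sketch in TypeScript, assuming a hypothetical in-memory map from page URL to the links found on that page; it ignores scheduling, politeness and re-crawling, so it only illustrates the idea and is not how Googlebot works.

```typescript
// Toy model: each known page URL maps to the links found on that page.
// Hypothetical data structure; a real crawler fetches pages over HTTP.
type Web = Map<string, string[]>;

interface CrawlReport {
  visited: string[]; // pages that were fetched, in crawl order
  deadLinks: string[]; // linked URLs that do not exist
}

function crawl(web: Web, seeds: string[], maxPages = 100): CrawlReport {
  const frontier: string[] = seeds.slice(); // list of URLs still to visit
  const seen = new Set<string>(seeds); // never queue the same URL twice
  const visited: string[] = [];
  const deadLinks: string[] = [];

  while (frontier.length > 0 && visited.length < maxPages) {
    const url = frontier.shift() as string;
    const links = web.get(url);
    if (links === undefined) {
      deadLinks.push(url); // note the dead link instead of indexing it
      continue;
    }
    visited.push(url);
    for (const link of links) {
      if (!seen.has(link)) {
        seen.add(link);
        frontier.push(link);
      }
    }
  }
  return { visited, deadLinks };
}

const web: Web = new Map<string, string[]>([
  ["/", ["/products", "/about"]],
  ["/products", ["/products/1", "/old-page"]],
  ["/about", ["/"]],
  ["/products/1", []],
]);
const report = crawl(web, ["/"]);
// report.visited   -> ["/", "/products", "/about", "/products/1"]
// report.deadLinks -> ["/old-page"]
```

The breadth-first order means pages close to the seed URLs are discovered first, which is one reason well-linked pages get crawled earlier than isolated ones.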

Indexing

- understand what the page is about

- analyze textual content, tags and attributes

- process different content types

- indexing can be prevented with the noindex directive

Serving (and ranking)

- matching the search query against the index

- ranking based on different factors, e.g.:

  - user experience

  - fast loading

  - mobile friendliness

Keys to successful SEO

- Feed the bot with information

- Get content indexed quickly

Part 1: SEO in Angular

- Meta-Tags

- Sitemaps

- robots.txt

- Measurement

Meta-Tags

- are not displayed on the page

- define metadata about an HTML document

- search bots check specific meta tags for indexing, e.g.:

  - description

  - title

  - instructions for the bot

Important Meta-Tags

| Tag | Description |
|---|---|
| `<meta name="description" content="..." />` | provides a short description of the page |
| `<meta name="robots" content="..., ..." />` | defines the behavior of search engine bots |
| `<meta name="viewport" content="..." />` | defines how to render the page on mobile devices |
| `<meta name="rating" content="adult" />` | labels the page as adult content |

Instructions for bots

<meta name="robots" content="noindex, nofollow" />

- noindex: Do not show this page in search results.

- nofollow: Do not follow the links on this page.

- unavailable_after: [date/time]: Do not show this page in search results after the specified date/time.
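To make these directives concrete, here is a small TypeScript sketch that parses the `content` of a robots meta tag and decides whether a page may appear in search results. It works under stated assumptions: directive names are matched case-insensitively, `none` is treated as `noindex, nofollow` (as Google documents it), and the `unavailable_after` date is assumed to be in a format `Date.parse` understands, such as ISO 8601. Real crawlers accept more date formats.

```typescript
interface RobotsDirectives {
  noindex: boolean;
  nofollow: boolean;
  unavailableAfter?: Date;
}

// Parses e.g. "noindex, nofollow" or "unavailable_after: 2021-12-31T23:59:59Z".
// Assumption: the date contains no comma (ISO 8601), because directives are split on ",".
function parseRobotsMeta(content: string): RobotsDirectives {
  const result: RobotsDirectives = { noindex: false, nofollow: false };
  for (const raw of content.split(",")) {
    const directive = raw.trim();
    const name = directive.toLowerCase();
    if (name === "noindex") {
      result.noindex = true;
    } else if (name === "nofollow") {
      result.nofollow = true;
    } else if (name === "none") {
      // "none" is shorthand for "noindex, nofollow"
      result.noindex = true;
      result.nofollow = true;
    } else if (name.indexOf("unavailable_after:") === 0) {
      const time = Date.parse(directive.slice("unavailable_after:".length).trim());
      if (!isNaN(time)) result.unavailableAfter = new Date(time);
    }
  }
  return result;
}

// May the page be shown in search results at the given moment?
function isShownInResults(directives: RobotsDirectives, now: Date): boolean {
  if (directives.noindex) return false;
  return (
    directives.unavailableAfter === undefined ||
    now.getTime() <= directives.unavailableAfter.getTime()
  );
}
```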

Bot-specific Meta-Tags

<meta name="google" content="notranslate" />

<meta name="google" content="nopagereadaloud" />

<meta name="twitter:title" content="..." />

Meta-Tags in Angular
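In Angular, the `Title` and `Meta` services from `@angular/platform-browser` set these tags at runtime via `Title.setTitle()` and `Meta.updateTag()`. The sketch below keeps the logic in a plain function; its two interfaces mirror only the methods it calls, so it compiles without Angular, and in a component you would pass the injected services instead. The `PageSeo` shape is a hypothetical example.

```typescript
// Mirrors of the two Angular service methods used below (Title.setTitle, Meta.updateTag).
interface TitleService {
  setTitle(newTitle: string): void;
}
interface MetaService {
  updateTag(tag: { name: string; content: string }): unknown;
}

// Hypothetical SEO data for one page.
interface PageSeo {
  title: string;
  description: string;
  robots?: string; // e.g. "noindex, nofollow"
}

// updateTag() changes the tag with the same name, or adds it if it does not exist yet.
function applyPageSeo(seo: PageSeo, title: TitleService, meta: MetaService): void {
  title.setTitle(seo.title);
  meta.updateTag({ name: "description", content: seo.description });
  if (seo.robots !== undefined) {
    meta.updateTag({ name: "robots", content: seo.robots });
  }
}
```

In a component this could be called from `ngOnInit()` with the `Title` and `Meta` instances received through the constructor.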

Exercise #1

Best practices

- Always use a different title for each page

- Always use the `description` meta tag

- Major search bots (e.g. Googlebot) ignore the `keywords` meta tag

- Update title and description for all pages to provide even more information

Page-Title

Description
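The first two best practices can be checked automatically, for example in a unit test over your route data. A hypothetical sketch, assuming you keep the title and description of each route in a list shaped like `RouteSeo` below:

```typescript
// Hypothetical per-route SEO data, e.g. kept next to the route configuration.
interface RouteSeo {
  path: string;
  title: string;
  description?: string;
}

// Reports pages without a title or description, and titles used by more than one page.
function auditSeo(routes: RouteSeo[]): string[] {
  const problems: string[] = [];
  const pathsByTitle = new Map<string, string[]>();

  routes.forEach((route) => {
    const title = route.title.trim();
    if (title === "") {
      problems.push(`${route.path}: missing title`);
    } else {
      pathsByTitle.set(title, (pathsByTitle.get(title) || []).concat(route.path));
    }
    if (!route.description || route.description.trim() === "") {
      problems.push(`${route.path}: missing description`);
    }
  });

  pathsByTitle.forEach((paths, title) => {
    if (paths.length > 1) {
      problems.push(`duplicate title "${title}": ${paths.join(", ")}`);
    }
  });
  return problems;
}

const problems = auditSeo([
  { path: "/", title: "My Shop", description: "Welcome to my shop" },
  { path: "/products", title: "My Shop" },
]);
// problems -> ['/products: missing description', 'duplicate title "My Shop": /, /products']
```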

Updating Meta-Tags
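Updating the tags on every navigation could look like the sketch below. It assumes a hypothetical lookup table from route path to title and description. In an Angular app you would call `updateSeo()` for each `NavigationEnd` event of the `Router`, passing `event.urlAfterRedirects` and the injected `Title` and `Meta` services; the interfaces here mirror only the methods used, so the sketch runs without Angular.

```typescript
interface TitleService {
  setTitle(newTitle: string): void;
}
interface MetaService {
  updateTag(tag: { name: string; content: string }): unknown;
}
interface PageSeo {
  title: string;
  description: string;
}

// "/products?page=2#top" -> "/products"
function pathOf(url: string): string {
  return url.split(/[?#]/)[0] || "/";
}

// Sets title and description for the given URL, falling back to defaults for unknown routes.
function updateSeo(
  url: string,
  table: ReadonlyMap<string, PageSeo>,
  fallback: PageSeo,
  title: TitleService,
  meta: MetaService,
): void {
  const seo = table.get(pathOf(url)) || fallback;
  title.setTitle(seo.title);
  meta.updateTag({ name: "description", content: seo.description });
}

// Hypothetical route data.
const seoTable = new Map<string, PageSeo>([
  ["/", { title: "My Shop", description: "Welcome to my shop" }],
  ["/products", { title: "Products | My Shop", description: "All products of my shop" }],
]);
const defaultSeo: PageSeo = { title: "My Shop", description: "Welcome to my shop" };
```

Keeping the lookup separate from the Router subscription makes it easy to unit test, and a detail page could build its entry from loaded data (e.g. the product name) instead of the static table.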

Exercise #2

Sitemaps

- a file that provides information about the pages, media and other files of your app

- helps bots discover all of your URLs

- states when a page or media file was last updated (and needs to be indexed again)

When do I need a Sitemap?

- Your app has many isolated pages that are not linked properly

- Your app has a lot of rich media content

- Your app is completely new and only a few other sites link to it

- Your app is really large and bots could easily 'overlook' content

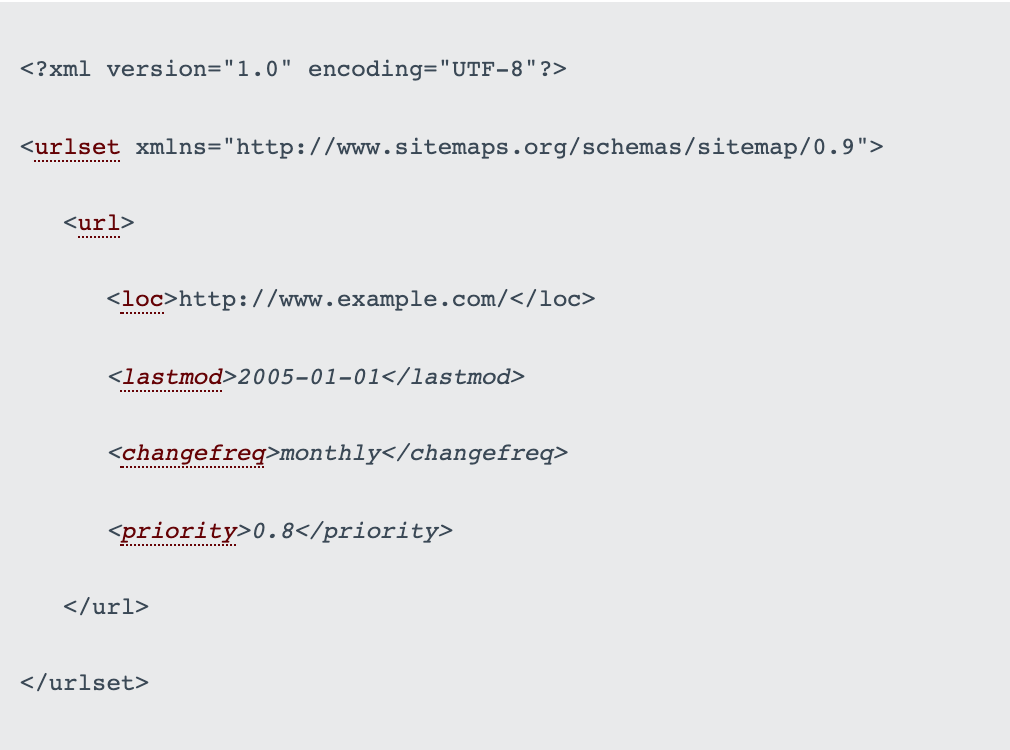

Example

https://www.sitemaps.org/protocol.html

Sitemap Configuration

| Tag | Description |
|---|---|
| `<loc>` | URL of the page |
| `<lastmod>` | Date of last modification (should be in W3C Datetime format) |
| `<changefreq>` | How frequently the page is likely to change: always, hourly, daily, weekly, monthly, yearly, never |
| `<priority>` | Priority of this URL relative to other URLs on your site (0.0 to 1.0) |
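For a handful of URLs, the sitemap can also be produced by a small script at build time. A sketch in TypeScript that writes the tags from the table into the `urlset` of the sitemaps.org protocol; the entry shape and the example URLs are hypothetical, and only `<loc>` is required by the protocol.

```typescript
type ChangeFreq = "always" | "hourly" | "daily" | "weekly" | "monthly" | "yearly" | "never";

interface SitemapEntry {
  loc: string; // absolute URL, required
  lastmod?: string; // W3C Datetime, e.g. "2021-09-28"
  changefreq?: ChangeFreq;
  priority?: number; // 0.0 to 1.0
}

// URLs may contain characters such as "&" that must be escaped in XML.
function escapeXml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function buildSitemap(entries: SitemapEntry[]): string {
  const urls = entries.map((entry) => {
    const tags = [`    <loc>${escapeXml(entry.loc)}</loc>`];
    if (entry.lastmod) tags.push(`    <lastmod>${escapeXml(entry.lastmod)}</lastmod>`);
    if (entry.changefreq) tags.push(`    <changefreq>${entry.changefreq}</changefreq>`);
    if (entry.priority !== undefined) {
      const priority = Math.min(1, Math.max(0, entry.priority)); // clamp to the allowed range
      tags.push(`    <priority>${priority}</priority>`);
    }
    return `  <url>\n${tags.join("\n")}\n  </url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}

const sitemap = buildSitemap([
  { loc: "https://www.example.com/", changefreq: "weekly", priority: 1 },
  { loc: "https://www.example.com/products?sort=name&page=1", lastmod: "2021-09-28" },
]);
```

The resulting string can be written to `sitemap.xml` so that it is served from the root of the deployed app.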

Creating a Sitemap manually

- Only recommended if you have just a few URLs

- Create a sitemap.xml file inside your root folder

- It needs to follow a specific schema:

  - http://www.sitemaps.org/schemas/sitemap/0.9/sitemap.xsd

Auto-generate Sitemap

- Various npm packages:

https://www.npmjs.com/package/sitemap

- Online generation tools:

https://www.xml-sitemaps.com/

DEMO

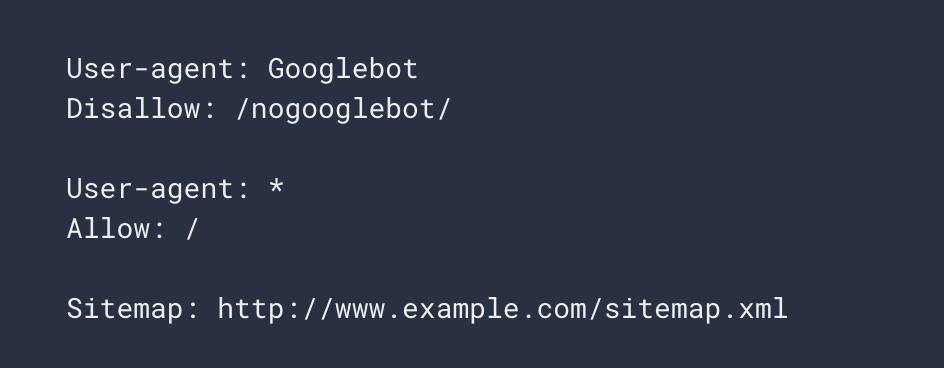

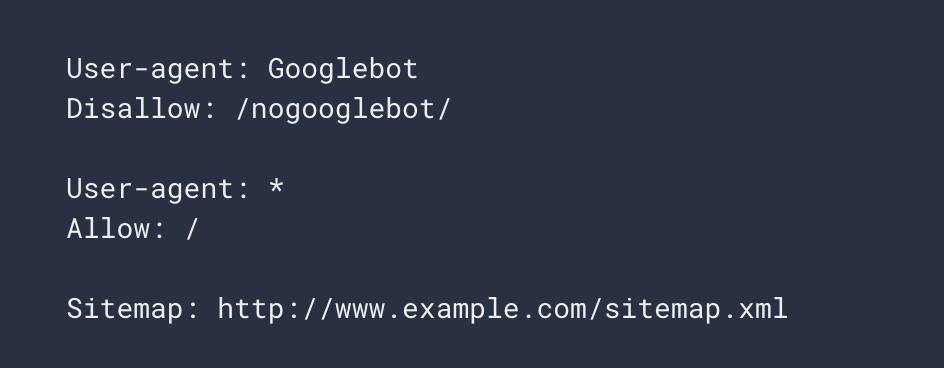

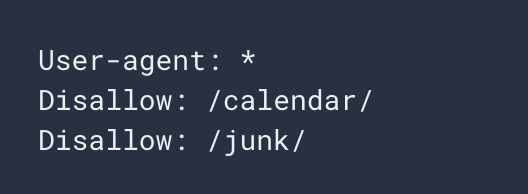

robots.txt

- tells search engine crawlers which URLs they can access on your site

- helps avoid overloading your site with requests

- prevents media files from appearing in search results

- manages crawling traffic on your website

- most content management systems already provide a robots.txt for you

robots.txt - Limitation

- crawler bots are like browsers: each one interprets the instructions differently

- not all instructions are supported by every crawler

- a page that is disallowed in robots.txt can still be indexed if other sites link to it

robots.txt - Example #1

User-agent Googlebot is not allowed to crawl any URL starting with /nogooglebot/

Location of sitemap.xml
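The rules described for Example #1 correspond to a robots.txt like the one produced by this sketch. The builder and its `RobotsGroup` shape are hypothetical and `www.example.com` is a placeholder domain; `User-agent`, `Disallow`, `Allow` and `Sitemap` are the standard robots.txt fields.

```typescript
// One group of rules for a user agent (hypothetical shape).
interface RobotsGroup {
  userAgent: string; // e.g. "Googlebot", or "*" for all crawlers
  disallow?: string[];
  allow?: string[];
}

function buildRobotsTxt(groups: RobotsGroup[], sitemapUrl?: string): string {
  const blocks = groups.map((group) => {
    const lines = [`User-agent: ${group.userAgent}`];
    (group.disallow || []).forEach((path) => lines.push(`Disallow: ${path}`));
    (group.allow || []).forEach((path) => lines.push(`Allow: ${path}`));
    return lines.join("\n");
  });
  if (sitemapUrl) {
    blocks.push(`Sitemap: ${sitemapUrl}`); // absolute URL of the sitemap
  }
  return blocks.join("\n\n") + "\n";
}

// Example #1: keep Googlebot out of /nogooglebot/ and point to the sitemap.
const robotsTxt = buildRobotsTxt(
  [{ userAgent: "Googlebot", disallow: ["/nogooglebot/"] }],
  "https://www.example.com/sitemap.xml",
);
```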

Example #2

Disallow crawling of a directory and its contents

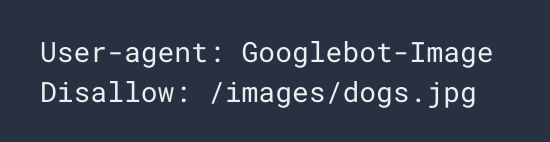

Example #3

Block a specific image from Google Images

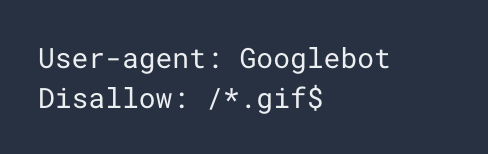

Example #4

Disallow crawling of files of a specific file type
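Examples #2 to #4 rely on path prefixes and, for file types, on the `*` and `$` wildcards that Google supports (e.g. `Disallow: /*.gif$`). The sketch below shows how such rules can be evaluated for a single user-agent group, following Google's documented precedence: the longest matching rule wins, and on a tie the `Allow` rule wins. It is a simplification that ignores user-agent selection and percent-encoding, and other crawlers may interpret the rules differently.

```typescript
interface RobotsRule {
  allow: boolean; // true for "Allow:", false for "Disallow:"
  pattern: string; // the path value, e.g. "/calendar/" or "/*.gif$"
}

// "*" matches any sequence of characters and a trailing "$" anchors the end of the URL;
// without "$" a rule matches every path that starts with it.
function patternToRegExp(pattern: string): RegExp {
  const anchored = pattern.charAt(pattern.length - 1) === "$";
  const body = anchored ? pattern.slice(0, -1) : pattern;
  const source = body
    .split("*")
    .map((part) => part.replace(/[.+?^${}()|[\]\\]/g, "\\$&")) // escape regex characters
    .join(".*");
  return new RegExp("^" + source + (anchored ? "$" : ""));
}

// The longest matching pattern wins; on a tie, Allow wins. No matching rule means allowed.
function isAllowed(path: string, rules: RobotsRule[]): boolean {
  let best: RobotsRule | undefined;
  for (const rule of rules) {
    if (rule.pattern === "" || !patternToRegExp(rule.pattern).test(path)) continue;
    const longer = best === undefined || rule.pattern.length > best.pattern.length;
    const tieWonByAllow =
      best !== undefined && rule.pattern.length === best.pattern.length && rule.allow;
    if (longer || tieWonByAllow) best = rule;
  }
  return best === undefined || best.allow;
}
```

With `Disallow: /*.gif$`, a URL such as `/images/dog.gif` is blocked, while `/images/dog.gif?size=large` is not, because `$` requires the URL to end right after `.gif`.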

DEMO

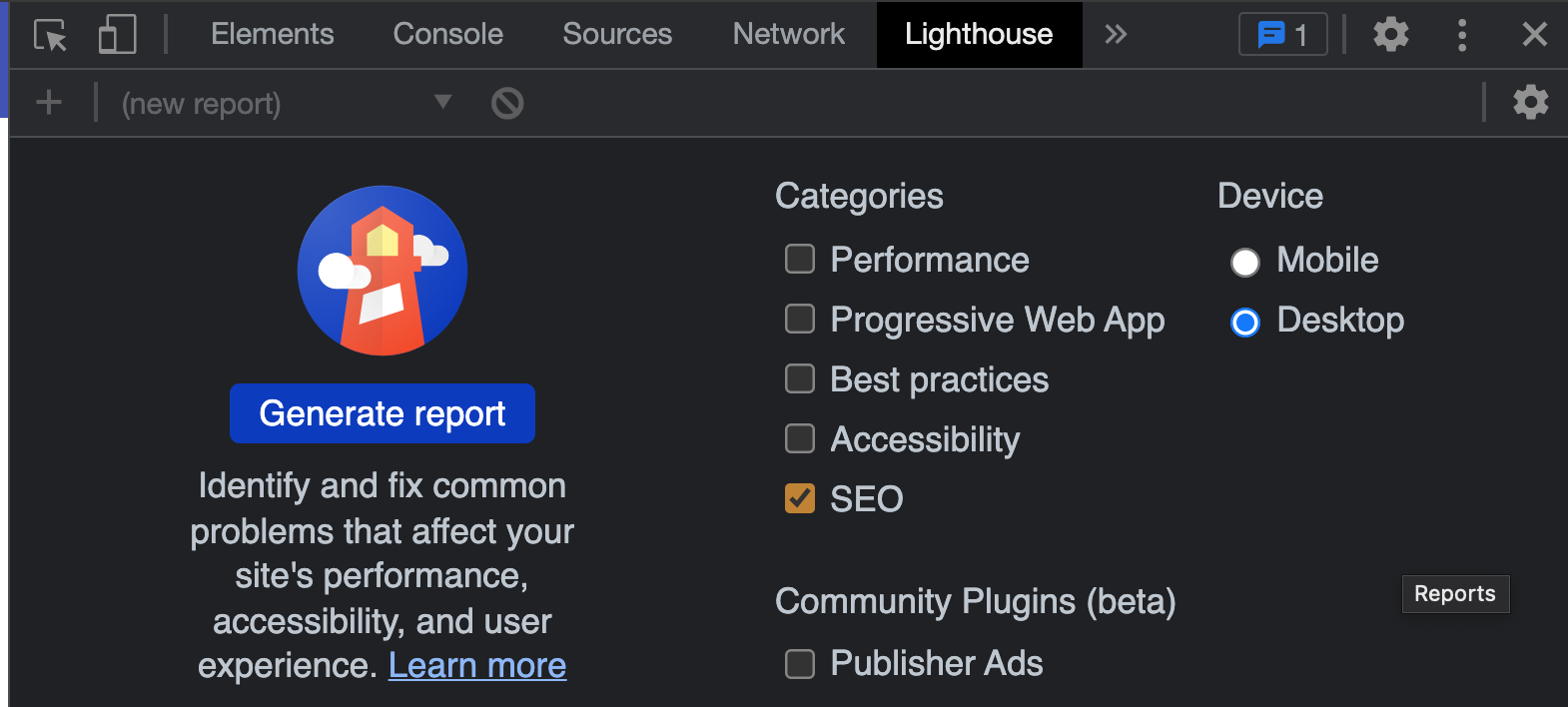

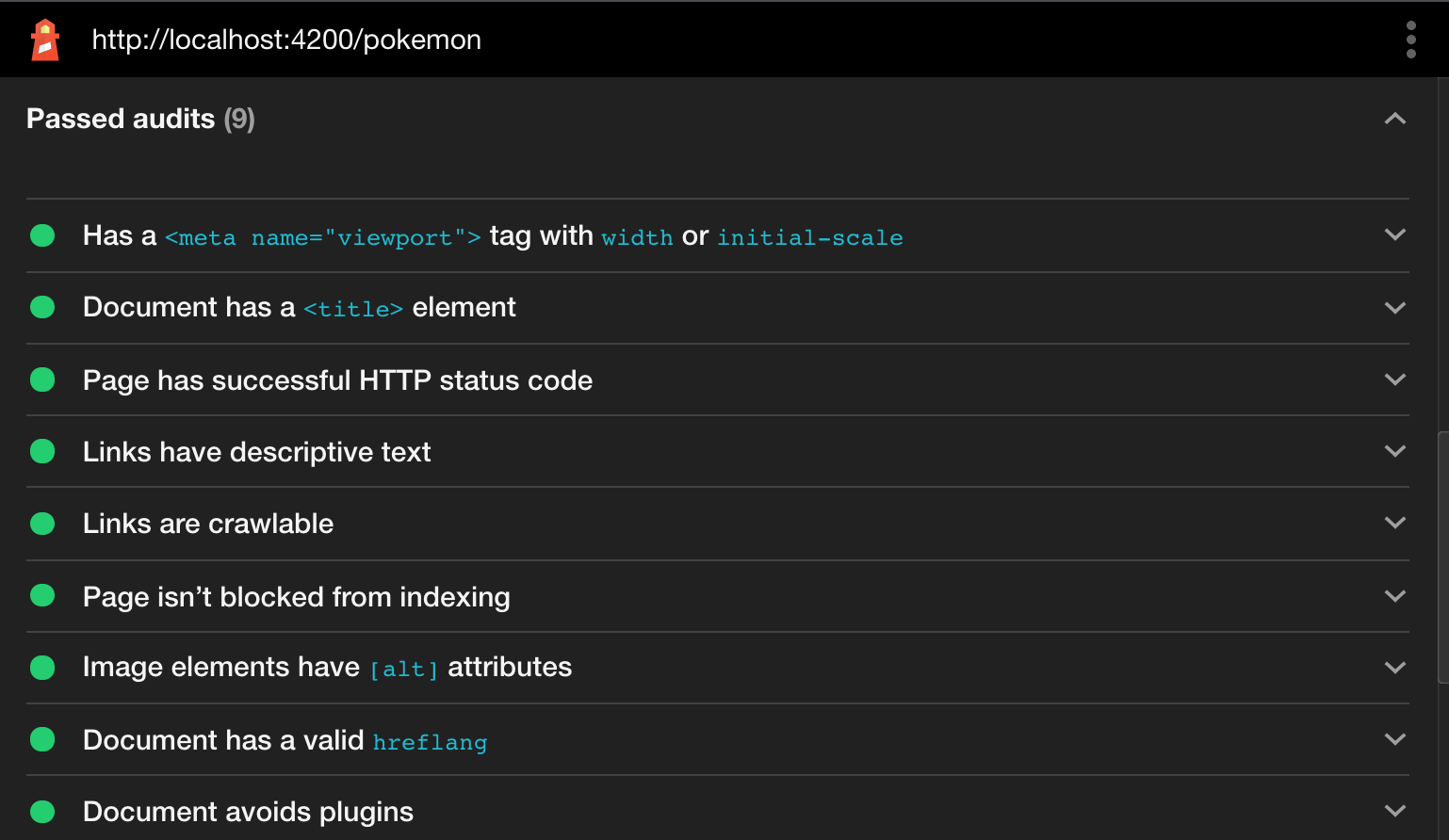

Validating your SEO

- Using browser extensions like Lighthouse:

https://developers.google.com/web/tools/lighthouse

- Google Search Console

Lighthouse

DEMO

Bringing it all together

SEO Ready Checklist

- Every page has a specific title and description

- Your URLs are readable

- Your website is mobile friendly

- Your URLs are referenced in a sitemap

- Everything is directly accessible through a URL

- Your widgets use crawlable `<a>` tags with `href` attributes

- Your content can get indexed quickly

Part 2: Angular Universal

The issues of SPAs

Search engine bots crawling index.html

Crawling index.html

Googlebot ❤️ JavaScript

Googlebot

- Crawling

- Rendering

- Indexing

What about other search engine bots?

Source: https://moz.com/blog/search-engines-ready-for-javascript-crawling

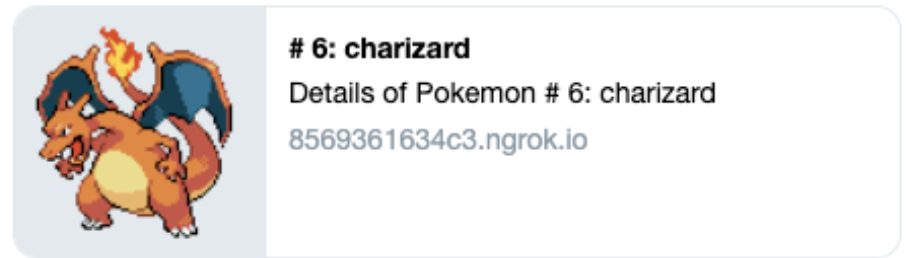

What about social media bots?

Social media bots

- used when content is shared on social media

- crawl specific meta tags for preview cards

- do not execute JavaScript (no pre-rendering)
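Since these bots read only the tags present in the delivered HTML, the preview-card tags have to be part of the markup they receive. A sketch of the tags for Twitter cards (`name="twitter:*"`) and Open Graph (`property="og:*"`), using the `name`/`property` and `content` keys of Angular's `MetaDefinition`; the `PreviewCard` shape and the example values are hypothetical.

```typescript
// Hypothetical data for one shareable page.
interface PreviewCard {
  title: string;
  description: string;
  imageUrl: string; // absolute URL of the preview image
  pageUrl: string; // canonical URL of the page
}

// Twitter cards use the "name" attribute, Open Graph uses "property".
type SocialTag = { name: string; content: string } | { property: string; content: string };

function previewCardTags(card: PreviewCard): SocialTag[] {
  return [
    { name: "twitter:card", content: "summary_large_image" },
    { name: "twitter:title", content: card.title },
    { name: "twitter:description", content: card.description },
    { name: "twitter:image", content: card.imageUrl },
    { property: "og:title", content: card.title },
    { property: "og:description", content: card.description },
    { property: "og:image", content: card.imageUrl },
    { property: "og:url", content: card.pageUrl },
  ];
}

// Escapes attribute values so quotes in a title cannot break the markup.
function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;");
}

// Renders the tags as static HTML, i.e. what a bot without JavaScript would need to receive.
function toHtml(tags: SocialTag[]): string {
  return tags
    .map((tag) =>
      "name" in tag
        ? `<meta name="${escapeAttr(tag.name)}" content="${escapeAttr(tag.content)}" />`
        : `<meta property="${escapeAttr(tag.property)}" content="${escapeAttr(tag.content)}" />`,
    )
    .join("\n");
}
```

Setting these tags with `Meta` in the browser alone is not enough for these bots; the HTML has to be rendered on the server, which is where Angular Universal comes in.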

Example Twitter Bot

Angular Universal to the rescue

In a nutshell

- Solution for pre-rendering your Angular code

- implements different options for pre-rendering:

  - at build time / ahead of time

  - on the fly / on user request

- option to deliver the server-side rendered HTML first and the full JavaScript-rendered app afterwards

How does it work?

- Creates a different entry point

- Creates a different tsconfig / dist output path

- Uses a different rendering layer

- Swaps out the implementation of specific services
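The idea of swapping service implementations can be illustrated with a storage service that has one implementation per platform. This is a hypothetical sketch, not Universal's internal code: in Angular you would typically make the choice in a provider using `PLATFORM_ID` (from `@angular/core`) and `isPlatformBrowser()` (from `@angular/common`); here a plain factory takes the platform as a string so the example runs without Angular.

```typescript
// The abstraction the rest of the app depends on.
interface StorageService {
  get(key: string): string | null;
  set(key: string, value: string): void;
}

// Minimal shape of window.localStorage that the browser implementation needs.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Browser: delegates to the localStorage instance passed in.
class BrowserStorage implements StorageService {
  private readonly store: KeyValueStore;
  constructor(store: KeyValueStore) {
    this.store = store;
  }
  get(key: string): string | null {
    return this.store.getItem(key);
  }
  set(key: string, value: string): void {
    this.store.setItem(key, value);
  }
}

// Server: there is no localStorage, so values are kept in memory (per instance).
class ServerStorage implements StorageService {
  private readonly data = new Map<string, string>();
  get(key: string): string | null {
    const value = this.data.get(key);
    return value === undefined ? null : value;
  }
  set(key: string, value: string): void {
    this.data.set(key, value);
  }
}

// Hypothetical factory; in Angular this decision would live in a provider based on PLATFORM_ID.
function createStorage(platform: "browser" | "server", browserStore?: KeyValueStore): StorageService {
  return platform === "browser" && browserStore !== undefined
    ? new BrowserStorage(browserStore)
    : new ServerStorage();
}
```

Components only depend on `StorageService`, so the same code renders on the server without touching browser-only globals.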

Installation

Build && run

DEMO

Exercise #3

And the social media bots?

DEMO

Preview Card Validator

Exercise #4

That's not enough?

So what about performance?

DEMO

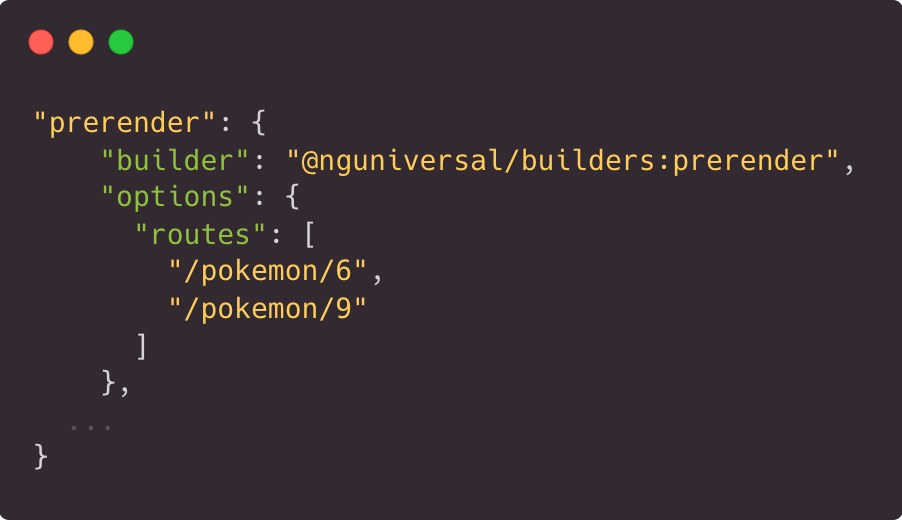

Prerendering

- Angular Universal offers pre-rendering

- renders HTML ahead of time

- configure which routes to pre-render

- provides an option to pre-render only for specific user agents (like crawler bots)
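Pre-rendering only for crawlers means the server inspects the `User-Agent` header of each request. A hypothetical sketch of that decision; the list of bot tokens is an example and not exhaustive, and user-agent strings can be spoofed, so treat it as a heuristic.

```typescript
// Example tokens of well-known crawlers and link-preview bots (not exhaustive).
const BOT_PATTERN =
  /googlebot|bingbot|yandexbot|baiduspider|duckduckbot|twitterbot|facebookexternalhit|linkedinbot|slackbot/i;

function isBot(userAgent: string | undefined): boolean {
  return userAgent !== undefined && BOT_PATTERN.test(userAgent);
}

// Bots get the pre-rendered HTML, regular browsers the client-side rendered app shell.
function selectHtml(
  userAgent: string | undefined,
  prerenderedHtml: string,
  appShellHtml: string,
): string {
  return isBot(userAgent) ? prerenderedHtml : appShellHtml;
}
```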

Prerendering specific routes

angular.json

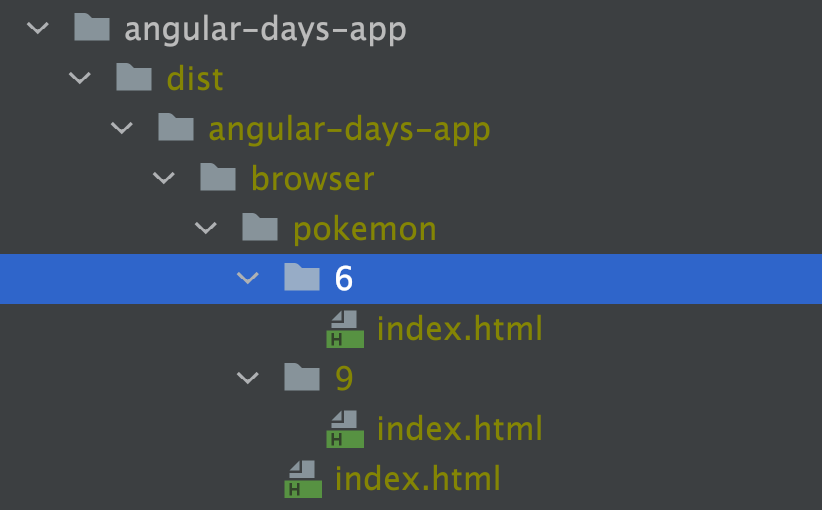

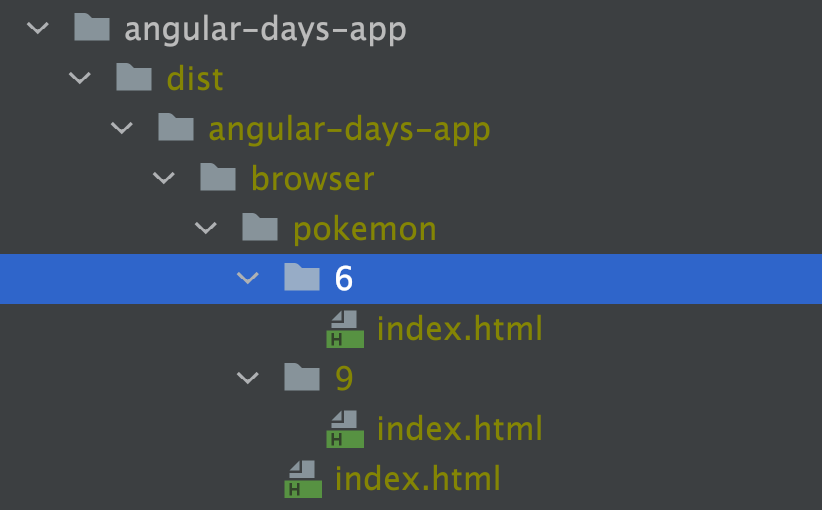

Prerendering specific routes

Each route will be stored as a separate index.html file

npm run prerender
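Each pre-rendered route ends up as its own index.html in a folder named after the route. A small sketch of that mapping, assuming the layout `<outputDir>/<route>/index.html` and a hypothetical output directory. It also builds a newline-separated route list, the format read by the prerender builder's `routesFile` option (check that your Angular Universal version supports that option); the `/products/:id` route is a hypothetical example.

```typescript
// "/products/1" -> "dist/my-app/browser/products/1/index.html"
function prerenderOutputPath(outputDir: string, route: string): string {
  const segments = route.split("/").filter((segment) => segment.length > 0);
  return [outputDir.replace(/\/+$/, "")].concat(segments, ["index.html"]).join("/");
}

// One route per line: the static pages plus one hypothetical /products/:id route per product.
function buildRoutesFile(staticRoutes: string[], productIds: number[]): string {
  const productRoutes = productIds.map((id) => `/products/${id}`);
  return staticRoutes.concat(productRoutes).join("\n") + "\n";
}
```

Because every route gets its own folder, a static web server can answer `/products/1` with `products/1/index.html` without running Node.js at request time.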

Exercise #5

Why not use Angular Universal?

- increases complexity (a real server is involved)

- a lot more code: logging, monitoring

- harder mental model

- longer build times (but it's way better with Bazel)

Summary

- pre-render HTML to satisfy social media bots

- Angular Universal for server-side rendering

- uses a different rendering layer

- you can also pre-render ahead of time

- which is even better for performance :)

kraus.martina.m@googlemail.com

@MartinaKraus11

martina-kraus.io

Slides: https://slides.com/martinakraus/seo-workshop

Thank you!!!

Angular Universal - SEO in Angular

By Martina Kraus

Angular SEO best practices and an introduction to Angular Universal