HOW TO TRICK SQL INTO DOING ALL THE WORK FOR YOU

PyGotham 2018

Molly Leen

Molly Leen

Senior Software Engineer @

At Kyruus we help health systems match patients with the right providers and enhance patient access enterprise-wide

Who am I?

Talk Overview

LETS ADD THIS LATER

The Problem

- Large amount of data

- 2x the number of rows currently in our system

"BIG" data

Current Infrastructure

raw data

API server

- Post each record 1 at a time

- Estimated time: ~48 hours

POST

{

"first_name": "Molly",

"last_name": "Leen",

"conferences": ["PyGotham"]

}

ingestion script

INSERT

Why is this so slow?

- Network request for each record

- Single threaded

- API commits to db on EACH request

Processing one by one is SLOW

Can we process in bulk?

Use COPY to load all the rows in one command, instead of using a series of INSERT commands. The COPY command is optimized for loading large numbers of rows; it is less flexible than INSERT, but incurs significantly less overhead for large data loads.

From the PostgreSQL docs:

The Plan

API server

ONE request

raw data

ingestion script

COPY

What is COPY?

https://www.postgresql.org/docs/9.4/static/sql-copy.html

COPY takes data from a file which is formatted to match the structure of the table

"Spongebob","Squarepants","sponge"

"Patrick","Star","starfish"

"Squidward","Tentacles","squid"

"Eugene","Krabs","crab"

"Sandy","Cheeks","squirrel"

"Sheldon","Plankton","plankton"

"Gary","The Snail","snail"fname | lname | type

---------------------

| | COPY needs a structured file...

...but this file could be very large....

...we don't want to have to download the file to our API server....

...let's define some requirements....

...and the structure of the file needs to exactly match the table....

Requirements

- Bulk load data with COPY

- Do not download file to the Flask API server

- Create records as JSON as if creating only one record

Requirement #1

Bulk load data with COPY

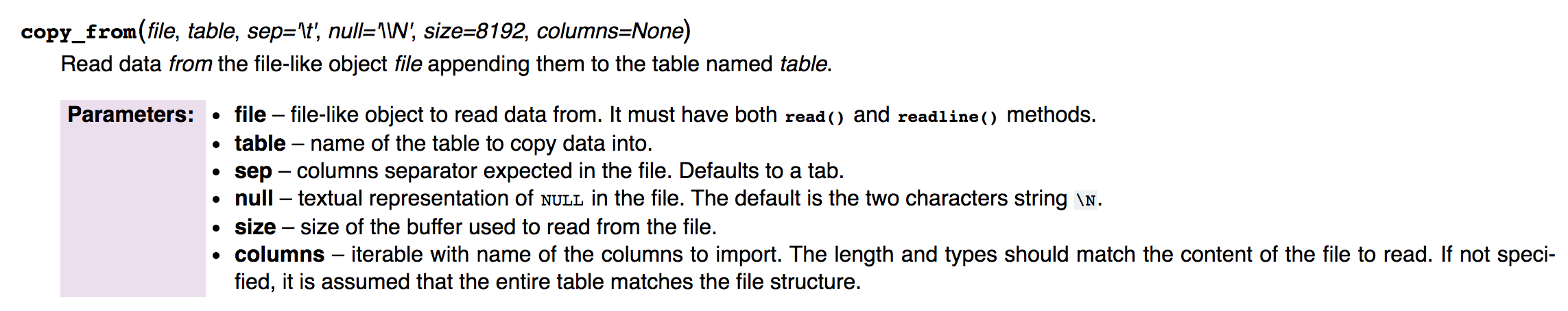

Psycopg2

- PostgreSQL adapter for use in Python apps/scripts/etc.

- reads data from a file-like object

- object must have read() and readline() methods

What is a file-like object?

According to the python docs:

- An object exposing a file-oriented API (with methods such as read() or write()) to an underlying resource. Depending on the way it was created, a file object can mediate access to a real on-disk file or to another type of storage or communication device (for example standard input/output, in-memory buffers, sockets, pipes, etc.).

- https://docs.python.org/3/glossary.html#term-file-object

Requirement #2

Do not download full file

We can use a file-like object.

Extracting data from s3

With a Pre-Signed s3 URL and python requests, we can iterate over the data line by line using a generator

- python requests: Python library for HTTP requests

- Pre-Signed s3 URL: Authenticated url to access s3 file via HTTP requests

- Generator: A python class that behaves like an iterator

with requests.get(url, stream=True) as data:

# Data is now a generator we can access either

# in a loop or by calling data.next()Generator -> File

We have a generator...

...We need a file-like object with read() and readline() methods...

...Let's build one!

def __init__(self, s3_data):

self._data = s3_data # generator of s3 data def readline(self):

try:

return self._data.next()

except StopIteration:

# Generators end with a StopIteration

# Files end with an empty string

return '' def read(self, size=-1):

# A file-like object stream takes

# a size param for read we'll

# accept but will ignore param

# because the copy reads line

# by line

return self.readline()Our File-Like Object

class MyFileLikeObject(object): def readline(self): def readline(self):

return self._data.next()Requirements

- Bulk load data with COPY

- Do not download file to disk

- Create records as JSON as if creating only one record

Requirement #3

Create records as JSON as if creating only one record

File as JSON

- Need to read file from s3

- Data needs to be in the same format as a single post

- s3 file will be JSONLines, 1 row of JSON per entry

{"fname": "Spongebob", "lname": "Squarepants", "type": "sponge"}

{"fname": "Patrick", "lname": "Star", "type": "starfish"}

{"fname": "Squidward", "lname": "Tentacles", "type": "squid"}

{"fname": "Eugene", "lname": "Krabs", "type": "crab"}

{"fname": "Sandy", "lname": "Cheeks", "type": "squirrel"}

{"fname": "Sheldon", "lname": "Plankton", "type": "plankton"}

{"fname": "Gary", "lname": "The Snail", "type": "snail"}Example data:

File Structure for COPY

- COPY requires the file it reads from is in the same format as the table

- So for the example table:

"Spongebob","Squarepants","sponge"

"Patrick","Star","starfish"

"Squidward","Tentacles","squid"

"Eugene","Krabs","crab"

"Sandy","Cheeks","squirrel"

"Sheldon","Plankton","plankton"

"Gary","The Snail","snail"fname | lname | type

---------------------

| | Table

File for COPY

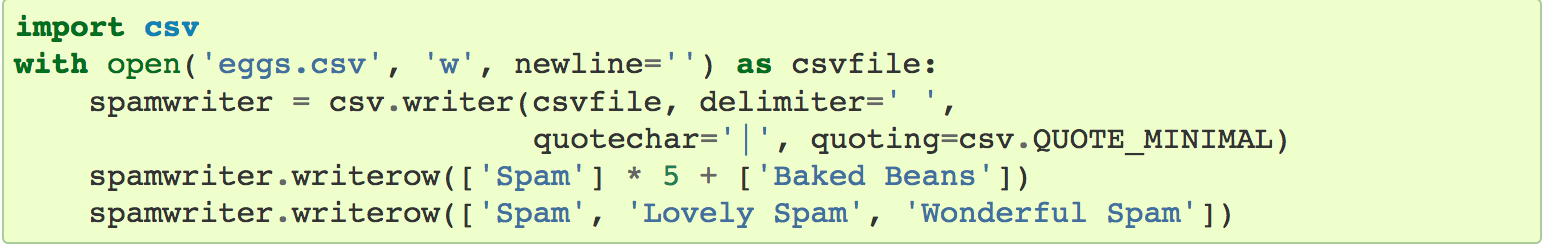

CSV Writer

- CSV writer will ensure all formatting is properly formatted and escaped

- CSV writer writes to a file-like object that has a write() fn

https://docs.python.org/3/library/csv.html#csv.writer

CSV Writer

CSV writer writes many lines to a single file...

...time for another file-like object..?

...we don't want to write all the data to a file on disk (Req. #1)...

...COPY expects data one line at a time...

CSV File-Like Object

class FileQueue(Queue):

def read(self):

return self.get()

def write(self, data):

self.put(data)- Use a FIFO queue as a file-like object so we can easily read and write data line by line

def __init__(self, s3_data):

self._data = s3_data # generator of s3 data def readline(self):

try:

return self._data.next()

except StopIteration:

# Generators end with a StopIteration

# Files end with an empty string

return '' def read(self, size=-1):

return self.readline()Our File-Like Object

class MyFileLikeObject(object): except StopIteration:

return ''data = # Assume we have a function that

# simply returns the values from

# the json data in order in a list

data_for_csv = prep_for_csv(data) self._file_queue = FileQueue()

self._writer = csv.writer(self._file_queue)self._writer.writerow(data_for_csv)return self._file_queue.read()But wait...

How does this "trick SQL into doing all the work for you"?

with requests.get(url, stream=True) as data:

file_like_data = MyFileLikeObject(s3_data=data)

table = 'spongebob_characters'

columns = ['fname', 'lname', 'type']

# Assume we have a psycopg2 cursor which

# is a class that has a connection to the

# database and executes SQL such as copy_from

cursor.copy_from(file_like_data, table,

columns=columns, sep=',')The copy_from command reads from our file

and all processing happens when data is read!

BUT WAS IT FASTER?!

Original Estimate: ~2 days

New Method: ~20 minutes!

Takeaways

- Optimizations can be found at all layers

- Objects may not always be what they seem

If it walks like a duck and it quacks like a duck, then it must be a duck

Copy of pygotham

By Molly Leen

Copy of pygotham

- 1,021